User Guide - English

ServerView Suite

ServerView Virtual-IO Manager V3.1

User Guide

Edition October 2012

Comments… Suggestions… Corrections…

The User Documentation Department would like to know your opinion of

this manual. Your feedback helps us optimize our documentation to suit

your individual needs.

Feel free to send us your comments by e-mail to

manuals@ts.fujitsu.com.

Certified documentation according to DIN EN

ISO 9001:2008

To ensure a consistently high quality standard and user-friendliness, this

documentation was created to meet the regulations of a quality management system which complies with the requirements of the standard

DIN EN ISO 9001:2008.

cognitas. Gesellschaft für Technik-Dokumentation mbH

www.cognitas.de

Copyright and trademarks

Copyright © 1998 - 2012 Fujitsu Technology Solutions GmbH.

All rights reserved.

Delivery subject to availability; right of technical modifications reserved.

All hardware and software names used are trademarks of their respective

manufacturers.

Contents

Contents 3

1 Introduction 11

1.1 Target groups and objective of this manual 12

1.2 System requirements 12

1.3 Supported Hardware 14

1.4 Changes since the previous edition 21

1.5 ServerView Suite link collection 21

1.6 Documentation for the ServerView Suite 23

1.7 Typographic conventions 24

2 Virtual-IO Manager - Introduction 25

2.1 Virtual addresses 25

2.2 Special connection blade for blade server 25

2.3 Management with VIOM - Procedure 28

2.4 Defining networks (LAN) (for blade servers only) 30

2.5 Server profiles 43

2.5.1 Defining server profiles 44

2.5.2 Assigning server profiles 44

2.5.3 Dedicated LAN connections (only for blade servers) 45

2.5.4 Virtualizing I/O parameters 45

2.6 Server profile failover (for blade servers only) 47

2.7 High-Availability (HA) support 48

3 Installation and uninstallation 57

3.1 Prerequisites for the VIOM installation 57

3.2 Installing the Virtual-IO Manager on a Windows-based CMS 58

3.2.1 Installing the Virtual-IO Manager using a graphical interface 59

3.2.2 Installing the Virtual-IO Manager using the command line interface 68

3.3 Updating the Virtual-IO Manager on a Windows-based CMS 72

3.4 Installing the Virtual-IO Manager on a Linux-based CMS 73

3.4.1 Installing the Virtual-IO Manager using a graphical interface 74

3.4.2 Installing the Virtual-IO Manager using the command line 84

3.4.3 Important directories of Virtual-IO Manager 88

3.4.4 Collecting diagnostic information 89

3.5 Updating the Virtual-IO Manager on a Linux-based CMS 89

3.6 License management 90

3.7 Updating ServerView Operations Manager 94

3.8 Upgrading or moving the SQL Server database 95

3.9 Uninstalling the Virtual-IO Manager 96

3.9.1 Uninstalling the Virtual-IO Manager on a Windows-based CMS 96

3.9.2 Uninstalling the Virtual-IO Manager on a Linux-based CMS 96

4 Configuration 97

4.1 Configurations on the managed BX600 Blade Server 97

4.1.1 Supported hardware configurations for the connection blades 97

4.1.1.1 LAN hardware configuration 98

4.1.1.2 Fibre Channel hardware configuration 99

4.1.2 Configuring the BX600 management blade 99

4.1.3 Configuring the I/O connection blades 101

4.1.4 Connecting IBP modules 104

4.1.4.1 Network - Overview 105

4.1.4.2 Notes and recommendations 106

4.2 Configurations on the managed BX400 Blade Server 109

4.2.1 Supported hardware configurations for the connection blades 110

4.2.1.1 LAN hardware configuration 111

4.2.1.2 Fibre Channel hardware configuration 112

4.2.2 Configuring the BX400 management blade 113

4.2.3 Configuring the I/O connection blades 114

4.2.4 Connecting IBP modules 115

4.2.4.1 Network - Overview 116

4.2.5 Switch stacking support 117

4.3 Configurations on the managed BX900 Blade Server 118

4.3.1 Supported hardware configurations for the connection blades 119

4.3.1.1 LAN hardware configuration 119

4.3.1.2 Fibre Channel hardware configuration 121

4.3.2 Configuring the BX900 management blade 121

4.3.3 Configuring the I/O connection blades 123

4.3.4 Connecting IBP modules 126

4.3.4.1 Network - Overview 126

4.3.5 Switch stacking support 127

4.4 Configurations on the managed PRIMERGY rack server 129

4.5 VIOM server profile mapping 132

4.6 PCI slot location in PRIMERGY rack servers 133

4.7 Adding a server to the ServerView server list 135

5 Virtual-IO Manager user interface 137

5.1 Virtual-IO Manager main window 137

5.2 Tree view 139

5.2.1 Tree structure (Server List) 140

5.2.2 Tree structure (Profiles) 141

5.3 Tabs 142

5.3.1 Virtual-IO Manager tab 142

5.3.2 Setup tab 144

5.3.3 Ext. LAN Connections tab 148

5.3.3.1 Graphic tab on Ext. LAN Connections tab 148

5.3.3.2 Details tab on Ext. LAN Connections tab 150

5.3.4 Server Configuration tab 151

5.3.5 Chassis Configuration tab 156

5.3.6 Server Profiles view 158

5.4 Wizards 161

5.4.1 Create Network for IBP wizard (only for blade servers) 162

5.4.1.1 Select Type step (Create Network wizard) 163

5.4.1.2 Edit Properties step (Create Network wizard - internal network) 164

5.4.1.3 Edit Properties step (Create Network wizard - single/VLAN network) 165

5.4.1.4 Edit Properties step (Create Network wizard - dedicated service network) 168

5.4.1.5 DCB Properties step (Create Network wizard - single/VLAN network) 172

5.4.1.6 Add Networks step (Create Network wizard - VLAN network) 174

5.4.2 Edit Uplink Set wizard 176

5.4.2.1 Edit Properties step (Edit Uplink Set wizard - single/VLAN network) 176

5.4.2.2 Edit Properties step (Edit Uplink Set wizard - dedicated service network) 180

5.4.2.3 DCB Properties (Edit Uplink Set wizard - single/VLAN network) 183

5.4.2.4 Add Networks step (Edit Uplink Set wizard - VLAN network) 185

5.4.3 Create Server Profile wizard 187

5.4.3.1 Name step (Create Server Profile wizard) 187

5.4.3.2 Configure Cards step (Create Server Profile wizard) 189

5.4.3.3 IO-Channels step (Create Server Profile wizard) 190

5.4.3.4 Boot Parameter step (Create Server Profile wizard) 194

5.4.3.5 CNA Parameter step (Create Server Profile wizard) 200

5.4.3.6 Virtual Addresses step (Create Server Profile wizard) 202

5.4.3.7 Confirm step (Create Server Profile wizard) 204

5.4.4 Edit Server Profile wizard 205

5.4.4.1 Name step (Edit Server Profile wizard) 205

5.4.4.2 Configure Cards step (Edit Server Profile wizard) 207

5.4.4.3 IO-Channels step (Edit Server Profile wizard) 208

5.4.4.4 Boot Parameter step (Edit Server Profile wizard) 212

5.4.4.5 CNA Parameter step (Edit Server Profile wizard) 218

5.4.4.6 Virtual Addresses step (Edit Server Profile wizard) 220

5.4.4.7 Confirm step (Edit Server Profile wizard) 222

5.4.5 Save Configuration wizard 223

5.4.5.1 Select Action step (Configuration Backup/Restore wizard) 223

5.4.5.2 Select File step (Save Configuration Wizard) 224

5.4.5.3 Select File step (Restore Configuration wizard) 225

5.4.5.4 Select File step (Delete Backup Files wizard) 227

5.4.5.5 Select Data step (Save Configuration wizard) 228

5.4.5.6 Select Data step (Restore Configuration wizard) 229

5.4.5.7 Select Data step (Delete Backup Files wizard) 230

5.5 Dialog boxes 231

5.5.1 Authentication dialog box (single blade server) 231

5.5.2 Authentication dialog box (PRIMERGY rack server) 234

5.5.3 Authentication dialog box (PRIMERGY rack server and blade server) 236

5.5.4 Licenses Information dialog box 237

5.5.5 Preferences dialog box 238

5.5.6 Restore Options dialog box (servers) 241

5.5.7 Restore Options dialog box (server profiles) 243

5.5.8 Select Profile dialog box 245

5.6 Context menus 247

5.6.1 Context menus on the Ext. LAN Connections tab 247

5.6.2 Context menus in the Server Profiles view 248

5.6.3 Context menu on the Server Configuration tab 249

5.7 General buttons 251

5.7.1 Buttons in the area on the left 251

5.7.2 Button in the area on the right 251

5.7.3 General buttons in other dialog boxes 251

5.8 Icons 252

6 Using the Virtual-IO Manager 253

6.1 Starting the Virtual-IO Manager 253

6.2 Closing Virtual-IO Manager 253

6.3 Logging the actions using VIOM 254

6.3.1 Logging the actions on Windows 254

6.3.2 Logging the actions on Linux 256

7 Managing servers with VIOM 257

7.1 Activating management with VIOM 257

7.2 Changing access rights and ports 259

7.3 Deactivating management with VIOM 261

7.4 VIOM-internal operations on blade servers 261

7.5 VIOM-internal operations on a PRIMERGY rack server 266

7.6 Displaying license information 275

8 Defining network paths (LAN) 277

8.1 Defining an uplink set 278

8.1.1 Defining an internal network 279

8.1.2 Defining a single network 280

8.1.3 Defining VLAN networks 282

8.1.4 Defining a dedicated service network 285

8.2 Modifying an uplink set 285

8.3 Deleting networks 286

8.4 Copying an IBP configuration 287

8.5 Copying configuration 288

9 Defining and assigning server profiles 289

9.1 Defining server profiles 290

9.2 Viewing server profiles 294

9.3 Modifying server profiles 294

9.4 Copying server profiles 295

9.5 Deleting server profiles 296

9.6 Assigning server profiles 296

9.7 Deleting profile assignments 298

10 Viewing the blade server configuration 301

11 Saving and restoring 303

11.1 Saving your configuration and server profiles 303

11.2 Restoring the configuration 304

11.2.1 Restoring server profiles 305

11.2.2 Restoring blade server configurations 306

11.2.3 Restoring PRIMERGY rack server configurations 307

11.3 Deleting backup files on the management station 308

11.4 Restoring VIOM-specific configurations 308

11.4.1 Restoring an IBP module configuration 308

11.4.2 Deleting the configuration of an uninstalled IBP module 309

11.4.3 Restoring the configuration of a server blade slot 310

11.4.4 Restoring the blade server chassis configuration 311

12 Importing and exporting server profiles 313

12.1 Exporting server profiles 313

12.2 Importing server profiles 313

12.3 Format of export files 314

12.3.1 The Objects element 314

12.3.2 The ServerProfiles element 315

12.3.3 The ServerProfile element 316

12.3.4 The IOChannel element 319

12.3.5 The Address element 323

12.3.6 The BootEnvironment element 323

12.3.7 The ISCSIBootConfiguration element 324

12.3.8 The FCBootConfiguration element 327

12.3.9 The DCBConfiguration element 329

12.3.10 The FunctionConfiguration element 329

13 VIOM scenarios 331

13.1 Shifting tasks from one server blade to another 331

13.2 Moving tasks using the server profile failover 332

13.3 Disaster Recovery 333

14 VIOM database 337

14.1 VIOM Backup Service 338

14.1.1 Configuring the job schedule on Windows 339

14.1.1.1 Syntax of Quartz cron expressions 340

14.1.2 Configuring the job schedule on Linux 342

14.1.3 Configuring the output directories 343

14.1.4 Starting the Backup Service on Windows 344

14.1.5 Starting the VIOM Backup Service on Linux 344

14.1.6 Logging the Backup Service 345

14.2 Restoring the VIOM database on Windows 345

14.2.1 Restoration via SQL Server Management Studio 345

14.2.2 Restoration via Enterprise Manager 349

14.2.3 Checking the database backup 349

14.3 Restoring the VIOM database on Linux 350

15 Appendix 353

15.1 Replacing IBP modules 353

15.2 VIOM address ranges 354

15.3 Creating diagnostic data 356

15.4 Event logging 359

1 Introduction

You use the ServerView Virtual-IO Manager (Virtual-IO Manager or VIOM for short) software to manage the input/output parameters (I/O parameters) of the following servers:

l PRIMERGY blade server (BX600, BX400, BX900)

In Japan, BX600 blade servers are not supported.

l PRIMERGY rack server (RX200 S7, RX300 S7, RX350 S7)

l PRIMERGY tower server (TX300 S7)

Where PRIMERGY rack servers are mentioned below, both PRIMERGY rack servers and PRIMERGY tower servers are meant.

Additionally the LAN connection blade, the Intelligent Blade Panel (IBP) in

PRIMERGY blade servers, can be managed via VIOM.

As an extension to the ServerView Operations Manager, VIOM makes it possible to manage a large number of PRIMERGY blade servers and PRIMERGY rack servers centrally from the central management station. This includes virtualizing and, for blade servers, saving the server blade-specific I/O parameters (MAC addresses, WWN addresses, I/O connections including the boot parameters) as well as configuring and managing a blade server's Intelligent Blade Panel in a hardware-independent server profile.

This server profile can be assigned to a PRIMERGY rack server or server

blade:

l For PRIMERGY rack servers: A server profile can be assigned to a

PRIMERGY rack server and can also be moved from one PRIMERGY

rack server to another.

l For blade servers: The server profile can be assigned to a server blade

using VIOM and can also be moved between different server blades of

the same or of another blade server.

By assigning the server profiles to a server, you can start the required application without having to reconfigure the SAN and LAN network.

VIOM provides an easy-to-use Web-based graphical user interface, which you can launch using the ServerView Operations Manager. Using this interface, you can carry out all the necessary tasks for managing the I/O parameters of a PRIMERGY blade server or PRIMERGY rack server and for managing the LAN connection blade (the IBP module) in a PRIMERGY blade server.

VIOM also provides a comprehensive command line interface, which you

can use to perform administrative VIOM tasks in a script-based environment.

The VIOM CLI (command line interface) provides an easy-to-use interface for

creating scripts and automating administrative tasks.

The command line interface is available both on Windows and Linux platforms, and you install it using separate installation packages. For more information on VIOM CLI, see the documentation entitled "ServerView Virtual-IO

Manager Command Line Interface".

1.1 Target groups and objective of this manual

This manual is aimed at system administrators, network administrators and service professionals who have a sound knowledge of hardware and software. The manual describes the functionality and user interface of the Virtual-IO Manager.

1.2 System requirements

Central management station

l Operating system for the central management station

o Microsoft Windows® Server™ 2003 all editions

o Microsoft Windows® Server™ 2003 R2 all editions

o Microsoft Windows® Server™ 2008 all editions

o Microsoft Windows® Server™ 2008 R2 all editions

o Linux Novell (SLES 10): SP2 and SP3

o Novell (SLES 11): SP1 and SP2

o Red Hat RHEL 5.6/5.7/5.8

o Red Hat RHEL 6, 6.1/6.2

In Japan: Novell SLES is not supported.

ServerView Virtual-IO Manager can also be installed in a virtual machine (VM) under Windows Hyper-V or VMware ESX server. The operating system running on the VM must be one of the operating systems listed above and must be supported by the hypervisor used.

l Installed software packages

o ServerView Operations Manager as of Version 5.50.13

o Java Runtime Environment (JRE) version 6.0, update 31 or higher

Together with ServerView Operations Manager 6.10, it is also possible to use JRE version 7.0, update 7 or higher.

l Firewall settings

o Port 3172 must be opened for the TCP/IP connection to the Remote Connector Service.

o Port 162 must be opened to receive SNMP traps from iRMC when managing PRIMERGY rack servers.
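Whether port 3172 is reachable through a firewall can be checked with a short script. The following is a minimal sketch that uses only the Python standard library; the function name `tcp_port_open` is illustrative and not part of VIOM. SNMP traps on port 162 are normally sent over UDP, so a TCP connection test of this kind does not apply to that port.

```python
import socket

# TCP port for the Remote Connector Service, as named in the requirements.
REMOTE_CONNECTOR_PORT = 3172


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False
```

A call such as `tcp_port_open("cms.example.com", REMOTE_CONNECTOR_PORT)` (the host name is a placeholder) returns `False` if the port is blocked or nothing is listening on it.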

You can also obtain the current requirements from the release notes. You can find the release notes, for example, on a Windows-based management station under Start - [All] Programs - Fujitsu - ServerView Suite - Virtual-IO Manager Release Notes.

License

You must purchase licenses to use the Virtual-IO Manager. At least one license is required. Each license contains a count which determines the permitted number of server profile assignments. If more than one license is registered, the counts are added together.
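The way license counts add up can be illustrated with a small calculation. This is only a sketch of the rule stated above; the `License` class, its field names and the key strings are hypothetical and do not reflect how VIOM stores licenses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class License:
    key: str    # placeholder identifier, not a real license key format
    count: int  # number of server profile assignments this license permits


def allowed_assignments(licenses):
    """Total permitted assignments: the counts of all registered licenses added together."""
    return sum(lic.count for lic in licenses)


def can_assign(licenses, current_assignments):
    """True if at least one license is registered and one more assignment still fits."""
    return bool(licenses) and current_assignments < allowed_assignments(licenses)


licenses = [License("example-a", 10), License("example-b", 25)]
print(allowed_assignments(licenses))  # 35
print(can_assign(licenses, 35))       # False
```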

1.3 Supported Hardware

Managed BX600 blade servers

Supported systems: BX600 S3 with MMB S3. For information on the required

firmware version, see the release notes included.

The following table shows which server blades are supported with which

range of functions.

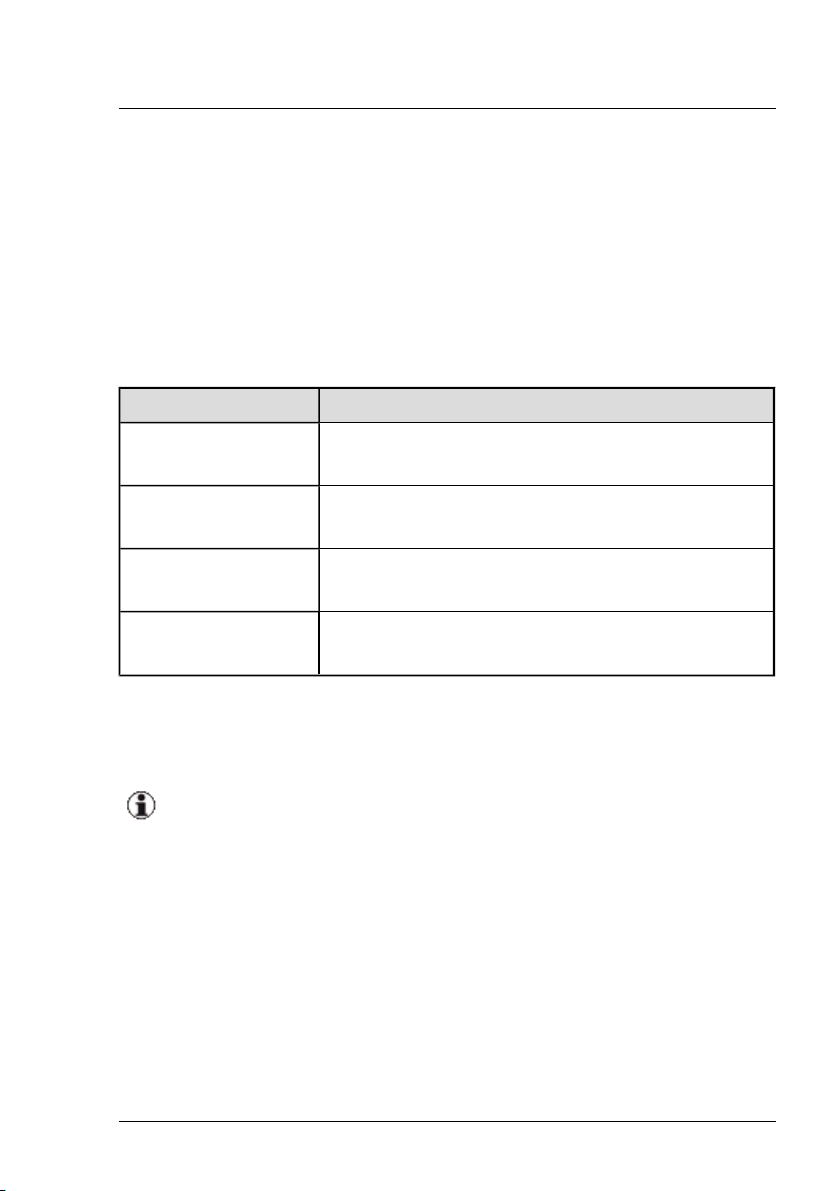

| Server blade | Scope of functions |
| --- | --- |
| BX620 S2, BX620 S3 | Server profiles without I/O virtualization but with network connection definition |
| BX620 S4, BX620 S5, BX620 S6 | Server profiles with I/O virtualization and network connection definition |
| BX630 | Server profiles without I/O virtualization but with network connection definition |
| BX630 S2 | Server profiles with I/O virtualization and network connection definition |

Table 1: Supported server blades

For information on the BIOS and iRMC firmware version, see the release

notes supplied.

The Virtual-IO Manager can only manage BX600 chassis with S3

management blades (MMB S3) that are assembled with the following:

l In fabric 1: IBP or LAN modules

l In fabric 2: IBP modules, LAN modules or FC switch blades of

the type SW4016

You must not mix the modules within a fabric.

Fabric 2 can also be empty. Only one of the permitted connection blades can be inserted in fabric 1 and in fabric 2 at any one time.
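The BX600 fabric rules can be expressed as a simple check. The sketch below is an illustration only: the labels `"IBP"`, `"LAN"` and `"FC_SW4016"` and the function name are made up for this example, and it assumes that fabric 1 must be populated, which the text implies by stating only that fabric 2 may be empty.

```python
# Connection blade kinds permitted per BX600 fabric, taken from the rules above.
# The short labels are illustrative names, not VIOM identifiers.
ALLOWED_KINDS = {
    1: {"IBP", "LAN"},
    2: {"IBP", "LAN", "FC_SW4016"},
}


def check_bx600_fabric(fabric, installed_kinds):
    """Return a list of rule violations for one fabric (an empty list means OK)."""
    if fabric not in ALLOWED_KINDS:
        return [f"fabric {fabric} is not covered by these rules"]
    kinds = set(installed_kinds)
    if not kinds:
        # Only fabric 2 is stated to be allowed to stay empty (assumption for fabric 1).
        return [] if fabric == 2 else ["fabric 1 has no connection blade"]
    problems = []
    unsupported = sorted(kinds - ALLOWED_KINDS[fabric])
    if unsupported:
        problems.append(f"unsupported in fabric {fabric}: {', '.join(unsupported)}")
    if len(kinds) > 1:
        # Modules must not be mixed within a fabric.
        problems.append(f"mixed module types in fabric {fabric}")
    return problems


print(check_bx600_fabric(1, ["IBP", "IBP"]))        # []
print(check_bx600_fabric(2, []))                    # []
print(check_bx600_fabric(2, ["LAN", "FC_SW4016"]))  # ['mixed module types in fabric 2']
print(check_bx600_fabric(1, ["FC_SW4016"]))         # ['unsupported in fabric 1: FC_SW4016']
```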

In Japan, BX600 blade servers are not supported.

Managed BX400 blade servers

Supported systems: BX400 with MMB S1. For information on the required

firmware version, see the release notes supplied.

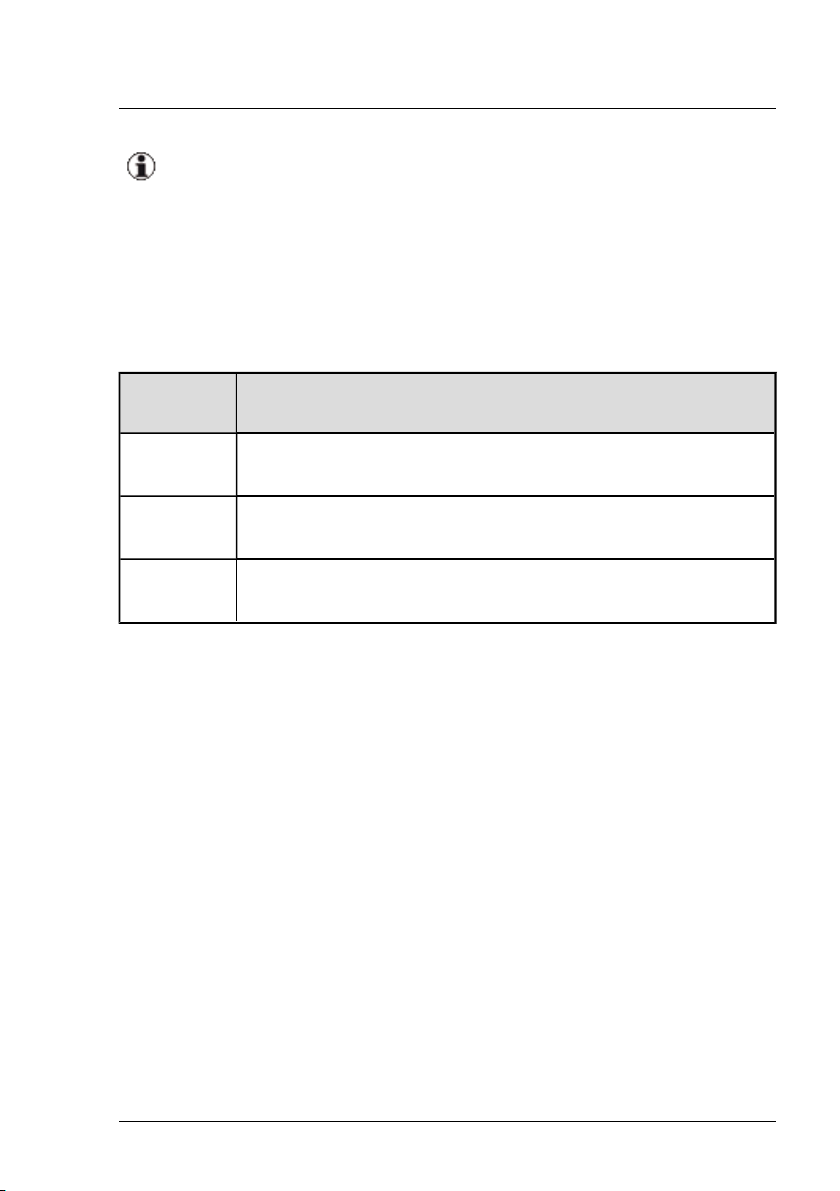

The following table shows which server blades are supported with which range of functions.

| Server blade | Scope of functions |
| --- | --- |
| BX920 S2, BX920 S3 | Server profiles with I/O virtualization and network connection definition |
| BX922 S2 | Server profiles with I/O virtualization and network connection definition |
| BX924 S2, BX924 S3 | Server profiles with I/O virtualization and network connection definition |

Table 2: Supported server blades

For information on the BIOS and iRMC firmware version, see the release notes supplied.

The Virtual-IO Manager can only manage BX400 chassis with S1 management blades (MMB S1) that are assembled with the following:

l In fabric 1:

o LAN connection blades (PY CB Eth Switch/IBP 1 Gb 36/8+2 (SB11), PY CB Eth Switch/IBP 1 Gb 36/12 (SB11A), PY CB Eth Switch/IBP 1 Gb 18/6 (SB6), or PY CB Eth Switch/IBP 10 Gb 18/8 (SBAX2)) in switch mode or IBP mode, or

o LAN pass thru connection blades (PY CB Eth Pass Thru 10 Gb 18/18)

l In fabric 2:

o LAN connection blades (PY CB Eth Switch/IBP 1 Gb 36/8+2, PY CB Eth Switch/IBP 1 Gb 36/12, PY CB Eth Switch/IBP 1 Gb 18/6, or PY CB Eth Switch/IBP 10 Gb 18/8) in switch mode or IBP mode,

o LAN pass thru connection blades (PY CB Eth Pass Thru 10 Gb 18/18),

o FC switch blades of the type Brocade 5450, or

o FC pass thru connection blades (PY CB FC Pass Thru 8 Gb 18/18)

l In fabric 3: same as fabric 2

The LAN connection blades in fabric 3 must run in the same mode. However, only one connection blade can be inserted in fabric 3.

You must not switch the mode of a LAN connection blade if you are using the Virtual-IO Manager to manage the BX400 chassis.

Managed BX900 blade servers

Supported systems: BX900 with MMB S1. For information on the required

firmware version, see the release notes supplied.

The following table shows which server blades are supported with which range of functions.

| Server blade | Scope of functions |
| --- | --- |
| BX920 S1, BX920 S2, BX920 S3 | Server profiles with I/O virtualization and network connection definition |
| BX922 S2 | Server profiles with I/O virtualization and network connection definition |
| BX924 S2, BX924 S3 | Server profiles with I/O virtualization and network connection definition |
| BX960 S1 | Server profiles with I/O virtualization and network connection definition |

Table 3: Supported server blades

For information on the BIOS and iRMC firmware version, see the release notes supplied.

The Virtual-IO Manager can only manage BX900 chassis with S1 management blades (MMB S1) that are assembled with the following:

l In fabric 1:

o LAN connection blades (PY CB Eth Switch/IBP 1 Gb 36/8+2 (SB11), PY CB Eth Switch/IBP 1 Gb 36/12 (SB11A), PY CB Eth Switch/IBP 1 Gb 18/6 (SB6), or PY CB Eth Switch/IBP 10 Gb 18/8 (SBAX2)) in switch mode or IBP mode, or

o LAN pass thru connection blades (PY CB Eth Pass Thru 10 Gb 18/18)

l In fabric 2:

o LAN connection blades (PY CB Eth Switch/IBP 1 Gb 36/8+2, PY CB Eth Switch/IBP 1 Gb 36/12, PY CB Eth Switch/IBP 1 Gb 18/6, or PY CB Eth Switch/IBP 10 Gb 18/8) in switch mode or IBP mode,

o LAN pass thru connection blades (PY CB Eth Pass Thru 10 Gb 18/18),

o FC switch blades of the type Brocade 5450, or

o FC pass thru connection blades (PY CB FC Pass Thru 8 Gb 18/18)

l In fabric 3: same as fabric 2

l In fabric 4: same as fabric 1

The LAN connection blades in a fabric must run in the same mode. However, only one connection blade can be inserted in a fabric.

You must not switch the mode of a LAN connection blade if you are using the Virtual-IO Manager to manage the BX900 chassis.

Managed PRIMERGY rack servers and PRIMERGY tower servers

The following PRIMERGY rack and tower server models are supported:

| PRIMERGY model | Scope of functions |
| --- | --- |
| RX200 S7, RX300 S7, RX350 S7, TX300 S7 | Assign VIOM server profiles with I/O address virtualization and boot configuration for the onboard LAN ports and the supported PCI controller. Network connection definition is not supported. |

For information on the BIOS and iRMC firmware version, see the release

notes supplied.

The following PCI controllers are supported for all the above PRIMERGY

rack server systems:

l Emulex 10GbE OCe10102 CNA

l Emulex 8Gb FC HBA LPe 12002

l Emulex 8Gb FC HBA LPe 1250 (1 channel)

l INTEL 2-port 10GbE (D2755 – Niantec)

l INTEL 4-port (D2745 - Barton Hills)

l INTEL 2-port (D2735 - Kawela 82576NS)

l INTEL Eth Ctrl 4x1Gb Cu PCIe x4 (D3045)

l INTEL Eth Ctrl 2x1Gb Cu PCIe x4 (D3035)

l INTEL 10GbE 10GBase-T(RJ45) PCIe LAN

| PCI controller | Scope of functions |
| --- | --- |
| Emulex 10GbE OCe10102 CNA | Define physical functions and type (LAN, FCoE, iSCSI) of physical functions. Assign virtual addresses to physical functions and optionally define boot parameters. This CNA supports two physical functions for each of the two physical ports. The first physical function must be of type LAN. The physical functions for the two physical ports must be defined similarly. This means that the storage function for both physical ports must be of the same type. When using iSCSI with iSCSI boot, the iSCSI initiator must be identical. Exception: the physical port is completely disabled. |
| Emulex 8Gb FC HBA LPe 12002; Emulex 8Gb FC HBA LPe 1250 (1 channel) | Assign virtual WWPN and WWNN. Optionally define the first and second boot target and LUN. Disable I/O ports. |
| INTEL 2-port 10GbE (D2755 – Niantec); INTEL 4-port (D2745 - Barton Hills); INTEL 2-port (D2735 - Kawela 82576NS); INTEL Eth Ctrl 4x1Gb Cu PCIe x4 (D3045); INTEL Eth Ctrl 2x1Gb Cu PCIe x4 (D3035); INTEL 10GbE 10GBase-T(RJ45) PCIe LAN | Assign virtual MAC. Optionally define PXE boot per port. Disable I/O ports. |

There is no explicit disable functionality for I/O ports in VIOM. I/O ports that are not defined in a VIOM profile will be implicitly disabled if the device supports this functionality.
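The constraints stated for the Emulex 10GbE OCe10102 CNA (first physical function of type LAN, same storage function type on both ports, identical iSCSI initiator for iSCSI boot, no checks for a disabled port) can be sketched as a consistency check. The `CnaPort` class, its field names and the message texts are hypothetical, and treating "two physical functions" as an upper limit per port is an interpretation of the text rather than something VIOM documents.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

FUNCTION_TYPES = {"LAN", "FCoE", "iSCSI"}


@dataclass(frozen=True)
class CnaPort:
    # Physical function types in order; an empty tuple models a disabled port.
    functions: Tuple[str, ...] = ()
    # iSCSI initiator name, only relevant when iSCSI boot is used.
    iscsi_boot_initiator: Optional[str] = None


def storage_type(port: CnaPort) -> Optional[str]:
    """Type of the second (storage) function, or None if there is none."""
    return port.functions[1] if len(port.functions) > 1 else None


def check_cna(port_a: CnaPort, port_b: CnaPort) -> List[str]:
    """Return a list of rule violations (an empty list means consistent)."""
    problems = []
    for name, port in (("port A", port_a), ("port B", port_b)):
        if not port.functions:
            continue  # exception: a completely disabled port is not checked
        if len(port.functions) > 2:
            problems.append(f"{name}: more than two physical functions")
        if any(f not in FUNCTION_TYPES for f in port.functions):
            problems.append(f"{name}: unknown physical function type")
        if port.functions[0] != "LAN":
            problems.append(f"{name}: first physical function must be of type LAN")
    if port_a.functions and port_b.functions:
        if storage_type(port_a) != storage_type(port_b):
            problems.append("storage function types differ between ports")
        elif (storage_type(port_a) == "iSCSI"
              and port_a.iscsi_boot_initiator
              and port_b.iscsi_boot_initiator
              and port_a.iscsi_boot_initiator != port_b.iscsi_boot_initiator):
            problems.append("iSCSI boot initiators differ between ports")
    return problems


print(check_cna(CnaPort(("LAN", "FCoE")), CnaPort(("LAN", "iSCSI"))))
# ['storage function types differ between ports']
```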

For information on the required firmware version, see the release notes supplied.

1.4 Changes since the previous edition

The current edition is valid for ServerView Virtual-IO Manager V3.1 and

replaces the online manual "PRIMERGY ServerView Suite, ServerView Virtual-IO Manager V3.0", Edition March 2012.

ServerView Virtual-IO Manager V3.1 includes the following new features:

l Support for INTEL Eth Ctrl 4x1Gb Cu PCIe x4 (D3045), INTEL Eth Ctrl

2x1Gb Cu PCIe x4 (D3035), and INTEL 10GbE 10GBase-T(RJ45) PCIe

LAN in PRIMERGY rack servers.

l Support of VLAN groups in tagged mode in VIOM server profile (see

"Defining networks (LAN) (for blade servers only)" on page 30, "IO-Channels step (Create Server Profile wizard)" on page 190, and "IO-Channels

step (Edit Server Profile wizard)" on page 208).

l DCB settings also possible for iSCSI (see "CNA Parameter step (Create Server Profile wizard)" on page 200 and "CNA Parameter step (Edit Server Profile wizard)" on page 218).

l Video Redirection for server blades and rack servers (see "Server Configuration tab" on page 151).

l User-specific display properties are stored independently of the session (see "Preferences dialog box" on page 238).

l Support of Java Runtime Environment 7 (only with ServerView Operations Manager 6.10)

l The section "High Availability - HA" has been updated and now includes

VMware HA (see "High-Availability (HA) support" on page 48).

1.5 ServerView Suite link collection

Via the link collection, Fujitsu Technology Solutions provides you with numerous downloads and further information on the ServerView Suite and PRIMERGY servers.

For ServerView Suite, links are offered on the following topics:

l Forum

l Service Desk

l Manuals

l Product information

l Security information

l Software downloads

l Training

The downloads include the following:

o Current software versions for the ServerView Suite as well as additional Readme files.

o Information files and update sets for system software components (BIOS, firmware, drivers, ServerView agents and ServerView update agents) for updating the PRIMERGY servers via ServerView Update Manager or for locally updating individual servers via ServerView Update Manager Express.

o The current versions of all documentation on the ServerView Suite.

You can retrieve the downloads free of charge from the Fujitsu Technology Solutions Web server.

For PRIMERGY servers, links are offered on the following topics:

l Service Desk

l Manuals

l Product information

l Spare parts catalogue

Access to the link collection

You can reach the link collection of the ServerView Suite in various ways:

1. Via ServerView Operations Manager.

l Select Help – Links on the start page or on the menu bar.

This opens the start page of the ServerView link collection.

2. Via the ServerView Suite DVD 2 or via the start page of the online documentation for the ServerView Suite on the Fujitsu Technology Solutions

manual server.

You access the start page of the online documentation via the following link:

http://manuals.ts.fujitsu.com

l In the selection list on the left, select Industry standard servers.

l Click the menu item PRIMERGY ServerView Links.

This opens the start page of the ServerView link collection.

3. Via the ServerView Suite DVD 1.

l In the start window of the ServerView Suite DVD 1, select the

option Select ServerView Software Products.

l Click Start. This takes you to the page with the software products of

the ServerView Suite.

l On the menu bar select Links.

This opens the start page of the ServerView link collection.

1.6 Documentation for the ServerView Suite

The documentation for the ServerView Suite can be found on the ServerView

Suite DVD 2 supplied with each server system.

The documentation can also be downloaded free of charge from the Internet.

You will find the online documentation at http://manuals.ts.fujitsu.com under

the link Industry standard servers.

For an overview of the documentation to be found under ServerView Suite as well as the filing structure, see the ServerView Suite sitemap (ServerView Suite – Site Overview).

1.7 Typographic conventions

The following typographic conventions are used:

| Convention | Explanation |
| --- | --- |
| (icon) | Indicates various types of risk, namely health risks, risk of data loss and risk of damage to devices. |
| (icon) | Indicates additional relevant information and tips. |
| bold | Indicates references to names of interface elements. |
| monospace | Indicates system output and system elements, e.g., file names and paths. |
| monospace semibold | Indicates statements that are to be entered using the keyboard. |
| blue continuous text | Indicates a link to a related topic. |
| pink continuous text | Indicates a link to a location you have already visited. |
| <abc> | Indicates variables which must be replaced with real values. |
| [abc] | Indicates options that can be specified (syntax). |
| [key] | Indicates a key on your keyboard. If you need to enter text in uppercase, the Shift key is specified, for example, [SHIFT] + [A] for A. If you need to press two keys at the same time, this is indicated by a plus sign between the two key symbols. |

Screenshots

Some of the screenshots are system-dependent, so some of the details

shown may differ from your system. There may also be system-specific differences in menu options and commands.

2 Virtual-IO Manager - Introduction

This chapter provides a general introduction to the concept of the Virtual-IO

Manager (VIOM).

2.1 Virtual addresses

Physical MAC addresses and WWN addresses are stored on the network card or in the host bus adapter (HBA) of a server blade or PRIMERGY rack server. If a server blade or PRIMERGY rack server has to be exchanged, or the operating system and/or the application has to be started on another server, the LAN or SAN network usually has to be reconfigured. Because the MAC address and the WWN addresses identify a physical server blade, several administrators have to be involved.

To separate the administration areas from each other, it is necessary to keep

the I/O parameters (MAC and WWN) outwardly constant.

When virtual addresses are used instead of the MAC addresses or WWN addresses stored on the NIC (network interface card) or in the HBA, the addressing remains constant even when a server blade is exchanged at the slot or a PRIMERGY rack server is replaced by another one.
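The effect of virtual addresses can be illustrated with a small model. This is a conceptual sketch only, not how VIOM is implemented: the `ServerBlade` and `Slot` classes are hypothetical, and the MAC addresses are made-up example values (a documentation-range address for the hardware, a locally administered address for the virtual one).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServerBlade:
    serial: str
    physical_mac: str  # address stored on the NIC of this particular blade


@dataclass
class Slot:
    blade: Optional[ServerBlade] = None
    virtual_mac: Optional[str] = None  # address bound to the slot, not to the hardware

    def effective_mac(self) -> Optional[str]:
        """Address seen by the LAN: the virtual one if set, else the physical one."""
        if self.blade is None:
            return None
        return self.virtual_mac or self.blade.physical_mac


slot = Slot(blade=ServerBlade("blade-old", "00:00:5e:00:53:01"),
            virtual_mac="02:00:00:00:00:10")
before = slot.effective_mac()

# Exchange the server blade at the slot; the virtual address stays with the slot.
slot.blade = ServerBlade("blade-new", "00:00:5e:00:53:02")
after = slot.effective_mac()

print(before == after)  # True
```

Without a virtual address, the same exchange would change the effective address to that of the new blade, which is the situation that requires the LAN or SAN to be reconfigured.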

2.2 Special connection blade for blade server

Up to now, blade servers have been used essentially to connect the LAN

(Local Area Network) and Fibre Channel ports (FC ports) of individual server

blades to the LAN and SAN networks (SAN - Storage Area Network) using

switch blades or pass-thru blades, which are inserted in the blade chassis. It

is the responsibility of the LAN or SAN administrators to manage these

switches. This leads to an overlap of the different administration areas.

Figure 1: Overlapping areas of responsibility

As the areas of responsibility overlap, up to three administrators may be involved if a server blade's configuration changes, e.g. because a server blade has to be replaced due to hardware problems and, as a result, the switches have to be reconfigured.

The onboard LAN and FC controllers in the server blade are connected to the installed LAN or FC switches via a midplane, and these switches are, in turn, connected to the LAN and SAN network via their uplink ports. Manufacturers use vendor-specific protocols or protocol extensions for their switches, which can lead to interoperability problems between internal and external switches from different manufacturers.

To resolve these problems, the switch blades installed in the blade server

can be replaced by special connection blades. The following connection

blades are available for this:

l For SAN:

BX600: BX600 4/4Gb FC Switch 12port (SW4016, SW4016-D4) in the

Access Gateway mode (FC AG)

BX400/BX900: Brocade 5450 8 Gb Fibre Channel Switch in the Access

Gateway mode (FC AG)

l For LAN:

BX600: BX600 GbE Intelligent Blade Panel 30/12 or 10/6 (IBP GbE)

BX400/BX900: Connection Blade PY CB Eth Switch/IBP 1Gb 36/8+2,

PY CB Eth Switch/IBP 1Gb 36/12, PY CB Eth Switch/IBP 1Gb 18/6, or

PY CB Eth Switch/IBP 10 Gb 18/8 in the IBP mode. (The connection

blades can run in switch mode, in IBP mode, or in End Host Mode

(EHM).)

These connection blades offer the advantage of a switch (cable consolidation) without the above-mentioned disadvantages.

Figure 2: Separate areas of responsibility

2.3 Management with VIOM - Procedure

You use the ServerView Virtual-IO Manager (VIOM) to manage the connection blades of a blade server and to maintain the relevant I/O parameters

constant at the chassis slot of a blade server or at the PRIMERGY rack

server. VIOM is installed on the central management station and integrated in

the ServerView Operations Manager.

For blade servers, management with VIOM essentially includes the following

functions:

l Defining the network paths on the Intelligent Blade Panel (IBP module)

l Defining the I/O parameters (including virtual addresses) of a server

blade

l Saving the I/O parameters in combination with the required network

paths in server profiles

l Assigning the server profiles to the server blades or to empty slots as

well as moving a server profile between any number of server blades

You can assign the server profiles to any number of different server blades of

a blade server or even to another blade server, provided that the required network connections are available on the respective chassis.

For PRIMERGY rack servers, management with VIOM essentially includes

the following functions:

l Defining the I/O parameters (including virtual addresses) of a PRIMERGY

rack server

l Saving the I/O parameters in server profiles

l Assigning the server profiles to a PRIMERGY rack server

Before you can execute the functions above, a blade server chassis or PRIMERGY rack server must be managed by VIOM. You also do this using the

GUI of the Virtual-IO Manager (see chapter "Managing servers with VIOM"

on page 257).

Management using VIOM is divided into the following key steps:

1. Before VIOM can work with a blade server chassis or PRIMERGY rack

server it must be managed by VIOM.

2. For blade servers with IBP, you can define external network connections.

3. You then define the corresponding profiles for all applications/images

and save them in the server profile repository on the central management

station.

4. You can then assign these server profiles to any of the individual slots of

a blade server or to a PRIMERGY rack server.

5. If required, you can remove the assignment of the server profile.

For blade servers, you can move the profiles from one blade server slot to

another, or move them to another blade server. For PRIMERGY rack

servers, you can move the profiles from one server to another server.
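The key steps above can be sketched as a small in-memory model. This is an illustration only: `ProfileManager` and its methods are hypothetical names, not the VIOM interface, and the rule that a profile is assigned to at most one target at a time is an assumption of the sketch.

```python
from typing import Dict, Optional, Set


class ProfileManager:
    """Hypothetical in-memory model of the key steps; not the VIOM API."""

    def __init__(self) -> None:
        self.managed: Set[str] = set()        # step 1: managed slots/servers
        self.repository: Set[str] = set()     # step 3: defined profile names
        self.assignment: Dict[str, str] = {}  # step 4: target -> profile name

    def manage(self, target: str) -> None:
        self.managed.add(target)

    def define_profile(self, name: str) -> None:
        self.repository.add(name)

    def assign(self, name: str, target: str) -> None:
        if target not in self.managed:
            raise ValueError(f"{target} is not managed")
        if name not in self.repository:
            raise ValueError(f"profile {name} is not defined")
        if target in self.assignment:
            raise ValueError(f"{target} already has a profile")
        if name in self.assignment.values():
            # Assumption of this sketch: one target per profile at a time.
            raise ValueError(f"profile {name} is already assigned")
        self.assignment[target] = name

    def unassign(self, target: str) -> Optional[str]:
        """Step 5: remove the assignment and return the profile name."""
        return self.assignment.pop(target, None)

    def move(self, source: str, destination: str) -> None:
        name = self.assignment.get(source)
        if name is None:
            raise ValueError(f"{source} has no profile")
        del self.assignment[source]
        try:
            self.assign(name, destination)
        except ValueError:
            self.assignment[source] = name  # roll back on failure
            raise
```

A move is modeled as an unassignment followed by an assignment, with a rollback if the destination cannot accept the profile.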

2.4 Defining networks (LAN) (for blade servers only)

In order for VIOM to be able to switch the network paths correctly when

assigning profiles, VIOM first has to know which networks are available on

which uplink ports of the respective chassis.

This also makes it possible to separate individual server blades or groups of

server blades from a network perspective so that two server blade groups do

not have any connection to each other in terms of network.

Defining network paths on an IBP module includes the following steps:

l Defining an uplink set.

An uplink set comprises one or several uplink ports. An uplink port is an

external port that connects the chassis with your LAN infrastructure. If

an uplink set is used by several virtual network connections (VLANs),

the uplink set is referred to as a shared uplink set.

l Defining one or several networks that are assigned to the uplink set.

The definition of a network in the context of VIOM refers to

the allocation of a meaningful name for network access from

outside the network.

If a blade server chassis is managed by VIOM, manual configuration of an IBP connection blade (not done by VIOM) is not

supported. Manual configuration of IBP connection blades might

result in incorrect behavior of VIOM or might be lost during configuration

by VIOM. Before a chassis is managed by VIOM, IBP connection

blades should be set to the factory default settings, except for the IP address configuration of the administrative interface, the user-assigned name and the

access protocol (SSH or telnet).

By default, the IBP module (IBP 10/6) is supplied with the following configuration.

Figure 3: Standard configuration of the IBP module (10/6)

All uplink ports of the IBP module (IBP 10/6) are combined in one uplink set.

In the case of IBP 30/12, the first 8 uplink ports are combined in one

uplink set by default, and all 30 downlinks are connected with this

standard uplink set.

Using VIOM, you can change the standard configuration of an IBP module.

You can combine several uplink ports into one uplink set as well as define

several uplink sets for a LAN connection blade. This gives you several independent network paths e. g. for different applications (e. g. database server,

communication server) or individual areas (e. g. development, accounting or

personnel administration).

To find out what happens when you activate the management of a

blade server using the Virtual-IO Manager with the standard IBP configuration, see section "VIOM internal operations on blade servers"

on page 261.

The following figure provides an overview of typical uplink sets that you can

configure using VIOM.

Figure 4: Typical uplink sets

The uplink ports can be assigned to an uplink set as active ports or as backup ports. As a result, there are different ways of configuring an uplink set:

l "Port backup" configuration

When you configure a "port backup", you define an uplink set with at

least two uplink ports, and configure one of these as an active port and

the other as a backup port. In this case, the active port switches to the

backup port if an error occurs (linkdown event for all active ports). In the

figure shown, this could be the uplink sets (1) and (2) if for each of these

one port of the uplink set is configured as an active port and one port as a

backup port.

l Link aggregation group

By grouping several active uplink ports in one uplink set, a link

aggregation group (LAG) is formed. By providing several parallel connections, you achieve a higher level of availability and a greater connection capacity. In the figure above, this could be the uplink sets (1) and

(2) if both uplink ports of these uplink sets are configured as active ports.

If an uplink set has several backup ports, these backup ports also form a

link aggregation group automatically in the case of a failover. It is essential that the ports of an external LAN switch, which are linked to a LAG,

form a static LAG.
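The two configurations can be sketched as follows. The `UplinkSet` class and the `link_up` mapping are hypothetical and only model the rules stated above: several active ports form a LAG, and the backup ports take over only when all active ports are down.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class UplinkSet:
    """Hypothetical model of an uplink set with active and backup ports."""
    name: str
    active_ports: List[int]
    backup_ports: List[int] = field(default_factory=list)

    def has_port_backup(self) -> bool:
        """At least one active and one backup port are configured."""
        return bool(self.active_ports) and bool(self.backup_ports)

    def forms_lag(self) -> bool:
        """Several active ports in one uplink set form a LAG."""
        return len(self.active_ports) > 1

    def forwarding_ports(self, link_up: Dict[int, bool]) -> List[int]:
        """Ports that carry traffic for the given link states.

        Active ports are used as long as at least one of them is up.
        Only when all active ports are down do the backup ports take over.
        """
        up_active = [p for p in self.active_ports if link_up.get(p, False)]
        if up_active:
            return up_active
        return [p for p in self.backup_ports if link_up.get(p, False)]
```

For example, an uplink set with active port 1 and backup port 2 forwards on port 1 while its link is up and on port 2 after a linkdown event on port 1.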

Using VIOM, it is possible to define a number of networks:

l Internal networks (Internal network)

l Single networks (Single network)

l Virtual networks with VLAN IDs (VLAN networks)

l Virtual networks with VLAN IDs/native VLAN ID (VLAN networks)

l Service LAN (Dedicated service network)

l Service VLAN (Service VLAN networks)

The names in parentheses are the corresponding names in the VIOM

GUI.

Internal networks

An internal network refers to a network connection within the IBP, in which

server blades are only linked to each other. However, no uplink ports are

assigned to this network connection.

In this case, it is an internal connection via the IBP module.

It makes sense to have an internal network if the server blades only need to

communicate amongst each other and, for security reasons, there must be

no connection to an external network.

In the figure, (3) represents an internal network.

"Single" networks

VIOM interprets a "single" network as an uplink set that is only used for

access in one network.

A key attribute of a "single" network is that it is VLAN transparent. You can

therefore channel several external networks with different VLAN tags (or also

without VLAN tags) through a "single" network.

Packets with or even without a VLAN tag, which arrive at the uplink ports

from outside the network, are channeled to the related server blades with the

corresponding network. The same applies to the network packets that come

from the server blades.

In the figure, (1) and (2) illustrate "single" networks.

Virtual networks with VLAN IDs

Depending on the IBP module, you have 6 to 12 uplink ports available. You

can define as many different networks as there are uplink ports. If networks

are to be created with backup ports or with a link aggregation group, then the

number of possible networks on an IBP module is automatically reduced.

You can get around this restriction regarding the uplinks that are physically

available by defining virtual networks (Virtual Local Area Network - VLAN).

By setting up virtual networks, which can be identified by unique numbers

known as VLAN IDs, you can set up several logical networks that are completely separate from each other from a technical and network perspective.

These networks share an uplink set ("shared uplink set") without the server

blades of one virtual network being able to communicate with server blades

of the other virtual networks.

Figure 5: Networks with VLAN ID

In the figure above, two shared uplink sets are configured on the IBP module.

Two virtual networks VLAN10 and VLAN20 with the VLAN IDs 10 and 20

are assigned to the upper shared uplink set, and two virtual networks

VLAN21 and VLAN30 with the VLAN IDs 20 and 30 are assigned to the

lower shared uplink set. Although both uplink sets have virtual networks with

the VLAN ID 20, these are two different virtual networks. Server blade 2 cannot communicate with server blade 3.

Packets that come from outside the network, which have a VLAN tag that

corresponds to the VLAN ID of a virtual network, are transferred in precisely

this VLAN network. Before the packet exits the module on the server blade

side, the VLAN tag of a virtual network is removed in the same way as a "port-based" VLAN.

Packets that come from outside the network, which have a VLAN tag that

does not match any VLAN ID of a virtual network, are not transferred. They

are dropped.

Packets that come from outside the network with no VLAN tag are also

dropped. This behavior can be changed by configuring a virtual network as

native VLAN (see "Virtual network with a VLAN ID as native VLAN" on page

37).

Packets that come from a server blade, which do not have a VLAN tag, are

routed in the VLAN network to which the LAN port of the server blade is connected. In the process, VLAN tags with the VLAN ID of the virtual network

are added to these packets. These packets exit the IBP module at the uplink

ports of the related uplink set with this VLAN tag.

Packets that come from a server blade, which have a VLAN tag, are not

transferred to a VLAN network. They are either dropped or transferred elsewhere (e.g. to service networks). But VLAN networks can also be used with

tagged packets when the network is used in tagged mode (see "Virtual

networks with VLAN ID used in tagged mode" on page 38).

Virtual network with a VLAN ID as native VLAN

You can select a virtual network of a shared uplink set as the default or

"native" VLAN. All packets that do not contain a VLAN ID will be allowed

through this connection.

Packets that come from outside the network, which do not have a VLAN tag,

are routed in the network with the native VLAN ID and assigned a corresponding VLAN tag in the process.

Packets that come from outside the network, which have a VLAN tag that

corresponds to the native VLAN ID, are not transferred in any of the networks

belonging to the uplink set. They are dropped.

Packets that come from a server blade to the native VLAN network, exit an

IBP module without a VLAN tag. The VLAN tag is therefore removed from

the network packet before it exits the IBP module at the uplink port.

Figure 6: Networks with a VLAN ID and a native VLAN ID

In the figure above, the VLAN ID 10 is defined as the native VLAN ID in the

upper shared uplink set. As a result, the data packets of server blade 1 with

the VLAN ID 10 (red) exit the uplink without a VLAN ID tag. Incoming data

packets without a VLAN ID tag are assigned the VLAN ID 10 internally.

These data packets are only transferred to server blade 1.
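The tagging rules for a shared uplink set, with and without a native VLAN, can be summarized in a short sketch. The function names and the dictionary layout are hypothetical; `None` stands for "no VLAN tag" on a packet and for "dropped" as a result. The sketch covers VLAN networks used in untagged mode only.

```python
from typing import Dict, Optional


def uplink_ingress(vlan_ids: Dict[str, int],
                   native_vid: Optional[int],
                   tag: Optional[int]) -> Optional[str]:
    """Name of the virtual network that receives a packet arriving at an
    uplink port of the shared uplink set, or None if the packet is dropped.

    vlan_ids maps network names to VLAN IDs; native_vid is the native
    VLAN ID of the uplink set, or None if no native VLAN is configured.
    """
    if tag is None:
        wanted = native_vid   # untagged packets go to the native VLAN
    elif tag == native_vid:
        return None           # tagged with the native VLAN ID: dropped
    else:
        wanted = tag
    if wanted is None:
        return None           # untagged packet, no native VLAN: dropped
    for name, vid in vlan_ids.items():
        if vid == wanted:
            return name
    return None               # tag matches no virtual network: dropped


def uplink_egress_tag(vlan_ids: Dict[str, int],
                      native_vid: Optional[int],
                      network: str) -> Optional[int]:
    """VLAN tag carried at the uplink port by a packet that a server blade
    sent untagged into the given network (None means untagged)."""
    vid = vlan_ids[network]
    return None if vid == native_vid else vid
```

With the networks of the upper shared uplink set in the figure above (VLAN IDs 10 and 20, native VLAN ID 10), `uplink_ingress` places an untagged incoming packet in VLAN10, and `uplink_egress_tag` returns `None` for VLAN10 and 20 for VLAN20.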

Virtual networks with VLAN ID used in tagged mode

While normally the VLAN IDs of packets that leave the IBP on the server

blade side are removed, it is possible to use a VLAN network in tagged

mode. This means that all packets retain their VLAN tag when they are transmitted to the server blade. Packets that arrive on the server blade side of the

IBP must have a VLAN tag with the corresponding VLAN ID if they are to be

transferred to this VLAN network in tagged mode.

Packets without a VLAN tag are dropped unless there is a VLAN network in

untagged mode associated with the same downlink.

Figure 7: Virtual networks with VLAN ID used in tagged mode

Several VLAN networks in tagged mode can be used on the same IBP downlink port. They can also be combined with service networks.

The mode in which a VLAN network is used is controlled by network definitions in a server profile. It cannot be specified within the network settings.

The advantage of the tagged mode is that the same VLAN networks can be

used either untagged for separate server blades on different downlinks or

tagged for one server blade with separate virtual machines on one downlink.
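How packets arriving from a server blade are matched to VLAN networks on one downlink can be sketched as follows. The function and parameter names are hypothetical; the sketch assumes any number of VLAN networks in tagged mode and at most one VLAN network in untagged mode on the downlink.

```python
from typing import Dict, Optional


def downlink_ingress(tagged_networks: Dict[int, str],
                     untagged_network: Optional[str],
                     tag: Optional[int]) -> Optional[str]:
    """VLAN network that accepts a packet arriving from the server blade.

    tagged_networks maps the VLAN IDs of networks used in tagged mode on
    this downlink to their names. untagged_network is the name of the
    VLAN network used in untagged mode on the same downlink, if any.
    None means that no VLAN network on this downlink accepts the packet.
    """
    if tag is None:
        return untagged_network
    return tagged_networks.get(tag)
```

A result of `None` for a tagged packet only means that no VLAN network takes it; a service network on the same downlink may still transfer it.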

Dedicated service networks

Figure 8: Dedicated service networks

The dedicated service network is designed to separate LAN traffic of an

iRMC from the operating system LAN traffic if the iRMC is not using a separate management LAN but is configured to share its LAN traffic with an

onboard LAN port of the server blade. In order to separate the LAN traffic of

iRMC and operating system in this case, the iRMC must also be configured

to use a VLAN tag for its LAN packets. A dedicated service network defined

with the same VLAN ID as used by the iRMC allows the tagged iRMC LAN

packets to be routed to specific uplink port(s) (external port(s)), whereas the

other LAN packets from the operating system are routed to a separate uplink

port.

In addition, the dedicated service network can also be used to route the LAN

packets of a virtual NIC defined in the operating system running on the

server blade to specific uplink ports. In order to do this, the virtual NIC in the

operating system must be configured to send all packets with a VLAN tag.

The same VLAN tag must be specified when defining the dedicated service

network that is to transport these packets.

The behavior of a dedicated service network is such that it receives tagged

packets from the server blade, but the tags are stripped when they leave the

uplink port. Incoming untagged packets at the uplink port are tagged and sent

to the corresponding downlink ports (internal ports)/blade server as tagged

packets. Incoming tagged packets at uplink ports are dropped.

Note that dedicated service networks may overlap on the downlink ports with

single networks, VLAN networks, other dedicated service networks, and

Service VLAN networks (explained below). The untagged packets received

from the server blade or uplink port should obey the rule of the single network

or VLAN network that overlaps with the dedicated service network.

The VLAN tags of the overlapping VLAN networks, dedicated service networks and Service VLAN networks must be different.

Dedicated service networks cannot overlap with any other network at the

uplink ports. This means the uplink ports of a dedicated service network can

only be assigned to this dedicated network.
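The tag handling of a dedicated service network can be sketched in both directions. The function names and the result tuple are hypothetical; the first element states whether the dedicated service network forwards the packet, the second is the VLAN tag on the forwarded packet (`None` for untagged).

```python
from typing import Optional, Tuple

# (forwarded by this network?, VLAN tag on the outgoing packet)
Result = Tuple[bool, Optional[int]]


def blade_to_uplink(service_vid: int, tag: Optional[int]) -> Result:
    """Packet sent by the server blade (e.g. by the iRMC).

    Only packets tagged with the VLAN ID of the dedicated service network
    belong to it; the tag is stripped before the packet leaves the uplink.
    """
    if tag == service_vid:
        return (True, None)
    return (False, None)


def uplink_to_blade(service_vid: int, tag: Optional[int]) -> Result:
    """Packet arriving at an uplink port of the dedicated service network.

    Untagged packets are tagged with the service VLAN ID and sent to the
    downlink ports; tagged packets are dropped.
    """
    if tag is None:
        return (True, service_vid)
    return (False, None)
```

Packets from the server blade that this network does not forward are not necessarily lost; untagged packets follow the rules of the overlapping single network or VLAN network.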

Service VLAN networks

Figure 9: Service VLAN networks

The Service VLAN networks are designed to separate LAN packets of multiple virtual NICs defined in the operating system running on the server blade

and route them to specific uplink (external) ports. To do this, the different virtual NICs in the operating system must be configured to send their packets

with a VLAN tag that is identical to the Service VLAN ID of the Service

VLAN network.

The behavior of a Service VLAN network is such that it receives tagged packets with the Service VLAN ID from the server blade and forwards them to

uplink ports as tagged packets. The LAN packets leave the IBP tagged at

the uplink ports. Incoming tagged packets with the Service VLAN ID (at the

uplink port) are sent to the corresponding downlink (internal) ports/blade

servers as tagged packets.

Note that Service VLAN networks may overlap on the downlink ports (with

single networks, VLAN networks, dedicated service networks and other Service VLAN networks). The untagged packets received from the server blade or

uplink port should obey the rule of the overlapping single network or VLAN

network.

The VLAN tags of the overlapping VLAN networks, dedicated service networks and Service VLAN networks must be different.

Different Service VLAN networks may share the same uplink ports. If the

port that is member of the Service VLAN network receives tagged packets

with the Service VLAN ID (SVID) of a specific Service VLAN network, these

received tagged packets will be forwarded based on the definition of this Service VLAN network. The Service VLAN networks with disjoint uplink sets may

have identical SVIDs.

Service VLAN networks may also share the same uplink ports with VLAN

networks. The VLAN tag of Service VLAN networks and VLAN networks

sharing the same uplink ports must be different.
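Two of the rules above lend themselves to a short sketch: a Service VLAN network passes packets with its SVID through unchanged in both directions, and networks that overlap on a port must use different VLAN tags. The function names and the example network names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional


def service_vlan_forward(svid: int, tag: Optional[int]) -> Optional[int]:
    """VLAN tag of the packet after it has crossed the IBP, or None if this
    Service VLAN network does not forward it.

    The rule is the same in both directions: only packets tagged with the
    SVID are forwarded, and they keep their tag.
    """
    return tag if tag == svid else None


def conflicting_vlan_ids(networks: Dict[str, int]) -> List[int]:
    """VLAN IDs used by more than one of the networks that overlap on a
    port. networks maps network names to VLAN IDs (or SVIDs)."""
    counts = Counter(networks.values())
    return sorted(vid for vid, count in counts.items() if count > 1)
```

An empty list from `conflicting_vlan_ids` means that the overlapping VLAN networks, dedicated service networks and Service VLAN networks all use different tags.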

2.5 Server profiles

A server profile contains the following VIOM-specific parameters:

1. Defining the connection in external networks (see section "Defining

network paths (LAN)" on page 277), only for blade servers

2. Defining the physical identity in the form of I/O addresses (MAC, WWN)

3. Defining the boot devices with parameters

To activate a server profile of this type, it must be assigned to a server blade

slot or a PRIMERGY rack server.

For a blade server, it can be moved to another slot if required (e. g. in the

event of server blade failure). The server blade in another slot thereby

assumes the identity of the previous server blade. In this way, server profiles

allow the available blade hardware to be used flexibly.

In this context, VIOM provides the option to define a slot as a spare slot. If a

problem occurs or maintenance work needs to be carried out, you can trigger

a server profile failover, which searches for a suitable spare server blade that

will assume the tasks of the failed server blade.

To use server profiles, you must do the following in the Virtual-IO Manager:

1. Define a server profile

2. Assign the profile to a slot or a PRIMERGY rack server

2.5.1 Defining server profiles

A server profile consists of a set of VIOM-specific parameters.

These include:

l (Virtual) MAC addresses and WWN addresses

l Boot parameters

l For blade servers only: LAN connections for the I/O channels of a server

blade

The server profiles are stored centrally and independently of hardware under

a user-defined name in a server profile repository on the central management

station.

2.5.2 Assigning server profiles

The server profiles that are stored in the central server profile repository can

be assigned to the slots of a blade server or to a PRIMERGY rack server

using VIOM. In order to do this, the blade server or PRIMERGY rack server

must be managed by VIOM. In addition, you must switch off the server blade

in the corresponding slot or the PRIMERGY rack server in order to assign a

server profile to this slot or PRIMERGY rack server.

For blade servers, a server profile can also be assigned to an empty slot. A

slot can thus be prepared for use at a later date. Using virtual addressing, you

can, e. g. quickly replace a faulty server blade by preconfiguring another

server blade without changing the configuration.

2.5.3 Dedicated LAN connections (only for blade servers)

You can assign each I/O port of a server blade to an explicit network in the

server profile. As a server profile is not connected to any hardware, only the

network name is recorded in it.

If a server profile is assigned to a slot, the downlinks connected to the I/O

channels of the slot are added to the IBP modules in the specified network.

The networks explicitly named in the server profile must be configured beforehand in the affected IBP modules.

If non-VIOM capable LAN modules are installed (Open Fabric mode), you

cannot set any dedicated LAN connections (paths). In this case, you must

work with profiles whose I/O ports do not contain any network assignment.
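Because a server profile records only network names, it is useful to check before an assignment that every named network exists on the target chassis. The following sketch shows such a check; the function name and the data layout are hypothetical and do not describe how VIOM performs it.

```python
from typing import Dict, List, Optional, Set


def missing_networks(profile_ports: Dict[str, Optional[str]],
                     configured: Set[str]) -> List[str]:
    """Networks named in a server profile that are not configured in the
    IBP modules of the target chassis.

    profile_ports maps each I/O port of the profile to a network name, or
    to None if the port has no network assignment.
    """
    return sorted({name for name in profile_ports.values()
                   if name is not None and name not in configured})
```

A profile whose I/O ports carry no network assignment always yields an empty list, which corresponds to the case of non-VIOM capable LAN modules described above.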

2.5.4 Virtualizing I/O parameters

Virtualizing the physical server identity in the form of physical MAC addresses, WWN addresses and boot parameters is a key function of the ServerView Virtual-IO Manager software.

By defining virtual I/O addresses and boot parameters as part of a server profile, you can easily move an operating system image or an application from

one server blade or PRIMERGY rack server to another.

The following basic I/O parameters belong to the virtualization parameters:

l Virtual MAC address (LAN)

l Virtual WWN addresses (Fibre Channel)

You can also define the iSCSI boot parameters for LAN ports which are

defined as iSCSI boot devices. For each Fibre Channel HBA port the following SAN boot configuration parameters can be virtualized:

l Boot

l 1st target port name (WWPN of the target device)

l 1st target LUN

l 2nd target port name (WWPN of the target device)

l 2nd target LUN

Blade Servers

The virtualization I/O parameters of all the server blades of a chassis are

stored in a specific table in the management blade (MMB) of this blade

server. When a server blade is powered on, checks are run in the boot phase to

determine whether virtualization parameters are defined in the MMB table for

this server blade slot. These parameters are transferred to the I/O adapters

so that the virtualized addresses are used in the same way as the physical

addresses assigned by the manufacturer. This ensures that no changes need

to be made if a server blade is exchanged or a server profile moved.

If a server blade or a mezzanine card is removed from a blade server and

inserted in the slot of another blade server that is not managed by VIOM, then

the physical I/O addresses assigned by the manufacturer will be used automatically. The same applies if the virtualization of the I/O addresses for a slot

is switched off, e. g. if the corresponding server profile is moved.

If the central management server is switched off or the connection between

the management station and the management blade is interrupted, all the

blade servers use the configuration last defined.

Once the connection to the external networks is configured and the server

profiles assigned with virtualization parameters by the ServerView Virtual-IO

Manager, the management station does not necessarily have to run with the

Virtual-IO Manager software. To operate the "virtualized" blade server chassis, the software is not required.

PRIMERGY rack servers

The virtualization I/O parameters of a PRIMERGY rack server are stored in a

specific table in the baseboard management controller (iRMC) of the server.

When a PRIMERGY rack server is powered on, checks are run in the

boot phase to determine whether virtualization parameters are defined in the

iRMC table. These parameters are transferred to the I/O adapters so that virtualized addresses are used in the same way as the physical addresses

assigned by the manufacturer.

If the virtualization of the I/O addresses for a slot is switched off, e. g. if the

corresponding server profile is unassigned, the physical I/O addresses

assigned by the manufacturer will automatically be reactivated in the next

boot phase.

The iRMC of a PRIMERGY rack server loses the virtualization I/O parameter

table during a power failure, so the table has to be rewritten by ServerView Virtual-IO Manager before the server is powered on again. This restoration is done automatically, but it requires that the management station

be kept running as long as PRIMERGY rack servers are managed. For further information, see "VIOM-internal operations on a PRIMERGY rack

server" on page 266.

2.6 Server profile failover (for blade servers only)

If a problem occurs or maintenance work needs to be carried out, VIOM provides the option to move the server profiles from the affected server blade to

a suitable server blade within the same blade server.

In order to do this, you must define spare slots that assume the tasks of the

other server blade in such a case. It is advisable to install server blades at

the spare slots so that they are available if a problem occurs or maintenance

work needs to be carried out. A failover of this type can only take place if the

server blade on which the failover is to take place is switched off.

If a server blade fails, for example, you launch the failover function via the

context menu of the corresponding server blade. VIOM then searches for a

spare slot that has a server blade to which the server profile can be assigned.

Once such a slot has been found, the profile assignment on the affected

server blade is deleted, and the server profile is assigned to the new server

blade. The new server blade thus assumes the role of the failed server blade

including the network addresses.
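The search for a spare slot can be sketched as follows. The `Slot` class and the `failover` function are hypothetical. The sketch assumes that both the affected server blade and the spare server blade must be switched off, and that the first suitable spare slot is used; the selection order applied by VIOM is not described here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Slot:
    """Hypothetical model of a chassis slot."""
    number: int
    is_spare: bool = False
    blade_installed: bool = False
    powered_on: bool = False
    profile: Optional[str] = None


def failover(slots: List[Slot], failed: int) -> Optional[int]:
    """Move the profile of the failed slot to a suitable spare slot.

    Returns the number of the spare slot that took over the profile, or
    None if no failover took place.
    """
    source = {slot.number: slot for slot in slots}[failed]
    if source.profile is None or source.powered_on:
        return None
    for candidate in slots:
        if (candidate.is_spare and candidate.blade_installed
                and not candidate.powered_on and candidate.profile is None):
            # Delete the assignment on the affected slot and assign the
            # profile to the spare slot.
            candidate.profile, source.profile = source.profile, None
            return candidate.number
    return None
```

In this model, a spare slot without an installed server blade is skipped, which reflects the advice to install server blades at the spare slots.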

The Virtual-IO Manager does not make any changes to the boot

image in a SAN and does not clone any disk images to the local hard

disk of the replacement server blade.

2.7 High-Availability (HA) support

VIOM supports the following high-availability environment:

l Windows 2008 R2 Hyper-V cluster with ServerView Operations Manager

and ServerView Virtual-IO Manager installed on a virtual machine

with Windows Server operating system.

l VMware HA with ServerView Operations Manager and ServerView

Virtual-IO Manager installed on a virtual machine with Windows Server operating system.

This means that the ServerView management station is a virtual machine running on a Windows 2008 Hyper-V cluster or in a VMware HA environment.

High availability of Hyper-V cluster

The following Hyper-V high-availability configurations will be supported:

Operating system                                             Admin server if HA
                                                             Guest OS  Hypervisor

Windows Server 2003 R2 Enterprise (x86, x64) SP2 or higher   yes       ---

Windows Server 2003 R2 Standard (x86, x64) SP2 or higher     yes       ---

Windows Server 2008 R2 Datacenter [*]                        yes       yes [Hyper-V]

Windows Server 2008 R2 Enterprise [*]                        yes       yes [Hyper-V]

Windows Server 2008 R2 Standard [*]                          yes       yes [Hyper-V]

Windows Server 2008 R2 Foundation [*]                        yes       yes [Hyper-V]

Windows Server 2008 Standard (x86, x64) [*]                  yes       yes [Hyper-V] (only x64)

Windows Server 2008 Enterprise (x86, x64) [*]                yes       yes [Hyper-V] (only x64)

Figure 10: Supported Hyper-V high-availability configurations

[*] The Windows Server Core Installation option is not supported for admin

server and guest OS on VM.

To set up the Windows 2008 Hyper-V cluster and the virtual machine that will

be controlled from it, see the Microsoft instructions at:

http://technet.microsoft.com/en-us/library/cc732181%28v=ws.10%29.aspx

If there is a fault in the Hyper-V cluster node, the Microsoft cluster will perform a failover action of the Hyper-V environment to the other cluster node

and restart the virtual machine that is acting as the ServerView Suite management station.

Figure 11: Failover action of the Hyper-V environment to the other cluster node

In the failover clustering of the Hyper-V environment, ServerView

supports the cold migration of virtual machines.

To set up the Hyper-V cluster, proceed as follows:

On the primary node:

1. Connect with shared storage.

2. Configure BIOS.

3. Install Hyper-V roles.

4. Install and configure EMC Solutions Enabler (if used).

5. Add a failover clustering function.

6. Create a Hyper-V virtual network.

7. Create clusters.

8. Prepare virtual machines.

9. Register virtual machines in clusters.

10. Install and configure storage management software.

11. Install and configure VM management software.

12. Install and configure ServerView Operations Manager and ServerView

Virtual-IO Manager.

On the secondary node:

1. Connect with shared storage.

2. Configure BIOS.

3. Install Hyper-V roles.

4. Install and configure EMC Solutions Enabler (if used).

5. Add a failover clustering function.

6. Create a Hyper-V virtual network.

7. Install Hyper-V roles.

8. Add a failover clustering function.

9. Create a Hyper-V virtual network.

10. Create clusters.

11. Prepare virtual machines.

12. Register virtual machines in clusters.

13. Operate the management station in a cluster.

For details of items 7 to 13, refer to the Hyper-V manual.

If an error occurs on a VM guest, the operation will continue if the VM guest is

switched over.

High availability of VMware HA

To make use of the high-availability functionality of VMware HA, you must

use the operating system VMware Infrastructure 3 with the two concepts

Cluster and Resource Pool.

Page 52

Figure 12: Architecture and typical configuration of VMware Infrastructure 3

Page 53

Figure 13: Host failover with VMware HA

VMware HA links up multiple ESX/ESXi servers to form a cluster with

shared resources. If one host fails, VMware HA reacts immediately by

restarting any affected virtual machine on a different host. The cluster is

created and managed via VirtualCenter.

For a detailed description of the high-availability functionality with VMware

HA, visit http://www.vmware.com/pdf/vmware_ha_wp.pdf.

HA functionality supported by Virtual-IO Manager

Virtual-IO Manager supports HA functionality through the following measures:

• The central ServerView Virtual-IO Manager service is configured to be

restarted automatically in the event of failure. This automatic restart of

the service is configured during installation of Virtual-IO Manager. By

default the following restart behavior is configured:

o The first restart of the service is tried 5 seconds after unexpected termination of the service.

o The second restart is tried 30 seconds after termination of the service.

o Subsequent restarts are tried 60 seconds after termination of the service.

o The restart counter is reset after 600 seconds.

Page 54

The details of this configuration cannot be seen via the normal graphical user interface of the service manager. To see

the details, you must use the command line interface of the

service manager. The command sc qfailure ServerViewVirtualIOManagerService

displays the current configuration, where ServerViewVirtualIOManagerService is

the name of the service.

• The ServerView Virtual-IO Manager Backup service is also configured

during installation for automatic restart in the event of failure.

By default the Virtual-IO Manager Backup service is not configured to start automatically, as it requires configuration.

By default the following restart behavior is configured:

o The first restart of the service is tried 5 seconds after unexpected termination of the service.

o The second restart is tried 30 seconds after termination of the service.

o Subsequent restarts are tried 120 seconds after termination of the service.

o The restart counter is reset after 600 seconds.

The details of this configuration cannot be seen via the normal graphical user interface of the service manager. To see

the details, you must use the command line interface of the

service manager. The command sc qfailure ServerViewVirtualIOBackupService

displays the current configuration, where ServerViewVirtualIOBackupService is

the name of the service.

• If the Virtual-IO Manager is interrupted during a configuration request (for

example, creation of networks in an IBP connection blade or assignment

of a VIOM server profile) while executing configuration commands on

hardware modules, Virtual-IO Manager will undo the changes already

made the next time the service starts. This means that, when the

Page 55

service has restarted, the configuration should be the same as it was just

before the interrupted request.

Some configuration actions of the Virtual-IO Manager user

interface consist of several “independent” internal configuration requests. The Virtual-IO Manager can only undo

the last internal configuration request.

If Virtual-IO Manager successfully executes all necessary

changes for a request but is just interrupted while sending

the response to the Virtual-IO Manager client, the changes

will not be undone.

• The transaction concept of the Virtual-IO Manager should allow you to

restart the service and execute the described undo actions, provided that the database has not been corrupted by the SQL database service used.
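The default restart behavior listed above for the two services can be summarized in a short sketch. The following Python fragment is an illustration only and is not part of the product: the constant and function names are assumptions made for this example, while the delay values, the 600-second reset period, the service names and the sc qfailure command are taken from the description above.

```python
import subprocess

# Default restart delays in seconds, as listed in this section.
MANAGER_SERVICE = "ServerViewVirtualIOManagerService"
BACKUP_SERVICE = "ServerViewVirtualIOBackupService"
MANAGER_DELAYS = (5, 30, 60)    # first, second, subsequent restarts
BACKUP_DELAYS = (5, 30, 120)    # first, second, subsequent restarts
RESET_PERIOD = 600              # seconds after which the restart counter is reset


def restart_delay(failure_number, delays):
    """Return the delay before the restart that follows the given failure.

    failure_number is 1 for the first unexpected termination, 2 for the
    second, and so on. Every failure beyond the listed ones uses the last
    delay ("subsequent restarts").
    """
    if failure_number < 1:
        raise ValueError("failure_number must be 1 or greater")
    index = min(failure_number, len(delays)) - 1
    return delays[index]


def qfailure_command(service_name):
    """Build the service manager command that displays the configuration."""
    return ["sc", "qfailure", service_name]


def query_failure_config(service_name):
    """Run sc qfailure on a Windows-based CMS and return its text output."""
    result = subprocess.run(
        qfailure_command(service_name), capture_output=True, text=True
    )
    return result.stdout
```

For example, restart_delay(3, BACKUP_DELAYS) yields 120, and query_failure_config(MANAGER_SERVICE) returns the text that sc qfailure prints when it is run on the Windows-based management station.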

What Virtual-IO Manager does not do

Virtual-IO Manager does not control the availability of the ServerView Virtual-IO Manager service. It also does not check the availability of the virtual

machine that is used as the ServerView Suite management station. The

latter should be done by the Microsoft Hyper-V cluster if it is correctly configured.

Page 56

Page 57

3 Installation and uninstallation

You can install the Virtual-IO Manager on a central management station

(CMS) under Windows or Linux (see section "Installing the Virtual-IO Man-

ager on a Windows-based CMS" on page 58 and "Installing the Virtual-IO

Manager on a Linux-based CMS" on page 73).

Please first check the requirements for installing the Virtual-IO Manager on

a CMS (see section "Prerequisites for the VIOM installation" on page 57).

If a previous version is already installed on the management station, an

update installation runs automatically when you install the new version. All

previous VIOM configurations and definitions remain the same (see section

"Updating the Virtual-IO Manager on a Windows-based CMS" on page 72

and "Updating the Virtual-IO Manager on a Linux-based CMS" on page 89).

If you want to use the command line interface of the Virtual-IO Manager

(VIOM CLI), you must install the VIOM CLI software package. You will find

details on how to install and use VIOM CLI in the "Virtual-IO Manager Command Line Interface" manual.

3.1 Prerequisites for the VIOM installation

The requirements for installing the Virtual-IO Manager on a central management station are as follows:

• Operating system for the central management station

o Microsoft Windows® Server™ 2003, all editions

o Microsoft Windows® Server™ 2003 R2, all editions

o Microsoft Windows® Server™ 2008, all editions

o Microsoft Windows® Server™ 2008 R2, all editions

o Linux Novell (SLES 10): SP2 and SP3

o Novell (SLES 11): SP1 and SP2

o Red Hat RHEL 5.6/5.7/5.8

o Red Hat RHEL 6, 6.1/6.2

Page 58

In Japan: Novell SLES is not supported.

ServerView Virtual-IO Manager can also be installed in a virtual machine

(VM) under Windows Hyper-V or VMware ESX server. The operating

system running on the VM must be one of the operating systems listed above and must be supported by the hypervisor used.

• Installed software packages

o ServerView Operations Manager as of Version 5.50.13

o Java Runtime Environment (JRE) version 6.0, update 31 or higher

Together with ServerView Operations Manager 6.10, it is

also possible to use JRE version 7.0, update 7 or higher.

• Firewall settings

o Port 3172 must be opened for TCP/IP connection to Remote Connector Service.

o Port 162 must be opened to receive SNMP traps from iRMC when

managing PRIMERGY rack servers.
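Whether port 3172 is reachable through the firewall can be checked with a simple connection test. The following Python sketch is an illustration only and is not part of the product: the function and constant names are assumptions made for this example, and the host name must be supplied by the caller. SNMP traps are normally sent over UDP, so this TCP test does not cover port 162.

```python
import socket

# TCP port named in the firewall settings above (Remote Connector Service).
REMOTE_CONNECTOR_PORT = 3172


def tcp_port_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established.

    A refused connection, an unreachable host, a name resolution failure
    or a timeout all result in False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A call such as tcp_port_reachable("managed-node.example", REMOTE_CONNECTOR_PORT), where the host name is a placeholder, returns True only if the connection attempt succeeds within the timeout.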

You can also obtain the current requirements from the release notes. You can find

the release notes, for example, on a Windows-based management station under Start

- [All] Programs - Fujitsu - ServerView Suite - Virtual-IO Manager Release Notes.
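The JRE requirement stated in the prerequisites can be expressed as a small check. The following Python sketch is an illustration only and rests on several assumptions: it reads the requirement as "JRE 6 with update 31 or higher, or JRE 7 with update 7 or higher when used together with ServerView Operations Manager 6.10", it expects the version in the legacy form 1.6.0_31 as reported by java -version for these releases, and all function names are chosen for this example. The release notes remain the authoritative source.

```python
import re

# Legacy Java version strings look like "1.6.0_31" (JRE 6, update 31).
_LEGACY_VERSION = re.compile(r"^1\.(\d+)\.0_(\d+)$")


def parse_jre_version(version):
    """Split a legacy version string into (major, update) integers."""
    match = _LEGACY_VERSION.match(version.strip())
    if match is None:
        raise ValueError("unsupported version format: %r" % version)
    return int(match.group(1)), int(match.group(2))


def jre_meets_viom_requirement(version, with_svom_6_10=False):
    """Check a JRE version against the requirement as read above.

    with_svom_6_10 states whether ServerView Operations Manager 6.10 is
    installed; only then is JRE 7 accepted in this sketch.
    """
    major, update = parse_jre_version(version)
    if major == 6:
        return update >= 31
    if major == 7:
        return with_svom_6_10 and update >= 7
    # Other major versions are not mentioned in the prerequisites.
    return False
```

With these assumptions, jre_meets_viom_requirement("1.6.0_31") is accepted, whereas "1.7.0_07" is accepted only when with_svom_6_10 is set to True.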

3.2 Installing the Virtual-IO Manager on a Windows-based CMS

The corresponding software is supplied with the PRIMERGY ServerView

Suite DVD 1. You can find the entire software for the PRIMERGY ServerView Suite under ServerView Software Product Selection. To find the

Virtual-IO Manager software package SV_VIOM.exe in this product selection,

choose ServerView – Virtual-IO Manager.

Page 59

3.2.1 Installing the Virtual-IO Manager using a graphical interface

Installation process

1. Insert the PRIMERGY ServerView Suite DVD 1 in the DVD-ROM drive.

If the DVD does not start automatically, click the setup.exe file in the

root directory of the DVD-ROM.

2. Select the option ServerView Software Products.

3. Click Start.

4. In the next window, select the required language.

5. Select ServerView – Virtual-IO Manager.

6. Double-click SV_VIOM.exe. The installation wizard is launched.

After a number of parameters of the existing operating system base have been determined, the following window is displayed: