
User’s manual

FLIR X6570sc series


#T810204; r. AA/43079/43091; en-US


Table of contents

1 Disclaimers ........ .. .. .. ................................. .. .. .. ................................. 1

1.1 Legal disclaimer ....................................................................... 1

1.2 Usage statistics ........................................................................1

1.3 Changes to registry ...................................................................1

1.4 U.S. Government Regulations...................................................... 2

1.5 Copyright ................................................................................2

1.6 Quality assurance .....................................................................2

1.7 Patents...................................................................................2

1.8 Third-party licenses...................................................................2

1.8.1 GNU Lesser General Public License (LGPL) ........................ 2

1.8.2 Fonts (Source Han Sans)................................................. 2

1.8.3 Fonts (DejaVu) ..............................................................3

2 Safety information .......... .. ..................................... .. ..........................4

3 Notice to user ..... .. .. .. ................................. .. .. .. ................................. 5

3.1 User-to-user forums .................................................................. 5

3.2 Calibration...............................................................................5

3.3 Accuracy ................................................................................ 5

3.4 Disposal of electronic waste........................................................ 5

3.5 Training .................................................................................. 5

3.6 Documentation updates ............................................................. 5

3.7 Important note about this manual.................................................. 5

3.8 Note about authoritative versions..................................................6

4 Customer help .............. .. .. .. ............................... .. .. .. ......................... 7

4.1 General ..................................................................................7

4.2 Submitting a question ................................................................7

4.3 Downloads ..............................................................................7

5 Introduction........ .. .. .. ................................. .. .. .. ................................. 9

5.1 Camera system components ....................................................... 9

5.2 System overview ...................................................................... 9

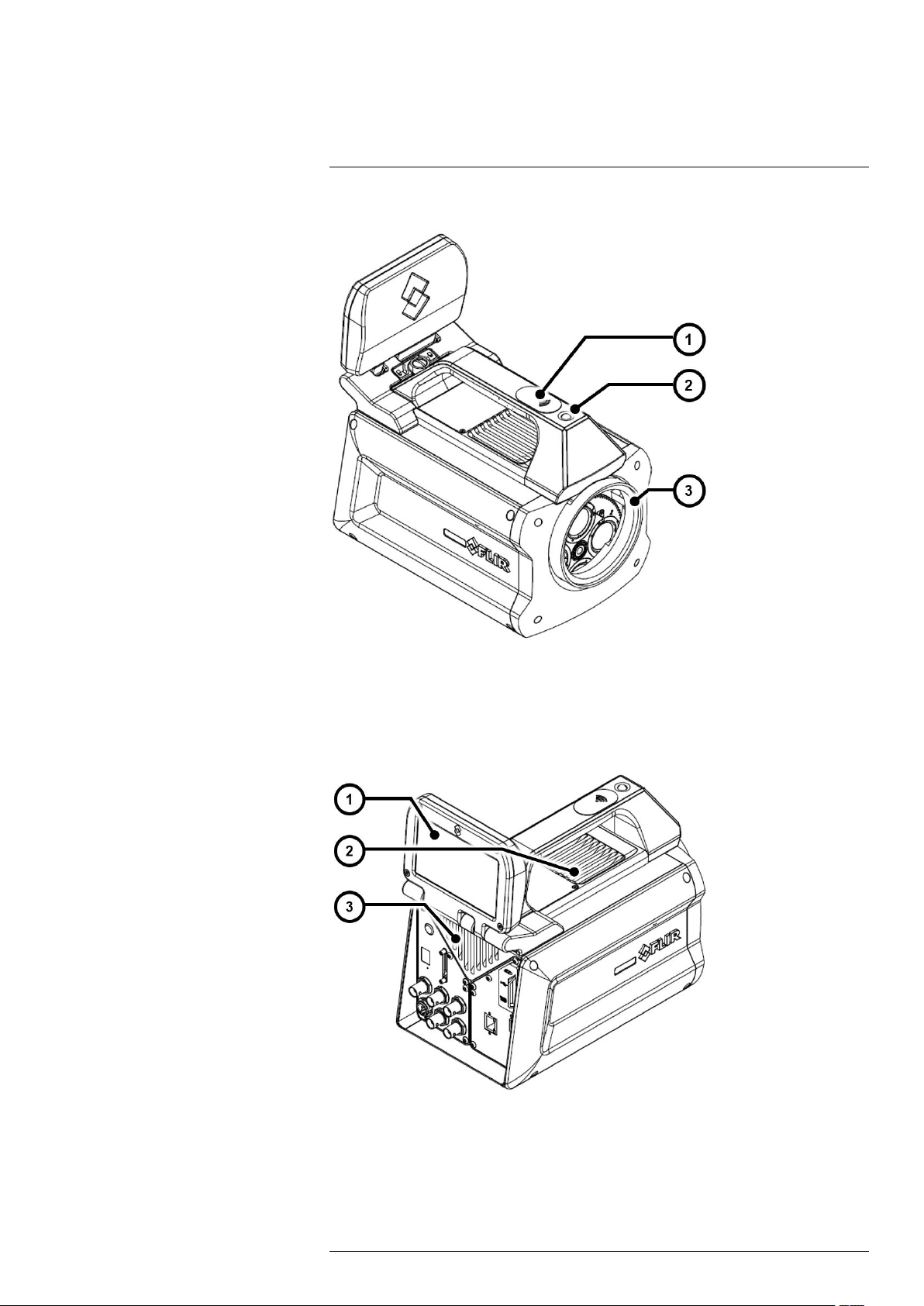

5.2.1 View from the front—M80 mount...................................... 10

5.2.2 View from the rear ........................................................ 10

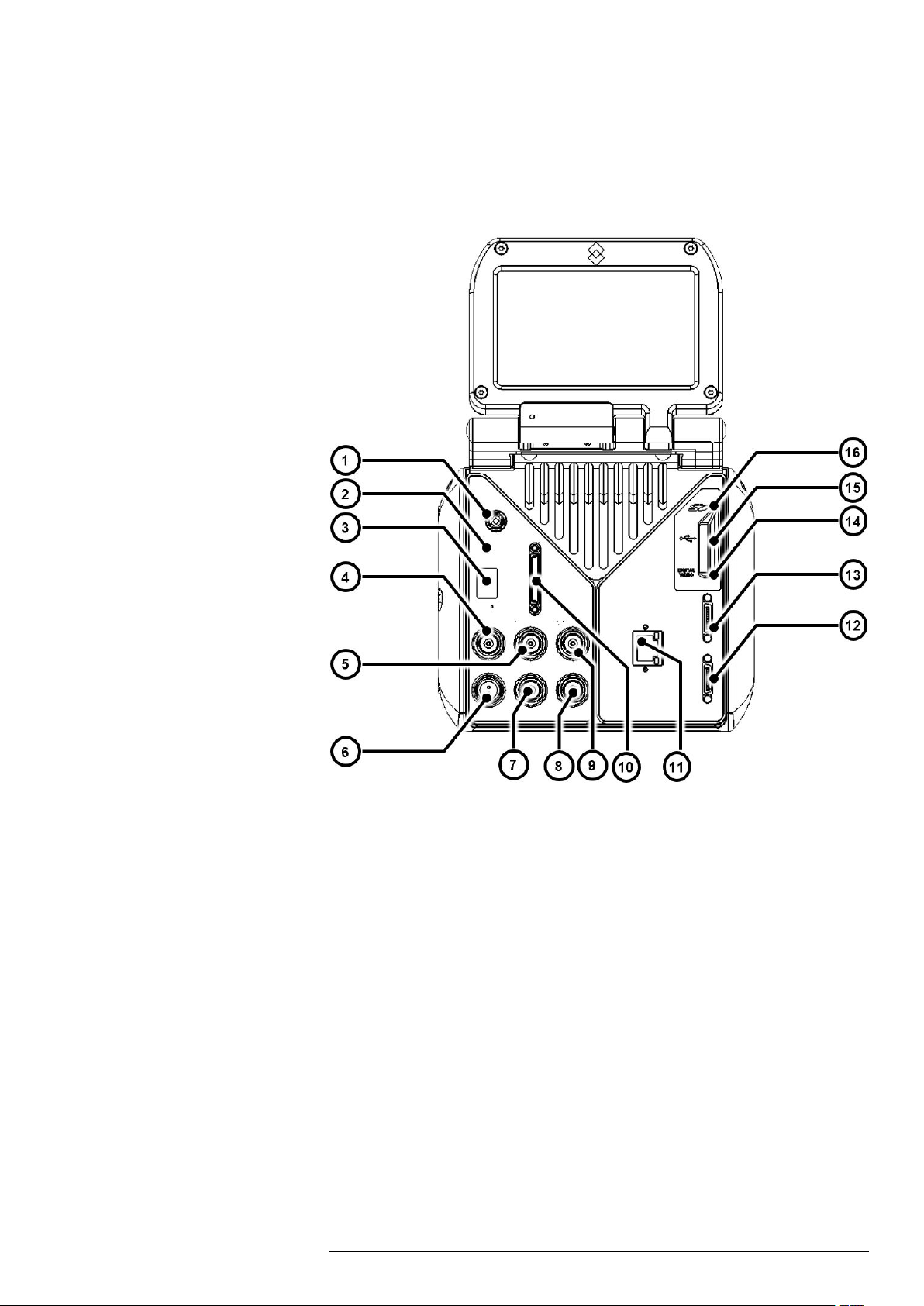

5.2.3 Back panel ................................................................. 11

5.3 Key features .......................................................................... 12

6 Installing the camera ..... .. .. .. ............................... .. .. .. ....................... 14

6.1 Mounting the camera............................................................... 14

6.2 Powering the camera ............................................................... 14

6.2.1 Power supply............................................................... 14

6.2.2 Power button............................................................... 15

6.2.3 Camera boot-up and cooling down................................... 15

6.3 Adjusting the field of view ......................................................... 15

6.3.1 LCD screen................................................................. 15

6.3.2 Lens.......................................................................... 17

6.4 Setting the camera parameters .................................................. 19

6.4.1 Connection to the computer............................................ 19

6.4.2 Connection to FLIR ResearchIR Max................................ 19

6.4.3 Image size adjustment................................................... 20

6.4.4 Measurement configuration ............................................ 21

6.4.5 Temperature range adjustment........................................ 22

6.4.6 Frame frequency.......................................................... 23

6.4.7 Synchronizing the camera to an external signal................... 23

6.4.8 Advanced camera controls ............................................. 24

6.4.9 Extended camera information ......................................... 25

7 Operation .... .. .. .. ................................. .. .. .. ................................. .. .. . 27

7.1 Filter wheel............................................................................ 27


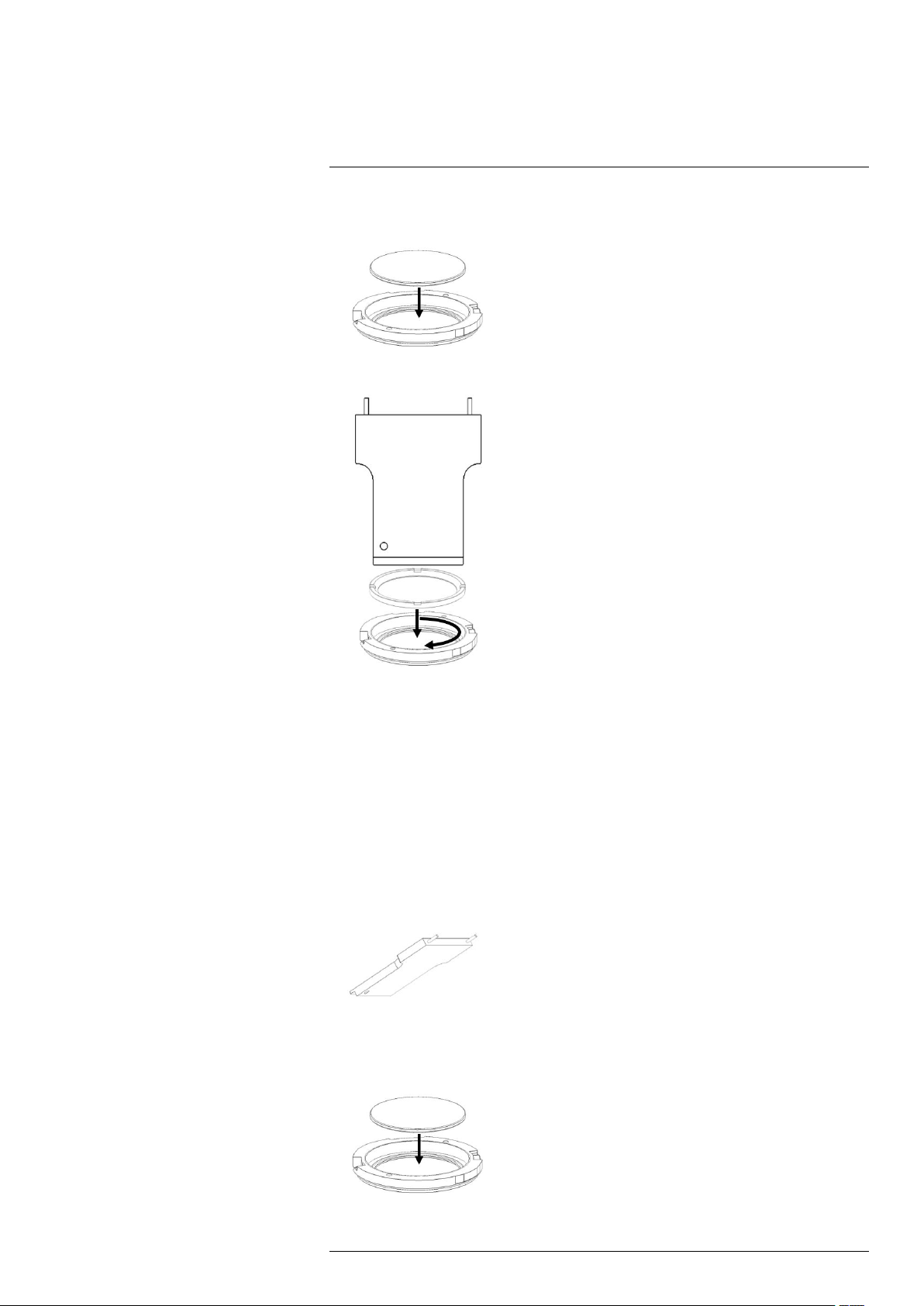

7.1.1 Removing an optical filter holder...................................... 27

7.1.2 Installing an optical filter holder........................................ 27

7.1.3 Filter holder identification ............................................... 28

7.1.4 Creating a custom filter holder......................................... 28

7.1.5 Installing two filters in the filter holder................................ 29

7.1.6 Adding a custom filter parameter into the camera ................ 30

7.1.7 Filter definition file description ......................................... 31

7.2 Camera configuration file management........................................ 32

7.2.1 CNUC file management................................................. 32

7.3 Camera Wi-Fi application ......................................................... 32

7.3.1 General...................................................................... 32

7.3.2 Procedure .................................................................. 32

7.3.3 Camera web page description......................................... 33

7.4 Infrared remote....................................................................... 33

7.4.1 General...................................................................... 33

7.4.2 Procedure .................................................................. 33

8 Radiometric measurement ................... .. .. .. ................................. .. .. . 34

8.1 Non-uniformity correction (NUC) ................................................ 34

8.1.1 General...................................................................... 34

8.1.2 CNUC........................................................................ 34

8.1.3 Two-point correction process .......................................... 34

8.1.4 One-point correction (offset correction) ............................. 35

8.2 Temperature calibration............................................................ 35

8.2.1 Hypercal .................................................................... 35

8.2.2 Auto-exposure............................................................. 35

8.3 Bad pixel replacement ............................................................. 36

8.3.1 General...................................................................... 36

8.4 Camera file management.......................................................... 36

8.4.1 Procedure .................................................................. 36

8.5 Frame rate and integration modes .............................................. 36

8.5.1 General...................................................................... 36

8.5.2 The ITR process .......................................................... 37

8.5.3 Procedure .................................................................. 39

8.6 Dynamic range extension—superframing..................................... 39

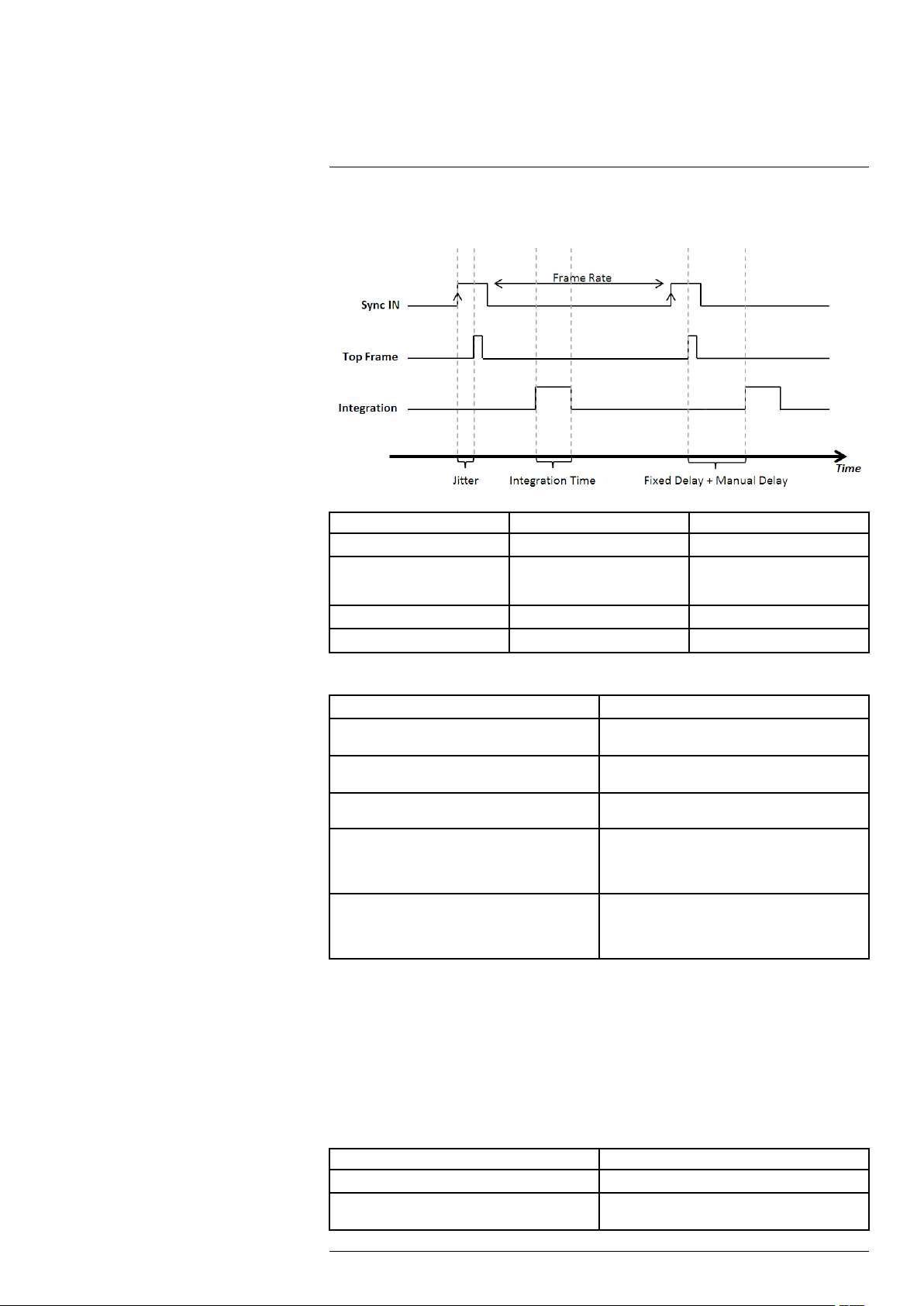

8.7 Camera synchronization........................................................... 39

8.7.1 Sync In ...................................................................... 40

8.7.2 Sync Out .................................................................... 41

8.8 Trigger In............................................................................... 42

8.8.1 General...................................................................... 42

8.8.2 Characteristics ............................................................ 43

8.8.3 LED description ........................................................... 43

8.9 Lock-in ................................................................................. 43

8.9.1 General...................................................................... 43

8.9.2 Characteristics ............................................................ 43

8.10 IRIG-B.................................................................................. 44

9 Interfaces ............................ ..................................... .. .................... 45

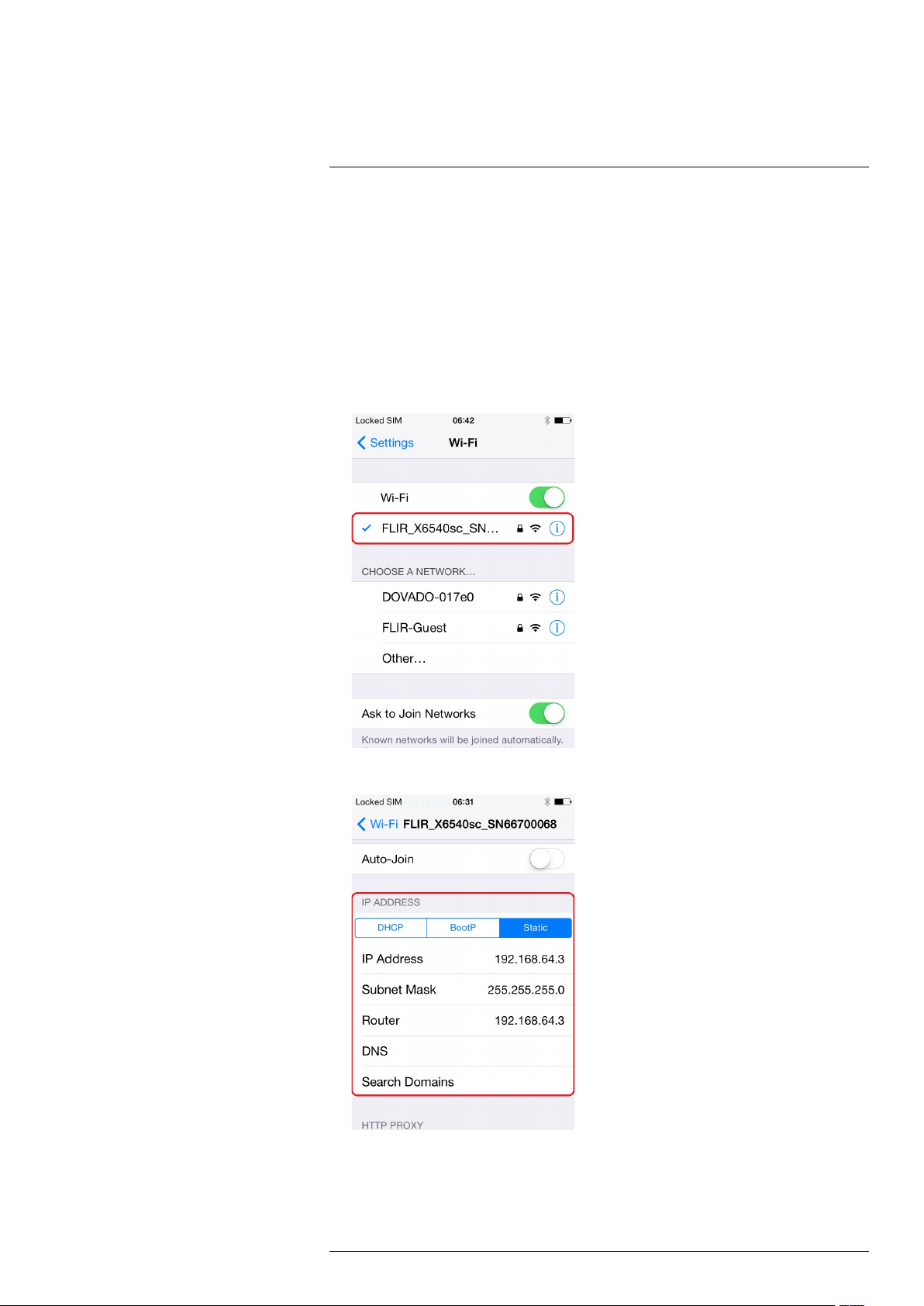

9.1 Wi-Fi connection..................................................................... 45

9.1.1 General...................................................................... 45

9.1.2 Procedure .................................................................. 45

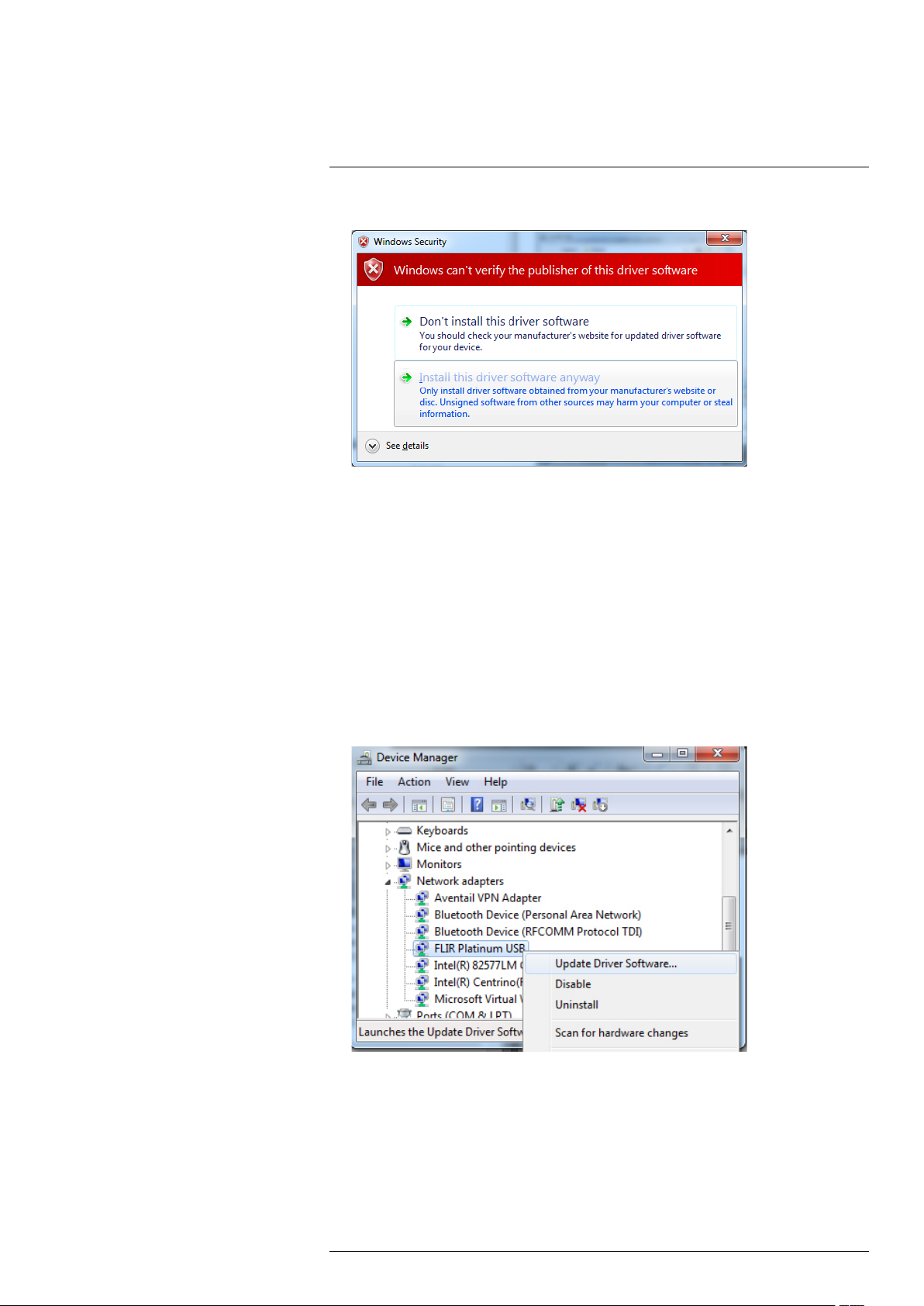

9.2 USB connection ..................................................................... 46

9.2.1 General...................................................................... 46

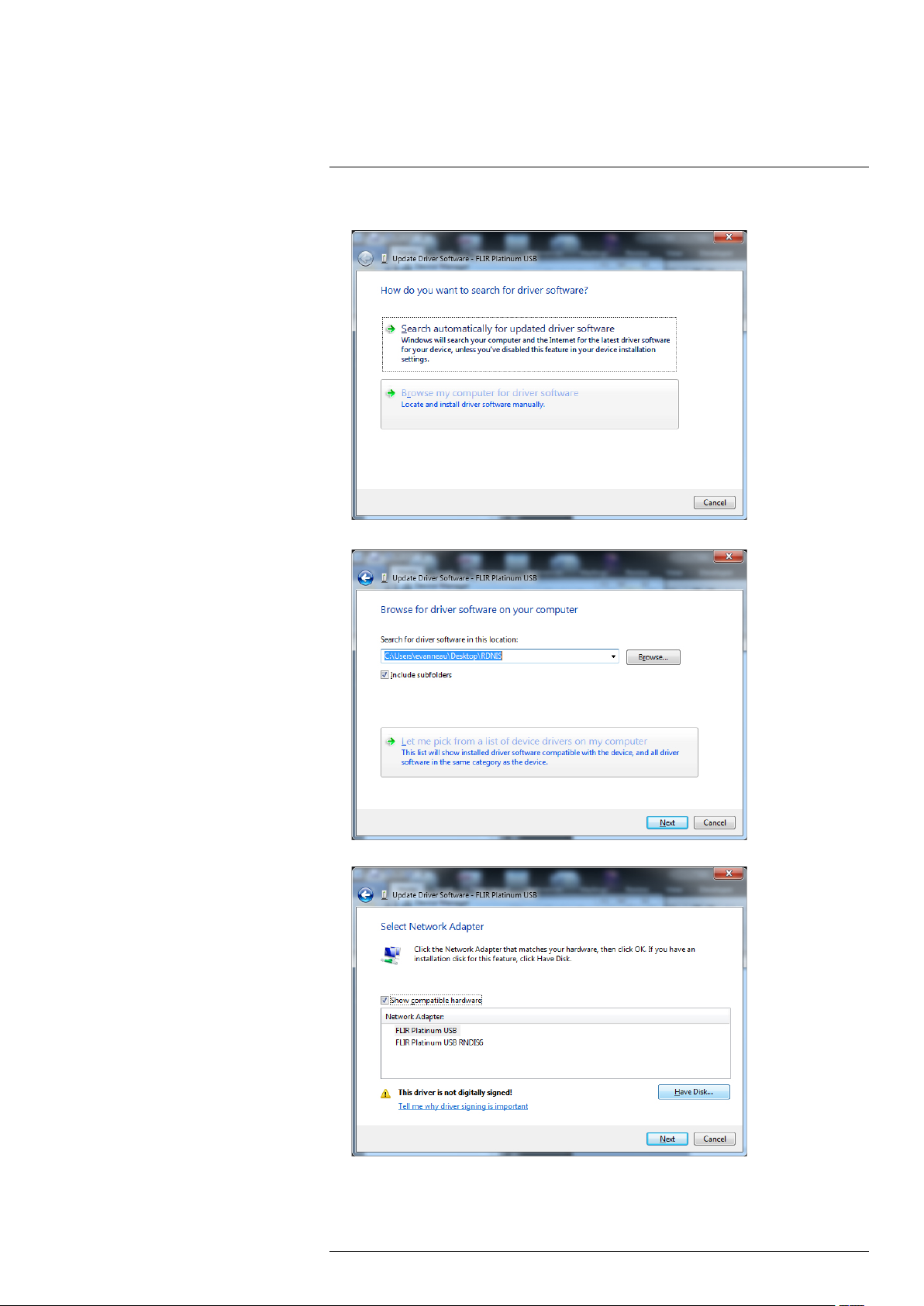

9.2.2 USB driver installation ................................................... 47

9.2.3 Configuration of the network interface ............................... 51

9.2.4 Accessing the camera files with Windows Explorer.............. 53


10 Mechanical drawings .. .. ................................. .. .. .............................. 55

11 Technical data ..... .. .. .. ................................. .. .. .. ............................... 57

11.1 Note about technical data ......................................................... 57

11.2 Note about authoritative versions................................................ 57

11.3 FLIR X6570sc ........................................................................ 58

12 Maintenance and service............................. .. .. .. ............................... 60

12.1 Cleaning the camera ............................................................... 60

12.1.1 Camera housing, cables, and other items .......................... 60

12.1.2 Infrared lens ................................................................ 60

12.2 Cooler maintenance ................................................................ 61

12.2.1 General...................................................................... 61

12.2.2 Signs to watch for......................................................... 61

13 Quality ................... .. .. .. ................................. .. .. .. ........................... 62

13.1 Quality assurance ................................................................... 62

13.2 For the US market................................................................... 62

13.3 For the Canadian market .......................................................... 62

13.4 For the whole world ................................................................. 62

14 About FLIR Systems .............. .. .. .. ................................. .. .. .. ............. 63

14.1 More than just an infrared camera .............................................. 64

14.2 Sharing our knowledge ............................................................ 64

14.3 Supporting our customers......................................................... 65

15 Terms, laws, and definitions............... .. ..................................... .. ...... 66

16 Thermographic measurement techniques .......................... .. .. .. .......... 68

16.1 Introduction .......................................................................... 68

16.2 Emissivity.............................................................................. 68

16.2.1 Finding the emissivity of a sample.................................... 68

16.3 Reflected apparent temperature ................................................. 72

16.4 Distance ............................................................................... 72

16.5 Relative humidity .................................................................... 72

16.6 Other parameters.................................................................... 72

17 About calibration........ .. .. .. ................................. .. .. .. ........................ 73

17.1 Introduction ........................................................................... 73

17.2 Definition—what is calibration? .................................................. 73

17.3 Camera calibration at FLIR Systems ........................................... 73

17.4 The differences between a calibration performed by a user and

that performed directly at FLIR Systems....................................... 74

17.5 Calibration, verification and adjustment........................................ 74

17.6 Non-uniformity correction.......................................................... 75

17.7 Thermal image adjustment (thermal tuning) .................................. 75

18 History of infrared technology................................. .. .. .. .................... 76

19 Theory of thermography.. .. ................................. .. .. .. ........................ 79

19.1 Introduction ........................................................................... 79

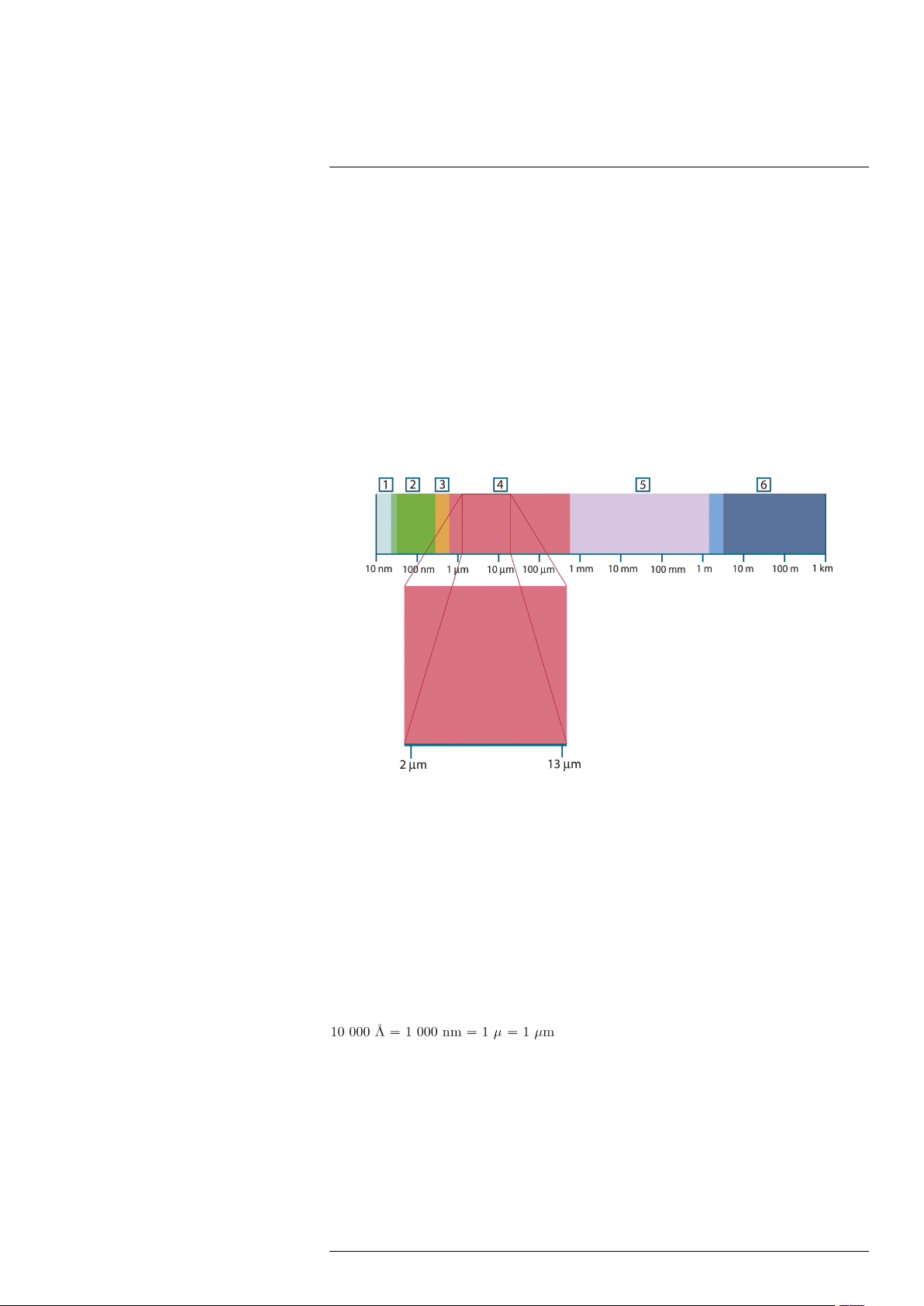

19.2 The electromagnetic spectrum................................................... 79

19.3 Blackbody radiation................................................................. 79

19.3.1 Planck’s law ................................................................ 80

19.3.2 Wien’s displacement law................................................ 81

19.3.3 Stefan-Boltzmann's law ................................................. 82

19.3.4 Non-blackbody emitters................................................. 83

19.4 Infrared semi-transparent materials............................................. 85

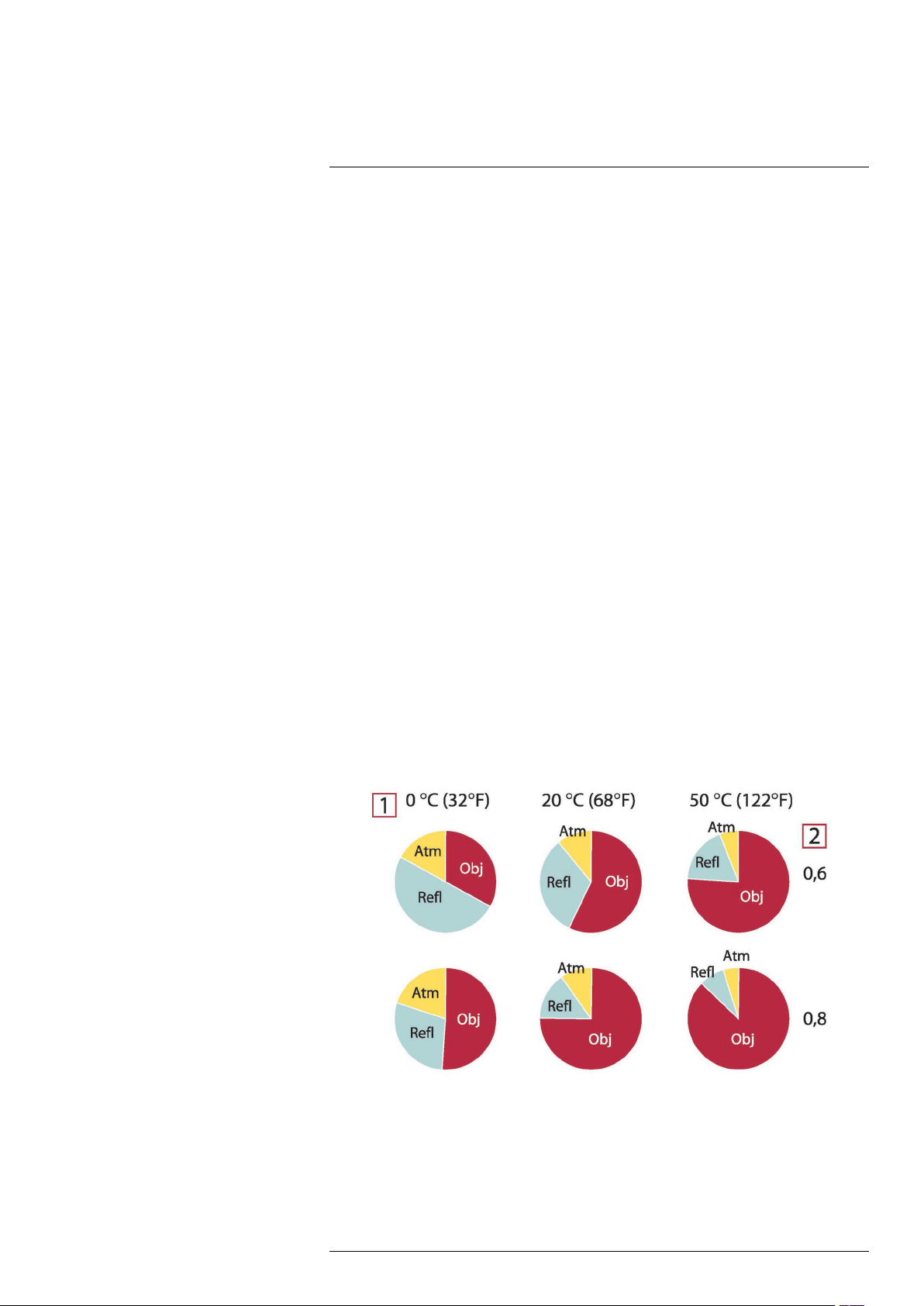

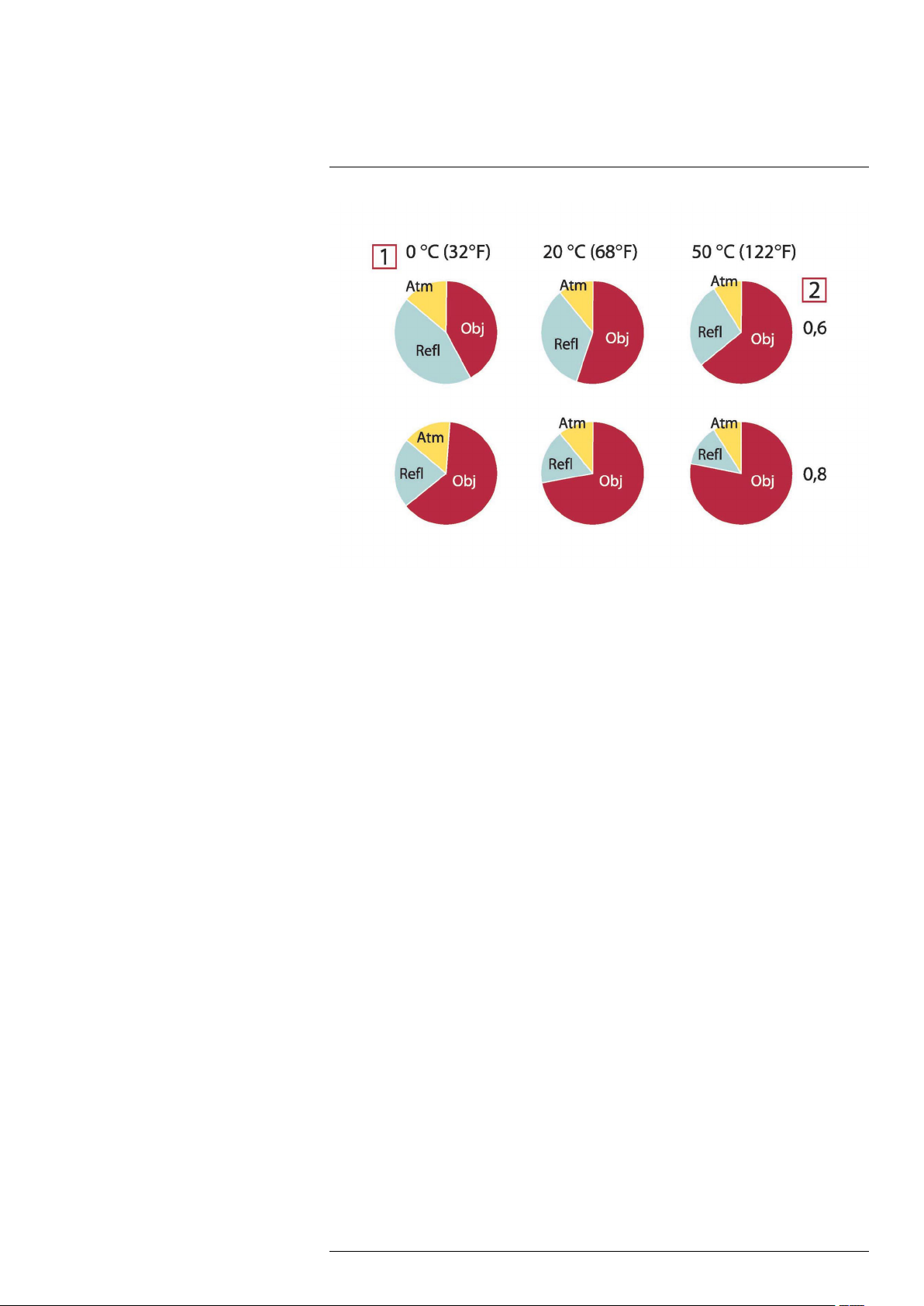

20 The measurement formula................. .. .. .. ................................. .. .. .. .. 86

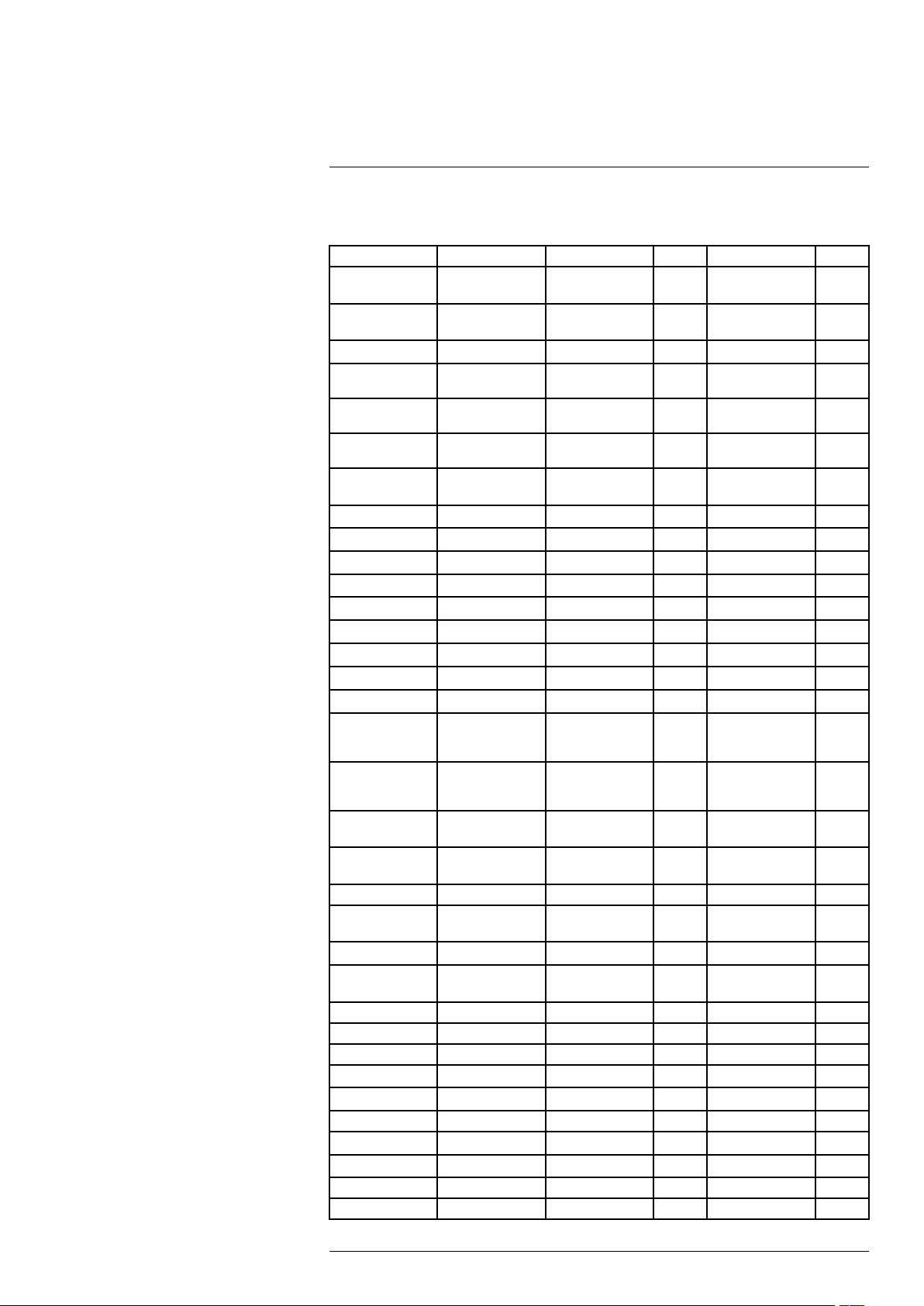

21 Emissivity tables ................................. .. .. .. ................................. .. .. . 90

21.1 References............................................................................ 90

21.2 Tables .................................................................................. 90


1 Disclaimers

1.1 Legal disclaimer

All products manufactured by FLIR Systems are warranted against defective materials and workmanship for a period of one (1) year from the delivery date of the original purchase, provided such products have been under normal storage, use and service, and in accordance with FLIR Systems instructions.

Uncooled handheld infrared cameras manufactured by FLIR Systems are warranted against defective materials and workmanship for a period of two (2) years from the delivery date of the original purchase, provided such products have been under normal storage, use and service, and in accordance with FLIR Systems instructions, and provided that the camera has been registered within 60 days of original purchase.

Detectors for uncooled handheld infrared cameras manufactured by FLIR Systems are warranted against defective materials and workmanship for a period of ten (10) years from the delivery date of the original purchase, provided such products have been under normal storage, use and service, and in accordance with FLIR Systems instructions, and provided that the camera has been registered within 60 days of original purchase.

Products that are not manufactured by FLIR Systems but are included in systems delivered by FLIR Systems to the original purchaser carry the warranty, if any, of the particular supplier only. FLIR Systems has no responsibility whatsoever for such products.

The warranty extends only to the original purchaser and is not transferable. It is not applicable to any product which has been subjected to misuse, neglect, accident or abnormal conditions of operation. Expendable parts are excluded from the warranty.

In the case of a defect in a product covered by this warranty, the product must not be used further, in order to prevent additional damage. The purchaser shall promptly report any defect to FLIR Systems or this warranty will not apply.

FLIR Systems will, at its option, repair or replace any such defective product free of charge if, upon inspection, it proves to be defective in material or workmanship and provided that it is returned to FLIR Systems within the said one-year period.

FLIR Systems has no other obligation or liability for defects than those set forth above.

No other warranty is expressed or implied. FLIR Systems specifically disclaims the implied warranties of merchantability and fitness for a particular purpose.

FLIR Systems shall not be liable for any direct, indirect, special, incidental or consequential loss or damage, whether based on contract, tort or any other legal theory.

This warranty shall be governed by Swedish law.

Any dispute, controversy or claim arising out of or in connection with this warranty shall be finally settled by arbitration in accordance with the Rules of the Arbitration Institute of the Stockholm Chamber of Commerce. The place of arbitration shall be Stockholm. The language to be used in the arbitral proceedings shall be English.

1.2 Usage statistics

FLIR Systems reserves the right to gather anonymous usage statistics to help maintain and improve the quality of our software and services.

1.3 Changes to registry

The registry entry HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Lsa\LmCompatibilityLevel will be automatically changed to level 2 if the FLIR Camera Monitor service detects a FLIR camera connected to the computer with a USB cable. The modification will only be executed if the camera device implements a remote network service that supports network logons.
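To check whether this registry change has been applied on a Windows computer, you can read the value back. The following Python sketch uses the standard-library `winreg` module; the function and constant names are hypothetical, and the short level descriptions follow Microsoft's documentation of the "Network security: LAN Manager authentication level" policy, not any FLIR software. On non-Windows systems, or when the value is not set or cannot be read, the function returns `None`.

```python
import sys
from typing import Optional

# Short descriptions of LmCompatibilityLevel values, as documented by Microsoft
# for the "Network security: LAN Manager authentication level" policy.
LM_LEVELS = {
    0: "Send LM & NTLM responses",
    1: "Send LM & NTLM, use NTLMv2 session security if negotiated",
    2: "Send NTLM response only",
    3: "Send NTLMv2 response only",
    4: "Send NTLMv2 response only, refuse LM",
    5: "Send NTLMv2 response only, refuse LM & NTLM",
}

# Registry key that holds the value (under HKEY_LOCAL_MACHINE).
LSA_KEY = r"SYSTEM\CurrentControlSet\Control\Lsa"


def read_lm_compatibility_level() -> Optional[int]:
    """Return the current LmCompatibilityLevel, or None if it cannot be read."""
    if sys.platform != "win32":
        return None  # the Windows registry does not exist on other platforms
    import winreg  # standard library, available on Windows only

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, LSA_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, "LmCompatibilityLevel")
    except OSError:
        return None  # value not set, or access denied
    return int(value)


if __name__ == "__main__":
    level = read_lm_compatibility_level()
    if level is None:
        print("LmCompatibilityLevel is not set or not readable on this system")
    else:
        print(f"LmCompatibilityLevel = {level}: {LM_LEVELS.get(level, 'unknown')}")
```

Reading the value is harmless; changing it by hand is not needed, because the FLIR Camera Monitor service makes the change itself under the conditions described above.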


1.4 U.S. Government Regulations

This product may be subject to U.S. Export Regulations. Please send any inquiries to exportquestions@flir.com.

1.5 Copyright

© 2016, FLIR Systems, Inc. All rights reserved worldwide. No part of the software, including source code, may be reproduced, transmitted, transcribed, or translated into any language or computer language in any form or by any means, electronic, magnetic, optical, manual, or otherwise, without the prior written permission of FLIR Systems.

The documentation must not, in whole or in part, be copied, photocopied, reproduced, translated, or transmitted to any electronic medium or machine-readable form without prior written consent from FLIR Systems.

Names and marks appearing on the products herein are either registered trademarks or trademarks of FLIR Systems and/or its subsidiaries. All other trademarks, trade names or company names referenced herein are used for identification only and are the property of their respective owners.

1.6 Quality assurance

The Quality Management System under which these products are developed and manufactured has been certified in accordance with the ISO 9001 standard.

FLIR Systems is committed to a policy of continuous development; therefore, we reserve the right to make changes and improvements to any of the products without prior notice.

1.7 Patents

000439161; 000653423; 000726344; 000859020; 001707738; 001707746; 001707787; 001776519; 001954074; 002021543; 002021543-0002; 002058180; 002249953; 002531178; 002816785; 002816793; 011200326; 014347553; 057692; 061609; 07002405; 100414275; 101796816; 101796817; 101796818; 102334141; 1062100; 11063060001; 11517895; 1226865; 12300216; 12300224; 1285345; 1299699; 1325808; 1336775; 1391114; 1402918; 1404291; 1411581; 1415075; 1421497; 1458284; 1678485; 1732314; 17399650; 1880950; 1886650; 2007301511414; 2007303395047; 2008301285812; 2009301900619; 20100060357; 2010301761271; 2010301761303; 2010301761572; 2010305959313; 2011304423549; 2012304717443; 2012306207318; 2013302676195; 2015202354035; 2015304259171; 204465713; 204967995; 2106017; 2107799; 2115696; 2172004; 2315433; 2381417; 2794760001; 3006596; 3006597; 303330211; 4358936; 483782; 484155; 4889913; 4937897; 4995790001; 5177595; 540838; 579475; 584755; 599392; 60122153; 6020040116815; 602006006500.0; 6020080347796; 6020110003453; 615113; 615116; 664580; 664581; 665004; 665440; 67023029; 6707044; 677298; 68657; 69036179; 70022216; 70028915; 70028923; 70057990; 7034300; 710424; 7110035; 7154093; 7157705; 718801; 723605; 7237946; 7312822; 7332716; 7336823; 734803; 7544944; 7606484; 7634157; 7667198; 7809258; 7826736; 8018649; 8153971; 8212210; 8289372; 8340414; 8354639; 8384783; 8520970; 8565547; 8595689; 8599262; 8654239; 8680468; 8803093; 8823803; 8853631; 8933403; 9171361; 9191583; 9279728; 9280812; 9338352; 9423940; 9471970; 9595087; D549758.

1.8 Third-party licenses

1.8.1 GNU Lesser General Public License (LGPL)

http://www.gnu.org/licenses/lgpl-2.1.en.html

(Retrieved May 27, 2015)

1.8.2 Fonts (Source Han Sans)

https://github.com/adobe-fonts/source-han-sans/blob/master/LICENSE.txt

(Retrieved May 27, 2015)


1.8.3 Fonts (DejaVu)

http://dejavu-fonts.org/wiki/License

(Retrieved May 27, 2015)


2 Safety information

For best results and user safety, the following warnings and precautions should be followed when handling and operating the camera.

• Do not open the camera body for any reason. Disassembly of the camera (including removal of the cover) can cause permanent damage and will void the warranty.

• Handle the camera optics with great care. For lens cleaning, refer to section 12.1.2 Infrared lens, page 60.

• Operating the camera outside of the specified input voltage range or the specified operating temperature range can cause permanent damage.

• Do not image extremely high-intensity radiation sources, e.g., the sun, lasers, or arc welders.

• The camera is a precision optical instrument and should not be exposed to excessive shock and/or vibration.

• The camera contains static-sensitive electronics and should be handled appropriately.

• To maintain the cooling of the camera, do not place any item on the external cooling intake.


3 Notice to user

3.1 User-to-user forums

Exchange ideas, problems, and infrared solutions with fellow thermographers around the world in our user-to-user forums. To go to the forums, visit:

http://forum.infraredtraining.com/

3.2 Calibration

We recommend that you send in the camera for calibration once a year. Contact your local sales office for instructions on where to send the camera.

3.3 Accuracy

For the most accurate results, we recommend that you wait 5 minutes after starting the camera before measuring a temperature.

3.4 Disposal of electronic waste

As with most electronic products, this equipment must be disposed of in an environmentally friendly way, and in accordance with existing regulations for electronic waste.

Please contact your FLIR Systems representative for more details.

3.5 Training

To read about infrared training, visit:

• http://www.infraredtraining.com

• http://www.irtraining.com

• http://www.irtraining.eu

3.6 Documentation updates

Our manuals are updated several times per year, and we also issue product-critical notifications of changes on a regular basis.

To access the latest manuals, translations of manuals, and notifications, go to the Download tab at:

http://support.flir.com

It only takes a few minutes to register online. In the download area you will also find the latest releases of manuals for our other products, as well as manuals for our historical and obsolete products.

3.7 Important note about this manual

FLIR Systems issues generic manuals that cover several cameras within a model line. This means that this manual may contain descriptions and explanations that do not apply to your particular camera model.

3.8 Note about authoritative versions

The authoritative version of this publication is English. In the event of divergences due to translation errors, the English text has precedence.

Any late changes are first implemented in English.


4 Customer help

4.1 General

For customer help, visit:

http://support.flir.com

4.2 Submitting a question

To submit a question to the customer help team, you must be a registered user. It only takes a few minutes to register online. If you only want to search the knowledge base for existing questions and answers, you do not need to be a registered user.

When you want to submit a question, make sure that you have the following information to hand:

• The camera model

• The camera serial number

• The communication protocol, or method, between the camera and your device (for example, SD card reader, HDMI, Ethernet, USB, or FireWire)

• Device type (PC/Mac/iPhone/iPad/Android device, etc.)

• Version of any programs from FLIR Systems

• Full name, publication number, and revision number of the manual

4.3 Downloads

On the customer help site, you can also download the following, when applicable for the product:

• Firmware updates for your infrared camera.

• Program updates for your PC/Mac software.

• Freeware and evaluation versions of PC/Mac software.

• User documentation for current, obsolete, and historical products.

• Mechanical drawings (in *.dxf and *.pdf format).

• CAD data models (in *.stp format).

• Application stories.

• Technical datasheets.

• Product catalogs.


5 Introduction

5.1 Camera system components

The FLIR X6570sc infrared camera and its accessories are delivered in a transport case that typically contains the items below.

• FLIR X6570sc camera with removable LCD touchscreen.

• Portfolio that includes important information on the camera:

◦ Packing list.

◦ Factory acceptance report.

◦ Calibration curves (if applicable).

◦ Camera files on a CD-ROM.

◦ Optical cleaning tissue.

◦ A set of user instructions.

◦ Filter-holding tool.

◦ Micro SD card with an SD adapter.

• Camera power supply.

• Camera cables:

◦ Power supply.

◦ Gigabit Ethernet (GigE) with locks.

◦ 50 Ω coaxial cable for sync (yellow colored).

◦ 50 Ω coaxial cable for triggering (orange colored).

◦ 50 Ω coaxial cable for lock-in (green colored).

◦ 75 Ω coaxial cable for general purposes (blue colored).

◦ LCD extender cable (with right-angle USB connectors).

• LCD connector protective cap.

There may also be additional items that you have ordered such as software or CDs.

5.2 System overview

The FLIR X6570sc infrared camera system has been developed by FLIR to meet the needs of the research community. The camera uses an advanced 640 × 512 readout integrated circuit (ROIC) mated to a mercury cadmium telluride (MCT) detector to cover the 7.7–9.3 µm long-wave infrared band.

The FLIR X6570sc is a stand-alone imaging camera that interfaces to host PCs using standard interfaces, including GigE and Camera Link Base.


5.2.1 View from the front—M80 mount

Figure 5.1 View from the front—M80 mount.

1. Wi-Fi antenna.

2. Global status LED.

3. Lens M80 interface.

5.2.2 View from the rear

Figure 5.2 View from the rear.

1. Removable touch screen LCD.

2. External cooling intake.

3. External cooling exhaust.


5.2.3 Back panel

Figure 5.3 Camera back panel description.

1. Power button.

2. Status LED.

3. Infrared remote sensor.

4. Sync In.

5. Sync Out.

6. Power In.

7. Lock-in In.

8. General-purpose IO.

9. Trigger In.

10. Auxiliary port.

11. GigE Vision.

12. Camera Link Base.

13. Camera Link Medium.

14. Digital video interface.

15. USB.

16. Micro SD card.


5.3 Key features

• Fast frame rate

The FLIR X6570sc series has an adjustable frame rate. Windowing allows a subset of the total image to be selectively read out with a user-adjustable window size. The sub-sample windows can be arbitrarily chosen and are easily defined.

• 14-bit image data

The FLIR X6570sc camera streams out 14-bit thermal images.

• Outstanding measurement accuracy

The camera measures temperature with an accuracy of ±1°C or ±1%. Its high thermal sensitivity allows the FLIR X6570sc camera to detect temperature differences smaller than 25 mK (20 mK typical).

• CNUC calibration

CNUC is a proprietary calibration process that provides high-quality imagery and stable measurements. CNUC allows the integration time to be adjusted freely without the need to perform new non-uniformity corrections. CNUC calibration also keeps measurements accurate and stable when the camera is exposed to ambient temperature variations.

• Hypercal

Hypercal ensures the best measurement range with the highest sensitivity. Simply set the desired lower and upper temperature limits, and the camera automatically sets the appropriate integration (exposure) time.

• Auto-exposure

The camera automatically adjusts its temperature range to best fit the thermal scene.

• Presets

Up to eight presets and their associated parameters (e.g., integration time, frame rate, window size, and window location) are available for instant selection with a single command. These presets can be used in Dynamic Range Extension (DRX) mode (also called “superframing”), which acquires thermal data from up to four user-defined temperature ranges simultaneously and then merges those streams into a single real-time data stream that spans all four temperature ranges, effectively extending the dynamic range from 14 bits to 16 bits.

• Multiple triggering modes and synchronizing interfaces

The FLIR X6570sc camera provides different interfaces to support maximum flexibility for synchronizing the camera to external events, as well as synchronizing external events to the camera:

◦ Sync In (TTL).

◦ Sync Out.

◦ Trigger In.

• Multiple video outputs

The FLIR X6570sc camera features multiple independent and simultaneous video outputs:

◦ Digital 14-bit video—Camera Link Base.

◦ Digital 14-bit video—GigE.

◦ Digital video—DVI format 1080p30 digital output.

• The FLIR X6570sc camera has an advanced high-performance optical design. The lenses feature a professional M80 mount.

• Motorized filter wheel

The FLIR X6570sc camera has a four-slot motorized filter wheel with automatic filter recognition and measurement parameter adjustment. The removable filter holders contain an integrated temperature probe for improved measurement accuracy.

• Removable touch screen LCD

The detachable touch screen LCD provides on-site thermal image feedback. The LCD screen also provides camera information, adjustment controls, and ResearchIR Max acquisition control. The LCD touch screen can be removed from the FLIR X6570sc camera when the camera needs to be installed in a hard-to-reach location. Simply position the camera and control it from a distance.

• Wi-Fi

The camera includes a Wi-Fi interface, which enables it to be controlled by a smartphone (iPhone) or a tablet (iPad).

• Video color palettes

The FLIR X6570sc camera supports a selection of standard and user-defined color palettes (or grayscale) for DVI video.

• Configuration management

Save your camera configuration to the SD card (e.g., when loaning your camera to a colleague). To use a configuration saved on an SD card, simply insert the SD card.

• Global-status LED

Located on the top of the camera, the global-status LED provides instant system status, including the ResearchIR Max status (a green light indicates fully acquired). The back panel LEDs instantly inform you about the camera status.

6 Installing the camera

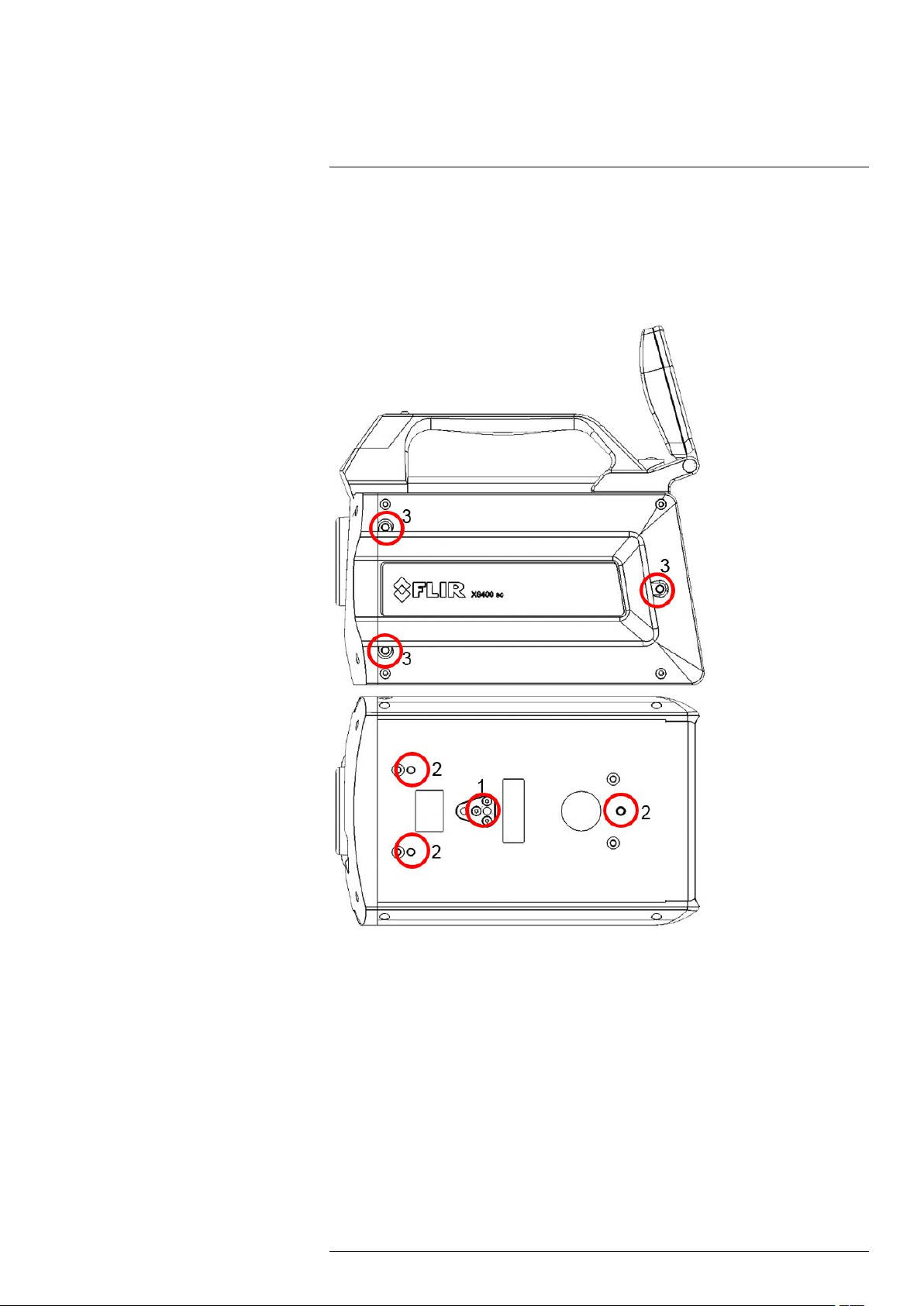

6.1 Mounting the camera

The camera can be operated on a workbench or mounted on a tripod or custom mount. A standard photo mount (¼-20 UNC) and 3 × M5 mounting holes are provided on the camera base, as well as 3 × M5 mounting holes on the left side of the camera.

1. ¼-20 UNC mount.

2. Camera base M5 mounting holes.

3. Camera left side M5 mounting holes.

6.2 Powering the camera

6.2.1 Power supply

The camera is powered through the red power connector (6 in Figure 5.3 Camera back panel description, page 11) on the back panel. When the 24 V DC power supply provided with the camera (PN X1159) is connected, the power button (1 in Figure 5.3 Camera back panel description, page 11) blinks slowly, indicating that the camera is receiving power.

Refer to section 11 Technical data, page 57 for the power supply technical data.


6.2.2 Power button

The power button (1 in Figure 5.3 Camera back panel description, page 11) is located behind the touch screen LCD. Open the touch screen LCD to its maximum extension to access the button.

Note Keep the LCD screen open or detach it while the camera is operating to prevent the external cooling vent from being blocked.

A short press on the power button starts the camera.

To turn off the camera:

• A short press on the power button starts the camera shutdown procedure. The camera is switched off a few seconds later.

• A long press on the power button forces the camera to turn off immediately, bypassing the shutdown procedure.

6.2.3 Camera boot-up and cooling down

When the camera is turned on, its Stirling cooler starts first. Stirling coolers are noisy; a high noise level is normal for advanced cooled thermal cameras.

The camera requires up to 7 minutes to reach the detector temperature of 80 K. In parallel, the camera performs a built-in test of its components and initializes the internal software and interfaces.

The camera is ready to use when all the status LEDs on the back panel are green (2 in Figure 5.3 Camera back panel description, page 11).

6.3 Adjusting the field of view

Once the camera is installed and operating, adjust its field of view to suit the thermal scene being imaged. To do this, select a suitable lens for the desired field of view, and then fine-tune the camera position to the scene.

The LCD screen is helpful during this procedure.

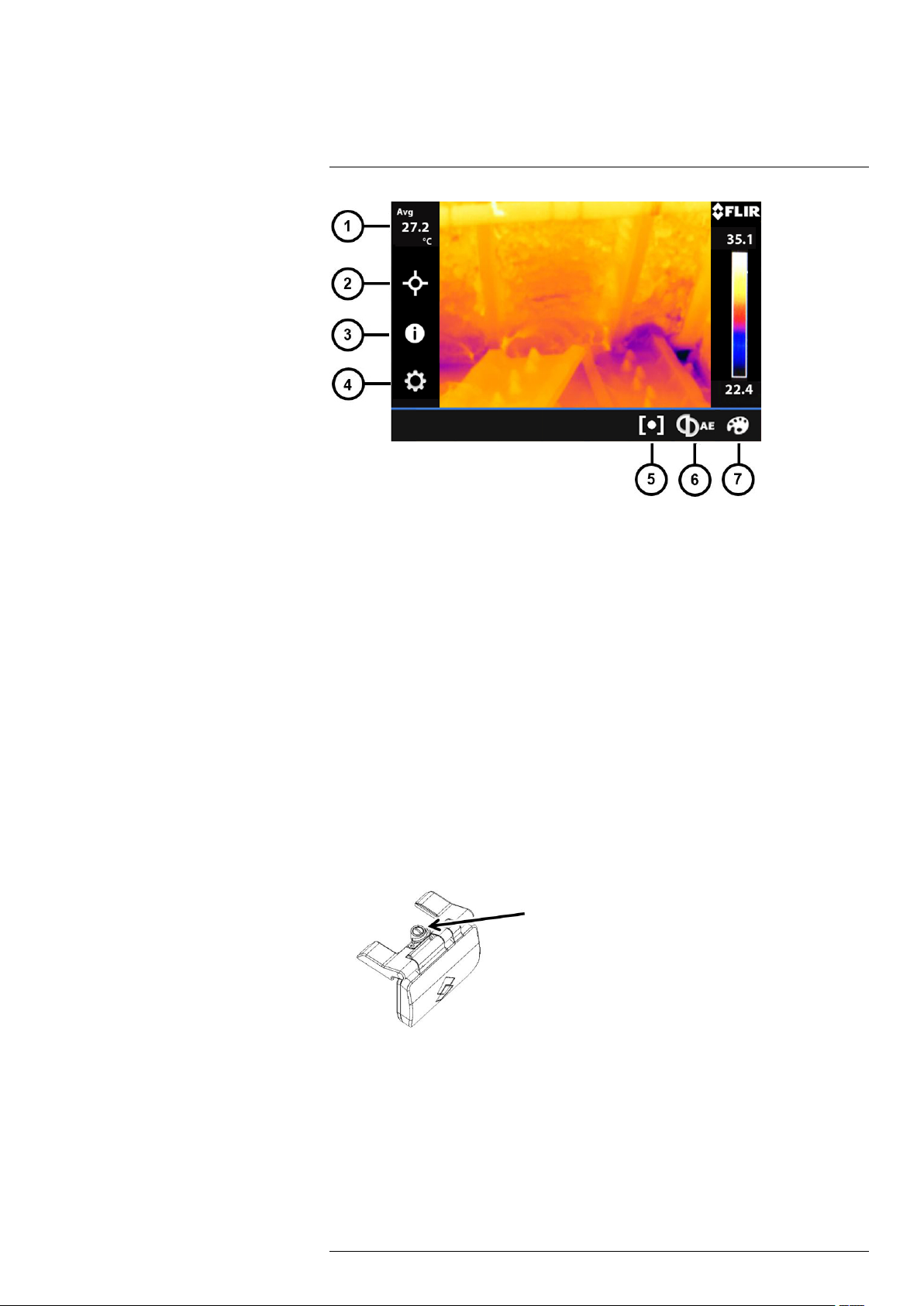

6.3.1 LCD screen

The FLIR X6570sc includes a detachable touch screen LCD that provides instant thermal image feedback. The LCD screen also provides camera information, adjustment controls, and ResearchIR Max acquisition control.

In the LCD screen examples below, the camera measurement configuration and temperature range have been adapted to the thermal scene. If the camera is not correctly set up for the scene, the image can become saturated.

The temperature range of the camera can be automatically adjusted using the auto-exposure button on the touch screen. For more information, see section 8.2.2 Auto-exposure, page 35.

If the thermal scene does not match the measurement configuration (i.e., the spectral filter on the filter wheel), select the correct measurement configuration in ResearchIR Max. For more information, see section 6.4.4 Measurement configuration, page 21.


Figure 6.1 LCD touch screen.

1. Image statistics.

2. Add spot tool: center, cold, or hot.

3. Camera information.

4. Measurement configuration.

5. Start acquisition in ResearchIR Max.

6. Auto-exposure.

7. Change the color palette.

6.3.1.1 Detaching the touch screen LCD

The LCD screen can be detached from the camera and used remotely when the camera is mounted in a hard-to-reach location.

Note

• The camera can still be operated without the LCD screen connected.

• The LCD screen can be detached and attached while the camera is in operation.

6.3.1.2 Procedure

Follow the procedure below to detach the LCD screen from the camera:

1. Remove the LCD screw using a flat screwdriver or a coin.

2. Gently lift up the screen to disconnect it from the camera, being careful of the USB connector.

3. Place the provided protective cap on the camera to prevent dust or water from entering the camera.

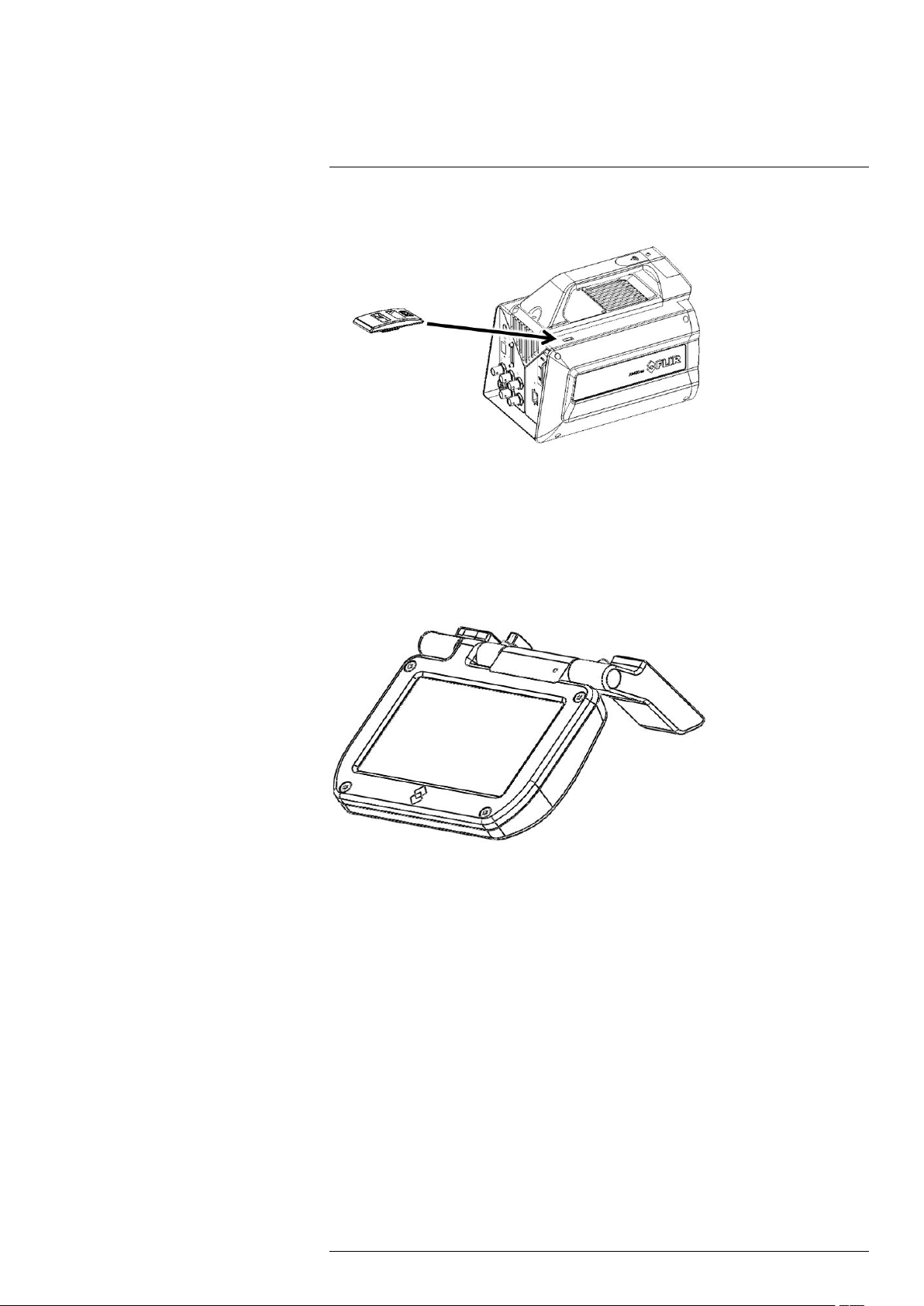

6.3.1.3 General

When detached, the LCD screen can be connected to the camera using the provided right-angled USB extender cable. An additional USB cable can be added to extend the length. The efficiency of operating the camera in this way is highly dependent on the quality of the additional USB cable and the environment in which the camera is being used.

The screen has been designed for ease of use on a workbench, as shown in the figure below.

The screen automatically detects its orientation and flips the interface accordingly. The orientation can be locked in the ResearchIR Max camera user interface (see section 6.4.8 Advanced camera controls, page 24).

6.3.2 Lens

A large range of lenses is available for the FLIR X6570sc. The lenses feature a professional M80 mount.

Note FLIR is continuously extending its range of available optics. Contact your FLIR sales representative for more information on newly available optics.

6.3.2.1 Installing an infrared lens

Note

• The detector is a very sensitive sensor. It must not be directed toward strong visible light, e.g., sunlight.

• Do not touch the lens surface when you install the lens. If this happens, clean the lens according to the instructions in section 12.1.2 Infrared lens, page 60.

• Do not touch the filter surface when you install the lens. If this happens, clean the filter according to the instructions in section 12.1.2 Infrared lens, page 60.

6.3.2.1.1 Procedure—M80 mount

Follow the procedure below to install an infrared lens with an M80 mount:

1. If present, remove the installed lens or the protection in front of the detector/filter wheel.

2. Carefully push the infrared lens into position.

3. Rotate the infrared lens clockwise (looking at the front of the lens) until it stops.

4. You must manually select the measurement configuration using the FLIR ResearchIR Max software. For more information, see section 6.4.4 Measurement configuration, page 21.

6.3.2.2 Removing an infrared lens

Note

• The detector is a very sensitive sensor. It must not be directed toward strong light, e.g., sunlight.

• Do not touch the lens surface when you remove the lens. If this happens, clean the lens according to the instructions in section 12.1.2 Infrared lens, page 60.

• Lenses can be heavy, so be prepared for their weight. Some lenses weigh several hundred grams.

• When you have removed the infrared lens, put the lens caps on the lens to protect it from dust and fingerprints.

6.3.2.2.1 Procedure—M80 mount

Follow the procedure below to remove an infrared lens with an M80 mount:

1. Rotate the infrared lens counterclockwise (looking at the front of the lens).

2. Carefully pull out the infrared lens.

3. Install the protective cap or a new optic on the camera to prevent visible light from striking the detector.

6.3.2.3 Adjusting the camera focus

Note Do not touch the lens surface when you adjust the camera focus. If this happens, clean the lens according to the instructions in section 12.1.2 Infrared lens, page 60.

The camera is focused manually by rotating the focus ring on the lens:

• For far focus, rotate the focus ring counterclockwise (looking at the front of the lens).

• For near focus, rotate the focus ring clockwise (looking at the front of the lens).

6.3.2.4 Using an extension ring

Note

• The detector is a very sensitive sensor. It must not be directed toward strong visible light, e.g., sunlight.

• Do not touch the lens surface when you install the lens. If this happens, clean the lens according to the instructions in section 12.1.2 Infrared lens, page 60.

• Using extension rings requires a good understanding of their radiometric effects and the resulting measurement errors. The Infrared Training Center (ITC) offers courses and training. For more information on any training you require, contact your FLIR sales representative or ITC at www.infraredtraining.com.

Extension rings can be added between the camera and the infrared lens to change the minimum focus distance and thus the field of view of the camera. More than one extension ring can be used at the same time.

Refer to the specification sheet for your infrared lens for available extension ring sizes and other data.

Depending on the extension ring, automatic lens identification may not function. In that case, you must manually select the measurement configuration using the FLIR ResearchIR Max software.

6.4 Setting the camera parameters

6.4.1 Connection to the computer

The camera can be connected to a computer using either Camera Link or GigE.

Although both interfaces can be used in parallel (for example, from two computers), only one of them should be used to send commands to the camera. The second computer should be used only to retrieve images.

6.4.1.1 Connection through the Camera Link interface

Camera Link is a standard data interface for high-end visible and infrared cameras. The FLIR X6570sc uses a Camera Link Base interface in a single-tap, 16-bit configuration. In terms of ports, the A and B ports are used, with bit A0 being the LSB of the transferred data and bit B7 being the MSB. The header row uses the entire 16-bit value, while the pixel data has a 14-bit range, with the two upper MSBs masked to 0.
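The bit layout above can be sketched as follows: port A supplies the low byte, port B the high byte, and pixel words are masked to 14 bits. The function names are hypothetical, and `decode_pixel_row` assumes the frame grabber stores each 16-bit word little-endian (port A byte first); check your frame grabber documentation before relying on that.

```python
import struct
from typing import List

PIXEL_MASK = 0x3FFF  # 14-bit pixel data: the two upper bits are masked to 0


def camera_link_word(port_a: int, port_b: int) -> int:
    """Combine the port A byte (A0 = LSB) and port B byte (B7 = MSB) into 16 bits."""
    if not (0 <= port_a <= 0xFF and 0 <= port_b <= 0xFF):
        raise ValueError("each port carries one byte (0-255)")
    return (port_b << 8) | port_a


def pixel_value(port_a: int, port_b: int) -> int:
    """Return the 14-bit pixel value; header-row words use all 16 bits instead."""
    return camera_link_word(port_a, port_b) & PIXEL_MASK


def decode_pixel_row(raw: bytes) -> List[int]:
    """Decode pixels from a frame grabber buffer.

    Assumption: each 16-bit word is stored little-endian (port A byte first).
    """
    count = len(raw) // 2
    words = struct.unpack(f"<{count}H", raw[: 2 * count])
    return [word & PIXEL_MASK for word in words]


print(hex(camera_link_word(0x34, 0x12)))                  # 0x1234
print(decode_pixel_row(bytes([0x34, 0x12, 0xFF, 0xFF])))  # [4660, 16383]
```

Header-row words should be read with `camera_link_word` (no mask), since they use the full 16-bit value.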

The camera is connected to the computer using one Camera Link cable (refer to section 11 Technical data, page 57 for cable reference and Camera Link information). Connect the cable to connector 12 in Figure 5.3 Camera back panel description, page 11.

The Camera Link mode is selected using the ResearchIR Max camera control panel interface (refer to section 6.4.8 Advanced camera controls, page 24). It should always be set to Base for the FLIR X6570sc.

Note

• Various connector notations can be found for Camera Link medium frame grabbers (0&1, 1&2, A&B). Make sure to connect camera connector 1 to the first port of the frame grabber.

• ResearchIR Max software supports a variety of frame grabbers. Contact your FLIR sales representative for more information on compatibility.

6.4.1.2 Connection through the GigE interface

The FLIR X6570sc features a GigE connection. The GigE interface can be used for image acquisition and/or camera control. The GigE interface is GigE Vision compliant.

GigE is available only when the camera is in Base mode. Refer to section 6.4.8 Advanced camera controls, page 24 for mode selection.

Note

• Use only the high-quality Ethernet cable provided with the camera or a CAT 6 equivalent cable.

• The GigE driver installation procedure must be followed exactly. Contact your FLIR local support if required.

6.4.2 Connection to FLIR ResearchIR Max

Note Refer to section 6.4.1 Connection to the computer, page 19 to make sure that the camera is correctly connected to the computer.

6.4.2.1 General

The FLIR X6570sc interfaces with the FLIR ResearchIR Max software. FLIR ResearchIR Max is a powerful image acquisition and analysis tool. Refer to the ResearchIR Max user manual for operating instructions. The FLIR X6570sc-specific camera controls are described in this document.

6.4.2.2 Procedure

Follow the procedure below to select and connect the camera:

1. Click the Select camera button.

2. Select the FLIR X6570sc camera.

The camera’s IP address is displayed when connected using GigE.

The Camera Link port is displayed when connected using Camera Link.

3. Click the Connect button to activate the camera connection.

Once connected, the camera control interface is populated with the camera parameters, and the live image is displayed on the current tab.

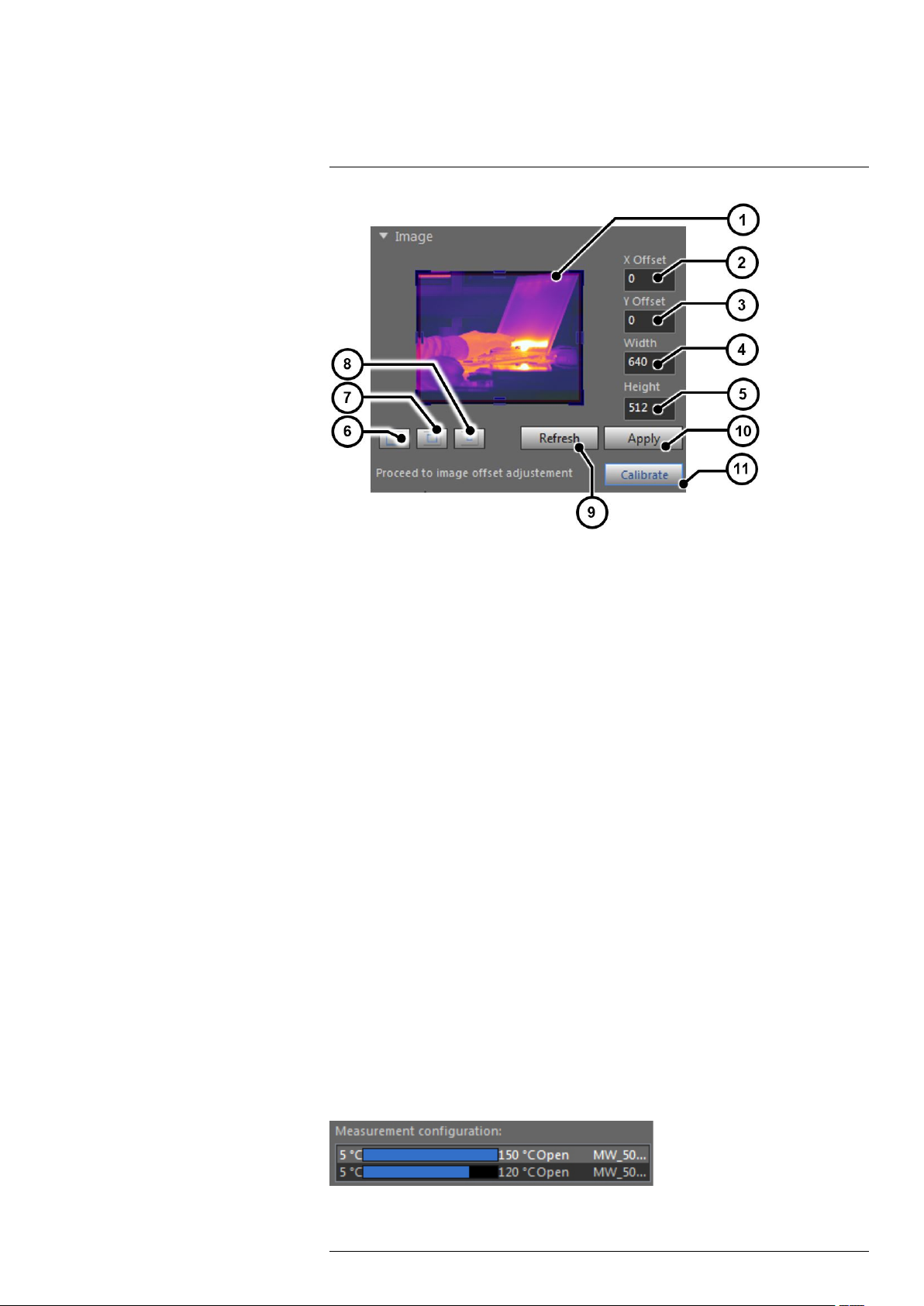

6.4.3 Image size adjustment

6.4.3.1 General

The FLIR X6570sc can be set up to use only part of the detector. This allows the camera to be operated at higher frame rates. The selection is made in the upper part of the camera control panel.

Figure 6.2 Image size adjustment

1. Preview window. The window size can be selected by dragging the handles. The entire box can be dragged to set the location.

2. The X offset can be manually set in this field.

3. The Y offset can be manually set in this field.

4. The window width can be manually set in this field.

5. The window height can be manually set in this field.

6. Set the window size to full detector size (640 × 512).

7. Set the window size to half detector size (320 × 256 centered).

8. Set the window size to quarter detector size (160 × 128 centered).

9. Refresh the preview window with the last acquired image from the camera.

10. Apply the settings to the camera.

11. If needed, calibrate the image against a homogeneous reference target (also called “1 point NUC”).
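The centered half-size and quarter-size windows in items 7 and 8 correspond to simple offset arithmetic. The sketch below computes the X and Y offsets that center a window on the 640 × 512 detector. It assumes offsets are counted in pixels from the top-left corner of the detector and ignores any size or alignment constraints the camera may impose; `centered_offsets` is a hypothetical helper, not a ResearchIR Max function.

```python
from typing import Tuple

DETECTOR_WIDTH, DETECTOR_HEIGHT = 640, 512  # full detector size


def centered_offsets(width: int, height: int) -> Tuple[int, int]:
    """Return the (X offset, Y offset) that centers a width x height window.

    Assumption: offsets are counted in pixels from the top-left corner of the
    detector. Camera-imposed size or alignment constraints are not modeled.
    """
    if not (0 < width <= DETECTOR_WIDTH and 0 < height <= DETECTOR_HEIGHT):
        raise ValueError("window must fit on the 640 x 512 detector")
    return (DETECTOR_WIDTH - width) // 2, (DETECTOR_HEIGHT - height) // 2


for name, size in {"full": (640, 512), "half": (320, 256), "quarter": (160, 128)}.items():
    print(name, size, centered_offsets(*size))
# full (640, 512) (0, 0)
# half (320, 256) (160, 128)
# quarter (160, 128) (240, 192)
```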

6.4.4 Measurement configuration

6.4.4.1 General

A measurement configuration is a combination of optical setup (lens and spectral filter), integration mode setting, and Camera Link setting.

The measurement configurations available in the camera are displayed in the FLIR ResearchIR Max interface. Each configuration is described with minimum and maximum calibrated temperatures, the lens and filter type, the integration mode (ITR or IWR), and the Camera Link setting (Base/Medium).

For lenses with automatic identification (see section , page ), the camera automatically selects the measurement configuration corresponding to the lens.

You can also select the measurement configuration manually. Take care to select the measurement configuration that corresponds to the lens, filter, integration mode setting (ITR or IWR), and Camera Link setting (Base or Medium) in use. Select a configuration by clicking it; it is then highlighted in light gray. Once selected, the camera is automatically set to this configuration.

It is possible to deactivate the configuration filter by unchecking the check box shown below.

When unchecked, all configurations available in the camera are listed. It is then possible to select a configuration that does not match the current optical and detector setup. This is useful for advanced users when, for instance, using an infrared lens for which no calibration files are available: in this example, the camera will provide temperature data even if the calibration does not apply to the lens.

Note

• Only one measurement configuration is valid at a time.

• Make sure you select a configuration that matches the temperature of the scene to be measured. Otherwise, your measurements will be incorrect because they will be outside the limits of the calibration.
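The matching rules above (same lens, filter, integration mode, and Camera Link setting, with a calibrated range that contains the scene temperatures) can be expressed as a simple filter. This is a hypothetical sketch: the `MeasurementConfig` record, its field names, the lens name "L25", and the rule that the calibrated range must contain the whole scene range are assumptions for illustration, not the ResearchIR Max data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MeasurementConfig:
    """Hypothetical record of what ResearchIR Max lists for a configuration."""
    lens: str
    spectral_filter: str
    integration_mode: str  # "ITR" or "IWR"
    camera_link: str       # "Base" or "Medium"
    t_min: float           # minimum calibrated temperature
    t_max: float           # maximum calibrated temperature


def matching_configs(
    configs: List[MeasurementConfig],
    lens: str,
    spectral_filter: str,
    integration_mode: str,
    camera_link: str,
    scene_min: float,
    scene_max: float,
) -> List[MeasurementConfig]:
    """Keep configurations that match the setup in use and cover the scene."""
    setup = (lens, spectral_filter, integration_mode, camera_link)
    return [
        c for c in configs
        if (c.lens, c.spectral_filter, c.integration_mode, c.camera_link) == setup
        and c.t_min <= scene_min and scene_max <= c.t_max
    ]


configs = [
    MeasurementConfig("L25", "F1201", "ITR", "Base", 5.0, 150.0),
    MeasurementConfig("L25", "F1201", "ITR", "Base", 100.0, 350.0),
    MeasurementConfig("L25", "F1201", "IWR", "Base", 5.0, 150.0),
]
# Only the first entry matches a 20-60 scene with an ITR/Base setup.
print(matching_configs(configs, "L25", "F1201", "ITR", "Base", 20.0, 60.0))
```

An empty result means no available configuration is valid for the scene, which is the case the note above warns about.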

6.4.5 Temperature range adjustment

6.4.5.1 General

The temperature range is defined by the minimum and maximum temperatures that can be measured for a given integration time.

1. Integration time for the range. Double-click the integration time to enter a value manually. The range is indicated in red and will be applied to the camera after you click the Apply Configuration button.

2. Drag the range slider to adjust the integration time. The corresponding lower and upper temperatures of the range are displayed. The range is indicated in red and will be applied to the camera after you click the Apply Configuration button.

3. Activate the range by checking the box. If more than one range is activated, the camera enters superframing mode, selecting each range in turn. Refer to section 8.6 Dynamic range extension—superframing, page 39 for more information on superframing.

4. The FLIR X6570sc features an automatic exposure control that automatically selects the best integration time for the current thermal scene. Refer to section 8.2.2 Auto-exposure, page 35 for more information on auto-exposure.

5. The temperature range wizard automates the selection of integration times and superframing.

5.1. Select the temperature range to measure.

5.2. The wizard automatically calculates the best integration times to cover the desired temperature range.

Click on the Finish button to set up the camera accordingly.

6. Apply the temperature range configuration to the camera.

7. Read the actual camera configuration.
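The manual does not say how the wizard chooses integration times. One way such a selection could work, shown here only as an illustration, is a greedy interval cover: given the temperature range available at each integration time, pick the fewest ranges (at most four, the superframing limit) that cover the requested span without gaps. The `Range` values and unit are hypothetical, and this is not FLIR's algorithm.

```python
from typing import List, NamedTuple, Optional

MAX_RANGES = 4  # superframing combines up to four ranges


class Range(NamedTuple):
    integration_us: float  # integration time (hypothetical unit: microseconds)
    t_low: float           # lower temperature limit at this integration time
    t_high: float          # upper temperature limit at this integration time


def cover_span(candidates: List[Range], t_min: float, t_max: float) -> Optional[List[Range]]:
    """Greedily pick the fewest ranges that cover [t_min, t_max] without gaps.

    Returns None if the span cannot be covered with at most MAX_RANGES ranges.
    """
    if t_min >= t_max:
        raise ValueError("t_min must be below t_max")
    pool = sorted(candidates, key=lambda r: r.t_low)
    chosen: List[Range] = []
    reach = t_min  # temperature covered so far
    best: Optional[Range] = None
    i = 0
    while reach < t_max:
        # Among ranges starting at or below the current reach, keep the one reaching highest.
        while i < len(pool) and pool[i].t_low <= reach:
            if best is None or pool[i].t_high > best.t_high:
                best = pool[i]
            i += 1
        if best is None or best.t_high <= reach:
            return None  # gap: no range extends the coverage
        chosen.append(best)
        reach = best.t_high
        if len(chosen) > MAX_RANGES:
            return None
    return chosen


ranges = [Range(2000, -20, 60), Range(500, 40, 150), Range(100, 120, 350), Range(30, 300, 600)]
print([r.integration_us for r in cover_span(ranges, -10, 300)])  # [2000, 500, 100]
```

Always extending coverage as far as possible at each step yields the minimum number of ranges for a gap-free cover.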

6.4.6 Frame frequency

6.4.6.1 General

The frame rate is the number of images taken by the camera per second. Achievable frame rates are based on the camera settings, the camera overhead, and the integration settings.
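To see why the integration settings limit the frame rate, consider a simplified timing model for the two integration modes selectable in the advanced camera controls (integrate then read, ITR, and integrate while read, IWR; see section 8.5 Frame rate and integration modes, page 36). In ITR, integration and readout happen one after the other; in IWR, they overlap, so the longer of the two sets the frame period. The readout time and overhead values are hypothetical inputs; the camera computes the actual achievable rate itself.

```python
def max_frame_rate_hz(integration_s: float, readout_s: float, mode: str,
                      overhead_s: float = 0.0) -> float:
    """Upper bound on the frame rate for a simple timing model (illustrative).

    ITR (integrate then read): integration and readout happen one after the other.
    IWR (integrate while read): the next integration overlaps the previous readout,
    so the frame period is set by whichever of the two is longer.
    readout_s and overhead_s are hypothetical inputs; in practice the readout
    time shrinks when a smaller window is used.
    """
    if integration_s <= 0 or readout_s <= 0:
        raise ValueError("times must be positive")
    if mode == "ITR":
        period = integration_s + readout_s + overhead_s
    elif mode == "IWR":
        period = max(integration_s, readout_s) + overhead_s
    else:
        raise ValueError("mode must be 'ITR' or 'IWR'")
    return 1.0 / period


print(round(max_frame_rate_hz(0.001, 0.008, "ITR"), 1))  # 111.1
print(round(max_frame_rate_hz(0.001, 0.008, "IWR"), 1))  # 125.0
```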

6.4.7 Synchronizing the camera to an external signal

6.4.7.1 General

Note Refer to section 8.7 Camera synchronization, page 39 for detailed information on synchronization.

The camera can be synchronized to an external signal. This is useful, for example, in brake disk testing, where a signal from the testing machine synchronizes the camera to the disk speed.

Synchronization parameters are set through the ResearchIR Max user interface:

1. Activate/deactivate external synchronization. Select the active edge and input impedance.

2. Based on the camera configuration (e.g., the window size, integration time, or integration mode), the maximum allowable Sync In frequency is displayed.

3. The actual Sync In signal frequency is measured by the camera and displayed here.

If the Sync In frequency is higher than the maximum allowable frame rate, a warning message is displayed. In this case, the input signal is under-sampled.

4. The jitter on the Sync In signal, which is typically one pixel clock, is displayed here.

5. The integration time length is displayed here. The integration time is defined in the measurement range panel.

6. A delay between the Sync In signal and the start of integration time can be defined here.

7. Several camera signals can be routed to the Sync Out connector. The polarity of these signals is also defined here.
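For the brake disk example, the settings above reduce to a little arithmetic: the Sync In frequency follows from the disk speed, the under-sampling warning in item 3 compares it with the maximum allowable frequency, and the delay in item 6 can be chosen to image the disk at a given angle after the trigger. The sketch assumes a hypothetical speed of 600 rpm, one trigger pulse per revolution, and a maximum allowable frequency of 5 Hz for the example; all function names are illustrative.

```python
def sync_frequency_hz(rpm: float, pulses_per_rev: int = 1) -> float:
    """Sync In frequency for a trigger that fires pulses_per_rev times per revolution."""
    return rpm / 60.0 * pulses_per_rev


def is_undersampled(sync_hz: float, max_sync_hz: float) -> bool:
    """True when the camera cannot follow every Sync In pulse (the warning case)."""
    return sync_hz > max_sync_hz


def delay_for_angle_s(rpm: float, angle_deg: float) -> float:
    """Delay after the pulse at which the disk has turned a further angle_deg."""
    return (angle_deg / 360.0) / (rpm / 60.0)


def rotation_during_integration_deg(rpm: float, integration_s: float) -> float:
    """Angle the disk turns while the detector integrates (a rough motion-blur measure)."""
    return 360.0 * (rpm / 60.0) * integration_s


rpm = 600.0  # hypothetical brake disk speed, one trigger per revolution
print(sync_frequency_hz(rpm))                        # 10.0
print(is_undersampled(sync_frequency_hz(rpm), 5.0))  # True
print(delay_for_angle_s(rpm, 90.0))                  # 0.025
```

A short integration time keeps the rotation during integration, and therefore the blur on the rotating disk, small.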

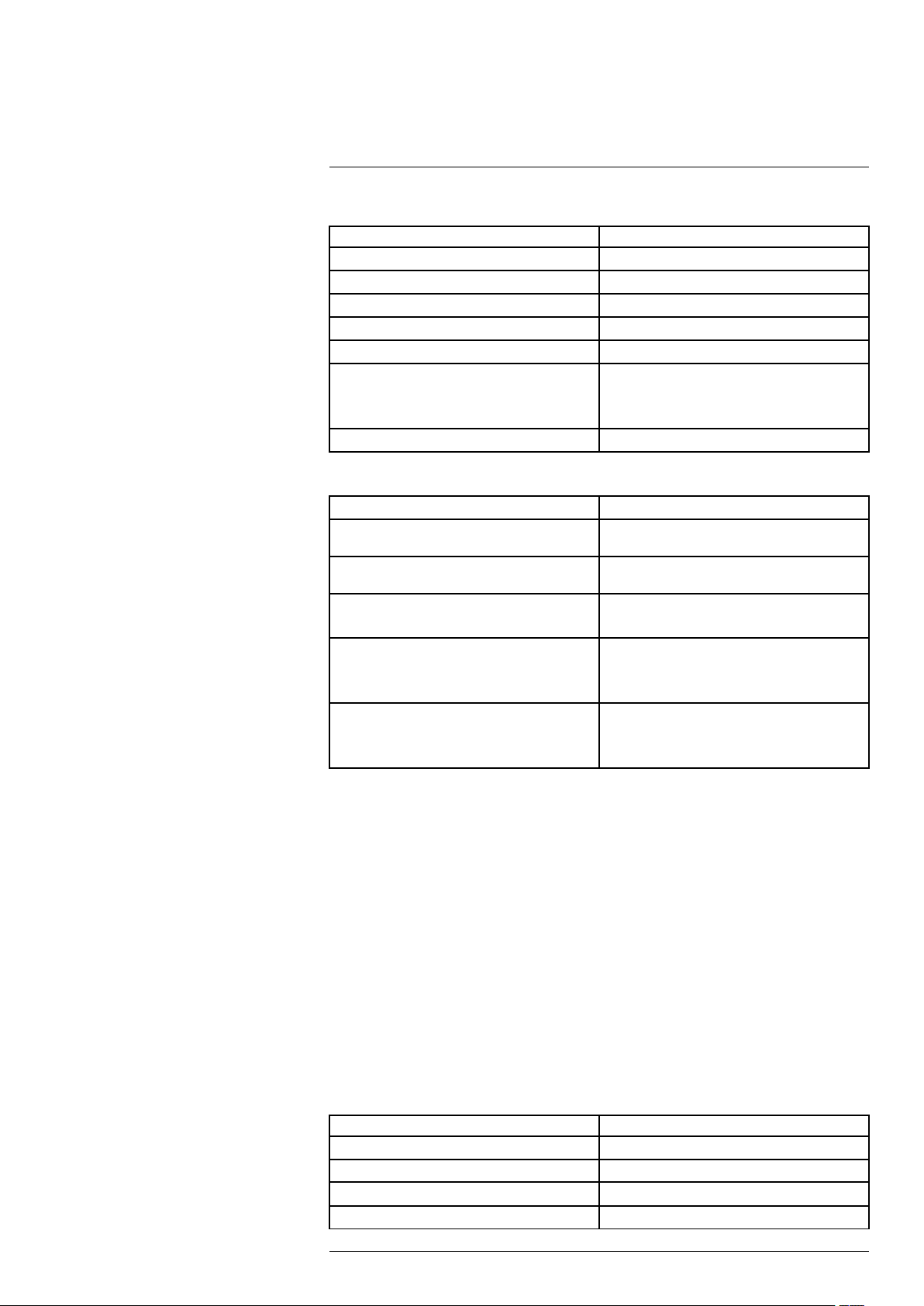

6.4.8 Advanced camera controls

6.4.8.1 General

This section describes the Advanced Camera Controls.

• Image Orientation

Select the orientation of the image at the detector level. This impacts digital radiometric outputs as well as video outputs.

• Integration Mode

Select between integrate then read (ITR) and integrate while read (IWR). (IWR mode is not available in all camera models.) Refer to section 8.5 Frame rate and integration modes, page 36 for more information about these modes.

The integration mode impacts the available measurement ranges, depending on the calibration configuration of the camera.

• Streaming Mode

Select Base or Medium. For more information, see section 6.4.1.1 Connection through the Camera Link interface, page 19.

The streaming mode should always be set to Base for the FLIR X6570sc.

• Auto measurement configuration selection

When this option is checked, the camera automatically searches for the measurement configuration corresponding to the exact optical path (filter + lens) and detector configuration.

If no measurement configuration is available in the camera, selecting this option has no effect.

For lenses with the M80 mount (no automatic lens identification), this setting has no effect.

• Synchronize filter on measurement configuration

When this option is checked (default), the filter wheel automatically places the filter corresponding to the selected measurement configuration in front of the detector.

Deactivating this option should be reserved for advanced setups where the user requires a different spectral filter for a measurement configuration.

• Lock LCD orientation

Freeze the automatic orientation of the LCD screen.

• Remote control action

Select the action associated with the infrared remote controller. Refer to section 7.4 Infrared remote, page 33 for more information on the infrared remote.

6.4.9 Extended camera information

6.4.9.1 General

Extended camera information can be found in the Extended information section in the ResearchIR Max camera tab.

• Temperature Probes

The camera is equipped with various temperature probes that are used for improving measurement accuracy or for camera diagnostics. Click the refresh button to update the temperature values.

• Miscellaneous

This section lists the firmware version information, the model, and the serial number of the camera.

• Image Statistics

This section shows the image statistics as measured by the camera. Click the refresh button to update the statistics values.

7 Operation

7.1 Filter wheel

The FLIR X6570sc includes a four-slot filter wheel. Each slot can hold a 1 in. (2.5 cm) diameter filter with a thickness of up to 2.5 mm. An identification system has been implemented so that the camera recognizes the inserted filter holder and automatically adjusts the measurement configuration.

7.1.1 Removing an optical filter holder

Note

• This operation is undertaken close to the detector window. Take extreme care not to touch or scratch the detector window. Contact FLIR service if you require assistance with this operation.

• The detector is a very sensitive sensor. It must not be directed toward strong light, e.g., sunlight. It is better to remove filters with the camera turned on, as the detector, when cooled, is less sensitive to visible light.

• A filter holder tool is provided with the camera.

• Do not touch the filter surface when you remove the filter. If this happens, clean the filter according to the manufacturer’s instructions.

Follow the procedure below to remove a filter holder from the camera filter wheel:

1. Select the measurement configuration range corresponding to the filter to be removed. This places the filter in front of the detector, allowing access to it.

2. If there is no measurement configuration range corresponding to the filter, switch off the camera and manually rotate the wheel to place the filter to be removed in front of the detector.

3. Gently insert the two pins of the filter holder tool into the corresponding holes.

4. Rotate the filter holder counterclockwise to release the holder from the wheel.

5. Gently remove the holder from the camera, and store it in its case.

7.1.2 Installing an optical filter holder

Note

• This operation is undertaken close to the detector window. Take extreme care not to touch or scratch the detector window. Contact FLIR service if you require assistance with this operation.

• The detector is a very sensitive sensor. It must not be directed toward strong light, e.g., sunlight. It is best to install filters with the camera turned on, as the detector, when cooled, is less sensitive to visible light.

• A filter holder tool is provided with the camera.

• Do not touch the filter surface when you install the filter. If this happens, clean the filter according to the manufacturer’s instructions.

Follow the procedure below to install a filter holder in the camera filter wheel:

1. Select the measurement configuration range that uses the filter to be installed. The corresponding filter slot is placed in front of the detector, allowing access to it.

2. If there is no measurement configuration range corresponding to the filter, switch off the camera and manually rotate the wheel to place the appropriate filter slot in front of the detector.

3. Gently insert the two pins of the filter holder tool into the corresponding holes of the holder to be installed.

4. Insert the filter holder, making sure that the two slots in the holder are in line with the two springs on the filter wheel.

5. Rotate the filter holder clockwise until the springs hold it securely.

7.1.3 Filter holder identification

Each filter holder is identified by a combination of magnets glued onto the filter holder. FLIR provides standard filter configurations with corresponding identification numbers (IDs). At start-up, the camera scans the filter wheel and identifies the inserted holders. If equipped with a lens with a bayonet mount, the camera also adjusts the measurement configuration in accordance with the identified filter holders.

The IDs 40 to 58 are reserved for customer-defined holders.

7.1.4 Creating a custom filter holder

Note

• Filters are fragile. Handle them with great care.

• Do not touch the filter surface when you install the filter. If this happens, clean the filter

according to the manufacturer’s instructions.

• Wear gloves or finger cots to handle the filter.

7.1.4.1 General

You can configure a filter holder to use your own spectral filter. You need an empty holder (P/N SC8_SC6_FILT_HOLD; contact your FLIR representative for more information on blank filter holders).

7.1.4.2 Procedure

Follow the procedure below to assemble a filter within a filter holder:

1. Select a holder ID within the range 40 to 58. The camera uses this ID to identify your filter.

2. Convert this number to binary. For example, 40 is 101000.

3. Glue the magnets provided with the empty holder to the holder according to the binary code. For every “1” in the binary code, glue a magnet in the appropriate hole in the holder (see the figure below), with the north pole of the magnet facing into the hole. For example, for binary code 101000, place magnets at positions 8 and 32.

The use of Loctite Hysol 3430 A&B glue is recommended.

Note The Microsoft Windows calculator in programmer mode provides an easy way to convert decimal numbers into binary code.

4. Place your filter in the holder. Take care to ensure correct filter orientation, to avoid errors in the radiometric measurement. Contact your filter provider for this information.

5. Gently insert the threaded filter ring, and tighten it using the filter tool. Take great care not to damage the filter with the tool.

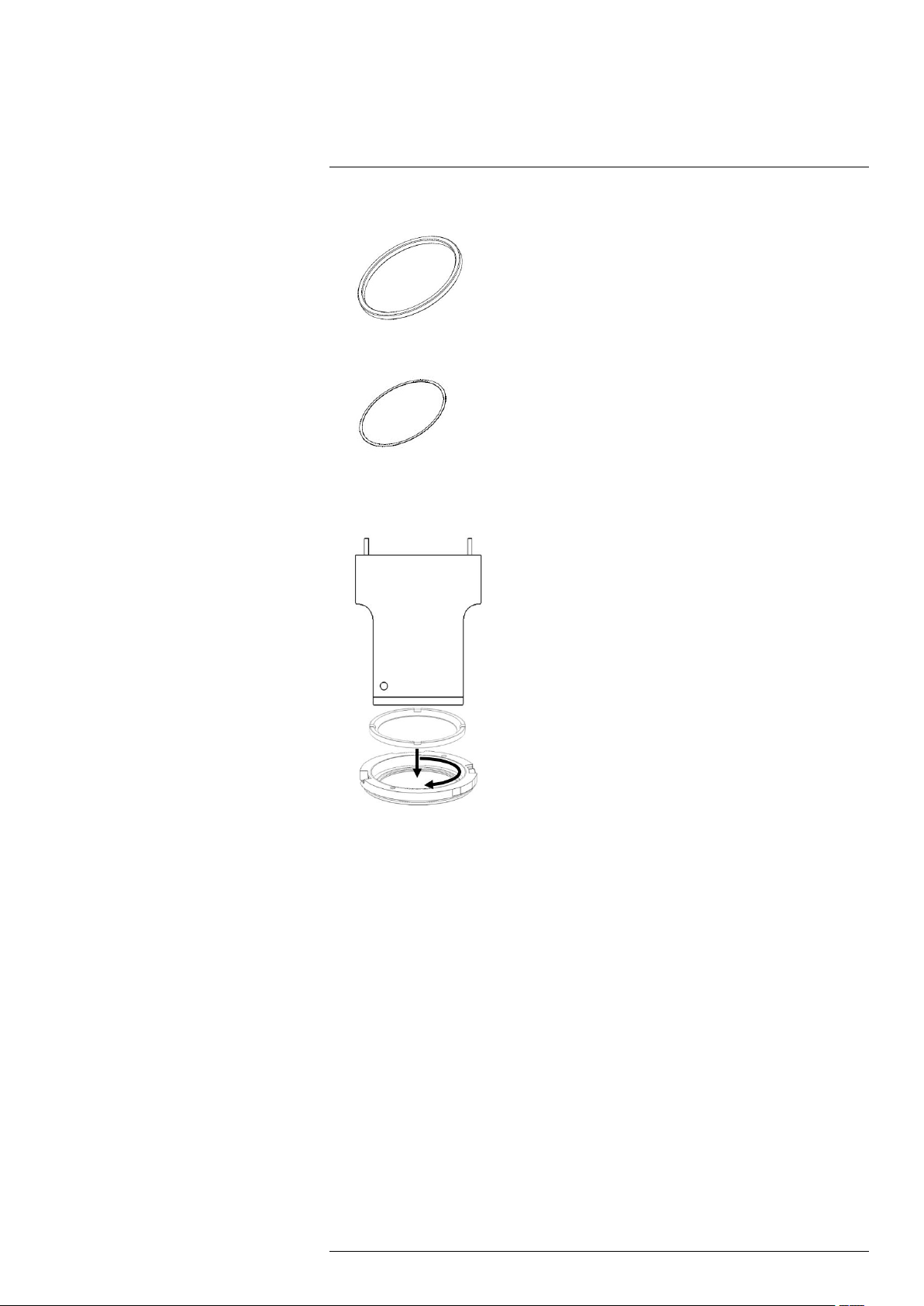
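Steps 1 to 3 amount to listing the powers of two that make up the holder ID. The sketch below returns the magnet positions for a custom ID, using the same position values as the example in step 3 (positions 8 and 32 for ID 40). Which physical hole carries which value is shown in the figure, not in the code; `magnet_positions` is a hypothetical helper.

```python
from typing import List

CUSTOM_IDS = range(40, 59)  # IDs 40 to 58 are reserved for customer-defined holders


def magnet_positions(holder_id: int) -> List[int]:
    """Return the hole positions (powers of two) that need a magnet for holder_id."""
    if holder_id not in CUSTOM_IDS:
        raise ValueError("custom holder IDs must be between 40 and 58")
    # Each set bit in the binary code corresponds to one magnet.
    return [1 << bit for bit in range(holder_id.bit_length()) if (holder_id >> bit) & 1]


print(format(40, "b"), magnet_positions(40))  # 101000 [8, 32]
print(format(45, "b"), magnet_positions(45))  # 101101 [1, 4, 8, 32]
```

Python's `format(n, "b")` gives the same binary string as the Windows calculator in programmer mode.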

7.1.5 Installing two filters in the filter holder

You can install two filters in the filter holder.

Note

• The total thickness of the two filters must not exceed 2 mm.

• The order in which you install the filters does not affect their performance. However, to avoid narcissus effects, it is recommended that the filters be installed with the least reflective side downward in the filter holder (i.e., toward the detector).

• Filters are fragile. Handle them with great care.

• Do not touch the filter surface when you install the filters. If this happens, clean the filter according to the manufacturer’s instructions.

• Wear gloves or finger cots to handle the filters.

• A set of filter holder equipment (filter holder, centering ring, filter spacer, threaded filter ring, and magnets) and a filter holder tool are provided with the camera.

Follow the procedure below to install two filters in the filter holder.

1. Create a custom filter ID, as described in section 7.1.4 Creating a custom filter holder, page 28.

2. Place the first filter in the holder.

3. Place the centering ring into the holder.

4. Clean the visible filter surface.

5. Place the filter spacer on top of the first filter.

6. Place the second filter into the holder. Make sure it seats correctly on top of the filter

spacer and is centered with the centering ring.

7. Gently insert the threaded filter ring and tighten it using the filter tool. Take great care not to damage the filter with the tool.

7.1.6 Adding a custom filter parameter into the camera

7.1.6.1 General

Up to two filters can be mounted in a slot. A slot is defined in the camera’s slot.ini file, and each filter’s definition is stored in a text file in the camera.

The Slot.ini file is a text file containing the holder identification and the corresponding filter numbers:

[Holder XXX]

F1 = FYYYY

F2 = FZZZZ

Where XXX is the unique identifier of the slot, and YYYY and ZZZZ are filter numbers referring to the existing FYYYY.txt and FZZZZ.txt files, respectively.

For instance, for a holder defined by ID 42 in which the filter F3221 is mounted, the following should be added to the slot.ini file:

[Holder 42]

F1 = F3221

F2 = F9999

For a holder defined by ID 45 in which the filters F3221 and F1518 are mounted, the following should be added to the slot.ini file:

[Holder 45]


F1 = F3221

F2 = F1518
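The entries above can be generated and checked with a short script. This is a minimal sketch that assumes Slot.ini follows standard INI syntax, as the examples suggest, so Python's `configparser` can read it; the camera's own parser may be stricter. The helper names are hypothetical, and the default second filter F9999 simply mirrors the single-filter example for holder 42.

```python
import configparser
from typing import Dict


def holder_section(holder_id: int, f1: str, f2: str = "F9999") -> str:
    """Build a Slot.ini section; the F9999 default mirrors the single-filter example."""
    for ref in (f1, f2):
        if not ref.startswith("F"):
            raise ValueError(f"filter reference {ref!r} must start with 'F'")
    return f"[Holder {holder_id}]\nF1 = {f1}\nF2 = {f2}\n"


def read_slots(text: str) -> Dict[str, Dict[str, str]]:
    """Parse Slot.ini text, assuming it follows standard INI syntax."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the "F1"/"F2" keys as written (default lowercases them)
    parser.read_string(text)
    return {name: dict(parser[name]) for name in parser.sections()}


text = holder_section(42, "F3221") + holder_section(45, "F3221", "F1518")
print(read_slots(text))
# {'Holder 42': {'F1': 'F3221', 'F2': 'F9999'}, 'Holder 45': {'F1': 'F3221', 'F2': 'F1518'}}
```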

Note

• Refer to section 9.2 USB connection, page 46 to access the camera files through the USB connection.

• Refer to section 7.1.7 Filter definition file description, page 31 for the description of the filter definition.

7.1.6.2 Procedure

1. Connect your camera to your computer using the USB port.

2. Edit the Slot.ini file located in FlashFS/filters/.

3. Save and close the file Slot.ini.

4. If the filter definition files for the added holder are not present in FlashFS/filters/, they have to be created.

5. Repeat steps 2 to 4 for all filters and holders to be added.

6. Restart the camera to apply the modification (a short press on the power button starts the shutdown procedure; press it again to start the camera).

7.1.7 Filter definition file description

7.1.7.1 General

Filter definition files contain identification and spectral information for the corresponding filters. This information is used by the camera and ResearchIR Max to adjust measurement configurations.

Filter definition files must contain all the sections described below. The values in each section must not be longer than the specified number of characters. It may be easier to copy an existing filter file and modify it.

#reference max 20 char

[reference]

F1201

#name max 20 char

[name]

NA_4094_4388_60%

#application max 20 char

[application]

Blue CO

#band max 10 char

[band]

MW

#material max 20 char

[material]

Silicon

#type max 20 char

[type]

Narrow

#peak in µm

[peak]

4.22

The sections shown above are described below.

[reference]: The filter reference. This reference is used in the Slot.ini file and must start with the capital letter “F.”

[name]: The user-friendly name displayed in the ResearchIR Max user interface. FLIR uses the naming convention XX_YYYY_ZZZZ_WW%, but the name can be freely modified:

• XX: type of filter (NA, narrow; LP, low pass; HP, high pass; BP, band pass)

• YYYY: cut-on (in nm)

• ZZZZ: cut-off (in nm)

• WW: average transmission (in %)

[application]: The application in which the filter is used. This name is displayed on the camera’s LCD screen.

[band]: The spectral band. BB: broadband midwave (1.5–5 µm); MW: midwave (3–5 µm). If unknown, enter “N/A.”

[material]: The filter substrate. If unknown, enter “N/A.”

[type]: The type of filter (narrow, band pass, high pass, low pass). If unknown, enter “N/A.”

[peak]: The wavelength of peak transmission (in µm). If unknown, enter “0.”
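Each section holds a bare value on the line after its [section] header, not a key = value pair. A small line-based reader is therefore enough to check a new file before copying it to the camera. The Python sketch below is hypothetical: the function names are illustrative and are not part of any FLIR tool. It only checks the character limits and the “F” prefix stated above. It does not confirm that the camera will accept the file.

```python
# Character limits taken from the "#... max N char" comment lines above.
MAX_LENGTHS = {
    "reference": 20,
    "name": 20,
    "application": 20,
    "band": 10,
    "material": 20,
    "type": 20,
}


def parse_filter_definition(text: str) -> dict[str, str]:
    """Map each [section] name to the first value line that follows it."""
    sections: dict[str, str] = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments such as "#band max 10 char"
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
        elif current is not None and current not in sections:
            sections[current] = line
    return sections


def validate_filter_definition(sections: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means these checks passed."""
    problems = []
    for key, limit in MAX_LENGTHS.items():
        value = sections.get(key)
        if value is None:
            problems.append(f"missing [{key}] section")
        elif len(value) > limit:
            problems.append(f"[{key}] has {len(value)} characters (max {limit})")
    reference = sections.get("reference")
    if reference is not None and not reference.startswith("F"):
        problems.append("[reference] must start with a capital 'F'")
    return problems
```

For the example file above, `parse_filter_definition` maps "reference" to "F1201" and "name" to "NA_4094_4388_60%", and `validate_filter_definition` then returns an empty list.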


#cuton in nm

[cuton]

4094

#cutoff in nm

[cutoff]

4388

#transmission in %

[transmission]

60

#tolerance in %

[tolerance]

0.3

#[thickness] in mm

[thickness]

0.5

#spectral response max 160 char

[spectral response]

1:0;3,94:0,01;3,95:0,02;3,96:0,07;

4,04:0,04;4,06:0,01;4,08:0;6:0

[cuton]: The filter cut-on wavelength (in nm). If unknown, enter “0.”

[cutoff]: The filter cut-off wavelength (in nm). If unknown, enter “0.”

[transmission]: The average filter transmission (in %). If unknown, enter “0.”

[tolerance]: The filter spectral tolerance (in %). If unknown, enter “0.”

[thickness]: The filter substrate thickness (in mm). If unknown, enter “0.”

[spectral response]: The spectral response curve definition. Each wavelength and its corresponding transmission (maximum 1) are separated by a colon, and pairs of values are separated by a semicolon. If unknown, enter “N/A.”
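The spectral response value packs an entire curve into one string. The following hypothetical Python helper turns that string into a list of (wavelength, transmission) points and checks the 160-character limit and the 0 to 1 transmission range. It rests on three assumptions. First, it uses the decimal-comma notation shown in the example, where 3,94 means 3.94. Second, the wavelengths appear to be in µm, although the file comment does not say so. Third, the value is stored on a single line in the file, even though it wraps across two lines in the example above.

```python
MAX_SPECTRAL_RESPONSE_CHARS = 160  # from "#spectral response max 160 char"


def parse_spectral_response(value: str) -> list[tuple[float, float]]:
    """Parse "wavelength:transmission;..." pairs into (wavelength, transmission) tuples."""
    value = value.strip()
    if value.upper() == "N/A":
        return []  # curve unknown
    if len(value) > MAX_SPECTRAL_RESPONSE_CHARS:
        raise ValueError(f"spectral response is {len(value)} characters (max 160)")
    points = []
    for pair in value.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # tolerate a trailing semicolon
        wavelength_text, transmission_text = pair.split(":")
        # The example writes decimals with a comma (3,94), so convert to a dot.
        wavelength = float(wavelength_text.replace(",", "."))
        transmission = float(transmission_text.replace(",", "."))
        if not 0.0 <= transmission <= 1.0:
            raise ValueError(f"transmission {transmission} at {wavelength} is outside 0 to 1")
        points.append((wavelength, transmission))
    return points
```

Applied to the example curve, the parser yields eight points, from (1.0, 0.0) to (6.0, 0.0).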

7.2 Camera configuration file management

7.2.1 CNUC file management

7.2.1.1 General

Note

• CNUC files are related to the measurement configurations available for the camera. Refer to section 6.4.4 Measurement configuration, page 21.

• Accessing the camera files exposes the camera system files. Do not erase or modify files other than the configuration files.

CNUC files are accessible through an FTP connection to the camera. Refer to section 9.2 USB connection, page 46 to connect to the camera files.

7.2.1.2 Procedure

1. Connect your camera to your computer through the USB port.

2. Add or delete camera calibration files directly in the FlashFS/nuc/ directory.

3. Reboot the camera to apply the modification.

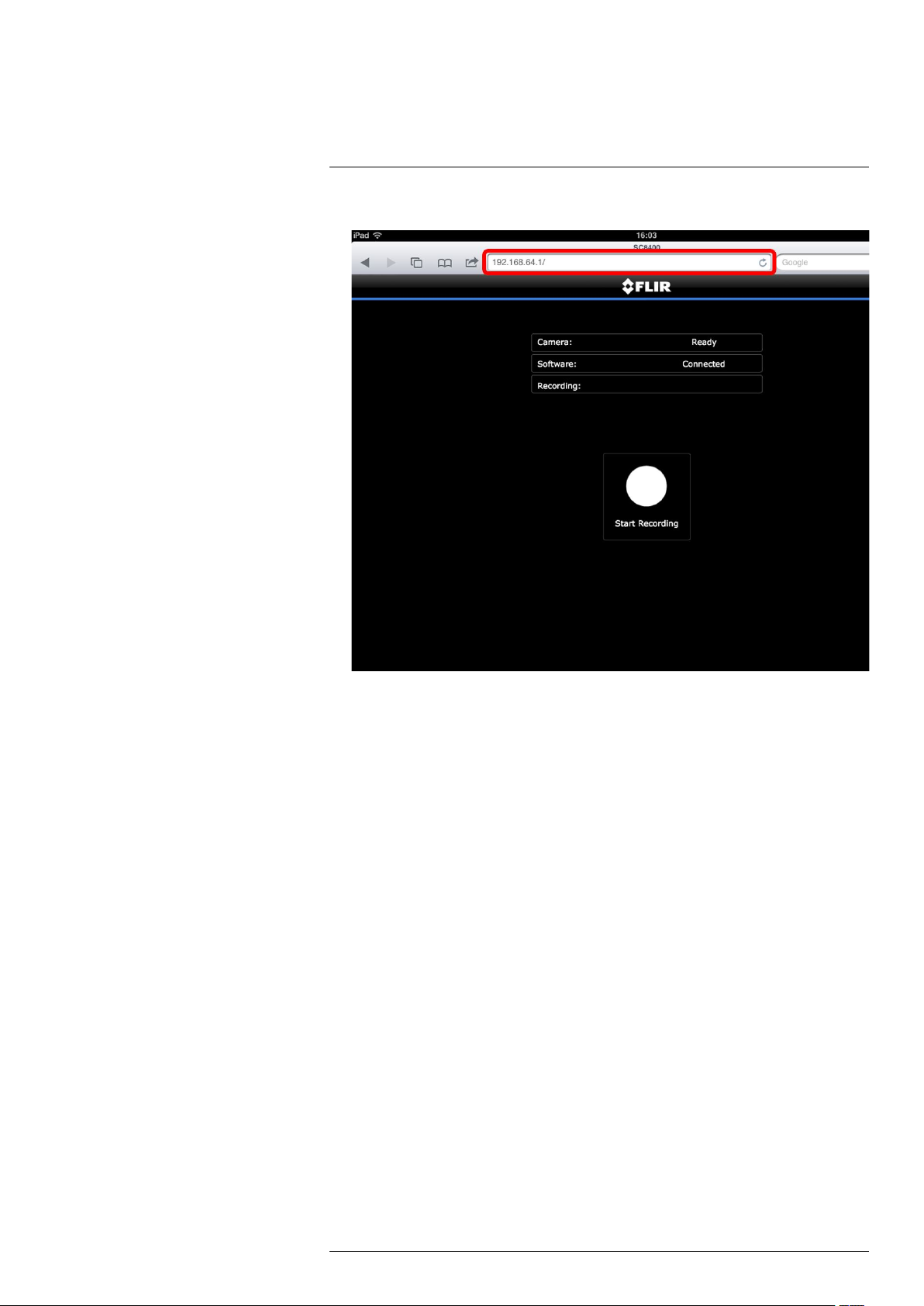

7.3 Camera Wi-Fi application

7.3.1 General

Note Refer to section 9.1 Wi-Fi connection, page 45 to set up a Wi-Fi connection to the camera.

A web application is available when you connect to the camera over Wi-Fi. This application lets you start and stop image recording in ResearchIR Max.

7.3.2 Procedure

1. Connect your device (smartphone or computer) to the camera over Wi-Fi.

2. In a web browser, go to http://169.254.242.23.

3. Control the ResearchIR Max recording from the web page.


7.3.3 Camera web page description

1. Indicates camera status:

• Ready: The camera is running properly and providing infrared images.

• Not Ready: The camera is not providing infrared images. Check the camera status LEDs for detailed information.

2. Indicates ResearchIR Max connection status:

• Connected: ResearchIR Max is connected to the camera and ready to acquire an image sequence.

• Not Connected: No sequence acquisition is possible. Check the ResearchIR Max status on the computer.

3. Indicates the current sequence recording status:

• Blank: Recording is not in progress in ResearchIR Max.

• Recording: ResearchIR Max is currently recording an image sequence.

4. Press the start/stop acquisition button to start or stop the image sequence acquisition in ResearchIR Max.

7.4 Infrared remote

7.4.1 General

The FLIR X6570sc can be controlled with the provided infrared remote or with any DSLR camera remote control that uses the Nikon protocol.

The actions available are as follows:

• Start acquisition in ResearchIR Max.

• Trigger a one-point NUC calibration.

• Trigger auto-exposure.

7.4.2 Procedure

Follow the procedure below to select the infrared remote action:

1. Connect the camera to ResearchIR Max.

2. In the camera tab, under Advanced Camera Control, select the infrared remote action.


8 Radiometric measurement

8.1 Non-uniformity correction (NUC)

8.1.1 General

NUC refers to the process by which the camera electronics correct for differences in response from pixel to pixel across the detector array. The camera can create a NUC table (or allow the user to load one) that contains unique gain and offset coefficients and a bad-pixel indicator for each pixel. The table is then applied in the digital processing pipeline, as shown in Figure 8.1. The result is corrected data in which every pixel responds consistently across the detector input range, producing a uniform image.

Figure 8.1 Digital process showing the application of NUC tables.
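A common way to express a per-pixel NUC table is a linear model: each pixel's raw value is multiplied by its gain and then shifted by its offset. The Python sketch below assumes exactly that model (corrected = gain × raw + offset) and represents frames as nested lists. It also returns pixels marked in the bad-pixel indicator as `None`, so that a later replacement step can fill them in. It illustrates the idea and is not the camera's actual processing pipeline. The function name `apply_nuc` is hypothetical.

```python
def apply_nuc(raw, gain, offset, bad=None):
    """Correct one frame pixel by pixel: corrected = gain * raw + offset.

    Pixels flagged in `bad` are returned as None so that a later
    bad-pixel replacement step can fill them in.
    """
    corrected = []
    for r, raw_row in enumerate(raw):
        row = []
        for c, value in enumerate(raw_row):
            if bad is not None and bad[r][c]:
                row.append(None)
            else:
                row.append(gain[r][c] * value + offset[r][c])
        corrected.append(row)
    return corrected
```

For example, raw values of 100 and 200 with gains of 2.0 and 1.0 and offsets of -50 and 0 both come out near 150 and 200 respectively, which shows how the gain and offset reshape each pixel's response individually.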

To create the NUC table, the camera images either one or two uniform temperature sources. These are external sources provided by the user, and each should be uniform and large enough to overfill the camera’s field of view. By analyzing the pixel data from these constant sources, the non-uniformity of the pixels can be determined and corrected. Two processes are used to create the NUC table: one-point correction and two-point correction.

8.1.2 CNUC

8.1.2.1 General

CNUC is a proprietary calibration process. A camera calibrated with CNUC allows flexible integration time adjustments without the need to perform NUCs. In addition, CNUC calibration keeps measurements accurate and stable regardless of ambient temperature variations around the camera.

A CNUC correction is valid for a specific optical configuration, that is, a specific combination of lens and spectral filters. CNUC corrections are generated by FLIR service offices, where advanced calibration benches are available. Contact your FLIR representative to obtain CNUC corrections for new spectral filters or infrared lenses.

The CNUC process generates a gain and offset map based on the camera’s internal parameters and environmental probes.

8.1.3 Two-point correction process

8.1.3.1 General

The two-point correction process builds a NUC table that contains individually computed gain and offset coefficients for each pixel, as shown in Figure 8.2. This correction requires two uniform sources: one at the low end of the usable detector input range and a second at the upper end.


Figure 8.2 Two-point correction.
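The two-point idea can be written as a straight-line fit for each pixel. The two uniform-source frames give each pixel two points, each pairing a raw value with a target level, and the gain (slope) and offset (intercept) follow from those points. The Python sketch below makes two assumptions. It uses the array-average level of each source frame as the target. It also treats any pixel whose signal does not rise between the cold and hot sources as bad. FLIR's own procedure may choose targets and bad-pixel criteria differently, and the function name is hypothetical.

```python
def two_point_nuc(low_frame, high_frame):
    """Compute per-pixel gain/offset from frames of a cold and a hot uniform source.

    Every good pixel is mapped so that it reads the array-average level of
    each source. Pixels whose signal does not rise between the two sources
    are flagged bad and given a neutral gain of 1 and offset of 0.
    """
    low_values = [v for row in low_frame for v in row]
    high_values = [v for row in high_frame for v in row]
    target_low = sum(low_values) / len(low_values)
    target_high = sum(high_values) / len(high_values)

    gain, offset, bad = [], [], []
    for low_row, high_row in zip(low_frame, high_frame):
        gain_row, offset_row, bad_row = [], [], []
        for low, high in zip(low_row, high_row):
            if high <= low:
                gain_row.append(1.0)
                offset_row.append(0.0)
                bad_row.append(True)
                continue
            g = (target_high - target_low) / (high - low)
            gain_row.append(g)
            offset_row.append(target_low - g * low)  # so g * low + offset == target_low
            bad_row.append(False)
        gain.append(gain_row)
        offset.append(offset_row)
        bad.append(bad_row)
    return gain, offset, bad
```

With these coefficients, every good pixel reads the same value when viewing either source, which is the uniform image the correction aims for.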

8.1.4 One-point correction (offset correction)

8.1.4.1 General

The NUC correction depends strongly on the optical path in front of the detector and on the detector setup itself. In many cases, a change in the camera or detector settings requires a new NUC. However, such a change mainly affects the offset response of the image, while the gain component stays constant. An offset update computes new offset coefficients from the existing gain coefficients and corrects the image non-uniformity. An offset update requires only one uniform source, usually set at a temperature at the lower edge of the operational range.
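The sketch below assumes a linear per-pixel model, corrected = gain × raw + offset. Under that model, an offset update keeps each pixel's gain and solves for a new offset so that the single uniform source reads the same level everywhere. In this Python sketch the target level is assumed to be the array average. The function name is hypothetical.

```python
def one_point_offset_update(uniform_frame, gain):
    """Recompute offsets from one uniform-source frame, keeping existing gains."""
    scaled = [[g * v for v, g in zip(frame_row, gain_row)]
              for frame_row, gain_row in zip(uniform_frame, gain)]
    values = [s for row in scaled for s in row]
    # Assumed target: the array-average corrected level of the uniform source.
    target = sum(values) / len(values)
    # New offset makes gain * raw + offset equal the target for every pixel.
    return [[target - s for s in row] for row in scaled]
```

Because only one frame is needed, this update is much quicker to perform than a full two-point correction.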

Clicking the calibrate button in the ResearchIR Max camera tab (item 11 in Figure 6.2 Image size adjustment, page 21) performs a one-point correction.

8.2 Temperature calibration

8.2.1 Hypercal

8.2.1.1 General

Hypercal is a proprietary temperature measurement process that complements CNUC.

With Hypercal, the camera measures to within ±1°C or ±1% over the configured measurement range, for any selected integration time. This makes it easy to select the optimal measurement range for a given thermal scene.

Note ±1°C or ±1% accuracy is standard for the FLIR X6570sc unless explicitly specified otherwise. Calibrations for custom spectral filters or custom optical configurations typically have wider accuracy tolerances.

8.2.2 Auto-exposure

8.2.2.1 General

Because the dynamic range of a natural thermal scene can be larger than that of the camera, some images taken by the camera may be saturated. When an image lies in the lower part of the dynamic range, sensitivity is reduced, so the integration time must be increased. Conversely, when an image lies in the upper part of the dynamic range and is saturated, the integration time must be decreased.

When auto-exposure is activated, the camera searches for the highest integration time for which the image dynamic range stays within the upper part of the detector’s linearity domain.

Auto-exposure can be started from ResearchIR Max (see section 6.4.5 Temperature range adjustment, page 22) or from the LCD screen (see Figure 6.1 LCD touch screen, page 16).


Note

• The auto-exposure process looks for the best integration time for the current thermal scene. This preferred integration time may not be achievable because it is limited by the camera’s frame rate. In this case, the auto-exposure process stops and the preferred integration time is not applied.

• The auto-exposure process is not designed to handle multiple integration times.
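One way to picture this search is to assume that the signal above the dark level scales linearly with integration time. Under that assumption, the highest usable integration time is the one that places the scene peak at the upper limit of the linear range. The Python sketch below also assumes that the integration time cannot exceed one frame period. When the preferred time exceeds that period, it returns `None`, matching the note above: the process stops and nothing is applied. The names and the counts-based interface are hypothetical and do not describe the camera firmware.

```python
def preferred_integration_time(current_us, peak_counts, dark_counts,
                               linear_limit_counts, frame_rate_hz):
    """Estimate the longest integration time that keeps the scene peak linear.

    Assumes the signal above the dark level grows in proportion to the
    integration time, and that the integration time cannot exceed one
    frame period. Returns None when the preferred time is not achievable.
    """
    if peak_counts <= dark_counts:
        raise ValueError("peak signal must be above the dark level")
    # Scale the current time so the peak lands on the linear-range limit.
    scale = (linear_limit_counts - dark_counts) / (peak_counts - dark_counts)
    preferred_us = current_us * scale
    frame_period_us = 1_000_000 / frame_rate_hz
    if preferred_us > frame_period_us:
        return None  # limited by frame rate: do not apply
    return preferred_us
```

A saturated peak under-reports the true signal, so a single step like this can overshoot. A practical search would repeat the estimate until the peak settles inside the linear range.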

8.3 Bad pixel replacement

8.3.1 General

Once a NUC has been carried out, bad pixels can be detected and replaced. Each bad pixel is replaced by the median value of its eight neighboring pixels.
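The replacement rule can be sketched in a few lines of Python. The sketch follows the text in using the median of the eight surrounding pixels. Two details are assumptions that the manual does not state. First, pixels on the image border use only the neighbors that exist. Second, neighbors are read from the uncorrected frame, so a neighbor that is itself bad is still included. The function name is hypothetical.

```python
from statistics import median


def replace_bad_pixels(frame, bad):
    """Replace each flagged pixel with the median of its neighboring pixels."""
    rows, cols = len(frame), len(frame[0])
    result = [row[:] for row in frame]  # read from `frame`, write to `result`
    for r in range(rows):
        for c in range(cols):
            if not bad[r][c]:
                continue
            neighbors = [
                frame[rr][cc]
                for rr in range(r - 1, r + 2)
                for cc in range(c - 1, c + 2)
                if (rr, cc) != (r, c) and 0 <= rr < rows and 0 <= cc < cols
            ]
            result[r][c] = median(neighbors)
    return result
```

Using the median rather than the mean keeps a single extreme neighbor from pulling the replacement value.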

There are three kinds of bad pixels:

• Bad pixels relative to the gain of the non-uniformity correction. In this case the system

will consider a pixel as bad if the gain coefficient from the NUC is lower or higher than