Scali MPI Connect™ Users Guide

Software release 4.4

Acknowledgement

The development of Scali MPI Connect has benefited greatly from the work of people not connected to Scali. We especially wish to thank the developers of MPICH, whose work served as a reference when implementing the first version of Scali MPI Connect.

It is impossible to list here everyone who has contributed algorithmic improvements to Scali MPI Connect. We apologize to those who remain unnamed and mention only those who were certainly responsible for a step forward.

Scali thanks Rolf Rabenseifner for the improved reduce algorithm used in Scali MPI Connect.

Copyright © 1999-2005 Scali AS. All rights reserved.

7 September 2005 17:54

SCALI “BRONZE” SOFTWARE CERTIFICATE

(hereinafter referred to as the “CERTIFICATE”)

issued by

Scali AS, Olaf Helsets Vei 6, 0619 Oslo, Norway

(hereinafter referred to as “SCALI”)

DEFINITIONS

- “SCALI SOFTWARE” shall mean all contents of the software disc(s) or download(s) for the number of nodes the LICENSEE has purchased a license for (as specified in the purchase order, invoice, order confirmation or similar), including modified versions, upgrades, updates, DOCUMENTATION, additions, and copies of software. The term SCALI SOFTWARE includes the software in its entirety, including RELEASES, REVISIONS and BUG FIXES, but not DISTRIBUTED SOFTWARE.

- “DISTRIBUTED SOFTWARE” shall mean any third-party software products licensed directly to SCALI or to the LICENSEE by a third party and identified as such.

- “DOCUMENTATION” shall mean manuals, maintenance libraries, explanatory materials and other publications delivered with the SCALI SOFTWARE or in connection with SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES. The term “DOCUMENTATION” (which can be paper or online documentation) does not include specifications of Hardware, SCALI SOFTWARE or DISTRIBUTED SOFTWARE.

- A “RELEASE” is defined as a completely new program with new functionality and new features over its predecessors, identified as such by SCALI according to the ordinary SCALI identification procedures.

- A “REVISION” is defined as changes to a program with the aim of improving functionality and removing deficiencies, identified as such by SCALI according to the ordinary SCALI identification procedures.

- A “BUG FIX” is defined as an immediate repair of dysfunctional software, identified as such by SCALI according to the ordinary SCALI identification procedures.

- “INSTALLATION ADDRESS” shall mean the physical location of the computer hardware and the location at which SCALI will have installed the SCALI SOFTWARE.

- “INTELLECTUAL PROPERTY RIGHTS” includes, but is not limited to, all rights to inventions, patents, designs, trademarks, trade names, copyright, copyrighted material, programming, source code, object code, trade secrets and know-how.

- “SCALI REPRESENTATIVE” shall mean any party authorized by SCALI to import, export, sell, resell or in any other way represent SCALI or SCALI’s products.

- “SHIPPING DATE” shall mean the date the SCALI SOFTWARE was sent from SCALI or SCALI REPRESENTATIVE to the LICENSEE.

- “INSTALLATION DATE” shall mean the date the SCALI SOFTWARE is installed at the LICENSEE’s premises.

- “COMMENCEMENT DAY” shall mean the day the SCALI SOFTWARE is made available to the LICENSEE by SCALI for installation for permanent use on the LICENSEE’s computer system (permanent license granted by SCALI).

- “LICENSEE” shall mean the formal entity ordering and purchasing the license to use the SCALI SOFTWARE.

Scali MPI Connect Release 4.4 - Users Guide i

- “CANCELLATION PERIOD” shall mean the period between SHIPPING DATE and INSTALLATION DATE or, if installation is not carried out, the period of 30 days after SHIPPING DATE, counted from the first NORWEGIAN WORKING DAY after SHIPPING DATE.

- “US WORKING DAYS” shall mean Monday to Friday, except USA Public Holidays.

- “US BUSINESS HOURS” shall mean 9.00 AM to 5.00 PM Eastern Standard Time.

- “NORWEGIAN WORKING DAYS” shall mean Monday to Friday, except Norwegian Public Holidays.

- “NORWEGIAN BUSINESS HOURS” shall mean 9.00 AM to 5.00 PM Central European Time.

- “SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES” shall mean the Maintenance and Support Services as set out in this CERTIFICATE.

I ATTENTION

USE OF THE “SCALI SOFTWARE” IS SUBJECT TO THE POSSESSION OF THIS SCALI BRONZE SOFTWARE CERTIFICATE AND THE ACCEPTANCE OF THE TERMS AND CONDITIONS SET OUT HEREIN. THE FOLLOWING TERMS AND CONDITIONS APPLY TO ALL SCALI SOFTWARE. BY USING THE SCALI SOFTWARE THE LICENSEE EXPRESSLY CONFIRMS THE LICENSEE’S ACCEPTANCE OF THE TERMS AND CONDITIONS SET OUT BELOW.

THE SCALI SOFTWARE MAY BE RETURNED TO SCALI’S REPRESENTATIVE BEFORE THE END OF THE CANCELLATION PERIOD IF THE LICENSEE DOES NOT ACCEPT THE TERMS AND CONDITIONS SET OUT IN THIS CERTIFICATE.

THE TERMS AND CONDITIONS IN THIS CERTIFICATE ARE DEEMED ACCEPTED UNLESS THE LICENSEE RETURNS THE SCALI SOFTWARE TO SCALI’S REPRESENTATIVE BEFORE THE END OF THE CANCELLATION PERIOD DEFINED ABOVE.

DISTRIBUTED SOFTWARE IS SPECIFIED AT THE URL ADDRESS http://www.scali.com/distributedsw. THE USE OF THE DISTRIBUTED SOFTWARE IS NOT GOVERNED BY THIS CERTIFICATE, BUT IS SUBJECT TO ACCEPTANCE OF THE TERMS AND CONDITIONS SET OUT IN THE SEPARATE LICENSE AGREEMENTS APPLICABLE TO THE RESPECTIVE DISTRIBUTED SOFTWARE IN QUESTION. SUCH LICENSE AGREEMENTS ARE MADE AVAILABLE AT THE URL ADDRESS http://www.scali.com/distributedsw.

II SOFTWARE LICENSE TERMS

Commencement

This CERTIFICATE is effective from the end of the CANCELLATION PERIOD as defined above, unless the SCALI SOFTWARE has been returned to SCALI REPRESENTATIVE or SCALI before the end of the CANCELLATION PERIOD.

Grant of License

SCALI hereby grants the LICENSEE a perpetual, non-exclusive, limited license to use the SCALI SOFTWARE during the term of this CERTIFICATE.

This grant of license shall not restrict SCALI from granting a license to any other third party.

Maintenance

SCALI may, from time to time, produce new REVISIONS and BUG FIXES of the RELEASE of the SCALI SOFTWARE with corrections of errors and defects and expanded or enhanced functionality. For 1 year after COMMENCEMENT DAY, SCALI will provide the LICENSEE with such REVISIONS and BUG FIXES for the purchased SCALI SOFTWARE free of charge at the URL address www.scali.com/download. The LICENSEE may request such new REVISIONS and BUG FIXES of the RELEASE, and supplementary material thereof, on CD-ROM or paper upon payment of a media and handling fee in accordance with SCALI’s current price list at the time such order is placed.

The above maintenance services may, in certain cases, be excluded from the order placed by non-commercial customers, as defined by SCALI. In such cases, the provisions below regarding maintenance do not apply to such non-commercial customers.

Support

For 1 year after COMMENCEMENT DAY, the LICENSEE may request technical assistance in accordance with the terms and conditions current from time to time for the SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES as set out below. Upon additional payment in accordance with the current price list from time to time, and acceptance of the specific terms and conditions related thereto, the LICENSEE may request prolonged or upgraded support services in accordance with the support policies made available from time to time by SCALI.

The above support services may, in certain cases, be excluded from the order placed by non-commercial customers, as defined by SCALI. In such cases, the provisions below regarding support do not apply to such non-commercial customers.

Restrictions in the use of the SCALI SOFTWARE

The LICENSEE may not modify or tamper with the content of any of the files of the software, the online documentation or other deliverables made available by SCALI or SCALI REPRESENTATIVE without prior written authorization from SCALI.

The SCALI SOFTWARE contains proprietary algorithms and methods. The LICENSEE may not attempt to:

- reverse engineer, decompile, disassemble or modify the SCALI SOFTWARE; or

- discover the source code of the SCALI SOFTWARE or create derivative works from it; or

- use a previous version or copy of the SCALI SOFTWARE after an updated version has been made available as a replacement of the prior version. Upon updating the SCALI SOFTWARE, all copies of prior versions shall be destroyed; or

- translate, copy, duplicate or reproduce the SCALI SOFTWARE for any purpose other than backup for archival purposes.

The LICENSEE may only make copies or adaptations of the SCALI SOFTWARE for archival purposes or when copying or adaptation is an essential step in the authorized use of the SCALI SOFTWARE. The LICENSEE must reproduce all copyright notices in the original SCALI SOFTWARE on all copies or adaptations. The LICENSEE may not copy the SCALI SOFTWARE onto any public network.

License Manager

The SCALI SOFTWARE is operated under the control of a license manager, which controls access to and licensed usage of the SCALI SOFTWARE. The LICENSEE may not attempt to modify or tamper with any function of this license manager.

Sub-license and distribution

The LICENSEE may not sub-license, rent or lease the SCALI SOFTWARE in part or in whole, or use the SCALI SOFTWARE as a service bureau or as an Application Service Provider, unless specifically agreed to in writing by SCALI.

The LICENSEE is permitted to print and distribute paper copies of the unmodified online documentation freely. In this case the LICENSEE may not charge a fee for any such distribution.

Export Requirements

The LICENSEE may not export or re-export the SCALI SOFTWARE or any copy or adaptation in violation of any applicable laws or regulations.

III SCALI SERVICES TERMS

SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES

Unless otherwise specified in the purchase order placed by the LICENSEE, SCALI shall provide SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES in accordance with its maintenance and support policy as referred to in this Clause and the Clause “SCALI’s Obligations” below, which include error corrections, RELEASES, REVISIONS and BUG FIXES to the RELEASE of the SCALI SOFTWARE.

For customers in the Americas, SCALI shall provide technical assistance via e-mail on US WORKING DAYS from 9.00 AM to 5.00 PM Eastern Standard Time.

For customers in the Americas, SCALI shall respond to the LICENSEE via e-mail and start technical assistance and error corrections within eight (8) US BUSINESS HOURS after the error or defect has been reported to SCALI via SCALI’s standard problem report procedures as defined from time to time on the url-address www.scali.com/support.

For customers outside the Americas, SCALI shall provide technical assistance via e-mail on NORWEGIAN WORKING DAYS from 9.00 AM to 5.00 PM Central European Time.

For customers outside the Americas, SCALI shall respond to the LICENSEE via e-mail and start technical assistance and error corrections within eight (8) NORWEGIAN BUSINESS HOURS after the error or defect has been reported to SCALI via SCALI’s standard problem report procedures as defined from time to time on the url-address www.scali.com/support.

SCALI’s Obligations

In the event that the LICENSEE detects any significant error or defect in the SCALI SOFTWARE, SCALI, in accordance with the standard warranty of the Scali Software License granted to the LICENSEE, undertakes to repair, replace or provide an adequate work-around of the SCALI SOFTWARE installed at the INSTALLATION ADDRESS within the response times listed in the Clause “SCALI BRONZE SOFTWARE MAINTENANCE AND SUPPORT SERVICES” above.

SCALI may provide a fix or update to the SCALI SOFTWARE in the normal course of business according to SCALI’s scheduled or unscheduled new REVISIONS of the SCALI SOFTWARE. SCALI will provide, at the LICENSEE’s request, a temporary fix for non-material errors or defects until the issuance of such new REVISION.

The services covered by this CERTIFICATE will be provided only for operation of the SCALI SOFTWARE. SCALI will provide services for the SCALI SOFTWARE only on the release level current at the time of service and the immediately preceding release level. SCALI may, at its sole discretion, provide support on previous release levels, for which the LICENSEE may be required to pay SCALI time and materials for the services rendered.

Should it become impossible to maintain the LICENSEE’s RELEASE of the SCALI SOFTWARE during the currency of this CERTIFICATE, SCALI may, at its sole discretion and upon giving the LICENSEE three (3) months’ written notice to that effect, upgrade the SCALI SOFTWARE at the INSTALLATION ADDRESS to any later release of the SCALI SOFTWARE such that it can once again be maintained.

LICENSEE’s Obligations

The LICENSEE shall notify SCALI in writing via the SCALI Standard Problem Report Procedure, as defined from time to time on the url-address www.scali.com/support, following the discovery of any error or defect in the SCALI SOFTWARE or otherwise if support services by SCALI are requested.

The LICENSEE shall provide to SCALI a comprehensive listing of output and all such other data that SCALI may request in order to reproduce operating conditions similar to those present when the error or defect occurred or was discovered. In the event that it is determined that the problem was due to LICENSEE error in the use of the SCALI SOFTWARE, or was otherwise not related to, referring to or caused by the SCALI SOFTWARE, the LICENSEE shall pay SCALI’s standard commercial time rates for all off-site and any on-site services provided, plus actual travel and per diem expenses relating to such services.

IV GENERAL TERMS

Fees for SCALI Software License and SCALI SOFTWARE MAINTENANCE AND SUPPORT SERVICES

Fees for the SCALI SOFTWARE License and SCALI SOFTWARE MAINTENANCE AND SUPPORT SERVICES to be paid by the LICENSEE to SCALI under this CERTIFICATE are determined based on the current Scali Product Price List from time to time.

Any requests for services or problems reported to SCALI which, in the opinion of SCALI, are clearly defined as professional services not included in the payment made under the scope of this CERTIFICATE, including but not limited to:

- on-site support;

- “tuning” and machine optimization;

- problems related to hardware and software not delivered by SCALI;

- backup processing;

- installation of any software, including newer releases;

- consultancy and training;

- DISTRIBUTED SOFTWARE;

shall be at SCALI’s then prevailing prices, policies (several support levels, and fees referring thereto, may be offered by SCALI), terms and conditions for such services. SCALI will, however, advise the LICENSEE of any such requests and obtain an official company order from the LICENSEE before executing the said request.

Any out-of-pocket expenses directly relating to the services rendered, and not included in the payment made under the scope of this CERTIFICATE, such as:

- travel and accommodation;

- per diem allowances as per Norwegian travel regulations;

- Internet connection fees; and

- cost of Internet access

shall be paid in addition to the total purchase price for this CERTIFICATE, and are payable by the LICENSEE within their normal accepted terms with SCALI upon presentation of an invoice, which shall be at the value incurred and without any form of mark-up. The LICENSEE undertakes to arrange and cover all accommodation requirements that arise out of, or in conjunction with, this CERTIFICATE.

Title to INTELLECTUAL PROPERTY RIGHTS

SCALI, or any party identified as such by SCALI, is the sole proprietor and holds all powers, including but not limited to the power to exploit, use, and make any changes and amendments of all INTELLECTUAL PROPERTY RIGHTS related to the SCALI SOFTWARE or its parts and any new version, including but not limited to any REVISION, BUG FIX or new RELEASE of the SCALI SOFTWARE or its parts, as well as all other INTELLECTUAL PROPERTY RIGHTS resulting from the co-operation within the framework of this CERTIFICATE.

The LICENSEE hereby declares that it will respect title to INTELLECTUAL PROPERTY RIGHTS as set out above also after the expiration, termination or transfer of this CERTIFICATE, independent of the cause for such expiration, termination or transfer.

Transfer

SCALI may transfer this CERTIFICATE to any third party. The LICENSEE may transfer this CERTIFICATE to a third party, subject to the Transferee’s advance written acceptance of being fully bound by the terms and conditions set out in this CERTIFICATE and SCALI’s prior written approval of the transfer. SCALI’s approval shall in any case be deemed granted unless contrary notice is sent from SCALI within 7 NORWEGIAN WORKING DAYS from receipt of notification of the transfer in question from the LICENSEE.

Upon transfer, the LICENSEE must deliver the SCALI SOFTWARE, including any copies and related documentation, to the Transferee.

Compliance with Licenses

The LICENSEE shall, upon request from SCALI or its authorized representatives and within 30 days following the receipt of such request, fully document and certify that the use of the SCALI SOFTWARE is in accordance with this CERTIFICATE. If the LICENSEE fails to fully document that this CERTIFICATE is suitable and sufficient for the LICENSEE’s use of the SCALI SOFTWARE, SCALI will use any legal measure to protect its ownership and rights in its SCALI SOFTWARE and to seek monetary damages from the LICENSEE.

Warranty of Title and Substantial Performance

SCALI hereby represents and warrants that SCALI is the owner of the SCALI SOFTWARE.

SCALI hereby warrants that the SCALI SOFTWARE will perform substantially in accordance with the DOCUMENTATION for the ninety (90) day period following the LICENSEE’s receipt of the SCALI SOFTWARE (“Limited Warranty”). To make a warranty claim, the LICENSEE must return the products to the location where the SCALI SOFTWARE was purchased (“Back-to-Base”) within such ninety (90) day period. Any supplements or updates to the SCALI SOFTWARE, including without limitation any service packs or hot fixes provided to the LICENSEE after the expiration of the ninety (90) day Limited Warranty period, are not covered by any warranty or condition, express, implied or statutory.

If an implied warranty or condition is created by the LICENSEE’s state/jurisdiction and federal or state/provincial law prohibits disclaimer of it, the LICENSEE also has an implied warranty or condition, but only as to defects discovered during the period of this Limited Warranty (ninety days). As to any defects discovered after the ninety (90) day period, there is no warranty or condition of any kind.

Disclaimer of Warranty

Except for the limited warranty under the Clause “Warranty of Title and Substantial Performance” above, and to the maximum extent permitted by applicable law, SCALI and SCALI REPRESENTATIVES provide SCALI SOFTWARE and SCALI SOFTWARE MAINTENANCE AND SUPPORT SERVICES, if any, as is and with all faults, and hereby disclaim all other warranties and conditions, either express, implied or statutory, including, but not limited to, any (if any) implied warranties, duties or conditions of merchantability, of fitness for a particular purpose, of accuracy or completeness of responses, of results, of workmanlike effort, of lack of viruses and of lack of negligence, all with regard to the software or other deliverables by SCALI. Also, there is no warranty or condition of title, quiet enjoyment, quiet possession, correspondence to description or non-infringement with regard to the SCALI SOFTWARE or the provision of or failure to provide SCALI SOFTWARE MAINTENANCE AND SUPPORT SERVICES. SCALI does not warrant any title, performance, compatibility, co-operability or other functionality of the DISTRIBUTED SOFTWARE or other deliverables by SCALI.

Without limiting the generality of the foregoing, SCALI specifically disclaims any implied warranty, condition, or representation that the SCALI SOFTWARE:

- shall correspond with a particular description;

- is of merchantable quality;

- is fit for a particular purpose; or

- is durable for a reasonable period of time.

Nothing in this CERTIFICATE shall be construed as:

- a warranty or representation by SCALI that anything made, used, sold or otherwise disposed of under the license granted in the CERTIFICATE is or will be free from infringement of patents, copyrights, trademarks, industrial designs or other INTELLECTUAL PROPERTY RIGHTS; or

- an obligation by SCALI to bring or prosecute or defend actions or suits against third parties for infringement of patents, copyrights, trademarks, industrial designs or other INTELLECTUAL PROPERTY RIGHTS or contractual rights.

Licensee’s Exclusive Remedy

In the event of any breach or threatened breach of this CERTIFICATE, including the foregoing representation and warranty, the LICENSEE’s sole remedy shall be to require SCALI and its SCALI REPRESENTATIVES to either:

- procure, at SCALI’s expense, the right to use the SCALI SOFTWARE; or

- replace the SCALI SOFTWARE, or any part thereof that is in breach, with software of comparable functionality that does not cause any breach; or

- refund to the LICENSEE the full amount of the total purchase price paid by the LICENSEE for this CERTIFICATE upon the return of the SCALI SOFTWARE and all copies thereof to SCALI, less an amount equivalent to the license and other services rendered until the matter causing the remedy in question occurred.

The LICENSEE will receive the remedy elected by SCALI without charge, except that the LICENSEE is responsible for any expenses the LICENSEE may incur (e.g. the cost of shipping the SCALI SOFTWARE to SCALI). Any commitment or obligation of SCALI to remedy the LICENSEE in accordance with this CERTIFICATE is void if failure of the SCALI SOFTWARE or other breach of the CERTIFICATE has resulted from accident, abuse, misapplication, abnormal use or a virus.

Any replacement SCALI SOFTWARE will be warranted for the remainder of the original warranty period or thirty (30) days, whichever is longer. Neither these remedies nor any product maintenance and support services offered by SCALI are available without proof of purchase directly from SCALI or through a SCALI REPRESENTATIVE. To exercise the LICENSEE’s remedy, contact SCALI as set out at the URL address WWW.scali.com or the SCALI REPRESENTATIVE serving the LICENSEE’s district.

Limitation on Remedies and Liabilities

The LICENSEE’s exclusive and maximum remedy for any breach of the CERTIFICATE is as set forth above. Except for any refund elected by SCALI, the LICENSEE is not entitled to any damages, including but not limited to consequential damages, if the SCALI SOFTWARE does not meet the DOCUMENTATION or SCALI otherwise does not meet the CERTIFICATE, and, to the maximum extent allowed by applicable law, even if any remedy fails of its essential purpose.

To the maximum extent permitted by applicable law, in no event shall SCALI or SCALI REPRESENTATIVES be liable for any special, incidental, indirect or consequential damages whatsoever (including, but not limited to, damages for loss of profits or confidential or other information, for business interruption, for personal injury, for loss of privacy, for failure to meet any duty including of good faith or of reasonable care, for negligence, and for any other pecuniary or other loss whatsoever) arising out of or in any way related to the use of or inability to use the SCALI SOFTWARE, the provision of or failure to provide maintenance and support services, or otherwise under or in connection with any provision of this CERTIFICATE, even in the event of the fault, tort (including negligence), strict liability, breach of contract or breach of warranty of SCALI or a SCALI REPRESENTATIVE, and even if SCALI or a SCALI REPRESENTATIVE has been advised of the possibility of such damages.

No action, whether in contract or tort (including negligence), or otherwise arising out of or in connection with this CERTIFICATE may be brought more than six months after the cause of action has occurred.

Termination

SCALI has the right to terminate this CERTIFICATE with immediate effect if the LICENSEE breaches or is in default of any obligation hereunder which default is incapable of cure or which, being capable of cure, has not been cured within fifteen (15) days after receipt of notice of such default (or such additional cure period as the non-defaulting party may authorize).

SCALI may terminate this CERTIFICATE with immediate effect by written notice to the LICENSEE and may regard the LICENSEE as in default of this CERTIFICATE if the LICENSEE substantially breaches the CERTIFICATE, becomes insolvent, makes a general assignment for the benefit of its creditors, files a voluntary petition of bankruptcy, suffers or permits the appointment of a receiver for its business or assets, becomes subject to any proceeding under the bankruptcy or insolvency law, whether domestic or foreign, or has been wound up or liquidated, voluntarily or otherwise. In the event that any of the above events occurs, the LICENSEE shall immediately notify SCALI of its occurrence.

In the event that either party is unable to perform any of its obligations under this CERTIFICATE or to enjoy any of its benefits because of (or if loss of the Services is caused by) natural disaster, actions or decrees of governmental bodies, or communication line failure not the fault of the affected party (commonly and hereinafter referred to as a “FORCE MAJEURE EVENT”), the party who has been so affected shall immediately give notice to the other party and shall do everything possible to resume performance. Upon receipt of such notice, all obligations under this CERTIFICATE shall be immediately suspended. If the period of non-performance exceeds twenty-one (21) days from the receipt of notice of the FORCE MAJEURE EVENT, the party whose performance has not been so affected may, by giving written notice, terminate this CERTIFICATE with immediate effect.

In the event that this CERTIFICATE is terminated for any reason, the LICENSEE shall destroy all data, materials, and other properties of SCALI then in its possession provided as a consequence of this CERTIFICATE, including but not limited to the SCALI SOFTWARE, copies of the software, adaptations and merged portions in any form.

Proprietary Information

The LICENSEE acknowledges that all information concerning SCALI that is not generally known to the public is “CONFIDENTIAL AND PROPRIETARY INFORMATION”. The LICENSEE agrees that it will not permit the duplication, use or disclosure of any such CONFIDENTIAL AND PROPRIETARY INFORMATION to any person (other than its own employees who must have such information for the performance of their obligations under this CERTIFICATE), unless authorized in writing by SCALI.

These confidentiality obligations survive the expiration, termination or transfer of this CERTIFICATE, independent of the cause for such expiration, termination or transfer.

Miscellaneous

The headings of the Clauses of this CERTIFICATE are intended for convenience only and shall in no way affect its interpretation. Words importing natural persons shall include bodies corporate and other legal personae and vice versa. Any particular gender shall include the other gender, and vice versa. The singular shall include the plural and vice versa.

All remedies available to either party for the breach of this CERTIFICATE are cumulative and may be exercised concurrently or separately, and the exercise of any one remedy shall not be deemed an election of such remedy to the exclusion of other remedies.

Any invalidity, in whole or in part, of any of the provisions of this CERTIFICATE shall not affect the validity of any other of its provisions.

Any notice or other communication hereunder shall be in writing.

No term or provision hereof shall be deemed waived and no breach excused unless such waiver or consent shall be in writing and signed by the party claimed to have waived or consented.

Governing Law

This CERTIFICATE shall be governed by and construed in accordance with the laws of Norway, with Oslo City Court (Oslo tingrett) as proper legal venue.

Table of contents

Chapter 1 Introduction .................................................................... 5

1.1 Scali MPI Connect product context ......................................................................5

1.2 Support...........................................................................................................6

1.2.1 Scali mailing lists.......................................................................................6

1.2.2 SMC FAQ..................................................................................................6

1.2.3 SMC release documents..............................................................................6

1.2.4 Problem reports.........................................................................................6

1.2.5 Platforms supported...................................................................................6

1.2.6 Licensing..................................................................................................7

1.2.7 Feedback..................................................................................................7

1.3 How to read this guide ......................................................................................7

1.4 Acronyms and abbreviations .............................................................................7

1.5 Terms and conventions......................................................................................9

1.6 Typographic conventions ...................................................................................9

Chapter 2 Description of Scali MPI Connect ................................... 11

2.1 Scali MPI Connect components ......................................................................... 11

2.2 SMC network devices ...................................................................................... 12

2.2.1 Network devices......................................................................................13

2.2.2 Shared Memory Device............................................................................. 13

2.2.3 Ethernet Devices .....................................................................................13

2.2.4 Myrinet ..................................................................................................15

2.2.5 Infiniband............................................................................................... 15

2.2.6 SCI........................................................................................................ 16

2.3 Communication protocols on DAT-devices .......................................................... 16

2.3.1 Channel buffer ........................................................................................ 16

2.3.2 Inlining protocol ...................................................................................... 17

2.3.3 Eagerbuffering protocol ............................................................................ 17

2.3.4 Transporter protocol ................................................................................17

2.3.5 Zerocopy protocol.................................................................................... 18

2.4 Support for other interconnects ........................................................................18

2.5 MPI-2 Features............................................................................................... 18

Chapter 3 Using Scali MPI Connect ................................................ 21

3.1 Setting up a Scali MPI Connect environment....................................................... 21

3.1.1 Scali MPI Connect environment variables ....................................................21

3.2 Compiling and linking ......................................................................................21

3.2.1 Running .................................................................................................21

3.2.2 Compiler support..................................................................................... 22

3.2.3 Linker flags............................................................................................. 22

3.2.4 Notes on Compiling and linking on AMD64 and EM64T .................................. 22

3.2.5 Notes on Compiling and linking on Power series........................................... 23

Scali MPI Connect Release 4.4 Users Guide 1


3.2.6 Notes on compiling with MPI-2 features ...................................................... 23

3.3 Running Scali MPI Connect programs................................................................. 23

3.3.1 Naming conventions................................................................................. 23

3.3.2 mpimon - monitor program.......................................................................24

3.3.3 mpirun - wrapper script............................................................................27

3.4 Suspending and resuming jobs ......................................................................... 28

3.5 Running with dynamic interconnect failover capabilities ....................................... 28

3.6 Running with tcp error detection - TFDR ............................................................ 28

3.7 Debugging and profiling................................................................................... 29

3.7.1 Debugging with a sequential debugger .......................................................29

3.7.2 Built-in-tools for debugging....................................................................... 30

3.7.3 Assistance for external profiling ................................................................. 30

3.7.4 Debugging with Etnus Totalview ................................................................ 30

3.8 Controlling communication resources ................................................................ 31

3.8.1 Communication resources on DAT-devices .................................................. 31

3.9 Good programming practice with SMC ...............................................................32

3.9.1 Matching MPI_Recv() with MPI_Probe() ......................................................32

3.9.2 Using MPI_Isend(), MPI_Irecv().................................................................32

3.9.3 Using MPI_Bsend() .................................................................................. 32

3.9.4 Avoid starving MPI-processes - fairness ......................................................32

3.9.5 Unsafe MPI programs............................................................................... 33

3.9.6 Name space pollution............................................................................... 33

3.10 Error and warning messages .......................................................................... 33

3.10.1 User interface errors and warnings...........................................................33

3.10.2 Fatal errors ........................................................................................... 33

3.11 Mpimon options ............................................................................................ 34

3.11.1 Giving numeric values to mpimon ............................................................ 35

Chapter 4 Profiling with Scali MPI Connect .................................... 37

4.1 Example ........................................................................................................ 37

4.2 Tracing.......................................................................................................... 38

4.2.1 Using Scali MPI Connect built-in trace......................................................... 38

4.2.2 Features................................................................................................. 40

4.3 Timing .......................................................................................................... 41

4.3.1 Using Scali MPI Connect built-in timing....................................................... 41

4.4 Using the scanalyze ........................................................................................ 43

4.4.1 Analysing all2all ...................................................................................... 43

4.5 Using SMC's built-in CPU-usage functionality ...................................................... 45

Chapter 5 Tuning SMC to your application ..................................... 47

5.1 Tuning communication resources ...................................................................... 47

5.1.1 Automatic buffer management ..................................................................47

5.2 How to optimize MPI performance.....................................................................48

5.2.1 Performance analysis ............................................................................... 48

5.2.2 Using processor-power to poll ................................................................... 48

5.2.3 Reorder network traffic to avoid conflicts .................................................... 48

5.3 Benchmarking ................................................................................................48


5.3.1 How to get expected performance.............................................................. 48

5.3.2 Memory consumption increase after warm-up..............................................49

5.4 Collective operations ....................................................................................... 49

5.4.1 Finding the best algorithm ........................................................................50

Appendix A Example MPI code....................................................... 51

A-1 Programs in the ScaMPItst package.......................................................................51

A-2 Image contrast enhancement............................................................................... 51

Appendix B Troubleshooting ......................................................... 54

B-1 When things do not work - troubleshooting ............................................................ 54

Appendix C Install Scali MPI Connect............................................. 56

C-1 Per node installation of Scali MPI Connect .............................................................. 56

C-2 Install Scali MPI Connect for TCP/IP ...................................................................... 57

C-3 Install Scali MPI Connect for Direct Ethernet........................................................... 57

C-4 Install Scali MPI Connect for Myrinet ..................................................................... 57

C-5 Install Scali MPI Connect for Infiniband..................................................................58

C-6 Install Scali MPI Connect for SCI........................................................................... 58

C-7 Install and configure SCI management software ..................................................... 58

C-8 License options .................................................................................................. 58

C-9 Scali kernel drivers ............................................................................................. 59

C-10 Uninstalling SMC............................................................................................... 59

C-11 Troubleshooting Network providers ..................................................................... 59

Appendix D Bracket expansion and grouping ................................. 62

D-1 Bracket expansion .............................................................................................. 62

D-2 Grouping...........................................................................................................62

Appendix E Related documentation................................................ 64


Chapter 1 Introduction

This manual describes Scali MPI Connect (SMC) in detail. SMC is sold both as a separate stand-alone product, in an SMC distribution, and integrated with Scali Manage in the SSP distribution.

Some integration issues and features of the MPI are also discussed in the Scali Manage Users Guide.

This manual is written for users who have a basic programming knowledge of C or Fortran, as

well as an understanding of MPI.

1.1 Scali MPI Connect product context

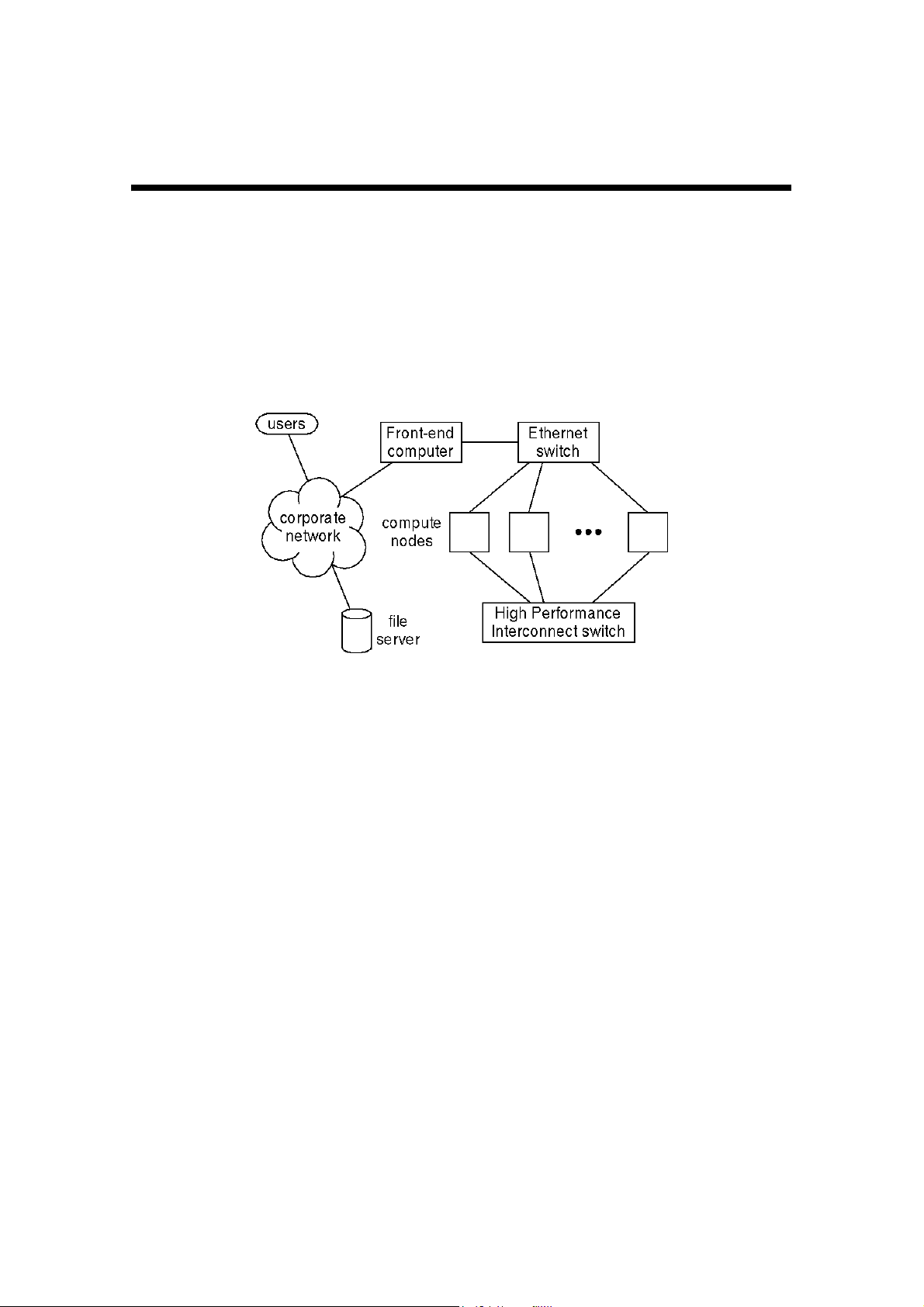

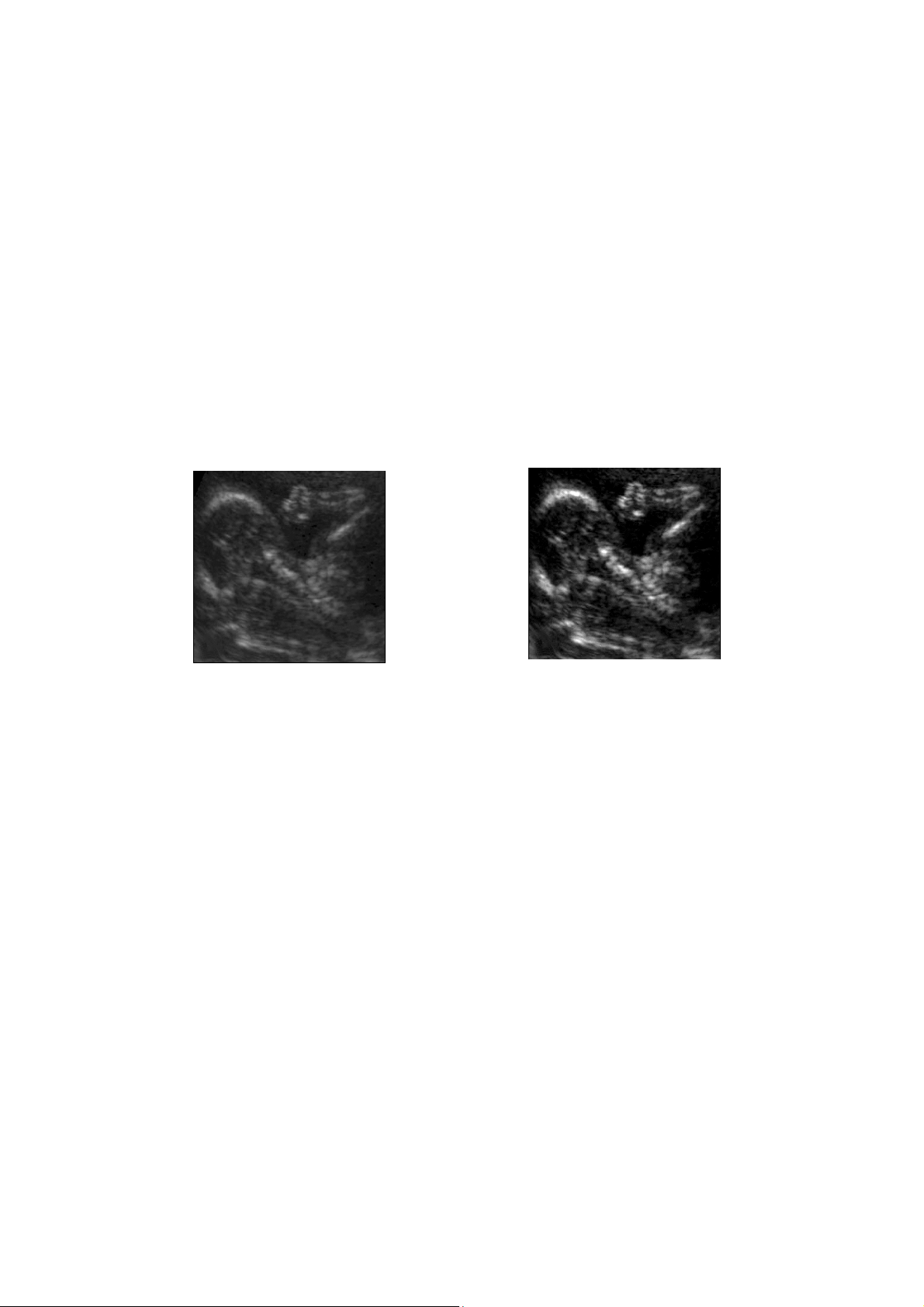

Figure 1-1: A cluster system

Figure 1-1 shows a simplified view of the underlying architecture of clusters using Scali MPI Connect: a number of compute nodes are connected together in an Ethernet network, through which a front-end interfaces the cluster with the corporate network. A high performance interconnect can be added to serve the communication requirements of key applications.

The front-end imports services like file systems from the corporate network to allow users to

run applications and access their data.

Scali MPI Connect implements the MPI standard for a number of popular high performance interconnects, such as Gigabit Ethernet, Infiniband, Myrinet and SCI.

While the high performance interconnect is optional, the networking infrastructure is

mandatory. Without it the nodes in the cluster will have no way of sharing resources. TCP/IP

functionality implemented by the Ethernet network enables the front-end to issue commands

to the nodes, provide them with data and application images, and collect results from the

processing the nodes perform.

The Scali Software Platform provides the necessary software components to combine a number

of commodity computers running Linux into a single computer entity, henceforth called a

cluster.

Scali is targeting its software at users involved in High Performance Computing, also known as

supercomputing, which typically includes CPU-intensive parallel applications. Scali aims to

produce software tools which assist its users in maximizing the power and ease of use of the

computing hardware purchased.


Section: 1.2 Support

CPU-intensive parallel applications are programmed using a programming library called MPI (Message Passing Interface), the state-of-the-art library for high performance computing. Note that the MPI library is NOT described within this manual; MPI is defined by a standards committee, and the API, along with guides for its use, is available free of charge on the Internet. A link to the MPI Standard and other MPI resources can be found in Appendix E, "Related documentation", and on Scali's web site, http://www.scali.com.

Scali MPI Connect (SMC) consists of Scali's implementation of the MPI programming library and

the necessary support programs to launch and run MPI applications. This manual often uses

the term ScaMPI to refer to the specifics of the MPI itself, and not the support applications.

Please note that in earlier releases of Scali Software Platform (SSP), the term ScaMPI was often

used to refer to the parts of SSP which are now called SMC.

SSP is the complete cluster management solution, and includes a GUI, full remote management, power control, remote console and monitoring functionality, as well as a full OS+Scali Manage install/reinstall utility. While we strive to make SSP as simple and painless to use as possible, SMC as a stand-alone product is the bare minimum for MPI usage, and requires that the user install another management solution. Please note that SMC continues to be included in SSP; a stand-alone SMC and SSP should at no time be installed together, and SSP and SMC distributions should never be mixed within a single cluster.

1.2 Support

1.2.1 Scali mailing lists

Scali provides two mailing lists, scali-announce and scali-user, for support and information distribution. For instructions on how to subscribe to a mailing list, please see the Mailing Lists section of http://www.scali.com/.

1.2.2 SMC FAQ

An updated list of Frequently Asked Questions is posted on http://www.scali.com. In

addition, for users who have installed SMC, the version of the FAQ that was current when SMC

was installed is available as a text file in /opt/scali/doc/ScaMPI/FAQ.

1.2.3 SMC release documents

When SMC has been installed, a number of smaller documents such as the FAQ, RELEASE NOTES, README, SUPPORT, LICENSE_TERMS and INSTALL are available as text files in the /opt/scali/doc/ScaMPI directory.

1.2.4 Problem reports

Problem reports should, whenever possible, include a description of the problem, the software version(s), the computer architecture, a code example, and a record of the sequence of events causing the problem. In particular, any information that you can include about what triggered the error will be helpful. The report should be sent by e-mail to support@scali.com.

1.2.5 Platforms supported

SMC is available for a number of platforms. For up-to-date information, please see the SMC

section of http://www.scali.com/. For additional information, please contact Scali at

sales@scali.com.


1.2.6 Licensing

SMC is licensed using the Scali license manager system. In order to run SMC, a valid demo or permanent license must be obtained. Customers with valid software maintenance contracts with Scali may request this directly from license@scali.com. All other requests, including demo licenses, should be directed to sales@scali.com.

1.2.7 Feedback

Scali appreciates any suggestions users may have for improving both this Scali MPI Connect

User’s Guide and the software described herein. Please send your comments by e-mail to

support@scali.com.

Users of parallel tools software using SMC on a Scali System are also encouraged to provide

feedback to the National HPCC Software Exchange (NHSE) - Parallel Tools Library [10]. The

Parallel Tools Library provides information about parallel system software and tools, and also

provides for communication between software authors and users.

1.3 How to read this guide

This guide is written for skilled computer users and professionals. It is assumed that the reader

is familiar with the basic concepts and terminology of computer hardware and software since

none of these will be explained in any detail. Depending on your user profile, some chapters

are more relevant than others.

1.4 Acronyms and abbreviations

| Abbreviation | Meaning |
|---|---|
| AMD64 | The 64-bit instruction set architecture (ISA) that is the 64-bit extension to the Intel x86 ISA. Also known as x86-64. The Opteron and Athlon64 from AMD are the first implementations of this ISA. |
| DAPL | Direct Access Provider Library - DAT instantiation for a given interconnect |
| DAT | Direct Access Transport - transport-independent, platform-independent Application Programming Interfaces that exploit RDMA |
| DET | Direct Ethernet Transport - Scali's DAT implementation for Ethernet-like devices, including channel aggregation |
| EM64T | The Intel implementation of the 64-bit extension to the x86 ISA. See also AMD64. |
| GM | A software interface provided by Myricom for their Myrinet interconnect hardware |
| HCA | Host Channel Adapter. Term used by Infiniband vendors for the hardware adapter. |
| HPC | High Performance Computer |
| IA32 | Instruction set Architecture 32 - Intel x86 architecture |
| IA64 | Instruction set Architecture 64 - Intel 64-bit architecture, Itanium, EPIC |
| Infiniband | A high speed interconnect standard available from a number of vendors |
| MPI | Message Passing Interface - de-facto standard for message passing |
| Myrinet™ | An interconnect developed by Myricom. Myrinet is the product name for the hardware (see GM). |
| NIC | Network Interface Card |
| OEM | Original Equipment Manufacturer |
| Power | A generic term that covers the PowerPC and POWER processor families. These processors are both 32- and 64-bit capable. The common case is a 64-bit OS that supports both 32- and 64-bit executables. See also PPC64. |
| PowerPC | The IBM/Motorola PowerPC processor family. See PPC64. |
| POWER | The IBM POWER processor family. Scali supports the POWER4 and POWER5 versions. See PPC64. |
| PPC64 | Abbreviation for PowerPC 64, the common 64-bit instruction set architecture (ISA) name used in Linux for the PowerPC and POWER processor families. These processors share a common core ISA that allows one single Linux version to be made for all of these processor families. |
| RDMA | Remote DMA - read or write data in remote memory at a given address |
| ScaMPI | Scali's MPI - first generation MPI Connect product, replaced by SMC |
| SCI | Scalable Coherent Interface |
| SMC | Scali MPI Connect - Scali's second generation MPI |
| SMI | Scali Manage Install - OS installation part of Scali Manage |
| SSP | Scali Software Platform, the name of the bundle of all Scali software packages |
| SSP 3.x.y | First generation SSP - WulfKit, Universe, Universe XE, ClusterEdge |
| SSP 4.x.y | Second generation SSP - Scali Manage + SMC (option) |
| VAR | Value Added Reseller |
| x86-64 | See AMD64 and EM64T |

Table 1-1: Acronyms and abbreviations


1.5 Terms and conventions

Unless explicitly specified otherwise, gcc (GNU C compiler) and bash (GNU Bourne-Again SHell) are used in all examples.

| Term | Description |
|---|---|
| Node | A single computer in an interconnected system consisting of more than one computer |
| Cluster | A set of interconnected nodes with the aim to act as one single unit |
| Torus | Used in Scali documents for 2- and 3-dimensional interconnect topologies in which the nodes along each dimension are connected in a ring |
| Scali system | A cluster consisting of Scali components |
| Front end | A computer outside the cluster nodes dedicated to running configuration, monitoring and licensing software |
| MPI process | Instance of an application program with a unique rank within MPI_COMM_WORLD |
| UNIX | Refers to all UNIX and lookalike OSes supported by the SSP, i.e. Solaris and Linux |
| Windows | Refers to Microsoft Windows 98/Me/NT/2000/XP |

Table 1-2: Basic terms

1.6 Typographic conventions

| Term | Description |
|---|---|
| Bold | Program names, options and default values |
| Italics | User input |
| Monospaced | Computer related: shell commands, examples, environment variables, file locations (directories) and contents |
| GUI style font | Refers to menu, button, check box or other items of a GUI |
| # | Command prompt in shell with superuser privileges |
| % | Command prompt in shell with normal user privileges |

Table 1-3: Typographic conventions


Chapter 2 Description of Scali MPI Connect

This chapter describes in detail how Scali MPI Connect (SMC) operates. SMC consists of libraries to be linked and loaded with user application program(s), and a set of executables which control the start-up and execution of the user application program(s). The relationship between these components and their interfaces is described in this chapter. It is necessary to understand this chapter in order to control the execution of parallel processes and to be able to tune Scali MPI Connect for optimal application performance.

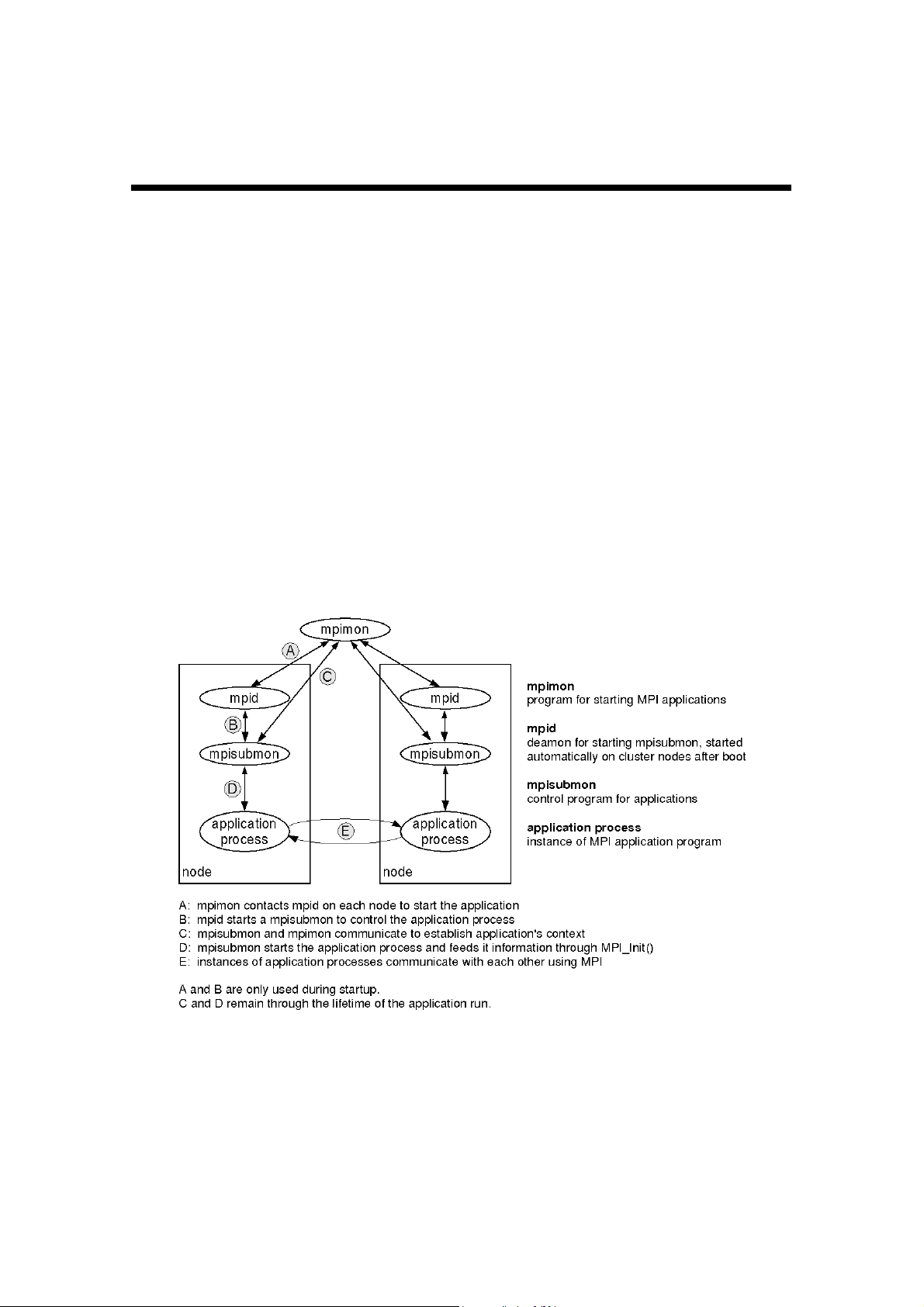

2.1 Scali MPI Connect components

Scali MPI Connect consists of a number of programs, daemons, libraries, include and

configuration files that together implements the MPI functionality needed by applications.

Starting applications rely on the following daemons and launchers:

• mpimon is a monitor program which is the user’s interface for running the application

program.

• mpisubmon is a submonitor program which controls the execution of application

programs. One submonitor program is started on each node per run.

• mpiboot is a bootstrap program used when running in manual-/debug-mode.

• mpid is a daemon program running on all nodes that are able to run SMC. mpid is used

for starting the mpisubmon programs (to avoid using Unix facilities like the remote shell

rsh). mpid is started automatically when a node boots, and must run at all times

Figure 2-1: The way from application startup to execution

Scali MPI Connect Release 4.4 Users Guide 11

Page 24

Section: 2.2 SMC network devices

Figure 2-1 illustrates how applications started with mpimon have their communication system established by a system of daemons on the nodes. This process uses TCP/IP communication over the networking Ethernet, whereas the optional high performance interconnects are used for communication between processes.

mpimon starts by checking as many of the specified options and parameters as possible. The user program names are checked for validity, and the nodes are contacted (using sockets) to ensure they are responding and that mpid is running.

Through mpid, mpimon establishes contact with the nodes and transfers the basic information mpid needs to start the submonitor mpisubmon on each node. Each submonitor then establishes a connection to mpimon for the exchange of control information that enables mpisubmon to start the specified user programs (MPI processes).

As mpisubmon starts the MPI processes, each of them calls MPI_Init(). Inside MPI_Init() the user processes wait for all the involved mpisubmons to coordinate via mpimon. Once all processes are ready, mpimon returns a “start running” message to the processes. They then return from MPI_Init() and start executing the user code.

Stopping an MPI application is requested by the user processes as they enter the MPI_Finalize() call. The local mpisubmon signals mpimon and waits for mpimon to return an “all stopped” message. This message is sent when all processes are waiting in MPI_Finalize(). As the user processes return from MPI_Finalize(), they release their resources and terminate. Then the local mpisubmon terminates, and eventually mpimon terminates.
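The start-up handshake described above behaves like a barrier: no process leaves MPI_Init() until mpimon has heard from all of them. As a conceptual model only, the following C sketch uses POSIX threads: each thread stands in for an MPI process, and the calling thread plays the role of mpimon. The names launch_state, mpi_process and simulate_startup are hypothetical and do not correspond to SMC internals; the real system exchanges messages over sockets between nodes rather than sharing variables.

```c
#include <pthread.h>

/* Conceptual model of the MPI_Init() start-up handshake.
   Threads stand in for MPI processes; the calling thread plays mpimon.
   Hypothetical names; this is not SMC code. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  changed;
    int ready;    /* processes waiting inside "MPI_Init()"        */
    int start;    /* set when "start running" has been broadcast  */
    int running;  /* processes that have returned from MPI_Init() */
} launch_state;

static void *mpi_process(void *arg)
{
    launch_state *s = arg;

    pthread_mutex_lock(&s->lock);
    s->ready++;                               /* report "ready" */
    pthread_cond_broadcast(&s->changed);
    while (!s->start)                         /* wait for "start running" */
        pthread_cond_wait(&s->changed, &s->lock);
    s->running++;                             /* user code would run here */
    pthread_mutex_unlock(&s->lock);
    return NULL;
}

/* Starts up to 64 simulated processes and returns how many were released. */
int simulate_startup(int n)
{
    enum { MAX_PROCS = 64 };
    pthread_t tid[MAX_PROCS];
    launch_state s = { .ready = 0, .start = 0, .running = 0 };
    int created;

    if (n > MAX_PROCS)
        n = MAX_PROCS;
    pthread_mutex_init(&s.lock, NULL);
    pthread_cond_init(&s.changed, NULL);

    for (created = 0; created < n; created++)
        if (pthread_create(&tid[created], NULL, mpi_process, &s) != 0)
            break;

    pthread_mutex_lock(&s.lock);
    while (s.ready < created)                 /* "mpimon" waits for all */
        pthread_cond_wait(&s.changed, &s.lock);
    s.start = 1;                              /* broadcast "start running" */
    pthread_cond_broadcast(&s.changed);
    pthread_mutex_unlock(&s.lock);

    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);

    pthread_cond_destroy(&s.changed);
    pthread_mutex_destroy(&s.lock);
    return s.running;
}
```

simulate_startup(4) returns 4 only after every simulated process has reported ready and been released. On older glibc versions the sketch must be compiled with -pthread.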

2.2 SMC network devices

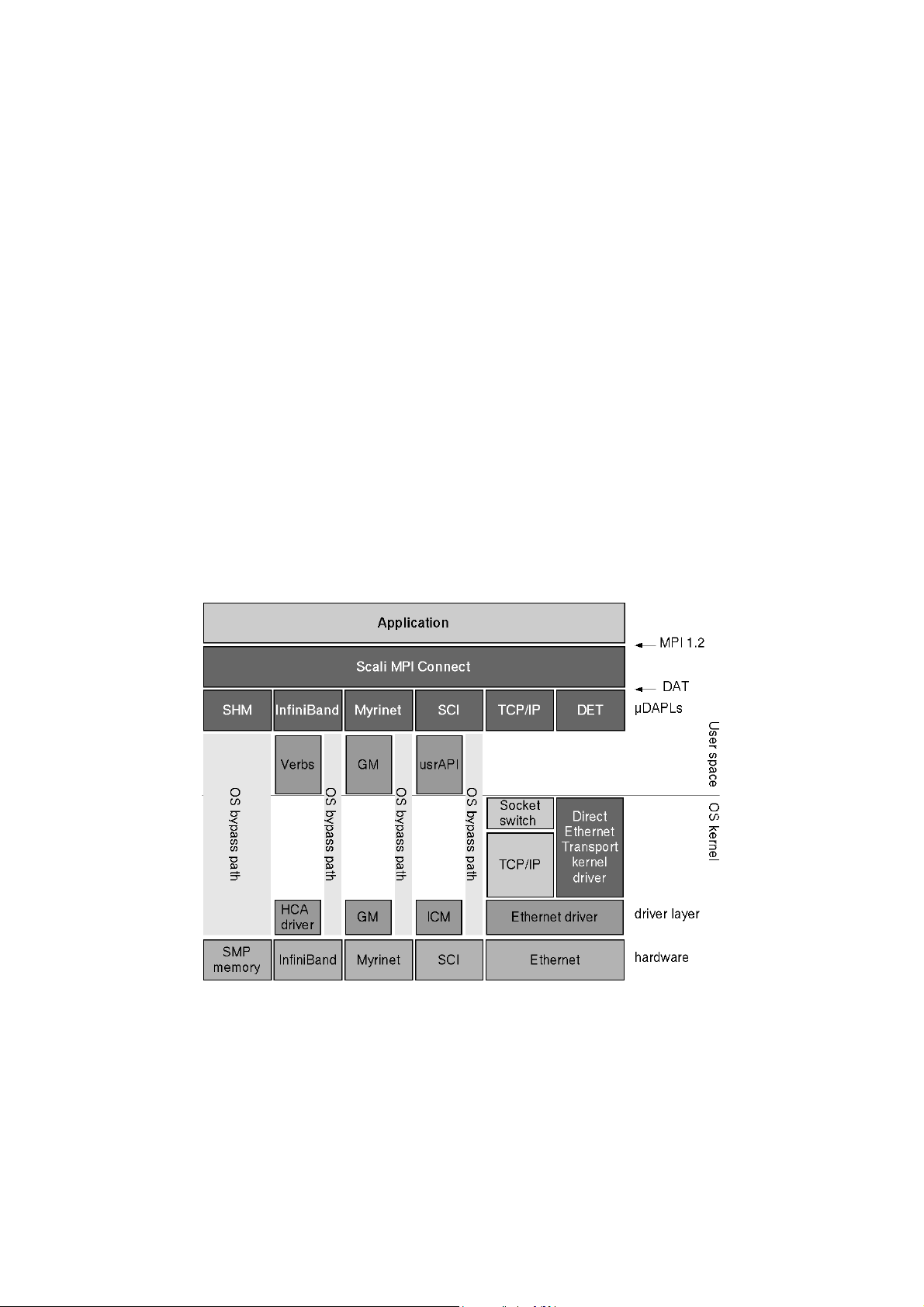

Figure 2-2: Scali MPI Connect relies on DAT to interface to a number of interconnects

Beginning with SSP 4.0.0 and SMC 4.0.0, Scali MPI offers generic support for interconnects. This does not mean that every interconnect is supported out of the box, since SMC still requires a driver for each interconnect. From SMC's point of view, however, a driver is just a library, which in turn may require a kernel driver (e.g. Myrinet or SCI) or may not (e.g. TCP/IP).

These provider libraries provide a network device to SMC.

2.2.1 Network devices

There are two basic types of network devices in SMC: native and DAT. The native devices are built-in and are neither replaceable nor upgradable without replacing the Scali MPI Connect package. There are currently five built-in devices: SMP, TCP, IB, GM and SCI. The Release Notes included with the Scali MPI Connect package have more details on this issue.

To find out which network device is used between two processes, set the environment variable SCAMPI_NETWORKS_VERBOSE=2. With this value, the MPI library prints a table during startup showing, for every process, which device it uses to communicate with every other process.

2.2.1.1 Direct Access Transport (DAT)

The other type of device uses the DAT uDAPL API, which gives generic third-party vendors an open API. uDAPL is an abbreviation for User DAT Provider Library. This is a shared library that SMC loads at runtime through the static DAT registry. These libraries are normally listed in /etc/dat.conf. For clusters using ‘exotic’ interconnects whose vendor provides a uDAPL shared object, these can be added to this file (if this isn’t done automatically by the vendor).

The device name is given by the uDAPL, and the interconnect vendor must provide it.

Please note that Scali has a certification program, and may not provide support for unknown

third party vendors.

The DAT header files and registry library conforming to the uDAPL v1.1 specification are provided by the dat-registry package.

For more information on DAT, please refer to http://www.datcollaborative.org.

2.2.2 Shared Memory Device

The SMP device is a shared memory device that is used exclusively for intra-node communication and uses SYS V IPC shared memory. Multi-CPU nodes are common in clusters, and SMP provides optimal communication between the CPUs. In cases where only one processor per node is used, SMP is not used.
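The System V IPC shared memory that the SMP device relies on can be illustrated with the standard shmget()/shmat() calls. The sketch below is a generic example of the mechanism, not SMC's implementation: a parent process and a forked child on the same node exchange a string through one shared segment. The function name shm_round_trip and the 4096-byte segment size are arbitrary choices made for illustration.

```c
#include <sys/types.h>
#include <sys/ipc.h>
#include <sys/shm.h>
#include <sys/wait.h>
#include <unistd.h>
#include <string.h>

#define SEG_SIZE 4096   /* arbitrary segment size for this sketch */

/* Generic System V shared-memory exchange between two processes on one
   node. Illustrates the IPC mechanism only; it is not the SMP device.
   Copies the child's message into out and returns its length, or -1. */
int shm_round_trip(const char *msg, char *out, size_t outlen)
{
    int n = -1;
    int id = shmget(IPC_PRIVATE, SEG_SIZE, IPC_CREAT | 0600);
    if (id < 0)
        return -1;

    char *buf = shmat(id, NULL, 0);       /* map segment into our space */
    if (buf == (void *)-1) {
        shmctl(id, IPC_RMID, NULL);
        return -1;
    }
    buf[0] = '\0';

    pid_t pid = fork();                   /* child inherits the mapping */
    if (pid == 0) {                       /* child: write the message   */
        strncpy(buf, msg, SEG_SIZE - 1);
        buf[SEG_SIZE - 1] = '\0';
        _exit(0);
    }
    if (pid > 0 && waitpid(pid, NULL, 0) == pid && outlen > 0) {
        strncpy(out, buf, outlen - 1);    /* parent: read after child exits */
        out[outlen - 1] = '\0';
        n = (int)strlen(out);
    }

    shmdt(buf);
    shmctl(id, IPC_RMID, NULL);           /* remove segment once detached */
    return n;
}
```

A System V segment outlives the processes that created it unless it is marked with IPC_RMID, which is why the sketch always removes it; leftover segments can be inspected with ipcs -m.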

2.2.3 Ethernet Devices

An Ethernet for networking is a basic requirement for a cluster. For some applications it also has enough performance to carry application communication. To serve this, Scali MPI Connect has a TCP device. In addition, there are Direct Ethernet Transport (DET) devices which implement a protocol devised by Scali for aggregating multiple Ethernet-type interconnects.

2.2.3.1 TCP

The TCP device is a generic device that works over any TCP/IP network, even WANs. This network device requires only that the node names given to mpimon map correctly to the nodes' IP addresses. TCP/IP connectivity is required for SMC operation, and for this reason the TCP device is always operational.

Note: Users should always append the TCP device at the end of a device list as the device of last resort. This way, communication falls back to the management Ethernet, which has to be present anyway for the cluster to work.
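The advice to put the TCP device last assumes that the devices in a list are tried in order for each pair of processes. The following C sketch models that assumption only; it does not reproduce SMC's selection code, and the device names ("ib0", "tcp") and the reachability rule in example_reach() are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical model of an ordered device list: for each peer, the
   first device in the list that can reach it is used. */
typedef int (*reach_fn)(const char *device, int peer);

const char *pick_device(const char *const devices[], size_t n,
                        int peer, reach_fn can_reach)
{
    for (size_t i = 0; i < n; i++)
        if (can_reach(devices[i], peer))
            return devices[i];
    return NULL;                  /* no device in the list reaches peer */
}

/* Example rule: "ib0" only reaches peers 0-3, while "tcp" reaches every
   node over the management Ethernet. */
int example_reach(const char *device, int peer)
{
    if (strcmp(device, "tcp") == 0)
        return 1;
    if (strcmp(device, "ib0") == 0)
        return peer >= 0 && peer < 4;
    return 0;
}
```

With the list {"ib0", "tcp"}, a peer reachable over ib0 uses ib0 and any other peer falls back to tcp; without the trailing tcp entry, the unreachable peer is left with no device at all.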


2.2.3.2 DET

Scali has developed a device called Direct Ethernet Transport (DET) to improve Ethernet performance. This device bypasses the TCP/IP stack and uses raw Ethernet frames for sending messages. DET devices can be bonded over multiple Ethernet interfaces.

The /opt/scali/sbin/detctl command provides a means of creating and deleting DET

devices. /opt/scali/bin/detstat can be used to obtain statistics on the devices.

2.2.3.3 Using detctl

detctl has the following syntax:

detctl -a [-q] [-c] <hca index> <device1> <device2> ...

detctl -d [-q] <hca index>

detctl -l [-q]

Examples:

• Adding new DET devices temporarily with the detctl utility:

root# detctl -a 0 eth0 # creates a det0 device using eth0 as transport device.

root# detctl -a 1 eth1 eth2 # creates a det1 device using eth1 and eth2 as aggregated transport devices.

• Removing DET devices with detctl:

root# detctl -d 0 # removes DET device 0 (det0) from the current configuration.

root# detctl -d 1 # removes DET device 1 (det1) from the current configuration.

• Listing active DET devices

root# detctl -l # lists all DET devices currently configured.

Please note that aggregating devices usually requires a special switch configuration. The aggregated devices share the same Ethernet (MAC) address, so either the eth1 interfaces must be on one VLAN and the eth2 interfaces on another, or all the eth1 interfaces must be connected to one Ethernet switch and all the eth2 interfaces to another switch.

Using detctl to add and remove devices is not permanent, as the contents of the /opt/scali/kernel/scadet.conf configuration file take precedence. This file has the following format:

# hca <hca index> <ethernet devices>

Permanent changes must be made by editing /opt/scali/kernel/scadet.conf. For example, to make the configuration from the example above permanent, add the following lines:

hca 0 eth0

hca 1 eth1 eth2
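The scadet.conf format above is simple enough to read line by line. As a sketch, assuming whitespace-separated fields of the form hca <hca index> <ethernet devices> and that lines starting with # are comments, the following C function extracts the index and the device names from one line. The function name parse_hca_line and the 15-character device-name limit are assumptions made for illustration; this is not the parser used by Scali's tools.

```c
#include <stdio.h>
#include <string.h>

#define DEV_NAME_LEN 16   /* assumed maximum device-name length + 1 */

/* Hypothetical parser for one line of /opt/scali/kernel/scadet.conf,
   assuming the format "hca <hca index> <ethernet devices>".
   Returns the number of devices stored in devs, 0 for a blank or
   comment line, or -1 if the line is malformed. */
int parse_hca_line(const char *line, int *hca_index,
                   char devs[][DEV_NAME_LEN], int max_devs)
{
    char copy[256];
    const char *sep = " \t\r\n";

    strncpy(copy, line, sizeof copy - 1);
    copy[sizeof copy - 1] = '\0';

    char *tok = strtok(copy, sep);
    if (tok == NULL || tok[0] == '#')
        return 0;                           /* blank line or comment */
    if (strcmp(tok, "hca") != 0)
        return -1;                          /* unknown keyword */

    tok = strtok(NULL, sep);
    if (tok == NULL || sscanf(tok, "%d", hca_index) != 1)
        return -1;                          /* missing or bad index */

    int n = 0;
    while (n < max_devs && (tok = strtok(NULL, sep)) != NULL) {
        strncpy(devs[n], tok, DEV_NAME_LEN - 1);
        devs[n][DEV_NAME_LEN - 1] = '\0';
        n++;
    }
    return n > 0 ? n : -1;                  /* at least one device needed */
}
```

Applied to the line hca 1 eth1 eth2, the function stores index 1 and the devices eth1 and eth2, and returns 2; a line with an index but no Ethernet devices is rejected.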

2.2.3.4 Using detstat

To gather transmission statistics (packets transmitted, received and lost) for a DET device, use detstat. It can also be used to reset the statistics for DET devices.

detstat has the following syntax:

detstat [-r] [-a] <hca name>

Examples:

• root# detstat det0 # lists statistics for the det0 device, if it exists.

• root# detstat -a # lists statistics for all existing DET devices.

• root# detstat -r det0 # resets statistics for the det0 device.

• root# detstat -r -a # resets statistics for all DET devices.

2.2.4 Myrinet

2.2.4.1 GM

This is an RDMA-capable device that uses the Myricom GM driver and library. A GM release above 2.0 is required. This device is straightforward and requires no configuration other than the presence of the libgm.so library in the library path (see /etc/ld.so.conf).

Note:

Myricom GM software is not provided by Scali. If you have purchased a Myrinet interconnect, you have the right to use the GM source, and a source tarball is available from Myricom. It is necessary to obtain the GM source, since it must be compiled for each kernel version. Scali provides tools for generating binary RPMs to ease installation and management. These tools are provided in the scagmbuilder package; see the Release Notes/Readme file for detailed instructions.

If you used Scali Manage to install your compute nodes, and supplied it with the GM source tarball, the installation is already complete.

2.2.5 Infiniband

2.2.5.1 IB

InfiniBand is a relatively new interconnect that has been available since 2002 and became affordable in 2003. On PCI-X based systems you can expect latencies around 5 µs and bandwidth up to 700-800 MB/s (please note that performance results may vary based on processors, memory subsystem, and the PCI bridge in the chipsets).

There are various InfiniBand vendors that provide slightly different hardware and software environments. Scali has established relationships with the following vendors: Mellanox, Silverstorm, Cisco, and Voltaire.

See the release notes for the exact versions of the software stacks that are supported. Scali provides a utility known as ScaIBbuilder that automates the installation of some of these stacks (see the ScaIBbuilder release notes).

The different vendors' InfiniBand switches vary in feature sets, but the most important difference is whether or not they have a built-in subnet manager. An InfiniBand network must have a subnet manager (SM), and if the switches do not come with a built-in SM, one has to be started on a node attached to the IB network. The software SMs of choice are OpenSM and minism. If you have SM-less switches, your vendor will provide an SM as part of their software bundle.

SMC uses either the uDAPL (User Direct Access Programming Library) supplied by the IB vendor, or the low-level VAPI/IBA layer. DAT is an established standard and is guaranteed to work with SMC. However, better performance is usually achieved with the VAPI/IBA interfaces. Note that VAPI is an API that is in flux, and SMC is not guaranteed to work with all (current or future) versions of VAPI.


2.2.6 SCI

This is a built-in device that uses the Scali SCI driver and library (ScaSCI). This driver is for the

Dolphin SCI network cards. Please see the ScaSCI Release Notes for specific requirements. This

device is straightforward and requires no configuration itself, but for multi-dimensional toruses

(2D and 3D) the Scali SCI Management system (ScaConf) needs to be running somewhere in

your system. Refer to Appendix C for installation and configuration of the Scali SCI

Management software.

2.3 Communication protocols on DAT-devices

In SMC, the communication protocol used to transfer data between a sender and a receiver

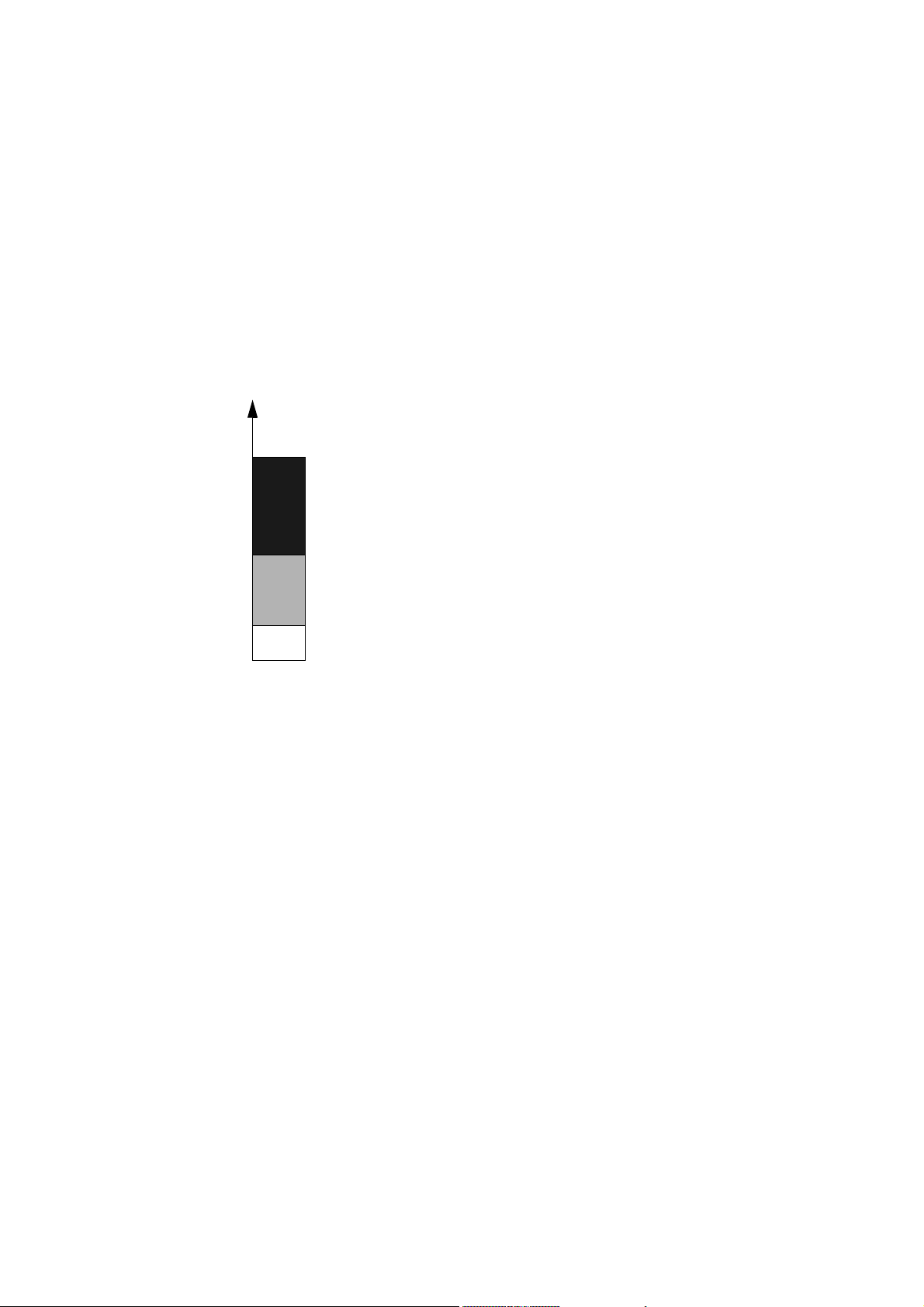

depends on the size of the message to transmit, as illustrated in Figure 2-3:.

Protocols in order of increasing message size:

• Inlining protocol: 0 <= message size <= channel_inline_threshold

• Eagerbuffering protocol: channel_inline_threshold < message size <= eager_size

• Transporter protocol: message size > eager_size

Figure 2-3: Thresholds for different communication protocols

The thresholds that determine whether a message is sent with the inlining, eagerbuffering or transporter protocol have default values, which can be changed from the application launch program (mpimon) described in chapter 3.
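The selection rule in Figure 2-3 can be sketched as a small C function. This is a minimal illustration, not SMC source code: the enum and select_protocol() are hypothetical names, and only the threshold names channel_inline_threshold and eager_size come from the text.

```c
#include <stddef.h>

/* Hypothetical names for illustration; not part of the SMC API. */
typedef enum { PROTO_INLINE, PROTO_EAGER, PROTO_TRANSPORTER } protocol_t;

/* Select a protocol from the message size, following Figure 2-3. */
static protocol_t select_protocol(size_t msg_size,
                                  size_t channel_inline_threshold,
                                  size_t eager_size)
{
    if (msg_size <= channel_inline_threshold)
        return PROTO_INLINE;      /* 0 <= size <= channel_inline_threshold */
    if (msg_size <= eager_size)
        return PROTO_EAGER;       /* channel_inline_threshold < size <= eager_size */
    return PROTO_TRANSPORTER;     /* size > eager_size */
}
```

For example, with hypothetical thresholds of 512 and 65536 bytes (not SMC defaults), a 513-byte message falls in the eagerbuffering range and a 65537-byte message uses the transporter protocol.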

Figure 2-4 illustrates the node resources associated with communication, and the mechanisms implemented in Scali MPI Connect for handling messages of different sizes. The three communication protocols from Figure 2-3 rely on buffers located in the main memory of the nodes. This memory is allocated as shared, i.e., it is not private to a particular process in the node. Each process has one set of receiving buffers for each of the processes it communicates with. As the figure shows, all communication relies on the sending process depositing messages directly into the communication buffers of the receiver. For the inlining and eagerbuffering protocols, management of the buffer resources does not require participation from the receiving process, because these buffers are designed as ring buffers.
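The sender-deposits, receiver-releases scheme can be illustrated with a single-producer/single-consumer ring buffer built on C11 atomics. This is a simplified sketch, not SMC's implementation: the ring type, its entry count and its entry size are hypothetical, and in SMC the buffer would live in shared memory or be written remotely by the interconnect. Because only the sender advances head and only the receiver advances tail, freeing an entry needs no message back to the sender.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define RING_ENTRIES 8   /* hypothetical entry count (power of two) */
#define ENTRY_SIZE   64  /* hypothetical entry size in bytes */

/* One ring per sender/receiver pair, in memory both sides can reach. */
typedef struct {
    unsigned char entry[RING_ENTRIES][ENTRY_SIZE];
    size_t        len[RING_ENTRIES];
    atomic_size_t head;  /* advanced only by the sender   */
    atomic_size_t tail;  /* advanced only by the receiver */
} ring_t;

static void ring_init(ring_t *r)
{
    atomic_init(&r->head, 0);
    atomic_init(&r->tail, 0);
}

/* Sender: deposit a message directly into the receiver's ring.
 * Returns false if the ring is full or the message exceeds one entry. */
static bool ring_send(ring_t *r, const void *msg, size_t n)
{
    size_t head = atomic_load_explicit(&r->head, memory_order_relaxed);
    size_t tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (n > ENTRY_SIZE || head - tail == RING_ENTRIES)
        return false;
    size_t slot = head % RING_ENTRIES;
    memcpy(r->entry[slot], msg, n);
    r->len[slot] = n;
    /* Release: the payload becomes visible before the new head value. */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}

/* Receiver: copy out the oldest entry, then free it by advancing tail.
 * The sender sees the free slot on its next read of tail, so no
 * acknowledgement message is needed. Returns false if the ring is empty. */
static bool ring_recv(ring_t *r, void *out, size_t *n_out)
{
    size_t tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    size_t head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (head == tail)
        return false;
    size_t slot = tail % RING_ENTRIES;
    *n_out = r->len[slot];
    memcpy(out, r->entry[slot], *n_out);
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}
```

A full ring simply makes ring_send() fail until the receiver has consumed an entry, which is the point where a real implementation would wait or fall back.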

2.3.1 Channel buffer

The channel ringbuffer is divided into equally sized entries. The entry size differs for different architectures and networks; see “Scali MPI Connect Release Notes” for details. An entry in the ringbuffer, which is used to hold the information forming the message envelope, is reserved each time a message is sent, and is used by the inline protocol, the eagerbuffering protocol, and the transporter protocol. In addition, one or more entries are used by the inline protocol for the application data being transmitted.


Figure 2-4: Resources and communication concepts in Scali MPI Connect

2.3.2 Inlining protocol

With the inlining protocol, the application's data is included in the message header. The inlining protocol uses one or more channel ringbuffer entries.

2.3.3 Eagerbuffering protocol

The eagerbuffering protocol is used when medium-sized messages are to be transferred.

The protocol uses a scheme where the buffer resources which are allocated by the sender are

released by the receiver, without any explicit communication between the two communicating

partners.

The eagerbuffering protocol uses one channel ringbuffer entry for the message header, and one

eagerbuffer for the application data being sent.

2.3.4 Transporter protocol

The transporter protocol is used when large messages are to be transferred. It uses one channel ringbuffer entry for the message header, and transporter buffers for the application data being sent. The protocol handles fragmentation and reassembly of large messages, i.e., messages larger than the size of a transporter ringbuffer entry (transporter_size).
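Fragmentation into transporter_size pieces and reassembly at the receiver can be sketched as follows. The staging buffer stands in for a transporter ringbuffer entry; the function names are hypothetical, and both the sending and receiving copies happen in one process purely for illustration.

```c
#include <stddef.h>
#include <string.h>

/* Number of transporter_size fragments needed (ceiling division).
 * transporter_size must be greater than 0. */
static size_t fragment_count(size_t msg_size, size_t transporter_size)
{
    return (msg_size + transporter_size - 1) / transporter_size;
}

/* Move a message from src to dst one fragment at a time through a
 * staging buffer 'entry' of transporter_size bytes, which models one
 * transporter ringbuffer entry. Returns the number of fragments used. */
static size_t transfer_fragmented(unsigned char *dst, const unsigned char *src,
                                  size_t msg_size, unsigned char *entry,
                                  size_t transporter_size)
{
    size_t frags = fragment_count(msg_size, transporter_size);
    for (size_t i = 0; i < frags; i++) {
        size_t off = i * transporter_size;
        size_t len = msg_size - off < transporter_size ? msg_size - off
                                                       : transporter_size;
        memcpy(entry, src + off, len);  /* sender fills the entry      */
        memcpy(dst + off, entry, len);  /* receiver reassembles in place */
    }
    return frags;
}
```

With a hypothetical transporter_size of 4096 bytes, a 10000-byte message is sent as three fragments of 4096, 4096 and 1808 bytes.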


2.3.5 Zerocopy protocol

The zerocopy protocol is a special case of the transporter protocol. It includes the same steps as the transporter protocol, except that data is written directly into the receiver's buffer instead of being buffered in the transporter ringbuffer.

The zerocopy protocol is selected if the underlying hardware can support it. To disable it, set either the zerocopy_count or the zerocopy_size parameter to 0.
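The zerocopy selection described above can be expressed as a predicate. This sketch assumes zerocopy is only considered for transporter-sized messages and that hardware support is known as a boolean; the function name and the hw_supports_zerocopy flag are hypothetical. zerocopy_count and zerocopy_size are the parameter names from the text, used here only as on/off gates because their exact semantics are not described in this section.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper: decide whether a message would use zerocopy. */
static bool use_zerocopy(size_t msg_size, size_t eager_size,
                         bool hw_supports_zerocopy,
                         size_t zerocopy_count, size_t zerocopy_size)
{
    if (msg_size <= eager_size)     /* assumption: only transporter-sized messages */
        return false;
    if (!hw_supports_zerocopy)      /* hardware cannot write into the receiver's buffer */
        return false;
    /* Setting either parameter to 0 disables zerocopy. */
    return zerocopy_count != 0 && zerocopy_size != 0;
}
```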

2.4 Support for other interconnects

A uDAPL 1.1 module must be developed to interface other interconnects to Scali MPI Connect.

The listing below identifies the particular functions that must be implemented in order for SMC

to be able to use a uDAPL-implementation:

dat_cr_accept

dat_cr_query

dat_cr_reject

dat_ep_connect

dat_ep_create

dat_ep_disconnect

dat_ep_free

dat_ep_post_rdma_write

dat_evd_create

dat_evd_dequeue

dat_evd_free

dat_evd_wait

dat_ia_close

dat_ia_open

dat_ia_query

dat_lmr_create

dat_lmr_free

dat_psp_create

dat_psp_free

dat_pz_create

dat_pz_free

dat_set_consumer_context

2.5 MPI-2 Features

At present, SMC does not implement the full MPI-2 functionality. However, some users require parts of the MPI-2 functionality, in particular the MPI-I/O functions. To meet these needs, Scali now uses the Open Source ROMIO software to offer this functionality.


ROMIO is a high-performance, portable implementation of MPI-IO (the I/O chapter of MPI-2) and has become a de facto standard for MPI-I/O in terms of interface and semantics. ROMIO is a library parallel to the MPI library for the application, but it depends on an MPI implementation to set up the environment and perform communication. See chapter 3.2.6 for more information on how to compile and link applications that use MPI-IO.


Chapter 3 Using Scali MPI Connect

This chapter describes how to set up, compile, link and run a program using Scali MPI Connect, and briefly discusses some useful tools for debugging and profiling.

Please note that the "Scali MPI Connect Release Notes" are also available as a file in the

/opt/scali/doc/ScaMPI directory.

3.1 Setting up a Scali MPI Connect environment

3.1.1 Scali MPI Connect environment variables

The use of Scali MPI Connect requires that some environment variables be defined. These are

usually set in the standard startup scripts (e.g., .bashrc when using bash), but can also be

defined manually.

MPI_HOME Installation directory. For a standard installation, the variable should be set as:

export MPI_HOME=/opt/scali

LD_LIBRARY_PATH Path to dynamic link libraries. Must be set to include the path to the

directory where these libraries can be found:

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$MPI_HOME/lib

PATH Path variable. Must be updated to include the path to the directory where

the MPI binaries can be found:

export PATH=${PATH}:$MPI_HOME/bin

Normally, the Scali MPI Connect library’s header files mpi.h and mpif.h reside in the

$MPI_HOME/include directory.

3.2 Compiling and linking

MPI is an "Application Programming Interface" (API) and not an "Application Binary Interface" (ABI). This means that, in general, applications should be recompiled and relinked when used with Scali MPI Connect. Since the MPICH implementation is widely used, Scali has made SMC ABI-compatible with it, depending on the versions of MPICH and SMC used. Please check the "Scali MPI Connect Release Notes" for details. For applications that are dynamically linked with MPICH, it should only be necessary to change the library path (LD_LIBRARY_PATH). For applications whose object files are available, only relinking is needed.

3.2.1 Running

Start the hello-world program on the three nodes called nodeA, nodeB and nodeC.

% mpimon hello-world -- nodeA 1 nodeB 1 nodeC 1

The hello-world program should produce the following output:

Hello-world, I'm rank 0; Size is 3

Hello-world, I'm rank 1; Size is 3

Hello-world, I'm rank 2; Size is 3


3.2.2 Compiler support

Scali MPI Connect is a C library built using the GNU compiler. Applications can, however, be compiled with most compilers, as long as they are linked with the GNU runtime library.

The details of the process of linking with the Scali MPI Connect libraries vary depending on

which compiler is used. Check the "Scali MPI Connect Release Notes" for information on

supported compilers and how linking is done.

When compiling, the following string must be included in the compiler flags (bash syntax):

“-I$MPI_HOME/include”

The pattern for compiling is:

user% gcc -c -I$MPI_HOME/include hello-world.c

user% g77 -c -I$MPI_HOME/include hello-world.f

3.2.3 Linker flags

The following string outlines the setup for the necessary linker flags (bash syntax):

“-L/opt/scali/lib -lmpi”

The following MPI libraries are available:

• libmpi - Standard library containing the C API.

• libfmpi - Library containing the Fortran API wrappers.

The pattern for linking is:

user% gcc hello-world.o -L$MPI_HOME/lib -lmpi -o hello-world

user% g77 hello-world.o -L$MPI_HOME/lib -lfmpi -lmpi -o hello-world

3.2.4 Notes on Compiling and linking on AMD64 and EM64T

AMD's AMD64 and Intel's EM64T (also known as x86-64) are instruction set architectures (ISAs) that add 64-bit extensions to the Intel x86 (IA-32) ISA.

These processors are capable of running 32-bit programs at full speed while running a 64-bit OS. For this reason, Scali supports running both 32-bit and 64-bit MPI programs on a 64-bit OS.

Having both 32-bit and 64-bit libraries installed at the same time requires some adjustments to the compiler and linker flags.

All compilers for x86_64 generate 64-bit code by default, but have flags for 32-bit code generation. For gcc/g77 these are -m32 and -m64 for generating 32-bit and 64-bit code, respectively. For the Portland Group compilers these are -tp k8-32 and -tp k8-64. For other compilers, please check the compiler documentation.
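Because 32-bit and 64-bit objects cannot be mixed, it can help to confirm which code model a build actually produced. The following sketch is a hypothetical helper, not a Scali tool: it reports the pointer width and uses the __x86_64__ and __i386__ macros that gcc predefines for 64-bit and 32-bit x86 targets.

```c
#include <limits.h>

/* Width of a data pointer in bits: 32 for -m32 builds, 64 for -m64 builds. */
static int pointer_bits(void)
{
    return (int)(sizeof(void *) * CHAR_BIT);
}

/* x86 target this file was compiled for, based on gcc's predefined
 * macros; returns "other" on non-x86 targets. */
static const char *x86_target(void)
{
#if defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "i386";
#else
    return "other";
#endif
}
```

Compiling the same file once with gcc -m32 and once with gcc -m64 on an x86_64 system should make pointer_bits() return 32 and 64 respectively; the -m32 build requires the 32-bit C runtime libraries to be installed.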

It is not possible to link 32-bit and 64-bit object code into one executable (nor can they be mixed through dynamic linking), so there must be a double set of libraries. It is common convention on x86_64 systems