Page 1

EMC® VMAX3™ Family

Product Guide

VMAX 100K, VMAX 200K, VMAX 400K

with HYPERMAX OS

REVISION 6.5

Page 2

Copyright © 2014-2017 EMC Corporation. All rights reserved.

Published May 2017

Dell believes the information in this publication is accurate as of its publication date. The information is subject to change without notice.

THE INFORMATION IN THIS PUBLICATION IS PROVIDED “AS-IS.” DELL MAKES NO REPRESENTATIONS OR WARRANTIES OF ANY KIND WITH RESPECT TO THE INFORMATION IN THIS PUBLICATION, AND SPECIFICALLY DISCLAIMS IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. USE, COPYING, AND DISTRIBUTION OF ANY DELL SOFTWARE DESCRIBED IN THIS PUBLICATION REQUIRES AN APPLICABLE SOFTWARE LICENSE.

Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be the property of their respective owners.

Published in the USA.

EMC Corporation

Hopkinton, Massachusetts 01748-9103

1-508-435-1000 In North America 1-866-464-7381

www.EMC.com

2 Product Guide VMAX 100K, VMAX 200K, VMAX 400K with HYPERMAX OS

Page 3

CONTENTS

Figures 7

Tables 9

Preface 11

Revision history...........................................................................................18

Chapter 1 VMAX3 with HYPERMAX OS 21

Introduction to VMAX3 with HYPERMAX OS............................................. 22

VMAX3 Family 100K, 200K, 400K arrays.................................................... 23

VMAX3 Family specifications.........................................................24

HYPERMAX OS..........................................................................................34

What's new in HYPERMAX OS 5977 Q2 2017................................34

HYPERMAX OS emulations........................................................... 35

Container applications .................................................................. 36

Data protection and integrity.........................................................39

Chapter 2 Management Interfaces 47

Management interface versions..................................................................48

Unisphere for VMAX...................................................................................48

Workload Planner.......................................................................... 49

FAST Array Advisor....................................................................... 49

Unisphere 360............................................................................................ 49

Solutions Enabler........................................................................................49

Mainframe Enablers................................................................................... 50

Geographically Dispersed Disaster Restart (GDDR)....................................51

SMI-S Provider........................................................................................... 51

VASA Provider............................................................................................ 51

eNAS management interface .....................................................................52

ViPR suite...................................................................................................52

ViPR Controller..............................................................................52

ViPR Storage Resource Management............................................52

vStorage APIs for Array Integration........................................................... 53

SRDF Adapter for VMware® vCenter™ Site Recovery Manager.................54

SRDF/Cluster Enabler ............................................................................... 54

EMC Product Suite for z/TPF....................................................................54

SRDF/TimeFinder Manager for IBM i......................................................... 55

AppSync.....................................................................................................55

Chapter 3 Open systems features 57

HYPERMAX OS support for open systems.................................................58

Backup and restore to external arrays........................................................ 59

Data movement............................................................................. 59

Typical site topology......................................................................60

ProtectPoint solution components................................................. 61

ProtectPoint and traditional backup.............................................. 62

Basic backup workflow.................................................................. 63

Page 4

Basic restore workflow.................................................................. 65

VMware Virtual Volumes............................................................................ 69

VVol components...........................................................................69

VVol scalability...............................................................................70

VVol workflow................................................................................70

Chapter 4 Mainframe Features 73

HYPERMAX OS support for mainframe...................................................... 74

IBM z Systems functionality support.......................................................... 74

IBM 2107 support....................................................................................... 75

Logical control unit capabilities...................................................................75

Disk drive emulations.................................................................................. 76

Cascading configurations........................................................................... 76

Chapter 5 Provisioning 77

Thin provisioning.........................................................................................78

Thin devices (TDEVs).................................................................... 78

Thin CKD....................................................................................... 79

Thin device oversubscription......................................................... 79

Open Systems-specific provisioning.............................................. 79

Mainframe-specific provisioning.....................................................81

Chapter 6 Storage Tiering 83

Fully Automated Storage Tiering................................................................ 84

Pre-configuration for FAST........................................................... 85

FAST allocation by storage resource pool...................................... 87

Service Levels............................................................................................ 88

FAST/SRDF coordination...........................................................................89

FAST/TimeFinder management..................................................................90

External provisioning with FAST.X............................................................. 90

Chapter 7 Native local replication with TimeFinder 91

About TimeFinder....................................................................................... 92

Interoperability with legacy TimeFinder products.......................... 92

Targetless snapshots.....................................................................96

Secure snaps................................................................................. 96

Provision multiple environments from a linked target.................... 96

Cascading snapshots..................................................................... 97

Accessing point-in-time copies......................................................98

Mainframe SnapVX and zDP.......................................................................98

Chapter 8 Remote replication solutions 101

Native remote replication with SRDF........................................................ 102

SRDF 2-site solutions...................................................................103

SRDF multi-site solutions.............................................................105

Concurrent SRDF solutions.......................................................... 107

Cascaded SRDF solutions............................................................ 108

SRDF/Star solutions.................................................................... 109

Interfamily compatibility................................................................114

SRDF device pairs......................................................................... 117

SRDF device states.......................................................................119

Dynamic device personalities........................................................122

Page 5

SRDF modes of operation............................................................ 122

SRDF groups................................................................................ 124

Director boards, links, and ports...................................................125

SRDF consistency........................................................................ 126

SRDF data compression............................................................... 126

SRDF write operations................................................................. 127

SRDF/A cache management........................................................ 132

SRDF read operations.................................................................. 135

SRDF recovery operations............................................................137

Migration using SRDF/Data Mobility............................................140

SRDF/Metro ............................................................................................ 145

SRDF/Metro integration with FAST............................................. 147

SRDF/Metro life cycle..................................................................147

SRDF/Metro resiliency.................................................................149

Witness failure scenarios..............................................................153

Deactivate SRDF/Metro.............................................................. 154

SRDF/Metro restrictions............................................................. 155

RecoverPoint............................................................................................ 156

Remote replication using eNAS.................................................................156

Chapter 9 Blended local and remote replication 159

SRDF and TimeFinder............................................................................... 160

R1 and R2 devices in TimeFinder operations.................................160

SRDF/AR..................................................................................... 160

SRDF/AR 2-site solutions............................................................. 161

SRDF/AR 3-site solutions............................................................. 161

TimeFinder and SRDF/A.............................................................. 162

TimeFinder and SRDF/S.............................................................. 163

SRDF and EMC FAST coordination.............................................. 163

Chapter 10 Data Migration 165

Overview...................................................................................................166

Data migration solutions for open systems environments..........................166

Non-Disruptive Migration overview..............................................166

About Open Replicator................................................................. 170

PowerPath Migration Enabler.......................................................172

Data migration using SRDF/Data Mobility.................................... 172

Data migration solutions for mainframe environments...............................176

Volume migration using z/OS Migrator.........................................177

Dataset migration using z/OS Migrator........................................178

Appendix A Mainframe Error Reporting 179

Error reporting to the mainframe host...................................................... 180

SIM severity reporting.............................................................................. 180

Environmental errors.................................................................... 181

Operator messages...................................................................... 184

Appendix B Licensing 187

eLicensing................................................................................................. 188

Capacity measurements............................................................... 189

Open systems licenses.............................................................................. 190

License pack................................................................................ 190

License suites...............................................................................190

Page 6

Individual licenses.........................................................................196

Ecosystem licenses...................................................................... 197

Mainframe licenses................................................................................... 198

License packs...............................................................................198

Individual license.......................................................................... 199

Page 7

FIGURES

1 D@RE architecture, embedded .......... 41

2 D@RE architecture, external .......... 41

3 ProtectPoint data movement .......... 60

4 Typical RecoverPoint backup/recovery topology .......... 61

5 Basic backup workflow .......... 64

6 Object-level restoration workflow .......... 66

7 Full-application rollback restoration workflow .......... 67

8 Full database recovery to production devices .......... 68

9 Auto-provisioning groups .......... 81

10 FAST data movement .......... 84

11 FAST components .......... 87

12 Service Level compliance .......... 88

13 Local replication interoperability, FBA devices .......... 94

14 Local replication interoperability, CKD devices .......... 95

15 SnapVX targetless snapshots .......... 97

16 SnapVX cascaded snapshots .......... 98

17 zDP operation .......... 99

18 Concurrent SRDF topology .......... 108

19 Cascaded SRDF topology .......... 109

20 Concurrent SRDF/Star .......... 110

21 Concurrent SRDF/Star with R22 devices .......... 111

22 Cascaded SRDF/Star .......... 112

23 R22 devices in cascaded SRDF/Star .......... 112

24 Four-site SRDF .......... 114

25 R1 and R2 devices .......... 117

26 R11 device in concurrent SRDF .......... 118

27 R21 device in cascaded SRDF .......... 119

28 R22 devices in cascaded and concurrent SRDF/Star .......... 119

29 Host interface view and SRDF view of states .......... 120

30 Write I/O flow: simple synchronous SRDF .......... 127

31 SRDF/A SSC cycle switching – multi-cycle mode .......... 129

32 SRDF/A SSC cycle switching – legacy mode .......... 130

33 SRDF/A MSC cycle switching – multi-cycle mode .......... 131

34 Write commands to R21 devices .......... 132

35 Planned failover: before personality swap .......... 137

36 Planned failover: after personality swap .......... 138

37 Failover to Site B, Site A and production host unavailable .......... 138

38 Migrating data and removing the original secondary array (R2) .......... 142

39 Migrating data and replacing the original primary array (R1) .......... 143

40 Migrating data and replacing the original primary (R1) and secondary (R2) arrays .......... 144

41 SRDF/Metro .......... 146

42 SRDF/Metro life cycle .......... 148

43 SRDF/Metro Array Witness and groups .......... 151

44 SRDF/Metro vWitness vApp and connections .......... 152

45 SRDF/Metro Witness single failure scenarios .......... 153

46 SRDF/Metro Witness multiple failure scenarios .......... 154

47 SRDF/AR 2-site solution .......... 161

48 SRDF/AR 3-site solution .......... 162

49 Non-Disruptive Migration zoning .......... 167

50 Open Replicator hot (or live) pull .......... 171

51 Open Replicator cold (or point-in-time) pull .......... 172

52 Migrating data and removing the original secondary array (R2) .......... 173

53 Migrating data and replacing the original primary array (R1) .......... 174

Page 8

54 Migrating data and replacing the original primary (R1) and secondary (R2) arrays .......... 175

55 z/OS volume migration .......... 177

56 z/OS Migrator dataset migration .......... 178

57 z/OS IEA480E acute alert error message format (call home failure) .......... 184

58 z/OS IEA480E service alert error message format (Disk Adapter failure) .......... 184

59 z/OS IEA480E service alert error message format (SRDF Group lost/SIM presented against unrelated resource) .......... 184

60 z/OS IEA480E service alert error message format (mirror-2 resynchronization) .......... 185

61 z/OS IEA480E service alert error message format (mirror-1 resynchronization) .......... 185

62 eLicensing process .......... 188

Page 9

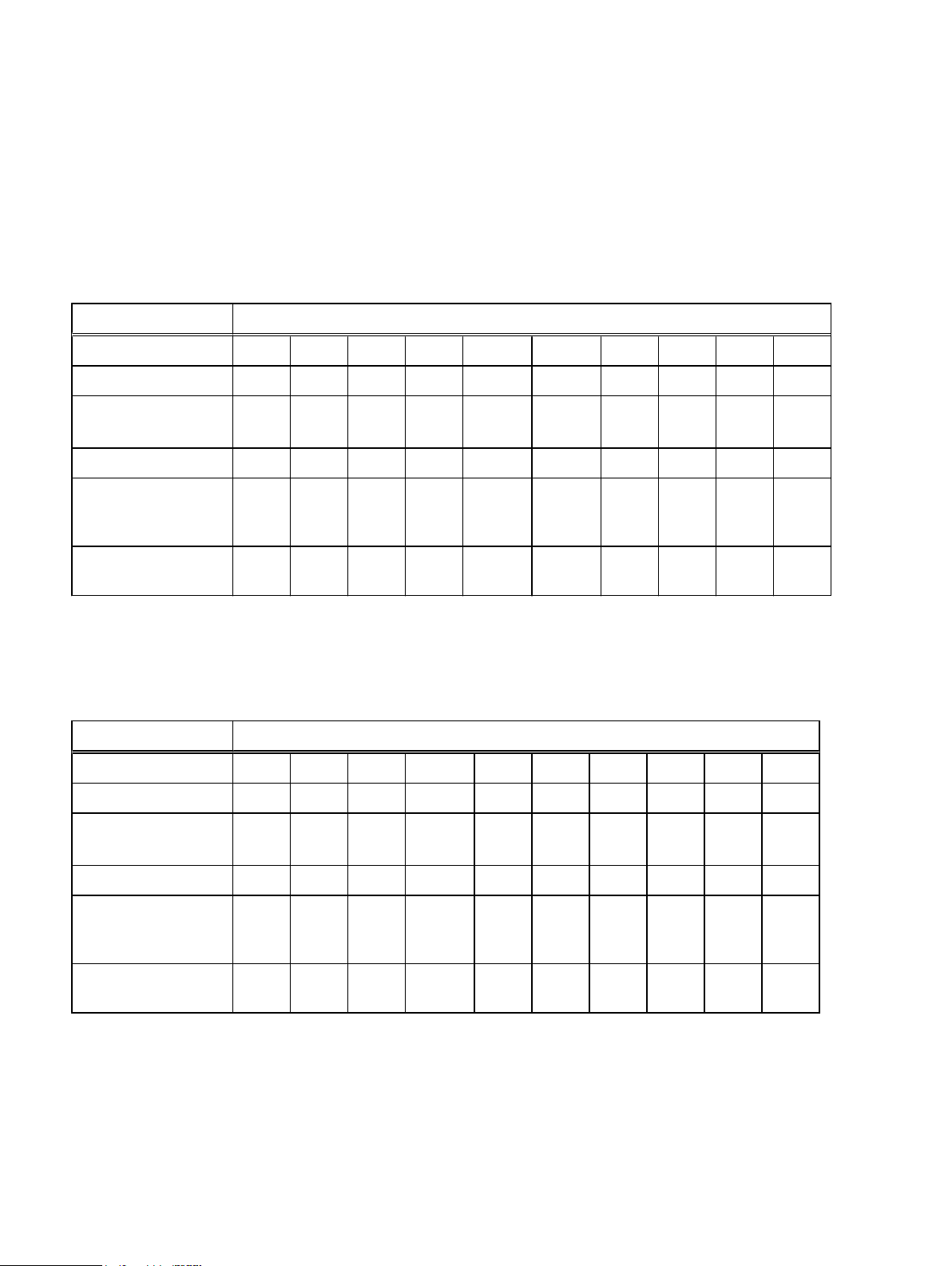

TABLES

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

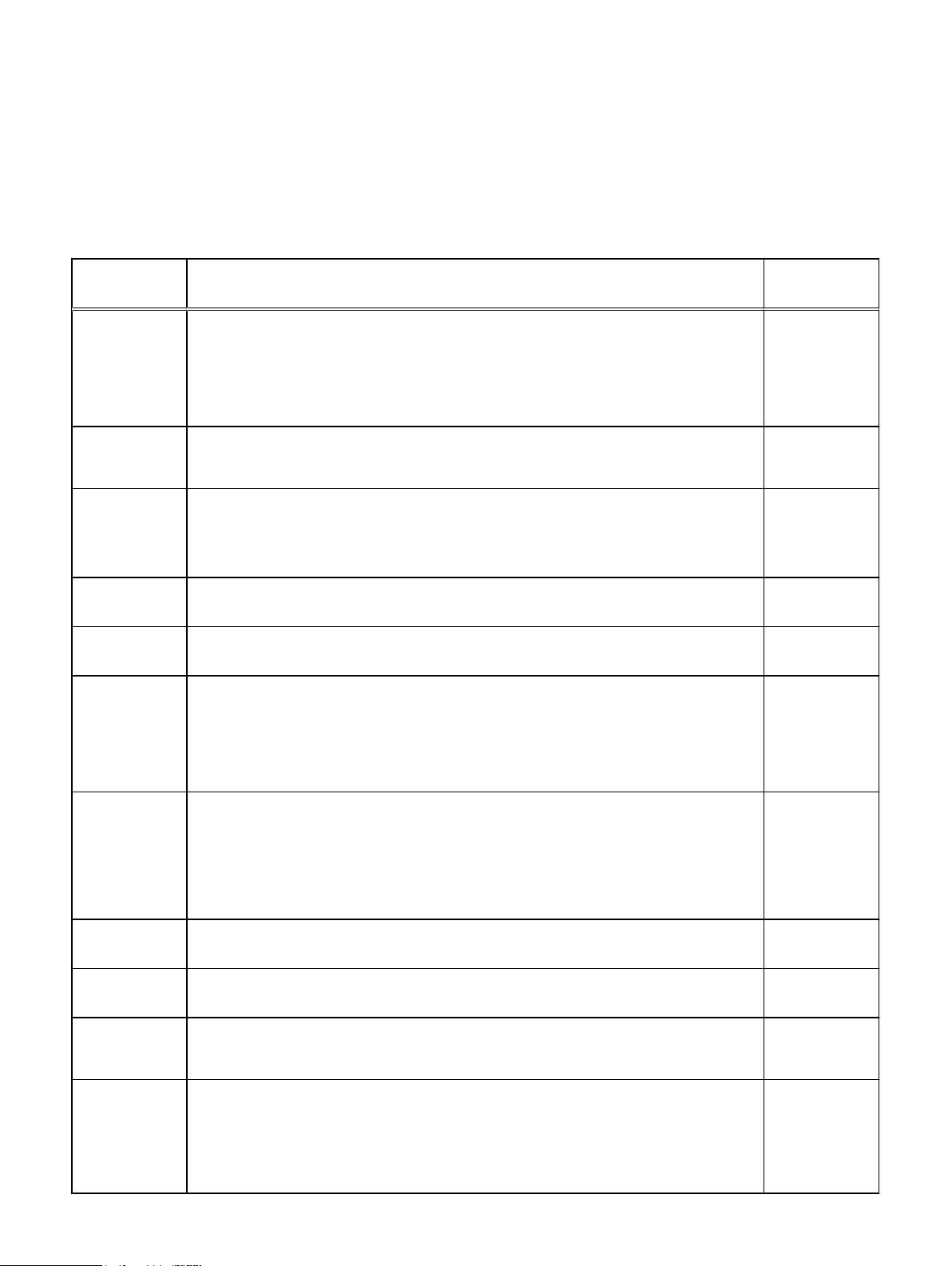

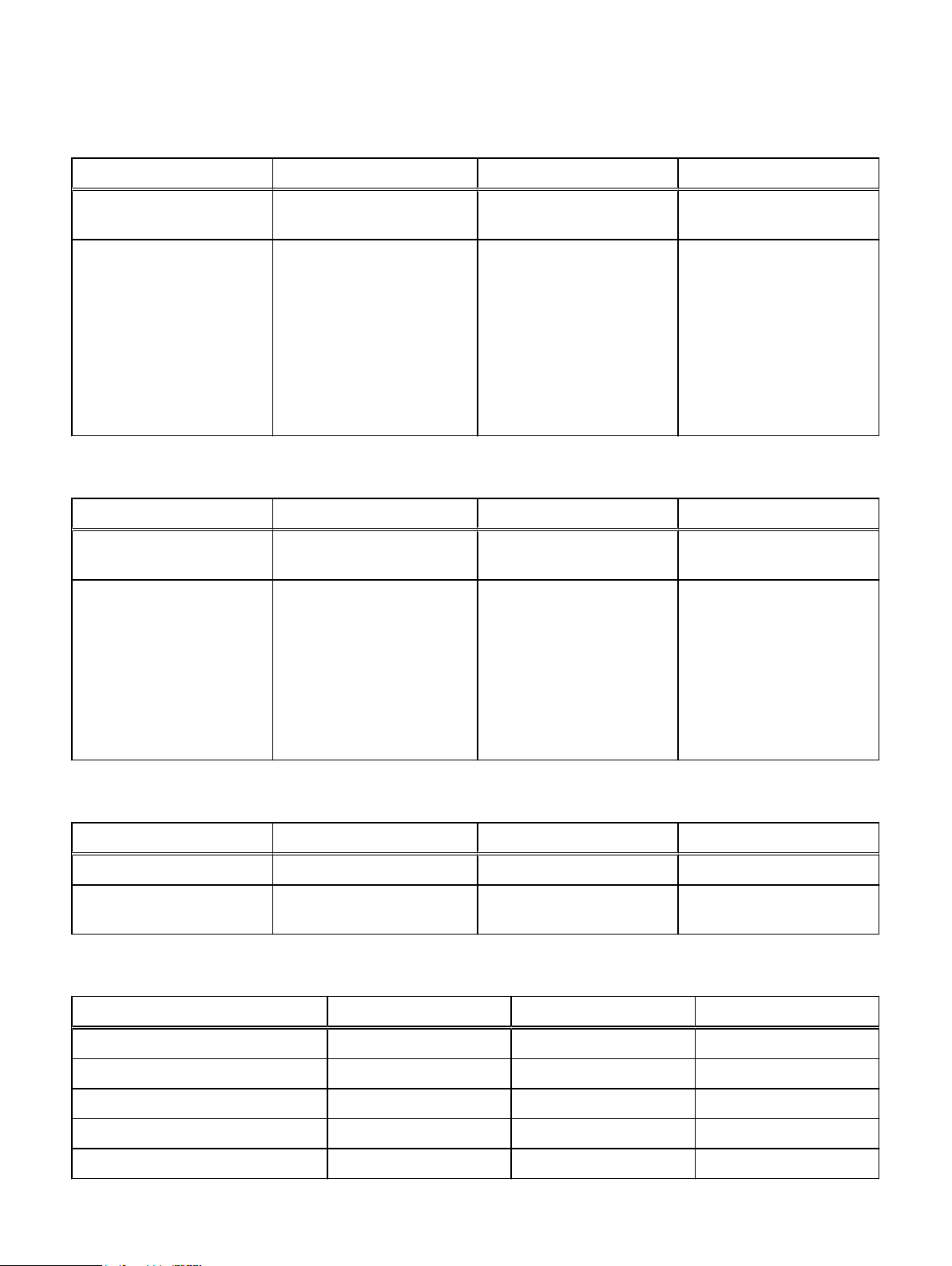

Typographical conventions used in this content..........................................................16

Revision history...........................................................................................................18

Engine specifications.................................................................................................. 24

Cache specifications...................................................................................................24

Vault specifications.....................................................................................................24

Front end I/O modules ...............................................................................................25

eNAS I/O modules......................................................................................................25

eNAS Software Data Movers .....................................................................................25

Capacity, drives .........................................................................................................25

Drive specifications ................................................................................................... 26

System configuration types ....................................................................................... 26

Disk Array Enclosures ................................................................................................ 27

Cabinet configurations ...............................................................................................27

Dispersion specifications............................................................................................ 27

Preconfiguration ........................................................................................................ 27

Host support ..............................................................................................................27

Hardware compression support option (SRDF) ......................................................... 28

VMAX3 Family connectivity .......................................................................................29

2.5" disk drives...........................................................................................................30

3.5" disk drives...........................................................................................................30

Power consumption and heat dissipation.................................................................... 31

Physical specifications................................................................................................ 31

Power options............................................................................................................ 32

Input power requirements - single-phase, North American, International, Australian

................................................................................................................................... 32

Input power requirements - three-phase, North American, International, Australian

................................................................................................................................... 32

Minimum distance from RF emitting devices.............................................................. 33

HYPERMAX OS emulations........................................................................................ 35

eManagement resource requirements........................................................................ 36

eNAS configurations by array .................................................................................... 38

Unisphere tasks.......................................................................................................... 48

ProtectPoint connections........................................................................................... 61

VVol architecture component management capability................................................ 69

VVol-specific scalability ............................................................................................. 70

Logical control unit maximum values...........................................................................75

Maximum LPARs per port...........................................................................................76

RAID options.............................................................................................................. 85

Service Level compliance legend................................................................................ 88

Service Levels............................................................................................................ 89

SRDF 2-site solutions................................................................................................103

SRDF multi-site solutions .........................................................................................105

SRDF features by hardware platform/operating environment................................... 115

R1 device accessibility................................................................................................121

R2 device accessibility.............................................................................................. 122

Limitations of the migration-only mode .................................................................... 144

Limitations of the migration-only mode .................................................................... 176

SIM severity alerts.....................................................................................................181

Environmental errors reported as SIM messages....................................................... 181

VMAX3 product title capacity types .........................................................................189

VMAX3 license suites for open systems environment................................................ 191

Individual licenses for open systems environment..................................................... 196

Individual licenses for open systems environment..................................................... 197

Page 10

52 License suites for mainframe environment .......... 198

Page 11

Preface

Note

As part of an effort to improve its product lines, EMC periodically releases revisions of its software and hardware. Therefore, some functions described in this document might not be supported by all versions of the software or hardware currently in use. The product release notes provide the most up-to-date information on product features.

Contact your EMC representative if a product does not function properly or does not function as described in this document.

This document was accurate at publication time. New versions of this document might be released on EMC Online Support (https://support.emc.com). Check to ensure that you are using the latest version of this document.

Purpose

This document outlines the offerings supported on EMC® VMAX3™ Family (100K, 200K and 400K) arrays running HYPERMAX OS 5977.

Audience

This document is intended for use by customers and EMC representatives.

Related documentation

The following documentation portfolios contain documents related to the hardware platform and manuals needed to manage your software and storage system configuration. Also listed are documents for external components which interact with your VMAX3 Family array.

EMC VMAX3 Family Site Planning Guide for VMAX 100K, VMAX 200K, VMAX 400K with HYPERMAX OS

Provides planning information regarding the purchase and installation of a VMAX3 Family 100K, 200K, 400K.

EMC VMAX Best Practices Guide for AC Power Connections

Describes the best practices to assure fault-tolerant power to a VMAX3 Family array or VMAX All Flash array.

EMC VMAX Power-down/Power-up Procedure

Describes how to power-down and power-up a VMAX3 Family array or VMAX All Flash array.

EMC VMAX Securing Kit Installation Guide

Describes how to install the securing kit on a VMAX3 Family array or VMAX All Flash array.

E-Lab™ Interoperability Navigator (ELN)

Provides a web-based interoperability and solution search portal. You can find the ELN at https://elabnavigator.EMC.com.

Page 12

SRDF Interfamily Connectivity Information

Defines the versions of HYPERMAX OS and Enginuity that can make up valid SRDF replication and SRDF/Metro configurations, and can participate in Non-Disruptive Migration (NDM).

EMC Unisphere for VMAX Release Notes

Describes new features and any known limitations for Unisphere for VMAX.

EMC Unisphere for VMAX Installation Guide

Provides installation instructions for Unisphere for VMAX.

EMC Unisphere for VMAX Online Help

Describes the Unisphere for VMAX concepts and functions.

EMC Unisphere for VMAX Performance Viewer Online Help

Describes the Unisphere for VMAX Performance Viewer concepts and functions.

EMC Unisphere for VMAX Performance Viewer Installation Guide

Provides installation instructions for Unisphere for VMAX Performance Viewer.

EMC Unisphere for VMAX REST API Concepts and Programmer's Guide

Describes the Unisphere for VMAX REST API concepts and functions.

EMC Unisphere for VMAX Database Storage Analyzer Online Help

Describes the Unisphere for VMAX Database Storage Analyzer concepts and functions.

EMC Unisphere 360 for VMAX Release Notes

Describes new features and any known limitations for Unisphere 360 for VMAX.

EMC Unisphere 360 for VMAX Installation Guide

Provides installation instructions for Unisphere 360 for VMAX.

EMC Unisphere 360 for VMAX Online Help

Describes the Unisphere 360 for VMAX concepts and functions.

EMC Solutions Enabler, VSS Provider, and SMI-S Provider Release Notes

Describes new features and any known limitations.

EMC Solutions Enabler Installation and Configuration Guide

Provides host-specific installation instructions.

EMC Solutions Enabler CLI Reference Guide

Documents the SYMCLI commands, daemons, error codes and option file parameters provided with the Solutions Enabler man pages.

EMC Solutions Enabler Array Controls and Management for HYPERMAX OS CLI User Guide

Describes how to configure array control, management, and migration operations using SYMCLI commands for arrays running HYPERMAX OS.

EMC Solutions Enabler Array Controls and Management CLI User Guide

Describes how to configure array control, management, and migration operations using SYMCLI commands.


EMC Solutions Enabler SRDF Family CLI User Guide

Describes how to configure and manage SRDF environments using SYMCLI

commands.


EMC Solutions Enabler TimeFinder SnapVX for HYPERMAX OS CLI User Guide

Describes how to configure and manage TimeFinder SnapVX environments using

SYMCLI commands.

EMC Solutions Enabler SRM CLI User Guide

Provides Storage Resource Management (SRM) information related to various

data objects and data handling facilities.

EMC SRDF/Metro vWitness Configuration Guide

Describes how to install, configure and manage SRDF/Metro using vWitness.

VMAX Management Software Events and Alerts Guide

Documents the SYMAPI daemon messages, asynchronous errors and message

events, and SYMCLI return codes.


EMC ProtectPoint Implementation Guide

Describes how to implement ProtectPoint.

EMC ProtectPoint Solutions Guide

Provides ProtectPoint information related to various data objects and data

handling facilities.

EMC ProtectPoint File System Agent Command Reference

Documents the commands, error codes, and options.

EMC ProtectPoint Release Notes

Describes new features and any known limitations.

EMC Mainframe Enablers Installation and Customization Guide

Describes how to install and configure Mainframe Enablers software.

EMC Mainframe Enablers Release Notes

Describes new features and any known limitations.

EMC Mainframe Enablers Message Guide

Describes the status, warning, and error messages generated by Mainframe

Enablers software.

EMC Mainframe Enablers ResourcePak Base for z/OS Product Guide

Describes how to configure VMAX system control and management using the

EMC Symmetrix Control Facility (EMCSCF).

EMC Mainframe Enablers AutoSwap for z/OS Product Guide

Describes how to use AutoSwap to perform automatic workload swaps between

VMAX systems when the software detects a planned or unplanned outage.


EMC Mainframe Enablers Consistency Groups for z/OS Product Guide

Describes how to use Consistency Groups for z/OS (ConGroup) to ensure the

consistency of data remotely copied by SRDF in the event of a rolling disaster.

EMC Mainframe Enablers SRDF Host Component for z/OS Product Guide

Describes how to use SRDF Host Component to control and monitor remote data

replication processes.

EMC Mainframe Enablers TimeFinder SnapVX and zDP Product Guide

Describes how to use TimeFinder SnapVX and zDP to create and manage space-efficient targetless snaps.

EMC Mainframe Enablers TimeFinder/Clone Mainframe Snap Facility Product Guide

Describes how to use TimeFinder/Clone, TimeFinder/Snap, and TimeFinder/CG

to control and monitor local data replication processes.

EMC Mainframe Enablers TimeFinder/Mirror for z/OS Product Guide

Describes how to use TimeFinder/Mirror to create Business Continuance Volumes

(BCVs) which can then be established, split, re-established and restored from the

source logical volumes for backup, restore, decision support, or application

testing.

EMC Mainframe Enablers TimeFinder Utility for z/OS Product Guide

Describes how to use the TimeFinder Utility to condition volumes and devices.

EMC GDDR for SRDF/S with ConGroup Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.

EMC GDDR for SRDF/S with AutoSwap Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.

EMC GDDR for SRDF/Star Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.

EMC GDDR for SRDF/Star with AutoSwap Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.

EMC GDDR for SRDF/SQAR with AutoSwap Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.

EMC GDDR for SRDF/A Product Guide

Describes how to use Geographically Dispersed Disaster Restart (GDDR) to

automate business recovery following both planned outages and disaster

situations.


EMC GDDR Message Guide

Describes the status, warning, and error messages generated by GDDR.

EMC GDDR Release Notes

Describes new features and any known limitations.

EMC z/OS Migrator Product Guide

Describes how to use z/OS Migrator to perform volume mirror and migrator functions as well as logical migration functions.

EMC z/OS Migrator Message Guide

Describes the status, warning, and error messages generated by z/OS Migrator.

EMC z/OS Migrator Release Notes

Describes new features and any known limitations.

EMC ResourcePak for z/TPF Product Guide

Describes how to configure VMAX system control and management in the z/TPF operating environment.

EMC SRDF Controls for z/TPF Product Guide

Describes how to perform remote replication operations in the z/TPF operating environment.

EMC TimeFinder Controls for z/TPF Product Guide

Describes how to perform local replication operations in the z/TPF operating environment.

EMC z/TPF Suite Release Notes

Describes new features and any known limitations.

Special notice conventions used in this document

EMC uses the following conventions for special notices:

DANGER

Indicates a hazardous situation which, if not avoided, will result in death or serious injury.

WARNING

Indicates a hazardous situation which, if not avoided, could result in death or serious injury.

CAUTION

Indicates a hazardous situation which, if not avoided, could result in minor or moderate injury.

NOTICE

Addresses practices not related to personal injury.

Note

Presents information that is important, but not hazard-related.

Typographical conventions

EMC uses the following type style conventions in this document:

Table 1 Typographical conventions used in this content

| Convention | Usage |
|---|---|
| Bold | Used for names of interface elements, such as names of windows, dialog boxes, buttons, fields, tab names, key names, and menu paths (what the user specifically selects or clicks) |
| Italic | Used for full titles of publications referenced in text |
| Monospace | Used for system code; system output, such as an error message or script; pathnames, filenames, prompts, and syntax; commands and options |
| Monospace italic | Used for variables |
| Monospace bold | Used for user input |
| [ ] | Square brackets enclose optional values |
| \| | Vertical bar indicates alternate selections - the bar means "or" |
| { } | Braces enclose content that the user must specify, such as x or y or z |
| ... | Ellipses indicate nonessential information omitted from the example |


Where to get help

EMC support, product, and licensing information can be obtained as follows:

Product information

EMC technical support, documentation, release notes, software updates, or

information about EMC products can be obtained on the https://support.emc.com

site (registration required).

Technical support

To open a service request through the https://support.emc.com site, you must

have a valid support agreement. Contact your EMC sales representative for

details about obtaining a valid support agreement or to answer any questions

about your account.

Additional support options

- Support by Product: EMC offers consolidated, product-specific information on the Web at https://support.EMC.com/products. The Support by Product web pages offer quick links to Documentation, White Papers, Advisories (such as frequently used Knowledgebase articles), and Downloads, as well as more dynamic content, such as presentations, discussion, relevant Customer Support Forum entries, and a link to EMC Live Chat.

- EMC Live Chat: Open a Chat or instant message session with an EMC Support Engineer.

eLicensing support

To activate your entitlements and obtain your VMAX license files, visit the Service Center on https://support.EMC.com, as directed on your License Authorization Code (LAC) letter emailed to you.

- For help with missing or incorrect entitlements after activation (that is, expected functionality remains unavailable because it is not licensed), contact your EMC Account Representative or Authorized Reseller.

- For help with any errors applying license files through Solutions Enabler, contact the EMC Customer Support Center.

- If you are missing a LAC letter, or require further instructions on activating your licenses through the Online Support site, contact EMC's worldwide Licensing team at licensing@emc.com or call:

  - North America, Latin America, APJK, Australia, New Zealand: SVC4EMC (800-782-4362) and follow the voice prompts.

  - EMEA: +353 (0) 21 4879862 and follow the voice prompts.

Your comments

Your suggestions help us improve the accuracy, organization, and overall quality of the

documentation. Send your comments and feedback to:

VMAXContentFeedback@emc.com


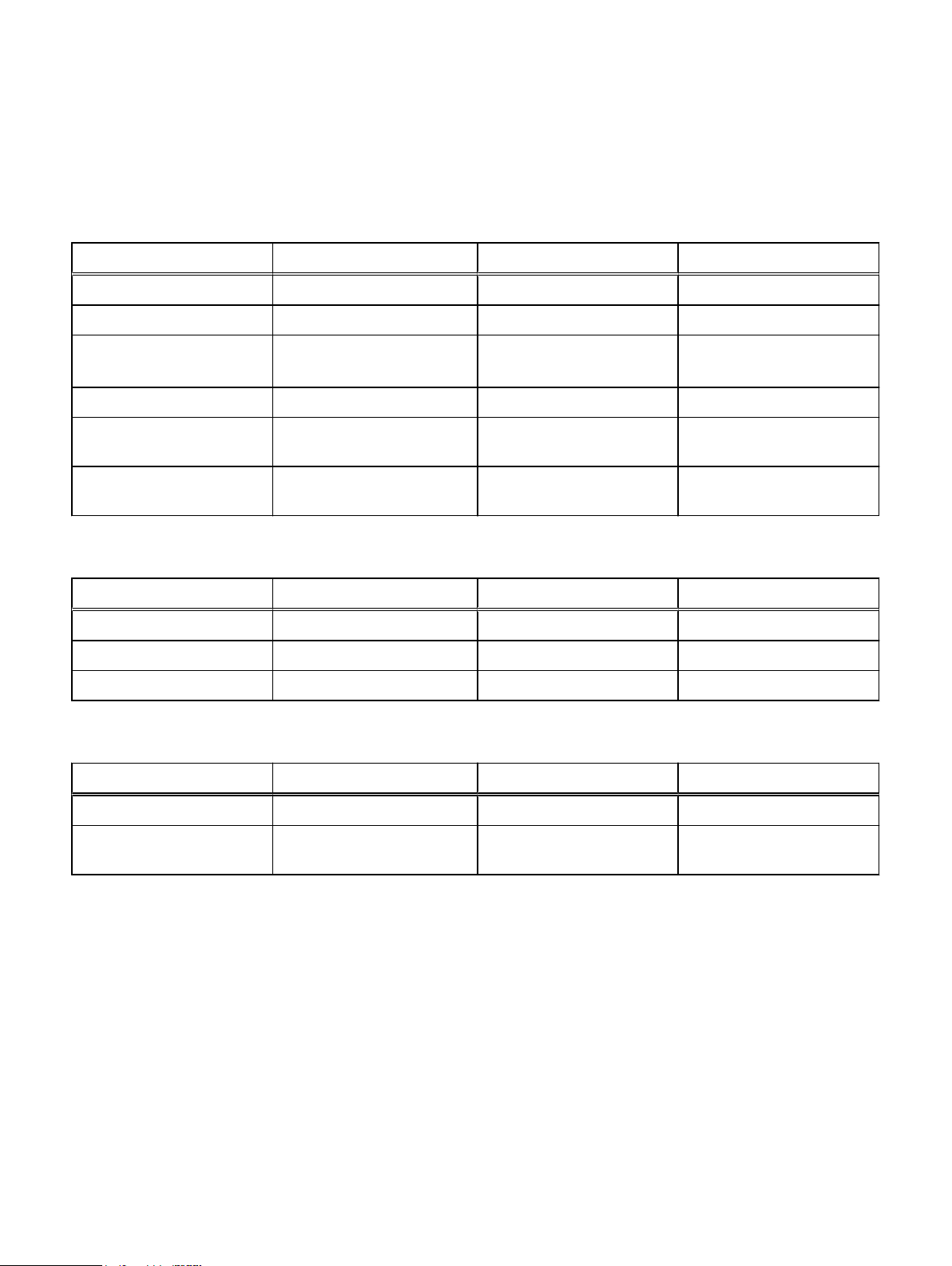

Revision history

The following table lists the revision history of this document.

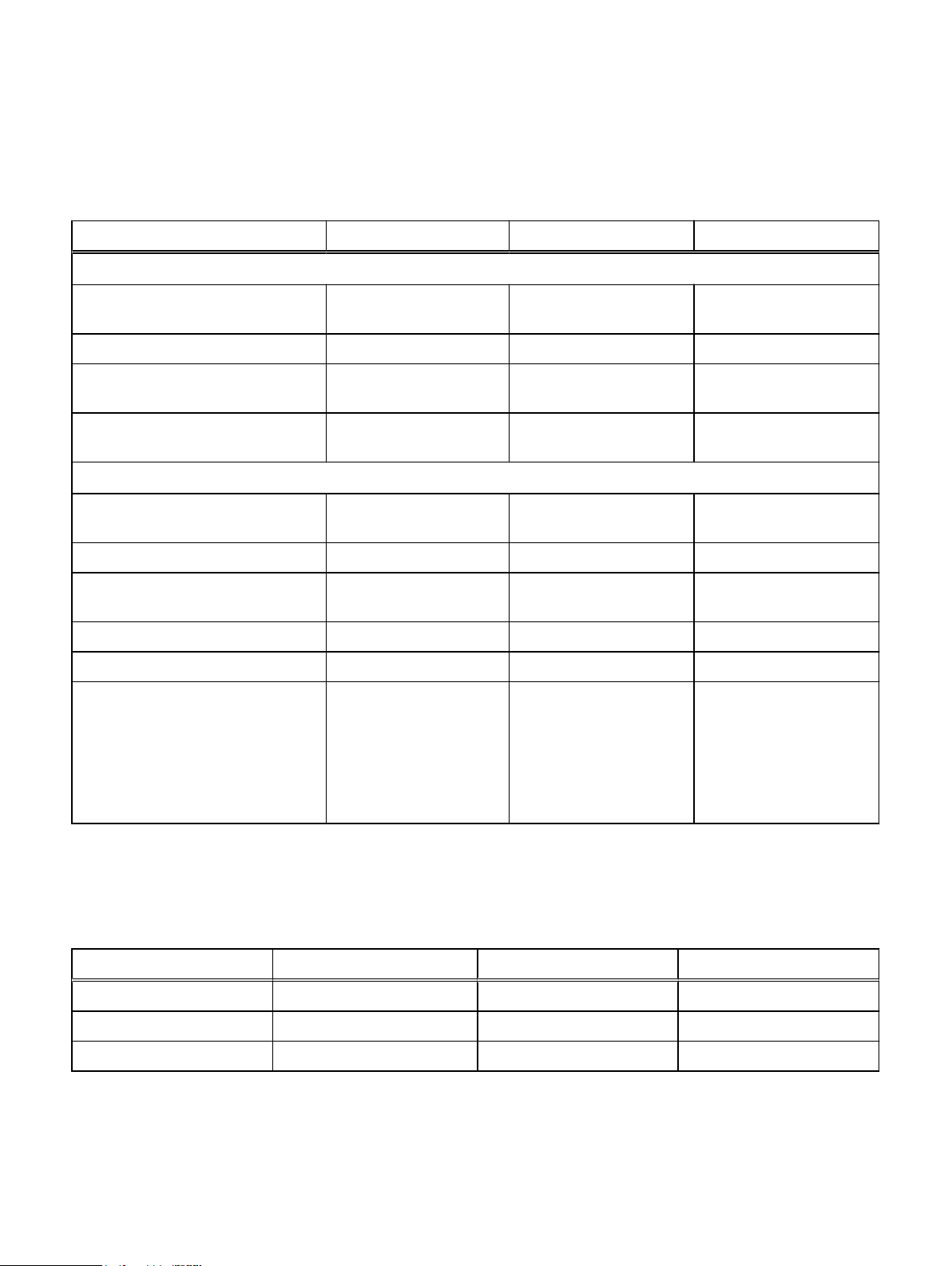

Table 2 Revision history

| Revision | Description and/or change | Operating system |
|---|---|---|
| 6.5 | New content: RecoverPoint on page 156; Secure snaps on page 96; Data at Rest Encryption on page 39 | HYPERMAX OS 5977 Q2 2017 SR |
| 6.4 | Revised content: SRDF/Metro array witness overview | HYPERMAX OS 5977.952.892 |
| 6.3 | New content: Virtual Witness (vWitness) on page 151; Non-disruptive-migration | HYPERMAX OS 5977.952.892 |
| 6.2 | Adding z/DP support (Mainframe SnapVX and zDP on page 98) | HYPERMAX OS 5977.945.890 |
| 6.1 | Updated Licensing appendix. | HYPERMAX OS 5977.811.784 |
| 6.0 | New content: HYPERMAX OS support for mainframe on page 74; VMware Virtual Volumes on page 69; Unisphere 360 on page 49 | HYPERMAX OS 5977.810.784 |
| 5.4 | Updated VMAX3 Family power consumption and heat dissipation table (see VMAX3 Family specifications on page 24): for a 200K, dual engine system, max heat dissipation changed from 30,975 to 28,912 Btu/Hr. Added note to Power and heat dissipation topic. | HYPERMAX OS 5977.691.684 |
| 5.3 | Changed Data Encryption Key PKCS#12 to PKCS#5. | HYPERMAX OS 5977.691.684 |
| 5.2 | Revised content: Number of CPUs required to support eManagement. | HYPERMAX OS 5977.691.684 |
| 5.1 | Revised content: In SRDF/Metro, changed terminology from quorum to Witness. | HYPERMAX OS 5977.691.684 |
| 5.0 | New content: New feature for FAST.X; SRDF/Metro on page 145. Revised content: VMAX3 Family specifications on page 24. Removed content: Legacy TimeFinder write operation details; EMC XtremSW Cache. | HYPERMAX OS 5977.691.684 |
| 4.0 | New content: External provisioning with FAST.X on page 90 (a) | HYPERMAX OS 5977.596.583 plus Q2 Service Pack (ePack) |
| 3.0 | New content: Data at Rest Encryption on page 39; Data erasure on page 43; Cascaded SRDF solutions on page 108; SRDF/Star solutions on page 109 | HYPERMAX OS 5977.596.583 |
| 2.0 | New content: Embedded NAS (eNAS). | HYPERMAX OS 5977.497.471 |
| 1.0 | First release of the VMAX 100K, 200K, and 400K arrays with EMC HYPERMAX OS 5977. | HYPERMAX OS 5977.250.189 |

a. FAST.X requires Solutions Enabler/Unisphere for VMAX version 8.0.3.


CHAPTER 1

VMAX3 with HYPERMAX OS

This chapter summarizes VMAX3 Family specifications and describes the features of

HYPERMAX OS. Topics include:

- Introduction to VMAX3 with HYPERMAX OS

- VMAX3 Family 100K, 200K, 400K arrays

- HYPERMAX OS


Introduction to VMAX3 with HYPERMAX OS

The EMC VMAX3 family storage arrays deliver tier-1 scale-out multi-controller

architecture with unmatched consolidation and efficiency for the enterprise. The

VMAX3 family includes three models:

- VMAX 100K - 2 to 4 controllers, 48 cores, 2TB cache, 1440 2.5" drives, 64 ports, 1.1 PBu

- VMAX 200K - 2 to 8 controllers, 128 cores, 8TB cache, 2880 2.5" drives, 128 ports, 2.3 PBu

- VMAX 400K - 2 to 16 controllers, 384 cores, 16TB cache, 5760 2.5" drives, 256 ports, 4.3 PBu

VMAX3 arrays provide unprecedented performance and scale, and a radically new

architecture for enterprise storage that separates software data services from the

underlying platform. The combination of VMAX3 hardware and software provides:

- Open system and mainframe connectivity

- Dramatic increase in floor tile density by consolidating high capacity disk enclosures for both 2.5" and 3.5" drives and engines in the same system bay

- Support for either hybrid or all flash configurations

- Unified block and file support through Embedded NAS (eNAS), eliminating the physical hardware

- Data at Rest Encryption for those applications that demand the highest level of security

- Service Level (SL) provisioning with FAST.X for external arrays (XtremIO, Cloud Array, and other supported 3rd-party storage)

- FICON, iSCSI, Fibre Channel, and FCoE front end protocols

- Simplified management at scale through Service Levels, reducing time to provision by up to 95% to less than 30 seconds

- Extended tiering to the cloud with EMC CloudArray integration for extreme scalability and up to 40% lower storage costs

HYPERMAX OS is an industry-leading open storage and hypervisor converged

operating system. HYPERMAX OS combines industry-leading high availability, I/O

management, quality of service, data integrity validation, storage tiering, and data

security with an open application platform.

HYPERMAX OS features the first real-time, non-disruptive storage hypervisor that

manages and protects embedded services by extending VMAX3 high availability to

services that traditionally would have run external to the array. It also provides direct

access to hardware resources to maximize performance.


VMAX3 Family 100K, 200K, 400K arrays

VMAX3 arrays range in size from a single engine up to two (100K), four (200K), or eight (400K) engines. Engines (each consisting of two controllers) and high-capacity disk enclosures (for both 2.5" and 3.5" drives) are consolidated in the same system bay, providing a dramatic increase in floor tile density.

VMAX3 arrays come fully pre-configured from the factory, significantly reducing time

to first I/O at installation.

VMAX3 array features include:

- Hybrid (mix of traditional/regular hard drives and solid state/flash drives) or all flash configurations

- System bay dispersion of up to 82 feet (25 meters) from the first system bay

- Each system bay can house either one or two engines and up to six high-density disk array enclosures (DAEs) per engine.

  - Single-engine configurations: up to 720 6 Gb/s SAS 2.5" drives, 360 3.5" drives, or a mix of both drive types

  - Dual-engine configurations: up to 480 6 Gb/s SAS 2.5" drives, 240 3.5" drives, or a mix of both drive types

- Third-party racking (optional)
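The per-bay drive limits above follow from the DAE slot counts listed in the Disk Array Enclosures table of this guide (120 x 2.5" drives or 60 x 3.5" drives per DAE). The Python sketch below reproduces that arithmetic. The function name is hypothetical, and the figure of four DAEs in a dual-engine bay is an inference from the 480/240 limits, not something the guide states.

```python
# Slots per DAE type, taken from the Disk Array Enclosures table in this guide.
SLOTS_PER_DAE = {"2.5in": 120, "3.5in": 60}


def max_drives_per_bay(dae_count: int, drive_size: str) -> int:
    """Return the drive ceiling for a system bay filled with one DAE type."""
    return dae_count * SLOTS_PER_DAE[drive_size]


# Single-engine bay: up to six DAEs for the one engine (stated in the guide).
print(max_drives_per_bay(6, "2.5in"))  # 720
print(max_drives_per_bay(6, "3.5in"))  # 360

# Dual-engine bay: four DAEs is an assumption inferred from the 480/240 limits.
print(max_drives_per_bay(4, "2.5in"))  # 480
print(max_drives_per_bay(4, "3.5in"))  # 240
```

Mixed bays fall between these values because each DAE holds only one drive form factor.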


VMAX3 Family specifications

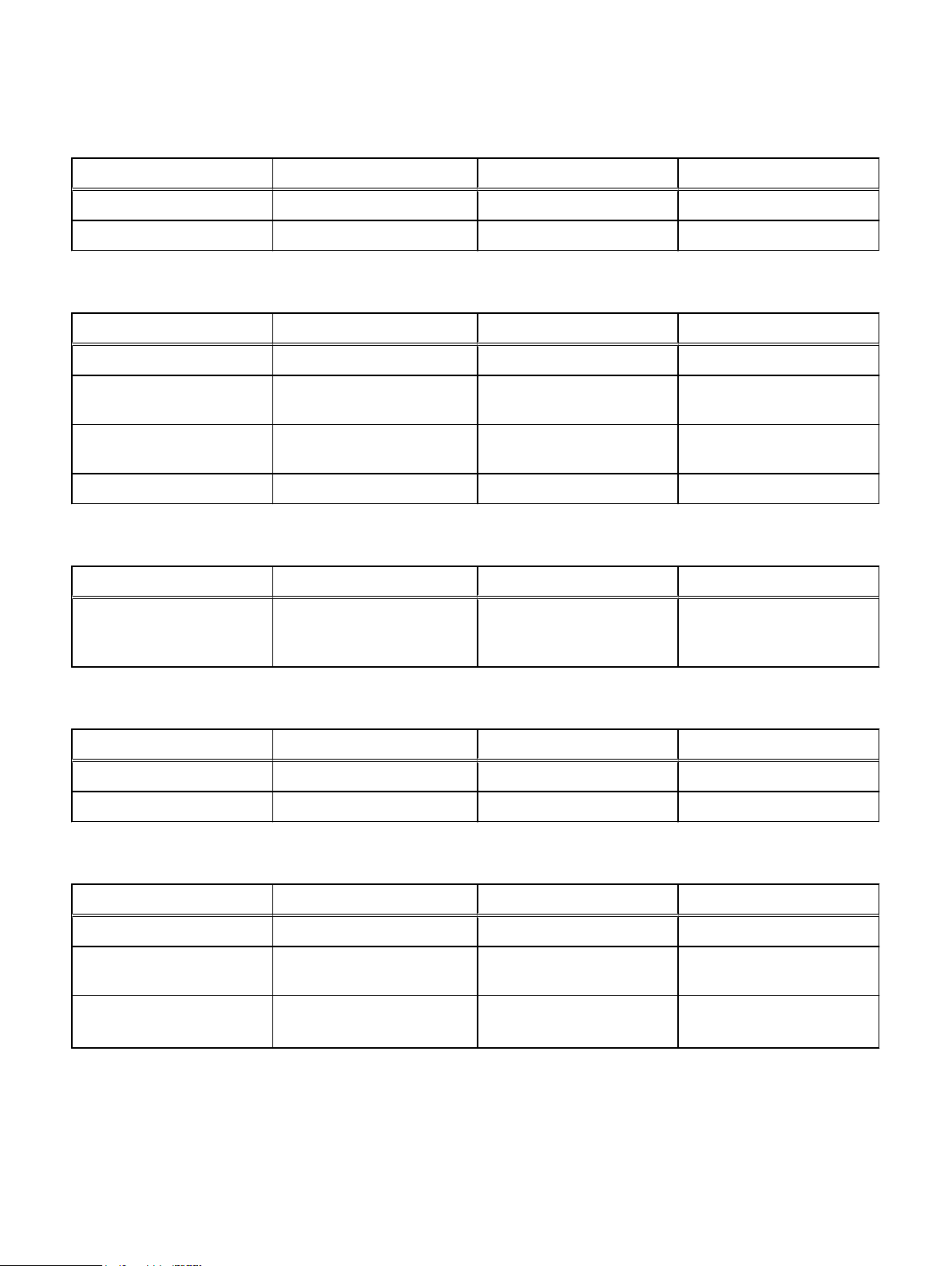

The following tables list specifications for each model in the VMAX3 Family.

Table 3 Engine specifications

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Number of engines supported | 1 to 2 | 1 to 4 | 1 to 8 |
| Engine enclosure | 4U | 4U | 4U |
| CPU | Intel Xeon E5-2620-v2, 2.1 GHz, 6 core | Intel Xeon E5-2650-v2, 2.6 GHz, 8 core | Intel Xeon E5-2697-v2, 2.7 GHz, 12 core |
| Dynamic Virtual Matrix BW | 700GB/s | 700GB/s | 1400GB/s |
| # Cores per CPU/per engine/per system | 6/24/48 | 8/32/128 | 12/48/384 |
| Dynamic Virtual Matrix Interconnect | InfiniBand Dual Redundant Fabric: 56Gbps per port | InfiniBand Dual Redundant Fabric: 56Gbps per port | InfiniBand Dual Redundant Fabric: 56Gbps per port |

Table 4 Cache specifications

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Cache-System Min (raw) | 512GB | 512GB | 512GB |
| Cache-System Max (raw) | 2TBr (with 1024GB engine) | 8TBr (with 2048GB engine) | 16TBr (with 2048GB engine) |
| Cache-per engine options | 512GB, 1024GB | 512GB, 1024GB, 2048GB | 512GB, 1024GB, 2048GB |

Table 5 Vault specifications

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Vault strategy | Vault to Flash | Vault to Flash | Vault to Flash |
| Vault implementation | 2 to 4 Flash I/O modules per Engine | 2 to 8 Flash I/O modules per Engine | 2 to 8 Flash I/O modules per Engine |


Table 6 Front end I/O modules

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Max front-end I/O modules/engine | 8 | 8 | 8 |

Front-end I/O modules and protocols supported (identical for all three models):

- FC: 4 x 8 Gb/s (FC, SRDF)
- FC: 4 x 16 Gb/s (FC, SRDF)
- FICON: 4 x 16 Gb/s (FICON)
- FCoE: 4 x 10GbE (FCoE)
- iSCSI: 4 x 10GbE (iSCSI)
- GbE: 2/2 Opt/Cu (SRDF)
- 10GbE: 2 x 10GbE (SRDF)

Table 7 eNAS I/O modules

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Max eNAS I/O modules/Software Data Mover | 2 (min of 1 Ethernet I/O module required) | 3 (min of 1 Ethernet I/O module required) | 3 (min of 1 Ethernet I/O module required) |

eNAS I/O modules supported (identical for all three models):

- GbE: 4 x 1GbE Cu
- 10GbE: 2 x 10GbE Cu
- 10GbE: 2 x 10GbE Opt
- FC: 4 x 8 Gb/s (NDMP backup) (max. 1 FC NDMP/Software Data Mover)

Table 8 eNAS Software Data Movers

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Max Software Data Movers | 2 (1 Active + 1 Standby) | 4 (3 Active + 1 Standby) | 8 (7 Active + 1 Standby) |
| Max NAS capacity/array (Terabytes usable) | 256 | 1536 | 3584 |

Table 9 Capacity, drives

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Max capacity per array | 0.54PBu | 2.31PBu | 4.35PBu |
| Max drives per system | 1440 | 2880 | 5760 |
| Max drives per system bay | 720 | 720 | 720 |
| Min spares per system | 1 | 1 | 1 |
| Min drive count (1 engine) | 4 + 1 spare | 4 + 1 spare | 4 + 1 spare |


Table 10 Drive specifications

The drive options are identical for the VMAX 100K, VMAX 200K, and VMAX 400K.

| Drive type | VMAX 100K, VMAX 200K, VMAX 400K |
|---|---|
| 3.5" SAS Drives: 10K RPM SAS | 300GB(a), 600GB(a), 1.2TB(a) 10K RPM |
| 3.5" SAS Drives: 15K RPM SAS | 300GB(a) 15K RPM |
| 3.5" SAS Drives: 7.2K RPM SAS | 2TB(a), 4TB(a) 7.2K RPM |
| 3.5" SAS Drives: Flash SAS | 200GB(a,b), 400GB(a,b), 800GB(a,b), 1.6TB(a,b) Flash |
| 2.5" SAS Drives: 10K RPM SAS | 300GB(c), 600GB(c), 1.2TB(c) 10K RPM |
| 2.5" SAS Drives: 15K RPM SAS | 300GB(a) 15K RPM |
| 2.5" SAS Drives: Flash SAS | 200GB(a,b), 400GB(a,b), 800GB(a,b), 1.6TB(a,b) Flash |
| 2.5" SAS Drives: Flash SAS | 960GB(c,b), 1.92TB(c,b) Flash |
| BE interface | 6Gbps SAS |
| RAID options (all drives) | RAID 1, RAID 5 (3+1), RAID 5 (7+1), RAID 6 (6+2), RAID 6 (14+2) |

a. Capacity points and drive formats available for upgrades.

b. Mixing of 200GB, 400GB, 800GB, or 1.6TB Flash capacities with 960GB, or 1.92TB Flash capacities on the same array is not currently supported.

c. Capacity points and drive formats available on new systems and upgrades.

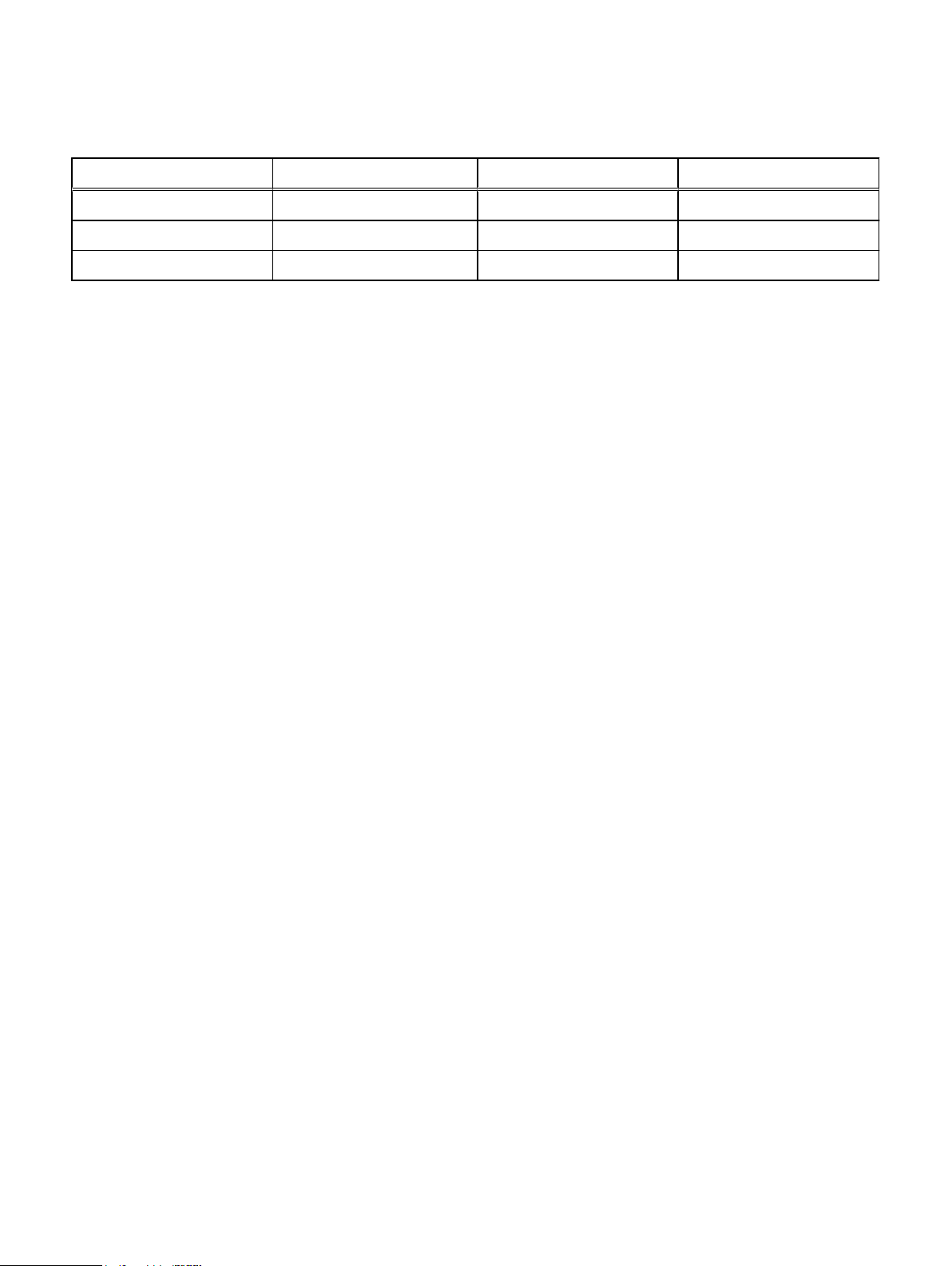

Table 11 System configuration types

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| All 2.5" DAE configurations | 2 bays, 1440 drives | 4 bays, 2880 drives | 8 bays, 5760 drives |
| All 3.5" DAE configurations | 2 bays, 720 drives | 4 bays, 1440 drives | 8 bays, 2880 drives |
| Mixed configurations | 2 bays, 1320 drives | 4 bays, 2640 drives | 8 bays, 5280 drives |


Table 12 Disk Array Enclosures

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| 120 x 2.5" drive DAE | Yes | Yes | Yes |
| 60 x 3.5" drive DAE | Yes | Yes | Yes |

Table 13 Cabinet configurations

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Standard 19" bays | Yes | Yes | Yes |
| Single bay system configuration | Yes | Yes | Yes |
| Dual-engine system bay configuration | Yes | Yes | Yes |
| Third party rack mount option | Yes | Yes | Yes |

Table 14 Dispersion specifications

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| System bay dispersion | Up to 82 feet (25m) between System Bay 1 and System Bay 2 | Up to 82 feet (25m) between System Bay 1 and any other System Bay | Up to 82 feet (25m) between System Bay 1 and any other System Bay |

Table 15 Preconfiguration

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| 100% Thin Provisioned | Yes | Yes | Yes |
| Preconfigured at the factory | Yes | Yes | Yes |

Table 16 Host support

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Open systems | Yes | Yes | Yes |
| Mainframe (CKD 3380 and 3390 emulation) | Yes | Yes | Yes |
| IBM i Series support (D910 only) | Yes | Yes | Yes |


Table 17 Hardware compression support option (SRDF)

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| GbE / 10 GbE | Yes | Yes | Yes |
| 8Gb/s FC | Yes | Yes | Yes |
| 16Gb/s FC | Yes | Yes | Yes |


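Table 18 VMAX3 Family connectivity

| I/O protocols | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| 8 Gb/s FC Host/SRDF ports: maximum/engine | 32 | 32 | 32 |
| 8 Gb/s FC Host/SRDF ports: maximum/array | 64 | 128 | 256 |
| 16 Gb/s FC Host/SRDF ports: maximum/engine | 32 | 32 | 32 |
| 16 Gb/s FC Host/SRDF ports: maximum/array | 64 | 128 | 256 |
| 16 Gb/s FICON ports: maximum/engine | 32 | 32 | 32 |
| 16 Gb/s FICON ports: maximum/array | 64 | 128 | 256 |
| 10 GbE iSCSI ports: maximum/engine | 32 | 32 | 32 |
| 10 GbE iSCSI ports: maximum/array | 64 | 128 | 256 |
| 10 GbE FCoE ports: maximum/engine | 32 | 32 | 32 |
| 10 GbE FCoE ports: maximum/array | 64 | 128 | 256 |
| 10 GbE SRDF ports: maximum/engine | 16 | 16 | 16 |
| 10 GbE SRDF ports: maximum/array | 32 | 64 | 128 |
| GbE SRDF ports: maximum/engine | 32 | 32 | 32 |
| GbE SRDF ports: maximum/array | 64 | 128 | 256 |
| Embedded NAS GbE ports: maximum ports/Software Data Mover | 8 | 12 | 12 |
| Embedded NAS GbE ports: maximum ports/array | 16 | 48 | 96 |
| Embedded NAS 10 GbE (Cu or Optical) ports: maximum ports/Software Data Mover | 4 | 6 | 6 |
| Embedded NAS 10 GbE (Cu or Optical) ports: maximum ports/array | 8 | 24 | 48 |
| Embedded NAS 8 Gb/s FC NDMP back-up ports: maximum ports/Software Data Mover | 1 | 1 | 1 |
| Embedded NAS 8 Gb/s FC NDMP back-up ports: maximum ports/array | 2 | 4 | 8 |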
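The per-array maximums in the connectivity table appear to be the per-engine (or per-Software-Data-Mover) maximum multiplied by the maximum number of engines (or Software Data Movers) for each model. The Python sketch below checks that relationship for two rows. The function and constant names are hypothetical, and the multiplication rule is an observation from the published numbers rather than a documented formula.

```python
# Maximum engines and Software Data Movers per model, from the tables in this guide.
MAX_ENGINES = {"VMAX 100K": 2, "VMAX 200K": 4, "VMAX 400K": 8}
MAX_DATA_MOVERS = {"VMAX 100K": 2, "VMAX 200K": 4, "VMAX 400K": 8}

# Per-unit port maximums, from the connectivity table.
FC_PORTS_PER_ENGINE = 32
ENAS_GBE_PORTS_PER_DATA_MOVER = {"VMAX 100K": 8, "VMAX 200K": 12, "VMAX 400K": 12}


def fc_ports_per_array(model: str) -> int:
    """FC host/SRDF port ceiling for a fully populated array."""
    return FC_PORTS_PER_ENGINE * MAX_ENGINES[model]


def enas_gbe_ports_per_array(model: str) -> int:
    """eNAS GbE port ceiling with the maximum number of Software Data Movers."""
    return ENAS_GBE_PORTS_PER_DATA_MOVER[model] * MAX_DATA_MOVERS[model]


for model in MAX_ENGINES:
    print(model, fc_ports_per_array(model), enas_gbe_ports_per_array(model))
```

For the VMAX 200K this gives 128 FC ports and 48 eNAS GbE ports, matching the published maximum/array values.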


Disk drive support

The VMAX 100K, 200K, and 400K support the latest 6Gb/s dual-ported native SAS

drives. All drive families (Enterprise Flash, 10K, 15K and 7.2K RPM) support two

independent I/O channels with automatic failover and fault isolation. Configurations

with mixed-drive capacities and speeds are allowed depending upon the configuration.

All capacities are based on 1 GB = 1,000,000,000 bytes. Actual usable capacity may

vary depending upon configuration.
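Because the guide defines 1 GB as 1,000,000,000 bytes, a host that reports capacity in binary units (GiB) will show a smaller number for the same drive. The Python sketch below illustrates the conversion; the function name is hypothetical, and the example value is the raw capacity listed for the 1200 GB drive.

```python
BYTES_PER_GB = 1_000_000_000  # decimal gigabyte, as defined in this guide
BYTES_PER_GIB = 2 ** 30       # binary gibibyte, as reported by many hosts


def gb_to_gib(capacity_gb: float) -> float:
    """Convert a decimal-GB capacity to binary GiB."""
    return capacity_gb * BYTES_PER_GB / BYTES_PER_GIB


# Raw capacity of the 1200 GB (nominal) drive, expressed in GiB.
print(round(gb_to_gib(1200.2), 1))
```

The difference is a unit effect only; it is separate from the formatting overhead reflected in the formatted-capacity rows of the drive tables.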

Table 19 2.5" disk drives

Platform Support: VMAX 100K, 200K, 400K

| Nominal capacity (GB) | 200 (a,c) | 400 (a,c) | 800 (a,c) | 960 (b,c) | 1600 (a,c) | 1920 (b,c) | 300 (a) | 300 (b) | 600 (b) | 1200 (b) |
|---|---|---|---|---|---|---|---|---|---|---|
| Speed (RPM) | Flash | Flash | Flash | Flash | Flash | Flash | 15K | 10K | 10K | 10K |
| Average seek time (read/write ms) | N/A | N/A | N/A | N/A | N/A | N/A | 2.8/3.3 | 3.7/4.2 | 3.7/4.2 | 3.7/4.2 |
| Raw capacity (GB) | 200 | 400 | 800 | 960 | 1600 | 1920 | 292.6 | 292.6 | 585.4 | 1200.2 |
| Open systems formatted capacity (GB) | 196.86 | 393.72 | 787.44 | 939.38 | 1574.88 | 1880.08 | 288.02 | 288.02 | 576.05 | 1181.16 |
| Mainframe formatted capacity (GB) | 191.53 | 393.64 | 787.27 | 939.29 | 1574.55 | 1879.75 | 287.86 | 287.86 | 575.72 | 1180.91 |

a. Capacity points and drive formats available for upgrades.

b. Capacity points and drive formats available on new systems and upgrades.

c. Mixing of 200GB, 400GB, 800GB, or 1.6TB Flash capacities with 960GB, or 1.92TB Flash capacities on the same array is not currently supported.

Table 20 3.5" disk drives

Platform Support: VMAX 100K, 200K, 400K

| Nominal capacity (GB) | 200 (a,b) | 400 (a,b) | 800 (a,b) | 1600 (a,b) | 300 (a) | 300 (a) | 600 (a) | 1200 (a) | 2000 (a) | 4000 (a) |
|---|---|---|---|---|---|---|---|---|---|---|
| Speed (RPM) | Flash | Flash | Flash | Flash | 15K | 10K | 10K | 10K | 7.2K | 7.2K |
| Average seek time (read/write ms) | N/A | N/A | N/A | N/A | 2.8/3.3 | 3.7/4.2 | 3.7/4.2 | 3.7/4.2 | 8.2/9.2 | 8.2/9.2 |
| Raw capacity (GB) | 200 | 400 | 800 | 1600 | 292.6 | 292.6 | 585.4 | 1200.2 | 1912.1 | 4000 |
| Open systems formatted capacity (GB) | 196.86 | 393.72 | 787.44 | 1574.88 | 288.02 | 288.02 | 576.05 | 1181.16 | 1968.6 | 3938.5 |
| Mainframe formatted capacity (GB) | 196.53 | 393.64 | 787.27 | 1574.55 | 287.86 | 287.86 | 575.72 | 1180.91 | 1968.18 | 3938.10 |

a. Capacity points and drive formats available for upgrades.

b. Mixing of 200GB, 400GB, 800GB, or 1.6TB Flash capacities with 960GB, or 1.92TB Flash capacities on the same array is not currently supported.


Table 21 Power consumption and heat dissipation

Maximum power and heat dissipation at <26°C and >35°C (a). Each cell lists the <26°C value / the >35°C value. Power is the maximum total power consumption in kVA; heat is the maximum heat dissipation in Btu/Hr.

| Configuration | VMAX 100K power (kVA) | VMAX 100K heat (Btu/Hr) | VMAX 200K power (kVA) | VMAX 200K heat (Btu/Hr) | VMAX 400K power (kVA) | VMAX 400K heat (Btu/Hr) |
|---|---|---|---|---|---|---|
| System bay 1, single engine | 8.27 / 10.8 | 28,201 / 36,828 | 8.37 / 10.9 | 28,542 / 37,169 | 8.57 / 11.1 | 29,224 / 37,851 |
| System bay 2 (b), single engine | 8.13 / 10.4 | 27,723 / 35,464 | 8.33 / 10.6 | 28,405 / 36,146 | 8.43 / 10.7 | 28,746 / 36,487 |
| System bay 1, dual engine | 6.44 / 8.8 | 21,960 / 30,008 | 6.74 / 9.1 | 22,983 / 31,031 | 7.04 / 9.4 | 24,006 / 32,054 |
| System bay 2 (b), dual engine | N/A | N/A | 6.7 / 8.8 | 22,847 / 30,008 | 6.9 / 9 | 23,529 / 30,690 |

a. Power values and heat dissipations shown at >35°C reflect the higher power levels associated with both the battery recharge cycle, and the initiation of high ambient temperature adaptive cooling algorithms. Values at <26°C are reflective of more steady state maximum values during normal operation.

b. Power values for system bay 2 and all subsequent system bays where applicable.

Table 22 Physical specifications

| Bay configurations (a) | Height (b) (in/cm) | Width (c) (in/cm) | Depth (d) (in/cm) | Weight (max lbs/kg) |
|---|---|---|---|---|
| System bay, single-engine | 75/190 | 24/61 | 47/119 | 2065/937 |
| System bay, dual-engine | 75/190 | 24/61 | 47/119 | 1860/844 |

a. Clearance for service/airflow is 42 in (106.7 cm) at the front and 30 in (76.2 cm) at the rear.

b. An additional 18 in (45.7 cm) is recommended for ceiling/top clearance.

c. Measurement includes .25 in. (0.6 cm) gap between bays.

d. Includes front and rear doors.
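The heat dissipation figures in the power consumption and heat dissipation table (Table 21) track the power figures closely: each published Btu/Hr value matches the kVA value multiplied by about 3410. The Python sketch below checks a few published pairs. The factor of 3410 is inferred from the table values and is not stated in the guide; it is close to the standard 3412 Btu/Hr per kW, which suggests the figures treat kVA and kW as equivalent.

```python
# Conversion factor inferred from the published table values (an assumption).
BTU_PER_HR_PER_KVA = 3410


def heat_dissipation_btu_hr(power_kva: float) -> int:
    """Estimate heat dissipation in Btu/Hr from power consumption in kVA."""
    return round(power_kva * BTU_PER_HR_PER_KVA)


# (power in kVA, published heat in Btu/Hr) pairs copied from the table.
PUBLISHED_PAIRS = [
    (8.27, 28201),   # VMAX 100K, system bay 1, single engine, <26°C
    (10.8, 36828),   # VMAX 100K, system bay 1, single engine, >35°C
    (6.74, 22983),   # VMAX 200K, system bay 1, dual engine, <26°C
    (11.1, 37851),   # VMAX 400K, system bay 1, single engine, >35°C
]

for kva, published in PUBLISHED_PAIRS:
    print(kva, heat_dissipation_btu_hr(kva), published)
```

This is useful as a sanity check when sizing cooling, but the published table remains the authoritative source.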


Input Power Requirements

Table 23 Power options

| Feature | VMAX 100K | VMAX 200K | VMAX 400K |
|---|---|---|---|
| Power | Single or Three Phase Delta or Wye | Single or Three Phase Delta or Wye | Single or Three Phase Delta or Wye |

Table 24 Input power requirements - single-phase, North American, International, Australian

| Specification | North American 3-wire connection (2 L & 1 G) (a) | International and Australian 3-wire connection (1 L & 1 N & 1 G) (a) |
|---|---|---|
| Input nominal voltage | 200–240 VAC ± 10% L-L nom | 220–240 VAC ± 10% L-N nom |
| Frequency | 50–60 Hz | 50–60 Hz |
| Circuit breakers | 30 A | 32 A |
| Power zones | Two | Two |

Minimum power requirements at customer site:

- Three 30 A, single-phase drops per zone.
- Two power zones require 6 drops, each drop rated for 30 A.
- PDU A and PDU B require three separate single-phase 30 A drops for each PDU.

a. L = line or phase, N = neutral, G = ground

Table 25 Input power requirements - three-phase, North American, International, Australian

| Specification | North American 4-wire connection (3 L & 1 G) (a) | International 5-wire connection (3 L & 1 N & 1 G) (a) |
|---|---|---|
| Input voltage (b) | 200–240 VAC ± 10% L-L nom | 220–240 VAC ± 10% L-N nom |
| Frequency | 50–60 Hz | 50–60 Hz |
| Circuit breakers | 50 A | 32 A |
| Power zones | Two | Two |
| Minimum power requirements at customer site | Two 50 A, three-phase drops per bay. PDU A and PDU B each require one separate three-phase Delta 50 A drop. | Two 32 A, three-phase drops per bay. |

a. L = line or phase, N = neutral, G = ground

b. An imbalance of AC input currents may exist on the three-phase power source feeding the array, depending on the configuration. The customer's electrician must be alerted to this possible condition to balance the phase-by-phase loading conditions within the customer's data center.


Radio frequency interference specifications

Electromagnetic fields, which include radio frequencies, can interfere with the operation of electronic equipment. EMC Corporation products have been certified to withstand radio frequency interference (RFI) in accordance with standard EN61000-4-3. In data centers that employ intentional radiators, such as cell phone repeaters, the maximum ambient RF field strength should not exceed 3 volts/meter.

Table 26 Minimum distance from RF emitting devices

| Repeater power level (a) | Recommended minimum distance |
|---|---|
| 1 Watt | 9.84 ft (3 m) |
| 2 Watt | 13.12 ft (4 m) |
| 5 Watt | 19.69 ft (6 m) |
| 7 Watt | 22.97 ft (7 m) |
| 10 Watt | 26.25 ft (8 m) |
| 12 Watt | 29.53 ft (9 m) |
| 15 Watt | 32.81 ft (10 m) |

a. Effective Radiated Power (ERP)
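For site planning, the minimum distance table (Table 26) can be treated as a simple lookup. The Python sketch below returns the recommended distance for a given repeater power. The function name is hypothetical, and rounding an unlisted power level up to the next listed level is a conservative assumption, not a rule stated in the guide.

```python
from bisect import bisect_left

# (repeater ERP in watts, recommended minimum distance in meters), from the table.
RF_DISTANCE_TABLE = [(1, 3), (2, 4), (5, 6), (7, 7), (10, 8), (12, 9), (15, 10)]


def min_distance_m(erp_watts: float) -> int:
    """Return the recommended minimum distance in meters for a repeater.

    Power levels between table entries are rounded up to the next listed
    level (an assumption made here to stay on the safe side).
    """
    powers = [power for power, _ in RF_DISTANCE_TABLE]
    index = bisect_left(powers, erp_watts)
    if index == len(powers):
        raise ValueError("power level is above the range covered by the table")
    return RF_DISTANCE_TABLE[index][1]


print(min_distance_m(5))  # 6, listed directly in the table
print(min_distance_m(6))  # 7, rounded up to the 7 Watt entry
```

Power levels above 15 Watt raise an error because the table gives no guidance for them.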


HYPERMAX OS

This section highlights the features of the HYPERMAX OS.

What's new in HYPERMAX OS 5977 Q2 2017

This section describes new functionality and features provided by HYPERMAX OS

5977 Q2 2017 for VMAX 100K, 200K, and 400K arrays.

RecoverPoint

HYPERMAX OS 5977 Q2 2017 SR introduces support for RecoverPoint on VMAX

storage arrays. RecoverPoint is a comprehensive data protection solution designed to

provide production data integrity at local and remote sites. RecoverPoint also provides

the ability to recover data from any point in time using journaling technology.

RecoverPoint on page 156 provides more information.

Secure snaps

Secure snaps is an enhancement to the current snapshot technology. Secure snaps
prevent administrators or other high-level users from intentionally or unintentionally
deleting snapshot data. In addition, secure snaps are immune to automatic failure
resulting from running out of Storage Resource Pool (SRP) or Replication Data
Pointer (RDP) space on the array.

Secure snaps on page 96 provides more information.

Data at Rest Encryption

Data at Rest Encryption (D@RE) now supports the OASIS Key Management

Interoperability Protocol (KMIP) and can integrate with external servers that also

support this protocol. This release has been validated to interoperate with the

following KMIP-based key managers:

• Gemalto SafeNet KeySecure

• IBM Security Key Lifecycle Manager

Data at Rest Encryption on page 39 provides more information.


Page 35

HYPERMAX OS emulations

HYPERMAX OS provides emulations (executables) that perform specific data service

and control functions in the HYPERMAX environment. The following table lists the

available emulations.

Table 27 HYPERMAX OS emulations

Area                Emulation             Description                                   Protocol Speed (a)

Back-end            DS                    Back-end connection in the array that         SAS 6 Gb/s
                                          communicates with the drives. DS is also
                                          known as an internal drive controller.

                    DX                    Back-end connections that are not used to     FC 16 or 8 Gb/s
                                          connect to hosts. Used by ProtectPoint,
                                          Cloud Array, XtremIO, and other arrays.
                                          ProtectPoint leverages FAST.X to link Data
                                          Domain to the array. DX ports must be
                                          configured for FC protocol.

Management          IM                    Separates infrastructure tasks and            N/A
                                          emulations. By separating these tasks,
                                          emulations can focus on I/O-specific work
                                          only, while IM manages and executes common
                                          infrastructure tasks, such as environmental
                                          monitoring, Field Replacement Unit (FRU)
                                          monitoring, and vaulting.

                    ED                    Middle layer used to separate front-end and   N/A
                                          back-end I/O processing. It acts as a
                                          translation layer between the front-end,
                                          which is what the host knows about, and the
                                          back-end, which is the layer that reads,
                                          writes, and communicates with physical
                                          storage in the array.

Host connectivity   FA - Fibre Channel    Front-end emulation that:                     FC - 16 or 8 Gb/s
                    SE - iSCSI            • Receives data from the host (network)       SE and FE - 10 Gb/s
                    FE - FCoE               and commits it to the array                 EF - 16 Gb/s
                    EF - FICON (b)        • Sends data from the array to the
                                            host/network

Page 36

Table 27 HYPERMAX OS emulations (continued)

Area                Emulation             Description                                   Protocol Speed (a)

Remote replication  RF - Fibre Channel    Interconnects arrays for Symmetrix Remote     RF - 8 or 16 Gb/s FC SRDF
                    RE - GbE              Data Facility (SRDF).                         RE - 1 GbE SRDF
                                                                                        RE - 10 GbE SRDF

a. The 8 Gb/s module auto-negotiates to 2/4/8 Gb/s and the 16 Gb/s module auto-negotiates to 16/8/4 Gb/s using optical SFP and OM2/OM3/OM4 cabling.

b. Only on VMAX 450F, 850F, and 950F arrays.
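For scripting or inventory purposes, the emulation codes in Table 27 can be captured in a small lookup structure. The sketch below is illustrative only: the class and function names are hypothetical, the grouping of ED under the Management area is a reading of the table, and the speed strings are copied from the table rather than queried from an array.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Emulation:
    code: str            # two-letter emulation code from Table 27
    area: str            # functional area the emulation belongs to
    protocol_speed: str  # protocol and speed as listed in the table


# Rows transcribed from Table 27. Per footnote b, EF (FICON) applies only
# to VMAX 450F, 850F, and 950F arrays.
EMULATIONS = {
    e.code: e
    for e in [
        Emulation("DS", "Back-end", "SAS 6 Gb/s"),
        Emulation("DX", "Back-end", "FC 16 or 8 Gb/s"),
        Emulation("IM", "Management", "N/A"),
        Emulation("ED", "Management", "N/A"),
        Emulation("FA", "Host connectivity", "FC 16 or 8 Gb/s"),
        Emulation("SE", "Host connectivity", "iSCSI 10 Gb/s"),
        Emulation("FE", "Host connectivity", "FCoE 10 Gb/s"),
        Emulation("EF", "Host connectivity", "FICON 16 Gb/s"),
        Emulation("RF", "Remote replication", "FC 8 or 16 Gb/s"),
        Emulation("RE", "Remote replication", "1 GbE or 10 GbE"),
    ]
}

# Footnote a: speeds (Gb/s) each FC module can auto-negotiate to.
NEGOTIATED_SPEEDS_GBPS = {8: (2, 4, 8), 16: (4, 8, 16)}


def emulations_in(area):
    """Return the sorted emulation codes that belong to the given area."""
    return sorted(code for code, e in EMULATIONS.items() if e.area == area)


if __name__ == "__main__":
    print(emulations_in("Host connectivity"))
    print(NEGOTIATED_SPEEDS_GBPS[16])
```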

Container applications

HYPERMAX OS provides an open application platform for running data services.

HYPERMAX OS includes a light-weight hypervisor that enables multiple operating

environments to run as virtual machines on the storage array.

Application containers are virtual machines that provide embedded applications on the

storage array. Each container virtualizes hardware resources required by the

embedded application, including:

• Hardware needed to run the software and embedded application (processor,
  memory, PCI devices, power management)

• VM ports, to which LUNs are provisioned

• Access to necessary drives (boot, root, swap, persist, shared)

Embedded Management

The eManagement container application embeds management software (Solutions

Enabler, SMI-S, Unisphere for VMAX) on the storage array, enabling you to manage

the array without requiring a dedicated management host.

With eManagement, you can manage a single storage array and any SRDF-attached
arrays. To manage multiple storage arrays with a single control pane, use the
traditional host-based management interfaces, Unisphere for VMAX and Solutions
Enabler. To this end, eManagement allows you to link and launch a host-based
instance of Unisphere for VMAX.

eManagement is typically pre-configured and enabled at the EMC factory, thereby

eliminating the need for you to install and configure the application. However, starting

with HYPERMAX OS 5977.945.890, eManagement can be added to VMAX arrays in

the field. Contact your EMC representative for more information.

Embedded applications require system memory. The following table lists the amount

of memory unavailable to other data services.

Table 28 eManagement resource requirements

VMAX3 model   CPUs   Memory   Devices supported
VMAX3 100K    4      12 GB    64K
VMAX3 200K    4      16 GB    128K
VMAX3 400K    4      20 GB    256K
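As a rough planning aid, the memory that eManagement reserves can be subtracted from an array's installed memory. This is a sketch under stated assumptions: the helper name and the installed-memory figure in the usage lines are hypothetical, and other embedded applications or system overheads are not modeled. Only the per-model values come from Table 28.

```python
# Table 28 as data. "devices" is kept as the table's own string (for example
# "64K") because the guide does not state whether K means 1000 or 1024.
EMANAGEMENT_REQUIREMENTS = {
    "VMAX3 100K": {"cpus": 4, "memory_gb": 12, "devices": "64K"},
    "VMAX3 200K": {"cpus": 4, "memory_gb": 16, "devices": "128K"},
    "VMAX3 400K": {"cpus": 4, "memory_gb": 20, "devices": "256K"},
}


def memory_left_after_emanagement_gb(model, installed_gb):
    """Return installed memory minus the eManagement reservation, in GB.

    Hypothetical helper: it accounts for eManagement only.
    """
    reserved = EMANAGEMENT_REQUIREMENTS[model]["memory_gb"]
    if installed_gb < reserved:
        raise ValueError("installed memory is smaller than the reservation")
    return installed_gb - reserved


if __name__ == "__main__":
    # 1024 GB is an illustrative figure, not a documented configuration.
    print(memory_left_after_emanagement_gb("VMAX3 200K", 1024))
```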


Page 37

Virtual Machine ports

Virtual machine (VM) ports are associated with virtual machines to avoid contention

with physical connectivity. VM ports are addressed as ports 32-63 per director FA

emulation.

LUNs are provisioned on VM ports using the same methods as provisioning physical

ports.

A VM port can be mapped to one and only one VM.

A VM can be mapped to more than one port.
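The mapping rules above (ports 32-63, one VM per port, many ports per VM) can be illustrated with a small model. This is a sketch only: the class and the VM names are hypothetical, it models the VM ports of a single director FA emulation, and it does not correspond to any Solutions Enabler or Unisphere interface.

```python
VM_PORTS = range(32, 64)  # VM ports are addressed as ports 32-63


class VMPortMap:
    """Tracks which VM owns each VM port on one director FA emulation."""

    def __init__(self):
        self._owner = {}  # port number -> VM name

    def assign(self, port, vm):
        """Map a port to a VM, enforcing the one-VM-per-port rule."""
        if port not in VM_PORTS:
            raise ValueError(f"port {port} is not a VM port (32-63)")
        current = self._owner.get(port)
        if current is not None and current != vm:
            raise ValueError(f"port {port} is already mapped to {current}")
        self._owner[port] = vm

    def ports_of(self, vm):
        """Return the sorted ports mapped to a VM (a VM may have several)."""
        return sorted(p for p, v in self._owner.items() if v == vm)


if __name__ == "__main__":
    ports = VMPortMap()
    ports.assign(32, "vm-a")
    ports.assign(33, "vm-a")   # a VM can be mapped to more than one port
    print(ports.ports_of("vm-a"))
```

Attempting to assign port 32 to a second VM, or to use a port outside 32-63, raises `ValueError` in this model.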

Embedded Network Attached Storage

Embedded Network Attached Storage (eNAS) is fully integrated into the VMAX3
array. eNAS provides flexible and secure multi-protocol file sharing (NFS 2.0, 3.0,
4.0/4.1, and CIFS/SMB 3.0) and multiple file server identities (CIFS and NFS servers).

eNAS enables:

• File server consolidation/multi-tenancy

• Built-in asynchronous file level remote replication (File Replicator)

• Built-in Network Data Management Protocol (NDMP)

• VDM Synchronous replication with SRDF/S and optional automatic failover
  manager
  File Auto Recovery (FAR) with optional File Auto Recover Manager (FARM)

• FAST.X in external provisioning mode

• Anti-virus

eNAS provides file data services that enable customers to:

• Consolidate block and file storage in one infrastructure

• Eliminate the gateway hardware, reducing complexity and costs

• Simplify management

Consolidated block and file storage reduces costs and complexity while increasing

business agility. Customers can leverage rich data services across block and file

storage including FAST, service level provisioning, dynamic Host I/O Limits, and Data

at Rest Encryption.


eNAS solutions and implementation

The eNAS solution runs on standard array hardware and is typically pre-configured at

the factory. In this scenario, EMC provides a one-time setup of the Control Station

and Data Movers, containers, control devices, and required masking views as part of

the factory eNAS pre-configuration. Additional front-end I/O modules are required to