
EMC Enterprise Storage

EMC Fibre Channel Storage Systems

Models FC4500, FC5300, and FC5700

CONFIGURATION PLANNING GUIDE

P/N 014003039-02

EMC Corporation 171 South Street, Hopkinton, MA 01748-9103

Corporate Headquarters: (508) 435-1000, (800) 424-EMC2 Fax: (508) 435-5374 Service: (800) SVC-4EMC


Copyright © EMC Corporation 2000, 2001. All rights reserved.

Printed May 2001

No part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written consent of EMC Corporation.

The information contained in this document is subject to change without notice. EMC Corporation assumes no responsibility for any errors that may appear.

All computer software programs, including but not limited to microcode, described in this document are furnished under a license, and may be used or copied only in accordance with the terms of such license. EMC either owns or has the right to license the computer software programs described in this document. EMC Corporation retains all rights, title and interest in the computer software programs.

EMC Corporation makes no warranties, expressed or implied, by operation of law or otherwise, relating to this document, the products or the computer software programs described herein. EMC CORPORATION DISCLAIMS ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. In no event shall EMC Corporation be liable for (a) incidental, indirect, special, or consequential damages or (b) any damages whatsoever resulting from the loss of use, data or profits, arising out of this document, even if advised of the possibility of such damages.

Trademark Information

EMC2, EMC, MOSAIC:2000, Symmetrix, CLARiiON, and Navisphere are registered trademarks and EMC Enterprise Storage, The Enterprise Storage Company, The EMC Effect, Connectrix, EDM, SDMS, SRDF, Timefinder, PowerPath, InfoMover, FarPoint, EMC Enterprise Storage Network, EMC Enterprise Storage Specialist, EMC Storage Logix, Universal Data Tone, E-Infostructure, Celerra, Access Logix, MirrorView, and SnapView are trademarks of EMC Corporation.

All other trademarks mentioned herein are the property of their respective owners.

EMC Fibre Channel Storage Systems Configuration Planning Guide

Contents

Preface..............................................................................................................................xi

Chapter 1 About Fibre Channel Storage Systems and Networks (SANs)

Introducing EMC Fibre Channel Storage Systems.......................1-2

Fibre Channel Background..............................................................1-3

Fibre Channel Storage Components ..............................................1-4

Server Component (Host-Bus Adapter Driver Package with Software).....................................................................................1-4

Interconnect Components ........................................................1-4

Storage Component (Storage Systems, Storage Processors (SPs), and Other Hardware).....................................................1-9

Types of Storage System Installations..........................................1-10

About Switched Shared Storage and SANs (Storage Area Networks) ........................................................................................1-11

Storage Groups.........................................................................1-12

Storage System Hardware for Shared Storage....................1-15

About Unshared Storage ...............................................................1-16

Storage System Hardware for Unshared Storage...............1-16

Chapter 2 RAID Types and Tradeoffs

Introducing RAID.............................................................................2-2

Disk Striping...............................................................................2-2

Mirroring.....................................................................................2-2

RAID Groups and LUNs ..........................................................2-3

RAID Types........................................................................................2-4

RAID 5 Group (Individual Access Array) .............................2-4

RAID 3 Group (Parallel Access Array)...................................2-5


RAID 1 Mirrored Pair ...............................................................2-7

RAID 0 Group (Nonredundant Array) ..................................2-8

RAID 1/0 Group (Mirrored RAID 0 Group).........................2-8

Individual Disk Unit .................................................................2-9

Hot Spare....................................................................................2-9

RAID Benefits and Tradeoffs.........................................................2-12

Performance .............................................................................2-13

Storage Flexibility....................................................................2-14

Data Availability and Disk Space Usage..............................2-14

Guidelines for RAID Types ...........................................................2-17

Sample Applications for RAID Types..........................................2-19

Chapter 3 Planning File Systems and LUNs with Shared Switched Storage

Dual Paths to LUNs..........................................................................3-2

Sample Shared Switched Installation ............................................3-3

Planning Applications, LUNs, and Storage Groups....................3-6

Application and LUN Planning ..............................................3-6

Application and LUN Planning Worksheet ..........................3-7

LUN and Storage Group Planning Worksheet .....................3-8

LUN Details Worksheet..........................................................3-11

Chapter 4 Planning LUNs and File Systems with Unshared Direct Storage

Dual SPs and Paths to LUNs...........................................................4-2

Unshared Direct and Shared-or-Clustered Direct Storage .........4-2

Sample Unshared Direct Installation......................................4-2

Sample Shared-or-Clustered Direct Installation...................4-3

Planning Applications and LUNs..................................................4-4

Application and LUN Planning ..............................................4-4

Application and LUN Planning Worksheet ..........................4-5

LUN Planning Worksheet........................................................4-6

Completing the LUN Details Worksheet.............................4-12

Chapter 5 Storage System Hardware

Hardware for Shared Storage .........................................................5-3

Storage Hardware — Rackmount DPE-Based Storage Systems .......................................................................................5-3

Disks............................................................................................5-4

Storage Processor (SP) ..............................................................5-5


Hardware for Unshared Storage....................................................5-6

Types of Storage System for Unshared Storage ................... 5-6

Disks............................................................................................ 5-8

Storage Processor (SP).............................................................. 5-9

Planning Your Hardware Components ...................................... 5-11

Configuration Tradeoffs - Shared Storage .......................... 5-11

Configuration Tradeoffs - Unshared Storage ..................... 5-12

Hardware Data Sheets................................................................... 5-14

DPE Data Sheet........................................................................ 5-14

iDAE Data Sheet...................................................................... 5-16

DAE Data Sheet....................................................................... 5-18

Cabinets for Rackmount Enclosures............................................ 5-20

Cable and Configuration Guidelines .......................................... 5-21

Hardware Planning Worksheets.................................................. 5-24

Hardware for Shared Storage ...............................................5-24

Hardware Component Worksheet for Shared Storage...... 5-25

Hardware Component Worksheet for Shared Storage...... 5-27

Hardware for Unshared Storage ..........................................5-27

Hardware Component Worksheet for Unshared Storage. 5-30

Hardware Component Worksheet for Unshared Storage. 5-31

Hardware Component Worksheet for Unshared Storage. 5-33

Hardware Component Worksheet for Unshared Storage. 5-35

Chapter 6 Storage-System Management

Using Navisphere Manager Software........................................... 6-3

Storage Management Worksheets.................................................. 6-5

Index ................................................................................................................................i-1


Figures

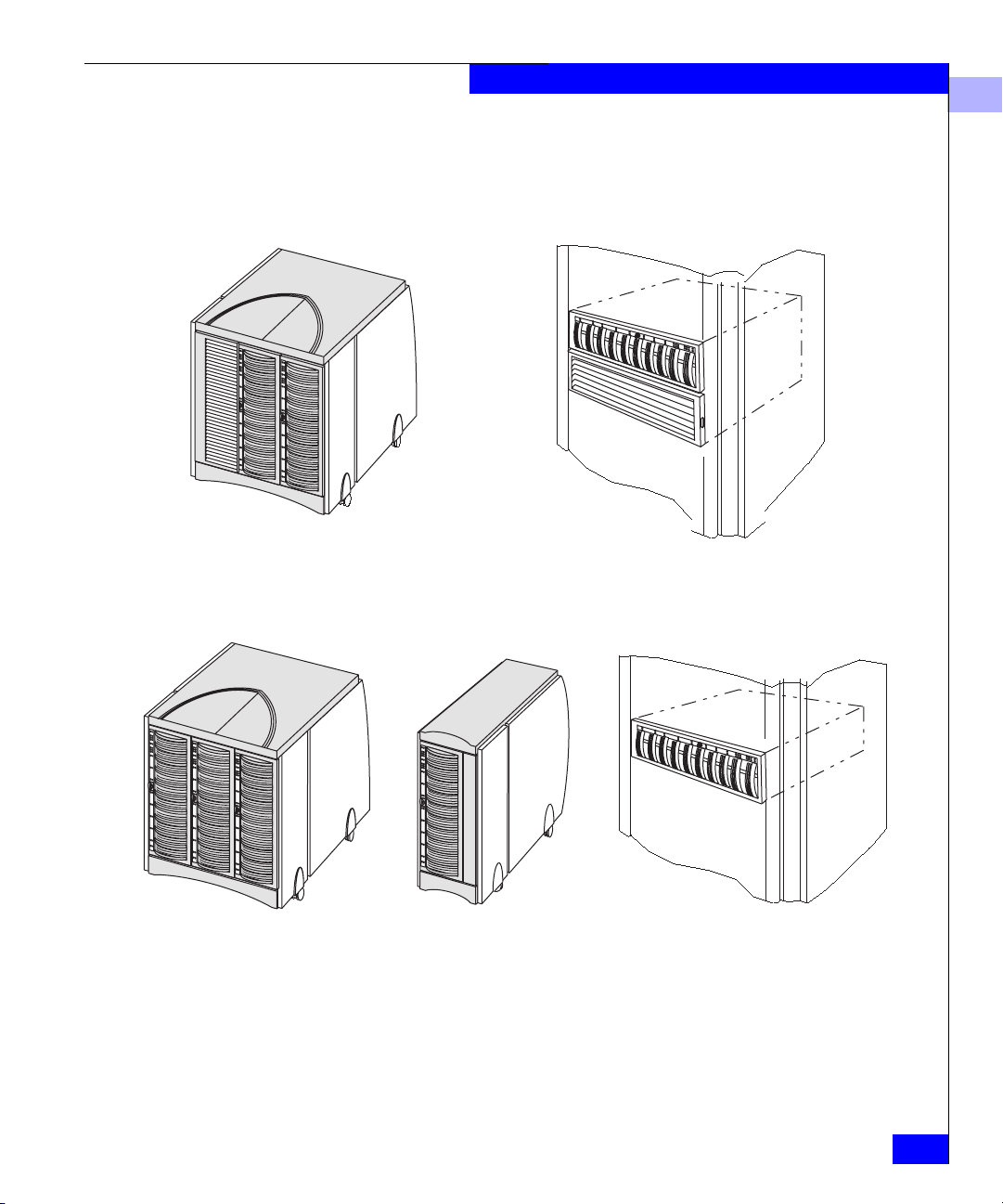

1-1 Storage System Models ................................................................................ 1-2

1-2 Nodes - Initiator and Target ....................................................................... 1-3

1-3 Switch and Hub Topologies Compared .................................................... 1-6

1-4 A Switch Zone ............................................................................................... 1-7

1-5 16-Port Switch, Back View .......................................................................... 1-7

1-6 Nine-Port Hub .............................................................................................. 1-8

1-7 Disk-Array Processor Enclosure (DPE) ..................................................... 1-9

1-8 Types of Storage System Installation ....................................................... 1-10

1-9 Components of a SAN ............................................................................... 1-11

1-10 Sample SAN Configuration ...................................................................... 1-13

1-11 Data and Configuration Access Control with Shared Storage ............ 1-14

1-12 Storage System with a DPE and Three DAEs ........................................ 1-15

1-13 Storage System Hardware for Unshared Storage .................................. 1-17

2-1 Multiple LUNs in a RAID Group ............................................................... 2-3

2-2 RAID 5 Group ............................................................................................... 2-5

2-3 RAID 3 Group ............................................................................................... 2-6

2-4 RAID 1 Mirrored Pair .................................................................................. 2-7

2-5 RAID 1/0 Group (Mirrored RAID 0 Group) ............................................ 2-9

2-6 How a Hot Spare Works ............................................................................ 2-11

2-7 Disk Space Usage in the RAID Configurations ...................................... 2-16

3-1 Sample Shared Switched High Availability installation ........................ 3-3

4-1 Unshared Direct Installation ....................................................................... 4-2

4-2 Sample Clustered Installation ..................................................................... 4-3

5-1 Shared and Unshared Storage .................................................................... 5-2

5-2 DPE Storage-System Components – Rackmount Model ........................ 5-3

5-3 Rackmount System with DPE and DAEs .................................................. 5-4

5-4 Shared Storage Systems ............................................................................... 5-5

5-5 Storage System Types for Unshared Storage ........................................... 5-7

5-6 DPE Components - Deskside Model ......................................................... 5-8

5-7 Disks and Disk IDs ....................................................................................... 5-9


5-8 Storage System with Two SPs Connected to the Same Server ............. 5-10

5-9 Storage System with Two SPs Connected to Different Servers ............ 5-10

5-10 Comparison Between Optical and Copper Cabling ............................... 5-21

5-11 Cable Identifier — DPE-Based System for Shared Storage ................... 5-24

5-12 Sample Shared Storage Installation .......................................................... 5-26

5-13 Cable Identifier — Unshared System without Hubs ............................. 5-28

5-14 Cable Identifier — Unshared Full-Fibre System with Hubs ................. 5-29

5-15 Sample Unshared Deskside System — Basic Configuration ................ 5-31

5-16 Sample Unshared Deskside System — Dual-Adapter/Dual-SP Configuration ............................................................................................... 5-32

5-17 Sample Component Worksheet for DPE-Based System with Hubs — Two Loops.............................................................................................................. 5-34

6-1 Sample Shared Switched Environment with Navisphere Manager ...... 6-4

6-2 Sample Unshared Environment with Navisphere Manager .................. 6-4


Tables

2-1 Performance, Availability, and Cost of RAID Types (Individual Unit = 1.0) ............................................................................... 2-13

3-1 Cache Recommendations for Different RAID Types ............................ 3-16

4-1 Cache Recommendations for Different RAID Types ............................ 4-15

5-1 High-Availability Options, Deskside Unshared Storage ...................... 5-13

5-2 High-Availability Options, Rackmount Unshared Storage ................. 5-13

5-3 Cable Sizes — Optical ............................................................................ 5-22

5-4 Cable Sizes — Copper ................................................................................ 5-23


Preface

Audience for the Manual

This planning guide provides an overview of Fibre Channel disk-array storage-system models and offers essential background information and worksheets to help you with installation and configuration planning.

Please read this guide

• if you are considering the purchase of an EMC Fibre Channel disk-array storage system and want to understand its features; or
• before you plan the installation of a storage system.

You should be familiar with the host servers that will use the storage systems and with the operating systems of the servers. After reading this guide, you will be able to

• determine the best storage system components for your installation
• determine your site requirements
• configure storage systems correctly


Organization of the Manual

Chapter 1 Provides background information on the Fibre Channel protocols and explains the major installation types.

Chapter 2 Describes RAID Groups and the different ways they store data.

Chapter 3 Describes installations for shared switched storage.

Chapter 4 Describes installations for unshared direct, shared-or-clustered direct, and shared switched storage.

Chapter 5 Describes hardware components.

Chapter 6 Describes storage-system management utilities.


Chapter 1

About Fibre Channel Storage Systems and Networks (SANs)

This chapter introduces Fibre Channel disk-array storage systems and storage area networks (SANs). Major sections are

• Introducing EMC Fibre Channel Storage Systems........................1-2
• Fibre Channel Background...............................................................1-3
• Fibre Channel Storage Components................................................1-4
• About Switched Shared Storage and SANs (Storage Area Networks).......... 1-11
• About Unshared Storage.................................................................1-16


Introducing EMC Fibre Channel Storage Systems

EMC Fibre Channel disk-array storage systems provide terabytes of disk storage capacity, high transfer rates, flexible configurations, and highly available data at low cost.

A storage system package includes a host-bus adapter driver package with hardware and software to connect with a server, storage management software, Fibre Channel interconnect hardware, and one or more storage systems.

Figure 1-1 Storage System Models

Fibre Channel Background

Fibre Channel is a high-performance serial protocol that allows transmission of both network and I/O channel data. It is a low-level protocol, independent of data types, and supports such formats as SCSI and IP.

The Fibre Channel standard supports several physical topologies, including point-to-point, switched fabric, and arbitrated loop (FC-AL). The topologies used by the Fibre Channel storage systems described in this manual are switched fabric and FC-AL.

A switched fabric is a set of point-to-point connections between nodes, each connection being made through one or more Fibre Channel switches. Each node has its own unique address, but the path between nodes is governed by a switch. The nodes are connected by optical cable.

A Fibre Channel arbitrated loop is a circuit consisting of nodes. Each node has a unique address, called a Fibre Channel arbitrated loop address. The nodes are connected by optical cables. An optical cable can transmit data over great distances for connections that span entire enterprises and can support remote disaster recovery systems. Copper cable serves well for local connections; its length is limited to 30 meters (99 feet).


Each connected device in a switched fabric or arbitrated loop is a node: either a server adapter (the initiator) or a target (a storage system). Switches and hubs are not considered nodes.

Figure 1-2 Nodes - Initiator and Target (a server adapter node, the initiator, connected to a storage-system node, the target)

Fibre Channel Storage Components

A Fibre Channel storage system has three main components:

• Server component (host-bus adapter driver package with adapter and software)
• Interconnect components (cables based on Fibre Channel standards, switches, and hubs)
• Storage components (storage system with storage processors, or SPs, and power supply and cooling hardware)

Server Component (Host-Bus Adapter Driver Package with Software)

The host-bus adapter driver package includes a host-bus adapter and support software. The adapter is a printed-circuit board that slides into an I/O slot in the server’s cabinet. It transfers data between server memory and one or more disk-array storage systems over Fibre Channel, as controlled by the support software (adapter driver).

One or more servers can use a storage system. For high availability in the event of an adapter failure, a server can have two adapters.

Depending on your server type, you may have a choice of adapters. Each adapter is designed for a specific host bus, for example, a PCI bus or SBus. Some adapter types support either copper or optical cabling; others support copper cabling only.

Interconnect Components

The interconnect components include the cables, Fibre Channel switches (for shared storage), and Fibre Channel hubs (for unshared storage).

Cables

Depending on your needs, you can choose copper or optical cables.

The maximum length of copper cable is 30 meters (99 feet) between nodes or hubs. The maximum length of optical cable between a server and a hub or storage system is much greater, depending on the cable type. For example, 62.5-micron multimode cable can span up to 500 meters (1,640 feet), while 9-micron single-mode cable can span up to 10 kilometers (6.2 miles). With extenders, optical cable can span up to 40 km (25 miles). This ability to span great distances is a major advantage of optical cable.

Some nodes have connections that require a specific type of cable: copper or optical. Other nodes allow conversion from copper to optical using a conversion device called a Gigabit Interface Converter (GBIC) or Media Interface Adapter (MIA). In most cases, a GBIC or MIA lets you substitute long-distance optical connections for shorter copper connections.

Details on cable lengths and rules appear later in this manual.

Fibre Channel Switches

A Fibre Channel switch, which is required for shared storage (a Storage Area Network, or SAN), connects all the nodes cabled to it using a fabric topology. A switch adds serviceability and scalability to any installation; it allows online insertion and removal of any device on the fabric and maintains integrity if any connected device stops participating. A switch also provides host-to-storage-system access control in a multiple-host shared-storage environment. A switch has several advantages over a hub: it provides point-to-point connections (as opposed to a hub’s loop that includes all nodes), and it offers zoning to specify paths between nodes in the switch itself.
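The cable distance limits quoted above lend themselves to a simple planning check. The following Python sketch is illustrative only: the cable-type keys, the `MAX_RUN_METERS` table, and the `check_run` function are hypothetical names, and the table holds just the figures stated in this section, not the complete cable rules given later in this manual.

```python
# Hypothetical planning table: maximum cable run in meters, using only the
# limits quoted in this section (the cable tables in Chapter 5 give details).
MAX_RUN_METERS = {
    "copper": 30,                      # between nodes or hubs
    "multimode_62.5um": 500,           # 62.5-micron multimode optical cable
    "singlemode_9um": 10_000,          # 9-micron single-mode optical cable
    "optical_with_extenders": 40_000,  # optical cable with extenders
}


def check_run(cable_type: str, length_m: float) -> bool:
    """Return True if a cable run of length_m meters is within the quoted limit."""
    if cable_type not in MAX_RUN_METERS:
        raise ValueError(f"unknown cable type: {cable_type!r}")
    if length_m <= 0:
        raise ValueError("cable length must be positive")
    return length_m <= MAX_RUN_METERS[cable_type]


if __name__ == "__main__":
    # A 45 m run exceeds the copper limit but fits easily on multimode optical.
    print(check_run("copper", 45))            # False
    print(check_run("multimode_62.5um", 45))  # True
```

A real plan would also account for GBIC or MIA conversions at each node; this sketch checks one run at a time.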


You can cascade switches (connect a port on one switch to another switch) for additional port connections.

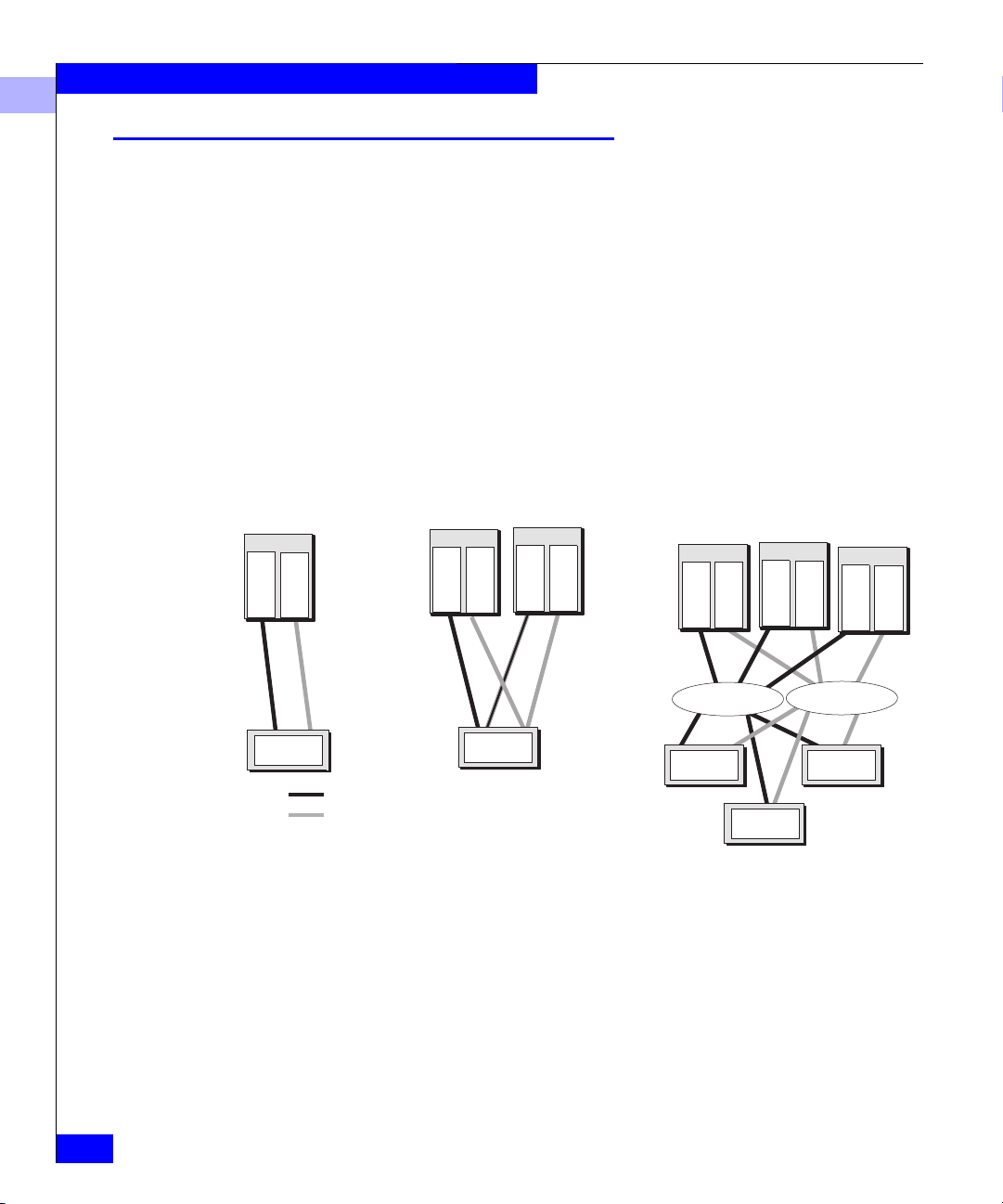

Switch topology (point-to-point)

Server

Adapter

Hub uses

loop between

ports

SP SP

Server

Adapter

SP

Adapter

Storage systems

Server Server

Adapter

witch uses discrete

onnections between

orts

o illustrate the comparison, this figure shows just one adapter per server and one switch or

hub. Normally, such installations include two adapters per server and two switches or hubs.

Figure 1-3 Switch and Hub Topologies Compared

Hub topology (loop)

Server

Adapter

SP

Switch Zoning

Switch zoning defines paths between connected nodes. Each zone encloses one or more adapters and one or more SPs. A switch can have as many zones as it has ports. The current connection limits are four SP ports to one adapter port (the SPs fan in to the adapter) and 15 adapters to one SP (the SPs fan out to the adapters). There are several zone types, including the single-initiator type, which is the recommended type.
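The zoning limits above can be checked mechanically before you configure a switch. This is a minimal sketch under stated assumptions: zones are modeled as Python sets of hypothetical adapter and SP-port identifiers, `check_zoning` is not part of any EMC or switch-vendor tool, and a zone with more than one adapter is reported only as a departure from the recommended single-initiator type, not as an error.

```python
from collections import defaultdict

# Connection limits quoted in this section.
MAX_SP_PORTS_PER_ADAPTER = 4   # fan-in: SP ports zoned to one adapter port
MAX_ADAPTERS_PER_SP = 15       # fan-out: adapters zoned to one SP port


def check_zoning(zones: dict, switch_ports: int) -> list:
    """Check a zone set against the limits above.

    zones maps a zone name to a pair (adapters, sp_ports) of identifier sets.
    Returns a list of findings; an empty list means nothing was flagged.
    """
    findings = []
    if len(zones) > switch_ports:
        findings.append(f"{len(zones)} zones exceed {switch_ports} switch ports")

    sps_for_adapter = defaultdict(set)
    adapters_for_sp = defaultdict(set)
    for name, (adapters, sp_ports) in sorted(zones.items()):
        if len(adapters) != 1:
            # Recommendation, not a hard limit: one adapter (initiator) per zone.
            findings.append(f"zone {name} is not single-initiator")
        for adapter in adapters:
            sps_for_adapter[adapter].update(sp_ports)
            for sp in sp_ports:
                adapters_for_sp[sp].add(adapter)

    for adapter, sps in sorted(sps_for_adapter.items()):
        if len(sps) > MAX_SP_PORTS_PER_ADAPTER:
            findings.append(f"adapter {adapter} is zoned to {len(sps)} SP ports")
    for sp, adapters in sorted(adapters_for_sp.items()):
        if len(adapters) > MAX_ADAPTERS_PER_SP:
            findings.append(f"SP port {sp} is zoned to {len(adapters)} adapters")
    return findings


# One single-initiator zone: one adapter zoned to SP A of two storage systems.
zones = {"server1_hba0": ({"server1-hba0"}, {"ss1-spa", "ss2-spa"})}
print(check_zoning(zones, switch_ports=16))  # []
```

Counting SP ports per adapter across all zones, rather than per zone, reflects that an adapter belonging to several zones still faces a single fan-in limit.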

In the following figure, Server 1 has access to one SP (SP A) in storage systems 1 and 2; it has no access to any other SP.

Figure 1-4 A Switch Zone

To illustrate switch zoning, this figure shows just one HBA per server and one switch. Normally, such installations include two HBAs per server and two switches.

If you do not define a zone in a switch, all adapter ports connected to the switch can communicate with all SP ports connected to the switch. However, access to an SP does not necessarily provide access to the SP’s storage; access to storage is governed by the Storage Groups you create (defined later).
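The rule in the preceding paragraph can be expressed as a small function. This sketch models SP reachability only, not access to storage, which Storage Groups govern. It assumes, for illustration, that once any zone is defined, an adapter that belongs to no zone reaches no SP port; real switches may handle unzoned devices differently. All identifiers are hypothetical.

```python
def reachable_sp_ports(adapter, zones, adapters_on_switch, sp_ports_on_switch):
    """Return the SP ports an adapter can communicate with through one switch.

    zones maps a zone name to a pair (adapters, sp_ports) of identifier sets.
    With no zones defined, every adapter on the switch reaches every SP port.
    Assumption (illustrative only): once zones exist, an adapter that is in
    no zone reaches no SP port.
    """
    if adapter not in adapters_on_switch:
        return set()
    if not zones:
        return set(sp_ports_on_switch)
    reachable = set()
    for zone_adapters, zone_sps in zones.values():
        if adapter in zone_adapters:
            # Only SP ports actually cabled to this switch count.
            reachable |= set(zone_sps) & set(sp_ports_on_switch)
    return reachable


adapters = {"server1-hba0", "server2-hba0"}
sp_ports = {"ss1-spa", "ss1-spb", "ss2-spa"}
print(sorted(reachable_sp_ports("server1-hba0", {}, adapters, sp_ports)))
# ['ss1-spa', 'ss1-spb', 'ss2-spa']

zones = {"z1": ({"server1-hba0"}, {"ss1-spa", "ss2-spa"})}
print(sorted(reachable_sp_ports("server1-hba0", zones, adapters, sp_ports)))
# ['ss1-spa', 'ss2-spa']
```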


Fibre Channel switches are available with 16 or 8 ports. They are compact units that fit in 2 U (3.5 inches) for the 16-port model or 1 U (1.75 inches) for the 8-port model. They are available to fit into a rackmount cabinet or as small deskside enclosures.

Figure 1-5 16-Port Switch, Back View (callout: ports)

If your servers and storage systems will be far apart, you can place the switches closer to the servers or the storage systems, as convenient.

A switch is not a node in a Fibre Channel fabric. However, it is bound by the same cabling distance rules as a node.

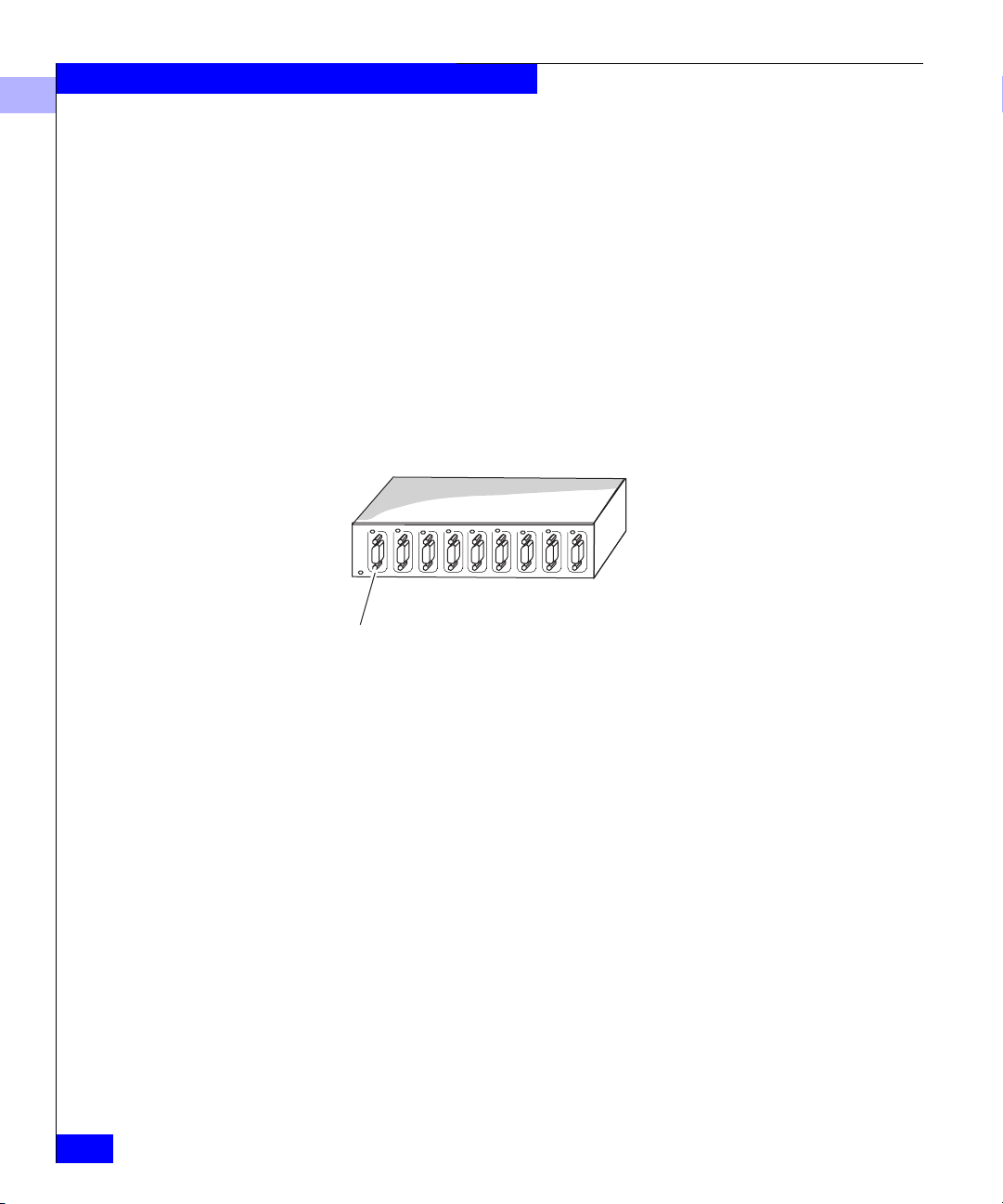

Fibre Channel Hubs

A hub connects all the nodes cabled to it into a single logical loop. A hub adds serviceability and scalability to any loop; it allows online insertion and removal of any device on the loop and maintains loop integrity if any connected device stops participating.

Fibre Channel hubs are compact units that fit in 1 U (1.75 inches) of rack space. They are available to fit into a rackmount cabinet or as small deskside units.

Figure 1-6 Nine-Port Hub (each port can connect to a server, a storage system, or another hub)

If your servers and storage systems will be far apart, you can place the hubs closer to the servers or the storage systems, as convenient.
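The difference between a hub's loop and a switch's point-to-point connections can be sketched as follows. This is a simplified model, not a protocol implementation: it treats the loop as a one-way ring in which traffic between two nodes passes through every node that lies between them in loop order, while a switch connects the two nodes directly. The function and node names are hypothetical.

```python
def intermediate_nodes(topology, nodes, src, dst):
    """Return the other nodes that traffic from src to dst passes through.

    Simplified model: for "hub", nodes is the loop order of a one-way ring;
    for "switch", the switch makes a direct point-to-point connection.
    """
    if src not in nodes or dst not in nodes or src == dst:
        raise ValueError("src and dst must be two different attached nodes")
    if topology == "switch":
        return []
    if topology == "hub":
        path = []
        k = (nodes.index(src) + 1) % len(nodes)
        while nodes[k] != dst:
            path.append(nodes[k])
            k = (k + 1) % len(nodes)
        return path
    raise ValueError(f"unknown topology: {topology!r}")


nodes = ["server1-hba", "server2-hba", "ss1-spa", "ss2-spa"]
print(intermediate_nodes("hub", nodes, "server1-hba", "ss2-spa"))     # ['server2-hba', 'ss1-spa']
print(intermediate_nodes("switch", nodes, "server1-hba", "ss2-spa"))  # []
```

In this model, a node that stops participating would sit in the path of other nodes' traffic on a loop, which is the situation the hub's loop-integrity feature is described as handling; with a switch, no other node is in the path.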


Storage Component (Storage Systems, Storage Processors (SPs), and Other Hardware)

EMC disk-array storage systems, with their storage processors, power supplies, and cooling hardware, form the storage component of a Fibre Channel system. The controlling unit, a Disk-Array Processor Enclosure (DPE), looks like the following figure.

Figure 1-7 Disk-Array Processor Enclosure (DPE) (callout: disk modules)

DPE hardware details appear in a later chapter.

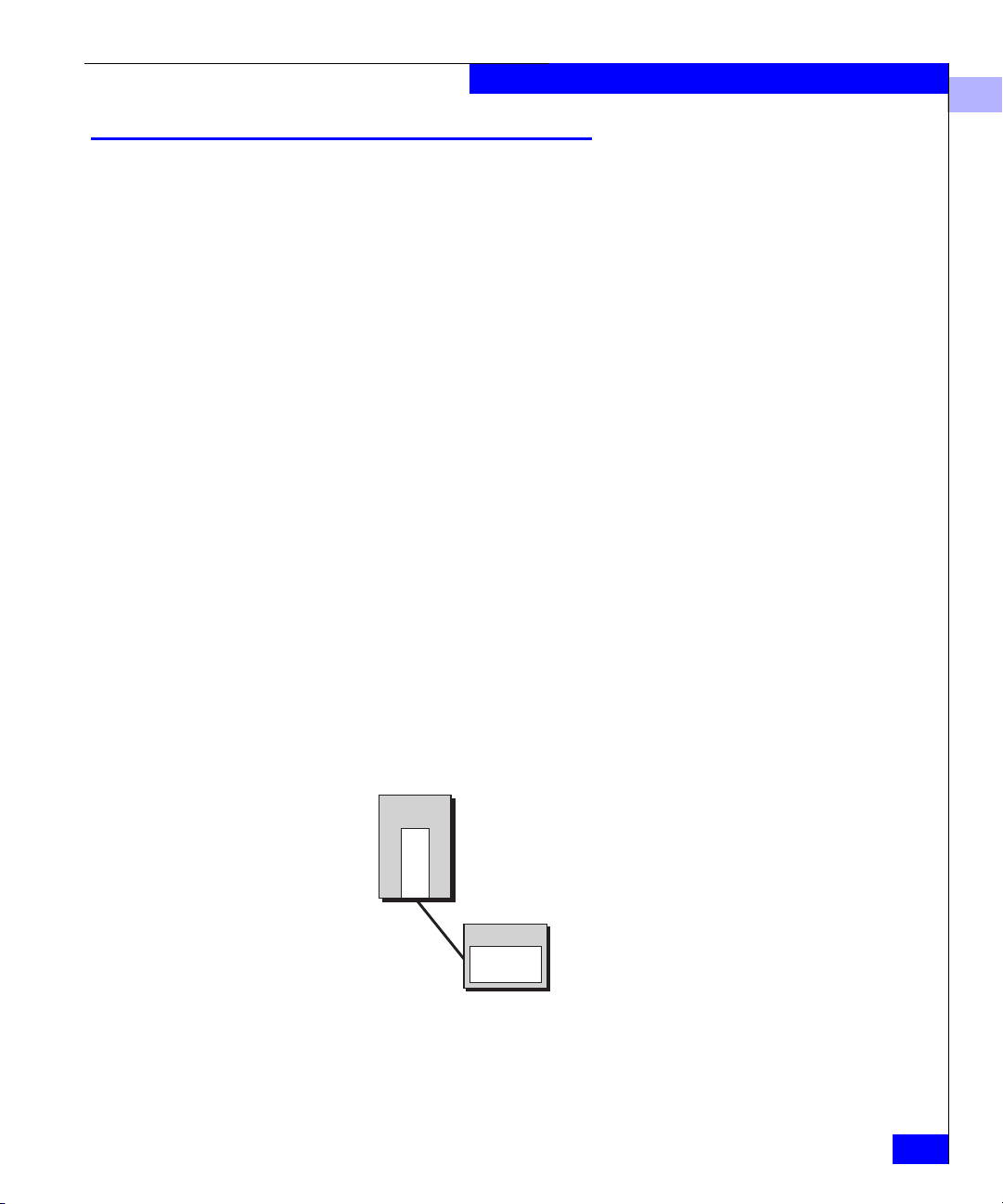

Types of Storage System Installations

You can use a storage system in any of several types of installation:

• Unshared direct with one server is the simplest and least costly.
• Shared-or-clustered direct lets two clustered servers share storage resources with high availability (FC4500 storage systems).
• Shared switched, with one or two switch fabrics, lets two to 15 servers share the resources of several storage systems in a Storage Area Network (SAN). Shared switched installations are available in a high-availability (HA) version, with two HBAs per server and two switches, or with one HBA per server and one switch.

Figure 1-8 Types of Storage System Installation (panels: Unshared Direct, one or two servers; Shared-or-Clustered Direct, two servers; Shared Switched, multiple servers connected through two switch fabrics)

Storage systems for any shared installation require EMC Access Logix™ software to control server access to the storage system LUNs. The shared-or-clustered direct installation may be either shared (that is, using Access Logix to control LUN access) or clustered (without Access Logix, using cluster software to control LUN access), depending on the hardware model.


About Switched Shared Storage and SANs (Storage Area Networks)

This section explains the features that let multiple servers share disk-array storage systems on a SAN (storage area network).

A SAN is a collection of storage devices connected to servers via Fibre Channel switches to provide a central location for disk storage. Centralizing disk storage among multiple servers has many advantages, including

• highly available data
• flexible association between servers and storage capacity
• centralized management for fast, effective response to users’ data storage needs
• easier file backup and recovery

An EMC SAN is based on shared storage; that is, the SAN requires the Access Logix option to provide flexible access control to storage-system LUNs.

Figure 1-9 Components of a SAN (servers, each with two adapters, connect over Path 1 and Path 2 through two switch fabrics to SP A and SP B of each storage system)

Fibre Channel switches can control data access to storage systems through the use of switch zoning. With zoning, an administrator can specify groups (called zones) of Fibre Channel devices, such as host-bus adapters (specified by worldwide name) and SPs, between which the switch will allow communication.


However, switch zoning cannot selectively control data access to LUNs in a storage system, because each SP appears as a single Fibre Channel device to the switch. So switch zoning can prevent or allow communication with an SP, but not with specific disks or LUNs attached to an SP. For access control with LUNs, a different solution is required: Storage Groups.
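The two layers of control described above can be combined in a short sketch: zoning decides which SPs a server reaches, and Storage Groups decide which LUNs it sees behind those SPs. The `StorageSystem` class and `visible_luns` function are hypothetical illustrations, not the Base Software's actual logic; LUNs are represented by plain integers.

```python
from dataclasses import dataclass, field


@dataclass
class StorageSystem:
    """Hypothetical model of one storage system for planning purposes."""
    name: str
    sp_ports: set
    # Storage Group name -> (servers allowed to use it, LUN numbers in it)
    storage_groups: dict = field(default_factory=dict)


def visible_luns(server, reachable_sps, system):
    """Return the LUNs a server can use on one storage system.

    reachable_sps is the set of SP ports that switch zoning lets the server
    reach. Zoning works per SP; Storage Groups select LUNs behind the SP.
    """
    if not (reachable_sps & system.sp_ports):
        return set()  # zoning blocks every SP of this storage system
    luns = set()
    for servers, group_luns in system.storage_groups.values():
        if server in servers:
            luns |= group_luns
    return luns


ss1 = StorageSystem(
    name="ss1",
    sp_ports={"ss1-spa", "ss1-spb"},
    storage_groups={
        "Cluster": ({"file-server", "mail-server"}, {0, 1, 2}),
        "Database": ({"db-server"}, {3, 4}),
    },
)
print(sorted(visible_luns("db-server", {"ss1-spa"}, ss1)))  # [3, 4]
print(visible_luns("db-server", {"ss2-spa"}, ss1))          # set()
```

Neither layer alone is enough: zoning with no Storage Groups exposes every LUN behind an SP, and Storage Groups cannot help a server whose zones exclude every SP.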

Storage Groups

A Storage Group is a set of one or more LUNs (logical units) within a storage system that is reserved for one or more servers and is inaccessible to other servers. Storage Groups are the central component of shared storage; storage systems that are unshared do not use Storage Groups.

When you configure shared storage, you specify servers and the Storage Group(s) each server can read from and/or write to. The Base Software firmware running in each storage system enforces the server-to-Storage Group permissions.

A Storage Group can be accessed by more than one server if all the servers run cluster software. The cluster software enforces orderly access to the shared Storage Group LUNs.
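The sharing rule in the last paragraph can be checked during planning. This hypothetical helper assumes you record, for each Storage Group, the set of servers allowed to use it, plus the set of servers that run cluster software; it reports any group shared by a server without cluster software.

```python
def check_group_sharing(storage_groups, clustered_servers):
    """Return, sorted, the Storage Groups shared by servers not all running cluster software.

    storage_groups maps a Storage Group name to the set of servers allowed to
    use it; clustered_servers is the set of servers that run cluster software.
    """
    flagged = []
    for group, servers in storage_groups.items():
        # One server needs no cluster software; two or more all need it.
        if len(servers) > 1 and not (servers <= clustered_servers):
            flagged.append(group)
    return sorted(flagged)


groups = {
    "Cluster": {"file-server", "mail-server"},
    "Database": {"db-server"},
    "Scratch": {"db-server", "file-server"},  # hypothetical planning mistake
}
print(check_group_sharing(groups, clustered_servers={"file-server", "mail-server"}))  # ['Scratch']
```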

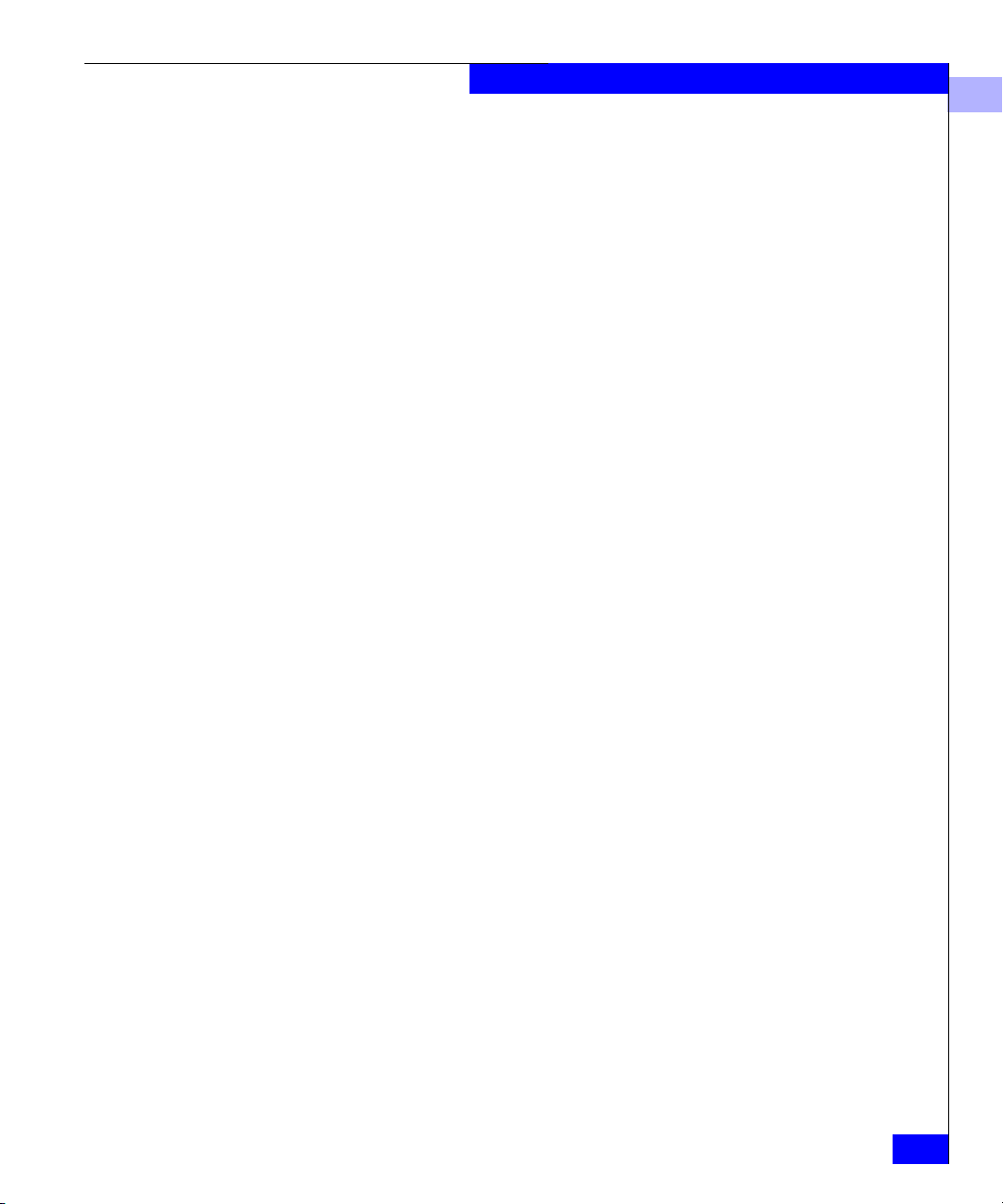

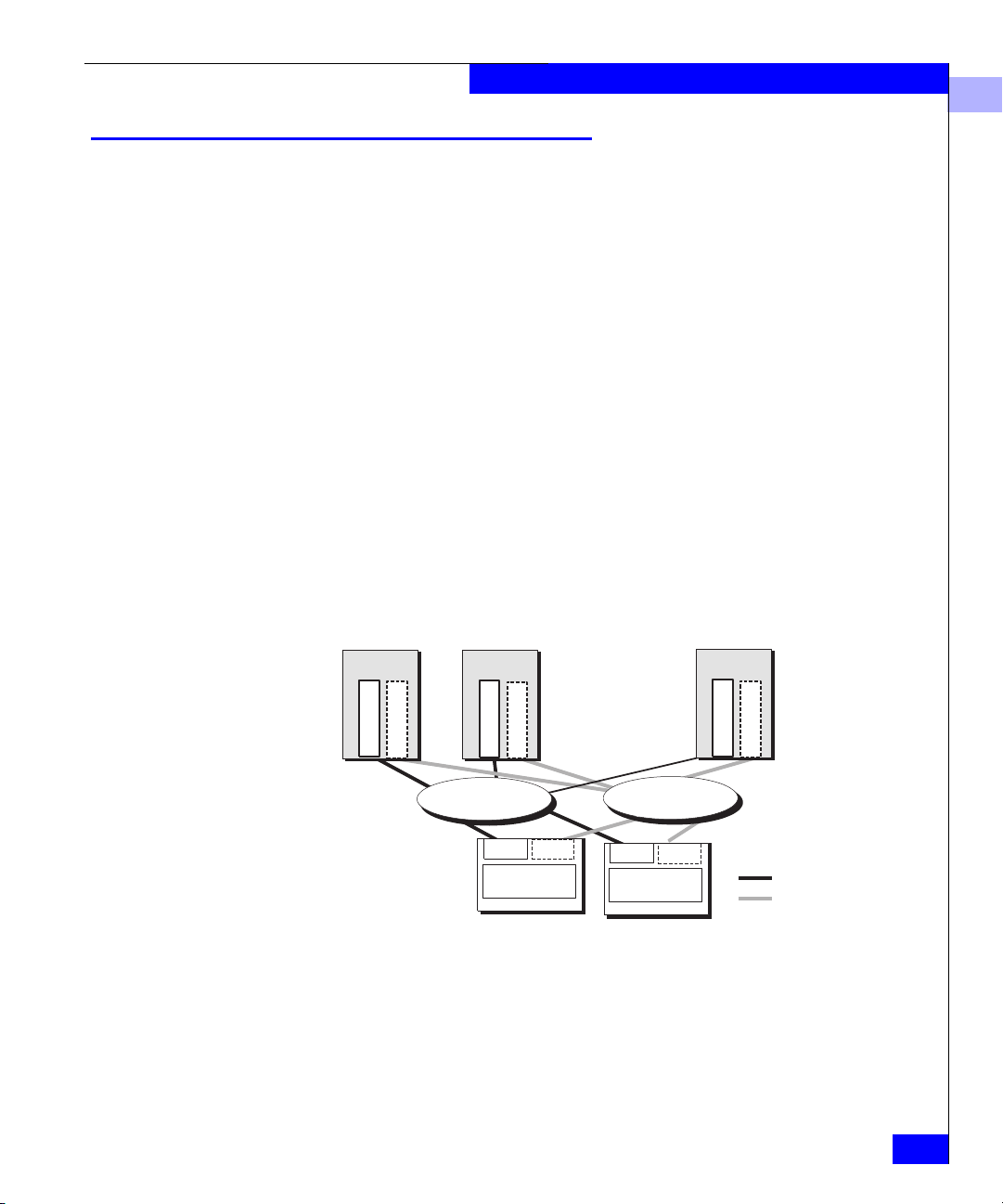

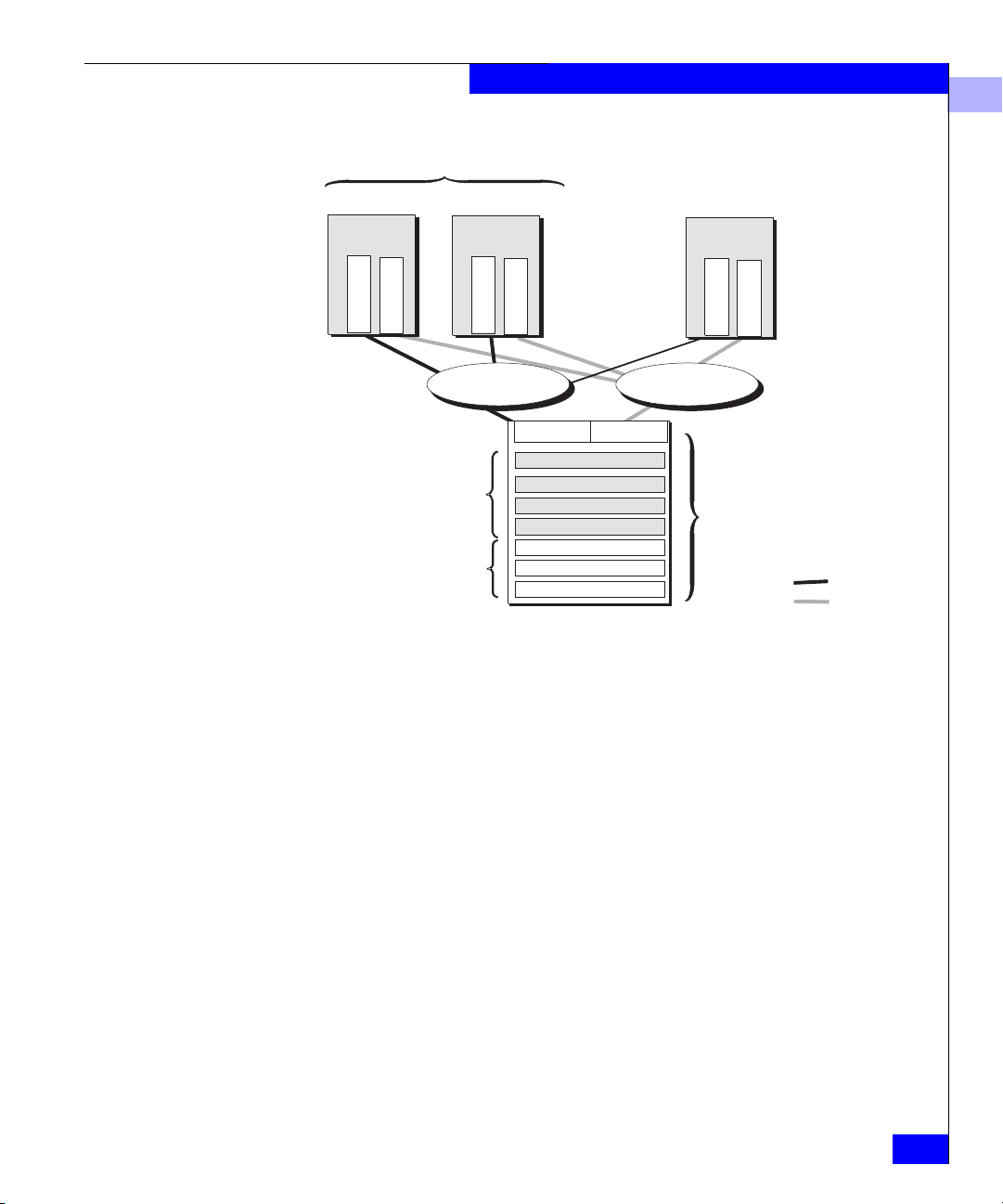

The following figure shows a simple shared storage configuration consisting of one storage system with two Storage Groups. One Storage Group serves a cluster of two servers running the same operating system, and the other Storage Group serves a UNIX database server. Each server is configured with two independent paths to its data, including separate host-bus adapters, switches, and SPs, so there is no single point of failure for access to its data.


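[Figure: a highly available cluster of a file server and a mail server (each running operating system A with two adapters) and a database server (operating system B, two adapters), connected through two switch fabrics (Path 1 and Path 2) to SP A and SP B of a storage system with up to 100 disks. The storage system holds a Cluster Storage Group and a Database Server Storage Group.]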

Figure 1-10 Sample SAN Configuration

Access Control with Shared Storage

Access control permits or restricts a server’s access to shared storage. There are two kinds of access control:

• Configuration access control

• Data access control

Configuration access control lets you restrict the servers through which a user can send configuration commands to an attached storage system.

Data access control is provided by Storage Groups. During storage-system configuration, the system administrator uses a management utility to associate a server with one or more LUNs.


Each server sees its Storage Group as if it were an entire storage system, and never sees the other LUNs on the storage system. Therefore, it cannot access or modify data on LUNs that are not part of its Storage Group. However, you can define a Storage Group to be accessible by more than one server if, as shown in Figure 1-10, the servers run cluster software.
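To make the masking behavior concrete, here is a minimal Python sketch that treats data access control as a lookup from server to Storage Group to LUNs. It is a toy model, not the Base Software's implementation; the `StorageSystem` class, server names, and LUN numbers are all hypothetical, arranged loosely after Figure 1-10.

```python
from dataclasses import dataclass, field


@dataclass
class StorageSystem:
    """Toy model of Storage Group data access control (hypothetical)."""

    # Storage Group name -> LUN numbers in that group
    groups: dict[str, set[int]] = field(default_factory=dict)
    # Server name -> the Storage Group that server is connected to
    server_group: dict[str, str] = field(default_factory=dict)

    def visible_luns(self, server: str) -> set[int]:
        """LUNs the server can see; a server with no Storage Group sees none."""
        group = self.server_group.get(server)
        if group is None:
            return set()
        return set(self.groups.get(group, set()))

    def can_access(self, server: str, lun: int) -> bool:
        return lun in self.visible_luns(server)


# Hypothetical layout after Figure 1-10. Sharing "cluster" between two servers
# is safe only because they run cluster software; the model does not check that.
array = StorageSystem(
    groups={"cluster": {0, 1, 2, 3}, "database": {4, 5, 6}},
    server_group={"file": "cluster", "mail": "cluster", "db": "database"},
)

print(sorted(array.visible_luns("mail")))  # [0, 1, 2, 3]
print(array.can_access("db", 1))           # False
```

Keeping the mapping one level indirect (server to group, group to LUNs) mirrors how the guide describes administration: you change a group's LUNs once, and every server connected to that group sees the change.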


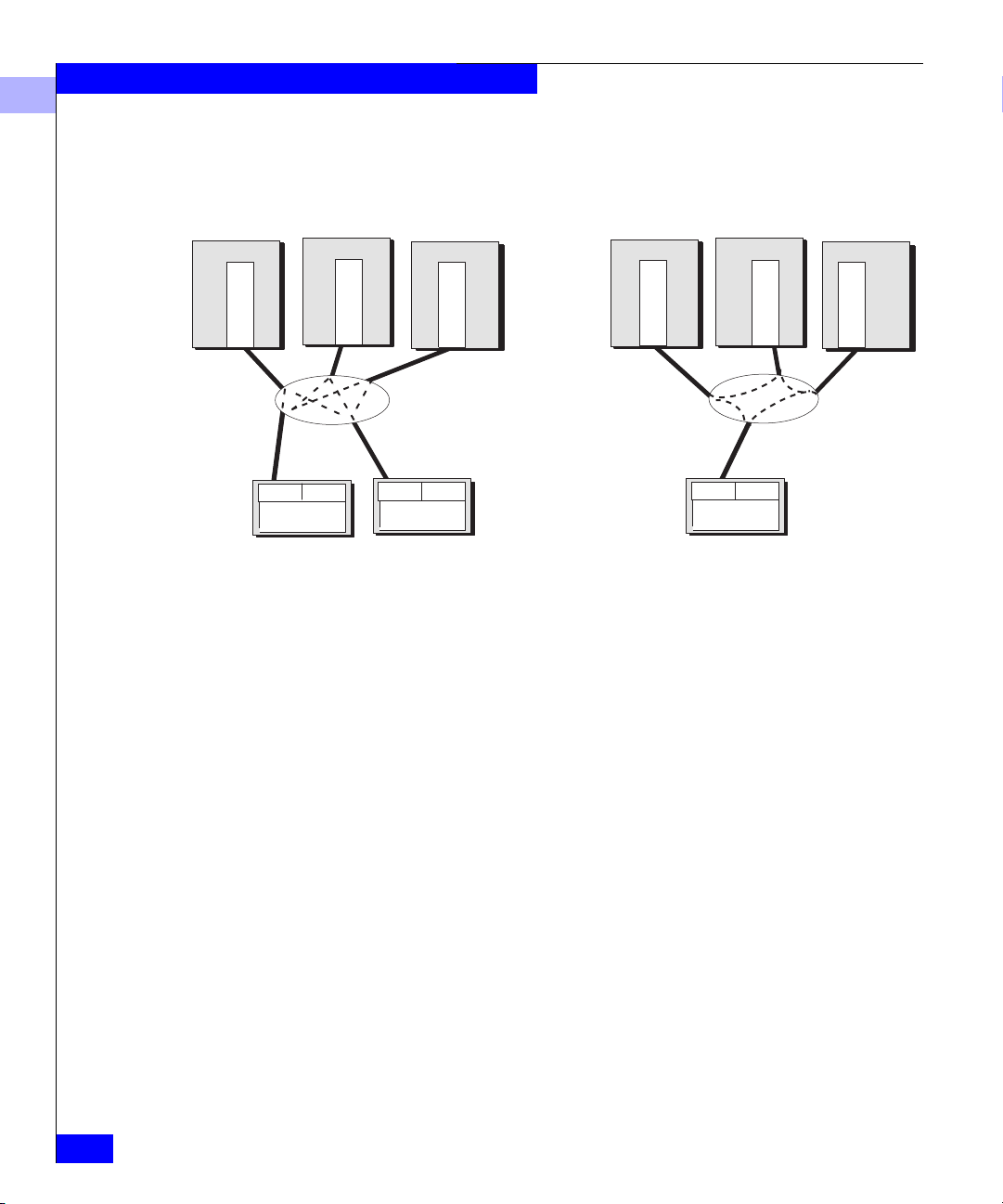

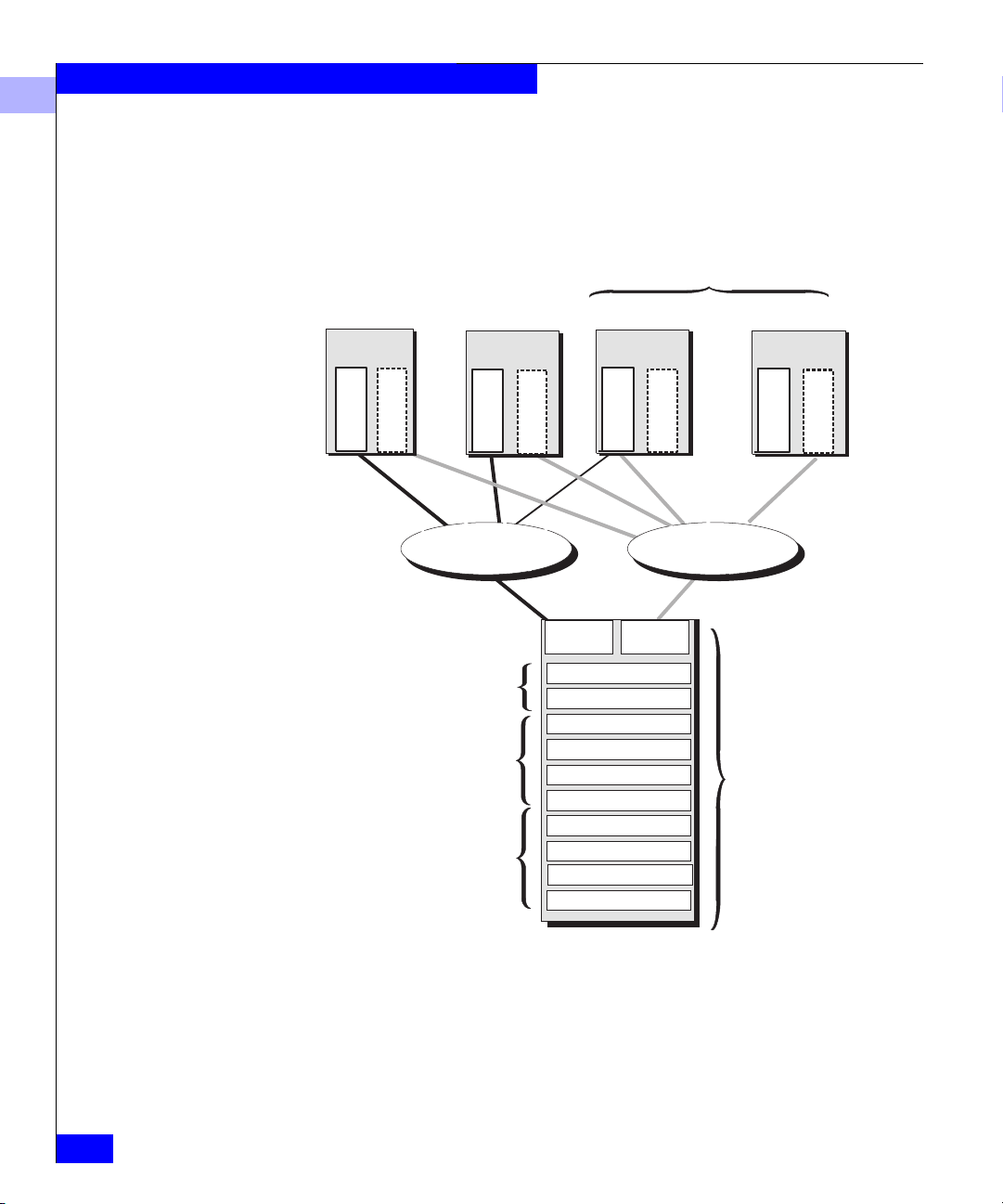

The following figure shows both data access control (Storage Groups) and configuration access control. Each server has read and write access only to its designated Storage Group; the E-mail and Web servers, which form a cluster, share one Storage Group. Of the four servers connected to the SAN, only the Admin server can send configuration commands to the storage system.

[Figure: an Admin server (operating system A, adapters 01 and 02) with a dedicated Admin Storage Group; an Inventory server (operating system A, adapters 03 and 04) with a dedicated Inventory Storage Group; and an E-mail server (operating system B, adapters 05 and 06) and a Web server (operating system B, adapters 07 and 08) forming a highly available cluster that shares the E-mail and Web server Storage Group. All servers connect through two switch fabrics to SP A and SP B. Configuration access is by adapters 01 and 02 (Admin server only).]

Figure 1-11 Data and Configuration Access Control with Shared Storage
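The two checks in Figure 1-11 are independent, which a short sketch makes visible: data access depends on which Storage Group lists an adapter, and configuration commands depend on a separate list. Adapter numbers 01 through 08 come from the figure; the dictionaries and function names are hypothetical and do not represent any EMC management interface.

```python
# Hypothetical tables modeled on Figure 1-11.
# Data access: Storage Group -> host adapters allowed to read and write it.
DATA_ACCESS = {
    "Admin": {"01", "02"},
    "Inventory": {"03", "04"},
    "E-mail and Web server": {"05", "06", "07", "08"},  # shared by a cluster
}

# Configuration access: adapters allowed to send configuration commands.
CONFIG_ACCESS = {"01", "02"}  # Admin server only


def may_read_write(adapter: str, group: str) -> bool:
    """True if the adapter has data access to the Storage Group."""
    return adapter in DATA_ACCESS.get(group, set())


def may_configure(adapter: str) -> bool:
    """True if configuration commands from this adapter are accepted."""
    return adapter in CONFIG_ACCESS


def groups_for(adapter: str) -> list[str]:
    """Storage Groups visible through a given adapter."""
    return [g for g, adapters in DATA_ACCESS.items() if adapter in adapters]


print(groups_for("07"))     # ['E-mail and Web server']
print(may_configure("03"))  # False
```

Keeping the two tables separate reflects the figure: the Admin server's configuration rights do not give it data access to the other servers' Storage Groups.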


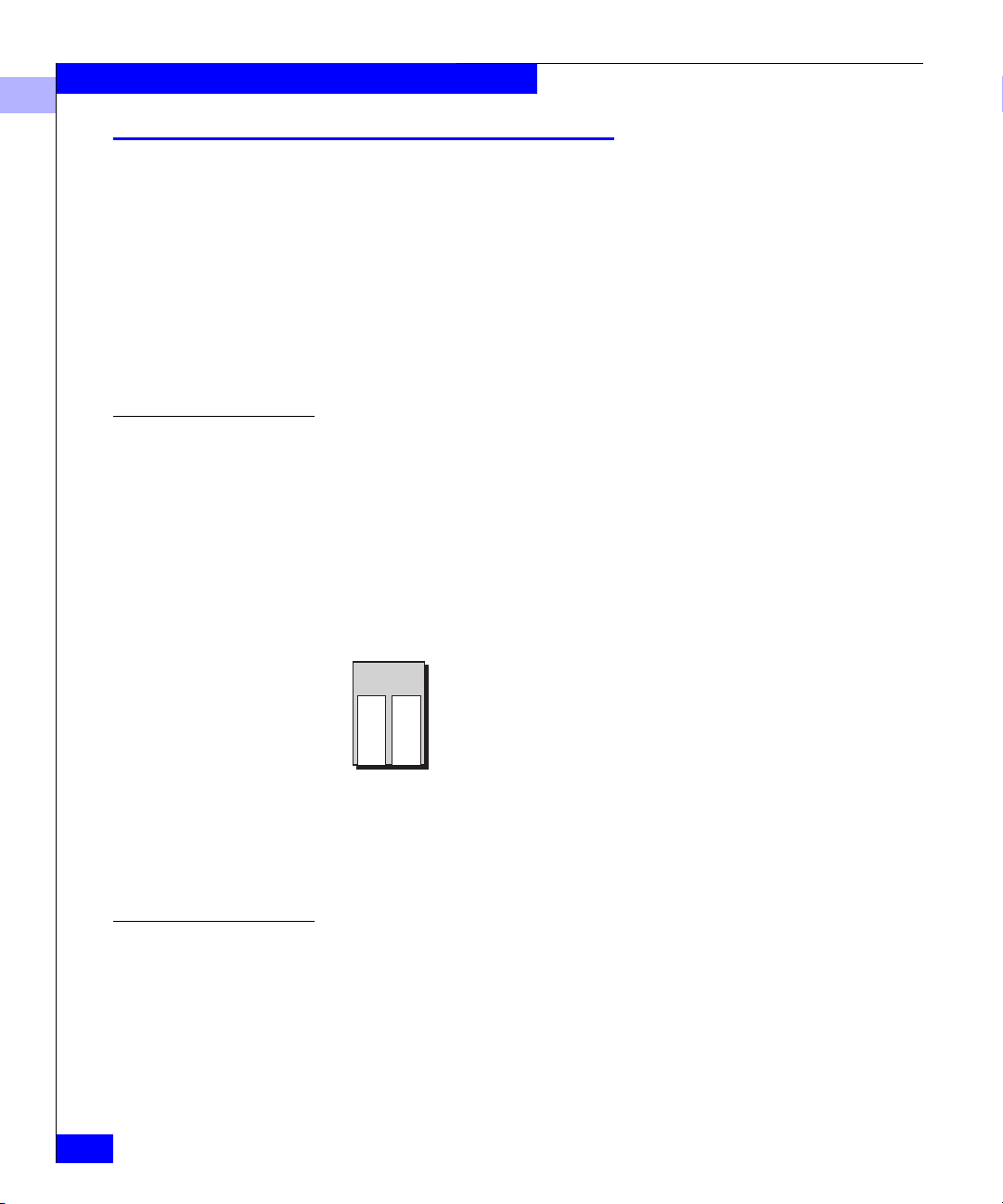

Storage System Hardware for Shared Storage

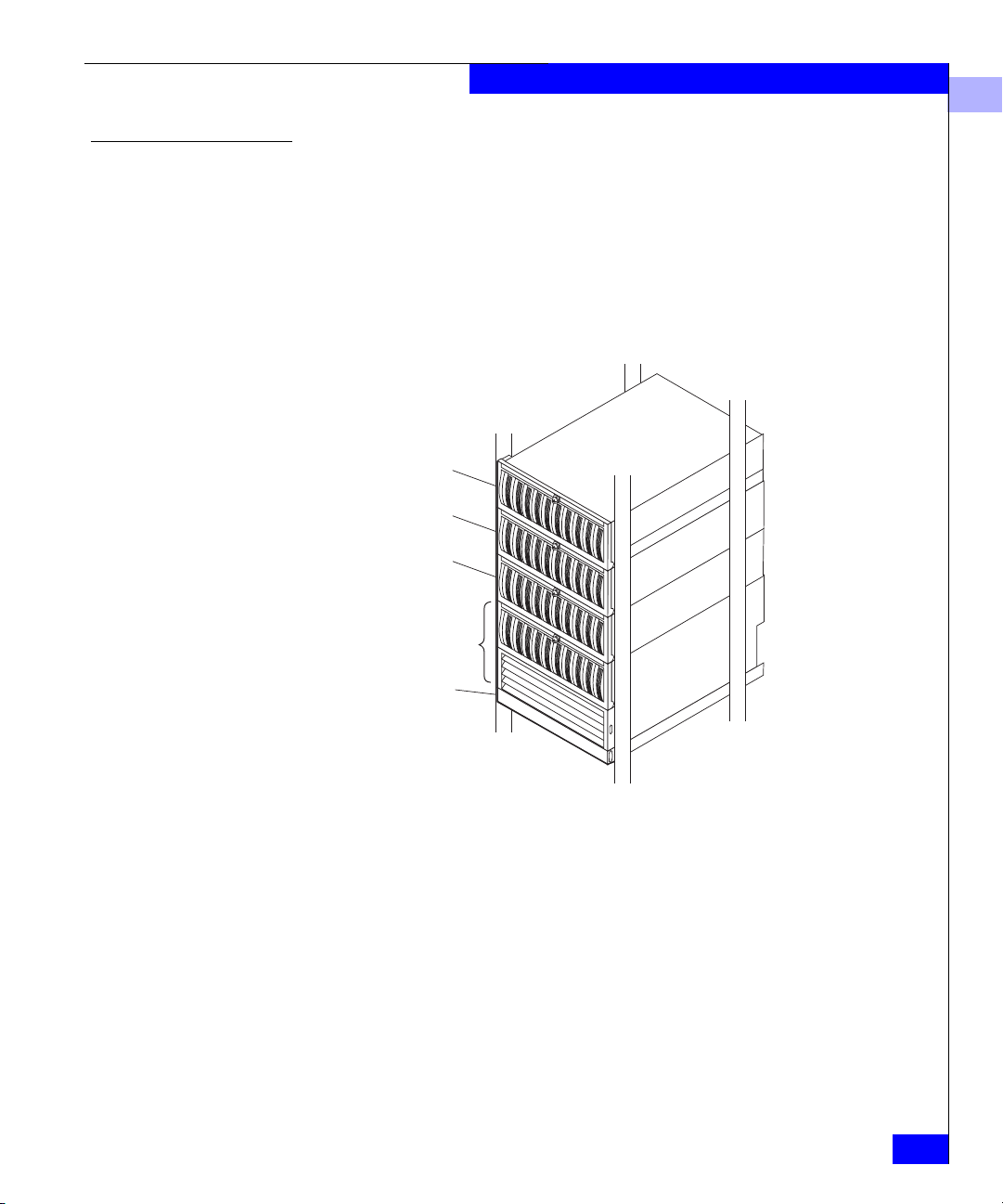

For shared storage, you need a Disk-array Processor Enclosure (DPE) storage system.

A DPE is a 10-slot enclosure with hardware RAID features provided by one or two storage processors (SPs). For shared storage, two SPs are required. In addition to its own disks, a DPE can support up to nine 10-slot Disk Array Enclosures (DAEs) for a total of 100 disks.

[Figure: three DAEs stacked above a DPE, with a standby power supply (SPS).]

Figure 1-12 Storage System with a DPE and Three DAEs


About Unshared Storage

Unshared storage systems are less costly and less complex than shared storage systems. They offer many of the features of shared storage systems; for example, you can use multiple unshared storage systems with multiple servers. However, with multiple servers, unshared storage offers less flexibility and security than shared storage, since any user with write access to a privileged server’s files can enable access to any storage system.

Storage System Hardware for Unshared Storage

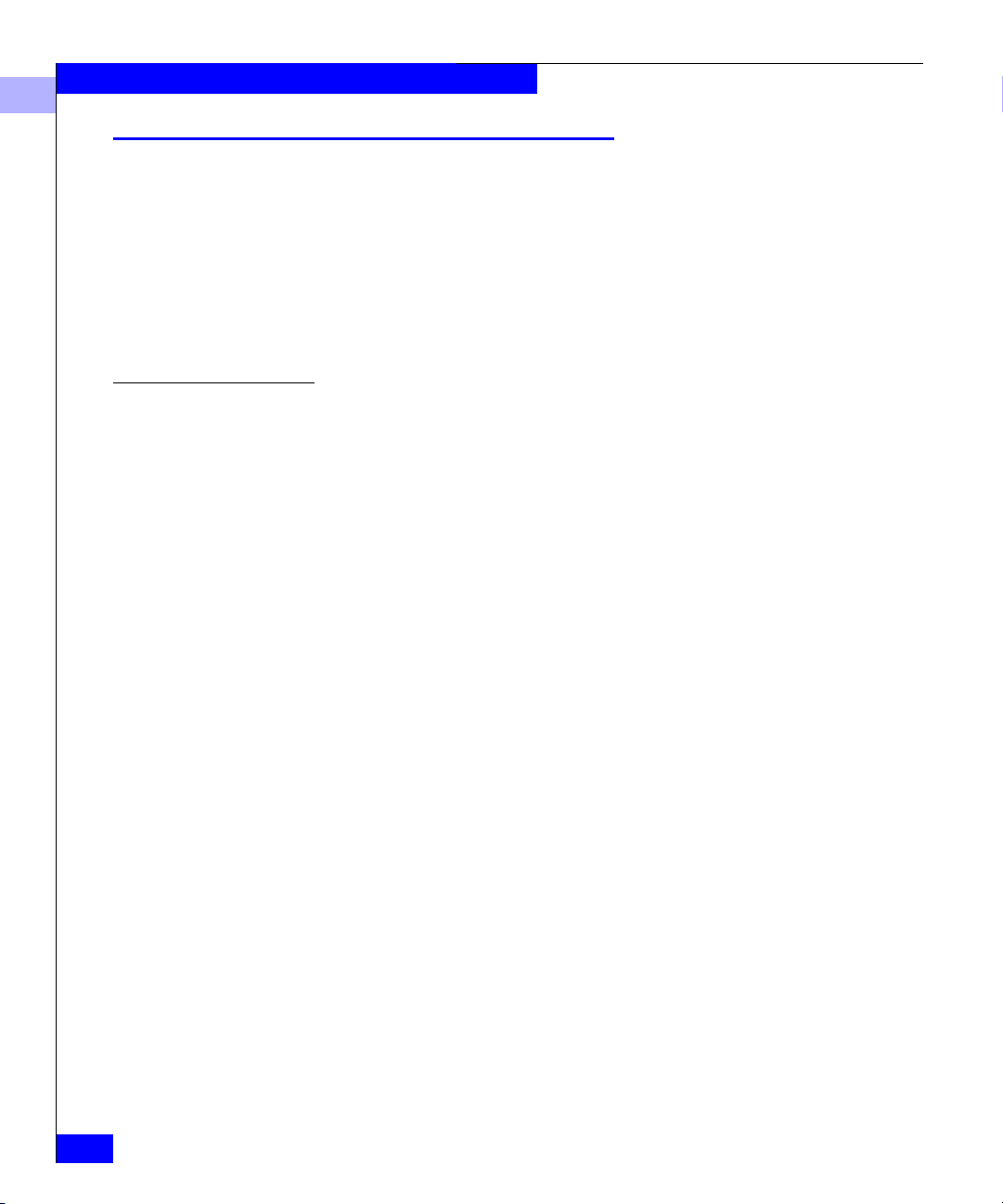

For unshared storage, there are four types of storage system, each using the FC-AL protocol. Each type is available in a rackmount or deskside (office) version.

• Disk-array Processor Enclosure (DPE) storage systems. A DPE is a 10-slot enclosure with hardware RAID features provided by one or two storage processors (SPs). In addition to its own disks, a DPE can support up to 110 additional disks in 10-slot Disk Array Enclosures (DAEs) for a total of 120 disks. This is the same type of storage system used for shared storage, but it has a different SP and different Core Software.

• Intelligent Disk Array Enclosure (iDAE). An iDAE, like a DPE, has SPs and thus all the features of a DPE, but is thinner and has a limit of 30 disks.

• Disk Array Enclosure (DAE). A DAE does not have SPs. A DAE can connect to a DPE or an iDAE, or you can use it without SPs. A DAE used without an SP does not inherently include RAID, but can operate as a RAID device using software running on the server system. Such a DAE is also known as Just a Box of Disks, or JBOD.


[Figure: disk-array processor enclosure (DPE), shown as a rackmount DPE (one enclosure, supports up to 9 DAEs) and as a deskside DPE with DAE; intelligent disk-array enclosure (iDAE), shown in 10-slot deskside, rackmount, and 30-slot deskside versions.]

Figure 1-13 Storage System Hardware for Unshared Storage

What Next?

For information about RAID types and RAID tradeoffs, continue to the next chapter. To plan LUNs and file systems for shared storage, skip to Chapter 3; for unshared storage, skip to Chapter 4. For details on the storage-system hardware, shared and unshared, skip to Chapter 5. For storage-system management utilities, skip to Chapter 6.


2

RAID Types and Tradeoffs

This chapter explains the RAID types you can choose for your storage-system LUNs. If you already know about RAID types and know which ones you want, you can skip this background information and go to Chapter 5. Topics are:

• Introducing RAID ..............................................................................2-2

• RAID Types.........................................................................................2-4

• RAID Benefits and Tradeoffs..........................................................2-12

• Guidelines for RAID Types.............................................................2-17

• Sample Applications for RAID Types ...........................................2-19

This chapter applies primarily to storage systems with storage processors (SPs). For a storage system without SPs (a DAE-only or JBOD system), RAID types are limited by the RAID software you run on the server. The RAID terms and definitions used here conform to generally accepted standards.


Introducing RAID

The storage system uses RAID (redundant array of independent disks) technology. RAID technology groups separate disks into one logical unit (LUN) to improve reliability and/or performance.

The storage system supports five RAID levels and two other disk configurations, the individual unit and the hot spare (global spare). You group the disks into one RAID Group by binding them using a storage-system management utility.

Four of the RAID types use disk striping and two use mirroring.

Disk Striping

Using disk stripes, the storage-system hardware can read from and write to multiple disks simultaneously and independently. By allowing several read/write heads to work on the same task at once, disk striping can enhance performance. The amount of information read from or written to each disk makes up the stripe element size. The stripe size is the stripe element size multiplied by the number of disks in a group. For example, with the default stripe element size of 128 sectors and a five-disk group, the stripe size is 5 × 128 = 640 sectors.

The storage system uses disk striping with most RAID types.

Mirroring

Mirroring maintains a second copy (and, optionally through software, a third copy) of a logical disk image, which provides continuous access if the original image becomes inaccessible. The system and user applications continue running on the good image without interruption. There are two kinds of mirroring: hardware mirroring, in which the SP synchronizes the disk images, and software mirroring, in which the operating system synchronizes the images. Software mirroring consumes server resources, since the operating system must mirror the images, and has no offsetting advantages; we mention it here only for historical completeness.

With a storage system, you can create a hardware mirror by binding disks as a RAID 1 mirrored pair or a RAID 1/0 Group (a mirrored RAID 0 Group); the hardware then mirrors the disks automatically.


RAID Groups and LUNs

Some RAID types let you create multiple LUNs on one RAID Group. You can then allot each LUN to a different user, server, or application. For example, a five-disk RAID 5 Group that uses 36-Gbyte disks offers 144 Gbytes of space, because parity occupies the equivalent of one disk (4 × 36 Gbytes = 144 Gbytes). You could bind three LUNs with, say, 24, 60, and 60 Gbytes of storage capacity for temporary, mail, and customer files.
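The 144-Gbyte figure is four disks' worth of capacity, since RAID 5 parity takes the equivalent of one disk. A minimal sketch of that arithmetic, plus a check that the planned LUN sizes fit; the function names are hypothetical:

```python
def raid5_usable_gb(disks: int, disk_gb: float) -> float:
    """Hypothetical helper: usable RAID 5 space; one disk's worth holds parity."""
    if not 3 <= disks <= 16:
        raise ValueError("a RAID 5 Group has three to sixteen disks")
    return (disks - 1) * disk_gb


def fits(lun_sizes_gb: list[float], usable_gb: float) -> bool:
    """Hypothetical helper: True if the planned LUNs fit in the group."""
    return sum(lun_sizes_gb) <= usable_gb


usable = raid5_usable_gb(5, 36)
print(usable)                      # 144
print(fits([24, 60, 60], usable))  # True
```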

One disadvantage of multiple LUNs on a RAID Group is that I/O to each LUN may affect I/O to the others in the group; that is, if traffic to one LUN is very heavy, I/O performance with other LUNs may degrade. The main advantage of multiple LUNs per RAID Group is the ability to divide the enormous amount of disk space provided by RAID Groups on newer, high-capacity disks.

[Figure: a five-disk RAID Group holding LUN 0 (temp), LUN 1 (mail), and LUN 2 (customers); each LUN occupies a portion of every disk.]

Figure 2-1 Multiple LUNs in a RAID Group


RAID Types

You can choose from the following RAID types: RAID 5, RAID 3, RAID 1, RAID 0, RAID 1/0, individual disk unit, and hot spare.

RAID 5 Group (Individual Access Array)

A RAID 5 Group usually consists of five disks (but can have three to sixteen). A RAID 5 Group uses disk striping. With a RAID 5 Group on a full-fibre storage system, you can create up to 32 RAID 5 LUNs to apportion disk space to different users, servers, and applications.

The storage system writes parity information that lets the group continue operating if a disk fails. When you replace the failed disk, the SP rebuilds the group using the information stored on the working disks. Performance is degraded while the SP rebuilds the group. However, the storage system continues to function and gives users access to all data, including data stored on the failed disk.
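The guide does not describe how parity is computed. Assuming the usual RAID approach, a bytewise XOR across the data elements of a stripe, any one missing element can be recomputed by XOR-ing all the survivors, which is the essence of a rebuild. The sketch uses hypothetical 4-byte elements in place of 128-sector ones:

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks (assumed parity function)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild(surviving: list[bytes]) -> bytes:
    """Recreate the one missing element (data or parity) from all the others."""
    return xor_blocks(surviving)


# One stripe of a five-disk group: four data elements plus parity.
data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd", b"\x0f\x0e\x0d\x0c"]
parity = xor_blocks(data)

# Simulate losing the third disk and rebuilding its element from the rest.
survivors = data[:2] + data[3:] + [parity]
assert rebuild(survivors) == data[2]
```

Because XOR is its own inverse, the same function both creates parity and rebuilds lost data; that symmetry is why a single failed disk in a parity group can be tolerated.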

The following figure shows user and parity data with the default stripe element size of 128 sectors (65,536 bytes) in a five-disk RAID 5 Group. The stripe size comprises all stripe elements. Notice that the disk block addresses in the stripe proceed sequentially from the first disk to the second, third, and fourth, then continue on the first disk in the next stripe, and so on; the parity element moves to a different disk in each stripe.


Blocks stored on each disk (stripe element size of 128 blocks; each row is one stripe):

| Stripe | First disk | Second disk | Third disk | Fourth disk | Fifth disk |
|---|---|---|---|---|---|
| 1 | 0-127 | 128-255 | 256-383 | 384-511 | Parity |
| 2 | 512-639 | 640-767 | 768-895 | Parity | 896-1023 |
| 3 | 1024-1151 | 1152-1279 | Parity | 1280-1407 | 1408-1535 |
| 4 | 1536-1663 | Parity | 1664-1791 | 1792-1919 | 1920-2047 |
| 5 | Parity | 2048-2175 | 2176-2303 | 2304-2431 | 2432-2559 |
| … | … | … | … | … | … |

Figure 2-2 RAID 5 Group

RAID 5 Groups offer excellent read performance and good write performance. Write performance benefits greatly from storage-system caching.
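The placement in Figure 2-2 can be reproduced by a short mapping function, which also illustrates the stripe-size rule from Disk Striping (element size times number of disks). This is inferred from the figure rather than taken from EMC firmware: it assumes 128-block elements, parity starting on the last disk and moving one disk to the left in each stripe, and data filling the remaining disks starting from the first. The function names are hypothetical.

```python
ELEMENT = 128  # default stripe element size, in blocks (sectors)


def stripe_size(element: int, disks: int) -> int:
    """Stripe size = stripe element size x number of disks in the group."""
    return element * disks


def parity_disk(stripe: int, disks: int = 5) -> int:
    """0-based disk holding parity for a stripe: last disk first, then leftward."""
    return (disks - 1) - (stripe % disks)


def raid5_locate(block: int, disks: int = 5, element: int = ELEMENT) -> tuple[int, int]:
    """Map a LUN block number to (0-based disk, 0-based stripe) as in Figure 2-2."""
    data_per_stripe = element * (disks - 1)  # user-data blocks in one stripe
    stripe, offset = divmod(block, data_per_stripe)
    slot = offset // element                 # data element number within the stripe
    p = parity_disk(stripe, disks)
    disk = slot if slot < p else slot + 1    # step over the parity disk
    return disk, stripe


print(stripe_size(ELEMENT, 5))  # 640
print(raid5_locate(896))        # (4, 1): fifth disk, second stripe
print(raid5_locate(2048))       # (1, 4): second disk, fifth stripe
```

Rotating the parity element is what lets RAID 5 spread parity writes across all disks instead of concentrating them on one, which is the key contrast with RAID 3 below.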

RAID 3 Group (Parallel Access Array)

A RAID 3 Group consists of five or more disks. The hardware always reads from or writes to all the disks. A RAID 3 Group uses disk striping. To maintain RAID 3 performance, you can create only one LUN per RAID 3 Group.

The storage system writes parity information that lets the group continue operating if a disk fails. When you replace the failed disk, the SP rebuilds the group using the information stored on the working disks. Performance is degraded while the SP rebuilds the group. However, the storage system continues to function and gives users access to all data, including data stored on the failed disk.


The following figure shows user and parity data with a data block size of 2 Kbytes in a RAID 3 Group. Notice that the byte addresses proceed from the first disk to the second, third, and fourth, then the first, and so on.

Bytes stored on each disk (512 bytes, one sector, per data disk in each stripe; one 2-Kbyte data block per row):

| Stripe | First disk | Second disk | Third disk | Fourth disk | Fifth disk |
|---|---|---|---|---|---|
| 1 | 0-511 | 512-1023 | 1024-1535 | 1536-2047 | Parity |
| 2 | 2048-2559 | 2560-3071 | 3072-3583 | 3584-4095 | Parity |
| 3 | 4096-4607 | 4608-5119 | 5120-5631 | 5632-6143 | Parity |
| 4 | 6144-6655 | 6656-7167 | 7168-7679 | 7680-8191 | Parity |
| 5 | 8192-8703 | 8704-9215 | 9216-9727 | 9728-10239 | Parity |
| … | … | … | … | … | … |

Figure 2-3 RAID 3 Group

RAID 3 differs from RAID 5 in several important ways. First, in a RAID 3 Group the hardware processes disk requests serially, whereas in a RAID 5 Group the hardware can interleave disk requests. Second, with a RAID 3 Group, the parity information is stored on one disk; with a RAID 5 Group, it is distributed across all disks. Finally, with a RAID 3 Group, the I/O occurs in small units (one sector) to each disk. A RAID 3 Group works well for single-task applications that use I/Os of blocks larger than 64 Kbytes.

Each RAID 3 Group requires some dedicated SP memory (6 Mbytes recommended per group). This memory is allocated when you create the group and becomes unavailable for storage-system caching. For top performance, we suggest that you do not use RAID 3 Groups with RAID 5, RAID 1/0, or RAID 0 Groups, since SP processing power and memory are best devoted to the RAID 3 Groups. RAID 1 mirrored pairs and individual units require less SP processing power, and therefore work well with RAID 3 Groups.


For each write to a RAID 3 Group, the storage system

1. Calculates the parity data.

2. Writes the new user and parity data.
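Figure 2-3 and the two write steps above fit in one sketch: 512-byte units rotate across four data disks while all parity lands on the fifth disk, and a full 2-Kbyte write computes parity from the new data alone, with no preliminary reads. XOR parity and the function names are assumptions for illustration.

```python
from functools import reduce

UNIT = 512        # bytes sent to each data disk per stripe (one sector)
DATA_DISKS = 4    # five-disk RAID 3 Group: four data disks plus one parity disk
PARITY_DISK = 4   # 0-based index of the fifth, dedicated parity disk


def raid3_locate(byte_addr: int) -> tuple[int, int]:
    """Hypothetical helper: byte address -> (0-based data disk, 0-based stripe)."""
    unit = byte_addr // UNIT
    stripe, disk = divmod(unit, DATA_DISKS)
    return disk, stripe


def raid3_write_stripe(data_block: bytes) -> list[bytes]:
    """One RAID 3 write: (1) calculate parity, (2) return user and parity units.

    Nothing is read first because the whole stripe is replaced.
    Assumes XOR parity.
    """
    if len(data_block) != UNIT * DATA_DISKS:
        raise ValueError("expects one 2-Kbyte data block")
    units = [data_block[i * UNIT:(i + 1) * UNIT] for i in range(DATA_DISKS)]
    parity = bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*units))
    return units + [parity]  # index PARITY_DISK holds the parity unit


print(raid3_locate(5120))  # (2, 2): third disk, third stripe
```

Because every write covers the whole stripe, there is never a need to read old data or parity, which is why RAID 3 favors large sequential transfers.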


RAID 1 Mirrored Pair

A RAID 1 Group consists of two disks that are mirrored automatically by the storage-system hardware.

RAID 1 hardware mirroring within the storage system is not the same as software mirroring or hardware mirroring for other kinds of disks. Functionally, the difference is that you cannot manually stop mirroring on a RAID 1 mirrored pair and then access one of the images independently. If you want to use one of the disks in such a mirror separately, you must unbind the mirror (losing all data on it), rebind the disk as the type you want, and software format the newly bound LUN.

With a storage system, RAID 1 hardware mirroring has the following advantages:

• automatic operation (you do not have to issue commands to initiate it)

• physical duplication of images

• a rebuild period that you can select, during which the SP recreates the second image after a failure

With a RAID 1 mirrored pair, the storage system writes the same data to both disks, as follows.

[Figure: the first and second disks each hold the same user data blocks 0, 1, 2, 3, 4, and so on.]

Figure 2-4 RAID 1 Mirrored Pair


RAID 0 Group (Nonredundant Array)

A RAID 0 Group consists of three to a maximum of sixteen disks. A RAID 0 Group uses disk striping, in which the hardware writes to or reads from multiple disks simultaneously. In a full-fibre storage system, you can create up to 32 LUNs per RAID Group.

Unlike the other RAID levels, with RAID 0 the hardware does not maintain parity information on any disk; this type of group has no inherent data redundancy. RAID 0 offers enhanced performance through simultaneous I/O to different disks.

If the operating system supports software mirroring, you can use software mirroring with the RAID 0 Group to provide high availability. A desirable alternative to RAID 0 is RAID 1/0.

RAID 1/0 Group (Mirrored RAID 0 Group)

A RAID 1/0 Group consists of four, six, eight, ten, twelve, fourteen, or sixteen disks. These disks make up two mirror images, with each image including two to eight disks. The hardware automatically mirrors the disks. A RAID 1/0 Group uses disk striping. It combines the speed advantage of RAID 0 with the redundancy advantage of mirroring. With a RAID 1/0 Group on a full-fibre storage system, you can create up to 32 LUNs to apportion disk space to different users, servers, and applications.


The following figure shows the distribution of user data with the default stripe element size of 128 sectors (65,536 bytes) in a six-disk RAID 1/0 Group. Notice that the disk block addresses in the stripe proceed sequentially from the first mirrored disks (first and fourth disks) to the second mirrored disks (second and fifth disks), to the third mirrored disks (third and sixth disks), and then back to the first mirrored disks, and so on.
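The distribution just described can be expressed as a mapping, together with the survival rule for RAID 1/0 (the group keeps running while at least one disk of each mirrored pair works). This sketch assumes the six-disk arrangement of Figure 2-5, in which disk i of the primary image is mirrored by disk i + 3; the function names are hypothetical.

```python
ELEMENT = 128  # default stripe element size, in blocks


def raid10_locate(block: int, disks: int = 6, element: int = ELEMENT) -> tuple[int, int, int]:
    """Hypothetical helper: LUN block -> (primary disk, mirror disk, stripe), 0-based.

    Assumes the primary image is disks 0..n-1 and its mirror is disks n..2n-1,
    where n = disks // 2 (for six disks: 0/3, 1/4, 2/5).
    """
    if disks % 2 or not 4 <= disks <= 16:
        raise ValueError("a RAID 1/0 Group has an even number of disks, 4 to 16")
    pairs = disks // 2
    stripe, offset = divmod(block, element * pairs)
    primary = offset // element
    return primary, primary + pairs, stripe


def raid10_survives(failed: set[int], disks: int = 6) -> bool:
    """True if at least one disk in every mirrored pair is still working."""
    pairs = disks // 2
    return all(not (p in failed and p + pairs in failed) for p in range(pairs))


print(raid10_locate(640))          # (2, 5, 1): third and sixth disks, second stripe
print(raid10_survives({0, 4, 2}))  # True: each pair keeps one disk
print(raid10_survives({1, 4}))     # False: both disks of the second pair failed
```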


Blocks stored on each disk (stripe element size of 128 blocks). Each disk of the secondary image holds the same blocks as the corresponding disk of the primary image.

| Stripe | First disk of primary and secondary image | Second disk of primary and secondary image | Third disk of primary and secondary image |
|---|---|---|---|
| 1 | 0-127 | 128-255 | 256-383 |
| 2 | 384-511 | 512-639 | 640-767 |
| 3 | 768-895 | 896-1023 | 1024-1151 |
| 4 | 1152-1279 | 1280-1407 | 1408-1535 |
| 5 | 1536-1663 | 1664-1791 | 1792-1919 |
| … | … | … | … |

Figure 2-5 RAID 1/0 Group (Mirrored RAID 0 Group)

A RAID 1/0 Group can survive the failure of multiple disks, provided that at least one disk in each mirrored pair survives.

Individual Disk Unit

An individual disk unit is a disk bound to be independent of any other disk in the cabinet. An individual unit has no inherent high availability, but you can make it highly available by using software mirroring with another individual unit. You can create one LUN per individual disk unit. If you want to apportion the disk space, you can do so using partitions, file systems, or user directories.

Hot Spare

A hot spare is a dedicated replacement disk on which users cannot store information. A hot spare is global: if any disk in a RAID 5 Group, RAID 3 Group, RAID 1 mirrored pair, or RAID 1/0 Group fails, the SP automatically rebuilds the failed disk’s structure on the hot spare. When the SP finishes rebuilding, the disk group functions as usual, using the hot spare instead of the failed disk. When you replace the failed disk, the SP copies the data from the former hot spare onto the replacement disk.

When the copy is done, the disk group consists of disks in the original slots, and the SP automatically frees the hot spare to serve as a hot spare again. A hot spare is most useful when you need the highest data availability. It eliminates the time and effort needed for someone to notice that a disk has failed, find a suitable replacement disk, and insert the disk.

When you plan to use a hot spare, make sure the disk has the capacity to serve in any RAID Group in the storage-system chassis. A RAID Group cannot use a hot spare that is smaller than a failed disk in the group.

You can have one or more hot spares per storage-system chassis. You can make any disk in the chassis a hot spare, except for a disk that holds Core Software or is part of the write cache vault. That is, a hot spare can be any of the following disks:

• DPE or iDAE system without write caching: disks 3-119

• DPE system with write caching: disks 9-119

• iDAE system with write caching: disks 5-29

• 30-slot SCSI-disk system: disks A1-E1, A2-E2, B3-E3, A4-E4
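The placement rules above (an eligible slot, and enough capacity for any disk the spare might replace) can be checked mechanically. The slot ranges are copied from the list, leaving out the 30-slot SCSI-disk system; the function name and the Gbyte sizes in the example are hypothetical.

```python
# Slots that may hold a hot spare, copied from the list above (inclusive ranges).
ELIGIBLE_SLOTS = {
    ("DPE", False): range(3, 120),   # DPE or iDAE without write caching: disks 3-119
    ("iDAE", False): range(3, 120),
    ("DPE", True): range(9, 120),    # DPE with write caching: disks 9-119
    ("iDAE", True): range(5, 30),    # iDAE with write caching: disks 5-29
}


def spare_problems(system: str, write_cache: bool, slot: int,
                   spare_gb: float, group_disk_gb: list[float]) -> list[str]:
    """Hypothetical helper: reasons a proposed hot spare is unsuitable ([] = OK)."""
    problems = []
    if slot not in ELIGIBLE_SLOTS[(system, write_cache)]:
        problems.append(f"slot {slot} is reserved on this configuration")
    too_small = [gb for gb in group_disk_gb if gb > spare_gb]
    if too_small:
        problems.append(f"spare ({spare_gb} GB) is smaller than a {max(too_small)} GB disk")
    return problems


print(spare_problems("DPE", True, 9, 36, [18, 36]))  # []
print(spare_problems("DPE", True, 5, 18, [18, 36]))
```

Passing the disk sizes of every RAID Group in the chassis, rather than one group, applies the rule that a spare must be able to serve in any RAID Group.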


An example of hot spare usage for a deskside DPE storage system follows.


[Figure: a deskside DPE storage system with disk modules numbered 0 through 19; module 9 is the hot spare.]

1. RAID 5 Group consists of disk modules 0-4; RAID 1 mirrored pair is modules 5 and 6; hot spare is module 9.

2. Disk module 3 fails.

3. RAID 5 Group becomes modules 0, 1, 2, 9, and 4; now no hot spare is available.

4. System operator replaces failed module 3 with a functional module.

5. RAID 5 Group once again is 0-4 and hot spare is 9.

Figure 2-6 How a Hot Spare Works
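The five steps amount to a swap and a swap-back, simulated below with the module numbers from the figure. The function names are hypothetical, and the rebuild and copy-back themselves are reduced to list updates.

```python
def fail_disk(group: list[int], failed: int, spares: list[int]) -> None:
    """Hypothetical helper for steps 2-3: SP rebuilds the failed module onto a spare."""
    if not spares:
        raise RuntimeError("no hot spare available; group runs degraded")
    spare = spares.pop(0)
    group[group.index(failed)] = spare  # the spare stands in at the same position


def replace_disk(group: list[int], original: int, spare: int, spares: list[int]) -> None:
    """Hypothetical helper for steps 4-5: data copied back, spare freed again."""
    group[group.index(spare)] = original
    spares.append(spare)


raid5 = [0, 1, 2, 3, 4]  # step 1: RAID 5 Group on modules 0-4
spares = [9]             # hot spare is module 9

fail_disk(raid5, 3, spares)
print(raid5, spares)     # [0, 1, 2, 9, 4] []
replace_disk(raid5, 3, 9, spares)
print(raid5, spares)     # [0, 1, 2, 3, 4] [9]
```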


RAID Benefits and Tradeoffs

This section reviews RAID types and explains their benefits and tradeoffs. You can create seven types of LUN:

• RAID 5 Group (individual access array)

• RAID 3 Group (parallel access array)

• RAID 1 mirrored pair

• RAID 1/0 Group (mirrored RAID 0 Group); a RAID 0 Group mirrored by the storage-system hardware

• RAID 0 Group (nonredundant individual access array); no inherent high-availability features, but can be software mirrored if the operating system supports mirroring

• Individual unit; no inherent high-availability features, but can be software mirrored if the operating system supports mirroring

• Hot spare; serves only as an automatic replacement for any disk in a RAID type other than 0; does not store data during normal system operations


Plan the disk unit configurations carefully. After a disk has been bound into a LUN, you cannot change the RAID type of that LUN without unbinding it, and this means losing all data on it.

The following table compares the read and write performance, tolerance for disk failure, and relative cost per megabyte (Mbyte) of the RAID types. Figures shown are theoretical maximums.


Table 2-1 Performance, Availability, and Cost of RAID Types (Individual Unit = 1.0)

| Disk configuration | Relative read performance without cache | Relative write performance without cache | Relative cost per Mbyte |
|---|---|---|---|
| RAID 5 Group with five disks | Up to 5 with five disks (for small I/O requests, 2 to 8 Kbytes) | Up to 1.25 with five disks (for small I/O requests, 2 to 8 Kbytes) | 1.25 |
| RAID 3 Group with five disks | Up to 4 (for large I/O requests) | Up to 4 (for large I/O requests) | 1.25 |
| RAID 1 mirrored pair | Up to 2 | Up to 1 | 2 |
| RAID 1/0 Group with 10 disks | Up to 10 | Up to 5 | 2 |
| Individual unit | 1 | 1 | 1 |

Notes: These performance numbers are not based on storage-system caching. With caching, the performance numbers for RAID 5 writes improve significantly. Performance multipliers vary with load on server and storage system.

RAID 5, with individual access, provides high read throughput for small requests (blocks of 2 to 8 Kbytes) by allowing simultaneous reads from each disk in the group. RAID 5 write throughput is limited by the need to perform four I/Os per request: read the old data, read the old parity, write the new data, and write the new parity. However, write caching improves RAID 5 write performance.
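The four I/Os follow from updating parity without reading the rest of the stripe: new parity = old parity XOR old data XOR new data. The sketch assumes XOR parity (standard for RAID 5, but not stated in this guide) and checks that this shortcut matches recomputing parity across the whole stripe; the names are hypothetical.

```python
from functools import reduce


def xor(*blocks: bytes) -> bytes:
    """Bytewise XOR of equal-length blocks (assumed parity function)."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))


def small_write(stripe: list[bytes], parity: bytes, index: int,
                new: bytes) -> tuple[list[bytes], bytes, list[str]]:
    """Hypothetical read-modify-write of one data element.

    Returns (updated stripe, new parity, log of disk I/Os in order).
    """
    ios = []
    old = stripe[index]
    ios.append("read old data")
    old_parity = parity
    ios.append("read old parity")
    new_parity = xor(old_parity, old, new)  # no other disks need to be read
    updated = list(stripe)
    updated[index] = new
    ios.append("write new data")
    ios.append("write new parity")
    return updated, new_parity, ios


stripe = [b"\x01\x01", b"\x02\x02", b"\x04\x04", b"\x08\x08"]
parity = xor(*stripe)
new_stripe, new_parity, ios = small_write(stripe, parity, 2, b"\xff\x00")
assert new_parity == xor(*new_stripe)  # shortcut matches a full recompute
print(len(ios))  # 4
```

Write caching helps because the cache can absorb the request immediately and perform these four disk I/Os later.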

RAID 3, with parallel access, provides high throughput for sequential, large block-size requests (blocks of more than 64 Kbytes). With RAID 3, the system accesses all five disks in each request but need not read data and parity before writing, which is advantageous for large requests but not for small ones. RAID 3 employs SP memory without caching, which means you do not need the second SP and battery backup unit (BBU) that caching requires.

Generally, the performance of a RAID 3 Group increases as the size of the I/O request increases. Read performance increases rapidly with read requests up to 1 Mbyte. Write performance increases greatly for sequential write requests that are greater than 256 Kbytes. For applications issuing very large I/O requests, a RAID 3 LUN provides significantly better write performance than a RAID 5 LUN.


We do not recommend using RAID 3 in the same storage-system chassis with RAID 5 or RAID 1/0.

A RAID 1 mirrored pair has its disks locked in synchronization, but the SP can read data from whichever disk has its read/write heads closer to the requested data. Therefore, RAID 1 read performance can be twice that of an individual disk, while write performance remains the same as that of an individual disk.
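A toy model of this behavior: every write goes to both disks, and each read is served by the disk whose heads are nearer the requested block. Tracking head position as a block number is a deliberate simplification, and the `MirroredPair` class is hypothetical, not the SP's actual scheduler.

```python
class MirroredPair:
    """Hypothetical RAID 1 model: two disks, head positions tracked as block numbers."""

    def __init__(self) -> None:
        self.heads = [0, 0]  # current head position of each disk
        # Both disks hold identical data, so one dictionary stands for both images.
        self.data: dict[int, bytes] = {}

    def write(self, block: int, value: bytes) -> None:
        # Both disks are written, so write speed equals a single disk's.
        self.data[block] = value
        self.heads = [block, block]

    def read(self, block: int) -> tuple[int, bytes]:
        # Serve the read from the disk whose heads are closer to the block.
        disk = min((0, 1), key=lambda d: abs(self.heads[d] - block))
        self.heads[disk] = block
        return disk, self.data[block]


pair = MirroredPair()
pair.write(100, b"a")
pair.write(900, b"b")
print(pair.read(100))  # (0, b'a')
print(pair.read(900))  # (1, b'b')
```

After a few reads, each disk tends to settle near a different region of the data, which is how the pair approaches twice the read rate of one disk.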

A RAID 0 Group (nonredundant individual access array) or RAID 1/0 Group (mirrored RAID 0 Group) can have as many I/O operations occurring simultaneously as there are disks in the group. Since RAID 1/0 locks pairs of RAID 0 disks the same way as RAID 1 does, the performance of RAID 1/0 equals the number of disk pairs times the RAID 1 performance number. If you want high throughput for a specific LUN, use a RAID 1/0 or RAID 0 Group. A RAID 1/0 Group requires at least six disks; a RAID 0 Group, at least three disks.

An individual unit needs only one I/O operation per read or write operation.
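The counting rules in the preceding paragraph reproduce the Table 2-1 multipliers for RAID 1 and RAID 1/0. A sketch of those rules as theoretical, uncached maximums (function names hypothetical):

```python
def raid1_perf() -> tuple[float, float]:
    """(read, write) relative to an individual unit: read either disk, write both."""
    return 2.0, 1.0


def raid10_perf(disks: int) -> tuple[float, float]:
    """RAID 1/0 performance = number of disk pairs x RAID 1 performance."""
    pairs = disks // 2
    read, write = raid1_perf()
    return pairs * read, pairs * write


def raid0_perf(disks: int) -> tuple[float, float]:
    """Up to one simultaneous I/O per disk, for reads and writes alike."""
    return float(disks), float(disks)


print(raid10_perf(10))  # (10.0, 5.0), matching the RAID 1/0 row of Table 2-1
```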

RAID types 5, 1, 1/0, and 0 allow multiple LUNs per RAID Group. If you create multiple LUNs on a RAID Group, the LUNs share the RAID Group disks, and the I/O demands of each LUN affect the I/O service time to the other LUNs. For best performance, you may want to use one LUN per RAID Group.

Storage Flexibility

Certain RAID Group types (RAID 5, RAID 1, RAID 1/0, and RAID 0) let you create up to 32 LUNs in each group. This adds flexibility, particularly with large disks, since it lets you apportion LUNs of various sizes to different servers, applications, and users. Conversely, with RAID 3, there can be only one LUN per RAID Group, and the group must include five or nine disks, a sizable block of storage to devote to one server, application, or user. However, the nature of RAID 3 makes it ideal for that single-threaded type of application.

Data Availability and Disk Space Usage

If data availability is critical and you cannot afford to wait hours to replace a disk, rebind it, make it accessible to the operating system, and load its information from backup, then use a redundant RAID Group: RAID 5, RAID 3, RAID 1 mirrored pair, or RAID 1/0. Alternatively, bind a RAID 0 Group or individual disk unit that you will later mirror with software mirroring. If data availability is not critical, or disk space usage is critical, bind an individual unit or RAID 0 Group without software mirroring.

A RAID 1 mirrored pair or RAID 1/0 Group provides very high data availability. They are more expensive than RAID 5 or RAID 3 Groups, since only 50 percent of the total disk capacity is available for user data, as shown on page 2-13.

A RAID 5 or RAID 3 Group provides high data availability, but requires more disks than a mirrored pair. In a RAID 5 or RAID 3 Group of five disks, 80 percent of the disk space is available for user data. So RAID 5 and RAID 3 Groups use disk space much more efficiently than a mirrored pair. A RAID 5 or RAID 3 Group is usually more suitable than a RAID 1 mirrored pair for applications where high data availability, good performance, and efficient disk space usage are all of relatively equal importance.
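The percentages above, and the relative cost per Mbyte column of Table 2-1, both follow from the fraction of raw capacity left for user data. A minimal sketch of that arithmetic, with hypothetical function names:

```python
def user_fraction(raid: str, disks: int) -> float:
    """Hypothetical helper: fraction of raw disk capacity available for user data."""
    if raid in ("RAID 5", "RAID 3"):
        return (disks - 1) / disks  # one disk's worth of parity
    if raid in ("RAID 1", "RAID 1/0"):
        return 0.5                  # every block is stored twice
    if raid in ("RAID 0", "individual"):
        return 1.0                  # no redundancy
    raise ValueError(f"unknown RAID type: {raid}")


def relative_cost_per_mbyte(raid: str, disks: int) -> float:
    """Cost per usable Mbyte relative to an individual unit (= 1.0)."""
    return 1 / user_fraction(raid, disks)


for raid, n in [("RAID 5", 5), ("RAID 3", 5), ("RAID 1", 2), ("RAID 1/0", 10), ("individual", 1)]:
    print(f"{raid:10} {user_fraction(raid, n):.0%} user data, "
          f"cost {relative_cost_per_mbyte(raid, n):.2f}")
```

The same rule shows why larger RAID 5 Groups are cheaper per Mbyte: the single parity disk's share shrinks as disks are added.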


| Configuration | Disks shown | Contents | Space usage |
|---|---|---|---|
| RAID 5 Group | 5 | User and parity data on every disk | 80% user data, 20% parity data |
| RAID 3 Group | 5 | User data on the first four disks, parity data on the fifth | 80% user data, 20% parity data |
| Disk mirror (RAID 1 mirrored pair) | 2 | User data on both disks | 50% user data, 50% redundant data |
| RAID 1/0 Group | 6 | User data on every disk | 50% user data, 50% redundant data |
| RAID 0 Group (nonredundant array) | 3 | User data on every disk | 100% user data |
| Individual disk unit | 1 | User data | 100% user data |
| Hot spare | 1 | Reserved | No user data |

Figure 2-7 Disk Space Usage in the RAID Configurations

A RAID 0 Group (nonredundant individual access array) provides all its disk space for user files, but does not provide any high-availability features.

A RAID 1/0 Group provides the best combination of performance and availability, at the highest cost per Mbyte of disk space.

An individual unit, like a RAID 0 Group, provides no high-availability features. All its disk space is available for user data, as shown in the figure above.


Guidelines for RAID Types

To decide when to use a RAID 5 Group, RAID 3 Group, mirror (that is, a RAID 1 mirrored pair or RAID 1/0 Group), RAID 0 Group, individual disk unit, or hot spare, you need to weigh these factors:

• Importance of data availability

• Importance of performance

• Amount of data stored

• Cost of disk space

The following guidelines will help you decide on RAID types.

Use a RAID 5 Group (individual access array) for applications where

• Data availability is very important

• Large volumes of data will be stored

• Multitask applications use I/O transfers of different sizes

• Good read and moderate write performance are important (write caching can improve RAID 5 write performance)

• You want the flexibility of multiple LUNs per RAID Group

Use a RAID 3 Group (parallel access array) for applications where

• Data availability is very important

• Large volumes of data will be stored

• A single-task application uses large I/O transfers (more than 64 Kbytes). The operating system must allow transfers aligned to start at disk addresses that are multiples of 2 Kbytes from the start of the LUN.
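A quick way to screen an application's I/O pattern against this guideline is to test each transfer's size and starting offset. The thresholds (more than 64 Kbytes, start on a 2-Kbyte boundary) come from the guideline; the helper functions are hypothetical.

```python
KB = 1024


def suits_raid3(offset_bytes: int, length_bytes: int) -> bool:
    """Hypothetical check: transfer > 64 Kbytes and starts on a 2-Kbyte boundary."""
    return length_bytes > 64 * KB and offset_bytes % (2 * KB) == 0


def raid3_fraction(ios: list[tuple[int, int]]) -> float:
    """Share of (offset, length) transfers that meet the RAID 3 guideline."""
    if not ios:
        return 0.0
    return sum(suits_raid3(o, n) for o, n in ios) / len(ios)


print(suits_raid3(0, 256 * KB))       # True
print(suits_raid3(1 * KB, 256 * KB))  # False: not 2-Kbyte aligned
print(suits_raid3(4 * KB, 8 * KB))    # False: too small
```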

Use a RAID 1 mirrored pair for applications where

• Data availability is very important

• Speed of write access is important and write activity is heavy

Use a RAID 1/0 Group (mirrored nonredundant array) for applications where

• Data availability is critically important

• Overall performance is very important


Use a RAID 0 Group (nonredundant individual access array) for applications where

• High availability is not important

• Overall performance is very important

Use an individual unit for applications where

• High availability is not important

• Speed of write access is somewhat important

Use a hot spare where

• In any RAID 5, RAID 3, RAID 1/0, or RAID 1 Group, high availability is so important that you want to regain data redundancy quickly without human intervention if any disk in the group fails

• Minimizing the degraded performance caused by disk failure in a RAID 5 or RAID 3 Group is important


Sample Applications for RAID Types

This section describes some types of applications in which you would want to use a RAID 5 Group, RAID 3 Group, RAID 1 mirrored pair, RAID 0 Group (nonredundant array), RAID 1/0 Group, or individual unit.

RAID 5 Group (individual access array): Useful as a database repository or a database server that uses a normal or low percentage of write operations (writes are 33 percent or less of all I/O operations). Use a RAID 5 Group where multitask applications perform I/O transfers of different sizes. Write caching can significantly enhance the write performance of a RAID 5 Group.

For example, a RAID 5 Group is suitable for multitasking

applications that require a large history database with a high read

rate, such as a database of legal cases, medical records, or census

information. A RAID 5 Group also works well with transaction

processing applications, such as an airline reservations system, where

users typically read the information about several available flights

before making a reservation, which requires a write operation. You

could also use a RAID 5 Group in a retail environment, such as a

supermarket, to hold the price information accessed by the

point-of-sale terminals. Even though the price information may be

updated daily, requiring many write operations, it is read many more

times during the day.
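The RAID 5 threshold (writes are 33 percent or less of all I/O operations) is simple arithmetic on operation counts. This minimal sketch assumes you already have read and write counts for the workload from some monitoring source; collecting those counts is outside the scope of this guide. `fits_raid5_write_mix` is a hypothetical helper.

```python
def fits_raid5_write_mix(reads: int, writes: int, max_write_percent: int = 33) -> bool:
    """Return True if writes are at most max_write_percent of all I/O operations."""
    if reads < 0 or writes < 0:
        raise ValueError("operation counts must be non-negative")
    total = reads + writes
    if total == 0:
        raise ValueError("no I/O operations counted")
    # Integer comparison avoids floating-point rounding exactly at the boundary.
    return writes * 100 <= max_write_percent * total


if __name__ == "__main__":
    print(fits_raid5_write_mix(700, 300))  # 30 percent writes
    print(fits_raid5_write_mix(600, 400))  # 40 percent writes
```

With 30 percent writes the call returns `True`. With 40 percent writes it returns `False`, which suggests the workload may be better served by write caching or another RAID type.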


RAID 3 Group — A RAID 3 Group (parallel access array) works well

with a single-task application that uses large I/O transfers (more than

64 Kbytes), aligned to start at a disk address that is a multiple of 2

Kbytes from the beginning of the logical disk. RAID 3 Groups can use

SP memory to great advantage without the second SP and battery

backup unit required for storage-system caching.

You might use a RAID 3 Group for a single-task application that does

large I/O transfers, like a weather tracking system, geologic charting

application, medical imaging system, or video storage application.

RAID 1 mirrored pair — A RAID 1 mirrored pair is useful for

logging or record-keeping applications because it requires fewer

disks than a RAID 0 Group (nonredundant array) and provides high

availability and fast write access. Or you could use it to store daily

updates to a database that resides on a RAID 5 Group, and then,

during off-peak hours, copy the updates to the database on the

RAID 5 Group.


RAID 0 Group (nonredundant individual access array) — Use a

RAID 0 Group where the best overall performance is important. In

terms of high availability, a RAID 0 Group is less available than an

individual unit. A RAID 0 Group (like a RAID 5 Group) requires a

minimum of three disks. A RAID 0 Group serves well for an

application that uses short-term data to which users need quick

access.
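A RAID 0 Group is less available than an individual unit because the loss of any one disk makes the data in the whole group unavailable. To illustrate, assume that disks fail independently and that each disk is available with probability p, where 0 < p < 1:

```latex
A_{\text{unit}} = p, \qquad A_{\text{RAID 0}}(n) = p^{n} < p \quad \text{for } n \ge 2
```

With the three-disk minimum and p = 0.99, for instance, a RAID 0 Group is available with probability 0.99^3 ≈ 0.970. An individual unit is available with probability 0.99.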

RAID 1/0 Group (mirrored RAID 0 Group) — A RAID 1/0 Group

provides the best balance of performance and availability. You can

use it very effectively for any of the RAID 5 applications. A RAID 1/0

Group requires a minimum of four disks.
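The minimum disk counts can be combined in a short table with the share of raw capacity that each type uses for redundancy. The Python sketch below assumes that all disks in a group are the same size. Several values come from this chapter: the three-disk minimums for RAID 0 and RAID 5, the four-disk minimum for RAID 1/0, and the two-disk RAID 1 pair. Two values are assumptions for illustration only: the three-disk RAID 3 minimum and the lack of any upper limit. The capacity formulas are the standard ones for each RAID level. `usable_capacity_gb` is a hypothetical helper.

```python
from typing import Callable, Dict, NamedTuple, Optional


class RaidRule(NamedTuple):
    min_disks: int
    max_disks: Optional[int]          # None: no upper limit is checked in this sketch
    even_only: bool                   # RAID 1/0 pairs disks, so the count must be even
    data_disks: Callable[[int], int]  # disks' worth of usable capacity for n disks


RULES: Dict[str, RaidRule] = {
    "RAID 5": RaidRule(3, None, False, lambda n: n - 1),    # one disk's worth holds parity
    "RAID 3": RaidRule(3, None, False, lambda n: n - 1),    # minimum here is an assumption
    "RAID 1": RaidRule(2, 2, False, lambda n: 1),           # a mirrored pair
    "RAID 1/0": RaidRule(4, None, True, lambda n: n // 2),  # half the disks are mirrors
    "RAID 0": RaidRule(3, None, False, lambda n: n),        # no redundancy
    "individual unit": RaidRule(1, 1, False, lambda n: 1),
}


def usable_capacity_gb(raid_type: str, disks: int, disk_gb: float) -> float:
    """Usable capacity of one group, assuming equal-size disks."""
    rule = RULES[raid_type]
    if disks < rule.min_disks:
        raise ValueError(f"{raid_type} needs at least {rule.min_disks} disks")
    if rule.max_disks is not None and disks > rule.max_disks:
        raise ValueError(f"{raid_type} allows at most {rule.max_disks} disks")
    if rule.even_only and disks % 2:
        raise ValueError(f"{raid_type} needs an even number of disks")
    return rule.data_disks(disks) * disk_gb
```

With hypothetical 18-Gbyte disks, a six-disk RAID 5 Group yields `usable_capacity_gb("RAID 5", 6, 18.0) == 90.0`, and a four-disk RAID 1/0 Group yields 36.0. The RAID 1/0 Group gives up more capacity in exchange for its performance and availability.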

Individual unit — An individual unit is useful for print spooling,

user file exchange areas, or other such applications, where high

availability is not important or where the information stored is easily

restorable from backup.

The performance of an individual unit is slightly lower than that of a standard disk that is not in a storage system. The slight degradation results from SP overhead.

Hot spare — A hot spare provides no data storage but enhances the

availability of each RAID 5, RAID 3, RAID 1, and RAID 1/0 Group in

a storage system. Use a hot spare where you must regain high

availability quickly without human intervention if any disk in such a

RAID Group fails. A hot spare also minimizes the period of degraded

performance after a RAID 5 or RAID 3 disk fails.


What Next?

This chapter explained RAID Group types and tradeoffs. To plan LUNs and file systems for shared storage, continue to Chapter 3; for unshared storage, skip to Chapter 4. For details on storage-system hardware, both shared and unshared, skip to Chapter 5. For storage-system management utilities, skip to Chapter 6.


3

Planning File Systems and LUNs with Shared Switched Storage

This chapter shows a sample RAID, LUN, and Storage Group

configuration with shared storage, and then provides worksheets for

planning your own shared storage installation. Topics are

• Dual Paths to LUNs

• Sample Shared Switched Installation

• Planning Applications, LUNs, and Storage Groups


Dual Paths to LUNs

A shared storage system includes two or more servers, one or two

Fibre Channel switches, and one or more storage systems, each with

two SPs and Access Logix software.

With shared storage, there are two paths to each LUN in the storage

system. The storage-system software, using optional software called

Application Transparent Failover (ATF), can automatically switch to

the other path if a device (such as a host-bus adapter or cable) fails.

With unshared storage, if the server has two adapters and the storage

system has two SPs, ATF software is available as an option. With two

adapters and two SPs, ATF can perform the same function as with

shared systems: automatically switch to the other path if a device

(such as a host-bus adapter or cable) fails.
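Path switching can be pictured as a LUN with two paths and a pointer to the path in use. The Python sketch below is a conceptual model only. It does not reproduce how ATF actually detects device failures or what the storage system does internally when the path changes. The class names and path labels are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Path:
    name: str            # hypothetical label, e.g. "HBA 0 -> SP A"
    healthy: bool = True


@dataclass
class DualPathLun:
    paths: List[Path]
    active: int = 0      # index of the path currently in use

    def current_path(self) -> Path:
        """Return the path in use, switching to a healthy path if the active one failed."""
        if not self.paths[self.active].healthy:
            for i, path in enumerate(self.paths):
                if path.healthy:
                    self.active = i
                    break
            else:
                # Neither path works: the LUN is unreachable from this server.
                raise RuntimeError("no healthy path to LUN")
        return self.paths[self.active]


if __name__ == "__main__":
    lun = DualPathLun([Path("HBA 0 -> SP A"), Path("HBA 1 -> SP B")])
    print(lun.current_path().name)   # HBA 0 -> SP A
    lun.paths[0].healthy = False     # simulate a failed adapter or cable
    print(lun.current_path().name)   # HBA 1 -> SP B
```

The model shows why the feature requires two adapters and two SPs. With only one path, no healthy alternative exists to switch to.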


Sample Shared Switched Installation

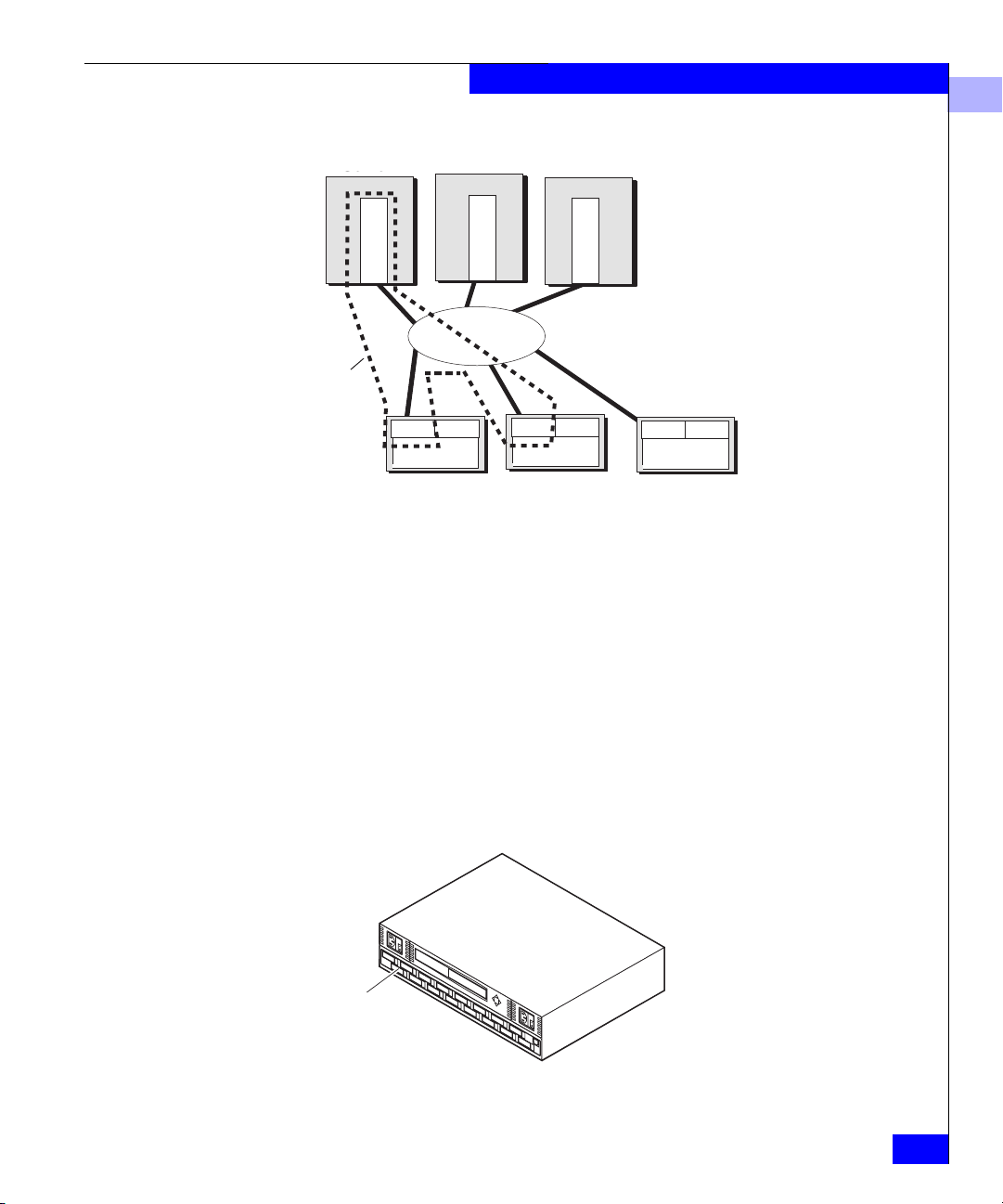

The following figure shows a sample shared switched

(high-availability) storage system connected to three servers: two

servers in a cluster and one server running a database management

program.

[Figure 3-1 diagram: a highly available cluster (File Server and Mail Server, each running operating system A) and a Database Server (operating system B). Each server has two adapters connected through two switch fabrics (Path 1 and Path 2) to SP A and SP B. The diagram also shows the Cluster, Private storage, and Database Server Storage Groups, two hot spares, and disk IDs 0_0-0_9 through 4_0-4_9.]

Figure 3-1 Sample Shared Switched High Availability installation


The storage-system disk IDs and server Storage Group LUNs are as

follows.

Clustered System LUNs

File Server LUNs (FS) - SP B

Disk IDs      RAID type, storage type
4_0-4_4       RAID 5, Files A
4_5-4_9       RAID 5, Files B

Mail Server LUNs (MS) - SP A

Disk IDs      RAID type, storage type
2_0-2_4       RAID 5, ISP A mail
2_5-2_9       RAID 5, ISP B mail
3_0-3_4       RAID 5, Users
3_5-3_9       RAID 5, Specs

6_0, 6_1 – Hot spare (automatically replaces a failed disk in any server’s LUN)

Database Server LUNs (DS) - SP A

Disk IDs      RAID type, storage type
0_0, 0_1      RAID 1, Log file for database Dbase1
0_4-0_9       RAID 5 (6 disks), Dbase1
1_0-1_4       RAID 5, Users
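Each disk should be assigned to only one RAID Group or serve as a hot spare. A worksheet like this one can therefore be checked by expanding its disk-ID ranges. This minimal Python sketch assumes that disk IDs are written as enclosure_slot and that a range such as 0_4-0_9 stays within one enclosure. `expand_disk_ids` and `find_overlaps` are hypothetical helpers. The sample includes only the rows listed above.

```python
from collections import Counter
from typing import Dict, List


def expand_disk_ids(spec: str) -> List[str]:
    """Expand '0_4-0_9' or '0_0, 0_1' into individual enclosure_slot disk IDs."""
    ids: List[str] = []
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = part.split("-")
            enc_a, slot_a = (int(x) for x in start.split("_"))
            enc_b, slot_b = (int(x) for x in end.split("_"))
            if enc_a != enc_b or slot_b < slot_a:
                raise ValueError(f"unsupported range: {part}")
            ids.extend(f"{enc_a}_{slot}" for slot in range(slot_a, slot_b + 1))
        else:
            ids.append(part)
    return ids


# Rows from the sample worksheet above (group label -> disk IDs).
SAMPLE: Dict[str, str] = {
    "FS Files A": "4_0-4_4",
    "FS Files B": "4_5-4_9",
    "MS ISP A mail": "2_0-2_4",
    "MS ISP B mail": "2_5-2_9",
    "MS Users": "3_0-3_4",
    "MS Specs": "3_5-3_9",
    "DS Dbase1 log": "0_0, 0_1",
    "DS Dbase1": "0_4-0_9",
    "DS Users": "1_0-1_4",
}


def find_overlaps(assignments: Dict[str, str]) -> List[str]:
    """Return disk IDs that appear in more than one group, sorted."""
    counts = Counter(d for spec in assignments.values() for d in expand_disk_ids(spec))
    return sorted(d for d, n in counts.items() if n > 1)


if __name__ == "__main__":
    print(len(expand_disk_ids(SAMPLE["DS Dbase1"])))  # 6, matching "(6 disks)"
    print(find_overlaps(SAMPLE))                      # [] : no disk is used twice
```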