Dell EMC PowerVault ME4 Series Storage System

Deployment Guide

Notes, cautions, and warnings

NOTE: A NOTE indicates important information that helps you make better use of your product.

CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

© 2018 – 2019 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners.

2019 - 09

Rev. A04

Contents

1 Before you begin
  Unpack the enclosure
  Safety guidelines
    Safe handling
    Safe operation
    Electrical safety
    Rack system safety precautions
  Installation checklist
  Planning for installation
  Preparing for installation
    Preparing the site and host server
    Required tools
    Requirements for rackmount installation
  Disk drive module
    Drive carrier module in 2U chassis
    Drive status indicators
    Blank drive carrier modules
    DDIC in a 5U enclosure
  Populating drawers with DDICs
2 Mount the enclosures in the rack
  Rackmount rail kit
  Install the 2U enclosure
    Install the 2U enclosure front bezel
  Install the 5U84 enclosure
  Connect optional expansion enclosures
    Cable requirements for expansion enclosures
3 Connect to the management network
4 Cable host servers to the storage system
  Cabling considerations
  Connecting the enclosure to hosts
    CNC technology
    Fibre Channel protocol
    iSCSI protocol
    SAS protocol
  Host connection
    16 Gb Fibre Channel host connection
    iSCSI host connection
    12 Gb HD mini-SAS host connection
    10Gbase-T host connection
    Connecting direct attach configurations
    Single-controller module configurations
    Dual-controller module configurations
5 Connect power cables and power on the storage system
  Power cable connection
6 Perform system and storage setup
  Record storage system information
  Using guided setup
    Web browser requirements and setup
    Access the ME Storage Manager
    Update firmware
    Use guided setup in the ME Storage Manager Welcome panel
7 Perform host setup
  Host system requirements
    About multipath configuration
    Attach host servers
  Windows hosts
    Fibre Channel host server configuration for Windows Server
    iSCSI host server configuration for Windows Server
    SAS host server configuration for Windows Server
  Linux hosts
    Fibre Channel host server configuration for Linux
    iSCSI host server configuration for Linux
    SAS host server configuration for Linux
  VMware ESXi hosts
    Fibre Channel host server configuration for VMware ESXi
    iSCSI host server configuration for VMware ESXi
    SAS host server configuration for VMware ESXi
    Enable Multipathing on iSCSI volumes
  Citrix XenServer hosts
8 Troubleshooting and problem solving
  Locate the service tag
  Operators (Ops) panel LEDs
    2U enclosure Ops panel
    5U enclosure Ops panel
  Initial start-up problems
    2U enclosure LEDs
    5U enclosure LEDs
    Module LEDs
    Troubleshooting 2U enclosures
    Troubleshooting 5U enclosures
    Fault isolation methodology
    Options available for performing basic steps
    Performing basic steps
    If the enclosure does not initialize
    Correcting enclosure IDs
    Host I/O
    Dealing with hardware faults
A Cabling for replication
  Connecting two storage systems to replicate volumes
  Host ports and replication
  Example cabling for replication
    Single-controller module configuration for replication
    Dual-controller module configuration for replication
  Isolating replication faults
    Diagnostic steps for replication setup
B SFP+ transceiver for FC/iSCSI ports
C System Information Worksheet
D Setting network port IP addresses using the CLI port and serial cable
  Mini-USB Device Connection
    Microsoft Windows drivers
    Linux drivers

1 Before you begin

Unpack the enclosure

Examine the packaging for crushes, cuts, water damage, or any other evidence of mishandling during transit. If you suspect damage, photograph the package before opening it, for possible future reference. Retain the original packaging materials for use with returns.

• Unpack the 2U storage system and identify the items in your shipment.

NOTE: The cables that are used with the enclosure are not shown in Figure 1 (Unpacking the 2U12 and 2U24 enclosures). The rail kit and accessories box is located below the 2U enclosure shipping box lid.

Figure 1. Unpacking the 2U12 and 2U24 enclosures

1. Storage system enclosure
2. Rackmount left rail (2U)
3. Rackmount right rail (2U)
4. Documentation
5. Enclosure front-panel bezel option
6. Rack mount ears

• 2U enclosures are shipped with the controller modules or input/output modules (IOMs) installed. Blank drive carrier modules must be installed in the unused drive slots.

• For enclosures configured with CNC controller modules, locate the SFP+ transceivers included with the shipment. See SFP+ transceiver for FC/iSCSI ports.

• Unpack the 5U84 storage system and identify the items in your shipment.

NOTE: The cables that are used with the enclosure are not shown in Figure 2 (Unpacking the 5U84 enclosure). The rail kit and accessories box is located below the 5U84 enclosure shipping box lid.


Figure 2. Unpacking the 5U84 enclosure

1. Storage system enclosure
2. DDICs (Disk Drive in Carriers)
3. Documentation
4. Rackmount left rail (5U84)
5. Rackmount right rail (5U84)
6. Drawers

• DDICs ship in a separate container and must be installed into the enclosure drawers during product installation. For rackmount installations, DDICs are installed after the enclosure is mounted in the rack. See Populating drawers with DDICs.

• For enclosures configured with CNC controller modules, locate the SFP+ transceivers included with the shipment. See SFP+ transceiver for FC/iSCSI ports.

CAUTION:
• A 5U enclosure does not ship with DDICs installed, but the rear panel controller modules or IOMs are installed. This partially populated enclosure weighs approximately 64 kg (142 lb). You need a minimum of two people to remove the enclosure from the box.
• When lifting the enclosure, verify that the straps are securely wrapped and buckled and position one person at each side of the enclosure. Then, grip the straps securely by the loops and lift the enclosure out of the box by using proper lifting techniques. Place the enclosure in an electrostatically protected area.

Safety guidelines

Always follow these safety guidelines to avoid injury and damage to ME4 Series components.

If you use this equipment in a manner that is not specified by Dell EMC, the protection that is provided by the equipment could be impaired. For your safety, observe the rules that are described in the following sections:

NOTE: See the Dell EMC PowerVault ME4 Series Storage System Getting Started Guide for product safety and regulatory information. Warranty information is included as a separate document.

Safe handling

Dell EMC recommends that only individuals with rack-mounting experience install an enclosure into a rack.

CAUTION: Use this equipment in a manner specified by Dell EMC. Failure to do so may cancel the protection that is provided by the equipment.

• Unplug the enclosure before you move it or if you think that it has become damaged in any way.
• A safe lifting height is 20U.
• Always remove the power cooling modules (PCMs) to minimize weight before you move the enclosure.
• Do not lift the enclosures by the handles on the PCMs; they are not designed to take the weight.

CAUTION: Do not try to lift the enclosure by yourself:

• Fully configured 2U12 enclosures can weigh up to 32 kg (71 lb)

• Fully configured 2U24 enclosures can weigh up to 30 kg (66 lb)

• Fully configured 5U84 enclosures can weigh up to 135 kg (298 lb). An unpopulated enclosure weighs 46 kg (101 lb).

• Use a minimum of two people to lift the 5U84 enclosure from the shipping box and install it in the rack.

Before lifting the enclosure:

• Avoid lifting the enclosure using the handles on any of the CRUs because they are not designed to take the weight.

• Do not lift the enclosure higher than 20U. Use mechanical assistance to lift above this height.

• Observe the lifting hazard label affixed to the storage enclosure.

Safe operation

Operation of the enclosure with modules missing disrupts the airflow and prevents the enclosure from receiving sufficient cooling.

NOTE: For a 2U enclosure, all IOM and PCM slots must be populated. In addition, empty drive slots (bays) in 2U enclosures must hold blank drive carrier modules. For a 5U enclosure, all controller module, IOM, FCM, and PSU slots must be populated.

• Follow the instructions in the module bay caution label affixed to the module being replaced.

• Replace a defective PCM with a fully operational PCM within 24 hours. Do not remove a defective PCM unless you have a replacement model of the correct type ready for insertion.

• Before removal/replacement of a PCM or PSU, disconnect supply power from the module to be replaced. See the Dell EMC PowerVault ME4 Series Storage System Owner’s Manual.

• Follow the instructions in the hazardous voltage warning label affixed to power cooling modules.


CAUTION: 5U84 enclosures only

• To prevent a rack from tipping over, drawer interlocks stop users from opening both drawers simultaneously. Do not attempt to force open a drawer when the other drawer in the enclosure is already open. In a rack containing more than one 5U84 enclosure, do not open more than one drawer per rack at a time.
• Observe the hot surface label that is affixed to the drawer. Operating temperatures inside enclosure drawers can reach 60°C (140°F). Take care when opening drawers and removing DDICs.

• Due to product acoustics, ear protection should be worn during prolonged exposure to the product in operation.

• Observe the drawer caution label. Do not use open drawers to support any other objects or equipment.

Electrical safety

• The 2U enclosure must be operated from a power supply input voltage range of 100–240 VAC, 50/60Hz.

• The 5U enclosure must be operated from a power supply input voltage range of 200–240 VAC, 50/60Hz.

• Provide a power source with electrical overload protection to meet the requirements in the technical specification.

• The power cord must have a safe electrical grounding connection. Check the grounding connection of the enclosure before you switch on the power supply.

NOTE:
• The plug on the power supply cord is used as the main disconnect device. Ensure that the socket outlets are located near the equipment and are accessible.
• 2U enclosures are intended to operate with two PCMs.
• 5U84 enclosures are intended to operate with two PSUs.
• Follow the instructions that are shown on the power-supply disconnection caution label that is affixed to power cooling modules.

The enclosure must be grounded before applying power.

CAUTION: Do not remove the covers from the enclosure or any of the modules as there is a danger of electric shock inside.
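As a quick pre-installation check, the input voltage and frequency ranges in the Electrical safety list can be expressed as a small function. This is a minimal Python sketch, assuming you have the nominal outlet voltage and frequency for each enclosure location; the function name supply_ok and the "2U"/"5U" keys are hypothetical and not part of any Dell EMC tool.

```python
# Hypothetical sketch: supply limits copied from the Electrical safety list.
# The "2U"/"5U" keys and the supply_ok name are illustrative only.
SUPPLY_LIMITS_VAC = {
    "2U": (100, 240),  # 2U enclosures: 100-240 VAC
    "5U": (200, 240),  # 5U enclosures: 200-240 VAC (high-line power)
}
ALLOWED_FREQUENCIES_HZ = {50, 60}


def supply_ok(enclosure: str, volts_ac: float, freq_hz: int) -> bool:
    """Return True if a nominal supply falls within the stated input range."""
    low, high = SUPPLY_LIMITS_VAC[enclosure]
    return low <= volts_ac <= high and freq_hz in ALLOWED_FREQUENCIES_HZ


if __name__ == "__main__":
    print(supply_ok("2U", 120, 60))  # True
    print(supply_ok("5U", 120, 60))  # False: a 5U enclosure needs 200-240 VAC
```

The check only compares nominal values; it does not replace verifying the grounding connection and overload protection described above.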


Rack system safety precautions

The following safety requirements must be considered when the enclosure is mounted in a rack:

• The rack construction must support the total weight of the installed enclosures. The design should incorporate stabilizing features to prevent the rack from tipping or being pushed over during installation or in normal use.

• When loading a rack with enclosures, fill the rack from the bottom up, and empty the rack from the top down.
• Always remove all power supply modules to minimize weight before loading the enclosure into the rack.
• Do not try to lift the enclosure by yourself.

CAUTION: To prevent the rack from falling over, never move more than one enclosure out of the cabinet at any one time.

• The system must be operated with low-pressure rear exhaust installation. The back pressure that is created by rack doors and obstacles must not exceed 5 pascals (0.5 mm water gauge).
• The rack design should take into consideration the maximum operating ambient temperature for the enclosure. The maximum operating temperature is 35°C (95°F) for controllers and 40°C (104°F) for expansion enclosures.
• The rack should have a safe electrical distribution system. It must provide overcurrent protection for the enclosure. Make sure that the rack is not overloaded by the total number of enclosures that are installed in the rack. Consideration should be given to the electrical power consumption rating shown on the nameplate.
• The electrical distribution system must provide a reliable connection for each enclosure in the rack.
• Each PSU or PCM in each enclosure has a grounding leakage current of 1.0 mA. The design of the electrical distribution system must take into consideration the total grounding leakage current from all the PSUs/PCMs in all the enclosures. The rack requires labeling with “High Leakage Current. Grounding connection essential before connecting supply.”
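Because each PSU or PCM contributes 1.0 mA of grounding leakage current, and both 2U and 5U84 enclosures are intended to operate with two power modules, the rack total can be estimated by simple multiplication. This is a minimal Python sketch, assuming every enclosure has its full complement of two PCMs/PSUs; the function name total_leakage_ma is hypothetical.

```python
# Hypothetical helper: estimate the total grounding leakage current in a rack.
LEAKAGE_PER_MODULE_MA = 1.0  # per PSU or PCM, as stated in the rack precautions
MODULES_PER_ENCLOSURE = 2    # 2U: two PCMs; 5U84: two PSUs


def total_leakage_ma(enclosure_count: int,
                     modules_per_enclosure: int = MODULES_PER_ENCLOSURE) -> float:
    """Return the summed grounding leakage current in milliamps."""
    if enclosure_count < 0 or modules_per_enclosure < 0:
        raise ValueError("counts must be non-negative")
    return enclosure_count * modules_per_enclosure * LEAKAGE_PER_MODULE_MA


if __name__ == "__main__":
    # Example: one controller enclosure plus three expansion enclosures.
    print(total_leakage_ma(4))  # 8.0
```

The result is an input to the electrical distribution design, not a substitute for the “High Leakage Current” labeling requirement.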

Installation checklist

This section shows how to plan for and successfully install your enclosure system into an industry standard 19-inch rack cabinet.

CAUTION: To ensure successful installation, perform the tasks in the order presented.

The following table outlines the steps that are required to install the enclosures, and initially configure and provision the storage system:

NOTE: Use only the power cables supplied when installing the storage system.

Table 1. Installation checklist

Step | Task | Where to find procedure
1 | Unpack the enclosure. | See Unpack the enclosure.
2 | Install the controller enclosure and optional expansion enclosures in the rack.¹ | See Required tools. See Requirements for rackmount installation. See Install the 2U enclosure. See Install the 5U84 enclosure.
3 | Populate drawers with disks (DDICs) in 5U84 enclosure; 2U enclosures ship with disks installed. | See Populating drawers with DDICs.
4 | Cable the optional expansion enclosures. | See Connect optional expansion enclosures.
5 | Connect the management ports. | See Connect to the management network.
6 | Cable the controller host ports. | See Connecting the enclosure to hosts.
7 | Connect the power cords and power on the system. | See Power cable connection.
8 | Perform system and storage setup. | See Using guided setup.
9 | Perform host setup:² attach the host servers and install the required host software. | See Host system requirements. See Attach host servers. See Windows hosts and Linux hosts. See VMware ESXi hosts.
10 | Perform the initial configuration tasks.³ | See Using guided setup.

¹ The environment in which the enclosure operates must be dust-free to ensure adequate airflow.
² For more information about hosts, see the About hosts topic in the Dell EMC PowerVault ME4 Series Storage System Administrator’s Guide.
³ The ME Storage Manager is introduced in Using guided setup. See the Dell EMC PowerVault ME4 Series Storage System Administrator’s Guide or online help for additional information.

Planning for installation

Before beginning the enclosure installation, familiarize yourself with the system configuration requirements.

Table 2. System configuration

Module type | Location | Description
Drive carrier modules | 2U front panel | All drive slots must hold either a drive carrier or blank drive carrier module. Empty slots are not allowed. At least one disk must be installed.
DDIC | 5U front panel drawers | A maximum of 84 disks can be installed (42 disks per drawer). A minimum of 28 disks is required. Follow the drawer population rules in Populating drawers with DDICs.
Power cooling modules | 2U rear panel | Two PCMs provide full power redundancy, allowing the system to continue to operate while a faulty PCM is replaced.
Power supply unit modules | 5U rear panel | Two PSUs provide full power redundancy, allowing the system to continue to operate while a faulty PSU is replaced.
Fan cooling modules | 5U rear panel | Five FCMs provide airflow circulation, maintaining all system components below the maximum temperature allowed.
Controller modules and IOMs | Rear panel | One or two controller modules may be installed in 2U12 and 2U24 enclosures. Two controller modules must be installed in 5U84 enclosures. Two IOMs must be installed in 2U12, 2U24, and 5U84 enclosures.
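The 5U84 disk-count limits in Table 2 can be checked before DDICs are unpacked. This is a minimal Python sketch, assuming two drawers and checking only the counts stated in the table (at most 42 per drawer, 28 to 84 overall); the detailed rules in Populating drawers with DDICs are not modeled, and the function name ddic_counts_ok is hypothetical.

```python
# Hypothetical sketch: checks only the 5U84 disk-count limits in Table 2.
# The per-drawer population rules in "Populating drawers with DDICs" are
# NOT modeled here and must still be followed.
MAX_PER_DRAWER = 42
MIN_TOTAL = 28
MAX_TOTAL = 84


def ddic_counts_ok(drawer1: int, drawer2: int) -> bool:
    """Return True if per-drawer and total DDIC counts are within the stated limits."""
    for count in (drawer1, drawer2):
        if not 0 <= count <= MAX_PER_DRAWER:
            return False
    total = drawer1 + drawer2
    return MIN_TOTAL <= total <= MAX_TOTAL


if __name__ == "__main__":
    print(ddic_counts_ok(14, 14))  # True: passes the count check (28 disks)
    print(ddic_counts_ok(10, 10))  # False: only 20 disks
```

A configuration that passes this count check may still violate the drawer population rules, so treat it as a first filter only.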

Preparing for installation

NOTE: Enclosure configurations:
• 2U enclosures are delivered with CRUs and all drive carrier modules installed.
• 5U84 enclosures are delivered with CRUs installed; however, DDICs must be installed during system setup.
• 5U84 enclosures require 200–240 VAC for operation. See the Environmental requirements topic in the Dell EMC PowerVault ME4 Series Storage System Owner’s Manual for detailed information.

CAUTION: Lifting enclosures:
• A 2U enclosure, including all its component parts, is too heavy for one person to lift and install into the rack cabinet. Two people are required to safely move a 2U enclosure.
• A 5U enclosure, which is delivered without DDICs installed, requires two people to lift it from the box. A mechanical lift is required to hoist the enclosure for positioning in the rack.

Make sure that you wear an effective antistatic wrist or ankle strap and follow conventional ESD precautions when touching modules and components. Do not touch the midplane, motherboard, or module connectors. See Safety guidelines for important preparation requirements and handling procedures to use during product installation.

Preparing the site and host server

Before beginning the enclosure installation, verify that the site where you plan to install your storage system has the following:
• Each redundant power supply module requires power from an independent source or a rack power distribution unit with Uninterruptible Power Supply (UPS). 2U enclosures use standard AC power and the 5U84 enclosure requires high-line (high-voltage) AC power.
• A host computer configured with the appropriate software, BIOS, and drivers. Contact your supplier for the correct software configurations.

Before installing the enclosure, verify the existence of the following:

• Depending upon the controller module: SAS, Fibre Channel (FC), or iSCSI HBA and appropriate switches (if used)

• Qualified cable options for host connection

• One power cord per PCM or PSU

• Rail kit (for rack installation)

Contact your supplier for a list of qualified accessories for use with the enclosure. The accessories box contains the power cords and other

accessories.

Required tools

The following tools are required to install an ME4 Series enclosure:

• Phillips screwdriver

• Torx T20 bit for locks and select CRU replacement

Requirements for rackmount installation

You can install the enclosure in an industry standard 19-inch cabinet capable of holding 2U form factors.

NOTE:

• Minimum depth: 707 mm (27.83") from rack posts to maximum extremity of enclosure (includes rear panel cabling and cable bend

radii).

• Weight:

• Up to 32 kg (71 lb), dependent upon configuration, per 2U enclosure.

• Up to 128 kg (282 lb), dependent upon configuration, per 5U enclosure.

• The rack should cause a maximum back pressure of 5 pascals (0.5 mm water gauge).

• Before you begin, ensure that you have adequate clearance in front of the rack for installing the rails.
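You can check the depth, back-pressure, and weight limits above before installation day. The Python sketch below is a hypothetical planning helper, not a Dell EMC tool, and its function names are illustrative. The weights are the "up to" figures from the list, so any total is a worst-case estimate and not the actual weight of a given configuration.

```python
# Hypothetical pre-installation check based on the rackmount requirements above.
MIN_DEPTH_MM = 707            # rack post to rear extremity, incl. cable bend radii
MAX_BACK_PRESSURE_PA = 5.0    # 0.5 mm water gauge
MAX_WEIGHT_KG = {"2U": 32, "5U": 128}  # "up to" values; actual weight depends on configuration


def rack_problems(depth_mm, back_pressure_pa):
    """Return a list of requirement violations for a candidate rack."""
    problems = []
    if depth_mm < MIN_DEPTH_MM:
        problems.append(f"depth {depth_mm} mm is below the {MIN_DEPTH_MM} mm minimum")
    if back_pressure_pa > MAX_BACK_PRESSURE_PA:
        problems.append(f"back pressure {back_pressure_pa} Pa exceeds {MAX_BACK_PRESSURE_PA} Pa")
    return problems


def worst_case_weight_kg(form_factors):
    """Upper-bound weight for a list of enclosures, for example ["2U", "2U", "5U"]."""
    return sum(MAX_WEIGHT_KG[ff] for ff in form_factors)


print(rack_problems(depth_mm=720, back_pressure_pa=4))  # []
print(worst_case_weight_kg(["5U"] * 4))                 # 512
```

When you plan rack load, use the worst-case total. The real weight of a 5U84 enclosure depends on how many DDICs it holds.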

See the Dell EMC PowerVault ME4 Series Owner's Manual for front and rear panel product views.

Disk drive module

The ME4 Series Storage System supports different disk drive modules for use in 2U and 5U84 enclosures.

• The disk drive modules that are used in 2U enclosures are referred to as drive carrier modules.

• The disk drive modules that are used in 5U84 enclosures are referred to as Disk Drive in Carrier (DDIC) modules.

Drive carrier module in 2U chassis

The drive carrier module consists of a disk drive that is installed in a carrier module.

• Each 2U12 drive slot holds a single low profile 1.0 in. high, 3.5 in. form factor disk drive in its carrier. The disk drives are horizontal. A

2.5" to 3.5" carrier adapter is available to accommodate 2.5" disk drives.

• Each 2U24 drive slot holds a single low profile 5/8 inch high, 2.5 in. form factor disk drive in its carrier. The disk drives are vertical.

The carriers have mounting locations for:

• Direct dock SAS drives.

Each drive is held in a sheet steel carrier that provides thermal conduction, radio frequency, and electro-magnetic induction protection, and physically protects the drive.

The front cap also has an ergonomic handle that provides the following functions:

• Secure location of the carrier into and out of drive slots.

• Positive spring-loading of the drive/midplane connector.

The carrier can use this interface:

• Dual path direct dock Serial Attached SCSI.

The following figures display the supported drive carrier modules:


Figure 3. Dual path LFF 3.5" drive carrier module

Figure 4. Dual path SFF 2.5" drive carrier module

Figure 5. 2.5" to 3.5" hybrid drive carrier adapter

Drive status indicators

Green and amber LEDs on the front of each drive carrier module indicate disk drive status.

Blank drive carrier modules

Blank drive carrier modules, also known as drive blanks, are provided in 3.5" (2U12) and 2.5" (2U24) form factors. They must be installed

in empty disk slots to create a balanced air flow.


Figure 6. Blank drive carrier modules: 3.5" drive slot (left); 2.5" drive slot (right)

DDIC in a 5U enclosure

Each disk drive is installed in a DDIC that enables secure insertion of the disk drive into the drawer with the appropriate SAS carrier

transition card.

The DDIC features a slide latch button with directional arrow. The slide latch enables you to install and secure the DDIC into the disk slot

within the drawer. The slide latch also enables you to disengage the DDIC from its slot, and remove it from the drawer. The DDIC has a

single Drive Fault LED, which illuminates amber when the disk drive has a fault.

The following figure shows a DDIC with a 3.5" disk drive:

Figure 7. 3.5" disk drive in a DDIC

The following figure shows a DDIC with a hybrid drive carrier adapter and a 2.5" disk drive:


Figure 8. 2.5" drive in a 3.5" DDIC with a hybrid drive carrier adapter

Populating drawers with DDICs

The 5U84 enclosure does not ship with DDICs installed. Before populating drawers with DDICs, ensure that you adhere to the following

guidelines:

• The minimum number of disks that are supported by the enclosure is 28, 14 in each drawer.

• DDICs must be added to disk slots in complete rows (14 disks at a time).

• Beginning at the front of each drawer, install DDICs consecutively by number, and alternately between the top drawer and the bottom

drawer. For example, install first at slots 0–13 in the top drawer, and then 42–55 in the bottom drawer. After that, install slots 14–27,

and so on.

• The number of populated rows must not differ by more than one row between the top and bottom drawers.

• Hard disk drives (HDD) and solid-state drives (SSD) can be mixed in the same drawer.

• HDDs installed in the same row should have the same rotational speed.

• DDICs holding 3.5" disks can be intermixed with DDICs holding 2.5" disks in the enclosure. However, each row should be populated with disks of the same form factor (all 3.5" disks or all 2.5" disks).
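The fill order and row rules above can be expressed as a short Python sketch. It assumes the top drawer holds slots 0–41 and the bottom drawer holds slots 42–83, in rows of 14. This numbering matches the slot numbers in the example, but the function names are hypothetical. The sketch reads the recommended order strictly: a layout is valid only if it is exactly the first k rows of the sequence, with k of at least 2. It checks slot positions only, not drive type, rotational speed, or form factor.

```python
# Hypothetical model of the 5U84 DDIC population order (slot numbering assumed:
# top drawer 0-41, bottom drawer 42-83, 14 slots per row, front row first).
ROW_SIZE = 14
ROWS_PER_DRAWER = 3
DRAWER_FIRST_SLOT = (0, 42)   # (top drawer, bottom drawer)


def population_order():
    """Rows of slots in the order they should be filled: top, bottom, top, ..."""
    order = []
    for row in range(ROWS_PER_DRAWER):
        for first_slot in DRAWER_FIRST_SLOT:
            start = first_slot + row * ROW_SIZE
            order.append(range(start, start + ROW_SIZE))
    return order


def is_valid_population(populated_slots):
    """True if the populated slots are exactly the first k rows of the order, k >= 2.

    This enforces complete rows, the alternating top/bottom sequence, the
    one-row maximum difference between drawers, and the 28-DDIC minimum.
    """
    populated = set(populated_slots)
    expected = set()
    for rows_filled, row in enumerate(population_order(), start=1):
        expected.update(row)
        if populated == expected:
            return rows_filled >= 2
    return False


print([(r.start, r.stop - 1) for r in population_order()[:3]])  # [(0, 13), (42, 55), (14, 27)]
print(is_valid_population(list(range(0, 14)) + list(range(42, 56))))  # True
print(is_valid_population(range(0, 28)))  # False: two top rows, no bottom row
```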

The following figure shows a drawer that is fully populated with DDICs:

• See Figure 7. 3.5" disk drive in a DDIC for the DDIC holding the 3.5" disk

• See Figure 8. 2.5" drive in a 3.5" DDIC with a hybrid drive carrier adapter for the DDIC holding the 2.5" disk with 3.5" adapter

Figure 9. 5U84 enclosure drawer fully populated with DDICs


2

Mount the enclosures in the rack

This section describes how to unpack the ME4 Series Storage System equipment, prepare for installation, and safely mount the enclosures

into the rack.

Topics:

• Rackmount rail kit

• Install the 2U enclosure

• Install the 5U84 enclosure

• Connect optional expansion enclosures

Rackmount rail kit

Rack mounting rails are available for use in 19-inch rack cabinets.

The rails have been designed and tested for the maximum enclosure weight. Multiple enclosures may be installed without loss of space in

the rack. Use of other mounting hardware may cause some loss of rack space. Contact Dell EMC to ensure that suitable mounting rails are

available for the rack you plan to use.

Install the 2U enclosure

The 2U enclosure is delivered with the disks installed.

Refer to Figure 10. Secure brackets to the rail (left hand rail shown for 2U) when following the rail installation instructions.

1. Remove the rack mounting rail kit from the accessories box, and examine for damage.

2. Use the following procedure to attach the rail kit brackets to the rack post:

a. Set each location pin at the rear of the rail into a rear rack post hole.

b. Use the supplied washers and screws to attach the bracket to the rear rack post. Leave the screws loose.

c. Extend the rail to fit between the front and rear rack posts.

d. Attach the bracket to the front rack post using the washers and screws supplied. Leave the screws loose.

e. Tighten the two clamping screws located along the rear section of the rack bracket.

f. Repeat the previous steps for the companion rail.

3. Install the enclosure into the rack:

a. Lift the enclosure and align it with the installed rack rails, taking care to ensure that the enclosure remains level.

b. Carefully insert the chassis slides into the rack rails and push fully in.

c. Tighten the mounting screws in the rear rail kit brackets.

d. Slide the enclosure out until it reaches the hard stops, approximately 400 mm (15.75"), and then tighten the mounting screws in the front rail kit bracket.

e. Return the enclosure to the home position.


Figure 10. Secure brackets to the rail (left hand rail shown for 2U)

1. Front rack post (square hole) 2. Rail location pins (quantity 2 per rail)

3. Left rail 4. Rear rack post (square hole)

5. Clamping screw (B) 6. Clamping screw (B)

7. Fastening screw (A) 8. 2U Ops panel installation detail (exploded view)

9. Left rail position locking screw 10. 2U enclosure fastening screw (C)

11. Key: Rail kit fasteners used in rack-mount installation

Install the 2U enclosure front bezel

Install the bezel if it was included with the enclosure.

While holding the bezel in your hands, face the front panel of the 2U12 or 2U24 enclosure.

1. Hook the right end of the bezel onto the right ear cover of the storage system.

Figure 11. Attach the bezel to the front of the 2U enclosure

2. Insert the left end of the bezel into the securing slot until the release latch snaps into place.

3. Secure the bezel with the keylock as shown in the detail view in Figure 11. Attach the bezel to the front of the 2U enclosure.

NOTE: To remove the bezel from the 2U enclosure front panel, reverse the order of the previous steps.

Install the 5U84 enclosure

The 5U84 enclosure is delivered without the disks installed.

NOTE: Due to the weight of the enclosure, install it into the rack without DDICs installed, and remove the rear panel CRUs to decrease the enclosure weight.

The adjustment range of the rail kit from the front post to the rear post is 660 mm–840 mm. This range suits a one-meter deep rack within Rack Specification IEC 60297.

1. To facilitate access, remove the door from the rack.

2. Ensure that the preassembled rails are at their shortest length.

NOTE: See the reference label on the rail.

3. Locate the rail location pins inside the front of the rack, and extend the length of the rail assembly to position the rear location pins. Ensure that the pins are fully inserted in the square or round holes in the rack posts.

Figure 12. Secure brackets to the rail (left hand rail shown for 5U84 enclosure)

Item Description

1 Fastening screws (A)

2 Left rail

3 Rear rack post (square hole)

4 Clamping screw (B)

5 Clamping screw (B)

6 Rail location pins (quantity 4 per rail)

7 5U84 chassis section shown for reference

8 Front rack post (square hole)

9 Middle slide locking screws

10 5U84 chassis section shown for reference

11 Fastening screw (C)

12 Key: Rail kit fasteners used in rackmount installation (A = fastening; B = clamping; C = fastening)

4. Fully tighten all clamping screws and middle slide locking screws.

5. Ensure the four rear spacer clips (not shown) are fitted to the edge of the rack post.

6. Slide the enclosure until fully seated on its rails.

7. Fasten the front of the enclosure using the enclosure fastening screws (x4) as shown in Figure 12. Secure brackets to the rail (left

hand rail shown for 5U84 enclosure).

8. Fix the rear of the enclosure to the sliding bracket with the rear enclosure fastening screws.

CAUTION: Once the enclosure is installed in the rack, dispose of the lifting straps. The straps cannot be used to remove the enclosure from the rack.

Reinsert the rear panel modules and install the DDICs into the drawers. See the following instructions in the Dell EMC PowerVault ME4 Series Storage System Owner's Manual:

• Installing a controller module

• Installing an IOM

• Installing a fan cooling module

• Installing a PSU

• Installing a DDIC

Connect optional expansion enclosures

ME4 Series controller enclosures support 2U12, 2U24, and 5U84 expansion enclosures. 2U12 and 2U24 expansion enclosures can be intermixed; however, 2U expansion enclosures cannot be intermixed with 5U84 expansion enclosures in the same storage system.

NOTE: To add expansion enclosures to an existing storage system, power down the controller enclosure before connecting the expansion enclosures.

• ME4 Series 2U controller enclosures support up to ten 2U enclosures (including the controller enclosure), or a maximum of 240 disk

drives.

• ME4 Series 5U controller enclosures support up to four 5U enclosures (including the controller enclosure), or a maximum of 336 disk

drives.

• ME4 Series expansion enclosures are equipped with dual-IOMs. These expansion enclosures cannot be cabled to a controller enclosure

equipped with a single IOM.

• The enclosures support reverse SAS cabling for adding expansion enclosures. Reverse cabling enables any drive enclosure to fail—or

be removed—while maintaining access to other enclosures. Fault tolerance and performance requirements determine whether to

optimize the configuration for high availability or high performance when cabling.
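The following Python sketch checks a planned stack against the expansion rules above. It is a hypothetical helper, and its names are illustrative.

- The four-enclosure limit for a 2U controller with 5U84 expansions is taken from the description of Figure 15 later in this section.
- The guide does not describe a 5U84 controller with 2U expansions, so the sketch flags that combination rather than guessing a limit.
- The drive maximums (240 and 336) follow from the enclosure counts (10 × 24 and 4 × 84), so the sketch does not check them separately.

```python
# Hypothetical validator for the expansion rules listed above.
DRIVES_PER_ENCLOSURE = {"2U12": 12, "2U24": 24, "5U84": 84}


def check_stack(controller, expansions, dual_controller=True):
    """Return a list of rule violations; an empty list means no listed rule is broken."""
    models = [controller, *expansions]
    unknown = [m for m in models if m not in DRIVES_PER_ENCLOSURE]
    if unknown:
        return [f"unknown enclosure model(s): {unknown}"]

    problems = []
    if expansions and not dual_controller:
        problems.append("expansion enclosures require a controller enclosure with two controller modules")

    kinds = {m[:2] for m in expansions}          # {"2U"}, {"5U"}, or both
    if len(kinds) > 1:
        problems.append("2U and 5U84 expansion enclosures cannot be intermixed")
    elif controller == "5U84" and kinds == {"2U"}:
        problems.append("5U84 controller with 2U expansions is not covered by these limits")

    # 2U-only stacks: up to ten enclosures (10 x 24 = 240 drives).
    # Any 5U84 in the stack: up to four enclosures (4 x 84 = 336 drives).
    all_2u = controller.startswith("2U") and kinds <= {"2U"}
    max_enclosures = 10 if all_2u else 4
    if len(models) > max_enclosures:
        problems.append(f"{len(models)} enclosures exceeds the maximum of {max_enclosures}")
    return problems


print(check_stack("2U24", ["2U12"] * 9))  # []
print(check_stack("2U12", ["2U24", "5U84"]))
```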

Cable requirements for expansion enclosures

ME4 Series supports 2U12, 2U24, and 5U84 form factors, each of which can be configured as a controller enclosure or an expansion

enclosure. Key enclosure characteristics include:

NOTE: To add expansion enclosures to an existing storage system, power down the controller enclosure before

connecting the expansion enclosures.

• When connecting SAS cables to IOMs, use only supported HD mini-SAS x4 cables.

• Qualified HD mini-SAS to HD mini-SAS 0.5 m (1.64 ft.) cables are used to connect cascaded enclosures in the rack.

• The maximum enclosure cable length that is allowed in any configuration is 2 m (6.56 ft.).

• When adding more than two expansion enclosures, you may need to purchase additional cables, depending upon the number of

enclosures and cabling method used.

• You may need to order additional or longer cables when reverse-cabling a fault-tolerant configuration.

Per common convention in cabling diagrams, the controller enclosure is shown atop the stack of connected expansion enclosures. In

reality, you can invert the order of the stack for optimal weight and placement stability in the rack. The schematic representation of

cabling remains unchanged. See Mount the enclosures in the rack for more detail.

When connecting multiple expansion enclosures to a controller enclosure, use reverse cabling to ensure the highest level of fault tolerance.

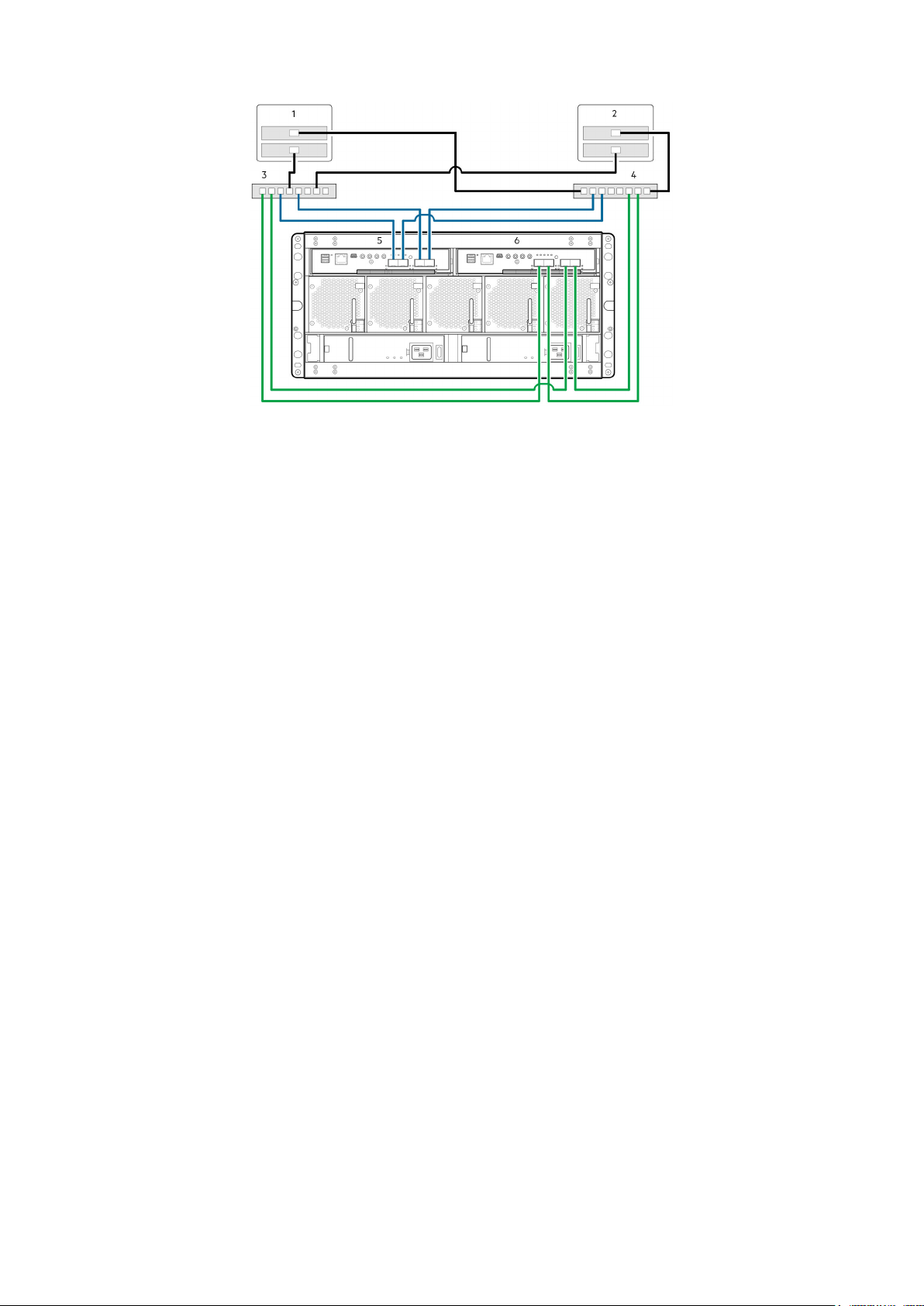

The ME4 Series identifies controller modules and IOMs by enclosure ID and IOM ID. In the following figure, the controller modules are

identified as 0A and 0B, the IOMs in the first expansion enclosure are identified as 1A and 1B, and so on. Controller module 0A is connected

to IOM 1A, with a chain of connections cascading down (blue). Controller module 0B is connected to the lower IOM (9B) of the last expansion enclosure, with connections moving in the opposite direction (green). Reverse cabling enables any expansion enclosure to fail, or be removed, while maintaining access to other enclosures.
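The reverse-cabling pattern above can be generated and checked with the Python sketch below. It is illustrative only and its function names are hypothetical. It lists module-to-module links using the enclosure ID and IOM ID naming from the figures (0A, 1A, ... 9B), and it does not model individual SAS port names. The second function shows why reverse cabling tolerates a failed enclosure: every other enclosure stays reachable from one controller module or the other.

```python
def reverse_cabling(num_expansions):
    """Return (from_module, to_module) links for reverse SAS cabling."""
    links = []
    # Path A: controller 0A -> 1A -> 2A -> ... cascading down the stack.
    for i in range(num_expansions):
        links.append((f"{i}A", f"{i + 1}A"))
    # Path B: controller 0B -> B IOM of the last enclosure, then back up the stack.
    if num_expansions:
        links.append(("0B", f"{num_expansions}B"))
        for i in range(num_expansions, 1, -1):
            links.append((f"{i}B", f"{i - 1}B"))
    return links


def reachable_enclosures(links, failed_enclosure):
    """Expansion enclosure IDs still reachable from 0A or 0B if one enclosure fails."""
    downstream = {}
    for src, dst in links:
        downstream.setdefault(src, []).append(dst)
    seen, stack = set(), ["0A", "0B"]
    while stack:
        for nxt in downstream.get(stack.pop(), []):
            # A failed enclosure blocks every link that passes through its IOMs.
            if int(nxt[:-1]) != failed_enclosure and nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return {int(module[:-1]) for module in seen}


print(reverse_cabling(3))
# [('0A', '1A'), ('1A', '2A'), ('2A', '3A'), ('0B', '3B'), ('3B', '2B'), ('2B', '1B')]
print(reachable_enclosures(reverse_cabling(3), failed_enclosure=2))  # {1, 3}
```

With straight-through cabling (both paths starting at enclosure 1), a failure of enclosure 2 would cut off enclosure 3 on both paths. Reverse cabling avoids this.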

Figure 13. Cabling connections between a 2U controller enclosure and 2U expansion enclosures shows the maximum cabling configuration for a 2U controller enclosure with 2U expansion enclosures.

NOTE: The cabling diagrams show only relevant details such as module face plate outlines and expansion ports.

Figure 13. Cabling connections between a 2U controller enclosure and 2U expansion enclosures

1. Controller module A (0A) 2. Controller module B (0B)

3. IOM (1A) 4. IOM (1B)

5. IOM (2A) 6. IOM (2B)

7. IOM (3A) 8. IOM (3B)

9. IOM (9A) 10. IOM (9B)

Figure 14. Cabling connections between a 5U controller enclosure and 5U expansion enclosures shows the maximum cabling configuration

for a 5U84 controller enclosure with 5U84 expansion enclosures (four enclosures including the controller enclosure).

Figure 14. Cabling connections between a 5U controller enclosure and 5U expansion enclosures

1. Controller module A (0A) 2. Controller module B (0B)

3. IOM (1A) 4. IOM (1B)

5. IOM (2A) 6. IOM (2B)

7. IOM (3A) 8. IOM (3B)

Figure 15. Cabling connections between a 2U controller enclosure and 5U84 expansion enclosures shows the maximum cabling

configuration for a 2U controller enclosure with 5U84 expansion enclosures (four enclosures including the controller enclosure).


Figure 15. Cabling connections between a 2U controller enclosure and 5U84 expansion enclosures

1. Controller module A (0A) 2. Controller module B (0B)

3. IOM (1A) 4. IOM (1B)

5. IOM (2A) 6. IOM (2B)

7. IOM (3A) 8. IOM (3B)

Label the back-end cables

Make sure to label the back-end SAS cables that connect the controller enclosure and the expansion enclosures.

3

Connect to the management network

Perform the following steps to connect a controller enclosure to the management network:

1. Connect an Ethernet cable to the network port on each controller module.

2. Connect the other end of each Ethernet cable to a network that your management host can access, preferably on the same subnet.

NOTE: If you connect the iSCSI and management ports to the same physical switches, Dell EMC recommends using separate VLANs.

Figure 16. Connect a 2U controller enclosure to the management network

1. Controller module in slot A 2. Controller module in slot B

3. Switch 4. SAN

Figure 17. Connect a 5U controller enclosure to the management network

1. Controller module in slot A 2. Controller module in slot B

3. Switch 4. SAN

NOTE: See also the topic about configuring network ports on controller modules in the Dell EMC PowerVault ME4 Series Storage System Administrator's Guide.

4

Cable host servers to the storage system

This section describes the different ways that host servers can be connected to a storage system.

Topics:

• Cabling considerations

• Connecting the enclosure to hosts

• Host connection

Cabling considerations

Host interface ports on ME4 Series controller enclosures can connect to respective hosts using direct-attach or switch-attach methods.

Another important cabling consideration is cabling controller enclosures to enable the replication feature. The FC and iSCSI product

models support replication, but SAS product models do not support replication. See Cabling for replication.

Use only Dell EMC cables for host connections:

• Qualified 16 Gb FC SFP+ transceivers and cable options

• Qualified 10 GbE iSCSI SFP+ transceivers and cable options

• Qualified 10Gbase-T cable options

• Qualified 12 Gb mini-SAS HD cable options

Connecting the enclosure to hosts

A host identifies an external port to which the storage system is attached. The external port may be a port in an I/O adapter (such as an

FC HBA) in a server. Cable connections vary depending on configuration. This section describes host interface protocols supported by

ME4 Series controller enclosures, while showing a few common cabling configurations. ME4 Series controllers use Unified LUN

Presentation (ULP), which enables a host to access mapped volumes through any controller host port.

ULP can show all LUNs through all host ports on both controllers, and the interconnect information is managed by the controller firmware.

ULP appears to the host as an active-active storage system, allowing the host to select any available path to access the LUN, regardless

of disk group ownership.

CNC technology

The ME4 Series FC/iSCSI models use Converged Network Controller (CNC) technology.

The CNC technology enables you to select the host interface protocols to use on the storage system. The small form-factor pluggable

(SFP+) connectors that are used in CNC ports are further described in the following subsections:

NOTE:

• Controller modules are not always shipped with preinstalled SFP+ transceivers. You might need to install SFP+ transceivers into the controller modules. Within your product kit, locate the qualified SFP+ transceivers and install them into the CNC ports. See SFP+ transceiver for FC/iSCSI ports.

• Use the ME Storage Manager to set the host interface protocol for CNC ports using qualified SFP+ transceivers.

ME4 Series models ship with CNC ports configured for FC. When connecting CNC ports to iSCSI hosts, you must

configure these ports for iSCSI.

CNC ports used for host connection

ME4 Series SFP+ based controllers ship with CNC ports that are configured for FC.

If you must change the CNC port mode, you can do so using the ME Storage Manager.


Alternatively, the ME4 Series enables you to set the CNC ports to use FC and iSCSI protocols in combination. When configuring a

combination of host interface protocols, host ports 0 and 1 must be configured for FC, and host ports 2 and 3 must be configured for

iSCSI. The CNC ports must use qualified SFP+ connectors and cables for the selected host interface protocol. For more information, see

SFP+ transceiver for FC/iSCSI ports.
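The port-mode rule above allows three layouts. The Python sketch below is a hypothetical planning check of a proposed layout; it does not change any setting on the array, which you still do in the ME Storage Manager. It assumes the rule applies to host ports 0–3 of each controller module. It does not verify that the installed SFP+ transceivers match each port's protocol.

```python
# Hypothetical check of a per-controller CNC port layout (host ports 0-3).
VALID_CNC_LAYOUTS = {
    ("FC", "FC", "FC", "FC"),              # factory default
    ("iSCSI", "iSCSI", "iSCSI", "iSCSI"),
    ("FC", "FC", "iSCSI", "iSCSI"),        # the only mixed layout described
}


def is_valid_cnc_layout(port_modes):
    """port_modes lists the protocol of host ports 0, 1, 2, 3 in order."""
    return tuple(port_modes) in VALID_CNC_LAYOUTS


print(is_valid_cnc_layout(["FC", "FC", "iSCSI", "iSCSI"]))  # True
print(is_valid_cnc_layout(["iSCSI", "iSCSI", "FC", "FC"]))  # False: FC must be on ports 0 and 1
```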

Fibre Channel protocol

ME4 Series controller enclosures support controller modules with CNC host interface ports.

Using qualified FC SFP+ transceiver/cable options, these CNC ports can be configured to support Fibre Channel protocol on either four or two CNC ports. Supported data rates are 8 Gb/s or 16 Gb/s.

The controllers support Fibre Channel Arbitrated Loop (public or private) or point-to-point topologies. Loop protocol can be used in a

physical loop or for direct connection between two devices. Point-to-point protocol is used to connect to a fabric switch. Point-to-point

protocol can also be used for direct connection, and it is the only option supporting direct connection at 16 Gb/s.
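The topology rules above reduce to a small decision table, sketched below in Python. The function is hypothetical and only checks a planned setting; you set the actual port speed and options through the ME Storage Manager or the set host-parameters CLI command. The sketch assumes that point-to-point is the only option for switch attachment, based on the sentence "Point-to-point protocol is used to connect to a fabric switch." It does not model a physical loop.

```python
def fc_settings_supported(speed_gbps, topology, attachment):
    """Check a planned FC port setting against the rules above.

    topology:   "loop" (arbitrated loop) or "point-to-point"
    attachment: "direct" (host HBA cabled to the port) or "switch" (fabric switch)
    """
    if speed_gbps not in (8, 16):
        return False                      # only 8 Gb/s and 16 Gb/s are supported
    if attachment == "switch":
        return topology == "point-to-point"
    if attachment == "direct":
        # Loop also works for direct connection, but not at 16 Gb/s.
        return topology == "point-to-point" or (topology == "loop" and speed_gbps == 8)
    raise ValueError(f"unknown attachment {attachment!r}")


print(fc_settings_supported(16, "loop", "direct"))            # False
print(fc_settings_supported(16, "point-to-point", "direct"))  # True
```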

The Fibre Channel ports are used for:

• Connecting to FC hosts directly, or through a switch used for the FC traffic.

• Connecting two storage systems through a switch for replication. See Cabling for replication.

The first option requires that the host computer support FC and, optionally, multipath I/O.

Use the ME Storage Manager to set FC port speed and options. See the topic about configuring host ports in the Dell EMC PowerVault

ME4 Series Storage System Administrator’s Guide. You can also use CLI commands to perform these actions:

• Use the set host-parameters CLI command to set FC port options.

• Use the show ports CLI command to view information about host ports.

iSCSI protocol

ME4 Series controller enclosures support controller modules with CNC host interface ports.

CNC ports can be configured to support iSCSI protocol in either four or two CNC ports. The CNC ports support 10 GbE but do not

support 1 GbE.

The 10 GbE iSCSI ports are used for:

• Connecting to 10 GbE iSCSI hosts directly, or through a switch used for the 10 GbE iSCSI traffic.

• Connecting two storage systems through a switch for replication.

The first option requires that the host computer support Ethernet, iSCSI, and, optionally, multipath I/O.

See the topic about configuring CHAP in the Dell EMC PowerVault ME4 Series Storage System Administrator’s Guide.

Use the ME Storage Manager to set iSCSI port options. See the topic about configuring host ports in the Dell EMC PowerVault ME4

Series Storage System Administrator’s Guide. You can also use CLI commands to perform these actions:

• Use the set host-parameters CLI command to set iSCSI port options.

• Use the show ports CLI command to view information about host ports.

iSCSI settings

The host should be cabled to two different Ethernet switches for redundancy.

If you are using switches with mixed traffic (LAN/iSCSI), then a VLAN should be created to isolate iSCSI traffic from the rest of the

switch traffic.

Example iSCSI port address assignments

The following figure and the supporting tables provide example iSCSI port address assignments featuring two redundant switches and two

IPv4 subnets:

NOTE: For each callout number, read across the table row for the addresses in the data path.

Figure 18. Two subnet switch example (IPv4)

Table 3. Two subnet switch example

No. Device IP Address Subnet

1 A0 192.68.10.200 10

2 A1 192.68.11.210 11

3 A2 192.68.10.220 10

4 A3 192.68.11.230 11

5 B0 192.68.10.205 10

6 B1 192.68.11.215 11

7 B2 192.68.10.225 10

8 B3 192.68.11.235 11

9 Switch A N/A N/A

10 Switch B N/A N/A

11 Host server 1, Port 0 192.68.10.20 10

12 Host server 1, Port 1 192.68.11.20 11

13 Host server 2, Port 0 192.68.10.21 10

14 Host server 2, Port 1 192.68.11.21 11
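Table 3 follows a simple pattern. Even-numbered controller ports (A0, A2, B0, B2) are on subnet 10, and odd-numbered ports (A1, A3, B1, B3) are on subnet 11. Each host has one port on each subnet. The Python sketch below uses the standard ipaddress module to confirm that every host port can reach ports on both controllers within its own subnet. The addresses come from the table; the /24 prefix length is an assumption, because the table gives only the third octet as the subnet.

```python
import ipaddress

# Addresses copied from Table 3; the /24 prefix length is an assumption.
PREFIX_LEN = 24
CONTROLLER_PORTS = {
    "A0": "192.68.10.200", "A1": "192.68.11.210", "A2": "192.68.10.220", "A3": "192.68.11.230",
    "B0": "192.68.10.205", "B1": "192.68.11.215", "B2": "192.68.10.225", "B3": "192.68.11.235",
}
HOST_PORTS = {
    "Host server 1, Port 0": "192.68.10.20", "Host server 1, Port 1": "192.68.11.20",
    "Host server 2, Port 0": "192.68.10.21", "Host server 2, Port 1": "192.68.11.21",
}


def targets_on_same_subnet(host_ip):
    """Controller ports that share the host port's subnet."""
    subnet = ipaddress.ip_interface(f"{host_ip}/{PREFIX_LEN}").network
    return sorted(port for port, ip in CONTROLLER_PORTS.items()
                  if ipaddress.ip_address(ip) in subnet)


for host, ip in HOST_PORTS.items():
    targets = targets_on_same_subnet(ip)
    # Each host port should see ports on both controller modules for failover.
    assert {t[0] for t in targets} == {"A", "B"}, host
    print(host, targets)
```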

To enable CHAP, see the topic about configuring CHAP in the Dell EMC PowerVault ME4 Series Storage System Administrator’s Guide.

SAS protocol

ME4 Series SAS models use 12 Gb/s host interface protocol and qualified cable options for host connection.

12Gb HD mini-SAS host ports

ME4 Series 12 Gb SAS controller enclosures support two controller modules. The 12 Gb/s SAS controller module provides four SFF-8644

HD mini-SAS host ports. These host ports support data rates up to 12 Gb/s. HD mini-SAS host ports are used for attachment to SAS

hosts directly. The host computer must support SAS and optionally, multipath I/O. Use a qualified cable option when connecting to a host.


Host connection

ME4 Series controller enclosures support up to eight direct-connect server connections, four per controller module.

Connect appropriate cables from the server HBAs to the controller module host ports as described in the following sections.

16 Gb Fibre Channel host connection

To connect controller modules supporting FC host interface ports to a server HBA or switch, using the controller CNC ports, select a

qualified FC SFP+ transceiver. For information about configuring HBAs, see the Fibre Channel topics under Attach host servers.

Use the cabling diagrams to connect the host servers to the switches. See the Dell EMC Storage Support Matrix for supported Fibre

Channel HBAs.

• Install and connect each FC HBA to a switch that is connected to the host ports on the two controllers that are shown in Figure 26.

Connecting hosts: ME4 Series 2U switch-attached – two servers, two switches and Figure 27. Connecting hosts: ME4 Series 5U

switch-attached – two servers, two switches.

• In hybrid examples, one server and switch manage FC traffic, and the other server and switch manage iSCSI traffic.

• For FC, each initiator must be zoned with a single host port or multiple host ports only (single initiator, multi-target of the same kind).
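The single-initiator, multi-target zoning rule can be modeled independently of any switch vendor, as in the Python sketch below. On a real fabric, you create the zones with the switch vendor's management tools. All host names and WWPNs here are invented examples, and the sketch does not check that targets are of the same kind (for example, all FC host ports).

```python
# Hypothetical zoning sketch: names and WWPNs below are made-up examples.
def single_initiator_zones(initiators, targets):
    """Build one zone per host initiator, each with that initiator plus the target ports."""
    return {f"z_{host}": [wwpn, *targets] for host, wwpn in initiators.items()}


def zones_violating_rule(zones, initiator_wwpns):
    """Return the names of zones that contain zero or more than one initiator."""
    initiator_wwpns = set(initiator_wwpns)
    return [name for name, members in zones.items()
            if sum(member in initiator_wwpns for member in members) != 1]


hosts = {"server1_p0": "10:00:00:00:c9:aa:00:01", "server2_p0": "10:00:00:00:c9:bb:00:01"}
array_ports = ["20:70:00:c0:ff:00:00:a0", "24:70:00:c0:ff:00:00:b0"]  # e.g. ports A0 and B0
zones = single_initiator_zones(hosts, array_ports)
print(zones_violating_rule(zones, hosts.values()))  # []
```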

Connecting host servers directly to the storage system is also supported.

Qualified options support cable lengths of 1 m (3.28'), 2 m (6.56'), 5 m (16.40'), 15 m (49.21'), 30 m (98.43'), and 50 m (164.04') for OM4

multimode optical cables and OM3 multimode FC cables. A 0.5 m (1.64') cable length is also supported for OM3. In addition to providing

host connection, these cables are used for connecting two storage systems through a switch, to facilitate use of the optional replication

feature.

iSCSI host connection

To connect controller modules supporting 10 GbE iSCSI host interface ports to a server HBA or switch, using the controller CNC ports, select a qualified 10 GbE SFP+ transceiver. For information about configuring iSCSI initiators/HBAs, see the iSCSI topics under Attach host servers.

Use the cabling diagrams to connect the host servers to the switches.

• Install and connect each Ethernet NIC to a switch that is connected to the host ports on the two controllers that are shown in Figure 26. Connecting hosts: ME4 Series 2U switch-attached – two servers, two switches and Figure 27. Connecting hosts: ME4 Series 5U switch-attached – two servers, two switches.

• In hybrid examples, one server and switch manage iSCSI traffic, and the other server and switch manage FC traffic.

Connecting host servers directly to the storage system is also supported.

12 Gb HD mini-SAS host connection

To connect controller modules supporting HD mini-SAS host interface ports to a server HBA, using the controller’s SFF-8644 dual HD

mini-SAS host ports, select a qualified HD mini-SAS cable option. For information about configuring SAS HBAs, see the SAS topics under

Attach host servers. Use the cabling diagrams to connect the host servers.

A qualified SFF-8644 to SFF-8644 cable option is used for connecting to a 12Gb/s enabled host; whereas a qualified SFF-8644 to

SFF-8088 cable option is used for connecting to a 6 Gb/s host. Qualified SFF-8644 to SFF-8644 options support cable lengths of 0.5 m

(1.64'), 1 m (3.28'), 2 m (6.56'), and 4 m (13.12'). Qualified SFF-8644 to SFF-8088 options support cable lengths of 1 m (3.28'), 2 m

(6.56'), 3 m (9.84'), and 4 m (13.12').
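The cable choice above depends on two inputs: the host link rate and the distance to cover. The Python sketch below is a hypothetical lookup that returns the shortest qualified cable of the right type. The table encodes only the options listed in the paragraph above. Always confirm the final part against the qualified cable list for your system.

```python
# Qualified SAS host cable options from the paragraph above (lengths in metres).
QUALIFIED_SAS_HOST_CABLES = {
    12: ("SFF-8644 to SFF-8644", (0.5, 1, 2, 4)),   # 12 Gb/s host
    6: ("SFF-8644 to SFF-8088", (1, 2, 3, 4)),      # 6 Gb/s host
}


def pick_sas_host_cable(host_rate_gbps, min_length_m):
    """Return (cable type, shortest qualified length >= min_length_m), or None."""
    if host_rate_gbps not in QUALIFIED_SAS_HOST_CABLES:
        return None
    cable_type, lengths = QUALIFIED_SAS_HOST_CABLES[host_rate_gbps]
    for length in lengths:                # lengths are listed in ascending order
        if length >= min_length_m:
            return cable_type, length
    return None                           # no qualified cable is long enough


print(pick_sas_host_cable(12, 1.5))  # ('SFF-8644 to SFF-8644', 2)
print(pick_sas_host_cable(6, 5))     # None
```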

10Gbase-T host connection

To connect controller modules with 10Gbase-T iSCSI host interface ports to a server HBA or switch, select a qualified 10Gbase-T cable

option.

For information about configuring network adapters and iSCSI HBAs, see the iSCSI topics under Attach host servers. See also the cabling instructions in iSCSI host connection.

Connecting direct attach configurations

A dual-controller configuration improves application availability. If a controller failure occurs, the affected controller fails over to the healthy

partner controller with little interruption to data flow.

A failed controller can be replaced without the need to shut down the storage system.

NOTE: In the following examples, a single diagram represents CNC, SAS, and 10Gbase-T host connections for ME4

Series controller enclosures. The location and sizes of the host ports are similar. Blue cables show controller A paths

and green cables show controller B paths for host connection.

Single-controller module configurations

A single controller module configuration does not provide redundancy if a controller module fails.

This configuration is intended only for environments where high availability is not required. If the controller module fails, the host loses

access to the storage data until failure recovery actions are completed.

NOTE: Expansion enclosures are not supported in a single controller module configuration.

Figure 19. Connecting hosts: ME4 Series 2U direct attach – one server, one HBA, single path

1. Server 2. Controller module in slot A

3. Controller module blank in slot B

NOTE: If the ME4 Series 2U controller enclosure is configured with a single controller module, the controller module must be installed in the upper slot. A controller module blank must be installed in the lower slot. This configuration is required to enable sufficient air flow through the enclosure during operation.

Dual-controller module configurations

A dual-controller module configuration improves application availability.

If a controller module failure occurs, the affected controller module fails over to the partner controller module with little interruption to

data flow. A failed controller module can be replaced without the need to shut down the storage system.

In a dual-controller module system, hosts use LUN-identifying information from both controller modules to determine which data paths are available to a volume. Assuming MPIO software is installed, a host can use any available data path to access a volume that is owned by either controller module. The path providing the best performance is through the host ports on the controller module that owns the volume. Both controller modules share one set of 1,024 LUNs (0–1,023) for use in mapping volumes to hosts.

Dual-controller module configurations – directly attached

In the following figures, blue cables show controller module A paths, and green cables show controller module B paths for host connection:

Figure 20. Connecting hosts: ME4 Series 2U direct attach – one server, one HBA, dual path

1. Server 2. Controller module in slot A

3. Controller module in slot B


Figure 21. Connecting hosts: ME4 Series 5U direct attach – one server, one HBA, dual path

1. Server 2. Controller module in slot A

3. Controller module in slot B

Figure 22. Connecting hosts: ME4 Series 2U direct attach – two servers, one HBA per server, dual path

1. Server 1 2. Server 2

3. Controller module in slot A 4. Controller module in slot B

Figure 23. Connecting hosts: ME4 Series 5U direct attach – two servers, one HBA per server, dual path

1. Server 1 2. Server 2

3. Controller module in slot A 4. Controller module in slot B

Figure 24. Connecting hosts: ME4 Series 2U direct attach – four servers, one HBA per server, dual path

1. Server 1 2. Server 2

3. Server 3 4. Server 4

5. Controller module A 6. Controller module B


Figure 25. Connecting hosts: ME4 Series 5U direct attach – four servers, one HBA per server, dual path

1. Server 1 2. Server 2

3. Server 3 4. Server 4

5. Controller module A 6. Controller module B

Dual-controller module configurations – switch-attached

A switch-attached solution, or storage area network (SAN), places a switch between the servers and the controller enclosures within the storage system.

Using switches, a SAN shares a storage system among multiple servers, reducing the number of storage systems required for a particular environment. Using switches increases the number of servers that can be connected to the storage system.

NOTE: About switch-attached configurations:

• See the recommended switch-attached examples for host connection in the Setting Up Your Dell EMC PowerVault ME4 Series Storage System document that is provided with your controller enclosure.

• See Figure 18. Two subnet switch example (IPv4) for an example showing host port and controller port addressing on an IPv4 network.

Figure 26. Connecting hosts: ME4 Series 2U switch-attached – two servers, two switches

1. Server 1 2. Server 2

3. Switch A 4. Switch B

5. Controller module A 6. Controller module B


Figure 27. Connecting hosts: ME4 Series 5U switch-attached – two servers, two switches

1. Server 1 2. Server 2

3. Switch A 4. Switch B

5. Controller module A 6. Controller module B

Label the front-end cables

Make sure to label the front-end cables to identify the controller module and host interface port to which each cable connects.


5 Connect power cables and power on the storage system

Before powering on the enclosure system, ensure that all modules are firmly seated in their correct slots.

Verify that you have successfully completed the Installation checklist instructions. After you complete steps 1–7, you can access the management interfaces using your web browser to complete the system setup.

Topics:

• Power cable connection

Power cable connection

Connect a power cable from each PCM or PSU on the enclosure rear panel to the PDU (power distribution unit) as shown in the following figures:

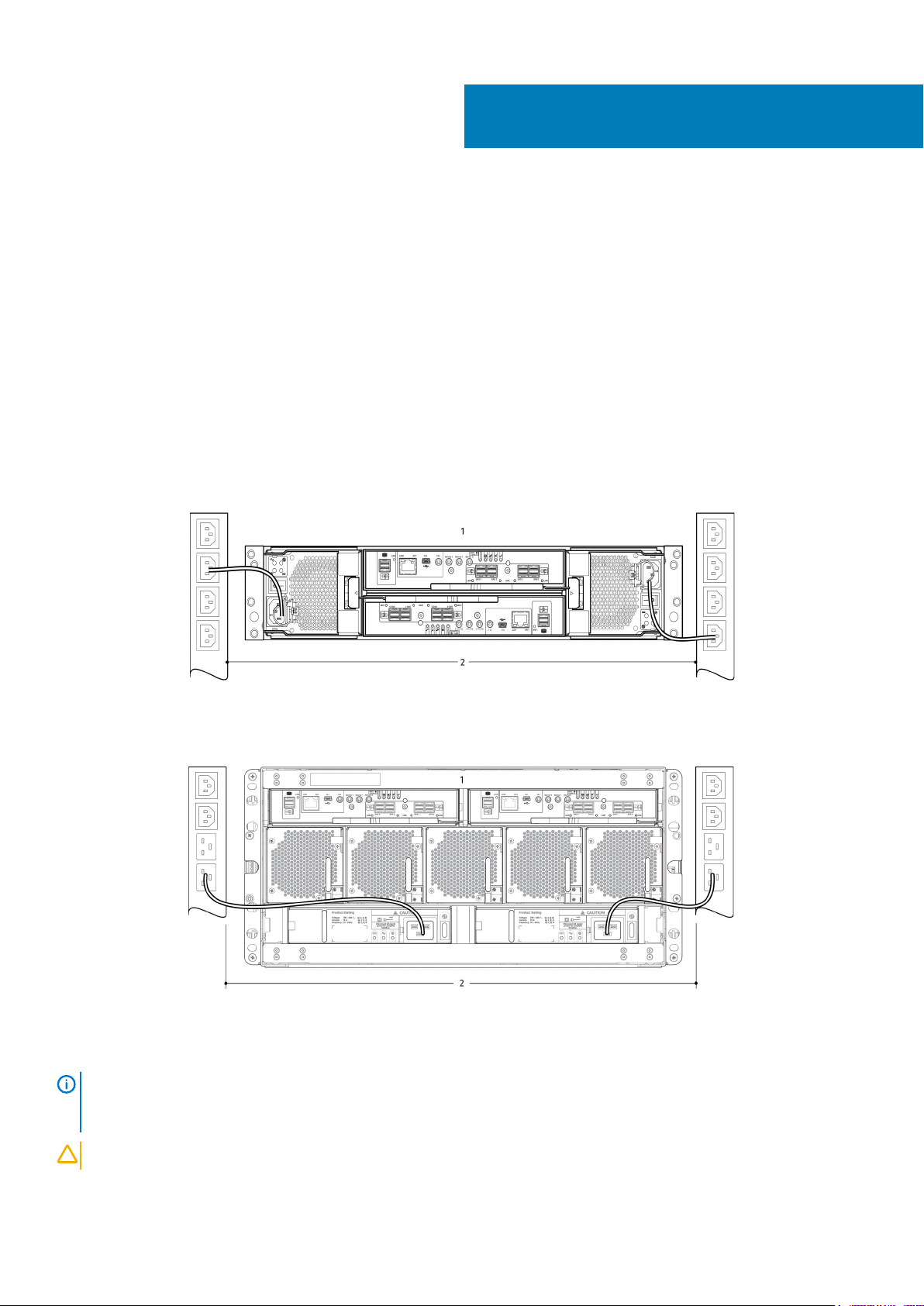

Figure 28. Typical AC power cable connection from PDU to PCM (2U)

1. Controller enclosure with redundant PCMs 2. Redundant PCM to PDU (AC UPS shown) connection

Figure 29. Typical AC power cable connection from PDU to PSU (5U)

1. Controller enclosure with redundant PSUs 2. Redundant PSU to PDU (AC UPS shown) connection

NOTE: The power cables must be connected to at least two separate and independent power supplies to ensure redundancy. When the storage system is ready for operation, ensure that each PCM or PSU power switch is set to the On position. (See also Powering on.)

CAUTION: Always remove the power connections before you remove the PCM (2U) or PSU (5U84) from the enclosure.
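As a pre-power-on check, the cabling NOTE above can be expressed as a small validation sketch. It assumes you record, for each PCM or PSU, which independent power supply its cord reaches and where its power switch is set. The `PowerUnit` type and labels such as "PCM 0" and "PDU-1" are hypothetical and are not names used by the enclosure or its management software.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PowerUnit:
    name: str        # hypothetical label, e.g. "PCM 0" (2U) or "PSU 1" (5U84)
    source: str      # independent power supply the cord is plugged into
    switch_on: bool  # position of the unit's power switch


def power_issues(units: List[PowerUnit]) -> List[str]:
    """Return problems with the cabling described in the NOTE; empty list means OK."""
    issues = []
    # Redundancy needs cords going to at least two separate, independent supplies.
    if len({u.source for u in units}) < 2:
        issues.append("cords do not reach two independent power supplies")
    # Every PCM/PSU switch must be On once the system is ready for operation.
    issues += [f"{u.name}: switch is not On" for u in units if not u.switch_on]
    return issues


ok = [PowerUnit("PCM 0", "PDU-1", True), PowerUnit("PCM 1", "PDU-2", True)]
bad = [PowerUnit("PCM 0", "PDU-1", True), PowerUnit("PCM 1", "PDU-1", False)]
print(power_issues(ok))   # []
print(power_issues(bad))  # ['cords do not reach two independent power supplies', 'PCM 1: switch is not On']
```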


Testing enclosure connections

See Powering on. Once the power-on sequence succeeds, the storage system is ready to be connected as described in Connecting the enclosure to hosts.

Grounding checks

The enclosure system must be connected to a power source that has a safety electrical grounding connection.

CAUTION: If more than one enclosure is installed in a rack, the grounding connection to the rack becomes more important because the rack has a larger Grounding Leakage Current (Touch Current). Examine the grounding connection to the rack before powering on. An electrical engineer who is qualified to the appropriate local and national standards must perform the examination.

Powering on

CAUTION: Do not operate the enclosure system until the ambient temperature is within the specified operating range that is described in the system specifications section of the Dell EMC PowerVault ME4 Series Storage System Owner’s Manual. If the drive modules have been recently installed, ensure that they have had time to adjust to the environmental conditions before they are used with production data for I/O.

• With 2U enclosures, power on the storage system by connecting the power cables from the PCMs to the PDU and moving the power switch on each PCM to the On position. See Figure 28. Typical AC power cable connection from PDU to PCM (2U).

The System Power LED on the 2U Ops panel should be lit green when the enclosure power is activated.

• With 5U84 enclosures, power on the storage system by connecting the power cables from the PSUs to the PDU and moving the power switch on each PSU to the On position. See Figure 29. Typical AC power cable connection from PDU to PSU (5U).

The Power on/Standby LED on the 5U84 Ops panel should be lit green when the enclosure power is activated.

• When powering up, power up the enclosures and associated data hosts in the following order:

• Drive enclosures first – Ensures that the disks in the drive enclosure have enough time to completely spin up before being scanned by the controller modules within the controller enclosure. The LEDs blink while the enclosures power up. After the LEDs stop blinking, if the LEDs on the front and back of the enclosure are not amber, the power-on sequence is complete and no faults have been detected.

• Controller enclosure next – Depending upon the number and type of disks in the system, it may take several minutes for the system to become ready.

• Data host last (if powered off for maintenance purposes).

When powering off, reverse the order of steps that are used for powering on.

NOTE: If main power is lost for any reason, the system automatically restarts when power is restored.
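The power-on order above (drive enclosures, then the controller enclosure, then data hosts) and its reversal for power-off can be sketched as a simple ordering function. This is a minimal illustration that assumes each device is described by a (name, kind) pair. The device names in the usage example are hypothetical.

```python
from typing import List, Tuple

# Power-on order from the list above: drive enclosures, controller enclosure, data hosts.
POWER_ON_ORDER = ("drive enclosure", "controller enclosure", "data host")


def power_on_sequence(devices: List[Tuple[str, str]]) -> List[str]:
    """devices: (name, kind) pairs; returns names in power-on order."""
    rank = {kind: i for i, kind in enumerate(POWER_ON_ORDER)}
    # sorted() is stable, so devices of the same kind keep their listed order.
    return [name for name, kind in sorted(devices, key=lambda d: rank[d[1]])]


def power_off_sequence(devices: List[Tuple[str, str]]) -> List[str]:
    """Powering off reverses the power-on order."""
    return list(reversed(power_on_sequence(devices)))


rack = [("host-1", "data host"), ("ME4 2U controller", "controller enclosure"),
        ("expansion-1", "drive enclosure")]
print(power_on_sequence(rack))   # ['expansion-1', 'ME4 2U controller', 'host-1']
print(power_off_sequence(rack))  # ['host-1', 'ME4 2U controller', 'expansion-1']
```

An unknown device kind raises `KeyError`. This is deliberate: anything outside the three documented categories should be placed in the sequence by hand.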

Enclosure Ops panels

• See 2U enclosure Ops panel for details pertaining to 2U Ops panel LEDs and related fault conditions.

• See 5U enclosure Ops panel for details pertaining to 5U84 Ops panel LEDs and related fault conditions.

Guidelines for powering enclosures on and off

• Remove the AC cord before inserting or removing a PCM (2U) or PSU (5U84).

• Move the PCM or PSU switch to the Off position before connecting or disconnecting the AC power cable.

• Allow 15 seconds between powering off and powering on the PCM or PSU.

• Allow 15 seconds between powering on one PSU or PCM in the system and powering off another PCM or PSU.

• Never power off a PCM or PSU while any amber LED is lit on the partner PCM or PSU.

• A 5U84 enclosure must be left in a powered-on state for 30 seconds following resumption from standby before the enclosure can be placed into standby again.

• Although the enclosure supports standby, the expansion module shuts off completely during standby and cannot receive a user command to power back on. An AC power cycle is the only method to return the 5U84 to full power from standby.
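The two 15-second rules in the guidelines above can be checked against a planned maintenance timeline with the sketch below. It assumes a hypothetical event format of (time in seconds, unit name, "on" or "off"). It covers only the 15-second rules. The amber-LED rule and the 5U84 30-second standby rule are not modeled.

```python
from typing import Dict, List, Tuple

MIN_GAP_S = 15  # seconds, from the guidelines above


def check_power_events(events: List[Tuple[float, str, str]]) -> List[str]:
    """events: (time_s, unit, action) with action "on" or "off"; returns rule violations."""
    violations = []
    last_off: Dict[str, float] = {}  # unit -> time it was last powered off
    last_on: Dict[str, float] = {}   # unit -> time it was last powered on
    for t, unit, action in sorted(events):
        if action == "on":
            # Rule: allow 15 s between powering off and powering on the same unit.
            if unit in last_off and t - last_off[unit] < MIN_GAP_S:
                violations.append(f"{unit} on {t - last_off[unit]:g}s after its power-off")
            last_on[unit] = t
        elif action == "off":
            # Rule: allow 15 s between powering on one unit and powering off another.
            for other, t_on in last_on.items():
                if other != unit and t - t_on < MIN_GAP_S:
                    violations.append(f"{unit} off {t - t_on:g}s after {other} on")
            last_off[unit] = t
        else:
            raise ValueError(f"unknown action {action!r}")
    return violations


print(check_power_events([(0, "PCM 0", "off"), (10, "PCM 0", "on")]))
# ['PCM 0 on 10s after its power-off']
```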


6 Perform system and storage setup

Record storage system information

Use the System Information Worksheet to record the information that you need to install the ME4 Series storage system.

Using guided setup

Upon completing the hardware installation, use ME Storage Manager (MESM) to configure, provision, monitor, and manage the storage system.

When first accessing the MESM, perform a firmware update before configuring your system. After the firmware update is complete, use the guided setup to verify the web browser requirements and then access the MESM.

Web browser requirements and setup

The MESM web interface requires Mozilla Firefox 57 or later, Google Chrome 57 or later, Microsoft Internet Explorer 10 or 11, or Apple Safari 10.1 or later.

NOTE:

• To see the help window, you must enable pop-up windows.

• To optimize the display, use a color monitor and set its color quality to the highest setting.

• Do not use the Back, Forward, Reload, or Refresh buttons in the browser. The MESM has a single page whose content changes as you perform tasks and automatically updates to show current data.

• To navigate past the Sign In page (with a valid user account):

• Verify that cookies are allowed for the IP address of each controller network port.

• For Internet Explorer, set the local-intranet security option on the browser to medium or medium-low.

• For Internet Explorer, add each network IP address for each controller as a trusted site.

• For HTTPS, ensure that Internet Explorer is set to use TLS 1.2.

You cannot view MESM help content if you are using the Microsoft Edge browser that ships with Windows 10.
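The browser requirements above reduce to a minimum-version table, which the sketch below encodes. It assumes dotted numeric version strings such as "57.0.2987.133". The browser-name keys are hypothetical normalizations. Any browser not listed, including Microsoft Edge, is treated as unsupported.

```python
from typing import Tuple

# Minimum versions from the requirements above; Internet Explorer is limited to 10 or 11.
MIN_VERSIONS = {"firefox": (57,), "chrome": (57,), "safari": (10, 1)}
IE_SUPPORTED = {10, 11}


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn "10.1" into (10, 1) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def browser_supported(name: str, version: str) -> bool:
    name = name.lower()
    v = parse_version(version)
    if name in ("ie", "internet explorer"):
        return v[0] in IE_SUPPORTED
    minimum = MIN_VERSIONS.get(name)
    if minimum is None:
        return False  # not in the documented list
    # Tuple comparison: (57, 0, 2987) >= (57,) is True; (10, 0) >= (10, 1) is False.
    return v >= minimum


print(browser_supported("Chrome", "57.0.2987.133"))  # True
print(browser_supported("Safari", "10.0"))           # False
```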

Access the ME Storage Manager

Do not turn on more than one unconfigured controller enclosure at a time to avoid IP conflicts.

1. Temporarily set the management host NIC to a 10.0.0.x address or to the same IPv6 subnet to enable communication with the storage system.

2. In a supported web browser:

• Type https://10.0.0.2 to access controller module A on an IPv4 network.

• Type https://fd6e:23ce:fed3:19d1::1 to access controller module A on an IPv6 network.

3. If the storage system is running G275 firmware, sign in to the ME Storage Manager using the user name manage and password !manage.

If the storage system is running G280 firmware:

a. Click Get Started.

b. Read the Commercial Terms of Sale and End User License Agreement, and click Accept.

c. Specify a new user name and password for the system, and click Apply and Continue.

The Welcome panel that is displayed provides options to set up and provision your system.

NOTE: If you are unable to use the 10.0.0.x network to configure the system, see Setting network port IP addresses using the CLI port and serial cable.
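Before step 2, you can sanity-check the temporary management NIC address with Python's standard `ipaddress` module. The sketch rests on assumptions the guide does not state: "10.0.0.x" is read as a /24 network, and the IPv6 subnet is taken to be a /64. Only the controller module A addresses come from the steps above. The helper also brackets IPv6 literals, because URL syntax (RFC 3986) requires an IPv6 host to be enclosed in square brackets.

```python
import ipaddress
from typing import Optional

# Controller module A addresses from step 2 above.
CONTROLLER_A = {
    4: ipaddress.ip_address("10.0.0.2"),
    6: ipaddress.ip_address("fd6e:23ce:fed3:19d1::1"),
}
# Assumed prefix lengths: "10.0.0.x" read as a /24, the IPv6 subnet as a /64.
PREFIX = {4: 24, 6: 64}


def check_management_nic(nic_address: str) -> Optional[str]:
    """Return a problem description, or None if the NIC address looks usable."""
    addr = ipaddress.ip_address(nic_address)
    target = CONTROLLER_A[addr.version]
    subnet = ipaddress.ip_network(f"{target}/{PREFIX[addr.version]}", strict=False)
    if addr not in subnet:
        return f"{addr} is not in {subnet}"
    if addr == target:
        return f"{addr} conflicts with controller module A"
    return None


def management_url(address: str) -> str:
    """Build the HTTPS URL; IPv6 literals must be bracketed in URLs."""
    addr = ipaddress.ip_address(address)
    host = f"[{addr}]" if addr.version == 6 else str(addr)
    return f"https://{host}"


print(check_management_nic("10.0.0.10"))  # None
print(management_url("fd6e:23ce:fed3:19d1::1"))  # https://[fd6e:23ce:fed3:19d1::1]
```

The check does not detect conflicts with other devices on the same network. That is why only one unconfigured controller enclosure should be powered on at a time.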


Update firmware

After powering on the storage system for the first time, verify that the controller modules, expansion modules, and disk drives are using the current firmware release.

NOTE: Expansion module firmware is updated automatically with controller module updates.

1. Using the ME Storage Manager, select Action > Update Firmware in the System topic.

The Update Firmware panel opens. The Update Controller Modules tab shows versions of firmware components that are installed in each controller module.

2. Locate firmware updates at www.dell.com/support. If newer versions of the firmware are available, download the bundle file or relevant firmware component files.

3. Click Browse, select the firmware bundle file or component file to install, and then click OK.

When the update is complete, the system restarts.

Use guided setup in the ME Storage Manager Welcome panel

The Welcome panel provides options for you to quickly set up your system by guiding you through the configuration and provisioning process.

With guided setup, you must first configure your system settings by accessing the System Settings panel and completing all required options. After these options are complete, you can provision your system by accessing the Storage Setup panel and the Host Setup panel and completing the wizards.
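The ordering rule of guided setup is simple: System Settings comes first, then the Storage Setup and Host Setup wizards. The sketch below captures it as a small state check. The `GuidedSetup` class is a hypothetical illustration and not an MESM API. The guide does not require any order between the two provisioning wizards, so the sketch does not enforce one.

```python
from typing import Set

SYSTEM_SETTINGS = "System Settings"
PROVISIONING_PANELS = {"Storage Setup", "Host Setup"}


class GuidedSetup:
    """Hypothetical model of the guided-setup ordering rule (not an MESM API)."""

    def __init__(self) -> None:
        self.completed: Set[str] = set()

    def complete(self, panel: str) -> None:
        # Provisioning wizards are only available after System Settings is done.
        if panel in PROVISIONING_PANELS and SYSTEM_SETTINGS not in self.completed:
            raise RuntimeError(f"complete {SYSTEM_SETTINGS} before {panel}")
        self.completed.add(panel)

    def done(self) -> bool:
        return {SYSTEM_SETTINGS, *PROVISIONING_PANELS} <= self.completed


setup = GuidedSetup()
setup.complete("System Settings")
setup.complete("Host Setup")
setup.complete("Storage Setup")
print(setup.done())  # True
```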