
Dell™ PowerEdge™ Systems

Oracle Database 10g Extended Memory 64 Technology (EM64T) Enterprise Edition

Linux Deployment Guide

Version 2.1.1

www.dell.com | support.dell.com


Notes and Notices

NOTE: A NOTE indicates important information that helps you make better use of your computer.

NOTICE: A NOTICE indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

____________________

Information in this document is subject to change without notice.

© 2006 Dell Inc. All rights reserved.

Reproduction in any manner whatsoever without the written permission of Dell Inc. is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, and PowerEdge are trademarks of Dell Inc.; EMC, PowerPath, and Navisphere are registered

trademarks of EMC Corporation; Intel and Xeon are registered trademarks of Intel Corporation; Red Hat is a registered trademark of Red Hat, Inc.

Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products.

Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own.

September 2006 Rev. A01

Contents

Oracle RAC 10g Deployment Service
Software and Hardware Requirements
License Agreements
Important Documentation
Before You Begin
Installing and Configuring Red Hat Enterprise Linux
Installing Red Hat Enterprise Linux Using the Deployment CDs
Configuring Red Hat Enterprise Linux
Updating Your System Packages Using Red Hat Network
Verifying Cluster Hardware and Software Configurations
Fibre Channel Cluster Setup
Cabling Your Storage System
Configuring Storage and Networking for Oracle RAC 10g
Configuring the Public and Private Networks
Verifying the Storage Configuration
Disable SELinux
Configuring Shared Storage for Oracle Clusterware and the Database Using OCFS2
Configuring Shared Storage for Oracle Clusterware and the Database Using ASM
Installing Oracle RAC 10g
Before You Begin
Installing Oracle Clusterware
Installing the Oracle Database 10g Software
RAC Post Deployment Fixes and Patches
Configuring the Listener
Creating the Seed Database Using OCFS2
Creating the Seed Database Using ASM
Securing Your System
Setting the Password for the User oracle
Configuring and Deploying Oracle Database 10g (Single Node)
Configuring the Public Network
Configuring Database Storage
Configuring Database Storage Using the Oracle ASM Library Driver
Installing Oracle Database 10g
Installing the Oracle Database 10g 10.2.0.2 Patchset
Configuring the Listener
Creating the Seed Database
Adding and Removing Nodes
Adding a New Node to the Network Layer
Configuring Shared Storage on the New Node
Adding a New Node to the Oracle Clusterware Layer
Adding a New Node to the Database Layer
Reconfiguring the Listener
Adding a New Node to the Database Instance Layer
Removing a Node From the Cluster
Reinstalling the Software
Additional Information
Supported Software Versions
Determining the Private Network Interface
Troubleshooting
Getting Help
Dell Support
Oracle Support
Obtaining and Using Open Source Files
Index

This document provides information about installing, configuring, reinstalling, and using

Oracle Database 10g Enterprise Edition with the Oracle Real Application Clusters (RAC) software on

your Dell|Oracle supported configuration. Use this document in conjunction with the Dell Deployment,

Red Hat Enterprise Linux, and Oracle RAC 10g software CDs to install your software.

NOTE: If you install your operating system using only the operating system CDs, the steps in this document may not

be applicable.

This document covers the following topics:

• Software and hardware requirements
• Installing and configuring Red Hat® Enterprise Linux
• Verifying cluster hardware and software configurations
• Configuring storage and networking for Oracle RAC
• Installing Oracle RAC
• Configuring and installing Oracle Database 10g (single node)
• Adding and removing nodes
• Reinstalling the software
• Additional information
• Troubleshooting
• Getting help
• Obtaining and using open source files

For more information on Dell supported configurations for Oracle, see the Dell|Oracle Tested and Validated Configurations website at www.dell.com/10g.

Oracle RAC 10g Deployment Service

If you purchased the Oracle RAC 10g Deployment Service, your Dell Professional Services representative

will assist you with the following:

• Verifying cluster hardware and software configurations

• Configuring storage and networking

• Installing Oracle RAC 10g Release 2

Software and Hardware Requirements

Before you install the Oracle RAC software on your system:

• Download the Red Hat CD images from the Red Hat website at rhn.redhat.com.
• Locate your Oracle CD kit.
• Download the Dell Deployment CD images that are appropriate for the solution being installed from the Dell|Oracle Tested and Validated Configurations website at www.dell.com/10g. Burn all these downloaded CD images to CDs.

Table 1-1 lists basic software requirements for Dell supported configurations for Oracle. Table 1-2 and Table 1-3 list the hardware requirements. For more information on the minimum software versions for drivers and applications, see "Supported Software Versions."

Table 1-1. Software Requirements

Software Component                              Configuration
Red Hat Enterprise Linux AS EM64T (Version 4)   Update 3
Oracle Database 10g                             Version 10.2
                                                • Enterprise Edition, including the RAC option for clusters
                                                • Enterprise Edition for single-node configuration
EMC® PowerPath®                                 Version 4.5.1

NOTE: Depending on the number of users, the applications you use, your batch processes, and other factors, you may need a system that exceeds the minimum hardware requirements in order to achieve desired performance.

NOTE: The hardware configuration of all the nodes must be identical.

Table 1-2. Minimum Hardware Requirements—Fibre Channel Cluster

Hardware Component                        Configuration
Dell™ PowerEdge™ system (two to eight     Intel® Xeon® processor family
nodes using Automatic Storage             1 GB of RAM with Oracle Cluster File System Version 2 (OCFS2)
Management [ASM])                         PowerEdge Expandable RAID Controller (PERC) for internal hard drives
                                          Two 73-GB hard drives (RAID 1) connected to PERC
                                          Three Gigabit network interface controller (NIC) ports
                                          Two optical host bus adapter (HBA) ports
Dell|EMC Fibre Channel storage system     See the Dell|Oracle Tested and Validated Configurations website at
                                          www.dell.com/10g for information on supported configurations
Gigabit Ethernet switch (two)             See the Dell|Oracle Tested and Validated Configurations website at
                                          www.dell.com/10g for information on supported configurations
Dell|EMC Fibre Channel switch (two)       Eight ports for two to six nodes
                                          16 ports for seven or eight nodes

Table 1-3. Minimum Hardware Requirements—Single Node

Hardware Component                        Configuration
PowerEdge system                          Intel Xeon processor family
                                          1 GB of RAM
                                          Two 73-GB hard drives (RAID 1) connected to PERC
                                          Two NIC ports
Dell|EMC Fibre Channel storage system     See the Dell|Oracle Tested and Validated Configurations website at
(optional)                                www.dell.com/10g for information on supported configurations
Dell|EMC Fibre Channel switch             Eight ports
(optional)

License Agreements

NOTE: Your Dell configuration includes a 30-day trial license of Oracle software. If you do not have a license for

this product, contact your Dell sales representative.

Important Documentation

For more information on specific hardware components, see the documentation included with

your system.

For Oracle product information, see the How to Get Started guide in the Oracle CD kit.

Before You Begin

Before you install the Red Hat Enterprise Linux operating system, download the Red Hat Enterprise

Linux Quarterly Update ISO images from the Red Hat Network website at rhn.redhat.com and burn

these images to CDs.

To download the ISO images, perform the following steps:

1. Navigate to the Red Hat Network website at rhn.redhat.com.
2. Click Channels.
3. In the left menu, click Easy ISOs.
4. In the Easy ISOs page left menu, click All.
   The ISO images for all Red Hat products appear.
5. In the Channel Name menu, click the appropriate ISO image for your Red Hat Enterprise Linux software.
6. Download the ISOs for your Red Hat Enterprise Linux software as listed in your Solution Deliverable List (SDL) from the Dell|Oracle Tested and Validated Configurations website at www.dell.com/10g.
7. Burn the ISO images to CDs.

Installing and Configuring Red Hat Enterprise Linux

NOTICE: To ensure that the operating system is installed correctly, disconnect all external storage devices from

the system before you install the operating system.

This section describes the installation of the Red Hat Enterprise Linux AS operating system and the

configuration of the operating system for Oracle Database deployment.

Installing Red Hat Enterprise Linux Using the Deployment CDs

1. Disconnect all external storage devices from the system.
2. Locate your Dell Deployment CD and the Red Hat Enterprise Linux AS EM64T CDs.
3. Insert the Dell Deployment CD 1 into the CD drive and reboot the system.
   The system boots to the Dell Deployment CD.
4. When the deployment menu appears, type 1 to select Oracle 10g R2 EE on Red Hat Enterprise Linux 4 U3 (x86_64).
5. When another menu asking for the deployment image source appears, type 1 to select Copy solution by Deployment CD.
   NOTE: This procedure may take several minutes to complete.
6. When prompted, insert Dell Deployment CD 2 and each Red Hat installation CD into the CD drive.
   A deployment partition is created and the contents of the CDs are copied to it. When the copy operation is completed, the system automatically ejects the last CD and boots to the deployment partition.
   When the installation is completed, the system automatically reboots and the Red Hat Setup Agent appears.
7. In the Red Hat Setup Agent Welcome window, click Next to configure your operating system settings.
   Do not create any operating system users at this time.
8. When prompted, specify a root password.

9. When the Network Setup window appears, click Next. You will configure network settings later.
10. When the Security Level window appears, disable the firewall. You may enable the firewall after completing the Oracle deployment.
11. Log in as root.

Configuring Red Hat Enterprise Linux

1. Log in as root.
2. Insert the Dell Deployment CD 2 into the CD drive and type the following commands:
    mount /dev/cdrom
    /media/cdrom/install.sh
   The contents of the CD are copied to the /usr/lib/dell/dell-deploy-cd directory. When the copy procedure is completed, type umount /dev/cdrom and remove the CD from the CD drive.
3. Type cd /dell-oracle-deployment/scripts/standard to navigate to the directory containing the scripts installed from the Dell Deployment CD.
   NOTE: Scripts discover and validate installed component versions and, when required, update components to supported levels.
4. Type ./005-oraclesetup.py to configure the Red Hat Enterprise Linux for Oracle installation.
5. Type source /root/.bash_profile to start the environment variables.
6. Type ./010-hwCheck.py to verify that the CPU, RAM, and disk sizes meet the minimum Oracle Database installation requirements.
   If the script reports that a parameter failed, update your hardware configuration and run the script again (see Table 1-2 and Table 1-3 for updating your hardware configuration).
7. Connect the external storage device.
8. Reload the HBA driver(s) using rmmod and modprobe commands. For instance, for Emulex HBAs, reload the lpfc driver by issuing
    rmmod lpfc
    modprobe lpfc
   For QLA HBAs, identify the drivers that are loaded (lsmod | grep qla), and reload these drivers.
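The driver check in the last step can be sketched as a small filter over lsmod-style output. This is only an illustration: the function name list_hba_modules and the sample module list are assumptions, not part of the Dell scripts, and the sketch only lists candidate modules without unloading anything.

```shell
#!/bin/sh
# Sketch: pick Fibre Channel HBA modules out of lsmod-style text on stdin.
# list_hba_modules is a hypothetical helper name used for illustration.
list_hba_modules() {
    # Line 1 is the lsmod header; column 1 is the module name.
    awk 'NR > 1 && ($1 == "lpfc" || $1 ~ /^qla/) { print $1 }'
}

# Hypothetical lsmod output used as sample input.
sample='Module                  Size  Used by
qla2300               130304  0
qla2xxx               178849  1 qla2300
e1000                 119381  0'

printf '%s\n' "$sample" | list_hba_modules
```

On a live node the same function could be fed with `lsmod | list_hba_modules`. Because modules can depend on one another, the order in which they are removed and reloaded still needs to be checked by hand.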

Updating Your System Packages Using Red Hat Network

Red Hat periodically releases software updates to fix bugs, address security issues, and add new features.

You can download these updates through the Red Hat Network (RHN) service. See the Dell|Oracle

Tested and Validated Configurations website at www.dell.com/10g for the latest supported

configurations before you use RHN to update your system software to the latest revisions.

NOTE: If you are deploying Oracle Database on a single node, skip the following sections and see "Configuring and

Deploying Oracle Database 10g (Single Node)."


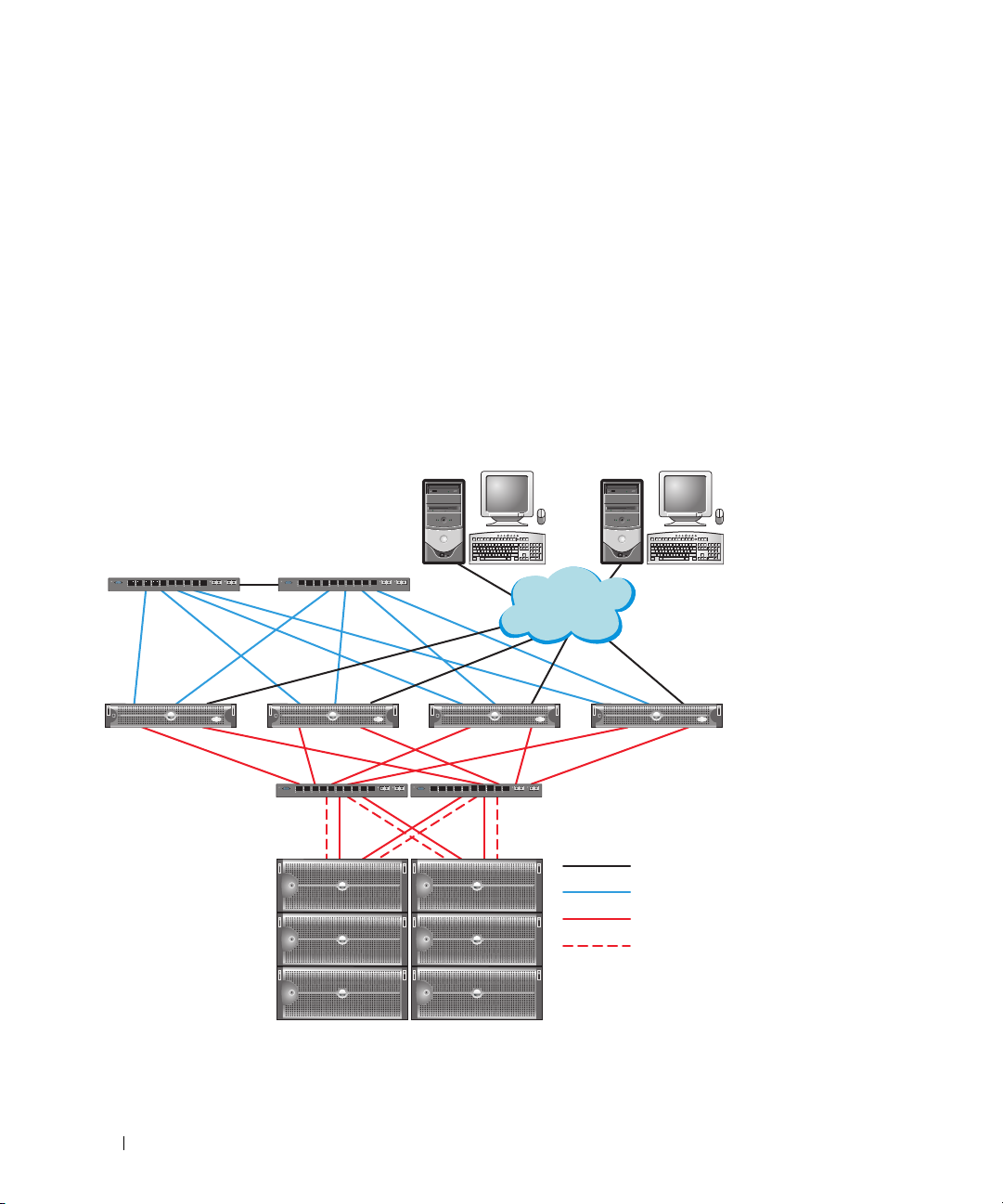

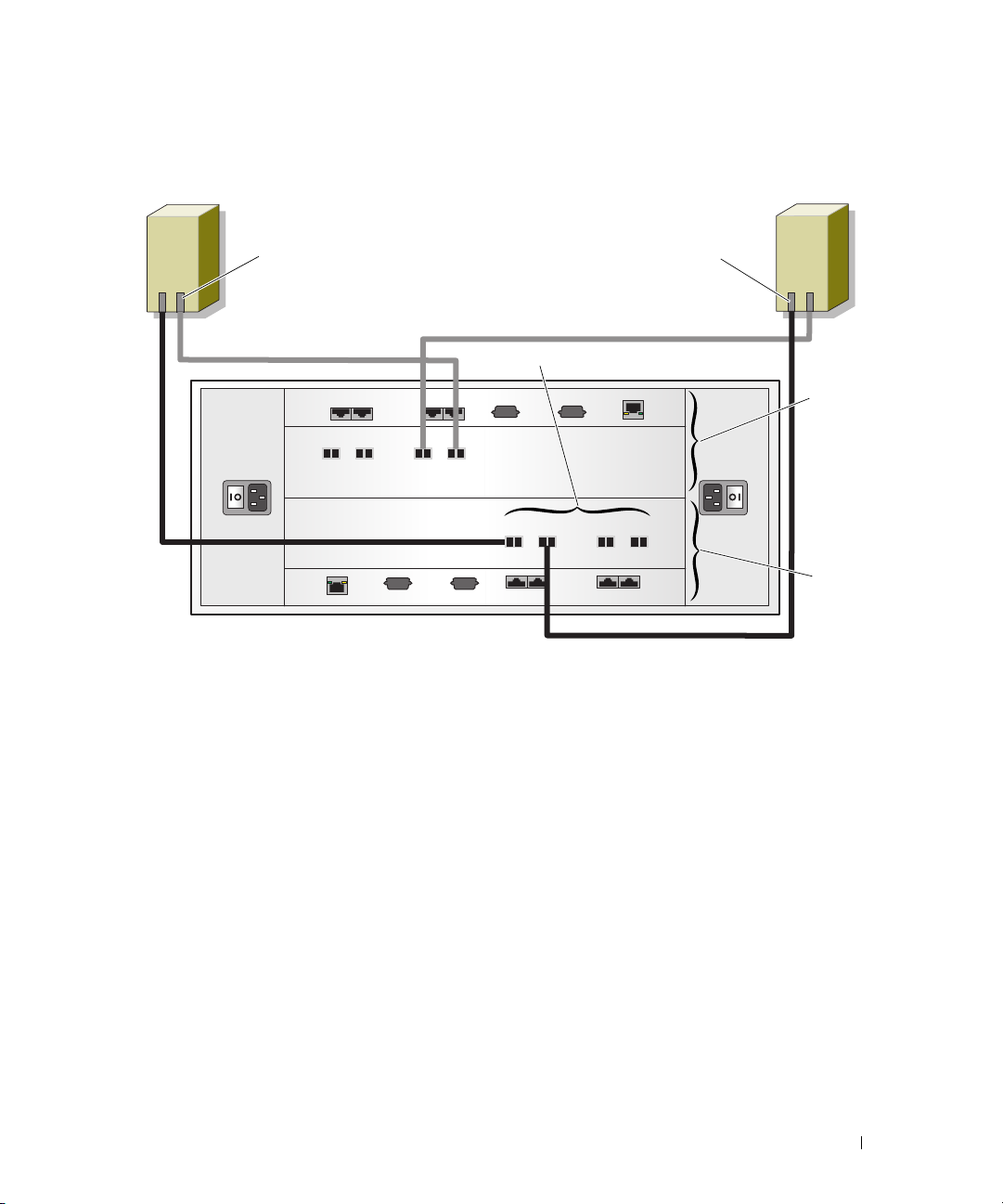

Verifying Cluster Hardware and Software Configurations

[Figure 1-1 diagram labels: client systems; LAN/WAN; PowerEdge systems (Oracle Database); Gb Ethernet switches (private network); Dell|EMC Fibre Channel switches (SAN); Dell|EMC Fibre Channel storage systems. Cable legend: CAT 5e/6 (public NIC); CAT 5e/6 (copper gigabit NIC); fiber optic cables; additional fiber optic cables.]

Before you begin cluster setup, verify the hardware installation, communication interconnections, and

node software configuration for the entire cluster. The following sections provide setup information for

hardware and software Fibre Channel cluster configurations.

Fibre Channel Cluster Setup

Your Dell Professional Services representative completed the setup of your Fibre Channel cluster. Verify

the hardware connections and the hardware and software configurations as described in this section.

Figure 1-1 and Figure 1-3 show an overview of the connections required for the cluster, and Table 1-4

summarizes the cluster connections.

Figure 1-1. Hardware Connections for a Fibre Channel Cluster


Table 1-4. Fibre Channel Hardware Interconnections

Cluster Component                 Connections
Each PowerEdge system node        One Category 5 enhanced (CAT 5e) or CAT 6 cable from public NIC to local area network (LAN)
                                  One CAT 5e or CAT 6 cable from private Gigabit NIC to Gigabit Ethernet switch
                                  One CAT 5e or CAT 6 cable from a redundant private Gigabit NIC to a redundant Gigabit Ethernet switch
                                  One fiber optic cable from optical HBA 0 to Fibre Channel switch 0
                                  One fiber optic cable from HBA 1 to Fibre Channel switch 1
Each Dell|EMC Fibre Channel       Two CAT 5e or CAT 6 cables connected to the LAN
storage system                    One to four fiber optic cable connections to each Fibre Channel switch; for example, for a four-port configuration:
                                  • One fiber optic cable from SPA port 0 to Fibre Channel switch 0
                                  • One fiber optic cable from SPA port 1 to Fibre Channel switch 1
                                  • One fiber optic cable from SPB port 0 to Fibre Channel switch 1
                                  • One fiber optic cable from SPB port 1 to Fibre Channel switch 0
Each Dell|EMC Fibre Channel       One to four fiber optic cable connections to the Dell|EMC Fibre Channel storage system
switch                            One fiber optic cable connection to each PowerEdge system’s HBA
Each Gigabit Ethernet switch      One CAT 5e or CAT 6 connection to the private Gigabit NIC on each PowerEdge system
                                  One CAT 5e or CAT 6 connection to the remaining Gigabit Ethernet switch

Verify that the following tasks are completed for your cluster:

• All hardware is installed in the rack.

• All hardware interconnections are set up as shown in Figure 1-1 and Figure 1-3, and listed in Table 1-4.

• All logical unit numbers (LUNs), redundant array of independent disk (RAID) groups, and storage

groups are created on the Dell|EMC Fibre Channel storage system.

• Storage groups are assigned to the nodes in the cluster.

Before continuing with the following sections, visually inspect all hardware and interconnections for

correct installation.


Fibre Channel Hardware and Software Configurations

• Each node must include the minimum hardware peripheral components as described in Table 1-2.

• Each node must have the following software installed:

– Red Hat Enterprise Linux software (see Table 1-1)

– Fibre Channel HBA driver

• The Fibre Channel storage system must be configured with the following:

– A minimum of three LUNs created and assigned to the cluster storage group (see Table 1-5)

– A minimum LUN size of 5 GB

Table 1-5. LUNs for the Cluster Storage Group

LUN          Minimum Size                                Number of Partitions    Used For
First LUN    512 MB                                      Three of 128 MB each    Voting disk, Oracle Cluster Registry (OCR), and storage processor (SP) file
Second LUN   Larger than the size of your database       One                     Database
Third LUN    Minimum twice the size of your second LUN   One                     Flash Recovery Area
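As a rough illustration of the sizing rules in Table 1-5, the arithmetic can be written down as a short shell function. The function name min_lun_sizes and the 1 GB margin used for "larger than the database" are assumptions made for this sketch, not Dell recommendations.

```shell
#!/bin/sh
# Sketch: derive minimum LUN sizes from a database size given in GB.
# min_lun_sizes is a hypothetical helper; the +1 GB margin is arbitrary.
min_lun_sizes() {
    db_gb=$1
    second_gb=$((db_gb + 1))       # second LUN: larger than the database
    third_gb=$((second_gb * 2))    # third LUN: at least twice the second LUN
    echo "first_lun_mb=512"
    echo "second_lun_gb=$second_gb"
    echo "third_lun_gb=$third_gb"
}

min_lun_sizes 100
```

For a 100 GB database this prints 512 MB, 101 GB, and 202 GB for the first, second, and third LUN.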

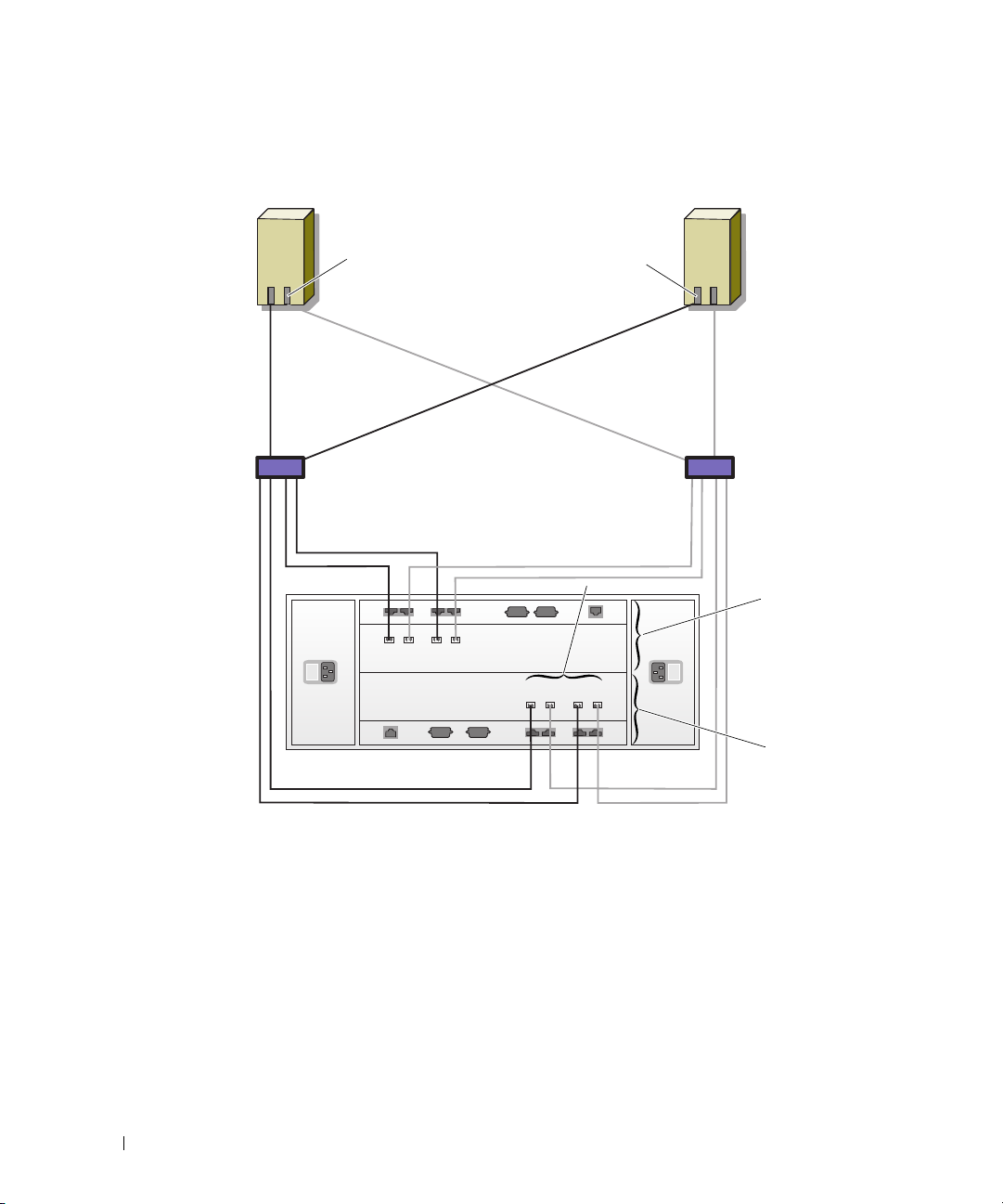

Cabling Your Storage System

You can configure your Oracle cluster storage system in a direct-attached configuration or a four-port

SAN-attached configuration, depending on your needs. See the following procedures for both

configurations.


Figure 1-2. Cabling in a Direct-Attached Fibre Channel Cluster

[Figure labels: node 1 and node 2, each with two HBA ports (0 and 1); CX700 storage system with SP-A and SP-B, each with SP ports 0 through 3.]

Direct-Attached Configuration

To configure your nodes in a direct-attached configuration (see Figure 1-2), perform the following steps:

1. Connect one optical cable from HBA0 on node 1 to port 0 of SP-A.
2. Connect one optical cable from HBA1 on node 1 to port 0 of SP-B.
3. Connect one optical cable from HBA0 on node 2 to port 1 of SP-A.
4. Connect one optical cable from HBA1 on node 2 to port 1 of SP-B.

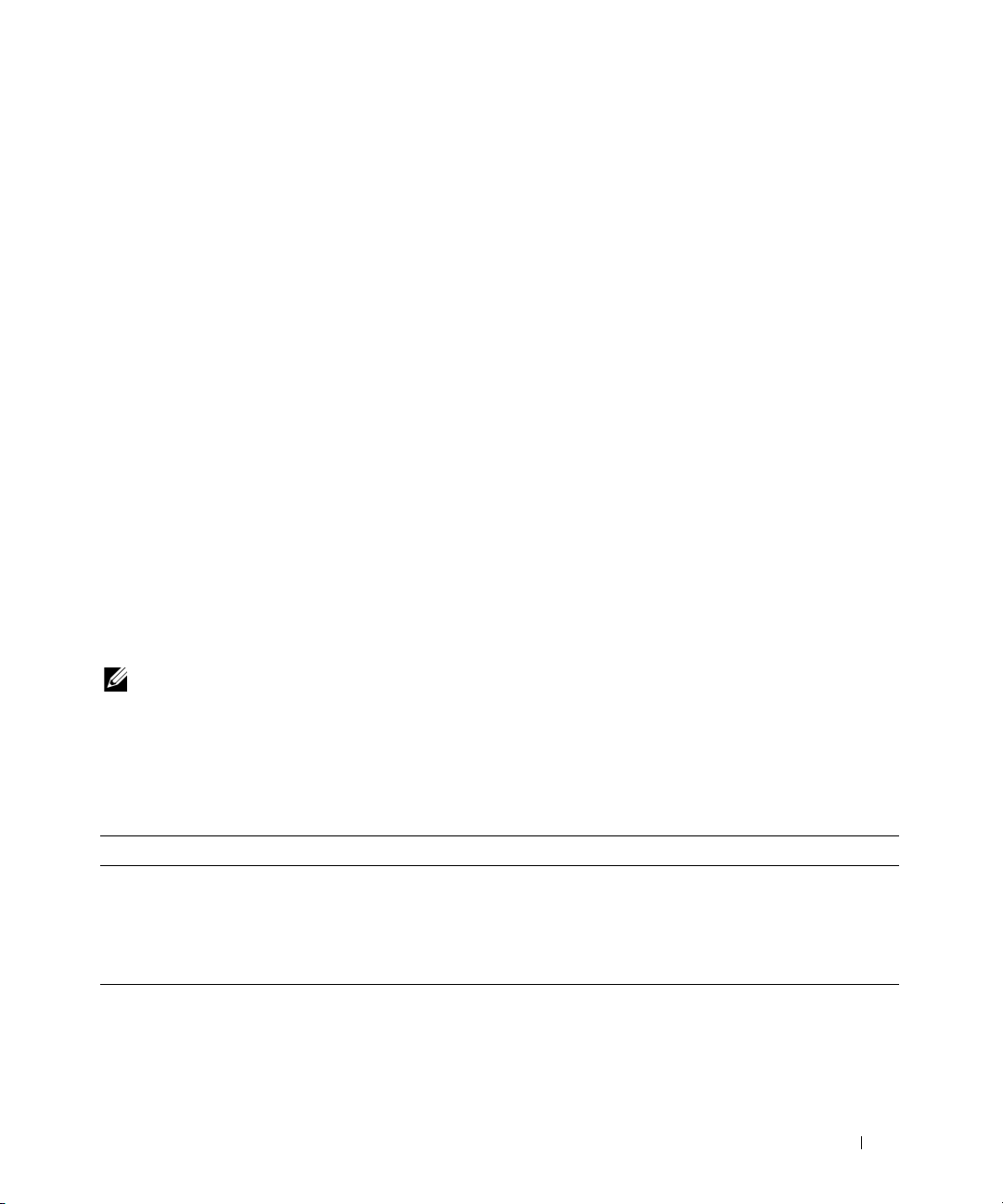

Figure 1-3. Cabling in a SAN-Attached Fibre Channel Cluster

[Figure labels: node 1 and node 2, each with two HBA ports (0 and 1); Fibre Channel switches sw0 and sw1; CX700 storage system with SP-A and SP-B, each with SP ports 0 through 3.]

SAN-Attached Configuration

To configure your nodes in a four-port SAN-attached configuration (see Figure 1-3), perform the

following steps:

1. Connect one optical cable from SP-A port 0 to Fibre Channel switch 0.
2. Connect one optical cable from SP-A port 1 to Fibre Channel switch 1.
3. Connect one optical cable from SP-A port 2 to Fibre Channel switch 0.
4. Connect one optical cable from SP-A port 3 to Fibre Channel switch 1.
5. Connect one optical cable from SP-B port 0 to Fibre Channel switch 1.
6. Connect one optical cable from SP-B port 1 to Fibre Channel switch 0.
7. Connect one optical cable from SP-B port 2 to Fibre Channel switch 1.
8. Connect one optical cable from SP-B port 3 to Fibre Channel switch 0.
9. Connect one optical cable from HBA0 on node 1 to Fibre Channel switch 0.
10. Connect one optical cable from HBA1 on node 1 to Fibre Channel switch 1.
11. Connect one optical cable from HBA0 on node 2 to Fibre Channel switch 0.
12. Connect one optical cable from HBA1 on node 2 to Fibre Channel switch 1.
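The storage processor cabling in the steps above follows a regular pattern: SP-A ports alternate between switch 0 and switch 1 starting at switch 0, and SP-B ports alternate starting at switch 1. The sketch below encodes that observation so the plan can be printed as a checklist; the function name switch_for is an assumption used only for illustration.

```shell
#!/bin/sh
# Sketch: map a storage processor port to its Fibre Channel switch,
# following the four-port SAN-attached cabling steps.
# switch_for is a hypothetical helper name.
switch_for() {
    sp=$1
    port=$2
    case "$sp" in
        A) echo $(( port % 2 )) ;;          # SP-A: 0->sw0, 1->sw1, 2->sw0, 3->sw1
        B) echo $(( (port + 1) % 2 )) ;;    # SP-B: 0->sw1, 1->sw0, 2->sw1, 3->sw0
        *) echo "unknown SP: $sp" >&2; return 1 ;;
    esac
}

for sp in A B; do
    for port in 0 1 2 3; do
        echo "SP-$sp port $port -> Fibre Channel switch $(switch_for "$sp" "$port")"
    done
done
```

Crossing the two storage processors over the two switches in this way means that each switch reaches both SP-A and SP-B.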

Configuring Storage and Networking for Oracle RAC 10g

This section provides information and procedures for setting up a Fibre Channel cluster running a

seed database:

• Configuring the public and private networks

• Securing your system

• Verifying the storage configuration

• Configuring shared storage for Cluster Ready Services (CRS) and Oracle Database

Oracle RAC 10g is a complex database configuration that requires an ordered list of procedures.

To configure networks and storage in a minimal amount of time, perform the following procedures in order.

Configuring the Public and Private Networks

This section presents steps to configure the public and private cluster networks.

NOTE: Each node requires a unique public and private internet protocol (IP) address and an additional public IP address to serve as the virtual IP address for the client connections and connection failover. The virtual IP address must belong to the same subnet as the public IP. All public IP addresses, including the virtual IP address, should be registered with the Domain Name System (DNS) and be routable.

Depending on the number of NIC ports available, configure the interfaces as shown in Table 1-6.

Table 1-6. NIC Port Assignments

NIC Port   Three Ports Available       Four Ports Available
1          Public IP and virtual IP    Public IP
2          Private IP (bonded)         Private IP (bonded)
3          Private IP (bonded)         Private IP (bonded)
4          NA                          Virtual IP

Configuring the Public Network

NOTE: Ensure that your public IP address is a valid, routable IP address.

If you have not already configured the public network, do so by performing the following steps on

each node:

1. Log in as root.
2. Edit the network device file /etc/sysconfig/network-scripts/ifcfg-eth#, where # is the number of the network device, and configure the file as follows:
    DEVICE=eth0
    ONBOOT=yes
    IPADDR=<Public IP Address>
    NETMASK=<Subnet mask>
    BOOTPROTO=static
    HWADDR=<MAC Address>
    SLAVE=no
3. Edit the /etc/sysconfig/network file, and, if necessary, replace localhost.localdomain with the fully qualified public node name.
   For example, the line for node 1 would be as follows:
    HOSTNAME=node1.domain.com
4. Type:
    service network restart
5. Type ifconfig to verify that the IP addresses are set correctly.
6. To check your network configuration, ping each public IP address from a client on the LAN outside the cluster.
7. Connect to each node to verify that the public network is functioning and type ssh <public IP> to verify that the secure shell (ssh) command is working.

Configuring the Private Network Using Bonding

Before you deploy the cluster, configure the private cluster network to allow the nodes to communicate

with each other. This involves configuring network bonding and assigning a private IP address and

hostname to each node in the cluster.

To set up network bonding for Broadcom or Intel NICs and configure the private network, perform the

following steps on each node:

1. Log in as root.
2. Add the following line to the /etc/modprobe.conf file:
    alias bond0 bonding
3. For high availability, edit the /etc/modprobe.conf file and set the option for link monitoring.
   The default value for miimon is 0, which disables link monitoring. Change the value to 100 milliseconds initially, and adjust it as needed to improve performance as shown in the following example. Type:
    options bonding miimon=100 mode=1
4. In the /etc/sysconfig/network-scripts/ directory, create or edit the ifcfg-bond0 configuration file.
   For example, using sample network parameters, the file would appear as follows:

DEVICE=bond0

IPADDR=192.168.0.1

NETMASK=255.255.255.0

NETWORK=192.168.0.0

BROADCAST=192.168.0.255

ONBOOT=yes

BOOTPROTO=none

USERCTL=no

   The entries for NETMASK, NETWORK, and BROADCAST are optional.
   DEVICE=bondn is the required name for the bond, where n specifies the bond number.
   IPADDR is the private IP address.
   To use bond0 as a virtual device, you must specify which devices will be bonded as slaves.
5. For each device that is a bond member, perform the following steps:
   a. In the directory /etc/sysconfig/network-scripts/, edit the ifcfg-ethn file, containing the following lines:

DEVICE=ethn

HWADDR=<MAC ADDRESS>

ONBOOT=yes

TYPE=Ethernet

USERCTL=no

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

   b. Type service network restart and ignore any warnings.
6. On each node, type ifconfig to verify that the private interface is functioning.
   The private IP address for the node should be assigned to the private interface bond0.
7. When the private IP addresses are set up on every node, ping each IP address from one node to ensure that the private network is functioning.
8. Connect to each node and verify that the private network and ssh are functioning correctly by typing:
    ssh <private IP>
9. On each node, modify the /etc/hosts file by adding the following lines:

127.0.0.1 localhost.localdomain localhost

<private IP node1> <private hostname node1>

<private IP node2> <private hostname node2>

<public IP node1> <public hostname node1>

<public IP node2> <public hostname node2>

<virtual IP node1> <virtual hostname node1>

<virtual IP node2> <virtual hostname node2>

NOTE: The examples in this and the following step are for a two-node configuration; add lines for each

additional node.

10. On each node, create or modify the /etc/hosts.equiv file by listing all of your public IP addresses or host names. For example, if you have one public hostname, one virtual IP address, and one virtual hostname for each node, add the following lines:

<public hostname node1> oracle

<public hostname node2> oracle

<virtual IP or hostname node1> oracle

<virtual IP or hostname node2> oracle

11. Log in as oracle, connect to each node to verify that the remote shell (rsh) command is working by typing:
    rsh <public hostname nodex>
    where x is the node number.
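The bonding files from the private-network procedure can be generated into a staging directory for review before they are copied into /etc/sysconfig/network-scripts/. The following is a minimal sketch under stated assumptions: the function name write_bond_configs, the staging directory, and the choice of eth1 and eth2 as bond members are illustrative, and the HWADDR line is left out because it needs each NIC's real MAC address.

```shell
#!/bin/sh
# Sketch: write ifcfg-bond0 and one ifcfg-ethN file per bond member
# into a staging directory. write_bond_configs is a hypothetical helper.
# Usage: write_bond_configs OUT_DIR PRIVATE_IP NETMASK DEVICE...
write_bond_configs() {
    out_dir=$1
    ip=$2
    mask=$3
    shift 3
    mkdir -p "$out_dir"
    # Bond master file; the optional NETWORK and BROADCAST entries are omitted.
    cat > "$out_dir/ifcfg-bond0" <<EOF
DEVICE=bond0
IPADDR=$ip
NETMASK=$mask
ONBOOT=yes
BOOTPROTO=none
USERCTL=no
EOF
    # One file per bond member; add HWADDR=<MAC ADDRESS> by hand.
    for dev in "$@"; do
        cat > "$out_dir/ifcfg-$dev" <<EOF
DEVICE=$dev
ONBOOT=yes
TYPE=Ethernet
USERCTL=no
MASTER=bond0
SLAVE=yes
BOOTPROTO=none
EOF
    done
}

staging=$(mktemp -d)
write_bond_configs "$staging" 192.168.0.1 255.255.255.0 eth1 eth2
ls "$staging"
```

Writing to a staging directory keeps the sketch harmless on a running node; the generated files can be compared with the existing ones before anything is replaced.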

Verifying the Storage Configuration

While configuring the clusters, create partitions on your Fibre Channel storage system. In order to create

the partitions, all the nodes must be able to detect the external storage devices. To verify that each node

can detect each storage LUN or logical disk, perform the following steps:

1. For Dell|EMC Fibre Channel storage system, verify that the EMC Navisphere® agent and the correct version of PowerPath (see Table 1-7) are installed on each node, and that each node is assigned to the correct storage group in your EMC Navisphere software. See the documentation that came with your Dell|EMC Fibre Channel storage system for instructions.
   NOTE: The Dell Professional Services representative who installed your cluster performed this step. If you reinstall the software on a node, you must perform this step.
2. Visually verify that the storage devices and the nodes are connected correctly to the Fibre Channel switch (see Figure 1-1 and Table 1-4).
3. Verify that you are logged in as root.
4. On each node, type:
    more /proc/partitions
   The node detects and displays the LUNs or logical disks, as well as the partitions created on those external devices. PowerPath pseudo devices appear in the list, such as /dev/emcpowera, /dev/emcpowerb, and /dev/emcpowerc.
   NOTE: The listed devices vary depending on how your storage system is configured.
5. In the /proc/partitions file, ensure that:
   • All PowerPath pseudo devices appear in the file with similar device names across all nodes. For example, /dev/emcpowera, /dev/emcpowerb, and /dev/emcpowerc.
   • The Fibre Channel LUNs appear as SCSI devices, and each node is configured with the same number of LUNs.
     For example, if the node is configured with a SCSI drive or RAID container attached to a Fibre Channel storage device with three logical disks, sda identifies the node’s RAID container or internal drive, and emcpowera, emcpowerb, and emcpowerc identify the LUNs (or PowerPath pseudo devices).

If the external storage devices do not appear in the /proc/partitions file, reboot the node.
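The cross-node comparison described above can be sketched as a filter over saved copies of each node's /proc/partitions. The function name list_emcpower is an assumption, and the sample listing, including its major and minor numbers and block counts, is made up for illustration.

```shell
#!/bin/sh
# Sketch: list PowerPath pseudo devices (whole devices, not partitions)
# found in a saved copy of /proc/partitions.
# list_emcpower is a hypothetical helper name.
list_emcpower() {
    # Column 4 is the device name; partitions such as emcpowera1 are skipped.
    awk '$4 ~ /^emcpower[a-z]+$/ { print $4 }' "$1" | sort
}

# Hypothetical listings standing in for two nodes.
node1=$(mktemp)
node2=$(mktemp)
cat > "$node1" <<'EOF'
major minor  #blocks  name

   8     0   71041024 sda
   8     1     104391 sda1
 120     0     524288 emcpowera
 120    16  104857600 emcpowerb
 120    32  209715200 emcpowerc
EOF
cp "$node1" "$node2"

if [ "$(list_emcpower "$node1")" = "$(list_emcpower "$node2")" ]; then
    echo "PowerPath pseudo devices match on both nodes"
else
    echo "PowerPath pseudo devices differ between nodes"
fi
```

In practice each file would be captured on its own node, for example by redirecting the output of more /proc/partitions to a file and copying it to one place for comparison.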


Disable SELinux

To run the Oracle database, you must disable SELinux.

To temporarily disable SELinux, perform the following steps:

1. Log in as root.
2. At the command prompt, type:
    setenforce 0

To permanently disable SELinux, perform the following steps on all the nodes:

1. Open your grub.conf file.
2. Locate the kernel command line and append the following option:
    selinux=0
   For example:
    kernel /vmlinuz-2.6.9-34.ELlargesmp ro root=LABEL=/ apic rhgb quiet selinux=0
3. Reboot your system.
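After editing grub.conf, it can help to confirm that no kernel line was missed. The sketch below checks a copy of the file; the function name kernel_lines_missing_selinux is an assumption, and the second boot entry in the sample is invented so that the check has something to report.

```shell
#!/bin/sh
# Sketch: print kernel lines in a grub.conf-style file that lack selinux=0.
# kernel_lines_missing_selinux is a hypothetical helper name.
kernel_lines_missing_selinux() {
    grep -E '^[[:space:]]*kernel[[:space:]]' "$1" | grep -v -w 'selinux=0'
}

# Sample file: the first entry follows the guide, the second is hypothetical.
conf=$(mktemp)
cat > "$conf" <<'EOF'
title Red Hat Enterprise Linux AS (2.6.9-34.ELlargesmp)
        root (hd0,0)
        kernel /vmlinuz-2.6.9-34.ELlargesmp ro root=LABEL=/ apic rhgb quiet selinux=0
title Red Hat Enterprise Linux AS (2.6.9-34.ELsmp)
        root (hd0,0)
        kernel /vmlinuz-2.6.9-34.ELsmp ro root=LABEL=/ rhgb quiet
EOF

kernel_lines_missing_selinux "$conf"
```

An empty result means every kernel line already carries the option. Here the second entry is reported because it was left unchanged.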

Configuring Shared Storage for Oracle Clusterware and the Database Using OCFS2

Before you begin using OCFS2:

• Download the RPMs from http://oss.oracle.com/projects/ocfs2/files/RedHat/RHEL4/x86_64/1.2.3-1.
• Find your kernel version by typing:
    uname -r
  and then download the OCFS2 packages for the kernel version.
• Download the ocfs2-tools packages from http://oss.oracle.com/projects/ocfs2-tools/files/RedHat/RHEL4/x86_64/1.2.1-1.
• Install all the ocfs2 and ocfs2-tools packages by typing:
    rpm -ivh *

To configure storage using OCFS2:

1. On the first node, log in as root.
2. Perform the following steps:
   a. Start the X Window System by typing:
       startx
   b. Generate the OCFS2 configuration file (/etc/ocfs2/cluster.conf) with a default cluster name of ocfs2 by typing the following in a terminal:
       ocfs2console
   c. From the menu, click Cluster→ Configure Nodes.
      If the cluster is offline, the console will start it. A message window appears displaying that information. Close the message window.
      The Node Configuration window appears.
   d. To add nodes to the cluster, click Add. Enter the node name (same as the host name) and the private IP. Retain the default value of the port number. After entering all the details, click OK.
      Repeat this step to add all the nodes to the cluster.
   e. When all the nodes are added, click Apply and then click Close in the Node Configuration window.
   f. From the menu, click Cluster→ Propagate Configuration.
      The Propagate Cluster Configuration window appears. Wait until the message Finished appears on the window and then click Close.
   g. Select File→ Quit.
3. On all the nodes, enable the cluster stack on startup by typing:
    /etc/init.d/o2cb enable
4. Change the O2CB_HEARTBEAT_THRESHOLD value on all the nodes using the following steps:
   a. Stop the O2CB service on all the nodes by typing:
       /etc/init.d/o2cb stop
   b. Edit the O2CB_HEARTBEAT_THRESHOLD value in /etc/sysconfig/o2cb to 61 on all the nodes.
   c. Start the O2CB service on all the nodes by typing:
       /etc/init.d/o2cb start

5 On the first node, for a Fibre Channel cluster, create one partition on each of the other two external storage devices with fdisk:

a Create a primary partition for the entire device by typing:

fdisk /dev/emcpowerx

Type h for help within the fdisk utility.

b Verify that the new partition exists by typing:

cat /proc/partitions

c If you do not observe the new partition, type:

sfdisk -R /dev/<device name>

NOTE: The following steps use the sample values /u01, /u02, and /u03 for mount points and u01, u02, and u03 as labels.

6 On any one node, format the external storage devices with 4 K block size, 128 K cluster size, and 4 node slots (node slots refer to the number of cluster nodes) using the command line utility mkfs.ocfs2 as follows:

mkfs.ocfs2 -b 4K -C 128K -N 4 -L u01 /dev/emcpowera1

mkfs.ocfs2 -b 4K -C 128K -N 4 -L u02 /dev/emcpowerb1

mkfs.ocfs2 -b 4K -C 128K -N 4 -L u03 /dev/emcpowerc1

NOTE: For more information about setting the format parameters of clusters, see

http://oss.oracle.com/projects/ocfs2/dist/documentation/ocfs2_faq.html.

7 On each node, perform the following steps:

a Create mount points for each OCFS2 partition. To perform this procedure, create the target partition directories and set the ownerships by typing:

mkdir -p /u01 /u02 /u03

chown -R oracle.dba /u01 /u02 /u03

b On each node, modify the /etc/fstab file by adding the following lines for a Fibre Channel storage system:

/dev/emcpowera1 /u01 ocfs2 _netdev,datavolume,nointr 0 0

/dev/emcpowerb1 /u02 ocfs2 _netdev,datavolume,nointr 0 0

/dev/emcpowerc1 /u03 ocfs2 _netdev,datavolume,nointr 0 0

Make appropriate entries for all OCFS2 volumes.

c On each node, type the following to mount all the volumes listed in the /etc/fstab file:

mount -a -t ocfs2

d On each node, add the following command to the /etc/rc.local file:

mount -a -t ocfs2
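Because the /etc/fstab and /etc/rc.local edits are repeated on every node, it can help to generate the lines and add them only when they are missing. The following is a minimal sketch under that assumption. The helper names ocfs2_fstab_line and append_once are hypothetical, and the mount options are copied from the entries shown in this procedure.

```shell
# Build the /etc/fstab entry used in this guide for one OCFS2 volume.
ocfs2_fstab_line() {
    # $1 = block device, $2 = mount point
    printf '%s %s ocfs2 _netdev,datavolume,nointr 0 0\n' "$1" "$2"
}

# Append a line to a file only if an identical line is not already present,
# so that running the helper twice does not create duplicate entries.
append_once() {
    # $1 = file, $2 = exact line to add
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example use as root on each node (device names are the sample values
# from this guide):
#   append_once /etc/fstab "$(ocfs2_fstab_line /dev/emcpowera1 /u01)"
#   append_once /etc/rc.local "mount -a -t ocfs2"
```

Keeping the append idempotent means the same commands can be rerun safely after a node is added or a step is repeated.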

Page 23

Configuring Shared Storage for Oracle Clusterware and the Database Using ASM

Configuring Shared Storage for Oracle Clusterware

This section provides instructions for configuring shared storage for Oracle Clusterware.

Configuring Shared Storage Using the RAW Device Interface

1 On the first node, create three partitions on an external storage device with the fdisk utility:

Type fdisk /dev/emcpowerx and create three partitions of 150 MB each for the Cluster Repository, Voting disk, and the Oracle system parameter file.

2 Verify the new partitions by typing:

more /proc/partitions

On all the nodes, if the new partitions do not appear in the /proc/partitions file, type:

sfdisk -R /dev/<device name>

3 On all the nodes, perform the following steps:

a Edit the /etc/sysconfig/rawdevices file and add the following lines for a Fibre Channel cluster:

/dev/raw/votingdisk /dev/emcpowera1

/dev/raw/ocr.dbf /dev/emcpowera2

/dev/raw/spfile+ASM.ora /dev/emcpowera3

b Type udevstart to create the RAW devices.

c Type service rawdevices restart to restart the RAW Devices Service.

NOTE: If the three partitions on PowerPath pseudo devices are not consistent across the nodes, modify your /etc/sysconfig/rawdevices configuration file accordingly.

Configuring Shared Storage for the Database Using ASM

To configure your cluster using ASM, perform the following steps on all nodes:

1 Log in as root.

2 On all the nodes, create one partition on each of the other two external storage devices with the fdisk utility:

a Create a primary partition for the entire device by typing:

fdisk /dev/emcpowerx

Type h for help within the fdisk utility.

b Verify that the new partition exists by typing:

cat /proc/partitions

If you do not see the new partition, type:

sfdisk -R /dev/<device name>
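The check in step b can be scripted so that sfdisk -R is suggested only when the partition is really missing. The following is a minimal sketch: partition_listed is a hypothetical helper name, and it assumes the four-column /proc/partitions layout (major, minor, #blocks, name).

```shell
# Succeed if the named partition appears in the partition listing.
partition_listed() {
    # $1 = partition name without the /dev/ prefix, for example emcpowerb1
    # $2 = optional listing file (defaults to /proc/partitions)
    awk -v name="$1" '$4 == name { found = 1 } END { exit !found }' \
        "${2:-/proc/partitions}"
}

# Example use on a node (sample device names from this guide):
#   partition_listed emcpowerb1 || echo "run: sfdisk -R /dev/emcpowerb"
```

The optional second argument exists only so the helper can be tried against a saved copy of the listing.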

Page 24

NOTE: Shared storage configuration using ASM can be done either using the RAW device interface or the Oracle

ASM library driver.

Configuring Shared Storage Using the RAW Device Interface

1 Edit the /etc/sysconfig/rawdevices file and add the following lines for a Fibre Channel cluster:

/dev/raw/ASM1 /dev/emcpowerb1

/dev/raw/ASM2 /dev/emcpowerc1

2 Create the RAW devices by typing:

udevstart

3 Restart the RAW Devices Service by typing:

service rawdevices restart

4 To add an additional ASM disk (for example, ASM3), edit the /etc/udev/scripts/raw-dev.sh file on all the nodes and add the appropriate entries for the new disk (the ASM3 entries in this example), as shown below:

MAKEDEV raw

mv /dev/raw/raw1 /dev/raw/votingdisk

mv /dev/raw/raw2 /dev/raw/ocr.dbf

mv /dev/raw/raw3 /dev/raw/spfile+ASM.ora

mv /dev/raw/raw4 /dev/raw/ASM1

mv /dev/raw/raw5 /dev/raw/ASM2

mv /dev/raw/raw6 /dev/raw/ASM3

chmod 660 /dev/raw/{votingdisk,ocr.dbf,spfile+ASM.ora,ASM1,ASM2,ASM3}

chown oracle.dba /dev/raw/{votingdisk,ocr.dbf,spfile+ASM.ora,ASM1,ASM2,ASM3}

To add additional ASM disks, type udevstart on all the nodes and repeat step 4.

Configuring Shared Storage Using the ASM Library Driver

1 Log in as root.

2 Open a terminal window and perform the following steps on all nodes:

a Type service oracleasm configure

b Type the following inputs for all the nodes:

Default user to own the driver interface [ ]: oracle

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Fix permissions of Oracle ASM disks on boot (y/n) [y]: y

Page 25

3 On the first node, in the terminal window, type the following and press <Enter>:

service oracleasm createdisk ASM1 /dev/emcpowerb1

service oracleasm createdisk ASM2 /dev/emcpowerc1

4 Repeat step 3 for any additional ASM disks that need to be created.

5 Verify that the ASM disks are created and marked for ASM usage.

In the terminal window, type the following and press <Enter>:

service oracleasm listdisks

The disks that you created in step 3 appear.

For example:

ASM1

ASM2

6 Ensure that the remaining nodes are able to access the ASM disks that you created in step 3.

On each remaining node, open a terminal, type the following, and press <Enter>:

service oracleasm scandisks
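The verification in step 5 and step 6 amounts to comparing the labels that listdisks prints against the labels you created. The following is a minimal sketch of that comparison. missing_asm_disks is a hypothetical helper name, and it assumes that service oracleasm listdisks prints one label per line, as in the example above.

```shell
# Read a disk listing (one label per line) on standard input and print
# every expected label that is absent from it. Prints nothing when all
# expected labels are present.
missing_asm_disks() {
    # "$@" = labels that should exist, for example ASM1 ASM2
    listed=$(cat)
    for label in "$@"; do
        printf '%s\n' "$listed" | grep -qxF -- "$label" || printf '%s\n' "$label"
    done
}

# Example use on each node after scandisks:
#   service oracleasm listdisks | missing_asm_disks ASM1 ASM2
```

An empty result on every node indicates that each node sees all the disks created in step 3.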

Installing Oracle RAC 10g

This section describes the steps required to install Oracle RAC 10g, which involves installing CRS and

installing the Oracle Database 10g software. Dell recommends that you create a seed database to verify

that the cluster works correctly before you deploy it in a production environment.

Before You Begin

To prevent failures during the installation procedure, configure all the nodes with identical system

clock settings.

Synchronize your node system clock with a Network Time Protocol (NTP) server. If you cannot access an

NTP server, perform one of the following procedures:

• Ensure that the system clock on the Oracle Database software installation node is set to a later time

than the remaining nodes.

• Configure one of your nodes as an NTP server to synchronize the remaining nodes in the cluster.
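When no NTP server is available, the first option above depends on the installation node having the latest clock. The following is a minimal sketch of that comparison using epoch timestamps. install_node_clock_is_latest is a hypothetical helper name, and the way the timestamps are collected (for example with date +%s over ssh) is an assumption, not part of this guide.

```shell
# Succeed only if the first timestamp (the installation node) is strictly
# later than every other timestamp given.
install_node_clock_is_latest() {
    # $1 = epoch seconds on the installation node
    # remaining arguments = epoch seconds on the other nodes
    install_ts=$1
    shift
    for other_ts in "$@"; do
        if [ "$other_ts" -ge "$install_ts" ]; then
            return 1
        fi
    done
    return 0
}

# Example use, assuming ssh access from the installation node to node2:
#   install_node_clock_is_latest "$(date +%s)" "$(ssh node2 date +%s)"
```

Timestamps sampled one after another differ by the time each command takes, so a result that fails by a second or two is worth rechecking by hand.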


Page 26

Installing Oracle Clusterware

1 Log in as root.

2 Start the X Window System by typing:

startx

3 Open a terminal window and type:

xhost +

4 Mount the Oracle Clusterware CD.

5 Type:

<CD_mountpoint>/cluvfy/runcluvfy.sh stage -pre crsinst -n node1,node2 -r 10gR2 -verbose

where node1 and node2 are the public host names.

If your system is not configured correctly, troubleshoot the issues and then repeat the runcluvfy.sh command, above.

If your system is configured correctly, the following message appears:

Pre-check for cluster services setup was successful on all the nodes.

6 Type:

su - oracle

7 Type the following commands to start the Oracle Universal Installer:

unset ORACLE_HOME

<CD_mountpoint>/runInstaller

The following message appears:

Was ’rootpre.sh’ been run by root? [y/n] (n)

8 Type y to proceed.

9 In the Welcome window, click Next.

10 In the Specify Home Details window, change the Oracle home path to /crs/oracle/product/10.2.0/crs and click Next.

11 In the Product-Specific Prerequisite Checks window, ensure that Succeeded appears in the Status column for each system check, and then click Next.

Page 27

12 In the Specify Cluster Configuration window, add the nodes that will be managed by Oracle Clusterware.

a Click Add.

b Enter a name for the Public Node Name, Private Node Name, and Virtual Host Name, and then click OK.

c Repeat step a and step b for the remaining nodes.

d In the Cluster Name field, type a name for your cluster.

The default cluster name is crs.

e Click Next.

13 In the Specify Network Interface Usage window, ensure that the public and private interface names are correct.

To modify an interface, perform the following steps:

a Select the interface name and click Edit.

b In the Edit private interconnect type window in the Interface Type box, select the appropriate interface type and then click OK.

c In the Specify Network Interface Usage window, ensure that the public and private interface names are correct, and then click Next.

14 In the Specify Oracle Cluster Registry (OCR) Location window, perform the following steps:

a In the OCR Configuration box, select External Redundancy.

b In the Specify OCR Location field, type:

/dev/raw/ocr.dbf

Or

/u01/ocr.dbf if using OCFS2.

c Click Next.

15 In the Specify Voting Disk Location window, perform the following steps:

a In the OCR Configuration box, select External Redundancy.

b In the Specify OCR Location field, type:

/dev/raw/votingdisk

Or

/u01/votingdisk if using OCFS2.

c Click Next.

Page 28

16 In the Summary window, click Install.

Oracle Clusterware is installed on your system.

When completed, the Execute Configuration scripts window appears.

17 Follow the instructions in the window and then click OK.

NOTE: If root.sh hangs while formatting the Voting disk, apply Oracle patch 4679769 and then repeat this step.

18 In the Configuration Assistants window, ensure that Succeeded appears in the Status column for each tool name.

Next, the End of Installation window appears.

19 Click Exit.

20 On all nodes, perform the following steps:

a Verify the Oracle Clusterware installation by typing the following command:

olsnodes -n -v

A list of the public node names of all nodes in the cluster appears.

b Type:

crs_stat -t

All running Oracle Clusterware services appear.

Installing the Oracle Database 10g Software

1 Log in as root, and type:

cluvfy stage -pre dbinst -n node1,node2 -r 10gR2 -verbose

where node1 and node2 are the public host names.

If your system is not configured correctly, see "Troubleshooting" for more information.

If your system is configured correctly, the following message appears:

Pre-check for database installation was successful.

2 As user root, type:

xhost +

3 As user root, mount the Oracle Database 10g CD.

4 Log in as oracle, and type:

<CD_mountpoint>/runInstaller

The Oracle Universal Installer starts.

5 In the Welcome window, click Next.

Page 29

6 In the Select Installation Type window, select Enterprise Edition and click Next.

7 In the Specify Home Details window in the Path field, verify that the complete Oracle home path is /opt/oracle/product/10.2.0/db_1 and click Next.

NOTE: The Oracle home name in this step must be different from the Oracle home name that you identified during the CRS installation. You cannot install the Oracle 10g Enterprise Edition with RAC into the same home name that you used for CRS.

8 In the Specify Hardware Cluster Installation Mode window, click Select All and click Next.

9 In the Product-Specific Prerequisite Checks window, ensure that Succeeded appears in the Status column for each system check, and then click Next.

NOTE: In some cases, a warning may appear regarding swap size. Ignore the warning and click Yes to proceed.

10 In the Select Configuration Option window, select Install database Software only and click Next.

11 In the Summary window, click Install.

The Oracle Database software is installed on your cluster.

Next, the Execute Configuration Scripts window appears.

12 Follow the instructions in the window and click OK.

13 In the End of Installation window, click Exit.
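The cluvfy pre-checks used before each installation report success with a single line, so a saved copy of the output can be tested for it. The following is a minimal sketch: precheck_succeeded is a hypothetical helper name, and the pattern is based only on the success messages quoted in this guide.

```shell
# Succeed if the text on standard input contains a cluvfy success line such as
# "Pre-check for database installation was successful."
precheck_succeeded() {
    grep -q 'Pre-check for .* was successful'
}

# Example use (the log file name is an arbitrary choice):
#   cluvfy stage -pre dbinst -n node1,node2 -r 10gR2 -verbose > /tmp/dbinst.log
#   precheck_succeeded < /tmp/dbinst.log || echo 'See "Troubleshooting"'
```

Saving the output first keeps the full verbose report available for troubleshooting when the check fails.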

RAC Post Deployment Fixes and Patches

This section provides the required fixes and patch information for deploying Oracle RAC 10g.

Reconfiguring the CSS Misscount for Proper EMC PowerPath Failover

When an HBA, switch, or EMC Storage Processor (SP) failure occurs, the total PowerPath failover time

to an alternate device may exceed 105 seconds. The default CSS disk time-out for Oracle 10g R2 version

10.2.0.1 is 60 seconds. To ensure that the PowerPath failover procedure functions correctly, increase the

CSS time-out to 120 seconds.

For more information, see Oracle Metalink Note 294430.1 on the Oracle Metalink website at

metalink.oracle.com.

To increase the CSS time-out:

1 Shut down the database and CRS on all nodes except on one node.

2 On the running node, log in as user root and type:

crsctl set css misscount 120

3 Reboot all nodes for the CSS setting to take effect.
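The reasoning behind the 120-second value can be checked with simple arithmetic: the CSS time-out must exceed the worst-case PowerPath failover time of 105 seconds. The following is a minimal sketch of that check. The helper names are hypothetical, and the conversion from O2CB_HEARTBEAT_THRESHOLD to seconds assumes the (threshold - 1) * 2 relation described in the OCFS2 FAQ, which this guide does not state itself.

```shell
# Seconds before O2CB declares a node dead for a given
# O2CB_HEARTBEAT_THRESHOLD, assuming the (threshold - 1) * 2 relation.
o2cb_timeout_seconds() {
    # $1 = O2CB_HEARTBEAT_THRESHOLD value
    echo $(( ($1 - 1) * 2 ))
}

# Succeed when the CSS misscount is larger than the failover time.
misscount_covers_failover() {
    # $1 = CSS misscount in seconds, $2 = failover time in seconds
    [ "$1" -gt "$2" ]
}

# Example use with the values from this guide:
#   misscount_covers_failover 120 105 && echo "misscount is sufficient"
#   o2cb_timeout_seconds 61
```

Under that assumption, the threshold of 61 configured for OCFS2 earlier in this guide corresponds to the same 120 seconds as the CSS setting.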

Page 30

Installing the Oracle Database 10g 10.2.0.2 Patchset

Downloading and Extracting the Installation Software

1 On the first node, log in as oracle.

2 Create a folder for the patches and utilities at /opt/oracle/patches.

3 Open a web browser and navigate to the Oracle Support website at metalink.oracle.com.

4 Log in to your Oracle Metalink account.

5 Search for the patch number 4547817 with Linux x86-64 (AMD64/EM64T) as the platform.

6 Download the patch to the /opt/oracle/patches directory.

7 To unzip the downloaded zip file, type the following in a terminal window and press <Enter>:

unzip p4547817_10202_LINUX-x86-64.zip

Upgrading Oracle Clusterware Installation

1 On the first node, log in as root.

2 Shut down Oracle Clusterware. To do so, type the following in the terminal window and press <Enter>:

crsctl stop crs

3 On the remaining nodes, open a terminal window and repeat step 1 and step 2.

4 On the first node, log in as oracle.

5 In the terminal window, type the following and press <Enter>:

export ORACLE_HOME=/crs/oracle/product/10.2.0/crs

6 Start the Oracle Universal Installer. To do so, type the following in the terminal window and press <Enter>:

cd /opt/oracle/patches/Disk1/

./runInstaller

The Welcome screen appears.

7 Click Next.

8 In the Specify Home Details screen, click Next.

9 In the Specify Hardware Cluster Installation Mode screen, click Next.

10 In the Summary screen, click Install.

The Oracle Universal Installer scans your system, displays all the patches that are required to be installed, and installs them on your system. When the installation is completed, the End of Installation screen appears.

NOTE: This procedure may take several minutes to complete.

Page 31

11 Read all the instructions that are displayed in the message window that appears.

NOTE: Do not shut down the Oracle Clusterware daemons, as you already performed this procedure in step 1 and step 2.

12 Open a terminal window.

13 Log in as root.

14 Type the following and press <Enter>:

$ORA_CRS_HOME/install/root102.sh

15 Repeat step 12 through step 14 on the remaining nodes, one node at a time.

16 On the first node, return to the End of Installation screen.

17 Click Exit.

18 Click Yes to exit the Oracle Universal Installer.

Upgrading the RAC Installation

1 On the first node, open a terminal window.

2 Log in as oracle.

3 Run the Oracle Universal Installer from the same node on which you installed the Oracle Database software.

a On the first node, open a terminal window.

b Log in as oracle.

c Shut down the Oracle Clusterware node applications on all nodes.

In the terminal window, type the following and press <Enter>:

$ORACLE_HOME/bin/srvctl stop nodeapps -n <nodename>

NOTE: Ignore any warning messages that may appear.

4 Repeat step 3 (c) on the remaining nodes and change the nodename to that of the given node.

5 On the first node, open a terminal window.

6 Log in as oracle.

7 Open a terminal window.

8 Type the following and press <Enter>:

export ORACLE_HOME=/opt/oracle/product/10.2.0/db_1

9 Start the Oracle Universal Installer. To do so, type the following in the terminal window, and press <Enter>:

cd /opt/oracle/patches/Disk1/

./runInstaller

The Welcome screen appears.

Page 32

10 Click Next.

11 In the Specify Home Details screen, click Next.

12 In the Specify Hardware Cluster Installation Mode screen, click Next.

13 In the Summary screen, click Install.

The Oracle Universal Installer scans your system, displays all the patches that are required to be installed, and installs them on your system. When the installation is completed, the End of Installation screen appears.

Next, a message window appears, prompting you to run root.sh as user root.

14 Open a terminal window.

15 Type the following and press <Enter>:

/opt/oracle/product/10.2.0/db_1/root.sh

16 Repeat step 14 and step 15 on the remaining nodes, one node at a time.

When the installation is completed, the End of Installation screen appears.

NOTE: This procedure may take several minutes to complete.

17 In the End of Installation screen, click Exit.

18 Click Yes to exit the Oracle Universal Installer.

19 On the first node, open a terminal window.

20 Log in as oracle.

21 Type the following and press <Enter>:

srvctl start nodeapps -n <nodename>

Where <nodename> is the public host name of the node.

22 On all the remaining nodes, shut down CRS by issuing the following command:

crsctl stop crs

23 As the user oracle, from the node where you applied the patchset, copy /opt/oracle/product/10.2.0/db_1/rdbms/lib/libknlopt.a to all the other nodes in the cluster.

For example, to copy it from node1 to node2, type the following:

scp /opt/oracle/product/10.2.0/db_1/rdbms/lib/libknlopt.a node2:/opt/oracle/product/10.2.0/db_1/rdbms/lib/libknlopt.a

NOTE: Do not perform this step as root.

24 Remake the Oracle binary on all the nodes by issuing the following commands on each node:

cd /opt/oracle/product/10.2.0/db_1/rdbms/lib

make -f ins_rdbms.mk ioracle
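On clusters with more than two nodes, the copy in step 23 is repeated once per remaining node. The following is a minimal sketch that prints the scp commands instead of running them, so they can be reviewed first. print_libknlopt_copy_cmds is a hypothetical helper name, and node3 in the usage example is an assumed additional node.

```shell
# Print one scp command per target node for copying libknlopt.a from the
# node where the patchset was applied. Nothing is copied by this function.
print_libknlopt_copy_cmds() {
    # "$@" = names of the other nodes in the cluster
    lib=/opt/oracle/product/10.2.0/db_1/rdbms/lib/libknlopt.a
    for node in "$@"; do
        printf 'scp %s %s:%s\n' "$lib" "$node" "$lib"
    done
}

# Example use as the user oracle (not root) on the patched node:
#   print_libknlopt_copy_cmds node2 node3
```

The printed commands match the form of the example in step 23 and can be pasted into the terminal after review.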

Page 33

Configuring the Listener

This section describes the steps to configure the listener, which is required for remote client connection

to a database.

On one node only, perform the following steps:

1 Log in as root.

2 Start the X Window System by typing:

startx

3 Open a terminal window and type:

xhost +

4 As the user oracle, type netca to start the Net Configuration Assistant.

5 Select Cluster Configuration and click Next.

6 In the TOPSNodes window, click Select All Nodes and click Next.

7 In the Welcome window, select Listener Configuration and click Next.

8 In the Listener Configuration→ Listener window, select Add and click Next.

9 In the Listener Configuration→ Listener Name window, type LISTENER in the Listener Name field and click Next.

10 In the Listener Configuration→ Select Protocols window, select TCP and click Next.

11 In the Listener Configuration→ TCP/IP Protocol window, select Use the standard port number of 1521 and click Next.

12 In the Listener Configuration→ More Listeners? window, select No and click Next.

13 In the Listener Configuration Done window, click Next.

14 Click Finish.

Creating the Seed Database Using OCFS2

1 On the first node, as user oracle, start the Database Configuration Assistant (DBCA) by typing:

dbca -datafileDestination /u02

2 In the Welcome window, select Oracle Real Application Cluster Database and click Next.

3 In the Operations window, click Create a Database and click Next.

4 In the Node Selection window, click Select All and click Next.

5 In the Database Templates window, click Custom Database and click Next.

6 In the Database Identification window, enter a Global Database Name such as racdb and click Next.

7 In the Management Options window, click Next.

Page 34

8 In the Database Credentials window:

a Click Use the same password for all accounts.

b Complete password selections and entries.

c Click Next.

9 In the Storage Options window, select Cluster File System and click Next.

10 In the Database File Locations window, click Next.

11 In the Recovery Configuration window:

a Click Specify Flash Recovery Area.

b Click Browse and select /u03.

c Specify the flash recovery size.

d Click Next.

12 In the Database Content window, click Next.

13 In the Database Services window, click Next.

14 In the Initialization Parameters window, if your cluster has more than four nodes, change the Shared Pool value to 500 MB, and click Next.

15 In the Database Storage window, click Next.

16 In the Creation Options window, select Create Database and click Finish.

17 In the Summary window, click OK to create the database.

NOTE: The seed database may take more than an hour to create.

NOTE: If you receive an Enterprise Manager Configuration Error during the seed database creation, click OK to ignore the error.

When the database creation is completed, the Password Management window appears.

18 Click Exit.

A message appears indicating that the cluster database is starting on all the nodes.

19 On each node, perform the following steps:

a Determine the database instance that exists on that node by typing:

srvctl status database -d <database name>

b Add the ORACLE_SID environment variable entry in the oracle user profile by typing:

echo "export ORACLE_SID=racdbx" >> /home/oracle/.bash_profile

source /home/oracle/.bash_profile

where racdbx is the database instance identifier assigned to the node.

This example assumes that racdb is the global database name that you defined in DBCA.
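The instance identifier needed for ORACLE_SID can be read from the srvctl output rather than typed by hand. The following is a minimal sketch: instance_for_node is a hypothetical helper name, and it assumes that srvctl status database prints lines of the form "Instance racdb1 is running on node node1", which should be confirmed against the output on your cluster.

```shell
# From "srvctl status database" output on standard input, print the instance
# name reported for the given node. Assumes each line starts with the word
# "Instance" followed by the instance name and ends with the node name.
instance_for_node() {
    # $1 = public host name of the node
    awk -v node="$1" '$1 == "Instance" && $NF == node { print $2 }'
}

# Example use as the user oracle on each node (racdb is the sample global
# database name from this guide):
#   sid=$(srvctl status database -d racdb | instance_for_node "$(hostname -s)")
#   echo "export ORACLE_SID=$sid" >> /home/oracle/.bash_profile
```

If the helper prints nothing, the node name did not match any line, and the identifier should be determined manually as described in step a.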

Page 35

Creating the Seed Database Using ASM

This section contains procedures for creating the seed database using Oracle ASM and for verifying the

seed database.

Perform the following steps:

1 Log in as root, and type:

cluvfy stage -pre dbcfg -n node1,node2 -d $ORACLE_HOME -verbose

where node1 and node2 are the public host names.

If your system is not configured correctly, see "Troubleshooting" for more information.

If your system is configured correctly, the following message appears:

Pre-check for database configuration was successful.

2 On the first node, as the user oracle, type dbca & to start the Database Configuration Assistant (DBCA).

3 In the Welcome window, select Oracle Real Application Cluster Database and click Next.

4 In the Operations window, click Create a Database and click Next.

5 In the Node Selection window, click Select All and click Next.

6 In the Database Templates window, click Custom Database and click Next.

7 In the Database Identification window, enter a Global Database Name, such as racdb, and click Next.

8 In the Management Options window, click Next.

9 In the Database Credentials window, select a password option, enter the appropriate password information (if required), and click Next.

10 In the Storage Options window, click Automatic Storage Management (ASM) and click Next.

11 In the Create ASM Instance window, perform the following steps:

a In the SYS password field, type a password.

b Select Create server parameter file (SPFILE).

c In the Server Parameter Filename field, type:

/dev/raw/spfile+ASM.ora

d Click Next.

12 When a message appears indicating that DBCA is ready to create and start the ASM instance, click OK.

13 Under ASM Disk Groups, click Create New.

Page 36

14 In the Create Disk Group window, perform the following steps:

a Enter a name for the disk group to be created, such as databaseDG, select External Redundancy, and then select the disks to include in the disk group.

If you are using the RAW device interface, select /dev/raw/ASM1.

A window appears indicating that disk group creation is in progress.

b If you are using the ASM library driver and you cannot access candidate disks, click Change Disk Discovery String, type ORCL:* as the string, and then select ORCL:ASM1.

c Click OK.

The first ASM disk group is created on your cluster.

Next, the ASM Disk Groups window appears.

15 Repeat step 14 for the remaining ASM disk group, using flashbackDG as the disk group name.

16 In the ASM Disk Groups window, select the disk group that you would like to use for Database Storage (for example, databaseDG) and click Next.

17 In the Database File Locations window, select Use Oracle-Managed Files and click Next.

18 In the Recovery Configuration window, click Browse, select the flashback group that you created in step 15 (for example, flashbackDG), change the Flash Recovery Area size as needed, and click Next.

19 In the Database Services window, configure your services (if required) and then click Next.

20 In the Initialization Parameters window, perform the following steps:

a Select Custom.

b In Shared Memory Management, select Automatic.

c In the SGA Size and PGA Size windows, enter the appropriate information.

d Click Next.

21 In the Database Storage window, click Next.

22 In the Creation Options window, select Create Database and click Finish.

23 In the Summary window, click OK to create the database.

NOTE: This procedure may take an hour or more to complete.

When the database creation is completed, the Database Configuration Assistant window appears.

24 Click Password Management to assign specific passwords to authorized users (if required). Otherwise, click Exit.

A message appears indicating that the cluster database is being started on all nodes.

Page 37

25 Perform the following steps on each node:

a Determine the database instance that exists on that node by typing:

srvctl status database -d <database name>

b Type the following commands to add the ORACLE_SID environment variable entry in the oracle user profile:

echo "export ORACLE_SID=racdbx" >> /home/oracle/.bash_profile

source /home/oracle/.bash_profile

where racdbx is the database instance identifier assigned to the node.

This example assumes that racdb is the global database name that you defined in DBCA.

26 On one node, type:

srvctl status database -d dbname

where dbname is the global identifier name that you defined for the database in DBCA.

If the database instances are running, confirmation appears on the screen.

If the database instances are not running, type:

srvctl start database -d dbname

where dbname is the global identifier name that you defined for the database in DBCA.

Securing Your System

To prevent unauthorized users from accessing your system, Dell recommends that you disable rsh after

you install the Oracle software.

To disable rsh, type:

chkconfig rsh off

Setting the Password for the User oracle

Dell strongly recommends that you set a password for the user oracle to protect your system. Complete

the following steps to create the oracle password:

1 Log in as root.

2 Type passwd oracle and follow the instructions on the screen to create the oracle password.

NOTE: Additional security setup may be performed according to the site policy, provided the normal database operation is not disrupted.

Page 38

Configuring and Deploying Oracle Database 10g (Single Node)

This section provides information about completing the initial setup or completing the reinstallation

procedures as described in "Installing and Configuring Red Hat Enterprise Linux." This section covers

the following topics:

• Configuring the Public Network

• Configuring Database Storage

• Installing the Oracle Database

• Configuring the Listener

• Creating the Seed Database

Configuring the Public Network

Ensure that your public network is functioning and that an IP address and host name are assigned to

your system.

Configuring Database Storage

Configuring Database Storage Using ext3 File System

If you have an additional storage device, perform the following steps:

1 Log in as root.

2 Type:

cd /opt/oracle

3 Type:

mkdir oradata recovery

4 Using the fdisk utility, create a partition where you want to store your database files (for example, sdb1 if your storage device is sdb).

5 Using the fdisk utility, create a partition where you want to store your recovery files (for example, sdc1 if your storage device is sdc).

6 Verify the new partition by typing:

cat /proc/partitions

If you do not detect the new partition, type:

sfdisk -R /dev/sdb

sfdisk -R /dev/sdc

7 Type:

mke2fs -j /dev/sdb1

mke2fs -j /dev/sdc1

Page 39

8 Edit the /etc/fstab file for the newly created file system by adding entries such as:

/dev/sdb1 /opt/oracle/oradata ext3 defaults 1 2

/dev/sdc1 /opt/oracle/recovery ext3 defaults 1 2

9 Type:

mount /dev/sdb1 /opt/oracle/oradata

mount /dev/sdc1 /opt/oracle/recovery

10 Type:

chown -R oracle.dba oradata recovery
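Before the ownership change in step 10, it is worth confirming that both file systems are mounted where expected, because chown on an unmounted directory changes only the empty mount point. The following is a minimal sketch: is_mounted is a hypothetical helper name, and it assumes the usual /proc/mounts layout in which the second field is the mount point.

```shell
# Succeed if the given directory appears as a mount point in the mounts table.
is_mounted() {
    # $1 = mount point, for example /opt/oracle/oradata
    # $2 = optional mounts table (defaults to /proc/mounts)
    awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}

# Example use as root after step 9:
#   is_mounted /opt/oracle/oradata  || echo "oradata is not mounted"
#   is_mounted /opt/oracle/recovery || echo "recovery is not mounted"
```

The optional second argument exists only so the helper can be tried against a saved copy of the mounts table.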

Configuring Database Storage Using Oracle ASM

The following example assumes that you have two storage devices (sdb and sdc) available to create a

disk group for the database files, and a disk group to be used for flash back recovery and archive

log files, respectively.

1 Log in as root.

2 Create a primary partition for the entire device by typing:

fdisk /dev/sdb

3 Create a primary partition for the entire device by typing:

fdisk /dev/sdc

Configuring ASM Storage Using the RAW Device Interface

1 Edit the /etc/sysconfig/rawdevices file and add the following lines:

/dev/raw/ASM1 /dev/sdb1

/dev/raw/ASM2 /dev/sdc1

2 Restart the RAW Devices Service by typing:

service rawdevices restart

Configuring Database Storage Using the Oracle ASM Library Driver

This section provides procedures for configuring the storage device using ASM.

NOTE: Before you configure the ASM Library Driver, disable SELinux.

To temporarily disable SELinux, perform the following steps:

1 Log in as root.

2 At the command prompt, type:

setenforce 0

Page 40

To permanently disable SELinux, perform the following steps:

1 Open your grub.conf file.

2 Locate the kernel command line and append the following option:

selinux=0

For example:

kernel /vmlinuz-2.6.9-34.ELlargesmp ro root=LABEL=/ apic rhgb quiet selinux=0

3 Reboot your system.

4 Open a terminal window and log in as root.

5 Perform the following steps:

a Type:

service oracleasm configure

b Type the following input for all the nodes:

Default user to own the driver interface [ ]: oracle

Default group to own the driver interface [ ]: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Fix permissions of Oracle ASM disks on boot (y/n) [y]: y

6 In the terminal window, type the following:

service oracleasm createdisk ASM1 /dev/sdb1

service oracleasm createdisk ASM2 /dev/sdc1

7

Repeat step 4 through step 6 for any additional ASM disks that you need to create.

8

Verify that the ASM disks are created and marked for ASM usage.

In the terminal window, type the following and press <Enter>:

service oracleasm listdisks

The disks you created in step 6 are listed in the terminal window.

For example:

ASM1

ASM2


Page 41

Installing Oracle Database 10g

Perform the following steps to install Oracle 10g:

Log in as

1

2

As the user

3

Start the X Window System by typing:

startx

4

Open a terminal window and type:

xhost +

5

Log in as

6

Start the Oracle Universal Installer.

In the terminal window, type the following and press <Enter>:

<CD_mountpoint>/runInstaller

7

In the

8

In the

9

In the

/opt/oracle/product/10.2.0/db_1

10

Click

11

In the

12

When the

13

In the

14

In the

15

When prompted, open a terminal window and run

A brief progress window appears, followed by the

root

.

root

, mount the

oracle

Select Installation Method

Select Installation Type

Specify Home Details

Next

.

Product-Specific Prerequisite Checks

Warning