
Dell™ PowerVault™ Modular Disk 3000i

Systems Installation Guide

www.dell.com | support.dell.com


Notes, Notices

NOTE: A NOTE indicates important information that helps you make better use of your computer.

NOTICE: A NOTICE indicates either potential damage to hardware or loss of data and tells you how to avoid the problem.

____________________

Information in this document is subject to change without notice.

© 2008 Dell Inc. All rights reserved.

Reproduction in any manner whatsoever without the written permission of Dell Inc. is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, and PowerVault are trademarks of Dell Inc.; Intel and Pentium are registered trademarks of Intel Corporation; SUSE is a registered trademark of Novell Inc. in the United States and other countries; Microsoft, Windows, and Windows Server are either registered trademarks or trademarks of Microsoft Corporation in the United States and other countries; Red Hat and Red Hat Enterprise Linux are registered trademarks of Red Hat Inc. in the United States and other countries.

Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products. Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own.

February 2008


Contents

1 Introduction

System Requirements

Management Station Hardware Requirements

Introduction to Storage Arrays

2 Hardware Installation

Storage Configuration Planning

About the Enclosure Connections

Cabling the Enclosure

Redundancy vs. Nonredundancy

Direct-Attached Solutions

Network-Attached Solutions

Attaching MD1000 Expansion Enclosures

Expanding with Previously Configured MD1000 Enclosures

Expanding with New MD1000 Enclosures

3 Software Installation

System Assembly and Startup

Install the iSCSI Initiator Software (iSCSI-attached Host Servers Only)

Installing the iSCSI Initiator on a Windows Host Server

Installing the iSCSI Initiator on a Linux Host Server

Installing MD Storage Software

Installing MD Storage Software on an iSCSI-attached Host Server (Windows)

Installing MD Storage Software on an iSCSI-attached Host Server (Linux)

Installing a Dedicated Management Station (Windows and Linux)

Documentation for Windows Systems

Viewing Resource CD Contents

Installing the Manuals

Documentation for Linux Systems

Viewing Resource CD Contents

Installing the Manuals

4 Array Setup and iSCSI Configuration

Before You Start

Terminology

iSCSI Configuration Worksheet

Configuring iSCSI on your Storage Array

Using iSNS

Step 1: Discover the Storage Array (Out-of-band management only)

Default Management Port Settings

Automatic Storage Array Discovery

Manual Storage Array Discovery

Set Up the Array

Step 2: Configure the iSCSI Ports on the Storage Array

Step 3: Perform Target Discovery from the iSCSI Initiator

If you are using Windows Server 2003 or Windows Server 2008 GUI version

If you are using Windows Server 2008 Core Version

If you are using Linux Server

If you are using RHEL 5 or SLES 10 SP1

Step 4: Configure Host Access

Understanding CHAP Authentication

What is CHAP?

Target CHAP

Mutual CHAP

CHAP Definitions

How CHAP Is Set Up

Step 5: Configure CHAP Authentication on the Storage Array (optional)

Configuring Target CHAP Authentication on the Storage Array

Configuring Mutual CHAP Authentication on the Storage Array

Step 6: Configure CHAP Authentication on the Host Server (optional)

If you are using Windows Server 2003 or Windows Server 2008 GUI version

If you are using Windows Server 2008 Core Version

If you are using Linux Server

If you are using RHEL 5 or SLES 10 SP1

If you are using SLES10 SP1 via the GUI

Step 7: Connect to the Target Storage Array from the Host Server

If you are using Windows Server 2003 or Windows Server 2008 GUI

If you are using Windows Server 2008 Core Version

If you are using a Linux Server

Viewing the status of your iSCSI connections

Step 8: (Optional) Set Up In-Band Management

Premium Features

Troubleshooting Tools

5 Uninstalling Software

Uninstalling From Windows

Uninstalling From Linux

6 Guidelines for Configuring Your Network for iSCSI

Windows Host Setup

Linux Host Setup

Configuring TCP/IP on Linux using DHCP (root users only)

Configuring TCP/IP on Linux using a Static IP address (root users only)

Index


Introduction

This guide outlines the steps for configuring the Dell™ PowerVault™ Modular Disk 3000i (MD3000i). The guide also covers installing the MD Storage Manager software, installing and configuring the Microsoft® iSCSI and Linux initiators, and accessing documentation from the PowerVault MD3000i Resource CD. Other information provided includes system requirements, storage array organization, initial software startup and verification, and discussions of utilities and premium features.

MD Storage Manager enables an administrator to configure and monitor storage arrays for optimum usability. MD Storage Manager operates on both Microsoft® Windows® and Linux operating systems and can send alerts about storage array error conditions by either e-mail or Simple Network Management Protocol (SNMP). These alerts can be set for instant notification or at regular intervals.

System Requirements

Before installing and configuring the MD3000i hardware and MD Storage Manager software, ensure that the operating system is supported and that the minimum system requirements are met. For more information, refer to the Dell™ PowerVault™ MD3000i Support Matrix available on support.dell.com.

Management Station Hardware Requirements

A management station uses MD Storage Manager to configure and manage storage arrays across the network. Any system designated as a management station must be an x86-based system that meets the following minimum requirements:

• Intel® Pentium® or equivalent CPU (133 MHz or faster)

• 128 MB RAM (256 MB recommended)

• 120 MB disk space available

• Administrator or equivalent permissions

• Minimum display setting of 800 x 600 pixels with 256 colors (1024 x 768 pixels with 16-bit color recommended)
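As an illustration only, the minimums listed above can be expressed as a simple checklist. The following Python sketch assumes you have already gathered the candidate system's specifications yourself; the `StationSpec` fields and the `check_station` helper are hypothetical names, not part of MD Storage Manager.

```python
from dataclasses import dataclass

# Minimum values copied from the requirements list; the helper itself is hypothetical.
MIN_CPU_MHZ = 133
MIN_RAM_MB = 128
MIN_DISK_MB = 120
MIN_WIDTH, MIN_HEIGHT, MIN_COLORS = 800, 600, 256


@dataclass
class StationSpec:
    is_x86: bool
    cpu_mhz: int
    ram_mb: int
    free_disk_mb: int
    width: int
    height: int
    colors: int
    has_admin_rights: bool


def check_station(spec: StationSpec) -> list:
    """Return the unmet minimum requirements; an empty list means the station qualifies."""
    problems = []
    if not spec.is_x86:
        problems.append("must be an x86-based system")
    if spec.cpu_mhz < MIN_CPU_MHZ:
        problems.append("CPU must run at 133 MHz or faster")
    if spec.ram_mb < MIN_RAM_MB:
        problems.append("at least 128 MB RAM required (256 MB recommended)")
    if spec.free_disk_mb < MIN_DISK_MB:
        problems.append("at least 120 MB free disk space required")
    if spec.width < MIN_WIDTH or spec.height < MIN_HEIGHT or spec.colors < MIN_COLORS:
        problems.append("display must be at least 800 x 600 with 256 colors")
    if not spec.has_admin_rights:
        problems.append("Administrator or equivalent permissions required")
    return problems


station = StationSpec(True, 2000, 512, 10_000, 1024, 768, 65_536, True)
print(check_station(station))  # []
```

The recommended values (256 MB RAM, 1024 x 768 with 16-bit color) are only mentioned in messages, because the guide treats them as recommendations rather than requirements.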

Introduction to Storage Arrays

A storage array includes various hardware components, such as physical disks, RAID controller modules, fans, and power supplies, gathered into enclosures. An enclosure containing physical disks accessed through RAID controller modules is called a RAID enclosure.

One or more host servers attached to the storage array can access the data on the storage array. You can also establish multiple physical paths between the host(s) and the storage array so that loss of any single path (through failure of a host server port, for example) does not result in total loss of access to data on the storage array.

The storage array is managed by MD Storage Manager software running on either a host server or a dedicated management station. On a host server system, MD Storage Manager and the storage array communicate management requests and event information directly via iSCSI ports. On a dedicated management station, MD Storage Manager communicates with the storage array either through an Ethernet connection on the RAID controller modules or via the host agent installed on the host server.

Using MD Storage Manager, you configure the physical disks in the storage array into logical components called disk groups, then divide the disk groups into virtual disks. You can make as many disk groups and virtual disks as your storage array configuration and hardware permit. Disk groups are created in the unconfigured capacity of a storage array, while virtual disks are created in the free capacity of a disk group.

Unconfigured capacity consists of the physical disks not already assigned to a disk group. When a virtual disk is created using unconfigured capacity, a disk group is automatically created. If the only virtual disk in a disk group is deleted, the disk group is also deleted. Free capacity is space in a disk group that has not been assigned to a virtual disk.
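The relationship between unconfigured capacity, disk groups, free capacity, and virtual disks can be sketched as a small data model. This Python example is a simplified illustration, not a description of MD Storage Manager internals: it ignores RAID overhead (a disk group's capacity is just the sum of its disks), and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # identity comparison, so list.remove() drops exactly this group
class DiskGroup:
    disks: list
    capacity_gb: int  # simplified: raw capacity of member disks, RAID overhead ignored
    virtual_disks: dict = field(default_factory=dict)

    @property
    def free_gb(self) -> int:
        # Free capacity: space in the disk group not yet assigned to a virtual disk.
        return self.capacity_gb - sum(self.virtual_disks.values())


class StorageArray:
    def __init__(self, disk_sizes_gb: dict):
        self.disk_sizes_gb = disk_sizes_gb
        self.groups = []

    def unconfigured_disks(self) -> list:
        # Unconfigured capacity: physical disks not assigned to any disk group.
        used = {d for g in self.groups for d in g.disks}
        return [d for d in self.disk_sizes_gb if d not in used]

    def create_vd_from_unconfigured(self, name: str, disks: list, size_gb: int) -> DiskGroup:
        # Creating a virtual disk in unconfigured capacity implicitly creates a disk group.
        if not disks or not set(disks) <= set(self.unconfigured_disks()):
            raise ValueError("disks must come from unconfigured capacity")
        group = DiskGroup(list(disks), sum(self.disk_sizes_gb[d] for d in disks))
        if size_gb > group.capacity_gb:
            raise ValueError("virtual disk larger than the new disk group")
        group.virtual_disks[name] = size_gb
        self.groups.append(group)
        return group

    def create_vd_in_group(self, group: DiskGroup, name: str, size_gb: int) -> None:
        # Additional virtual disks are carved from the group's free capacity.
        if size_gb > group.free_gb:
            raise ValueError("not enough free capacity in the disk group")
        group.virtual_disks[name] = size_gb

    def delete_vd(self, group: DiskGroup, name: str) -> None:
        del group.virtual_disks[name]
        # Deleting the only remaining virtual disk also deletes its disk group.
        if not group.virtual_disks:
            self.groups.remove(group)


array = StorageArray({"d0": 300, "d1": 300, "d2": 300, "d3": 300})
group = array.create_vd_from_unconfigured("inventory", ["d0", "d1"], 200)
array.create_vd_in_group(group, "finance", 150)
print(group.free_gb, array.unconfigured_disks())  # 250 ['d2', 'd3']
array.delete_vd(group, "inventory")
array.delete_vd(group, "finance")
print(len(array.groups))  # 0
```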

Data is written to the physical disks in the storage array using RAID technology. RAID levels define the way in which data is written to physical disks. Different RAID levels offer different levels of accessibility, redundancy, and capacity. You can set a specified RAID level for each disk group and virtual disk on your storage array.

You can also provide an additional layer of data redundancy by creating disk groups that have a RAID level other than 0. Hot spares can automatically replace physical disks marked as Failed.
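To make the capacity trade-off between RAID levels concrete, the sketch below applies the common textbook formulas for a disk group built from identical disks. It ignores metadata overhead and does not claim which RAID levels the MD3000i offers; check the Dell™ PowerVault™ Modular Disk Storage Manager User’s Guide for the supported levels and disk-count limits.

```python
def usable_capacity_gb(level: str, disk_count: int, disk_size_gb: int) -> int:
    """Approximate usable capacity of a disk group of identical disks (textbook formulas)."""
    n, s = disk_count, disk_size_gb
    if level == "0":
        if n < 1:
            raise ValueError("RAID 0 needs at least one disk")
        return n * s                # striping only: all capacity usable, no redundancy
    if level in ("1", "10"):
        if n < 2 or n % 2:
            raise ValueError("mirroring needs an even number of disks (2 or more)")
        return n * s // 2           # every block is stored twice
    if level == "5":
        if n < 3:
            raise ValueError("RAID 5 needs at least three disks")
        return (n - 1) * s          # one disk's worth of capacity holds parity
    if level == "6":
        if n < 4:
            raise ValueError("RAID 6 needs at least four disks")
        return (n - 2) * s          # two disks' worth of capacity holds parity
    raise ValueError(f"RAID level {level!r} not modeled here")


for level in ("0", "10", "5", "6"):
    print(f"RAID {level}: {usable_capacity_gb(level, 6, 300)} GB usable from 6 x 300 GB")
```

The more redundancy a level provides, the less of the raw capacity remains usable; RAID 0 keeps all of it but, as noted above, adds no redundancy.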

For more information on using RAID and managing data in your storage solution, see the Dell™ PowerVault™ Modular Disk Storage Manager User’s Guide.

Hardware Installation

This chapter provides guidelines for planning the physical configuration of your Dell™ PowerVault™ MD3000i storage array and for connecting one or more hosts to the array. For complete information on hardware configuration, see the Dell™ PowerVault™ MD3000i Hardware Owner’s Manual.

Storage Configuration Planning

Consider the following items before installing your storage array:

• Evaluate data storage needs and administrative requirements.

• Calculate availability requirements.

• Decide the frequency and level of backups, such as weekly full backups with daily partial backups.

• Consider storage array options, such as password protection and e-mail alert notifications for error conditions.

• Design the configuration of virtual disks and disk groups according to a data organization plan. For example, use one virtual disk for inventory, a second for financial and tax information, and a third for customer information.

• Decide whether to allow space for hot spares, which automatically replace failed physical disks.

• If you will use premium features, consider how to configure virtual disk copies and snapshot virtual disks.

About the Enclosure Connections

The RAID array enclosure is connected to an iSCSI-enabled host server via one or two RAID controller modules. The RAID controller modules are identified as RAID controller module 0 and RAID controller module 1 (see the PowerVault MD3000i Hardware Owner’s Manual for more information).

Each RAID controller module contains two iSCSI In port connectors that provide direct connections to the host server or switches. The iSCSI In port connectors are labeled In-0 and In-1 (see the PowerVault MD3000i Hardware Owner’s Manual for more information).

Each MD3000i RAID controller module also contains an Ethernet management port and a SAS Out port connector. The Ethernet management port allows you to install a dedicated management station (server or standalone system). The SAS Out port allows you to connect the RAID enclosure to an optional expansion enclosure (MD1000) for additional storage capacity.

Cabling the Enclosure

You can connect up to 16 hosts and two expansion enclosures to the storage array.

To plan your configuration, complete the following tasks:

1. Evaluate your data storage needs and administrative requirements.

2. Determine your hardware capabilities and how you plan to organize your data.

3. Calculate your requirements for the availability of your data.

4. Determine how you plan to back up your data.

The iSCSI interface provides many versatile host-to-controller configurations. For the purposes of this manual, the most conventional topologies are described. The figures in this chapter are grouped according to the following general categories:

• Direct-attached solutions

• Network-attached (SAN) solutions

Redundancy vs. Nonredundancy

Nonredundant configurations, which provide only a single data path from a host to the RAID enclosure, are recommended only for non-critical data storage. A path failure caused by a failed or removed cable, a failed NIC, or a failed or removed RAID controller module results in loss of host access to storage on the RAID enclosure.

Redundancy is established by installing separate data paths between the host and the storage array, with each path connected to a different RAID controller module. Redundancy protects the host from losing access to data in the event of a path failure, because both RAID controllers can access all the disks in the storage array.
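The difference between the two approaches can be modeled by listing each host's data paths and asking whether any path survives a single failure. In this Python sketch a path is reduced to a host NIC and a RAID controller module; the NIC names are hypothetical, and a cable failure can be modeled by removing the path that uses that cable.

```python
from typing import NamedTuple, Optional


class DataPath(NamedTuple):
    nic: str         # host network port (hypothetical name such as "nic0")
    controller: int  # RAID controller module 0 or 1


def is_redundant(paths: list) -> bool:
    """Redundant only if separate paths use different NICs and different controllers."""
    return len({p.nic for p in paths}) >= 2 and len({p.controller for p in paths}) >= 2


def has_access_after(paths: list, failed_nic: Optional[str] = None,
                     failed_controller: Optional[int] = None) -> bool:
    """True if at least one path avoids both the failed NIC and the failed controller."""
    return any(p.nic != failed_nic and p.controller != failed_controller for p in paths)


single = [DataPath("nic0", 0)]
dual = [DataPath("nic0", 0), DataPath("nic1", 1)]
print(is_redundant(single), is_redundant(dual))       # False True
print(has_access_after(single, failed_controller=0))  # False
print(has_access_after(dual, failed_controller=0))    # True
print(has_access_after(dual, failed_nic="nic1"))      # True
```

Because both RAID controllers can reach every disk, any surviving path is enough to keep the host's data accessible.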

Direct-Attached Solutions

You can cable from the Ethernet ports of your host servers directly to your MD3000i RAID controller iSCSI ports. Direct attachments support single path configurations (for up to four servers) and dual path data configurations (for up to two servers) for both single and dual controller modules.

Single Path Data Configurations

With a single path configuration, a group of heterogeneous clients can be connected to the MD3000i RAID controller through a single physical Ethernet port. Because there is only the single port, there is no redundancy (although each iSCSI portal supports multiple connections). This configuration is supported for both single controller and dual controller modes.

Figure 2-1 and Figure 2-2 show the supported nonredundant cabling configurations to MD3000i RAID controller modules using the single path data configuration. Figure 2-1 shows a single controller array configuration. Figure 2-2 shows how four standalone servers are supported in a dual controller array configuration.

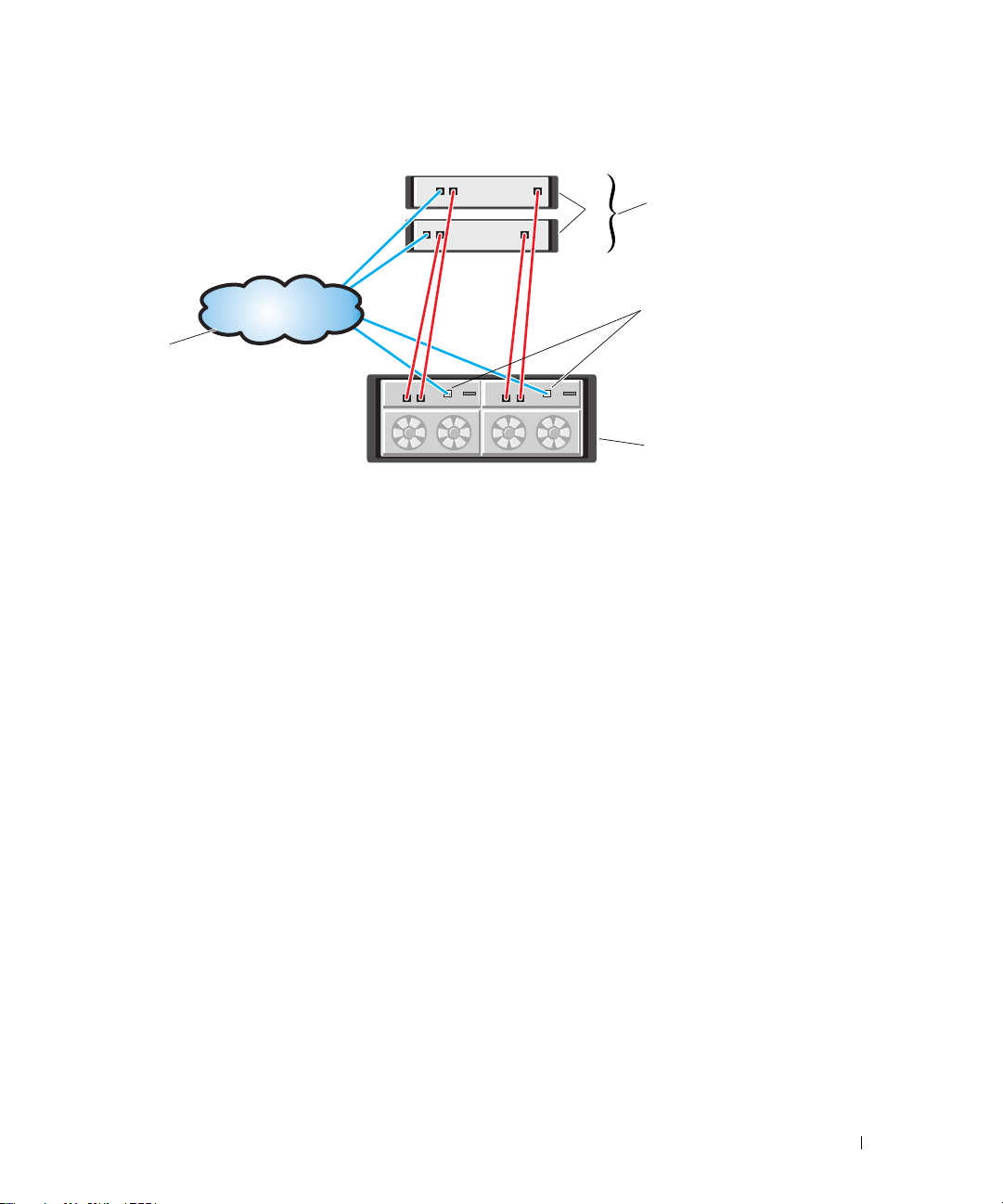

Figure 2-1. One or Two Direct-Attached Servers (or Two-Node Cluster), Single-Path Data, Single Controller (Simplex)

Legend: 1 standalone (one or two) host server; 2 two-node cluster; 3 Ethernet management port; 4 MD3000i RAID Enclosure (single controller); 5 corporate, public or private network. The management traffic path is also labeled in the figure.

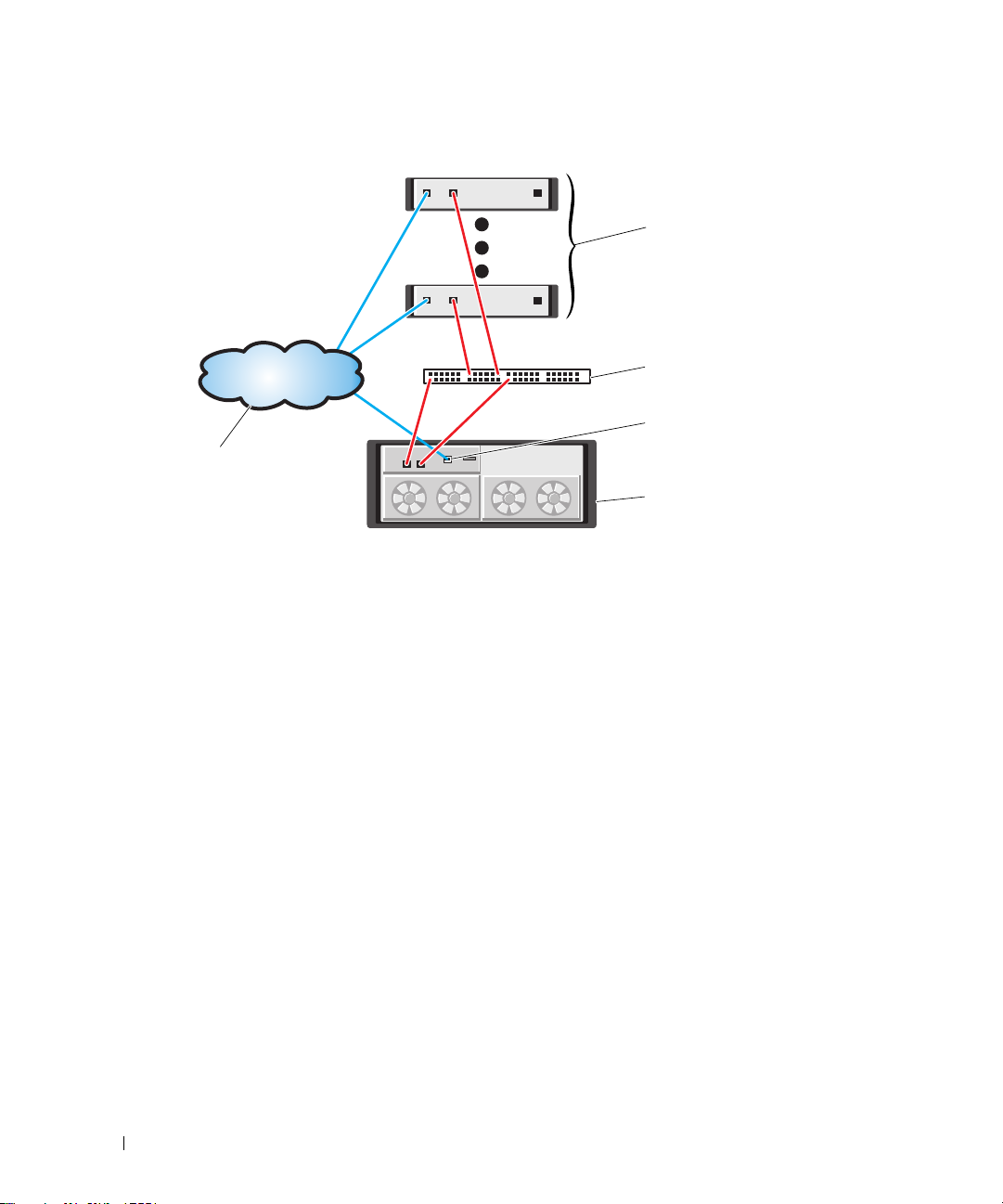

Figure 2-2. Up to Four Direct-Attached Servers, Single-Path Data, Dual Controllers (Duplex)

Legend: 1 standalone (up to four) host server; 2 Ethernet management port (2); 3 MD3000i RAID Enclosure (dual controllers); 4 corporate, public or private network. The management traffic path is also labeled in the figure.

Dual Path Data Configuration

In Figure 2-3, up to two servers are directly attached to the MD3000i RAID controller module. If the host server has a second Ethernet connection to the array, it can be attached to the iSCSI ports on the array’s second controller. This configuration provides improved availability by allowing two separate physical paths for each host, which ensures full redundancy if one of the paths fails.

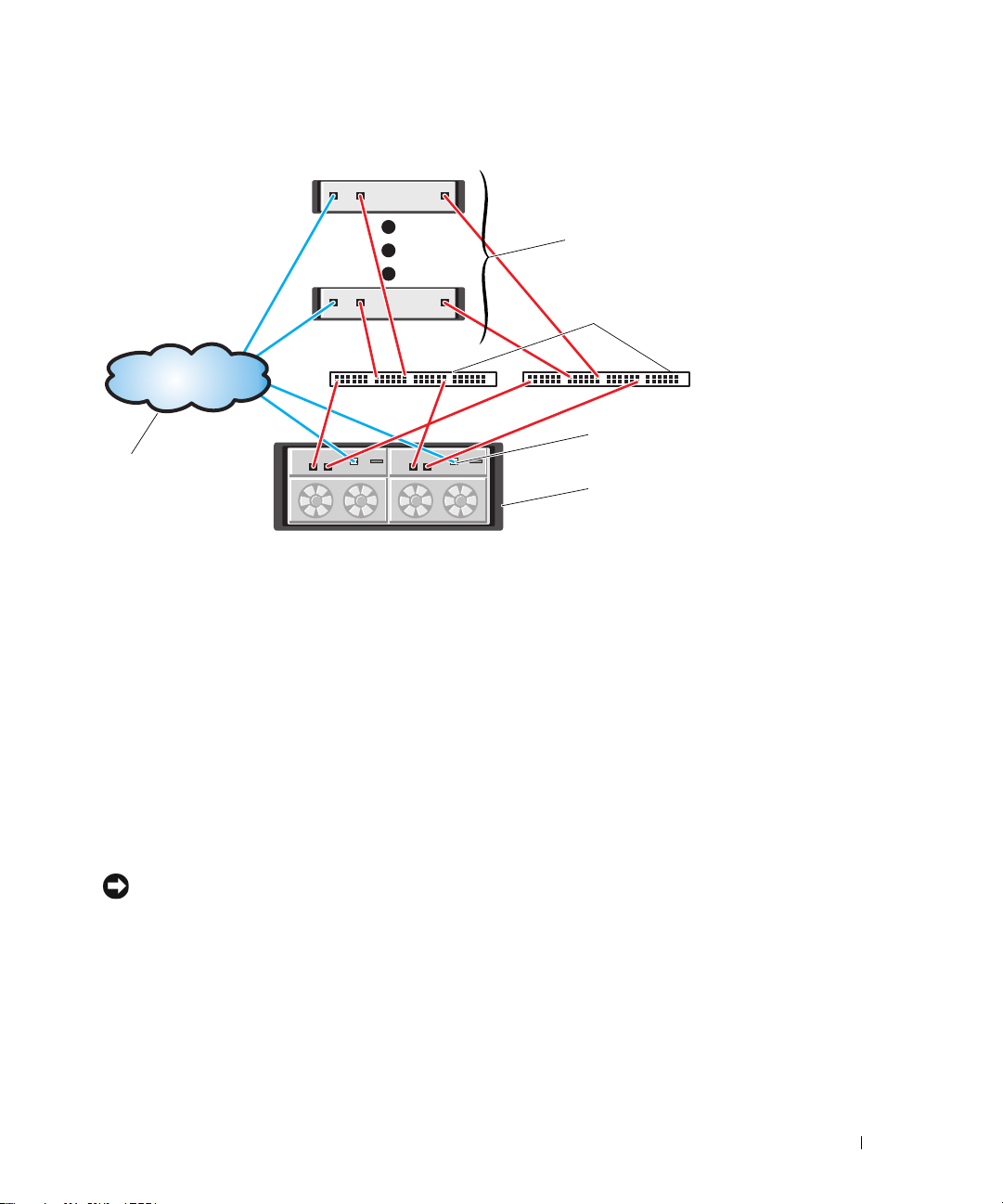

Figure 2-3. One or Two Direct-Attached Servers (or Two-Node Cluster), Dual-Path Data, Dual Controllers (Duplex)

Legend: 1 standalone (one or two) host server; 2 two-node cluster; 3 Ethernet management port (2); 4 MD3000i RAID Enclosure (dual controllers); 5 corporate, public or private network.

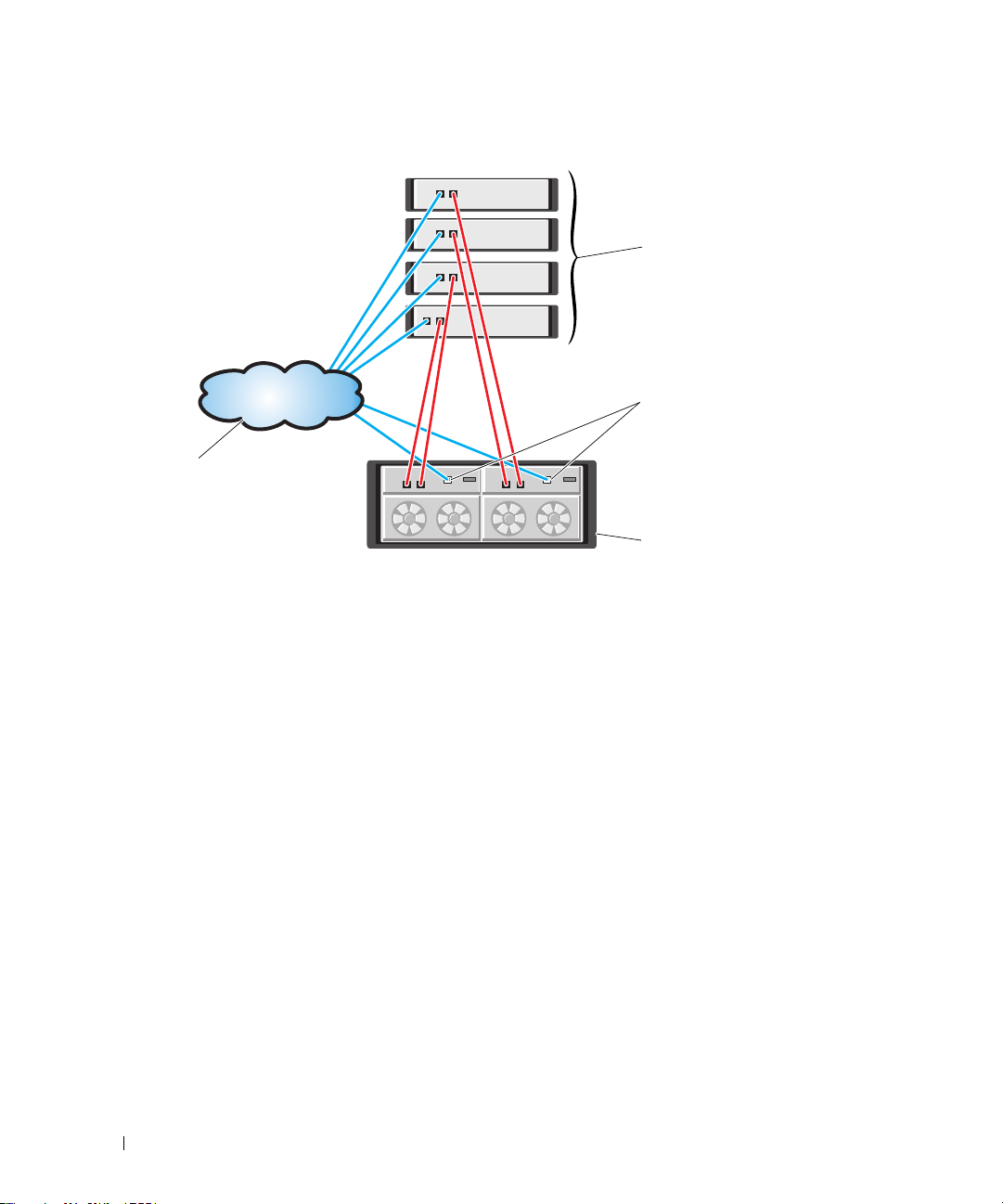

Network-Attached Solutions

You can also cable your host servers to the MD3000i RAID controller iSCSI ports through an IP storage area network (SAN) industry-standard Gigabit Ethernet switch. By using an IP SAN "cloud" Ethernet switch, the MD3000i RAID controller can support up to 16 hosts simultaneously with multiple connections per session. This solution supports either single- or dual-path data configurations, as well as either single or dual controller modules.

Figure 2-4 shows how up to 16 standalone servers can be attached (via multiple sessions) to a single MD3000i RAID controller module through a network. Hosts that have a second Ethernet connection to the network allow two separate physical paths for each host, which ensures full redundancy if one of the paths fails. Figure 2-5 shows how the same number of hosts can be similarly attached to a dual MD3000i RAID controller array configuration.
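The connection limits stated in this chapter can be collected into a quick sanity check for a planned topology. This Python sketch encodes the 16-host and two-expansion-enclosure limits from the text and, as an assumption, treats each direct-attached path as occupying one of the two iSCSI In ports (In-0, In-1) on a RAID controller module. Under that assumption it reproduces the documented direct-attach cases: four single-path servers or two dual-path servers with dual controllers, and one or two single-path servers with a single controller. It is a planning aid only; the Support Matrix remains authoritative.

```python
from dataclasses import dataclass

MAX_HOSTS = 16                   # hosts per storage array (from this chapter)
MAX_EXPANSION_ENCLOSURES = 2     # MD1000 enclosures per MD3000i
ISCSI_PORTS_PER_CONTROLLER = 2   # In-0 and In-1 on each RAID controller module


@dataclass
class Topology:
    controllers: int      # 1 = single controller (simplex), 2 = dual controllers (duplex)
    hosts: int
    paths_per_host: int   # 1 = single-path data, 2 = dual-path data
    switched: bool        # True = hosts reach the array through an IP SAN switch
    expansion_enclosures: int = 0


def validate(t: Topology) -> list:
    """Return a list of limit violations for a planned topology (empty list = OK)."""
    errors = []
    if t.controllers not in (1, 2):
        errors.append("an MD3000i has one or two RAID controller modules")
    if t.paths_per_host not in (1, 2):
        errors.append("each host uses one or two data paths")
    if t.hosts > MAX_HOSTS:
        errors.append(f"at most {MAX_HOSTS} hosts per storage array")
    if t.expansion_enclosures > MAX_EXPANSION_ENCLOSURES:
        errors.append(f"at most {MAX_EXPANSION_ENCLOSURES} MD1000 expansion enclosures")
    if not t.switched:
        # Assumption: every direct-attached path occupies its own iSCSI In port.
        ports = t.controllers * ISCSI_PORTS_PER_CONTROLLER
        needed = t.hosts * t.paths_per_host
        if needed > ports:
            errors.append(f"direct attach needs {needed} iSCSI ports but only {ports} exist")
    return errors


print(validate(Topology(controllers=2, hosts=4, paths_per_host=1, switched=False)))  # []
print(validate(Topology(controllers=2, hosts=3, paths_per_host=2, switched=False)))
# ['direct attach needs 6 iSCSI ports but only 4 exist']
```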

Figure 2-4. Up to 16 SAN-Configured Servers, Single-Path Data, Single Controller (Simplex)

Legend: 1 up to 16 standalone host servers; 2 IP SAN (Gigabit Ethernet switch); 3 Ethernet management port; 4 MD3000i RAID Enclosure (single controller); 5 corporate, public or private network.

Figure 2-5. Up to 16 Dual SAN-Configured Servers, Dual-Path Data, Dual Controllers (Duplex)

Legend: 1 up to 16 standalone host servers; 2 IP SAN (dual Gigabit Ethernet switches); 3 Ethernet management port (2); 4 MD3000i RAID Enclosure (dual controllers); 5 corporate, public or private network.

Attaching MD1000 Expansion Enclosures

One of the features of the MD3000i is the ability to add up to two MD1000 expansion enclosures for additional capacity. This expansion increases the maximum physical disk pool to 45 3.5" SAS and/or SATA II physical disks.
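The 45-disk figure follows from the enclosure sizes. Assuming 15 physical disk slots in the MD3000i and in each MD1000 (which is consistent with the stated maximum), the arithmetic is 15 × (1 + 2) = 45, as this short Python sketch shows; the function name is illustrative only.

```python
SLOTS_PER_ENCLOSURE = 15  # assumption: 15 x 3.5" slots in the MD3000i and in each MD1000
MAX_MD1000 = 2


def max_physical_disks(md1000_count: int) -> int:
    """Physical disk slots available with 0, 1, or 2 MD1000 expansion enclosures."""
    if not 0 <= md1000_count <= MAX_MD1000:
        raise ValueError("the MD3000i supports up to two MD1000 enclosures")
    return SLOTS_PER_ENCLOSURE * (1 + md1000_count)


print([max_physical_disks(n) for n in range(MAX_MD1000 + 1)])  # [15, 30, 45]
```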

As described in the following sections, you can expand with either a brand new MD1000 or an MD1000 that has been previously configured in a direct-attach solution with a PERC 5/E system.

NOTICE: Ensure that all MD1000 expansion enclosures being connected to the MD3000i are first updated to the latest Dell MD1000 EMM Firmware (available from support.dell.com). Dell MD1000 EMM Firmware versions prior to A03 are not supported in an MD3000i array; attaching an MD1000 with unsupported firmware causes an uncertified condition to exist on the array. See the following procedure for more information.
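Before cabling, it can help to screen the EMM firmware revision of each enclosure against the A03 minimum. The Python sketch below assumes revisions are reported in the letter-plus-two-digit form shown in the notice (for example, A03); the enclosure names and revisions in the usage lines are made-up sample data.

```python
import re

MIN_EMM_REVISION = 3  # A03, the oldest EMM firmware supported behind an MD3000i


def emm_firmware_supported(revision: str) -> bool:
    """True if a revision string such as 'A03' is A03 or later.

    Assumes the letter 'A' followed by two digits; other formats raise ValueError.
    """
    match = re.fullmatch(r"A(\d{2})", revision.strip().upper())
    if match is None:
        raise ValueError(f"unrecognized EMM firmware revision: {revision!r}")
    return int(match.group(1)) >= MIN_EMM_REVISION


# Made-up inventory of enclosures and their reported EMM firmware revisions.
enclosures = {"MD1000-1": "A02", "MD1000-2": "A04"}
needs_update = [name for name, rev in enclosures.items() if not emm_firmware_supported(rev)]
print(needs_update)  # ['MD1000-1']
```

Rejecting unrecognized formats, rather than guessing, keeps an unexpected revision string from being treated as supported.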


Expanding with Previously Configured MD1000 Enclosures

Use this procedure if your MD1000 is currently directly attached to and configured on a Dell PERC 5/E system. Data from virtual disks created on a PERC 5 SAS controller cannot be directly migrated to an MD3000i or to an MD1000 expansion enclosure connected to an MD3000i.

NOTICE: If an MD1000 that was previously attached to a PERC 5 SAS controller is used as an expansion enclosure to an MD3000i, the physical disks of the MD1000 enclosure will be reinitialized and data will be lost. All data on the MD1000 must be backed up before attempting the expansion.

Perform the following steps to attach previously configured MD1000 expansion enclosures to the MD3000i:

1. Back up all data on the MD1000 enclosure(s).

2. While the enclosure is still attached to the PERC 5 controller, upgrade the MD1000 firmware to version A03 or above. Windows users can use the DUP.exe package; Linux users can use the DUP.bin package.

3. Before adding the MD1000 enclosure(s), make sure the MD3000i software is installed and up to date. For more information, refer to the Dell™ PowerVault™ MD3000i Support Matrix available on support.dell.com.

a. Install or update (to the latest version available on support.dell.com) the MD Storage Manager on each host server. Install or update (to the latest version available on support.dell.com) the multipath drivers on each host server. The multipath drivers are bundled with the MD Storage Manager installation. On Windows systems, the drivers are automatically installed when a Full or Host selection is made.

b. Using the MD Storage Manager, update the MD3000i RAID controller firmware to the latest version available on support.dell.com (Support→ Download Firmware→ Download RAID Controller Module Firmware) and the NVSRAM (Support→ Download Firmware→ Download RAID Controller Module NVSRAM).

4. Stop I/O and turn off all systems:

a. Stop all I/O to the array and turn off affected host systems attached to the MD3000i.

b. Turn off the MD3000i.

c. Turn off the MD1000 enclosure(s).

5. Referencing the applicable configuration for your rack (Figure 2-1 through Figure 2-5), cable the MD1000 enclosure(s) to the MD3000i.

6. Turn on attached units:

a. Turn on the MD1000 expansion enclosure(s). Wait for the enclosure status LED to light blue.

b. Turn on the MD3000i and wait for the status LED to indicate that the unit is ready:

• If the status LEDs light a solid amber, the MD3000i is still coming online.

• If the status LEDs are blinking amber, there is an error that can be viewed using the MD Storage Manager.

• If the status LEDs light a solid blue, the MD3000i is ready.

c. After the MD3000i is online and ready, turn on any attached host systems.

7. After the MD1000 is configured as the expansion enclosure to the MD3000i, restore the data that was backed up in step 1.

After they are online, the MD1000 enclosures are available for use within the MD3000i system.
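The status LED checks during power-on amount to a small lookup table. This Python sketch encodes only the three LED patterns listed in the procedure; the function name and the `ArrayState` enumeration are hypothetical, and any other pattern is deliberately reported as unknown rather than guessed.

```python
from enum import Enum


class ArrayState(Enum):
    STARTING = "still coming online"
    ERROR = "error; view it in MD Storage Manager"
    READY = "ready"


def interpret_status_led(color: str, blinking: bool) -> ArrayState:
    """Translate the MD3000i status LED pattern into the states listed in the procedure."""
    color = color.strip().lower()
    if color == "amber":
        return ArrayState.ERROR if blinking else ArrayState.STARTING
    if color == "blue" and not blinking:
        return ArrayState.READY
    raise ValueError("pattern not covered by this procedure; see the Hardware Owner's Manual")


# Power on attached hosts only once the array reports READY.
state = interpret_status_led("blue", blinking=False)
print(state.name, state is ArrayState.READY)  # READY True
```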

Expanding with New MD1000 Enclosures

Perform the following steps to attach new MD1000 expansion enclosures to the MD3000i:

1. Before adding the MD1000 enclosure(s), make sure the MD3000i software is installed and up to date. For more information, refer to the Dell™ PowerVault™ MD3000i Support Matrix available on support.dell.com.

a. Install or update (to the latest version available on support.dell.com) the MD Storage Manager on each host server.

b. Install or update (to the latest version available on support.dell.com) the multipath drivers on each host server.

c. Using the MD Storage Manager, update the MD3000i RAID controller firmware (Support→ Download Firmware→ Download RAID Controller Module Firmware) and the NVSRAM (Support→ Download Firmware→ Download RAID Controller Module NVSRAM).

2. Stop I/O and turn off all systems:

a. Stop all I/O to the array and turn off affected host systems attached to the MD3000i.

b. Turn off the MD3000i.

c. Turn off any MD1000 enclosures in the affected system.

3. Referencing the applicable configuration for your rack (Figure 2-1 through Figure 2-5), cable the MD1000 enclosure(s) to the MD3000i.

4. Turn on attached units:

a. Turn on the MD1000 expansion enclosure(s). Wait for the enclosure status LED to light blue.

b. Turn on the MD3000i and wait for the status LED to indicate that the unit is ready:

• If the status LEDs light a solid amber, the MD3000i is still coming online.

• If the status LEDs are blinking amber, there is an error that can be viewed using the MD Storage Manager.

• If the status LEDs light a solid blue, the MD3000i is ready.

c. After the MD3000i is online and ready, turn on any attached host systems.

5. Using the MD Storage Manager, update all attached MD1000 firmware if it is out of date:

a. Select Support→ Download Firmware→ Download Environmental (EMM) Card Firmware.

b. Check the Select All check box so that all attached MD1000 enclosures are updated at the same time (each takes approximately 8 minutes to update).

Software Installation

The MD3000i Resource CD contains all documentation pertinent to MD3000i hardware and MD Storage Manager software. It also includes software and drivers for both Linux and Microsoft® Windows® operating systems.

The MD3000i Resource CD contains a readme.txt file covering changes to the software, updates, fixes, patches, and other important data applicable to both Linux and Windows operating systems. The readme.txt file also specifies requirements for accessing documentation, information regarding versions of the software on the CD, and system requirements for running the software.

For more information on supported hardware and software for Dell™ PowerVault™ systems, refer to the Dell™ PowerVault™ MD3000i Support Matrix located at support.dell.com.

Dell recommends installing all the latest updates available at support.dell.com.

System Assembly and Startup

Use the following procedure to assemble and start your system for the first time:

1. Install the NIC(s) in each host server that you attach to the MD3000i Storage Array, unless the NIC was factory installed. For general information on setting up your IP addresses, see "Guidelines for Configuring Your Network for iSCSI."

2. Cable the storage array to the host server(s), either directly or via a switch.

3. Cable the Ethernet management ports on the storage array to either the management network (iSCSI-attached host server) or dedicated management station (non-iSCSI).

4. Power on the storage array and wait for the status LED to turn blue.

5. Start up each host server that is cabled to the storage array.

Install the iSCSI Initiator Software (iSCSI-attached Host Servers Only)

To configure iSCSI later in this document (see "Array Setup and iSCSI Configuration"), you must install the Microsoft iSCSI initiator on any host server that will access your storage array before you install the MD Storage Manager software.

NOTE: Windows Server® 2008 contains a built-in iSCSI initiator. If your system is running Windows Server 2008, you do not need to install the iSCSI initiator as shown in this section. Skip directly to "Installing MD Storage Software."

Depending on whether you are using a Windows Server 2003 operating system or a Linux operating system, refer to the following steps for downloading and installing the iSCSI initiator.

Installing the iSCSI Initiator on a Windows Host Server

1. Refer to the Dell™ PowerVault™ MD3000i Support Matrix on support.dell.com for the latest version and download location of the Microsoft iSCSI Software Initiator software.

2. From the host server, download the iSCSI Initiator software.

3. Once the installation begins and the Microsoft iSCSI Initiator Installation setup panel appears, select Initiator Service and Software Initiator.

4. DO NOT select Microsoft MPIO Multipathing Support for iSCSI.

NOTICE: Make sure the Microsoft MPIO Multipathing Support for iSCSI option is NOT selected. Using this option will cause the iSCSI initiator setup to function improperly.

5. Accept the license agreement and finish the install.

NOTE: If you are prompted to do so, reboot your system.

Installing the iSCSI Initiator on a Linux Host Server

Follow the steps in this section to install the iSCSI initiator on a Linux server.

NOTE: All appropriate Linux iSCSI initiator patches are installed using the MD3000i Resource CD during MD Storage Manager Software installation.

Installing the iSCSI Initiator on a RHEL 4 System

You can install the iSCSI initiator software on Red Hat® Enterprise Linux® 4 systems either during or after operating system installation.

To install the iSCSI initiator during RHEL 4 installation:

1. When the Package Installation Defaults screen is displayed, select the Customize the set of Packages to be installed option. Click Next to go to the Package Group Selection screen.

2. In the Servers list, select the Network Servers package and click Details to display a list of Network Server applications.

3. Select the iscsi-initiator-utils - iSCSI daemon and utility programs option.

4. Click OK, then Next to continue with the installation.

To install the iSCSI initiator after RHEL 4 installation:

1. From the desktop, click Applications→ System Settings→ Add Remove Applications. The Package Group Selection screen is displayed.

2. In the Servers list, select the Network Servers package and click Details to display a list of Network Server applications.

3. Select the iscsi-initiator-utils - iSCSI daemon and utility programs option.

4. Click Close, then Update.

NOTE: Depending on your installation method, the system will ask for the required source to install the package.

Installing the iSCSI Initiator on a RHEL 5 System

You can install the iSCSI initiator software on Red Hat Enterprise Linux 5 systems either during or after operating system installation. With this version of the Linux software, you can also elect to install the iSCSI initiator after the operating system installation via the command line.

To install the iSCSI initiator during RHEL 5 installation:

1. When the Package Installation Defaults screen is displayed, select the Customize now option. Click Next to go to the Package Group Selection screen.

2. Click Base System, then select the Base option.

3. Click Optional Packages.

4. Select the iscsi-initiator-utils option.

5. Click OK, then Next to continue with the installation.

To install the iSCSI initiator after RHEL 5 installation:

1. From the desktop, select Applications→ Add/Remove Software. The Package Manager screen is displayed.

2. In the Package Manager screen, select the Search tab.

3. Search for iscsi-initiator-utils.

4. When it is displayed, select the iscsi-initiator-utils option.

5. Click Apply.

NOTE: Depending on your installation method, the system will ask for the required source to install the package.

NOTE: This method might not work if network access is not available to a Red Hat Network repository.

To install the iSCSI initiator after RHEL 5 installation via the command line:

Insert the RHEL 5 installation CD 1 or DVD. If your media is not automounted, you must manual

1

mount it. The

2

Run the following command:

iscsi-initiatorutils.rpm

file is located in the Server or Client subdirectory.

rpm -i /path/to/media/Server/iscsi-initiatorutils.rpm
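Because the exact RPM file name varies with the package version, a small helper can locate it on the mounted media before you run rpm. The following is a minimal bash sketch, assuming the media is already mounted and that the file name begins with iscsi-initiator-utils and ends in .rpm; the function name find_initiator_rpm is hypothetical and is not part of the Resource CD or of RHEL.

```shell
#!/usr/bin/env bash
# find_initiator_rpm MEDIA_DIR: print the path of the first
# iscsi-initiator-utils RPM found in the Server, then the Client,
# subdirectory of the mounted RHEL 5 media. Returns 1 if none is found.
find_initiator_rpm() {
    local media=$1 dir f
    for dir in Server Client; do
        for f in "$media/$dir"/iscsi-initiator-utils*.rpm; do
            # An unmatched glob stays literal, so confirm the file exists.
            # If several files match, the first in glob order is printed.
            if [ -f "$f" ]; then
                printf '%s\n' "$f"
                return 0
            fi
        done
    done
    return 1
}
```

For example, rpm_file=$(find_initiator_rpm /media/cdrom) && rpm -i "$rpm_file" installs the package only when a match was found; /media/cdrom stands in for whatever mount point your media actually uses.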

Installing the iSCSI Initiator on a SLES 9 System

You can install the iSCSI initiator software on SUSE® Linux Enterprise Server (SLES) 9 SP3 systems either during or after operating system installation.

To install the iSCSI initiator during SLES 9 installation:

1 At the YaST Installation Settings screen, click Change.

2 Click Software, then select Detailed Selection to see a complete list of packages.

3 Select Various Linux Tools, then select linux-iscsi.

4 Click Accept.

If a dependencies window is displayed, click Continue and proceed with the installation.

To install the iSCSI initiator after SLES 9 installation:

1 From the Start menu, select System→ YaST.

2 Select Software, then Install and Remove Software.

3 In the Search box, enter linux-iscsi.

4 When the linux-iscsi module is displayed, select it.

5 Click Check Dependencies to determine if any dependencies exist.

6 If no dependencies are found, click Accept.

Installing the iSCSI Initiator on a SLES 10 SP1 System

You can install the iSCSI initiator software on SUSE Linux Enterprise Server Version 10 systems either during or after operating system installation.

To install the iSCSI initiator during SLES 10 SP1 installation:

1 At the YaST Installation Settings screen, click Change.

2 Click Software, then select Search.

3 In the Search box, enter iscsi.

4 When the open-iscsi and yast2-iscsi-client modules are displayed, select them.

5 Click Accept.

6 If a dialog box regarding dependencies appears, click Continue and proceed with installation.

To install the iSCSI initiator after SLES 10 SP1 installation:

1 Select Desktop→ YaST→ Software→ Software Management.

2 Select Search.

3 In the Search box, enter iscsi.

4 When the open-iscsi and yast2-iscsi-client modules are displayed, select them.

5 Click Accept.

Installing MD Storage Software

The MD3000i Storage Software provides the host-based storage agent, multipath driver, and MD Storage Manager application used to operate and manage the storage array solution. The MD Storage Manager application is installed on a host server or a management station to configure, manage, and monitor the storage array.

When installing from the CD, three installation types are available:

• Typical (Full installation): This package installs both the management station and host server software. It includes the necessary host-based storage agent, multipath driver, and MD Storage Manager software. Select this option if you plan to use MD Storage Manager on the host server to configure, manage, and monitor the storage array.

• Management Station: This package installs the MD Storage Manager software, which is needed to configure, manage, and monitor the storage array. Select this option if you plan to use MD Storage Manager to manage the storage array from a standalone system that is connected to the storage array only via the Ethernet management ports.

• Host: This package installs the necessary storage agent and multipath driver on a host server connected to the storage array. Select this option on all host servers that are connected to a storage array but will NOT use MD Storage Manager for any storage array management tasks.

NOTE: Dell recommends using the Host installation type if the host server is running Windows Server 2008 Core version.

Installing MD Storage Software on an iSCSI-attached Host Server (Windows)

To install MD Storage Manager on a Windows system, you must have administrative privileges to install MD Storage Manager files and program packages to the C:\Program Files\Dell\MD Storage Manager directory.

NOTE: A minimum version of the Storport driver must be installed on the host server before installing the MD Storage Manager software. A hotfix with the minimum supported version of the Storport driver is located in the \windows\Windows_2003_2008\hotfixes directory on the MD3000i Resource CD. The MD Storage Manager installation will test for the minimum Storport version and will require you to install the hotfix before proceeding.

Complete the following steps to install MD Storage Manager on an iSCSI-connected host server:

1 Close all other programs before installing any new software.

2 Insert the CD, if necessary, and navigate to the main menu.

NOTE: If the host server is running Windows Server 2008 Core version, navigate to the CD drive and run the setup.bat utility.

3 From the main menu, select Install MD3000i Storage Software.

The Installation Wizard appears.

4 Click Next.

5 Accept the terms of the License Agreement, and click Next.

The screen shows the default installation path.

6 Click Next to accept the path, or enter a new path and click Next.

7 Select an installation type:

• Typical (Full installation): This package installs both the management station and host software. It includes the necessary host-based storage agent, multipath driver, and MD Storage Manager software. Select this option if you plan to use MD Storage Manager on the host server to configure, manage, and monitor the storage array.

OR

• Host: This package installs the necessary storage agent and multipath driver on a host server connected to the storage array. Select this option on all hosts that are connected to a storage array but will NOT use MD Storage Manager for any storage array management tasks.

NOTE: Dell recommends using the Host installation type if the host server is running Windows Server 2008 Core version.

8 Click Next.

9 If the Overwrite Warning dialog appears, click OK. The software currently being installed automatically replaces any existing versions of MD Storage Manager.

10 If you selected Typical (full) installation in step 7, a screen appears asking whether to restart the event monitor automatically or manually after rebooting. You should configure only one system (either a host or a management station) to automatically restart the event monitor.

NOTE: The event monitor notifies the administrator of problem conditions with the storage array. MD Storage Manager can be installed on more than one system, but running the event monitor on multiple systems can cause multiple alert notifications to be sent for the same error condition. To avoid this issue, enable the event monitor only on a single system that monitors your storage arrays. For more information on alerts, the event monitor, and manually restarting the event monitor, see the User’s Guide.

11 The Pre-Installation Summary screen appears, showing the installation destination, the required disk space, and the available disk space. If the installation path is correct, click Install.

12 When the installation completes, click Done.

13 A screen appears asking if you want to restart the system now. Select No, I will restart my system myself.

14 If you are setting up a cluster host, double-click the MD3000i Stand Alone to Cluster.reg file located in the windows\utility directory of the MD3000i Resource CD. This merges the file into the node's registry; repeat this step on each node.

NOTE: Windows clustering is supported only on Windows Server 2003 and Windows Server 2008.

If you are reconfiguring a cluster node into a standalone host, double-click the MD3000i Cluster to Stand Alone.reg file located in the windows\utility directory of the MD3000i Resource CD. This merges the file into the host registry.

NOTE: These registry files set the host up for the correct failback operation.

15 If you have third-party applications that use the Microsoft Volume Shadow Copy Service (VSS) or Virtual Disk Service (VDS) Application Programming Interface (API), install the VDS_VSS package located in the windows\VDS_VSS directory on the MD3000i Resource CD. Separate versions for 32-bit and 64-bit operating systems are provided. The VSS and VDS provider will engage only if it is needed.

16 Set the path for the command line interface (CLI), if required. See the MD Storage Manager CLI Guide for more information.

17 Install MD Storage Manager on all other Windows hosts attached to the MD3000i array.

18 If you have not yet cabled your MD3000i Storage Array, do so at this time.

19 After the MD3000i has initialized, reboot each host attached to the array.

NOTE: If you are not installing MD Storage Manager directly from the Resource CD (for example, if you are instead installing MD Storage Manager from a shared network drive), you must manually apply iSCSI updates to the Windows system registry. To apply these updates, go to the \windows\Windows_2003_2008\iSCSI_reg_changer directory on the Resource CD and run the iSCSi_reg_changer_Win2k3.bat or iSCSi_reg_changer_Win2k8.bat file. The iSCSI Initiator must be installed before you make these updates.

Installing MD Storage Software on an iSCSI-attached Host Server (Linux)

MD Storage Manager can be installed and used only on Linux distributions that utilize the RPM Package Manager format, such as Red Hat® or SUSE®. The installation packages are installed by default in the /opt/dell/mdstoragemanager directory.

NOTE: Root privileges are required to install the software.

Follow these steps to install MD Storage Manager software on an iSCSI-connected host server:

1 Close all other programs before installing any new software.

2 Insert the CD. For some Linux installations, when you insert a CD into a drive, a screen appears asking if you want to run the CD. Select Yes if the screen appears. Otherwise, double-click the autorun script in the top directory or, from within a terminal window, run ./install.sh from the linux directory on the CD.

NOTE: On RHEL 5 operating systems, CDs are automounted with the noexec mount option. This option does not allow you to run any executable directly from the CD. To complete this step, you must unmount the CD and then manually remount it; you can then run these executables. The command to unmount the CD-ROM is:

umount CD_device_node

The command to manually mount the CD is:

mount CD_device_node mount_directory
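To decide whether the remount is needed at all, you can check the options of the current CD mount. The following bash sketch reads /proc/mounts, the standard Linux listing of device, mount point, file system type, and options for each mounted file system; the function name is_noexec is illustrative and is not a Dell or RHEL tool.

```shell
#!/usr/bin/env bash
# is_noexec MOUNT_POINT [MOUNTS_FILE]: succeed if MOUNT_POINT is mounted
# with the noexec option. Reads /proc/mounts unless another file is given.
# Mount points containing spaces appear as \040 in /proc/mounts; pass
# the mount point in that escaped form.
is_noexec() {
    local mounts=${2:-/proc/mounts}
    # Hand the mount point to awk through the environment so that awk
    # does not reinterpret backslash sequences such as \040.
    MP="$1" awk '
        $2 == ENVIRON["MP"] {
            n = split($4, opts, ",")          # field 4 holds the mount options
            for (i = 1; i <= n; i++)
                if (opts[i] == "noexec") found = 1
        }
        END { exit !found }
    ' "$mounts"
}
```

For example, if is_noexec /media/cdrom succeeds, perform the umount and mount commands shown above before running ./install.sh; /media/cdrom is only an example of where the CD might be automounted.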

3 At the CD main menu, type 2 and press <Enter>.

The installation wizard appears.

4 Click Next.

5 Accept the terms of the License Agreement and click Next.

6 Select an installation type:

• Typical (Full installation): This package installs both the management station and host options. It includes the necessary host-based storage agent, multipath driver, and MD Storage Manager software. Select this option if you plan to use MD Storage Manager on the host server to configure, manage, and monitor the storage array.

OR

• Host: This package installs the necessary storage agent and multipath driver on a host server connected to the storage array. Select this option on all hosts that are connected to a storage array but will NOT use MD Storage Manager for any storage array management tasks.

7 Click Next.

8 If the Overwrite Warning dialog appears, click OK. The software currently being installed automatically replaces any existing versions of MD Storage Manager.

9 The Multipath Warning dialog box may appear to advise that this installation requires an RDAC MPP driver. If this screen appears, click OK. Installation instructions for the RDAC MPP driver are given in step 13.

10 If you selected Typical (full) installation in step 6, a screen appears asking whether to restart the event monitor automatically or manually after rebooting. You should configure only one system (either a host or a management station) to automatically restart the event monitor.

NOTE: The event monitor notifies the administrator of problem conditions with the storage array. MD Storage Manager can be installed on more than one system, but running the event monitor on multiple systems can cause multiple alert notifications to be sent for the same error condition. To avoid this issue, enable the event monitor only on a single system that monitors your MD3000i arrays. For more information on alerts, the event monitor, and manually restarting the event monitor, see the User’s Guide.

11 The Pre-Installation Summary screen appears, showing the installation destination, the required disk space, and the available disk space. If the installation path is correct, click Install.

12 When the installation completes, click Done.

13 At the install the multi-pathing driver [y/n]? prompt, answer y (yes).

14 When the RDAC driver installation is complete, quit the menu and restart the system.

15 Install MD Storage Manager on all other hosts attached to the MD3000i array.

16 Reboot each host attached to the array.
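After the restart in step 14, you may want to confirm that the multipath driver actually loaded. The bash sketch below parses lsmod output; it assumes the RDAC MPP driver registers kernel modules named mppUpper and mppVhba, which is typical of this driver family but should be confirmed against the driver documentation on the Resource CD. The function name rdac_loaded is hypothetical.

```shell
#!/usr/bin/env bash
# rdac_loaded: read `lsmod` output on stdin and succeed only if both
# assumed RDAC MPP module names (mppUpper and mppVhba) are listed.
rdac_loaded() {
    awk '
        $1 == "mppUpper" { u = 1 }   # first column of lsmod is the module name
        $1 == "mppVhba"  { v = 1 }
        END { exit !(u && v) }
    '
}
```

For example, lsmod | rdac_loaded && echo "RDAC MPP modules loaded" prints the message only when both modules are present.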

Installing a Dedicated Management Station (Windows and Linux)

Optionally, you can manage your storage array over the network from a dedicated system connected to the array through the Ethernet management ports. If you choose this option, follow these steps to install MD Storage Manager on that dedicated system.

1 (Windows) From the CD main menu, select Install MD3000i Storage Software.

2 (Linux) From the CD main menu, type 2 and press <Enter>.

The Installation Wizard appears.

3 Click Next.

4 Accept the terms of the License Agreement and click Next.

5 Click Next to accept the default installation path (Windows), or enter a new path and click Next.

6 Select Management Station as the installation type. This option installs only the MD Storage Manager software used to configure, manage, and monitor an MD3000i storage array.

7 Click Next.

8 If the Overwrite Warning dialog appears, click OK. The software currently being installed automatically replaces any existing versions of MD Storage Manager.

9 A screen appears asking whether to restart the event monitor automatically or manually after rebooting. You should configure only one system (either a host or a management station) to automatically restart the event monitor.

NOTE: The event monitor notifies the administrator of problem conditions with the storage array. MD Storage Manager can be installed on more than one system, but running the event monitor on multiple systems can cause multiple alert notifications to be sent for the same error condition. To avoid this issue, enable the event monitor only on a single system that monitors your MD3000i arrays. For more information on alerts, the event monitor, and manually restarting the event monitor, see the MD Storage Manager User’s Guide.

10 The Pre-Installation Summary screen appears, showing the installation destination, the required disk space, and the available disk space. If the installation path is correct, click Install.

11 When the installation completes, click Done.

A screen appears asking if you want to restart the system now.

12 Restart the system.

13 Set the path for the command line interface (CLI), if required. See the MD Storage Manager CLI Guide for more information.
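On a Linux management station, setting the CLI path usually means adding the directory that contains the CLI executable to the PATH variable. The bash sketch below appends a directory only if it is not already present, so it is safe to run from a shell startup file more than once. The directory in the commented example is a hypothetical location under the default /opt/dell/mdstoragemanager installation directory; confirm the actual location in the MD Storage Manager CLI Guide. The function name add_to_path is illustrative.

```shell
#!/usr/bin/env bash
# add_to_path DIR: append DIR to PATH unless it is already one of its entries.
add_to_path() {
    local dir=$1
    case ":$PATH:" in
        *":$dir:"*) ;;                      # already present; leave PATH as is
        *) PATH="${PATH:+$PATH:}$dir" ;;    # no leading ':' when PATH is empty
    esac
    export PATH
}

# Hypothetical example; check the CLI Guide for the real CLI directory:
# add_to_path /opt/dell/mdstoragemanager/client
```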

Documentation for Windows Systems

Viewing Resource CD Contents

1 Insert the CD. If autorun is disabled, navigate to the CD and double-click setup.exe.

NOTE: On a server running Windows Server 2008 Core version, navigate to the CD and run the setup.bat utility. Only the MD3000i Readme can be viewed on Windows Server 2008 Core versions. Other MD3000i documentation cannot be viewed or installed.

A screen appears showing the following items:

a View MD3000i Readme
b Install MD3000i Storage Software
c Install MD3000i Documentation
d iSCSI Setup Instructions

2 To view the readme.txt file, click the first bar.

The readme.txt file appears in a separate window.

3 Close the window after viewing the file to return to the menu screen.

4 To view the manuals from the CD, open the HTML versions from the /docs/ folder on the CD.

Installing the Manuals

1 Insert the CD, if necessary, and select Install MD3000i Documentation in the main menu.

A second screen appears.

2 Click Next.

3 Accept the License Agreement and click Next.

4 Select the installation location or accept the default and click Next.

5 Click Install.

The installation process begins.

6 When the process completes, click Finish to return to the main menu.

7 To view the installed documents, go to My Computer and navigate to the installation location.

NOTE: The MD3000i Documentation cannot be installed on Windows Server 2008 Core versions.

Documentation for Linux Systems

Viewing Resource CD Contents

1 Insert the CD.

For some Linux distributions, a screen appears asking if you want to run the CD. Select Yes if the screen appears. If no screen appears, execute ./install.sh within the linux folder on the CD.

2 A menu screen appears showing the following items:

1 View MD3000i Readme
2 Install MD3000i Storage Software
3 Install Multi-pathing Driver
4 Install MD3000i Documentation
5 View MD3000i Documentation
6 iSCSI Setup Instructions
7 Dell Support
8 View End User License Agreement

3 If you want to view the readme.txt file, type 1 and press <Enter>.

The readme.txt file appears in a separate window. Close the window after viewing the file to return to the menu screen.

4 To view another document, type 5 and press <Enter>.

A second menu screen appears with the following selections:

MD3000i Owner's Manual
MD3000i Installation Guide
MD Storage Manager CLI Guide
MD Storage Manager User's Guide

NOTE: To view the documents from the CD, you must have a web browser installed on the system.

5 Type the number of the document you want and press <Enter>.

The document opens in a browser window.

6 Close the document when you are finished. The system returns to the documentation menu described in step 4.

7 Select another document or type q and press <Enter> to quit. The system returns to the main menu screen.

Installing the Manuals

1 Insert the CD, if necessary, and from the menu screen, type 4 (Install MD3000i Documentation) and press <Enter>.

2 A screen appears showing the default location for installation. Press <Enter> to accept the path shown, or enter a different path and press <Enter>.

3 When installation is complete, press any key to return to the main menu.

4 To view the installed documents, open a browser window and navigate to the installation directory.

Array Setup and iSCSI Configuration

To use the storage array, you must configure iSCSI on both the host server(s) and the storage array. Step-by-step instructions for configuring iSCSI are described in this section. However, before proceeding here, you must have already installed the iSCSI initiator (Microsoft or Linux) and the MD Storage Manager software. If you have not, refer to Software Installation and complete those procedures before attempting to configure iSCSI.

NOTE: Although some of the steps in this section can be performed in MD Storage Manager from a management station, the iSCSI initiator must be installed and configured on each host server.

Before You Start

Before you begin configuring iSCSI, you should fill out the iSCSI Configuration Worksheet (Table 4-2 for IPv4 or Table 4-3 for IPv6). Gathering this information about your network before starting the configuration steps should help you complete the process in less time.

NOTE: If you are running Windows Server 2008 and elect to use IPv6, use Table 4-3 to define your settings on the host server and storage array controller iSCSI ports. IPv6 is not supported on storage array controller management ports.

Terminology

The table below outlines the terminology used in the iSCSI configuration steps later in this section.

Table 4-1. Standard Terminology Used in iSCSI Configuration

Term | Definition
CHAP (Challenge Handshake Authentication Protocol) | An optional security protocol used to control access to an iSCSI storage system by restricting use of the iSCSI data ports on both the host server and storage array. For more information on the types of CHAP authentication supported, see Understanding CHAP Authentication.
host or host server | A server connected to the storage array via iSCSI ports.
host server port | iSCSI port on the host server used to connect it to the storage array.
iSCSI initiator | The iSCSI-specific software installed on the host server that controls communications between the host server and the storage array.
iSCSI host port | The iSCSI port (two per controller) on the storage array.
iSNS (Microsoft Internet Storage Naming Service) | An automated discovery, management and configuration tool used by some iSCSI devices.
management station | The system from which you manage your host server/storage array configuration.
storage array | The enclosure containing the storage data accessed by the host server.
target | An iSCSI port on the storage array that accepts and responds to requests from the iSCSI initiator installed on the host server.

iSCSI Configuration Worksheet

The iSCSI Configuration Worksheet (Table 4-2 or Table 4-3) helps you plan your configuration. Recording host server and storage array IP addresses in a single location will help you configure your setup faster and more efficiently.

Guidelines for Configuring Your Network for iSCSI provides general network setup guidelines for both Windows and Linux environments. It is recommended that you review these guidelines before completing the worksheet.

Table 4-2. iSCSI Configuration Worksheet (IPv4 settings)

[Figure: host server (A) connected to the MD3000i (B) through controllers cntl. 0 and cntl. 1. Default addresses: cntl. 0: In 0 192.168.130.101, In 1 192.168.131.101, management network port 192.168.128.101; cntl. 1: In 0 192.168.130.102, In 1 192.168.131.102, management network port 192.168.128.102.]

If you need additional space for more than one host server, use an additional sheet.

A (host server) | Static IP address | Subnet (should be different for each NIC) | Default gateway
iSCSI port 1 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI port 2 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI port 3 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI port 4 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
Management port | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____

Mutual CHAP Secret | ________________________
Target CHAP Secret | ________________________

B (storage array) | Static IP address | Subnet | Default gateway
iSCSI controller 0, In 0 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI controller 0, In 1 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
Management port, cntrl. 0 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI controller 1, In 0 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
iSCSI controller 1, In 1 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____
Management port, cntrl. 1 | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____ | ____ . ____ . ____ . ____

Table 4-3. iSCSI Configuration Worksheet (IPv6 settings)

[Figure: host server (A) connected to the MD3000i (B) through controllers cntl. 0 and cntl. 1.]

If you need additional space for more than one host server, use an additional sheet.

A (host server) | Host iSCSI port 1 | Host iSCSI port 2
Link Local IP Address | ________________________ | ________________________
Routable IP Address | ________________________ | ________________________
Subnet Prefix | ________________________ | ________________________
Gateway | ________________________ | ________________________

Mutual CHAP Secret | ________________________
Target CHAP Secret | ________________________

B (storage array)

iSCSI controller 0, In 0
IP Address | FE80 : 0000 : 0000 : 0000 : ____ : ____ : ____ : ____
Routable IP Address 1 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Routable IP Address 2 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Router IP Address | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____

iSCSI controller 0, In 1
IP Address | FE80 : 0000 : 0000 : 0000 : ____ : ____ : ____ : ____
Routable IP Address 1 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Routable IP Address 2 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Router IP Address | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____

iSCSI controller 1, In 0
IP Address | FE80 : 0000 : 0000 : 0000 : ____ : ____ : ____ : ____
Routable IP Address 1 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Routable IP Address 2 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Router IP Address | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____

iSCSI controller 1, In 1
IP Address | FE80 : 0000 : 0000 : 0000 : ____ : ____ : ____ : ____
Routable IP Address 1 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Routable IP Address 2 | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
Router IP Address | ____ : ____ : ____ : ____ : ____ : ____ : ____ : ____
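The IP Address row for each controller port in Table 4-3 is pre-filled with FE80 : 0000 : 0000 : 0000, that is, an IPv6 link-local address; link-local addresses fall in the fe80::/10 prefix defined by the IPv6 addressing architecture (RFC 4291). As a quick sanity check while filling in the worksheet, the bash sketch below tests whether an address, written without the spaces used in the worksheet layout, starts with a 16-bit group in that range. It does not validate the rest of the address, and the function name is_link_local_v6 is illustrative.

```shell
#!/usr/bin/env bash
# is_link_local_v6 ADDR: succeed if the first 16-bit group of ADDR lies in
# fe80::/10, i.e. between 0xfe80 and 0xfebf inclusive.
is_link_local_v6() {
    local first=${1%%:*}              # first group, e.g. "FE80" or "fe80"
    case $first in
        ''|*[!0-9A-Fa-f]*) return 1 ;; # empty (e.g. "::1") or not hex
    esac
    [ "${#first}" -le 4 ] || return 1 # a group has at most 4 hex digits
    local value=$((16#$first))
    [ "$value" -ge $((16#fe80)) ] && [ "$value" -le $((16#febf)) ]
}
```

For example, is_link_local_v6 FE80:0000:0000:0000:0202:B3FF:FE1E:8329 succeeds, while a routable address such as 2001:0db8::1 does not; both addresses are made-up illustrations.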

Configuring iSCSI on Your Storage Array

The following sections contain step-by-step instructions for configuring iSCSI on your storage array. However, before beginning, it is important to understand where each of these steps occurs in relation to your host server/storage array environment.

Table 4-4 below shows each specific iSCSI configuration step and where it occurs.

Table 4-4. Host Server vs. Storage Array

Step | Performed on | Using
Step 1: Discover the storage array | STORAGE ARRAY | MD Storage Manager
Step 2: Configure the iSCSI ports on the storage array | STORAGE ARRAY | MD Storage Manager
Step 3: Perform target discovery from the iSCSI initiator | HOST SERVER | Microsoft or Linux iSCSI Initiator
Step 4: Configure host access | STORAGE ARRAY | MD Storage Manager
Step 5: (Optional) Configure CHAP authentication on the storage array | STORAGE ARRAY | MD Storage Manager
Step 6: (Optional) Configure CHAP authentication on the host server | HOST SERVER | Microsoft or Linux iSCSI Initiator
Step 7: Connect to the storage array from the host server | HOST SERVER | Microsoft or Linux iSCSI Initiator
Step 8: (Optional) Set up in-band management | STORAGE ARRAY | MD Storage Manager

Using iSNS

iSNS (Internet Storage Naming Service) Server, supported only in Windows iSCSI environments, eliminates the need to manually configure each individual storage array with a specific list of initiators and target IP addresses. Instead, iSNS automatically discovers, manages, and configures all iSCSI devices in your environment.

For more information on iSNS, including installation and configuration, see www.microsoft.com.

Step 1: Discover the Storage Array (Out-of-band management only)

Default Management Port Settings

By default, the storage array management ports will be set to DHCP configuration. If a controller on your storage array is unable to get an IP configuration from a DHCP server, it times out after ten seconds and falls back to a default static IP address. The default IP configuration is:

Controller 0: IP: 192.168.128.101 Subnet Mask: 255.255.255.0
Controller 1: IP: 192.168.128.102 Subnet Mask: 255.255.255.0

NOTE: No default gateway is set.

NOTE: If DHCP is not used, initial configuration of the management station must be performed on the same physical subnet as the storage array. Additionally, during initial configuration, at least one network adapter must be configured on the same IP subnet as the storage array’s default management port (192.168.128.101 or 192.168.128.102). After initial configuration (management ports are configured using MD Storage Manager), the management station’s IP address can be changed back to its previous settings.

NOTE: This procedure applies to out-of-band management only. If you choose to set up in-band management, you must complete this step and then refer to Step 8: (Optional) Set Up In-Band Management.

You can discover the storage array automatically or manually. Choose one and complete the steps below.

Automatic Storage Array Discovery

1 Launch MD Storage Manager.

If this is the first storage array to be set up, the Add New Storage Array window appears.

2 Choose Automatic and click OK.

It may take several minutes for the discovery process to complete. Closing the discovery status window before the discovery process completes will cancel the discovery process.

After discovery is complete, a confirmation screen appears. Click Close to close the screen.

Manual Storage Array Discovery

1 Launch MD Storage Manager.

If this is the first storage array to be set up, the Add New Storage Array window appears.

2 Select Manual and click OK.

3 Select Out-of-band management and enter the host name(s) or IP address(es) of the storage array controller management ports.

4 Click Add.

Out-of-band management should now be successfully configured.

After discovery is complete, a confirmation screen appears. Click Close to close the screen.
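If DHCP is not used, you can check before the first discovery that a management station adapter really sits on the same IP subnet as the default management ports. The bash sketch below converts dotted-quad addresses to integers and compares them after ANDing each with the subnet mask; ip_to_int and same_subnet are illustrative helper names, and the station address 192.168.128.50 used below is a hypothetical example.

```shell
#!/usr/bin/env bash
# ip_to_int ADDR: print a dotted-quad IPv4 address (or mask) as a 32-bit integer.
ip_to_int() {
    local IFS=. octet value=0
    local -a parts
    read -r -a parts <<< "$1"         # split on '.'
    [ "${#parts[@]}" -eq 4 ] || return 1
    for octet in "${parts[@]}"; do
        case $octet in ''|*[!0-9]*) return 1 ;; esac   # digits only
        [ $((10#$octet)) -le 255 ] || return 1         # 10# avoids octal parsing
        value=$(( (value << 8) | 10#$octet ))
    done
    echo "$value"
}

# same_subnet ADDR_A ADDR_B MASK: succeed if (A AND MASK) == (B AND MASK).
# Returns 2 if any argument is not a valid dotted quad.
same_subnet() {
    local a b m
    a=$(ip_to_int "$1") && b=$(ip_to_int "$2") && m=$(ip_to_int "$3") || return 2
    [ $(( a & m )) -eq $(( b & m )) ]
}
```

For example, same_subnet 192.168.128.50 192.168.128.101 255.255.255.0 succeeds, so an adapter at that address could reach controller 0’s default management port. The same check can also be used, negated, for the worksheet advice that each NIC uses a different subnet: under a 255.255.255.0 mask, 192.168.130.101 and 192.168.131.101 are not on the same subnet.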

Set Up the Array

1 When discovery is complete, the name of the first storage array found appears under the Summary tab in MD Storage Manager.

2 The default name for the newly discovered storage array is Unnamed. If another name appears, click the down arrow next to that name and choose Unnamed in the drop-down list.

3 Click the Initial Setup Tasks option to see links to the remaining post-installation tasks. For more information about each task, see the User’s Guide. Perform these tasks in the order shown in Table 4-5.

NOTE: Before configuring the storage array, check the status icons on the Summary tab to make sure the enclosures in the storage array are in an Optimal status. For more information on the status icons, see Troubleshooting Tools.

Table 4-5. Initial Storage Array Setup Tasks

Task: Rename the storage array.
NOTE: If you need to physically find the device, click Blink the storage array on the Initial Setup Tasks dialog box or click the Tools tab and choose Blink. Lights on the front of the storage array blink intermittently to identify the array. Dell recommends blinking storage arrays to ensure that you are working on the correct enclosure.
Purpose: To provide a more meaningful name than the software-assigned label of Unnamed.
Information Needed: A unique, clear name with no more than 30 characters that may include letters, numbers, and no special characters other than underscore (_), minus (-), or pound sign (#).
NOTE: MD Storage Manager does not check for duplicate names. Names are not case sensitive.

Task: Set a storage array password.
Purpose: To restrict unauthorized access, MD Storage Manager asks for a password before changing the configuration or performing a destructive operation.
Information Needed: A case-sensitive password that meets the security requirements of your enterprise.


Page 38

Table 4-5. Initial Storage Array Setup Tasks (continued)

Task: Set the management port IP addresses on each controller.
Purpose: To set the management port IP addresses to match your public network configuration. Although DHCP is supported, static IP addressing is recommended.
Information Needed: In MD Storage Manager, select Initial Setup Tasks→ Configure Ethernet Management Ports, then specify the IP configuration for each management port on the storage array controllers.
NOTE: If you change a management port IP address, you may need to update your management station configuration and/or repeat storage array discovery.

Task: Set up alert notifications: set up e-mail alerts and set up SNMP alerts.
NOTE: The Status area in the Summary tab shows whether alerts have been set for the selected array.
Purpose: To notify individuals (by e-mail) and/or storage management stations (by SNMP) when a storage array component degrades or fails, or when an adverse environmental condition occurs.
Information Needed:
• E-mail: the sender (the sender’s SMTP gateway and e-mail address) and the recipients (fully qualified e-mail addresses).
• SNMP: (1) a community name, an ASCII string set by the administrator in the management console that identifies a known set of storage management stations (default: "public"), and (2) a trap destination, the IP address or host name of a management console running an SNMP service.
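The naming rules in Table 4-5 can be checked before you rename an array. The following Python sketch is illustrative only: `is_valid_array_name` and `is_duplicate_name` are hypothetical helpers, not part of MD Storage Manager, and the sketch assumes that "letters" means ASCII letters and that "minus" is the ASCII hyphen (-). Because MD Storage Manager does not check for duplicate names and names are not case sensitive, the duplicate check compares names case-insensitively.

```python
import re
from typing import Iterable

# Assumptions: "letters" means ASCII letters, and the manual's "minus" is the
# ASCII hyphen-minus (-). Allowed: letters, digits, _, - and #; 1 to 30 chars.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9_#-]{1,30}")


def is_valid_array_name(name: str) -> bool:
    """Hypothetical check of a storage array name against Table 4-5."""
    return _NAME_PATTERN.fullmatch(name) is not None


def is_duplicate_name(name: str, existing: Iterable[str]) -> bool:
    """Hypothetical duplicate check. Names are not case sensitive, so
    compare them case-insensitively."""
    folded = name.casefold()
    return any(folded == other.casefold() for other in existing)


print(is_valid_array_name("MD3000i_Lab#1"))              # True
print(is_valid_array_name("array name"))                 # False: space not allowed
print(is_duplicate_name("Finance", ["FINANCE", "Lab"]))  # True
```

Checking a new name against the names already in use at your site catches a collision that MD Storage Manager itself would accept.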


Page 39

Step 2: Configure the iSCSI Ports on the Storage Array

By default, the iSCSI ports on the storage array are set to the following IPv4 settings:

Controller 0, Port 0: IP: 192.168.130.101 Subnet Mask: 255.255.255.0 Port: 3260

Controller 0, Port 1: IP: 192.168.131.101 Subnet Mask: 255.255.255.0 Port: 3260

Controller 1, Port 0: IP: 192.168.130.102 Subnet Mask: 255.255.255.0 Port: 3260

Controller 1, Port 1: IP: 192.168.131.102 Subnet Mask: 255.255.255.0 Port: 3260

NOTE: No default gateway is set.
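Because no default gateway is set, each iSCSI port can exchange traffic only with hosts on its own subnet (192.168.130.0/24 for Port 0 on both controllers, 192.168.131.0/24 for Port 1). The Python sketch below uses the standard `ipaddress` module to list which default portals a given host NIC address can reach without changing the port settings. `reachable_ports` is a hypothetical helper, and `DEFAULT_PORTS` simply restates the defaults above.

```python
import ipaddress
from typing import List

# Factory-default iSCSI host port settings from the list above.
DEFAULT_PORTS = [
    ("Controller 0, Port 0", "192.168.130.101/255.255.255.0"),
    ("Controller 0, Port 1", "192.168.131.101/255.255.255.0"),
    ("Controller 1, Port 0", "192.168.130.102/255.255.255.0"),
    ("Controller 1, Port 1", "192.168.131.102/255.255.255.0"),
]
ISCSI_TCP_PORT = 3260


def reachable_ports(host_ip: str) -> List[str]:
    """Return the default iSCSI portals that share a subnet with host_ip.

    No default gateway is set on the ports, so a host outside a port's
    subnet cannot communicate with that port unless one side is readdressed.
    """
    host = ipaddress.IPv4Address(host_ip)
    portals = []
    for label, spec in DEFAULT_PORTS:
        iface = ipaddress.IPv4Interface(spec)  # accepts address/netmask form
        if host in iface.network:
            portals.append(f"{label}: {iface.ip}:{ISCSI_TCP_PORT}")
    return portals


print(reachable_ports("192.168.130.10"))
# ['Controller 0, Port 0: 192.168.130.101:3260', 'Controller 1, Port 0: 192.168.130.102:3260']
print(reachable_ports("10.0.0.5"))  # []
```

An empty result means that either the host NIC or the iSCSI ports must be readdressed before target discovery can succeed.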

To configure the iSCSI ports on the storage array, complete the following steps:

1 From MD Storage Manager, click the iSCSI tab, then select Configure iSCSI Host Ports.

2 Configure the iSCSI ports on the storage array.

NOTE: Using static IPv4 addressing is recommended, although DHCP is supported.

NOTE: IPv4 is enabled by default on the iSCSI ports. You must enable IPv6 to configure IPv6 addresses.

NOTE: IPv6 is supported only on controllers that will connect to host servers running Windows Server 2008.

Depending on your configuration, the following settings are available by clicking the Advanced button:

• Virtual LAN (VLAN) support

A VLAN is a group of systems that behave as if they are connected to the same segment of a local area network (LAN), even when they are attached to different physical segments, and that are supported by the same switches and routers. When configured as a VLAN, a device can be moved to another location without being reconfigured. To use VLAN on your storage array, obtain the VLAN ID from your network administrator and enter it here.

• Ethernet priority

This parameter determines the network access priority for the port.

• TCP listening port

The port number that the storage array controller uses to listen for iSCSI logins from host server iSCSI initiators (3260 by default).

NOTE: The TCP listening port for the iSNS server is the port number the storage array controller uses to connect to an iSNS server. This allows the iSNS server to register the iSCSI target and portals of the storage array so that host server initiators can identify them.

• Jumbo frames

Jumbo frames are Ethernet frames with a maximum transmission unit (MTU) larger than the standard 1500 bytes. This setting is adjustable port-by-port.

3 To enable ICMP PING responses for all ports, select Enable ICMP PING responses.

4 Click OK when all iSCSI storage array port configurations are complete.

5 Test the connection by performing a ping command on each iSCSI storage array port.
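The ping test can be scripted from the host server. The Python sketch below is a hypothetical helper, not a Dell tool: it builds one ping command per iSCSI port for Windows or Linux (iputils ping) and, optionally, a jumbo-frame check that sends a payload sized to fill one frame (the MTU minus 28 bytes of IPv4 and ICMP headers) with fragmentation disallowed. It assumes Enable ICMP PING responses is selected and that the ports still use the default addresses listed above; substitute your configured addresses.

```python
import platform
import subprocess
from typing import Dict, List, Optional

# Default iSCSI port addresses from Step 2 (assumption: not yet changed).
ISCSI_PORT_IPS = [
    "192.168.130.101",  # Controller 0, Port 0
    "192.168.131.101",  # Controller 0, Port 1
    "192.168.130.102",  # Controller 1, Port 0
    "192.168.131.102",  # Controller 1, Port 1
]

# IPv4 header (20 bytes) + ICMP echo header (8 bytes).
HEADER_BYTES = 28


def ping_command(ip: str, system: str, mtu: Optional[int] = None) -> List[str]:
    """Build a single-echo ping command for one iSCSI port.

    When mtu is given (for example 9000 with jumbo frames enabled), the
    payload fills exactly one frame and fragmentation is prohibited, so the
    ping fails if any link on the path has a smaller MTU.
    """
    if system == "Windows":
        cmd = ["ping", "-n", "1"]
        if mtu is not None:
            cmd += ["-f", "-l", str(mtu - HEADER_BYTES)]  # -f: Don't Fragment
    else:  # Linux iputils ping
        cmd = ["ping", "-c", "1"]
        if mtu is not None:
            cmd += ["-M", "do", "-s", str(mtu - HEADER_BYTES)]  # -M do: no fragmentation
    return cmd + [ip]


def ping_all(ips: List[str], mtu: Optional[int] = None) -> Dict[str, bool]:
    """Ping each port once and treat exit status 0 as a reply.

    Windows ping can also exit with 0 for some "Destination host
    unreachable" replies, so inspect the output if results look wrong.
    """
    system = platform.system()
    return {
        ip: subprocess.run(ping_command(ip, system, mtu),
                           capture_output=True).returncode == 0
        for ip in ips
    }
```

For example, `ping_all(ISCSI_PORT_IPS)` pings each default port once, and `ping_all(ISCSI_PORT_IPS, mtu=9000)` additionally checks that a 9000-byte MTU works end to end, which requires jumbo frames on the storage array ports, the host NICs, and every switch in between.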

Page 40

Step 3: Perform Target Discovery from the iSCSI Initiator

This step identifies the iSCSI ports on the storage array to the host server. Select the set of steps in one

of the following sections (Windows or Linux) that corresponds to your operating system.

If you are using Windows Server 2003 or Windows Server 2008 GUI version

1 Click Start→ Programs→ Microsoft iSCSI Initiator or Start→ All Programs→ Administrative Tools→ iSCSI Initiator.

2 Click the Discovery tab.

3 Under Target Portals, click Add and enter the IP address or DNS name of the iSCSI port on the storage array.

4 If the iSCSI storage array uses a custom TCP port, change the Port number.

5 Click Advanced and set the following values on the General tab:

• Local Adapter: Must be set to Microsoft iSCSI Initiator.

• Source IP: The source IP address of the host you want to connect with.

• Data Digest and Header Digest: Optionally, you can specify that a digest of data or header information be compiled during transmission to assist in troubleshooting.

• CHAP logon information: Leave this option unselected and do not enter CHAP information at this point, unless you are adding the storage array to a SAN that has target CHAP already configured.

NOTE: IPSec is not supported.

Click OK to exit the Advanced menu, and OK again to exit the