Page 1

Released

Data Sheet

PMC-2000164 ISSUE 3 ENHANCED TT1™ SWITCH FABRIC

PMC-Sierra, Inc.

PM9311/2/3/5 ETT1™ CHIP SET

PM9311/2/3/5

Enhanced TT1 Chip Set

Enhanced TT1 Switch Fabric

Datasheet

Issue 3: August 2001

PROPRIETARY AND CONFIDENTIAL TO PMC-SIERRA, INC., AND FOR ITS CUSTOMERS’ INTERNAL USE


This document is the proprietary and confidential information of PMC-Sierra Inc. Access to this information does not transfer or grant any right or license to use this intellectual property. PMC-Sierra will grant such rights only under a separate written license agreement.

Any product, process or technology described in this document is subject to intellectual property rights reserved by PMC-Sierra, Inc. and not licensed hereunder. Nothing contained herein shall be construed as conferring by implication, estoppel or otherwise any license or right under any patent or trademark of PMC-Sierra, Inc. or any third party. Except as expressly provided for herein, nothing contained herein shall be construed as conferring any license or right under any PMC-Sierra, Inc. copyright.

Each individual document published by PMC-Sierra, Inc. may contain additional or other proprietary notices and/or copyright information relating to that individual document.

THE DOCUMENT MAY CONTAIN TECHNICAL INACCURACIES OR TYPOGRAPHICAL ERRORS. CHANGES ARE REGULARLY MADE TO THE INFORMATION CONTAINED IN THE DOCUMENTS. CHANGES MAY OR MAY NOT BE INCLUDED IN FUTURE EDITIONS OF THE DOCUMENT. PMC-SIERRA, INC. OR ITS SUPPLIERS MAY MAKE IMPROVEMENTS AND/OR CHANGES IN THE PRODUCT(S), PROCESS(ES), TECHNOLOGY, DESCRIPTION(S), AND/OR PROGRAM(S) DESCRIBED IN THE DOCUMENT AT ANY TIME.

THE DOCUMENT IS PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NON-INFRINGEMENT.

ETT1, Enhanced TT1, TT1, and LCS are trademarks of PMC-Sierra, Inc.

© 2000

PMC-Sierra, Inc.

8555 Baxter Place

Burnaby BC Canada V5A 4V7

Phone: (604) 415-6000 FAX: (604) 415-6200


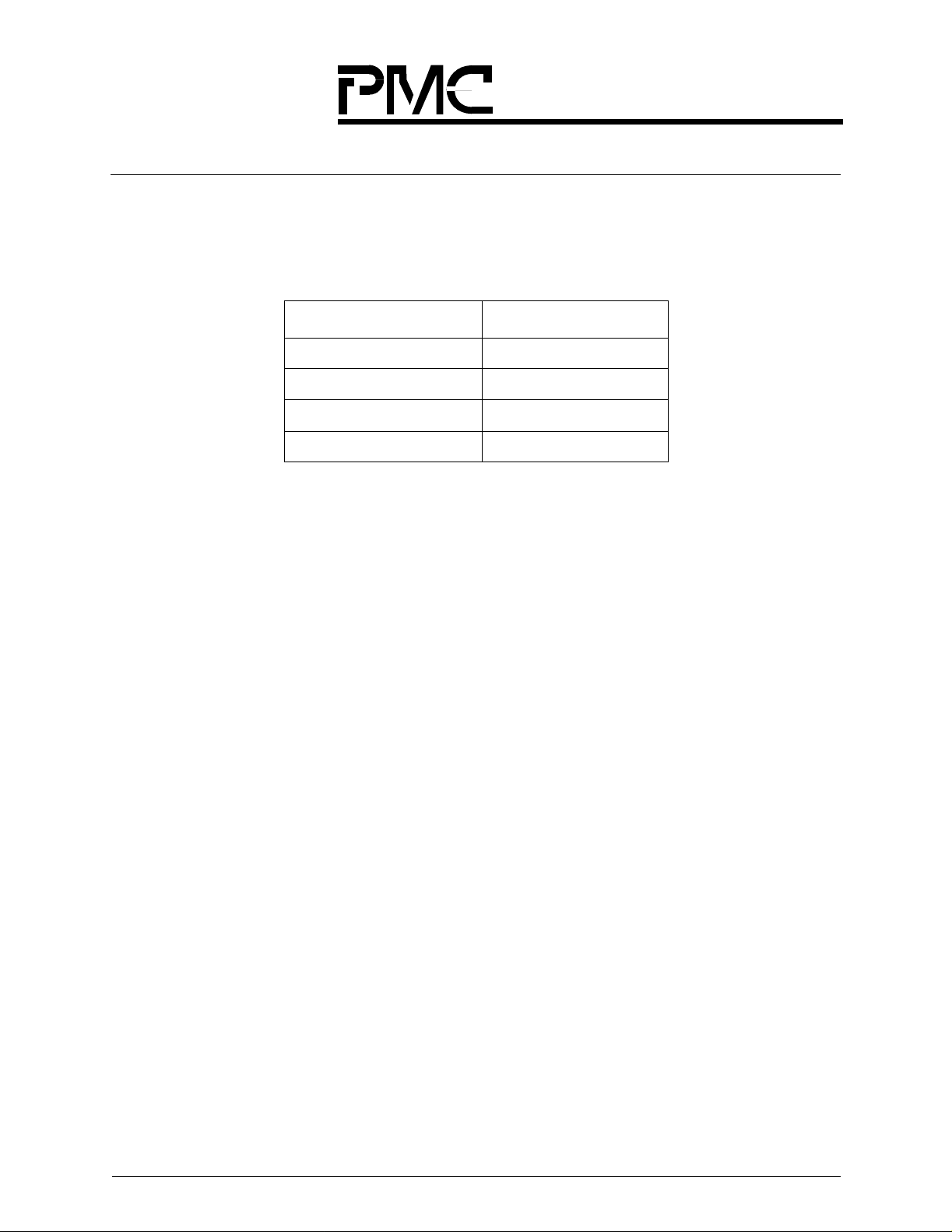

Device Part Numbers

This document contains information on the ETT1™ Chip Set, available from PMC-Sierra, Inc. The following devices comprise the ETT1 Chip Set:

| Device Name | PMC Part Number |
|---|---|
| Scheduler | PM9311-UC |
| Crossbar | PM9312-UC |
| Dataslice | PM9313-HC |
| Enhanced Port Processor | PM9315-HC |


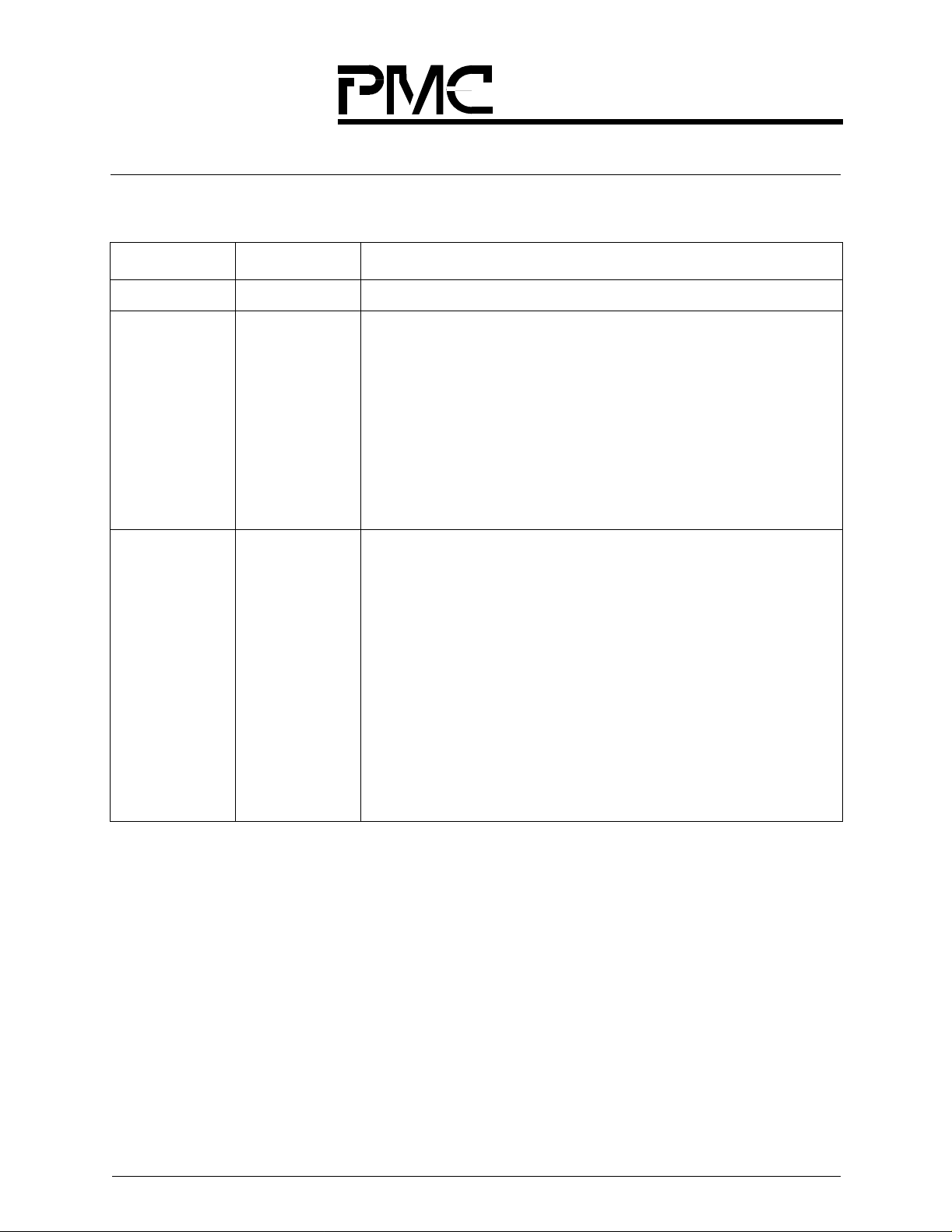

Revision History

| Issue Number | Issue Date | Details of Change |
|---|---|---|
| 1 | March 2000 | Creation of document. |
| 2 | July 2000 | Added HSTL Power Dissipation values, corrected programming constraints for EPP, added OOB, JTAG and PLL blocks to device dataflow diagrams, added heat sink information to Characteristics section, corrected EPP register section, corrected Frame Format tables, added correct bit definition for EPP Interrupt register, corrected diagram in Appendix C, added send and receive procedure for control packets, added ESOBLCP register explanation, corrected conditions in Table 57, corrected Scheduler Refresh Procedure, added new drawing for Crossbar data flow, corrected token explanation, corrected Figures 36 and 37, corrected spelling and formatting errors throughout document. |
| 3 | August 2001 | Removed bit 17 from Scheduler Status Register, updated BP_FIFO information in Scheduler Control and Reset Register, corrected bottom view drawings for Scheduler and Crossbar, corrected signal description section in Scheduler and Crossbar for power pins, corrected tper3 and tpl3 in AC Electrical, added memory address information in EPP registers, corrected mechanical drawings, updated state diagram in Scheduler, added information about Scheduler PLL timing, updated initialization sequence for all chips, corrected Dataslice Signal Description for ibpen0, added bit 14 (13th and 14th Dataslice enable) to EPP Control register, updated TDM constraints, added Output TDM Queue Overflow to the EPP’s Interrupt register, updated EPP Output Backpressure / Unbackpressure Threshold register, updated LCS2 link synchronization in appendix, modified EPP Egress Control Packet Data Format table, modified ETT1 usage of LCS2 protocol section. |


1 Functional Description ... 15

1.1 Overview ... 15

1.1.1 ETT1 Switch Core Features ... 15

1.1.2 The Switch Core Model ... 16

1.1.3 The LCS Protocol ... 17

1.1.4 The OOB (Out-Of-Band) Bus ... 18

1.2 Architecture and Features ... 18

1.2.1 ETT1 Switch Core ... 18

1.2.2 Basic Cell Flow ... 19

1.2.3 Prioritized Best-effort Service ... 21

1.2.4 End-to-End Flow Control ... 22

1.2.5 TDM Service ... 22

1.2.6 Subport Mode (2.5 Gbit/s Linecards) ... 23

1.2.7 LCS Control Packets ... 24

1.2.8 Redundancy ... 25

1.2.9 System Configuration Options ... 28

1.3 Prioritized Best-Effort Queue Model ... 30

1.3.1 Unicast Traffic (OC-192) ... 31

1.3.2 Multicast Traffic (OC-192) ... 31

1.3.3 Unicast Traffic (Subport Mode) ... 33

1.3.4 Multicast Traffic (Subport Mode) ... 46

1.3.5 Combinations of OC-192c and Quad OC-48c Linecards ... 49

1.3.6 Summary ... 50

1.3.7 The LCS Protocol ... 51

1.4 Explicit Backpressure From oEPP to iEPP ... 52

1.4.1 Flow Control Crossbar Synchronization ... 54

1.5 TDM Service ... 54

1.5.1 TDM Queues ... 54

1.5.2 TDM Reservation Tables ... 55

1.5.3 TDM Frame Timing ... 59

1.5.4 TDM Synchronization ... 59

1.5.5 Configuring the TDM Service ... 62

1.5.6 Changing the Source of the Suggested Sync ... 63

1.6 ETT1 Usage of the LCS Protocol ... 64

1.6.1 LCS Protocol Prepend ... 64

1.6.2 Use of Label and Type Fields ... 67

1.6.3 Control Packets ... 69

1.6.4 Use of CRC Fields ... 73

1.6.5 LCS PHY Layer ... 75

1.7 The Out-of-Band (OOB) Bus Interface ... 77

1.7.1 The OOB Bus ... 78


1.8 Initialization Procedure ... 84

1.8.1 Initial Power-on: ... 85

1.8.2 Port Board being added: ... 87

1.8.3 Scheduler Board being added: ... 89

1.8.4 Crossbar Board being added: ... 90

1.9 Fault Tolerance ... 91

1.9.1 Fault Tolerance Model ... 91

1.9.2 Soft/Transient Errors ... 93

1.9.3 Flow Control Refresh Procedure ... 97

1.9.4 Scheduler Refresh Procedure ... 98

1.9.5 Refresh Schedulers After Modifying Registers ... 99

1.9.6 Hard Errors ... 99

1.10 ETT1 Signals and Interconnections ... 102

1.10.1 LVCMOS (C and O) ... 102

1.10.2 HSTL (H) ... 102

1.10.3 AIB Links (A) ... 103

1.10.4 Live Insertion ... 104

1.11 System Latencies ... 104

1.11.1 LCS Request to Grant Minimum Latency ... 105

1.11.2 LCS Grant to Linecard Cell Latency ... 105

1.11.3 Cell Latency Through an Empty System ... 106

1.11.4 LCS Hole Request to Hole Grant Latency ... 107

1.12 ETT1 Diagnostics ... 108

1.12.1 Device Tests ... 108

1.12.2 AIB Link Tests ... 109

1.12.3 Linecard to ETT1 Link Diagnostics ... 109

1.12.4 ETT1 Internal Datapath Tests ... 113

2 Dataslice ... 115

2.1 Dataslice Blocks ... 115

2.1.1 OOB Interface and Control/Status Registers ... 117

2.1.2 Dataslice Cell Flow ... 117

2.2 8B/10B Interface ... 118

2.3 Dataslice Registers ... 119

2.3.1 Dataslice Summary ... 119

2.3.2 Dataslice Register Descriptions ... 121

2.4 Dataslice Signal Descriptions ... 136


2.5 Pinout and Package Information ... 142

2.5.1 Pinout Tables ... 142

2.5.2 Package Dimensions ... 147

2.5.3 Part Number ... 149

3 Enhanced Port Processor ... 151

3.1 EPP Data Flows and Blocks ... 153

3.1.1 EPP Data Flows ... 154

3.1.2 LCS Grant Manager ... 156

3.1.3 Scheduler Request Modulator ... 158

3.1.4 Input Queue Manager ... 159

3.1.5 Output Queue Manager ... 160

3.1.6 Output Scheduler ... 161

3.1.7 OOB Interface and Control/Status Registers ... 161

3.2 Input Dataslice Queue Memory Allocation with EPP ... 162

3.3 Output Dataslice Queue Memory Allocation with EPP ... 162

3.4 Enhanced Port Processor Registers ... 165

3.4.1 Enhanced Port Processor Summary ... 165

3.4.2 Enhanced Port Processor Register Descriptions ... 169

3.5 Enhanced Port Processor Signal Descriptions ... 209

3.6 Pinout and Package Information ... 214

3.6.1 Pinout Tables ... 214

3.6.2 Package Dimensions ... 221

3.6.3 Part Number ... 223

4 Crossbar ... 225

4.1 Crossbar Blocks ... 225

4.1.1 OOB Interface and Control/Status Registers ... 227

4.2 Modes Of Operation ... 227

4.3 OOB Access ... 227

4.4 Crossbar Registers ... 228

4.4.1 Summary ... 228

4.4.2 Crossbar Register Descriptions ... 229

4.5 Crossbar Signal Descriptions ... 237


4.6 Pinout and Package Information ... 240

4.6.1 Pinout Tables ... 240

4.6.2 Package Dimensions ... 249

4.6.3 Part Number ... 251

5 Scheduler ... 253

5.1 Block Structure ... 253

5.1.1 Port Block ... 253

5.1.2 Arbiter Block ... 253

5.1.3 OOB Interface and Control/Status Registers ... 254

5.1.4 TDM Service ... 254

5.2 Port State ... 256

5.3 Fault Tolerance ... 257

5.4 Scheduler Registers ... 259

5.4.1 Summary ... 259

5.4.2 Scheduler Register Descriptions ... 261

5.4.3 Input Queue Memory (IQM) ... 275

5.5 Scheduler Signal Descriptions ... 276

5.6 Pinout and Package Information ... 279

5.6.1 Pinout Tables ... 279

5.6.2 Package Dimensions ... 288

5.6.3 Part Number ... 290

6 Characteristics ... 293

6.1 Signal Associations ... 293

6.2 Absolute Maximum Ratings ... 297

6.3 Recommended Operating Conditions ... 299

6.4 DC Electrical Characteristics ... 302

6.5 AC Electrical Characteristics ... 305

6.6 Timing Diagrams ... 308

Appendix A General Packet/Frame Formats ... 317


A.1 Frame Formats - Dataslice To and From Enhanced Port Processor ... 317

A.2 Frame Formats - Dataslice To and From Crossbar ... 318

A.3 Frame Formats - Enhanced Port Processor To and From Scheduler ... 319

Appendix B Common Pinout Configuration ... 323

B.1 JTAG Interface ... 323

B.2 Reserved Manufacturing Test Pins ... 323

B.3 Power Supply Connections ... 324

B.4 Example Power Up Sequence ... 325

Appendix C Interfacing Details for ETT1 ... 327

C.1 Compensating for Round Trip Delay Between Linecard and ETT1 (LCS) ... 327

C.1.1 Preventing Underflow of the VOQ ... 327

C.2 The 8b/10b Interface to the Dataslice ... 328

C.2.1 Link ... 329

C.2.2 Channel ... 333

C.3 Managing Cell Rates (Setting the Idle Count Register) ... 335

C.4 Avoiding Delays Due to Idle Cells ... 336


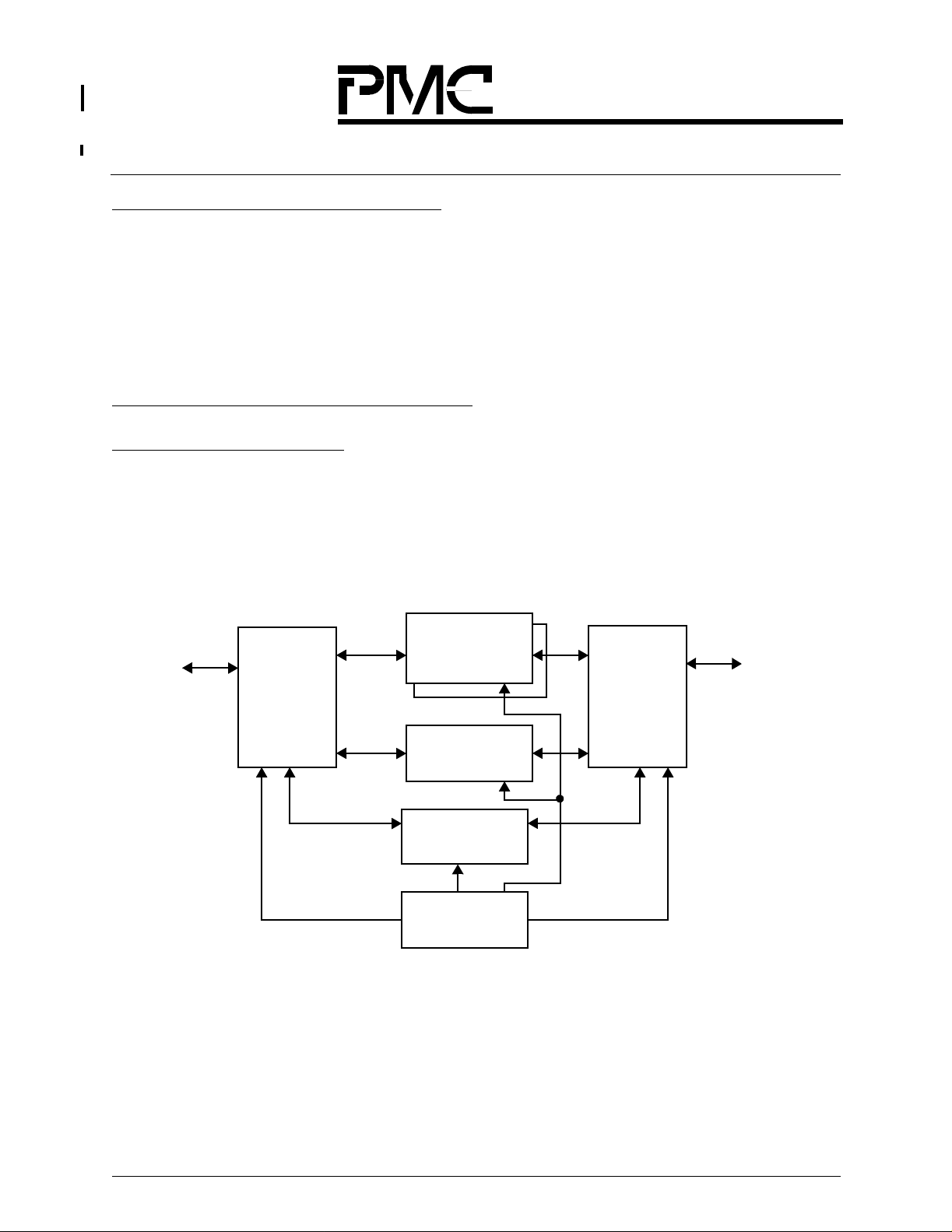

Figure 1. The Basic Components of a Switch Built Around the ETT1 Chip Set.. . . ...............16

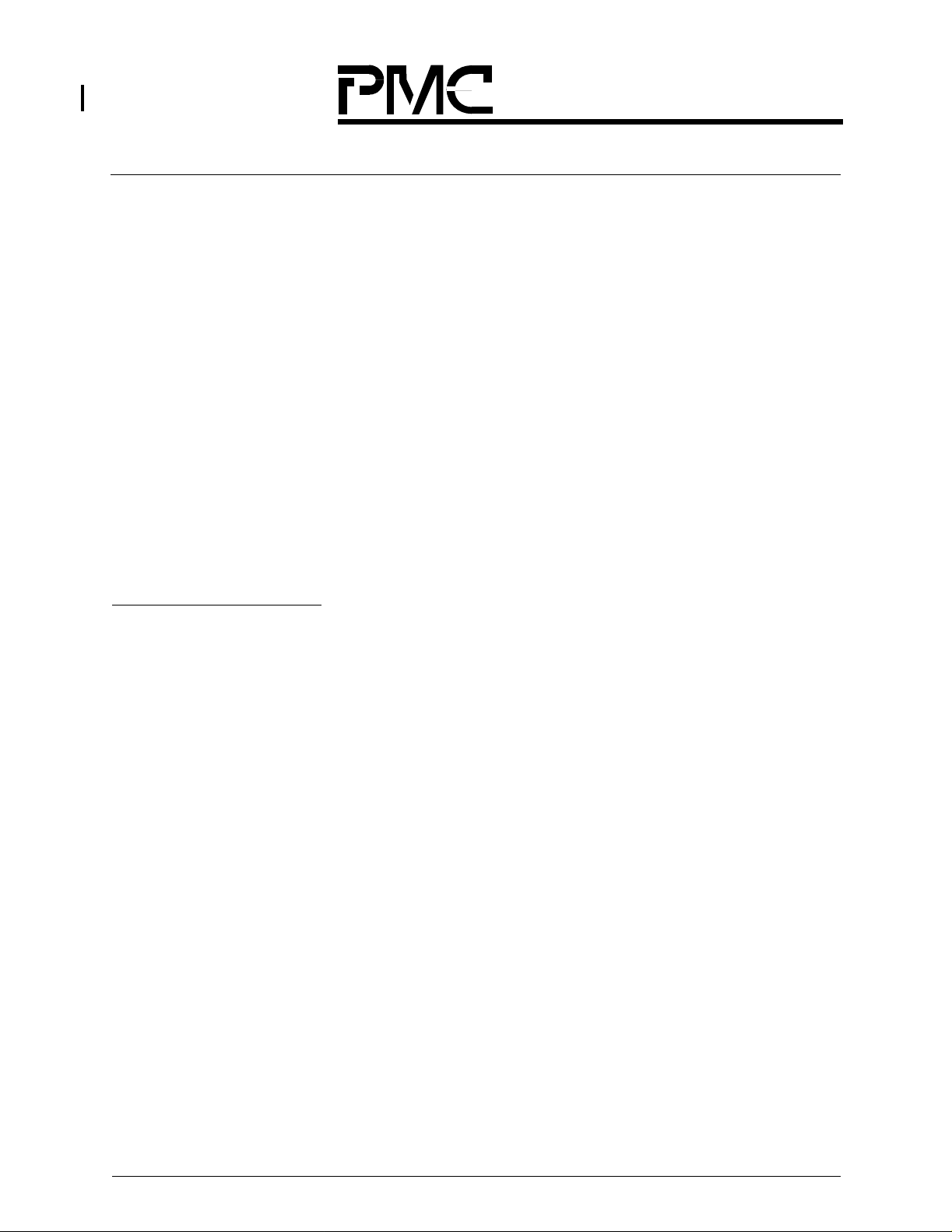

Figure2. ETT1SwitchCoreLogicalInterconnects........................................18

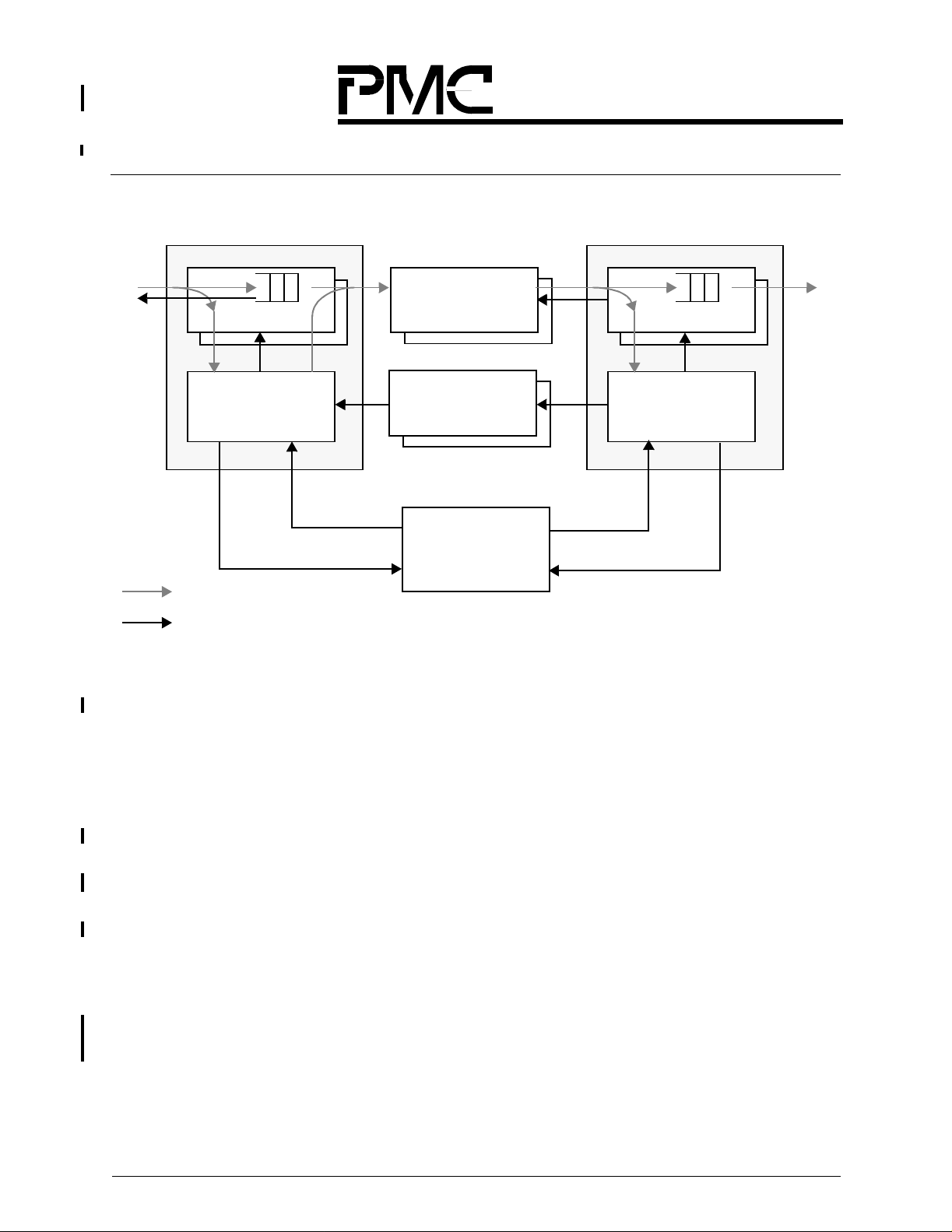

Figure3. TwoPortConfigurationofaETT1Switch.......................................20

Figure4. QueueingandFlowControl..................................................22

Figure5. TheTDMFrameConcept ...................................................23

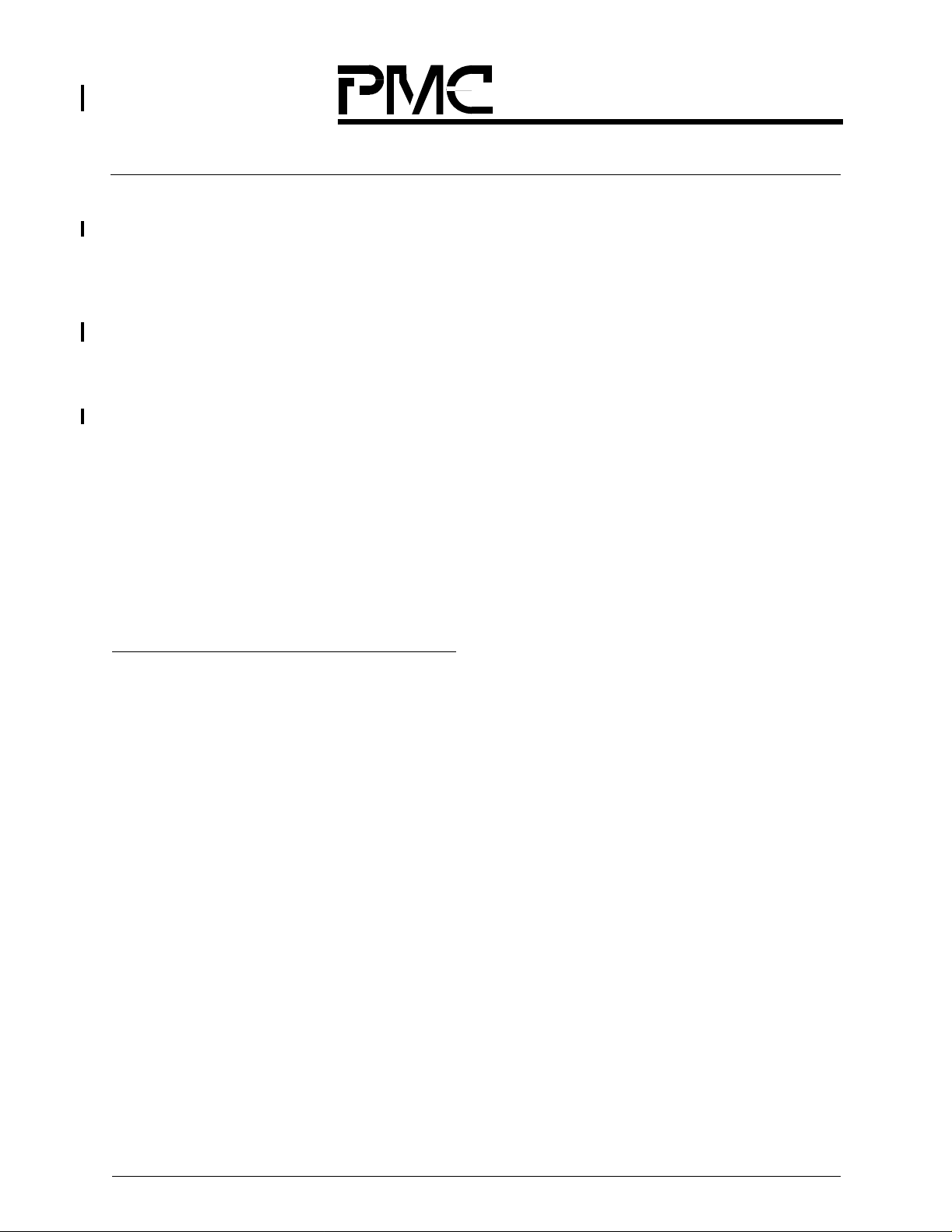

Figure 6. Fully Redundant Core . . . . . . . . . . ............................................26

Figure 7. Simple Redundant Scheduler Configuration . . . . . . . . . ............................27

Figure8. LCSCellSlicedAcrossDataslicesandCrossbars ................................29

Figure9. TheUnicastIngressQueueingModelforOnePort................................31

Figure10. IngressandEgressQueueforMulticastTraffic...................................33

Figure11. AnETT1PortOperatinginSubportModewithFourOC-48cLinecards................34

Figure12. TheInputRequestCounters.................................................35

Figure13. FullSetofCountersforaSinglePriority........................................36

Figure14. AllRequestCountersforaSingleOutputShareaSingleQueue.....................38

Figure15. SequenceofEventsattheInputSide..........................................40

Figure16. VirtualInputQueues.......................................................43

Figure17. TheFourPriorities.........................................................45

Figure18. SchedulerRequests/GrantsareMultiplexedataPortLevel.........................46

Figure19. EachiEPPhasaSingleVirtualOutputQueueforMulticastCells.....................47

Figure20. MulticastCellProcessing....................................................47

Figure21. EITIBMstructure ..........................................................48

Figure22. OITIBMstructure..........................................................49

Figure23. SchedulerPriorities........................................................50

Figure24. ETT1PortIngressQueues ..................................................51

Figure 25. A Separate Crossbar is Used to Convey Backpressure Information to the iEPPs. . . . . . . . . 53

Figure 26. The Queueing Structure. . . . . . . . . ............................................55

Figure27. IngressPortLogic .........................................................57

Figure28. TheoEPPMustTreattheTransferredTDMCellasaMulticastCell...................58

Figure29. SingleTDMTable .........................................................59

Figure30. TDMSynchronization.......................................................60

Figure31. SignalFlow...............................................................62

Figure 32. One Implementation: The OOB Bus Consists of Both Local and Global Buses . . . . . . . . . . 77

Figure 33. The Global Device Select Defines the Board Number and the Device on Each Board . . . . . 80

Figure34. WriteCycle-NoWait.......................................................82

Figure35. WriteCycle-OneWait......................................................82

Figure36. ReadCycle-OneWait .....................................................83

Figure37. ReadCycle-TwoWaits.....................................................83

Figure38. InterruptsareActiveHighandSynchronous.....................................84

Figure 39. Redundant Connections . . . . . . . . ............................................92

Figure40. SimpleNon-RedundantCrossbarConfiguration..................................93

Figure41. SimpleNon-RedundantSchedulerConfiguration.................................95


Figure 42. Simple Redundant Crossbar Configuration ... 95

Figure 43. Simple Redundant Scheduler Configuration ... 96

Figure 44. ETT1 Signals and Interconnects ... 102

Figure 45. AIB Links ... 103

Figure 46. AIB Control/Status Signals ... 104

Figure 47. Request to Grant Latency ... 105

Figure 48. ETT1 Event Latencies ... 106

Figure 49. Cell Latency ... 107

Figure 50. Illustrating the Flow of Cells from the Linecard CPU to the ETT1 CPU ... 110

Figure 51. Loopback Path ... 111

Figure 52. Control Packet Exchange Between Linecard CPU and ETT1 CPU ... 112

Figure 53. Testing the ETT1 Internal Datapaths ... 113

Figure 54. Dataslice Data Flow ... 116

Figure 55. Slicing of a Data Cell into Logical Dataslices ... 117

Figure 56. Dataslice CBGA Package Dimensions - Top and Side Views ... 147

Figure 57. Dataslice CBGA Package Dimensions - Bottom View ... 148

Figure 58. An ETT1 Port Operating in Sub-port Mode with Four OC-48c Linecards ... 152

Figure 59. Functional Diagram of LCS Enhanced Port Processor ... 153

Figure 60. Enhanced Port Processor CBGA Package Dimensions - Top and Side Views ... 221

Figure 61. Enhanced Port Processor (EPP) CBGA Package Dimensions - Bottom View ... 222

Figure 62. The Basic Dataflow Through the Crossbar ... 226

Figure 63. Crossbar CCGA Package Dimensions - Top and Side Views ... 249

Figure 64. Crossbar CCGA Package Dimensions - Bottom View ... 250

Figure 65. Scheduler Block Diagram ... 255

Figure 66. Port State Machine ... 256

Figure 67. Scheduler CCGA Package Dimensions - Top and Side Views ... 288

Figure 68. Scheduler CCGA Package Dimensions - Bottom View ... 289

Figure 69. Setup and Hold for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (OOB Interface) ... 308

Figure 70. Rise and Fall Times for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (OOB Interface) ... 309

Figure 71. OOB Test Loading ... 309

Figure 72. Setup and Hold for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (JTAG Interface) ... 310

Figure 73. Rise and Fall Times for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (JTAG Interface) ... 310

Figure 74. JTAG Test Loading ... 310

Figure 75. Setup and Hold for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (Serdes Interface) ... 311

Figure 76. Rise and Fall Times for 3.3V-tolerant 2.5V CMOS and 2.5V CMOS (Serdes Interface) ... 311

Figure 77. Serdes Test Loading ... 311

Figure 78. Setup and Hold for 200 Mbps HSTL (EPP/DS Interface) ... 312

Figure 79. Rise and Fall Times for 200 Mbps HSTL (EPP/DS Interface) ... 312

Figure 80. Class 1 HSTL Test Loading ... 313

Figure 81. Setup and Hold for 800 Mbps STI (AIB Interfaces) ... 314

Figure 82. Rise and Fall Times for 800 Mbps STI (AIB Interfaces) ... 314


Figure 83. AIB Test Loading ... 315

Figure 84. Setup and Hold for 2.5V 200 Mbps PECL (CLK & SOC Signals) ... 316

Figure 85. Clock Period and Duty Cycle for 2.5V 200 Mbps PECL (CLK Signal) ... 316

Figure 86. Example Noise Isolation Circuit ... 325

Figure 87. Example Voltage Divider ... 325

Figure 88. Voltage Ramp-up ... 326

Figure 89. The Time Available to the Linecard ... 328

Figure 90. Cells are Striped Across the 12 Physical Links ... 329

Figure 91. The 10-bit Data Paths ... 330

Figure 92. Initial Sequence Expected at Port Card ... 331

Figure 93. Cells May Be Skewed Between Dataslices ... 335

Figure 94. The Idle Counter Can Delay an Outbound Cell ... 337

Figure 95. The Idle Counter Can Delay an Outbound Cell ... 337

Figure 96. One-deep Queue for Outbound Requests ... 339


Table 1. Crossbar Configurations ... 30

Table 2. TDM Reservation Table ... 55

Table 3. LCS Format from Linecard to Switch ... 65

Table 4. Definitions of LCS Fields from Linecard to Switch ... 65

Table 5. LCS Format from Switch to Linecard ... 66

Table 6. Definitions of LCS Fields from Switch to Linecard ... 66

Table 7. Encoding of 4-bit Type Fields ... 67

Table 8. Usage of the Ingress Request Label_1 ... 67

Table 9. Usage of the Egress Grant Label_1 ... 68

Table 10. Usage of the Egress Payload Label_2 ... 68

Table 11. LCS Control Packet Format ... 70

Table 12. TDM Control Packet Format ... 71

Table 13. Request Count Control Packet Format ... 71

Table 14. Start/Stop Control Packet Format ... 72

Table 15. Fiber 1.5 Gbit/s per Channel Interface Configurations ... 76

Table 16. Mapping Segments to 1.5 Gbit/s Channels ... 76

Table 17. OOB Control Bus Signals ... 78

Table 18. Multicast Group Selects ... 79

Table 19. Unicast Group Selects ... 81

Table 20. Refresh-sensitive Registers in the Scheduler ... 99

Table 21. Dataslice Register Summary ... 119

Table 22. Dataslice Signal Descriptions ... 136

Table 23. Dataslice Pinout (left side) ... 142

Table 24. Dataslice Pinout (right side) ... 143

Table 18. Dataslice Alpha Pin List ... 144

Table 19. Dataslice CBGA Mechanical Specifications ... 148

Table 20. Linecard Request Count Maximum Values ... 157

Table 21. Input Dataslice Queue Memory Allocation ... 162

Table 22. Output Dataslice Queue Memory Allocation ... 163

Table 23. EPP Egress Control Packet Data Format and Dataslice OOB Addressing ... 164

Table 24. Enhanced Port Processor Register Summary ... 165

Table 25. Enhanced Port Processor Signal Descriptions ... 209

Table 26. Enhanced Port Processor Pinout (left side) ... 214

Table 27. Enhanced Port Processor Pinout (center) ... 215

Table 28. Enhanced Port Processor Pinout (right side) ... 216

Table 35. Enhanced Port Processor Alpha Pin List ... 217

Table 36. Enhanced Port Processor CBGA Mechanical Specifications ... 222

Table 37. Crossbar Register Summary ... 228

Table 38. Crossbar Signal Descriptions ... 237

Table 39. Crossbar Pinout (left side) ... 240

Table 40. Crossbar Pinout (center) ... 241


Table 41. Crossbar Pinout (right side) ..... 242

Table 33. Crossbar Alpha Pin List ..... 243

Table 34. Crossbar CCGA Mechanical Specifications ..... 250

Table 35. Non-TDM Traffic ..... 257

Table 36. Scheduler Register Summary ..... 259

Table 37. Scheduler Signal Descriptions ..... 276

Table 38. Scheduler Pinout (left side) ..... 279

Table 39. Scheduler Pinout (center) ..... 280

Table 40. Scheduler Pinout (right side) ..... 281

Table 41. Scheduler Alpha Pin List ..... 282

Table 42. Scheduler CCGA Mechanical Specifications ..... 289

Table 43. Signal Associations ..... 293

Table 44. Absolute Maximum Ratings ..... 297

Table 45. Absolute Maximum Ratings for 3.3V-tolerant 2.5V CMOS (OOB & Serdes Interface) ..... 297

Table 46. Absolute Maximum Ratings for 2.5V CMOS (JTAG Interface) ..... 297

Table 47. Absolute Maximum Ratings for 200 Mbps HSTL (EPP/DS Interface) ..... 297

Table 48. Absolute Maximum Ratings for 800 Mbps STI (AIB Interface) ..... 298

Table 49. Absolute Maximum Ratings for 2.5V 200 Mbps PECL (CLK and SOC Signals) ..... 298

Table 50. Recommended Operating Conditions ..... 299

Table 51. Additional Power Design Requirements ..... 301

Table 52. DC Electrical Characteristics for 3.3V-tolerant 2.5V CMOS (OOB Interface) ..... 302

Table 53. DC Electrical Characteristics for 2.5V CMOS (JTAG Interface) ..... 302

Table 54. DC Electrical Characteristics for 3.3V-tolerant 2.5V CMOS (Serdes) ..... 303

Table 55. DC Electrical Characteristics for 200 Mbps HSTL (EPP/DS Interface) ..... 303

Table 56. DC Electrical Characteristics for 800 Mbps STI (AIB Interfaces) ..... 304

Table 57. DC Electrical Characteristics for 2.5V 200 Mbps PECL (CLK and SOC Signals) ..... 304

Table 58. AC Electrical Characteristics for 3.3V-tolerant 2.5V CMOS (OOB Interface) ..... 305

Table 59. AC Electrical Characteristics for 2.5V CMOS (JTAG Interface) ..... 305

Table 60. AC Electrical Characteristics for 3.3V-tolerant 2.5V CMOS (Serdes Interface) ..... 306

Table 61. Reference Clock for 200 Mbps HSTL (EPP/DS Interface) ..... 306

Table 62. AC Electrical Characteristics for 800 Mbps STI (AIB Interface) ..... 307

Table 63. AC Electrical Characteristics for 2.5V 200 Mbps PECL (CLK Signals) ..... 307

Table 64. Jitter and Static Phase Offset for PLL ..... 308

Table 65. Request/Data from iDS Frame Format ..... 317

Table 66. Data from oDS Frame Format ..... 317

Table 67. Grant/Data to iDS Frame Format ..... 317

Table 68. Control to xDS Link Format ..... 318

Table 69. Dataslice to Crossbar Frame Format ..... 318

Table 70. Crossbar to Dataslice Frame Format ..... 319

Table 71. Scheduler to EPP Frame Format ..... 319

Table 72. EPP to Scheduler Frame Format ..... 321


Table 73. Suggested 8b/10b Decode Map Within the Dataslice ..... 331

Table 74. Dataslice Egress Truth Table ..... 331

Table 75. Programmed Token Delay vs. Inter-link Skew ..... 334


1 Functional Description

1.1 OVERVIEW

The ETT1™ Chip Set provides a crossbar-based switch core that can switch cells between 32 ports, with each port operating at data rates of up to 10 Gbit/s. This section describes the main features of the switch core and how cells flow through a complete system based on the ETT1 Chip Set.

This document often refers to port rates of OC-192c or OC-48c. The ETT1 Chip Set itself operates at a fixed cell rate of 25M cells per second per port and is therefore unaware of the actual data rate of the attached link. A switch might have 32 ports of OC-192c, or 32 ports of 10 Gbit/s Ethernet; the ETT1 Chip Set determines only the internal cell rate, not the link technology.
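The fixed cell rate also fixes the length of a cell time. The short arithmetic sketch below uses illustrative constant and function names, not names from this data sheet. It shows that 25M cells/s per port corresponds to the 40 ns cell time used later in the TDM discussion. It also shows that 32 ports at 10 Gbit/s give the 320 Gbit/s aggregate bandwidth quoted in the feature list.

```python
CELL_RATE_PER_PORT = 25_000_000  # cells per second per port (from the text)
NUM_PORTS = 32
PORT_RATE_GBPS = 10              # maximum data rate per port, Gbit/s


def cell_time_ns(cell_rate: float) -> float:
    """Length of one cell time in nanoseconds for a given cell rate."""
    return 1e9 / cell_rate


def aggregate_bandwidth_gbps(ports: int, port_rate_gbps: float) -> float:
    """Total switch bandwidth when every port runs at its maximum rate."""
    return ports * port_rate_gbps


print(cell_time_ns(CELL_RATE_PER_PORT))                    # 40.0
print(aggregate_bandwidth_gbps(NUM_PORTS, PORT_RATE_GBPS))  # 320
```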

1.1.1 ETT1 Switch Core Features

The ETT1 switch core provides the following features:

• 320 Gbit/s aggregate bandwidth: up to 32 ports of 10 Gbit/s each

• Each port can be configured as 4 x OC-48c or 1 x OC-192c

• Both port configurations support four priorities of best-effort traffic for unicast and multicast data

• TDM support for guaranteed bandwidth and zero delay variation with 10 Mbit/s channel resolution

• LCS™ protocol supports a physical separation of switch core and linecards of up to 200 feet (70 m)

• Virtual output queues to eliminate head-of-line blocking on unicast cells

• Internal speedup to provide near-output-queued performance

• Cells are transferred using a credit mechanism to avoid cell losses due to buffer overrun

• In-band management and control via Control Packets

• Out-of-band management and control via a dedicated CPU interface

• Optional redundancy of all shared components for fault tolerance

• Efficient support for multicast with cell replication performed within the switch core


1.1.2 The Switch Core Model

The ETT1 Chip Set is designed to provide a switch core, not a complete packet switch system. A complete switch consists of one or more switch cores together with a number of linecards. Each linecard connects to one port in the core. The linecard includes a physical interface (fiber, coaxial cable) to a transmission system such as SONET/SDH or Ethernet. The linecard analyzes incoming cells or packets and determines the appropriate egress port and priority. The linecard contains any cell/packet queues that are needed to absorb transient congestion through the switch.

The ETT1 switch core operates on fixed-size cells. If the linecard transmission system uses variable-length packets, or cells of a size different from those used in the core, then the linecard is responsible for performing any segmentation and reassembly that is needed. Figure 1 illustrates this generic configuration.

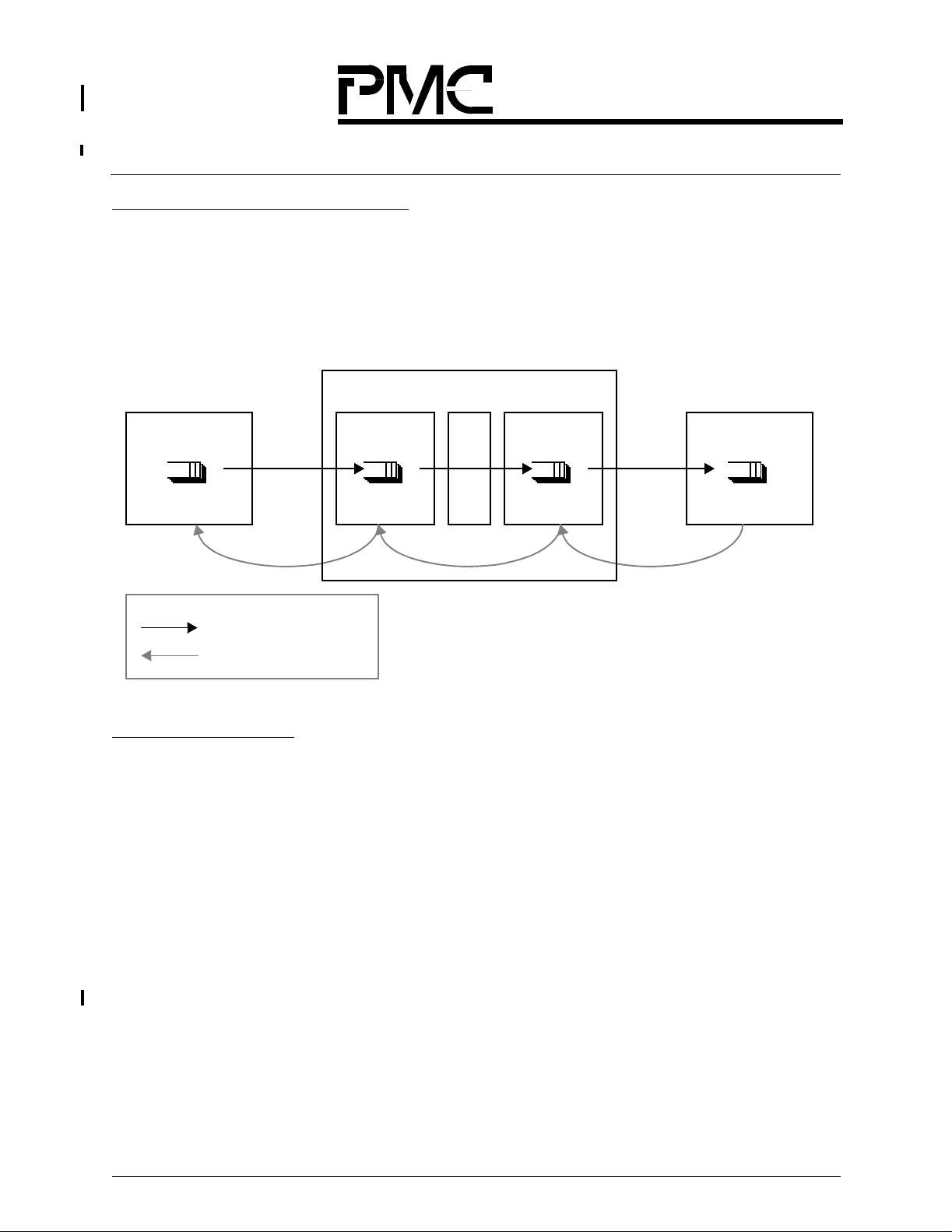

Figure 1. The Basic Components of a Switch Built Around the ETT1 Chip Set.

(Figure labels: ETT1 Switch Core (ETT1 Chip Set); Linecard 0 through Linecard 31, each with a SONET/SDH, Ethernet, or other link, attached to the core ports over the LCS Protocol; a CPU attached via the OOB Bus.)

The ETT1 Chip Set has been designed to allow up to 200 feet (70 meters) of physical separation between the ETT1 core and the linecard. The LCS™ (Linecard-to-Switch) protocol is used between the ETT1 core and the linecard to ensure lossless transfer of cells between the two. However, although the LCS protocol must be implemented, the physical separation is not mandatory; the linecard could reside on the same physical board as the ETT1 port devices.

The switch core has two main interfaces. The first is the interface between the linecard and the core port, described in Section 1.1.3 “The LCS Protocol”.


The second interface is between the ETT1 devices and the local CPU. The ETT1 Chip Set requires a local CPU for configuration, diagnostics, and maintenance. A single CPU can control a complete ETT1 core via a common Out-Of-Band (OOB) bus, to which every ETT1 device has an interface. The OOB bus is described in Section 1.1.4 “The OOB (Out-Of-Band) Bus” on page 18.

1.1.3 The LCS Protocol

The Linecard-to-Switch (LCS™) protocol provides a simple, clearly defined interface between the linecard and the core. This section introduces LCS. There are two aspects to LCS:

• a per-queue, credit-based flow control protocol

• a physical interface

The LCS protocol provides per-queue, credit-based flow control from the ETT1 core to the linecard, which ensures that queues are not overrun. The ETT1 core has shallow (64-cell) queues in both the ingress and egress directions. These queues compensate for the latency between the linecard and the core; one way to think of them is simply as extensions of the queues within the linecards. The queues themselves are described further in Section 1.3 “Prioritized Best-Effort Queue Model” on page 30.

The LCS protocol is asymmetrical; it uses different flow control mechanisms for the ingress and egress flows. For the ingress flow, LCS uses credits to manage the flow of cells between the linecards and the ETT1 core. The core provides the linecard with a certain number of credits for each ingress queue in the core. These credits correspond to the number of cell requests that the linecard can send to the core. For each cell request forwarded to a given queue in the core, the linecard must decrement the number of credits for that queue. The core sends a grant (which is also a new credit) to the linecard whenever the core is ready to accept a cell in response to the cell request. At some later time, which depends on the overall traffic load, the cell is forwarded through the ETT1 core to the egress port.

In the egress direction, a linecard can send hole requests, asking the ETT1 core not to forward a cell for one cell time. The linecard can issue a hole request for each of the four best-effort unicast or multicast priorities. If the linecard continually issued hole requests at all four priorities, the ETT1 core would not forward any best-effort traffic to the linecard.
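The ingress credit rule can be modeled with one counter per core queue. The sketch below is a minimal, hypothetical linecard-side model. It assumes the core starts each queue with a fixed credit count (`initial`), and the class and method names are illustrative only; they are not part of LCS. It shows only the decrement-on-request, increment-on-grant bookkeeping. It does not model hole requests, cell transmission, or the recovery of lost grants.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LinecardCredits:
    """Hypothetical linecard-side bookkeeping of LCS ingress credits.

    One counter per ingress queue in the core. `initial` is an assumed
    starting credit count per queue, not a value taken from LCS.
    """
    initial: int
    credits: Dict[int, int] = field(default_factory=dict)

    def available(self, queue: int) -> int:
        return self.credits.get(queue, self.initial)

    def can_request(self, queue: int) -> bool:
        return self.available(queue) > 0

    def send_request(self, queue: int) -> None:
        # Every cell request forwarded to a core queue consumes one credit.
        if not self.can_request(queue):
            raise RuntimeError(f"no credits left for queue {queue}")
        self.credits[queue] = self.available(queue) - 1

    def on_grant(self, queue: int) -> None:
        # A grant says the core will accept a cell, and it is also a new credit.
        self.credits[queue] = self.available(queue) + 1


lc = LinecardCredits(initial=2)
lc.send_request(5)
lc.send_request(5)
print(lc.can_request(5))  # False: both credits are in use
lc.on_grant(5)
print(lc.can_request(5))  # True: the grant returned one credit
```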

The LCS protocol information is contained within an eight-byte header that is added to every cell.

The physical interface implemented in the ETT1 Chip Set is based on a faster version of the Gigabit Ethernet Serdes interface, which allows off-the-shelf parts to be used for the physical link. This interface provides a 1.5 Gbit/s serial link that uses 8b/10b encoded data. Twelve of these links are combined to provide a single LCS link operating at 18 Gbaud, giving an effective data bandwidth in excess of an OC-192c link.
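The arithmetic behind “in excess of an OC-192c link” can be checked directly. Twelve 1.5 Gbaud links give 18 Gbaud, and 8b/10b coding leaves 8/10 of that for data. The OC-192 line rate of 9.95328 Gbit/s used for comparison is the standard SONET figure, not a value from this data sheet. This sketch also ignores the LCS header and any framing overhead.

```python
LANES = 12                      # serial links combined into one LCS link
LANE_RATE_GBAUD = 1.5           # line rate of each 8b/10b serial link
OC192_LINE_RATE_GBPS = 9.95328  # standard SONET OC-192 line rate

aggregate_gbaud = LANES * LANE_RATE_GBAUD
# 8b/10b carries 8 data bits in every 10 line bits.
data_gbps = aggregate_gbaud * 8 / 10

print(aggregate_gbaud)                   # 18.0
print(data_gbps)                         # 14.4
print(data_gbps > OC192_LINE_RATE_GBPS)  # True
```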

NOTE: The LCS protocol is defined in the “LCS Protocol Specification -- Protocol Version 2”, available from PMC-Sierra, Inc. This version of LCS supersedes LCS Version 1. Version 2 is first supported in the TT1 Chip Set with the Enhanced Port Processor device (also referred to as the ETT1 Chip Set) and will be supported in future PMC-Sierra products. The ETT1 implementation of the LCS protocol is described further in Section 1.6 “ETT1 Usage of the LCS Protocol” on page 64.


1.1.4 The OOB (Out-Of-Band) Bus

The ETT1 Chip Set requires a local CPU to perform initialization and configuration after the Chip Set is reset or when the core configuration changes (for example, when ports are added or removed). The OOB bus provides a simple mechanism by which the local CPU can configure each device.

Logically, the OOB bus provides a 32-bit address/data bus with read/write, valid, and ready signals. The OOB bus is intended for maintenance and diagnostics; the CPU is not involved in any per-cell operations.

1.2 ARCHITECTURE AND FEATURES

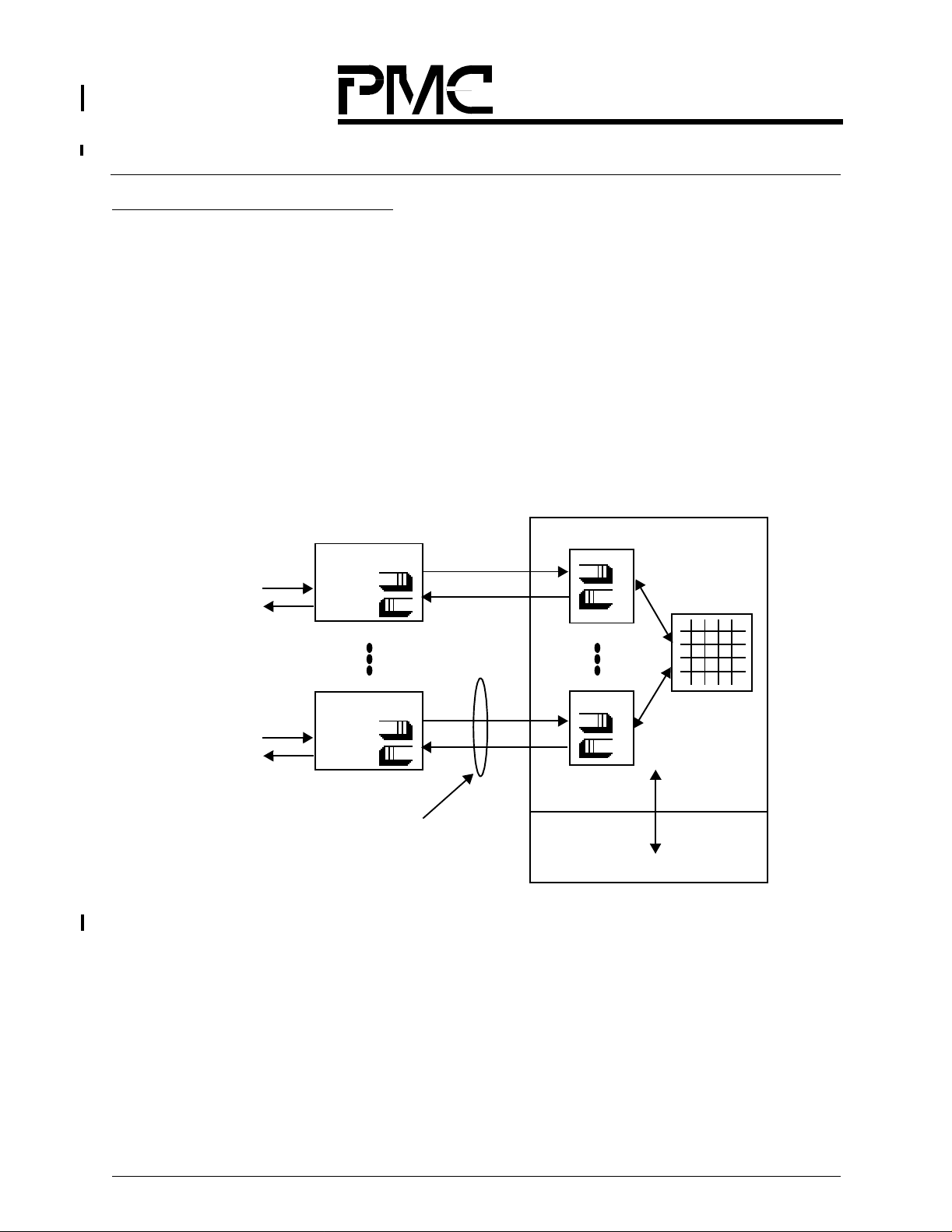

1.2.1 ETT1 Switch Core

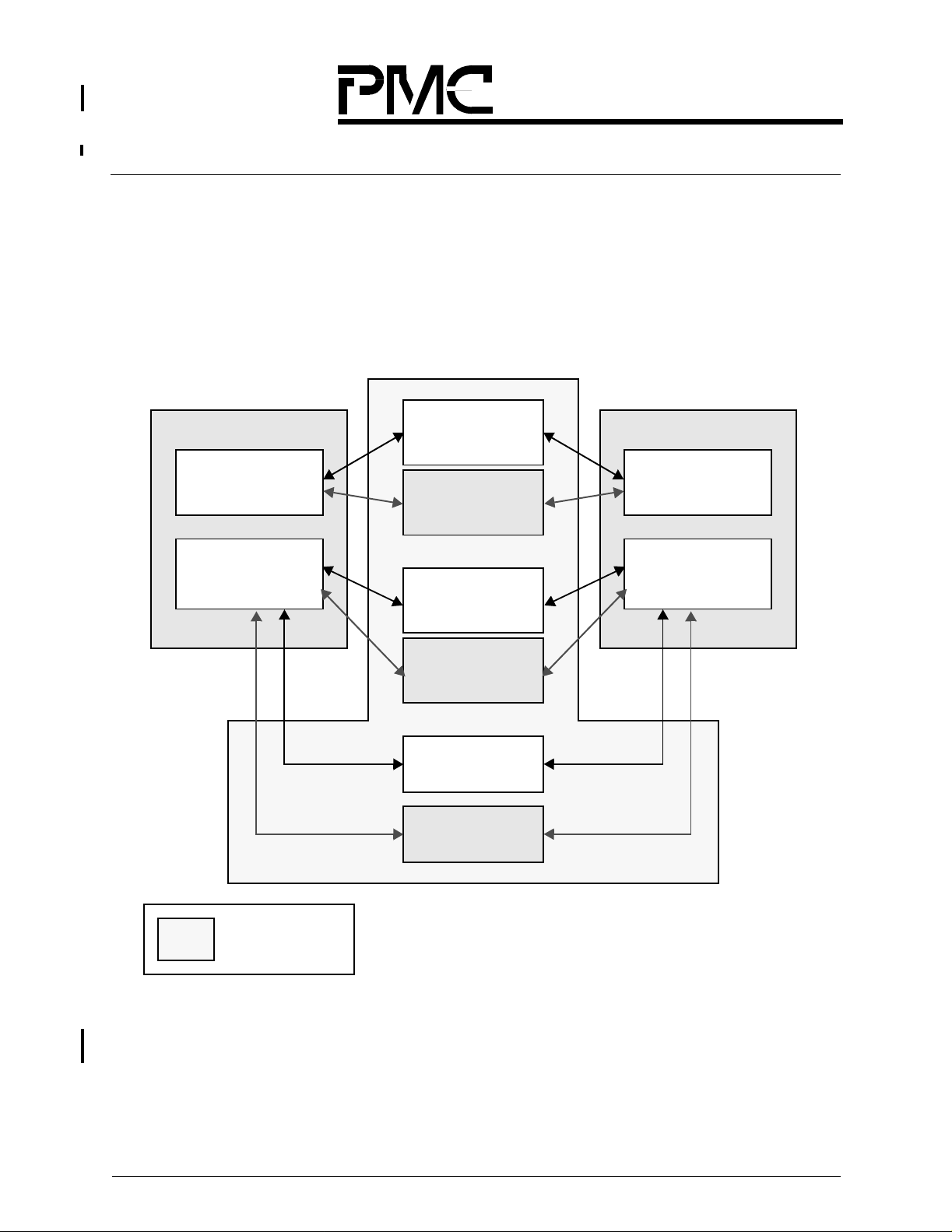

An ETT1 switch core consists of four types of entities: Port, Crossbar, Scheduler, and CPU/Clock. There may be one or more instances of each entity within a switch. For example, each entity might be implemented as a separate PCB, with the PCBs interconnected via a single midplane PCB. Figure 2 illustrates the logical relationship between these entities.

Figure 2. ETT1 Switch Core Logical Interconnects

(Figure labels: Linecard, Port, Crossbar, Flow Control Crossbar, Scheduler, CPU/clock.)


Each ETT1 port is attached to one or more linecards. The port contains the shallow cell queues and implements the LCS protocol. The port and Scheduler exchange information about cells that are waiting to be forwarded through the Crossbar core.

The Scheduler maintains local information on the number of cells waiting in all of the ingress and egress queues. It arbitrates among all cells in the ingress queues and instructs each port which cell it can forward through the Crossbar at each cell time.

Two Crossbars are used. The first, referred to simply as ‘the Crossbar’, interconnects every port with every other port, so that cells can be forwarded from the ingress port queues to the egress port queues (in a different port). The Crossbar is reconfigured at every cell time to provide any non-blocking one-to-one or one-to-many mapping from input ports to output ports. Each Crossbar port receives its configuration information from its attached port; the Crossbars do not communicate directly with the Scheduler. The second Crossbar is the flow-control Crossbar. It passes output queue occupancy information from every egress port to every ingress port. The ingress ports use this information to determine when requests should and should not be made to the Scheduler.

The CPU/clock provides clocks and cell boundary information to every ETT1 device. It also has a local CPU that can read and write state information in every ETT1 device via the OOB bus. The CPU/clock entity is a necessary element of the ETT1 switch core, but it does not contain any of the ETT1 devices.

1.2.2 Basic Cell Flow

The ETT1 Chip Set consists of four devices. Their names (abbreviations) are:

• Dataslice (DS)

• Enhanced Port Processor (EPP)

• Scheduler (Sched)

• Crossbar (Xbar)

This section describes how cells flow through these four devices.


Figure 3. Two-Port Configuration of an ETT1 Switch

(Figure labels: Input Data Slice, Input EPP, Crossbar, Flow Control Crossbar, Output Data Slice, Output EPP, Scheduler; data cell flow and control flow arrows are numbered with the steps 1 to 7 of the cell sequence below.)

Figure 3 shows a two-port configuration of an ETT1 switch. Only the ingress queues of the left-hand port and the egress queues of the right-hand port are shown. Each port has one EPP and either six or seven DS devices. The DS contains the cell queue memory and also has the Serdes interface to the linecard. A single cell is “sliced” across all of the Dataslice devices, each of which can manage two slices. The EPP is the port controller and determines where cells should be stored in the DS memories. Multiple Crossbar devices make up a full Crossbar. A single Scheduler device can arbitrate for the entire core.

A cell traverses the switch core in the following sequence of events:

1. A cell request arrives at the ingress port and is passed to the EPP. The EPP adds the request to any other outstanding cell requests for the same queue.

2. At some later time, the EPP issues a grant/credit to the source linecard, requesting an actual cell for a specific queue. The linecard must respond with the cell within a short period of time.

3. The cell arrives at the ingress port and the LCS header is passed to the EPP. The EPP determines the destination queue from the LCS header and then tells the Dataslices where to store the cell (each Dataslice stores part of the cell). The EPP also informs the Scheduler that a new cell has arrived, so that the Scheduler adds it to the list of cells waiting to be forwarded through the Crossbar. The EPP modifies the LCS label by replacing the destination port with the source port, so that the egress port and linecard can see which port sent the cell.

4. The Scheduler arbitrates among all queued cells and sends a grant to those ports that can forward a cell. The Scheduler also sends a routing tag to each of the destination (egress) ports; this tag tells each port which of the many source ports will be sending it a cell.

5. The source EPP sends a read command to the Dataslices, which then read the cell from the appropriate queue and send it to the Crossbar. At the same time, the destination ports send the routing tag information to the Crossbar. This routing tag information configures the internal connections within the Crossbar for the duration of one cell time. The cell then flows through the Crossbar from the source port to the destination port.

6. The cell arrives at the destination port, and the EPP receives the LCS header of the cell. It uses this information to decide in which egress queue the cell should be stored. If this was a multicast cell that caused the egress multicast queue to reach its occupancy limit, the EPP sends a congestion notification to the Scheduler.

7. At some later time, the EPP decides to forward the cell to the linecard. The EPP sends a read command to the Dataslices, which read the cell from memory and forward it to the linecard. The egress EPP also sends flow control to the ingress EPP, informing it that there is now free space in one or more of the egress EPP’s output queues. Also, if the transmitted cell was a multicast cell, the egress queue may go from full to not full, in which case the EPP notifies the Scheduler that it (the EPP) can once again accept multicast cells.

The above description does not account for every interaction that can take place between ETT1 devices, but it describes the most frequent events. In general, users do not need to be aware of the detailed interactions; however, knowing the main information flows will help in understanding some of the more complicated sections.

1.2.3 Prioritized Best-effort Service

An ETT1 switch core provides two types of service. The first is a prioritized, best-effort service. The second provides guaranteed bandwidth and is described in Section 1.2.5 “TDM Service”.

The best-effort service is very simple. Linecards forward best-effort cells to the ETT1 core, where they are queued. The Scheduler arbitrates among the various cells; the arbitration algorithm aims both to maximize throughput and to provide fair access to all ports. If more than one cell is destined for the same egress port, the Scheduler grants one of the cells and the others remain in their ingress queues awaiting another round of arbitration. The service is best-effort in that the Scheduler tries its best to satisfy all queued cells, but under contention some cells will be delayed.

The Scheduler supports four levels of strict priority for best-effort traffic. Level 0 cells have the highest priority, and level 3 cells have the lowest. A level 0 cell destined for a given egress port will always be granted before a lower-priority cell in the same ingress port that is destined for the same egress port.

A ‘flow’ is a sequence of cells from the same ingress port to the same egress port(s) at a given priority. Best-effort flows are either unicast flows (cells in the flow go to only one egress port) or multicast flows (in which case cells can go to many, or even all, of the egress ports).
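The sketch below is a deliberately simplified, hypothetical arbiter for one cell time of unicast traffic, meant to make the contention and strict-priority rules above concrete. It is not the ETT1 Scheduler's algorithm, which this section does not specify. It enforces only the stated properties: at most one grant per ingress port and per egress port in each cell time, and level 0 served before levels 1 through 3. A rotating ingress start point stands in crudely for fairness. Multicast and TDM traffic are not modeled, and egress ports are scanned in fixed order.

```python
from typing import Dict, Set, Tuple

NUM_PRIORITIES = 4  # best-effort levels 0 (highest) through 3 (lowest)


def arbitrate(requests: Dict[Tuple[int, int, int], int],
              num_ports: int, start: int) -> Dict[int, Tuple[int, int]]:
    """One cell time of a simplified unicast arbiter (illustrative only).

    requests maps (ingress, egress, priority) -> queued cell count.
    Returns {ingress: (egress, priority)} with at most one grant per
    ingress port and at most one grant per egress port.
    """
    grants: Dict[int, Tuple[int, int]] = {}
    busy_egress: Set[int] = set()
    for prio in range(NUM_PRIORITIES):  # strict priority: level 0 first
        for k in range(num_ports):      # rotate the ingress starting point
            ingress = (start + k) % num_ports
            if ingress in grants:
                continue
            for egress in range(num_ports):
                if egress in busy_egress:
                    continue
                if requests.get((ingress, egress, prio), 0) > 0:
                    grants[ingress] = (egress, prio)
                    busy_egress.add(egress)
                    break
    return grants


# Ingress 0 (level 1) and ingress 1 (level 0) both want egress 2.
reqs = {(0, 2, 1): 1, (1, 2, 0): 1}
print(arbitrate(reqs, num_ports=4, start=0))  # {1: (2, 0)}
```

The losing level 1 cell simply stays queued for a later round of arbitration, as the text describes.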


1.2.4 End-to-End Flow Control

The full queueing and flow control model is shown in Figure 4.

NOTE: Credits or backpressure are used at every transfer point to ensure that cells cannot be lost due to lack of buffer space.

Figure 4. Queueing and Flow Control

(Figure labels: Linecard ingress queues, ETT1 port ingress queues, ETT1 Crossbar, ETT1 port egress queues, Linecard egress queues, all within the ETT1 core except the linecards; legend: cell flow, LCS credits, backpressure/credits, hole requests, ETT1 backpressure.)

1.2.5 TDM Service

The ETT1 TDM service provides guaranteed bandwidth and zero cell delay variation. These properties, which the best-effort service does not offer, mean that the TDM service might be used to provide an ATM CBR service, for example. The ETT1 core provides the TDM service at the same time as the best-effort service, and TDM cells integrate smoothly with the flow of best-effort traffic. In effect, TDM cells appear to the Scheduler to be cells of the highest precedence, higher even than level 0 best-effort multicast traffic.

The TDM service operates by enabling an ETT1 port (and linecard) to reserve the crossbar fabric at some specified cell time in the future. The Scheduler is notified of this reservation and will not schedule any best-effort cells from the ingress port or to the egress port during that cell time. Each port can make separate reservations according to whether it will send and/or receive a cell at each cell time.

Several egress ports may receive the same cell from a given ingress port; therefore, the TDM service is inherently a multicast service.
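The effect of a reservation on best-effort scheduling can be sketched as a filter over candidate (ingress, egress) pairs for a single cell time. The `SlotReservation` structure and the function name are hypothetical representations chosen for illustration. The sketch shows only the rule stated above: a port reserved to send (or receive) a TDM cell in that cell time is excluded from best-effort traffic in that direction.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class SlotReservation:
    """TDM reservations for one cell time (hypothetical representation)."""
    sending: Set[int] = field(default_factory=set)    # ingress ports reserved to send
    receiving: Set[int] = field(default_factory=set)  # egress ports reserved to receive


def best_effort_eligible(candidates: Set[Tuple[int, int]],
                         slot: SlotReservation) -> Set[Tuple[int, int]]:
    """Drop (ingress, egress) best-effort candidates that touch a reserved port."""
    return {(i, e) for (i, e) in candidates
            if i not in slot.sending and e not in slot.receiving}


# Ingress port 0 sends one TDM cell to egress ports 1 and 2 (a multicast).
slot = SlotReservation(sending={0}, receiving={1, 2})
candidates = {(0, 3), (3, 1), (3, 0), (2, 3)}
print(sorted(best_effort_eligible(candidates, slot)))  # [(2, 3), (3, 0)]
```

Port 2 is still free to send best-effort traffic in this cell time, because only its egress side is reserved.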

To provide guaranteed bandwidth over a long period of time, an ingress port will want to repeat its reservations on a regular basis. To support this, the ETT1 core uses an internal construct called a TDM Frame. A TDM Frame is simply a sequence of ingress and egress reservations. The length (number of cell times) of a TDM Frame is configurable up to a maximum of 1024 cells. The TDM Frame repeats after a certain fixed time. All ports are synchronized to the start of the TDM Frame and operate cell-synchronously with respect to each other. Thus, at any cell time, every ETT1 port knows whether it has made a reservation to send or receive a TDM cell. See the application note “LCS-2 TDM Service in the ETT1 and TTX Switch Core”, available from PMC-Sierra, Inc.

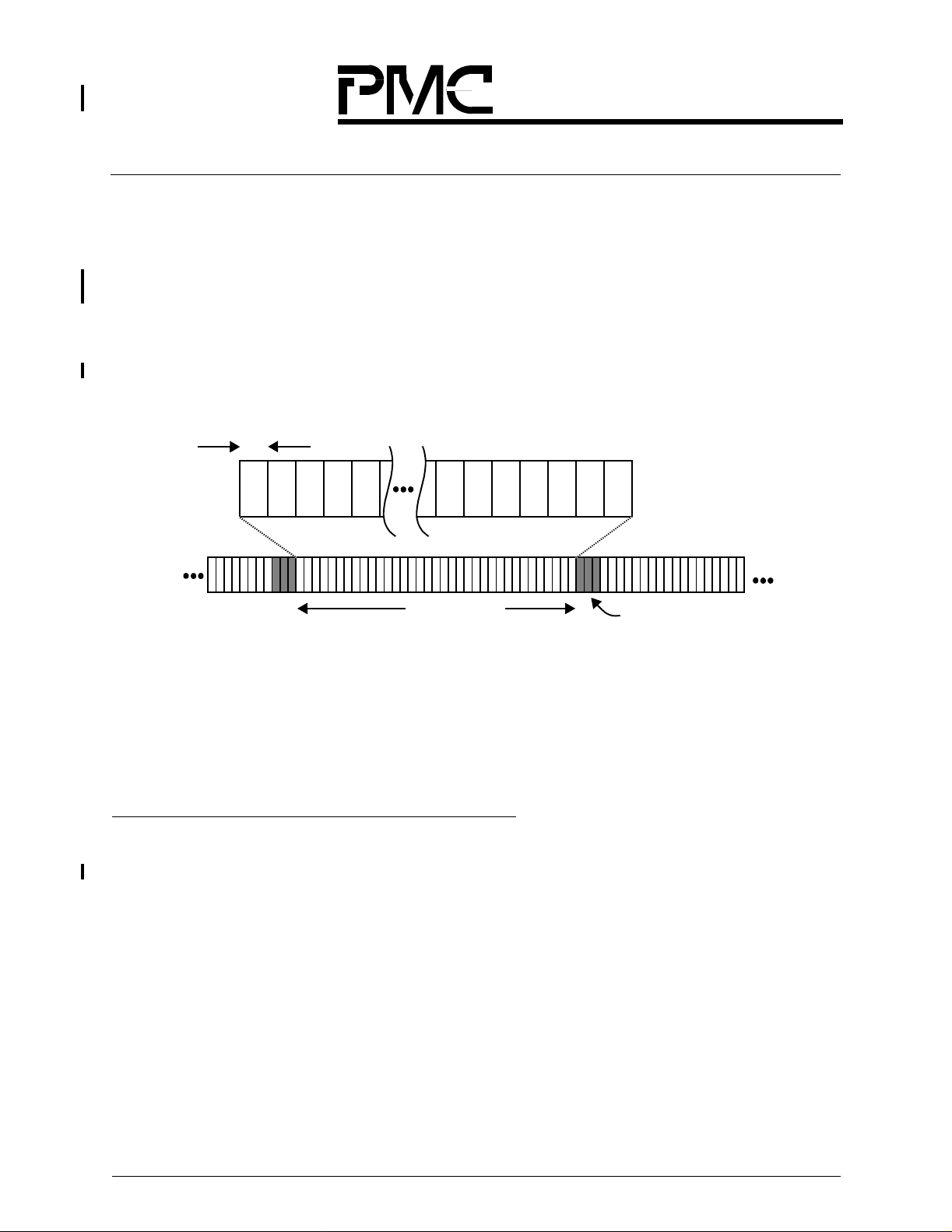

Figure 5 illustrates the idea of a TDM Frame. The TDM Frame has N slots (N is 1024 or less), where each slot is one 40 ns cell time. The TDM Frame is repeated continuously, but adjacent TDM Frames are separated by a guard band of at least 144 cells.

Figure 5. The TDM Frame Concept

(Figure labels: One TDM Frame of slots 1 through N, each 40 ns, followed by a Guard band.)
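Each slot is one 40 ns cell time, and frames are separated by at least 144 guard cells. The repeat period of the TDM Frame therefore follows directly from these two values. The sketch assumes the guard band sits between consecutive frames exactly as drawn in Figure 5. The constant and function names are illustrative only.

```python
CELL_TIME_NS = 40        # one cell time at 25M cells/s
MAX_FRAME_SLOTS = 1024   # maximum TDM Frame length, in cell times
MIN_GUARD_CELLS = 144    # minimum guard band between frames, in cell times


def tdm_period_ns(frame_slots: int, guard_cells: int = MIN_GUARD_CELLS) -> int:
    """Time from the start of one TDM Frame to the start of the next."""
    if not 1 <= frame_slots <= MAX_FRAME_SLOTS:
        raise ValueError("TDM Frame length must be 1..1024 cell times")
    if guard_cells < MIN_GUARD_CELLS:
        raise ValueError("guard band must be at least 144 cell times")
    return (frame_slots + guard_cells) * CELL_TIME_NS


print(tdm_period_ns(1024))  # 46720, i.e. 46.72 microseconds
```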

All of the ETT1 ports must be synchronized with respect to the start of the TDM Frame. This synchronization information is distributed via the Scheduler. The Scheduler can generate the synchronization pulses itself, or it can receive “Suggested Sync” pulses from an ETT1 port, which can in turn receive synchronization signals from a linecard. The linecards do not need to be exactly synchronized to either the ETT1 core or the other linecards; the LCS protocol compensates for the asynchrony between the linecard and the ETT1 core.

1.2.6 Subport Mode (2.5 Gbit/s Linecards)

An ETT1 switch core can have up to 32 ports. Each port supports a linecard bandwidth in excess of 10 Gbit/s. This bandwidth might be used by a single linecard (for example, OC-192c), or the ETT1 port can be configured to share it among up to four subports of 2.5 Gbit/s each. This latter mode, with four 2.5 Gbit/s linecards, is referred to as subport mode, or sometimes as quad OC-48c mode.

A single ETT1 switch can have some ports in ‘normal’ mode and some in subport mode; there is no restriction. The four levels of prioritized best-effort traffic are available in all configurations. However, there are some important differences between the two modes. One difference is that the LCS header must now identify the subport associated with each cell. Two bits in the LCS label field are used for this purpose, as described below.

A second difference is that the EPP must carefully manage the output rate of each subport so as not to overflow the buffers at the destination linecard; this is also described below.


A third difference is that the EPP must maintain separate LCS request counters for each of the subports. It must also maintain separate egress queues. As a result, the number of queues can increase fourfold to preserve the independence of each subport. Section 1.3 “Prioritized Best-Effort Queue Model” on page 30 describes the various queuing models in detail.

1.2.6.1 Identifying the Source Subport

The EPP manages a single physical stream of cells at 25M cells/second. In subport mode, the EPP must examine each incoming cell and determine which subport sent it. The LCS label field is used for this. Two bits within the label (referred to in the LCS Specification as MUX bits) denote the source subport, numbered 0 through 3. The MUX bits must be inserted in the LCS label before the cell arrives at the EPP. They might be inserted by the source linecards themselves, or by a four-to-one multiplexer device placed between the subport linecards and the EPP. In the latter case, the multiplexer device might not be able to recalculate the LCS CRC. For maximum flexibility, the EPP can be configured to calculate the LCS CRC either with or without the MUX bits.

1.2.6.2 Egress Cell Rate

Within the EPP is an Output Scheduler process, which is different from, and should not be confused with, the ETT1 Scheduler device. At every OC-192 cell time, the Output Scheduler looks at the egress queues and decides which cell should be forwarded to the attached egress linecard(s). In subport mode, the Output Scheduler constrains the egress cell rate so as not to overflow any of the 2.5 Gbit/s links. It does this by operating in a strict round-robin mode, so that at any OC-192 cell time it only tries to send a cell for one of the subports. The four 2.5 Gbit/s subports are labeled 0 through 3. At one cell time, the Output Scheduler only tries to send a cell from the egress queues associated with subport 0; in the next cell time, it only considers cells destined for subport 1, and so on. If, at any cell time, there are no cells to be sent to the selected subport, an Idle (empty) cell is sent. For example, if subports 0 and 2 are connected to linecards but subports 1 and 3 are disconnected, the Output Scheduler sends a cell to subport 0, then an empty cell, then a cell to subport 2, then an empty cell, and then repeats the sequence. The effective cell rate transmitted to each subport never exceeds 6.25M cells per second.
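A small generator can illustrate the strict round-robin rule and why it caps each subport at one quarter of the port's cell rate. This is a behavioral sketch only. The queue representation and names are hypothetical, and each subport is treated as a single FIFO, ignoring the per-priority and multicast egress queues that the EPP actually maintains.

```python
from itertools import islice
from typing import Dict, Iterator, List, Optional

NUM_SUBPORTS = 4
PORT_CELL_RATE = 25_000_000  # cells/s at the EPP (one OC-192 cell time = 40 ns)


def output_schedule(queues: Dict[int, List[str]]) -> Iterator[Optional[str]]:
    """Yield one cell (or None for an Idle cell) per OC-192 cell time.

    queues maps subport number (0..3) to a FIFO of cells for that subport.
    Strict round-robin: cell time t only considers subport t % 4.
    """
    t = 0
    while True:
        fifo = queues.get(t % NUM_SUBPORTS)
        yield fifo.pop(0) if fifo else None  # Idle cell if nothing to send
        t += 1


# Subports 0 and 2 have linecards; subports 1 and 3 are disconnected.
queues = {0: ["a0", "a1"], 2: ["c0", "c1"]}
print(list(islice(output_schedule(queues), 8)))
# ['a0', None, 'c0', None, 'a1', None, 'c1', None]
print(PORT_CELL_RATE // NUM_SUBPORTS)  # 6250000 cells/s maximum per subport
```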

1.2.7 LCS Control Packets

The LCS protocol provides in-band control packets. These packets (cells) are distinct from normal cell traffic in that they do not pass through the fabric to an egress linecard, but are intended to cause some effect within the switch.

There are two classes of Control Packets. The first class, referred to as CPU Control Packets, is exchanged between the linecard and the ETT1 CPU (via the EPP and Dataslices). CPU Control Packets are intended to be the basic mechanism through which the linecard CPU and the ETT1 CPU exchange information. This simple mechanism is subject to cell loss, so it should be supplemented by some form of reliable transport protocol running on the ETT1 CPU and the linecards.

The second class, referred to as LCS Control Packets, is used to manage the link between the linecard and the ETT1 port. LCS Control Packets can be used to start and stop the flow of cells on the link, to provide TDM synchronization event information, and to recover any grant/credit information that is lost when cells are corrupted in transmission.

1.2.7.1 Sending a Control Packet from the OOB to the Linecard

Before sending a CPU (OOB-to-linecard) control packet, the OOB must first write the control packet header and payload data into the appropriate locations in the Dataslice. (See Section 3.3 “Output Dataslice Queue Memory Allocation with EPP”, Table 23, on page 164.) The header for customer-specific CPU control packets should be written into the Dataslices as shown there, but the payload data is entirely up to the customer.

To send the CPU control packet that has been written into Dataslice queue memory, the OOB writes the ESOBLCP register with the control packet type select in bits [5:4] (see the register bit breakout) and a linecard fanout in bits [3:0]. If the port is connected to four subport linecards, bits [3:0] are a subport bitmap. If the port is connected to one OC-192c linecard, bit 0 must be set when the OOB wishes to send a CP. When the CP has been sent to the linecard(s) indicated in bits [3:0], bits [3:0] read back as 0. Because control packets have higher priority than any other traffic type, they are sent immediately, unless the EPP is programmed to send only idle cells.
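The register sequence above can be sketched as two helpers: one composes the ESOBLCP value, and the other triggers the send and polls for completion. This is a minimal sketch under stated assumptions. `read_reg` and `write_reg` are hypothetical callbacks standing in for OOB bus accesses to ESOBLCP, whose address is not given here. `cp_type` is passed as the raw 2-bit field because this section does not list the type encodings. The poll limit is an arbitrary choice.

```python
from typing import Callable

TYPE_SHIFT, TYPE_MASK = 4, 0x3  # bits [5:4]: control packet type select
FANOUT_MASK = 0xF               # bits [3:0]: linecard / subport fanout bitmap


def esoblcp_value(cp_type: int, fanout: int) -> int:
    """Compose an ESOBLCP write value from a type select and a fanout bitmap."""
    if not 0 <= cp_type <= TYPE_MASK:
        raise ValueError("type select must fit in bits [5:4]")
    if not 0 < fanout <= FANOUT_MASK:
        raise ValueError("fanout must select at least one linecard in bits [3:0]")
    return (cp_type << TYPE_SHIFT) | fanout


def send_cpu_cp(read_reg: Callable[[], int], write_reg: Callable[[int], None],
                cp_type: int, fanout: int, max_polls: int = 1000) -> bool:
    """Trigger a CPU control packet, then poll until bits [3:0] read back as 0."""
    write_reg(esoblcp_value(cp_type, fanout))
    for _ in range(max_polls):
        if (read_reg() & FANOUT_MASK) == 0:
            return True   # sent to every selected linecard
    return False          # still pending, e.g. EPP set to send only idle cells


print(hex(esoblcp_value(1, 0b0101)))  # 0x15: subports 0 and 2
print(esoblcp_value(0, 0b0001))       # 1: OC-192c mode uses bit 0
```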

1.2.7.2 Sending a Control Packet from the Linecard to the OOB

The linecard sends control packets to the OOB using the regular LCS request/grant/cell mechanism. A CPU (linecard-to-OOB) control packet must have the CPU bit set in its request label (see Section 1.1.3 “The LCS Protocol” on page 17). When the EPP receives the cell payload for a CPU control packet, it stores the cell in the Dataslices’ Input Queue memories and raises the “Received LC2OOB/CPU Control Packet from Linecard...” interrupt. (In OC-192c mode, this is always Linecard 0.)

The input queue for CPU control packets from each linecard is only 8 cells deep, so as soon as the OOB sees a “Received LC2OOB...” interrupt, it should read the appropriate “Subport* to OOB FIFO Status” register (0x80..0x8c). Bits [3:0] of that register give the number of control packet cells currently in the input queue; bits [6:4] give the offset of the head of the 8-cell CPU CP input queue. That queue offset should be used to form addresses for the Dataslices’ Input Queue memories (see Section 3.2 “Input Dataslice Queue Memory Allocation with EPP” on page 162 for Dataslice Input Queue memory addressing). The OOB should then read those addresses to obtain the CPU CP payload data. When the OOB has read the payload data, it should write any value to the appropriate “Linecard * to OOB FIFO Status” register. A write to that register, regardless of the write data, dequeues the head of the queue, freeing that space in the CPU CP input queue.

See Section 1.6.3 “Control Packets” on page 69 for more details.
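The receive procedure of Section 1.2.7.2 can be sketched in C as a status-register decoder plus a drain loop. This is a sketch under stated assumptions, not the documented driver: it assumes the four status registers at 0x80..0x8c sit 4 bytes apart (one per subport), that the queue offset wraps modulo 8, and that the register written to dequeue is the same one that is read. The callbacks `reg_read_fn`, `reg_write_fn`, and `cell_read_fn` are hypothetical stand-ins for the platform's register and Dataslice Input Queue accesses.

```c
#include <stdint.h>

/* Assumption: one status register per subport at 0x80, 0x84, 0x88, 0x8c. */
#define CP_FIFO_STATUS_BASE   0x80u
#define CP_FIFO_STATUS_STRIDE 0x04u
#define CP_QUEUE_DEPTH        8u

uint32_t cp_fifo_status_addr(unsigned subport)
{
    return CP_FIFO_STATUS_BASE + CP_FIFO_STATUS_STRIDE * subport;
}

/* Bits [3:0]: number of CP cells waiting (0..8). */
unsigned cp_fifo_count(uint32_t status) { return status & 0xFu; }

/* Bits [6:4]: offset of the head of the 8-cell queue (0..7). */
unsigned cp_fifo_head(uint32_t status) { return (status >> 4) & 0x7u; }

/* Offset of the i-th waiting cell counted from the head (assumed circular). */
unsigned cp_fifo_slot(uint32_t status, unsigned i)
{
    return (cp_fifo_head(status) + i) % CP_QUEUE_DEPTH;
}

/* Hypothetical platform hooks. */
typedef uint32_t (*reg_read_fn)(uint32_t addr);
typedef void (*reg_write_fn)(uint32_t addr, uint32_t value);
typedef void (*cell_read_fn)(unsigned subport, unsigned queue_offset);

/* Read and dequeue every waiting CP cell for one subport; returns the count. */
unsigned cp_fifo_drain(unsigned subport, reg_read_fn rd, reg_write_fn wr,
                       cell_read_fn read_cell)
{
    uint32_t addr = cp_fifo_status_addr(subport);
    uint32_t status = rd(addr);
    unsigned drained = 0u;

    while (cp_fifo_count(status) > 0u) {
        read_cell(subport, cp_fifo_head(status)); /* payload from Input Queue memory */
        wr(addr, 0u);                             /* any value dequeues the head */
        drained++;
        status = rd(addr);                        /* head and count have moved */
    }
    return drained;
}
```

Re-reading the status after each dequeue, rather than trusting the first count, lets the loop also pick up cells that arrive while the OOB is draining.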

1.2.8 Redundancy

An ETT1 core can be configured with certain redundant (duplicated) elements. A fully redundant core is

capable of sustaining single errors within any shared device without losing or re-ordering any cells. This

section describes the main aspects of a redundant core. A complete switch may have two ETT1 cores,


with linecards dual-homed to both cores; this is a valid configuration, but the ETT1 core does not provide any specific support for it.

Figure 6 shows a fully redundant core. The Crossbars and Scheduler are fully replicated, as are the links

connecting those devices. The port devices (EPP and Dataslices) are not replicated. It is important to

understand that if an EPP or Dataslice fails (or incurs a transient internal error), then data may be lost, but

only within that port.

Figure 6. Fully Redundant Core

[Diagram: two ports, each containing an EPP and Dataslices, connected to Crossbar 0, Crossbar 1, Flow Control, Scheduler 0, and Scheduler 1; the replicated devices are marked as the fault-tolerant region.]

A fully redundant core operates in exactly the same way as a non-redundant core. Consider the EPP: it sends new request information to both Schedulers. The two Schedulers operate synchronously and produce identical outputs, so the information (grants) that the EPP receives from each is the same. The Dataslices operate in exactly the same way with their two Crossbar devices.


The information carried on the links is protected by checksums. For example, if the EPP receives information with a checksum error on one link, it simply ignores that link and uses the information from the other link. All errors are reported to the local CPU via interrupts.

The redundant core is tolerant of such single errors, provided that they are detectable. In general, all errors that occur on the links or within the Scheduler or Crossbar are detectable.
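The receive-side rule above (use whichever redundant copy passed its checksum, report every error) can be sketched in C. This is a simplified model, assuming each link delivers one word plus a pass/fail checksum result; the checksum algorithm itself is not described in this section and is not modeled, and the names `select_redundant` and `struct selection` are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Result of choosing between the two redundant copies. */
struct selection {
    bool     valid;    /* at least one copy passed its checksum */
    uint32_t payload;  /* the copy actually used */
    unsigned errors;   /* checksum failures seen (each reported via interrupt) */
};

/* ok0/ok1 stand in for the device's own checksum check on each link.
 * When both copies are good they are identical, so copy 0 is used. */
struct selection select_redundant(bool ok0, uint32_t data0, bool ok1, uint32_t data1)
{
    struct selection s = { false, 0u, 0u };

    s.errors = (ok0 ? 0u : 1u) + (ok1 ? 0u : 1u);
    if (ok0) {
        s.valid = true;
        s.payload = data0;
    } else if (ok1) {
        s.valid = true;
        s.payload = data1;
    }
    /* Both copies bad: a double error, outside the single-error guarantee. */
    return s;
}
```

The same selection applies to an egress Dataslice choosing between its two Crossbars, where a cell marked as corrupted plays the role of a failed checksum.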

It should be clear from Figure 6 that only the Dataslices and EPPs have redundant connections. An individual Crossbar or Scheduler cannot compensate for information that is received with a checksum error. The following discussion reviews how these two types of devices are affected by receiving corrupted information.

If a Crossbar detects a checksum error, it simply marks the cell as corrupted. This information is passed to the egress Dataslice, which ignores that link and uses the information from the other Crossbar.

The Scheduler is more complicated because it must maintain accurate state information on the occupancy of all queues as well as the backpressure status of some of the egress queues. If a Scheduler receives a checksum error, it must effectively remove itself from the system.

Consider the simple configuration illustrated in Figure 7. Scheduler 0 sees a checksum error on information it receives from EPP 1, so Scheduler 0 now has incorrect state information. It immediately disables all of its outbound links so that EPP 1 and EPP 2 know that Scheduler 0 should be ignored. From then on, the EPPs accept information only from Scheduler 1.

Figure 7. Simple Redundant Scheduler Configuration

[Diagram: EPP 1 and EPP 2, each connected to Scheduler 0 and Scheduler 1.]
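The Figure 7 scenario can be modeled in a few lines of C. This is a deliberately simplified sketch, assuming the "outbound links enabled" state of both Schedulers can be summarized as a bitmask; in hardware each EPP observes the state of each link individually. The names `scheduler_after_rx` and `epp_pick_scheduler` are hypothetical.

```c
#include <stdbool.h>

#define NUM_SCHEDULERS 2u

/* Bit i of `enabled` set = Scheduler i still drives its outbound links. */

/* Scheduler side: after a checksum error its queue-occupancy and
 * backpressure state cannot be trusted, so it disables all outbound links. */
unsigned scheduler_after_rx(unsigned enabled, unsigned sched, bool checksum_ok)
{
    if (!checksum_ok && sched < NUM_SCHEDULERS)
        enabled &= ~(1u << sched);
    return enabled;
}

/* EPP side: take grants from the first Scheduler whose links are still up.
 * Healthy Schedulers produce identical grants, so the choice is arbitrary.
 * Returns -1 if no Scheduler is usable. */
int epp_pick_scheduler(unsigned enabled)
{
    for (unsigned i = 0u; i < NUM_SCHEDULERS; i++)
        if (enabled & (1u << i))
            return (int)i;
    return -1;
}
```

Starting from both Schedulers healthy (mask 0x3), a bad checksum seen by Scheduler 0 leaves mask 0x2, and both EPPs then follow Scheduler 1.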

If a new Scheduler 0 board is inserted in the system, its internal state must be updated to match that of Scheduler 1. This is done using a sequence of actions controlled by the ETT1 CPU. In essence, the linecards are temporarily stopped from sending new cells, the state within each EPP is downloaded into the Schedulers, and the linecards are then restarted. The entire process is very rapid (much less than 1 ms), but it does suspend best-effort traffic for a brief time.
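The three-step resynchronization can be illustrated with a toy C model. It is only a sketch of the idea that each EPP holds the authoritative queue state: the real sequence is a series of CPU-controlled register operations not described here, and the structure `system_model`, the function `resync_schedulers`, and the queue count are hypothetical.

```c
#include <stdbool.h>
#include <string.h>

#define NUM_EPPS       2u  /* as in Figure 7 */
#define NUM_QUEUES     4u  /* illustrative only */
#define NUM_SCHEDULERS 2u

struct sched_state {
    unsigned occupancy[NUM_EPPS][NUM_QUEUES];
};

struct system_model {
    bool linecards_paused;
    unsigned epp_occupancy[NUM_EPPS][NUM_QUEUES]; /* state held by each EPP */
    struct sched_state sched[NUM_SCHEDULERS];
};

void resync_schedulers(struct system_model *sys)
{
    /* 1. Stop new cells so that the EPP state cannot change underneath us. */
    sys->linecards_paused = true;

    /* 2. Download every EPP's state into every Scheduler, which overwrites
     *    whatever stale state the newly inserted board holds. */
    for (unsigned s = 0u; s < NUM_SCHEDULERS; s++)
        memcpy(sys->sched[s].occupancy, sys->epp_occupancy,
               sizeof sys->epp_occupancy);

    /* 3. Restart the linecards; both Schedulers now agree. */
    sys->linecards_paused = false;
}
```

Pausing before the copy is what makes a plain copy sufficient: with no new requests arriving, the state downloaded from the EPPs is consistent.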


1.2.9 System Configuration Options

Most of the previous sections have assumed a particular switch configuration: 32 OC-192c ports, 64-byte payload cells, and full redundancy. The ETT1 Chip Set has four aspects that can be configured according to the user’s requirements. Two of these have already been described: quad OC-48c versus single OC-192c ports, and redundant versus non-redundant systems. The other two, payload size and number of ports, are described in this section.

NOTE: All four aspects are orthogonal, so the choice made for any one aspect does not limit the choices for the other three.

1.2.9.1 Payload: 64 bytes or 76 bytes

The ETT1 core and LCS protocol are designed to forward fixed-length cells. Each LCS cell consists of an 8-byte LCS header and a payload, which can be either 64 bytes or 76 bytes. The choice of payload size depends on the linecard traffic: 53-byte ATM cells can probably use a 64-byte payload, whereas fragmented IP packets might be carried more efficiently with a 76-byte payload.
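The efficiency trade-off can be made concrete by counting cells and link bytes for a given packet length. This is a sketch, assuming the linecard fragments a packet into full payloads with no extra per-fragment header of its own (any such linecard overhead is not modeled); the function names are hypothetical.

```c
/* Each LCS cell carries an 8-byte LCS header plus a 64- or 76-byte payload. */
#define LCS_HEADER_BYTES 8u

/* Cells needed to carry `len` bytes in payloads of `payload` bytes. */
unsigned cells_for_packet(unsigned len, unsigned payload)
{
    return (len + payload - 1u) / payload;
}

/* Bytes sent on the linecard link, counting each cell's LCS header. */
unsigned link_bytes_for_packet(unsigned len, unsigned payload)
{
    return cells_for_packet(len, payload) * (LCS_HEADER_BYTES + payload);
}
```

A 53-byte ATM cell fits in one 64-byte payload (72 link bytes). A 152-byte fragment needs three 64-byte cells (216 link bytes) but only two 76-byte cells (168 link bytes); a 128-byte packet goes the other way (144 versus 168 link bytes). The better choice therefore depends on the packet-length mix.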

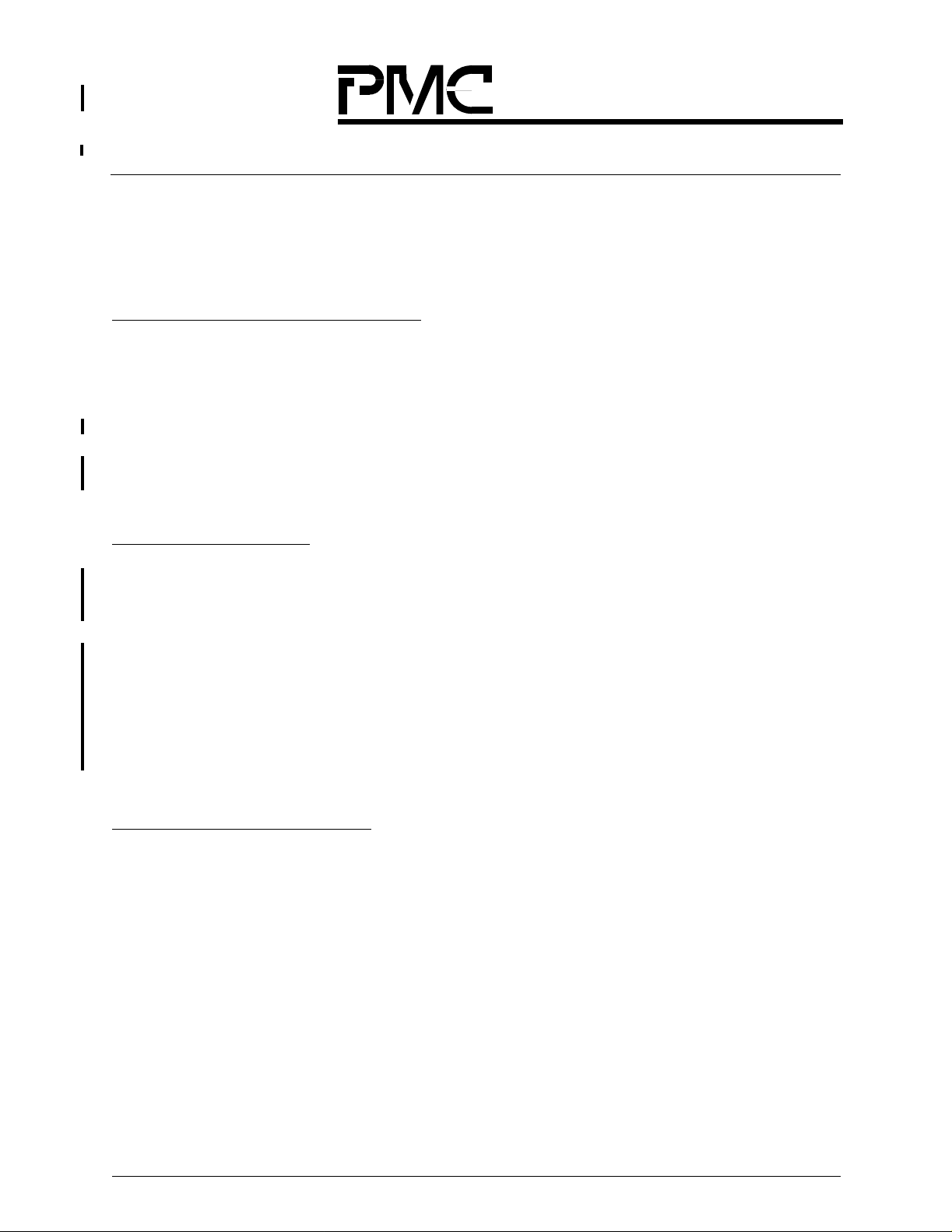

This flexibility in cell size is due to the way the ETT1 Chip Set slices the cells. Figure 8 shows how an LCS

cell is sliced across the Dataslices and Crossbars.


Figure 8. LCS Cell Sliced Across Dataslices and Crossbars

[Diagram: an LCS cell (8-byte LCS header, 64-byte payload, and an optional extra 12 bytes at 6 bytes per slice) is sliced across the Dataslices of an ETT1 port (Slices 0,1; 2,3; ... 10,11; 12,13), carried through Crossbars 0 to 13, and delivered to the corresponding Dataslices of another ETT1 port.]

The 64-byte payload cell requires six Dataslices per port and 12 Crossbars (32-port, non-redundant). The 76-byte payload cell requires seven Dataslices per port and 14 Crossbars. The EPP supports the seventh Dataslice.

Because the extra data is handled by slicing the cell across an additional Dataslice, it does not affect the clock frequency of the Chip Set. The only effect is that the link between the ETT1 port and the linecard must go up from 18 Gbaud to 21 Gbaud, in proportion to the increase from 12 to 14 slices (18 × 14/12 = 21). This is done by making the link wider, not faster.
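The slice arithmetic behind these figures can be written out in C. This is a worked sketch, assuming every slice carries 6 bytes (inferred from Figure 8: 72 bytes over 12 slices and 84 bytes over 14 slices), that each Dataslice handles two slices, and that a 32-port non-redundant core uses one Crossbar per slice; the function names are hypothetical.

```c
#define LCS_HEADER_BYTES     8u
#define BYTES_PER_SLICE      6u   /* inferred from Figure 8 */
#define SLICES_PER_DATASLICE 2u   /* e.g. Slices 0,1 on one Dataslice */
#define BASE_SLICES          12u  /* 64-byte payload */
#define BASE_LINK_GBAUD      18u  /* linecard link for 64-byte payload */

unsigned slices_for_payload(unsigned payload)
{
    return (LCS_HEADER_BYTES + payload) / BYTES_PER_SLICE;
}

unsigned dataslices_per_port(unsigned payload)
{
    return slices_for_payload(payload) / SLICES_PER_DATASLICE;
}

/* One Crossbar per slice in a 32-port, non-redundant core. */
unsigned crossbars_32port_nonredundant(unsigned payload)
{
    return slices_for_payload(payload);
}

/* The link grows in width with the slice count; the clock is unchanged. */
unsigned link_gbaud(unsigned payload)
{
    return BASE_LINK_GBAUD * slices_for_payload(payload) / BASE_SLICES;
}
```

With a 64-byte payload this gives 12 slices, 6 Dataslices, 12 Crossbars, and 18 Gbaud; with 76 bytes, 14 slices, 7 Dataslices, 14 Crossbars, and 21 Gbaud, matching the text.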

1.2.9.2 8, 16, or 32 ports

The ETT1 core can support a maximum of 32 ports. Smaller configurations can be supported by simply not using some of the ports. However, if the maximum system size is 8 or 16 ports, then some


cost savings can be achieved by using fewer Crossbar devices. A Crossbar can be configured for an 8-, 16-, or 32-port core, as shown in Table 1.

Table 1. Crossbar Configurations

| Number of OC-192c ports | Crossbars required (non-redundant) | Crossbars required (redundant) |
|---|---|---|
| 1 to 8 | 3 + 1 Flow Control | 6 + 2 Flow Control |
| 9 to 16 | 6 + 1 Flow Control | 12 + 2 Flow Control |
| 17 to 32 | 12 + 2 Flow Control | 24 + 4 Flow Control |

The reduction in the number of Crossbars is achieved by having a single port use more than one connection on each Crossbar. In a 32-port system, each port uses one connection to each Crossbar device; in an 8-port system, each port uses four connections on each Crossbar. (This does NOT apply to Flow Control Crossbars; each port always uses only one connection.)
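Table 1 can be expressed as a lookup function for sizing a system. This is a sketch of the table as given, which matches the 64-byte payload figures earlier in this section; the 76-byte case is not covered. The `links_per_port` value for 16 ports (two) is inferred from the table (12 slices spread over 6 Crossbars), not stated in the text, and `crossbars_required` is a hypothetical name.

```c
#include <stdbool.h>

struct crossbar_count {
    unsigned data;           /* data Crossbars */
    unsigned flow_control;   /* Flow Control Crossbars */
    unsigned links_per_port; /* connections per port on each data Crossbar */
};

/* Values from Table 1; all zeros for an unsupported port count.
 * Flow Control Crossbars always use one connection per port. */
struct crossbar_count crossbars_required(unsigned ports, bool redundant)
{
    struct crossbar_count c = { 0u, 0u, 0u };

    if (ports >= 1u && ports <= 8u) {
        c.data = 3u;  c.flow_control = 1u; c.links_per_port = 4u;
    } else if (ports >= 9u && ports <= 16u) {
        c.data = 6u;  c.flow_control = 1u; c.links_per_port = 2u; /* inferred */
    } else if (ports >= 17u && ports <= 32u) {
        c.data = 12u; c.flow_control = 2u; c.links_per_port = 1u;
    } else {
        return c;
    }
    if (redundant) {           /* redundancy duplicates every Crossbar */
        c.data *= 2u;
        c.flow_control *= 2u;
    }
    return c;
}
```

For example, a redundant 16-port system needs 12 data Crossbars plus 2 Flow Control Crossbars.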

1.3 PRIORITIZED BEST-EFFORT QUEUE MODEL

The ETT1 switch core has been designed to maximize cell throughput while keeping latency small and providing “fair” throughput among all ports. The ETT1 core supports four levels of strict priority: level 0 is the highest and level 3 the lowest. All other conditions being equal, the core always forwards a higher-priority cell before a lower-priority cell. Furthermore, the core is fair to all inputs. This fairness is very difficult to quantify and is meaningful only over time periods of many cells. Over short time periods (tens or perhaps hundreds of cells) there may be temporary unfairness between ports.
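To illustrate the difference between strict priority and long-term fairness, here is a generic single-output arbiter in C: it always serves the highest non-empty priority level and rotates among inputs within that level. It is not the ETT1 Scheduler's algorithm, which this section does not describe (and which also involves a pipeline and hole requests); the function `arbitrate` and its bitmask interface are assumptions for illustration.

```c
#include <stdint.h>

#define NUM_INPUTS     32u
#define NUM_PRIORITIES 4u  /* level 0 highest, level 3 lowest */

/* req[p] has bit i set if input i holds a cell of priority p for this
 * output. Scanning starts at rr_start; the caller sets rr_start to
 * winner + 1 next time so inputs take turns over many cells.
 * Returns the input to serve, or -1 if there are no requests. */
int arbitrate(const uint32_t req[NUM_PRIORITIES], unsigned rr_start)
{
    for (unsigned p = 0u; p < NUM_PRIORITIES; p++) {  /* strict priority */
        if (req[p] == 0u)
            continue;
        for (unsigned k = 0u; k < NUM_INPUTS; k++) {   /* round-robin */
            unsigned i = (rr_start + k) % NUM_INPUTS;
            if (req[p] & (UINT32_C(1) << i))
                return (int)i;
        }
    }
    return -1;
}
```

A rotating pointer like this is fair only on average: over a handful of cells one input can win several times in a row, which mirrors the short-term unfairness noted above.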

A cell of a lower priority may pass a cell of a higher priority under certain conditions:

1. Since the scheduling pipeline is shorter for lower priorities, a cell of a lower priority may emerge

from the switch sooner than a cell of a higher priority if the lower-priority cell is sent immediately

after the higher-priority cell.

2. Hole requests for a higher priority can allow cells of a lower priority to pass cells of the higher

priority.

3. If the linecard responds to LCS grants faster than required to meet the system round trip time