Crestron Surround Sound

Primer

This document was prepared and written by the Technical Documentation Department at:

Crestron Electronics, Inc.

15 Volvo Drive

Rockleigh, NJ 07647

1-888-CRESTRON

Manufactured under license from Dolby Laboratories. “Dolby”, “Pro Logic”, “Pro Logic II”, “Dolby Digital”, “Dolby Digital 5.1”, and the double-D symbol are trademarks of Dolby Laboratories.

Manufactured under license from Digital Theater Systems, Inc. “DTS”, “DTS Digital Surround Sound”, “DTS-ES Extended Surround”, “DTS Virtual 5.1”, “NEO:5”, and “NEO:6” are trademarks of Digital Theater Systems, Inc.

All other brand names, product names and trademarks are the property of their respective owners.

©2003 Crestron Electronics, Inc.

Crestron Surround Sound Primer

Contents

Crestron Surround Sound
  Sound, Hearing and the Limits of Perception
    Frequency
    Wavelength
    Volume (Amplitude)
    VU and dB
    Perception
    Timbre
  Psychoacoustics
    Localization
    Interaural Time Difference
    Interaural Intensity Difference
    Pinna Filtering
    The Precedence Effect
    Reverberation and Echoes
    Temporal Masking
  A History of Surround Sound
    Early Surround
    Quadraphonic
    Dolby
    Dolby Pro Logic
    Dolby Pro Logic II
    Dolby Digital
    Dolby Digital Surround EX
    DTS
    DTS-ES
    DTS-Neo 6
    Pulse Code Modulation
  Speakers
    Surround Speakers
    Subwoofers
    Bass Management
    Large and Small
    Speaker Set-up Suggestions
    Standing Waves
    Phase
  Surround Sound Speaker Placement
    Front Speaker Placement
    Surround Speaker Placement Optimized for Movie Soundtracks
    Surround EX Speaker Placement
    Flat Response
    Equalization
    Required Test Equipment

Primer – DOC. 6122 Surround Sound • i

    Setup Procedures
    What We Hear in a Room
    Speakers Placed in Cabinets
    Stereo Imaging
    About Surround Sound
    Subwoofer Placement
  Equalizers
    Graphic Equalizer
    Parametric Audio Filters
    Parametric GUI
  Glossary
  Index

Crestron Surround Sound

Sound, Hearing and the Limits of Perception

Objects produce sound when they vibrate in an elastic medium. Solids, liquids and gases all conduct sound. When something vibrates in the atmosphere, it pushes the air around it, creating an acoustic compression wave. The trailing edge of this wave creates a drop in pressure, called rarefaction.

Sound waves, which travel at about 1,086 feet per second (331.1 meters per second) in air at the freezing point and somewhat faster in warmer air, have three basic properties: frequency, wavelength, and volume (amplitude).

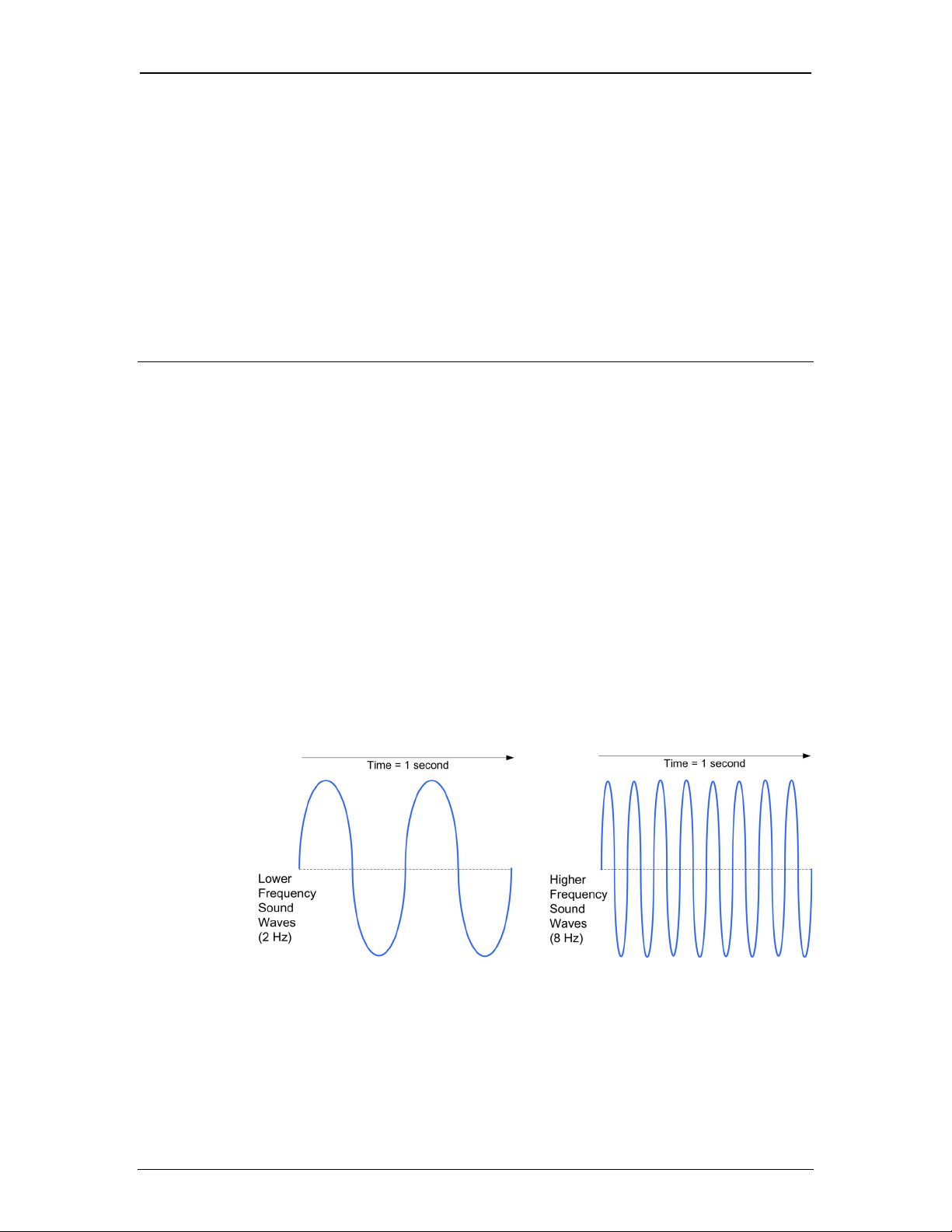

Frequency (Hz)

Frequency

Frequency is the number of complete sound wave cycles, each consisting of one positive and one negative half, that repeat in one second. Frequency is measured in hertz (Hz). A 20 Hz tone completes 20 cycles each second (20 distinct waves passing by in one second). A 20 kHz (kilohertz) tone completes 20,000 of these cycles every second.

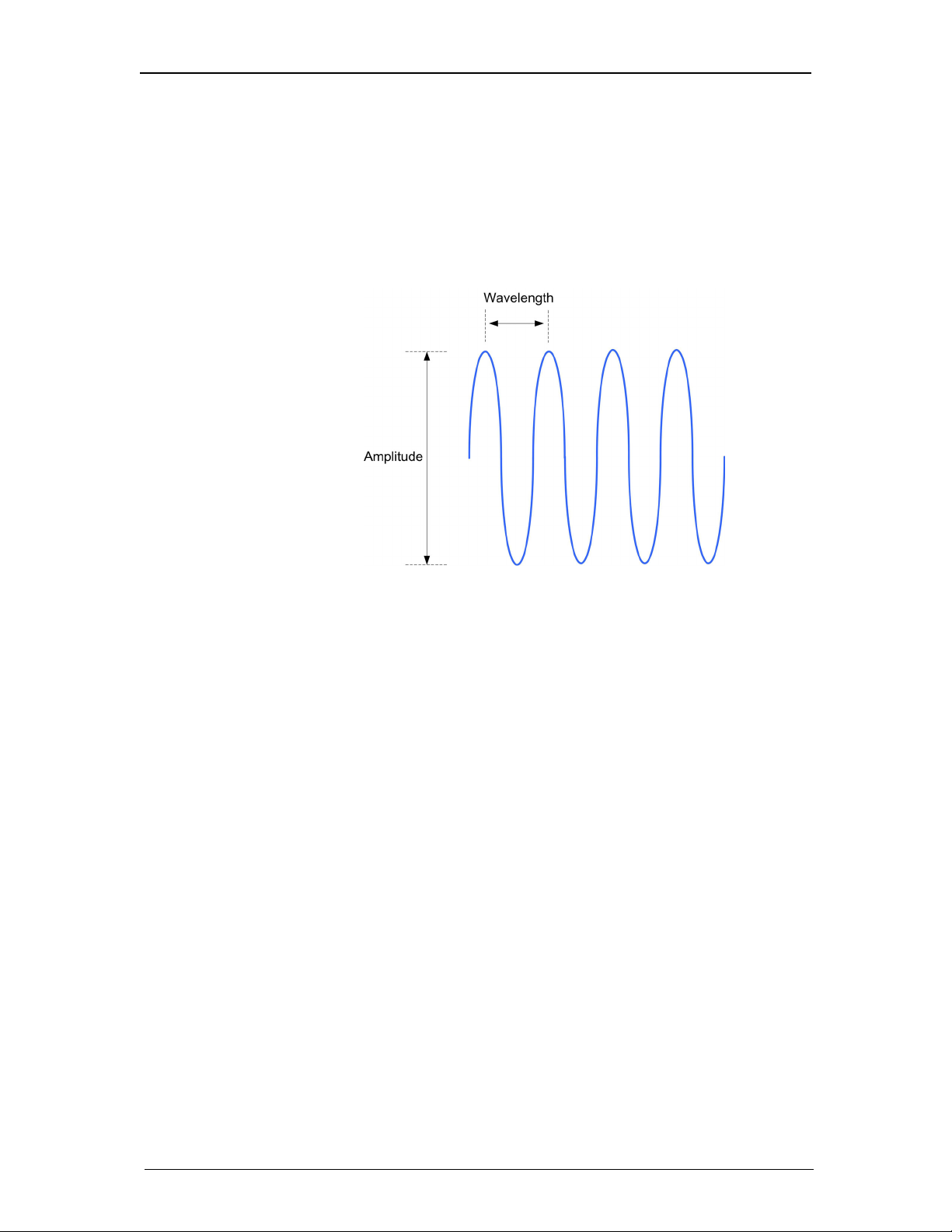

Wavelength

Wavelength is the distance between two points on consecutive waves.

It is measured from the same position on a wave in two consecutive

cycles. Wavelength can be measured by taking the horizontal distance

from a point (at the peak in our example) of one wave cycle to the same

point at the peak in the second wave cycle.

Low frequency sounds have long wavelengths and high frequency sounds have short wavelengths. The length of a 20 Hz sound wave is about 54 to 56 feet, depending on air temperature. Speakers that produce low frequencies must therefore be large, with long excursions (the distance a speaker cone moves in and out), to produce large and long waves. Speakers producing high frequency sounds must be small enough to move rapidly and produce the very short waves of high frequencies (about two thirds of an inch at 20 kHz).
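The relationship between frequency and wavelength can be checked with a short calculation: wavelength equals the speed of sound divided by the frequency. The following is a minimal sketch, assuming the 1,086 feet per second figure quoted in this primer. The function name is illustrative only. At this speed a 20 Hz wave works out to a little over 54 feet; at room temperature, where sound travels closer to 1,125 feet per second (an assumption not stated in the text), the result is about 56 feet.

```python
# Speed of sound in air quoted in this primer (feet per second).
SPEED_OF_SOUND_FT_PER_S = 1086.0


def wavelength_ft(frequency_hz, speed_ft_per_s=SPEED_OF_SOUND_FT_PER_S):
    """Return the wavelength in feet of a tone at the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_ft_per_s / frequency_hz


# Print the wavelength at the two ends of the audible range and at 1 kHz.
for frequency in (20, 1000, 20000):
    feet = wavelength_ft(frequency)
    print(f"{frequency} Hz: {feet:.4f} ft ({feet * 12:.2f} in)")
```

Passing a different speed as the second argument shows how the wavelength of the same tone changes with air temperature.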

Wavelength and Amplitude

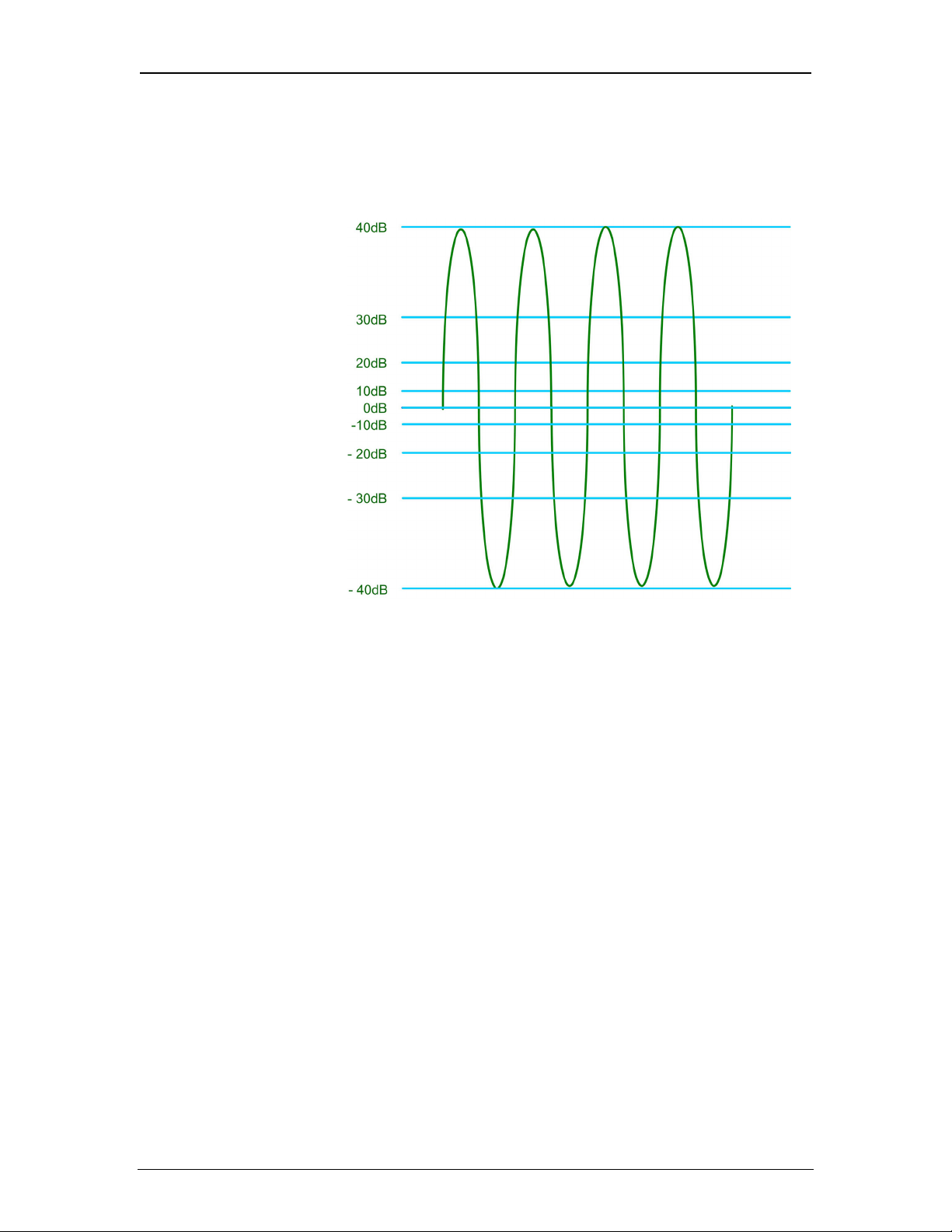

Volume (Amplitude)

Volume is the relative loudness or power of an audio signal resulting

from the amplitude of a sound wave. Amplitude is the vertical distance

from zero to the highest point or peak. Sound waves with higher

amplitudes carry more acoustic power and therefore higher volume.

Volume is measured in units called decibels (dB). A dB is one-tenth of

a Bel, named in part after Alexander Graham Bell (the “B” is

capitalized for Bell) and is used in both audio and video applications.

Decibel is a logarithmic scale measuring the intensity (pressure level)

of sound. Decibels are ratios, not fixed quantities. Decibels are also

referred to as a measurement of "gain" with respect to amplifiers (refer

to the glossary).

For the non-linear human ear, an increase of ten decibels (10 dB) is perceived as roughly a doubling of loudness, even though it represents ten times the acoustic power. 20 dB sounds about twice as loud as 10 dB, and 30 dB about twice as loud as 20 dB. 40 dB sounds about twice as loud as 30 dB and four times as loud as 20 dB.
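The decibel arithmetic can be sketched in a few lines. This example assumes the standard definitions (10 times the base-10 logarithm of a power ratio, 20 times the logarithm of a pressure ratio) and treats "10 dB is heard as about twice as loud" as a rule of thumb rather than an exact law. The function names are illustrative.

```python
import math


def power_ratio_to_db(ratio):
    """Convert a ratio of two acoustic or electrical powers to decibels."""
    return 10.0 * math.log10(ratio)


def pressure_ratio_to_db(ratio):
    """Convert a ratio of two sound pressures (or voltages) to decibels."""
    return 20.0 * math.log10(ratio)


def perceived_loudness_ratio(db_change):
    """Rule of thumb: every 10 dB increase is heard as about twice as loud."""
    return 2.0 ** (db_change / 10.0)


# A 20 dB increase: 100 times the power, heard as roughly 4 times as loud.
print(power_ratio_to_db(100.0), perceived_loudness_ratio(20.0))
```

Because decibels are ratios, the same functions apply to amplifier gain and signal-to-noise ratio as well as to sound level.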

With some kinds of equipment, such as microphones, analog tape

recorders, or LP playback systems, the dB measurement is "weighted"

as to audibility, because the ear is more sensitive to particular

frequencies. Two common corrections for hearing characteristics are

the A-weighted and the more rigorous C-weighted scales, indicated as

dBA or dBC, respectively.

The term decibel is also used in various other measurements such as signal-to-noise ratio, gain and dynamic headroom. In these instances, decibel refers to the measurement of signal increase or signal strength instead of sound pressure level, but the logarithmic scale concept remains the same.

Decibel Scale

VU and dB

VU and dB meters both measure the audio power and they both use

logarithmic scales to report that power. In both measures, the zero is

chosen as the highest power for which distortion is acceptable.

Where VU and dB differ is in how they measure audio power. VU is

short for "volume units" and it is a measure of average audio power. A

VU meter responds relatively slowly and considers the sound volume

over a period of time. Its zero is set to a 1% total harmonic distortion

level in the recorded signal.

Decibel (dB) meters measure instantaneous audio power. A dB meter

responds very rapidly and considers the audio power at each instant. Its

zero is set to a 3% total harmonic distortion level. Because of these

differences in zero definitions, zero on the dB meter is approximately

+8 on the VU meter.

Perception

The human ear can usually hear sounds in the range of 20 Hz to 20 kHz, but is most sensitive to sounds from 2 kHz to 4 kHz, the same range as the human voice. With age, this range decreases, especially at the upper limit. Very low frequencies (below 20 Hz) cannot be heard, but loud low frequency sounds can be felt as vibrations on the skin. The frequency resolution of the ear is, in the middle range, about 2 Hz: changes in pitch larger than 2 Hz are noticeable. Even smaller pitch differences can be perceived when two tones sound together and interfere, because the interference is heard as a beat at the difference frequency.

The lower limit of audibility is defined as 0 dB; there is no defined upper limit. The upper limit is more a question of where the ear will be physically harmed, and this depends on the length of exposure to the sound. The ear can be exposed to short periods of 120 dB sound without harm, but long exposure to 80 dB sounds can do permanent damage. The human voice range is about 68-76 dB; a jet plane creates about 120 dB of sound.

Sound waves radiate out from the source in straight lines regardless of frequency or wavelength. But low frequency (long wavelength) sounds do not fit in confined spaces. They lose their directional character, which is why you only need one subwoofer for a sound system: you can't tell where the lowest frequency sounds are coming from when the sound is confined in a room.

The model of sound so far described is a simplified version, operating

in just one dimension (as opposed to the real world three-dimensions),

and assumes that the vibrating particles of air are held semi-rigidly. In

the real world, air molecules are in constant random motion in all three

dimensions. Air pressure constantly changes as the molecules collide

and rebound from each other and from objects in the environment. This

random motion creates a background level of noise and helps define the

lower limit of hearing.

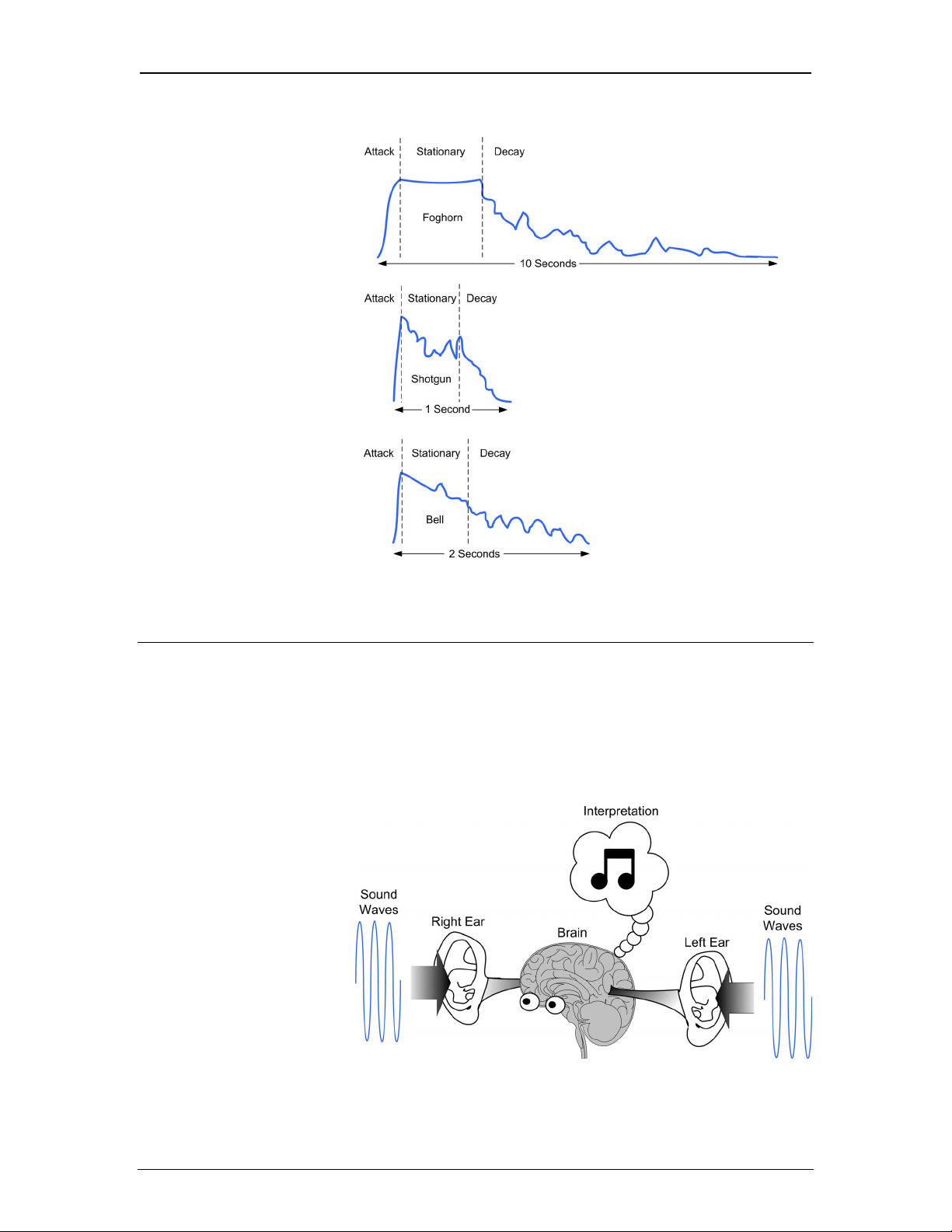

Timbre

Musical timbre is a property of sound. It is composed of spectral

components containing perceptual cues, and can be described by

Fourier series coefficients. The spectral “envelope” of a sound, the

profile of the Fourier series, is the sound amplitude behavior over time.

The pattern that this sound pressure variation creates is the waveform

of the sound.

Timbre is the temporal evolution of the spectral envelope. This

envelope consists of an "attack" portion at the beginning or onset of the

sound, a sustained portion (stationary state), and a decay portion.

Timbre has psychoacoustic properties. A number of transient

fluctuations occur during the initial part (attack), for example, the

moment a violinist puts the bow to the string. These are called onset

transients and are important in identifying the sound and its location in

space.

Waveform Envelope Examples:

Psychoacoustics

Psychoacoustics is the study of human auditory perception. It includes

the physical characteristics of sound waves, the physiological structure

of the ear, the electrical signal from the ear to the brain, and the

subjective interpretation of the listener. Understanding psychoacoustics

is essential to creating surround sound.

Localization

Our stereophonic ears can discern azimuth or horizontal (left-right)

directionality, and zenith or vertical (up-down) directionality. We

perceive directionality using the localization mechanisms of: interaural

time difference, interaural intensity difference, pinna filtering, and

motion parallax.

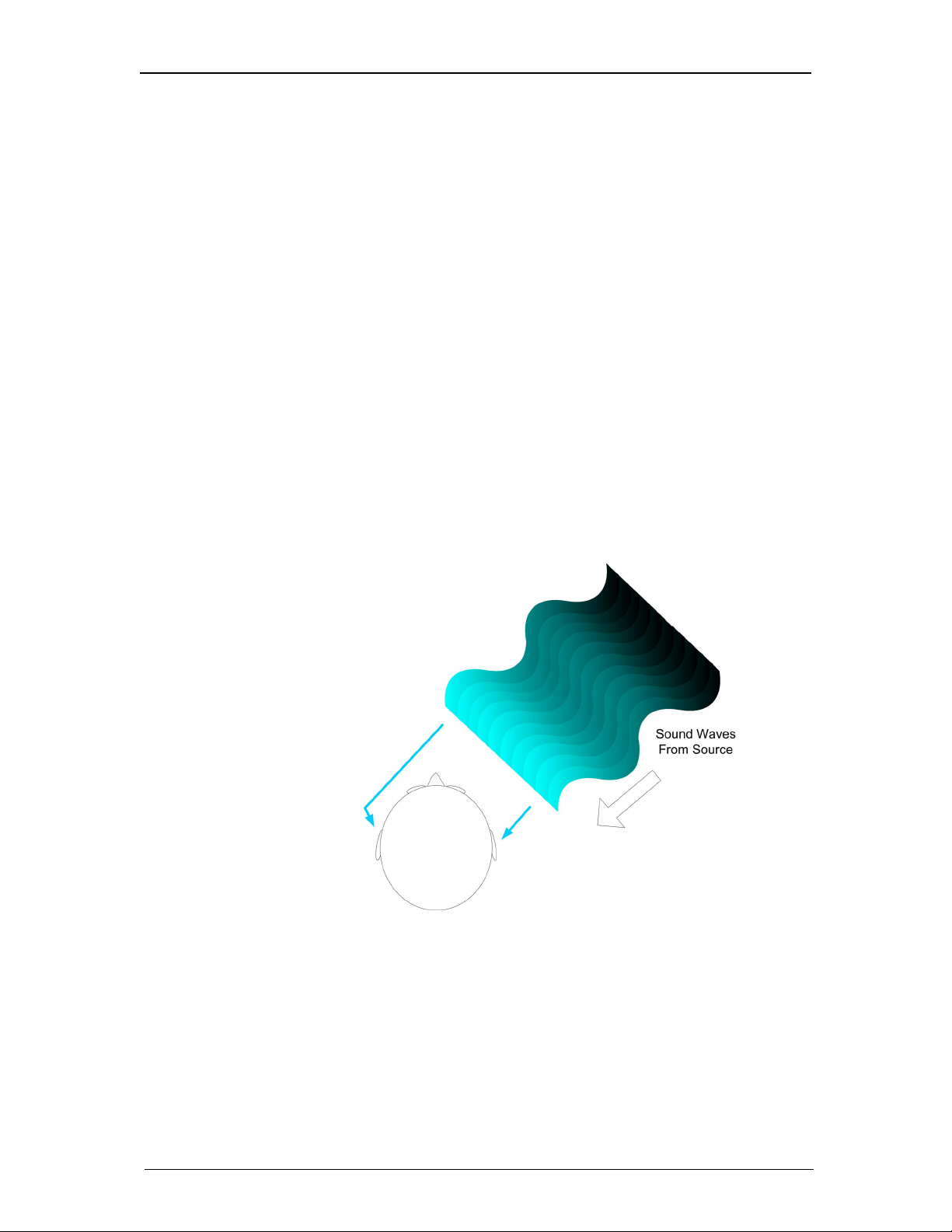

Interaural Time Difference

The horizontal position of a sound is determined by comparing the information coming from the left and right ears. The Interaural Time Difference is the difference in the arrival time of a sound at each ear. Because the ears are about six inches apart, a sound that is not equally distant from the two ears reaches the farther ear slightly later than the nearer one. Although these time differences are very slight, the brain extracts precise directional information from them.
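To give a sense of scale, the size of the interaural time difference can be estimated with a simplified model. This sketch assumes a distant source, a straight-line path between the ears, the six-inch ear spacing and the 1,086 feet per second speed of sound quoted in this primer. It ignores the curvature of the head, so it is an approximation only, and the function name is illustrative.

```python
import math

EAR_SPACING_FT = 0.5               # approximate six-inch separation of the ears
SPEED_OF_SOUND_FT_PER_S = 1086.0   # figure quoted in this primer


def interaural_time_difference_ms(azimuth_deg,
                                  ear_spacing_ft=EAR_SPACING_FT,
                                  speed_ft_per_s=SPEED_OF_SOUND_FT_PER_S):
    """Estimate the arrival-time difference between the ears in milliseconds.

    azimuth_deg is the horizontal angle of the source: 0 is straight ahead,
    90 is directly to one side. The extra path to the far ear is modeled
    as ear_spacing * sin(azimuth), a simplification that ignores the head.
    """
    extra_path_ft = ear_spacing_ft * math.sin(math.radians(azimuth_deg))
    return extra_path_ft / speed_ft_per_s * 1000.0


for angle in (0, 30, 60, 90):
    print(f"{angle:3d} degrees: {interaural_time_difference_ms(angle):.3f} ms")
```

Under these assumptions the largest difference, for a source directly to one side, is a little under half a millisecond.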

Human listeners are able to accurately locate the sources of sound from

almost any direction, even from above when interaural differences are

almost zero. Listeners are also capable of locating sound sources in a

room when the reflections from the walls are louder than the sound

coming directly from the source.

Interaural Time Difference

Interaural Intensity Difference

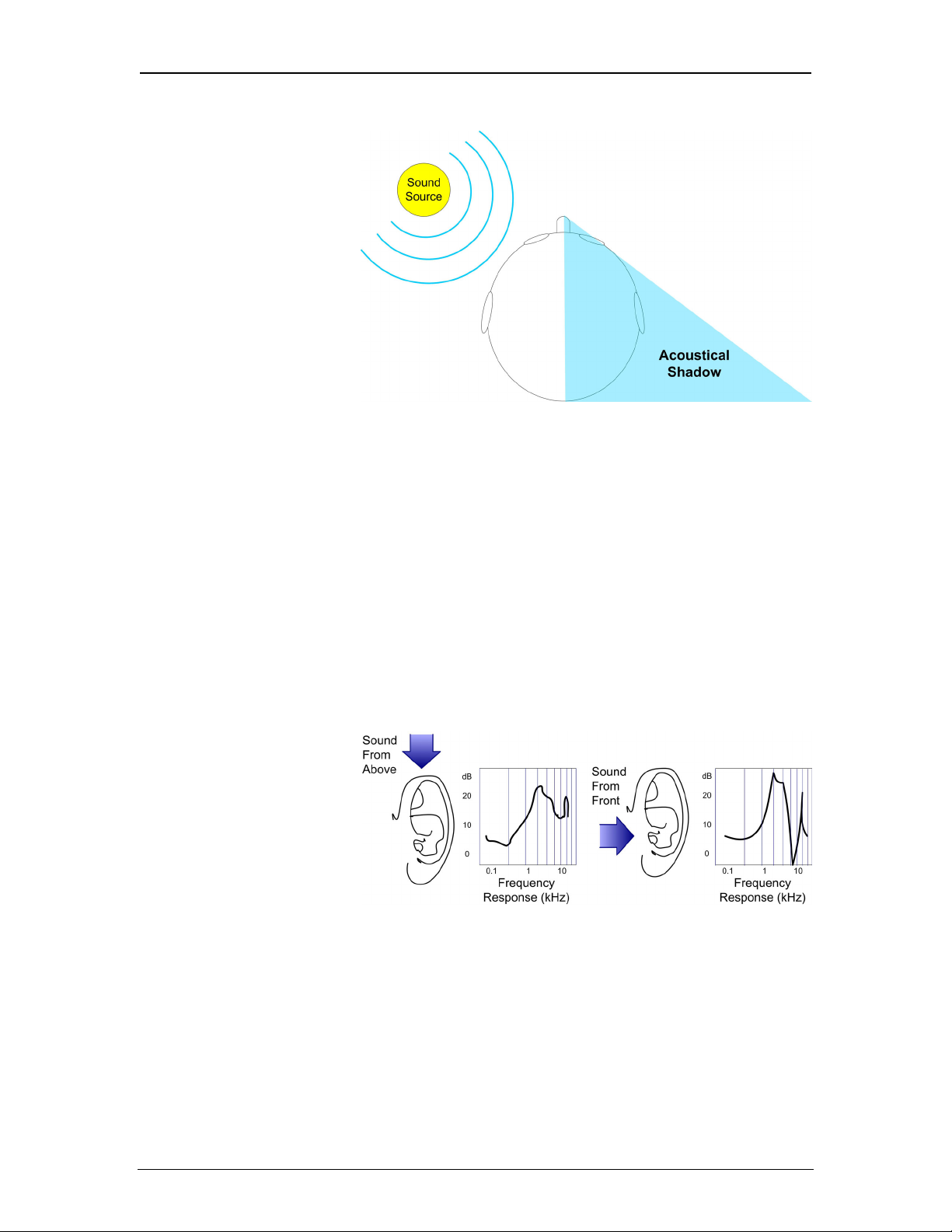

The head, shoulders and upper torso create a sound barrier at one ear or the other. The difference in level caused by this acoustical shadow is called the Interaural Intensity Difference.

Interaural Intensity Difference

For example, a sound coming from the extreme left has a lowered intensity in the right ear in addition to a slight time delay. The reduced intensity results from the additional distance plus the effect of the acoustical shadow. The amount of this effect depends on frequency. It is useful for high frequencies, down to the frequency whose wavelength is twice the distance between the ears (about 1 kHz). Lower frequencies, with longer wavelengths, bend around obstructions.
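The "about 1 kHz" figure follows from the ear spacing. A minimal sketch, assuming the six-inch ear separation and the 1,086 feet per second speed of sound used elsewhere in this primer (the function name is illustrative):

```python
def shadow_threshold_hz(ear_spacing_ft=0.5, speed_ft_per_s=1086.0):
    """Frequency whose wavelength equals twice the distance between the ears."""
    wavelength_ft = 2.0 * ear_spacing_ft
    return speed_ft_per_s / wavelength_ft


print(f"Head shadow is effective above roughly {shadow_threshold_hz():.0f} Hz")
```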

Pinna Filtering

The pinna is the outer part of the ear. Its forward-pointing position and complex curves affect the way sound is heard. A sound coming from behind or above bounces off the pinna in a different way than one coming from in front or below. When the indirect (reflected) sounds from the pinna combine with the direct sounds, the frequency content of the sound is altered.

Pinna Filtering

The brain, interpreting the altered sounds, produces directional

information. To provide additional cues, small head movements

(motion parallax) allow the brain to judge relative differences.

The Precedence Effect

The precedence effect is a listening strategy unconsciously used to cope with distorted localization cues in a confined space. Localization judgments are based on the first arriving sound waves at the beginning of a sound. This strategy is known as the precedence effect because the earliest arriving sound wave is given precedence over the subsequent reflections and reverberations.

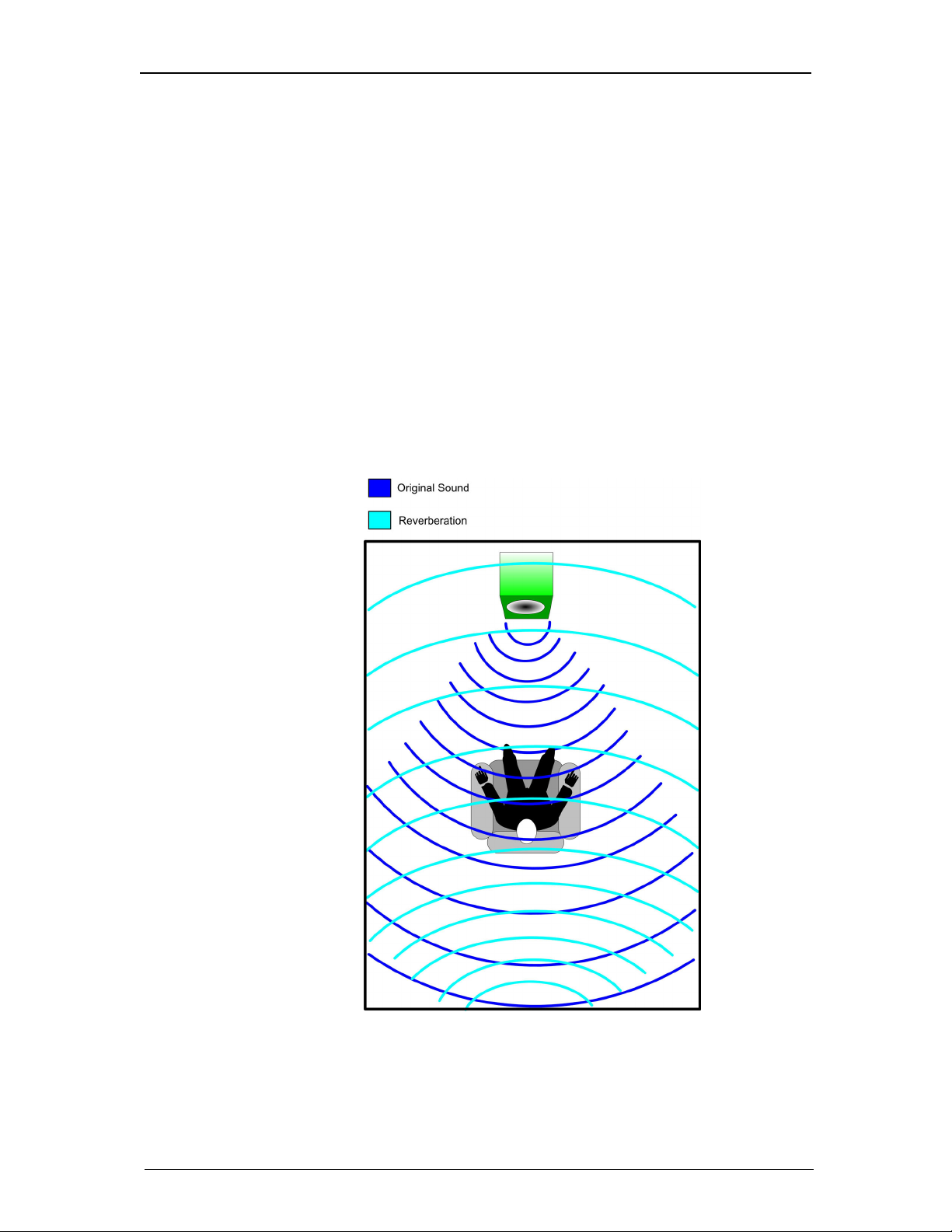

Reverberation and Echoes

The ratio of the direct to reverberant energy is a primary cue for range

and space. Very short delays cause a sound image to shift spatially and

color the tone. Longer delays contribute to a spatial impression of

reverberation. Reverberation can also make speech indistinct by

masking the onset transients.

Sound intensity decreases inversely with the square of the distance. But in an

ordinary room, the sound is reflected and scattered against room

boundaries and objects within the room. Reverberation is essentially an

echo that increases by bouncing off of hard surfaces. Reverberations

are dampened when absorbed by soft materials such as rugs, carpet and

sofas. These reflections are most noticeable when the time delay

between the direct sound and the reverberation gets longer than 30 to

50 ms, the echo threshold.
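Both ideas in this passage, the inverse-square falloff and the echo threshold, reduce to simple arithmetic. The sketch below assumes free-field conditions for the level calculation (no room reflections), the 1,086 feet per second speed of sound quoted earlier, and the lower 30 ms end of the echo threshold range as a default. The function names and the default threshold are illustrative choices, not part of the original text.

```python
import math

SPEED_OF_SOUND_FT_PER_S = 1086.0  # figure quoted in this primer


def level_change_db(distance_from, distance_to):
    """Free-field level change in dB when moving between two distances.

    Intensity falls with the square of distance, which works out to
    about 6 dB less for every doubling of distance.
    """
    return -20.0 * math.log10(distance_to / distance_from)


def reflection_delay_ms(direct_path_ft, reflected_path_ft,
                        speed_ft_per_s=SPEED_OF_SOUND_FT_PER_S):
    """Delay of a reflection relative to the direct sound, in milliseconds."""
    return (reflected_path_ft - direct_path_ft) / speed_ft_per_s * 1000.0


def is_heard_as_echo(delay_ms, threshold_ms=30.0):
    """True if the delay exceeds the assumed echo threshold."""
    return delay_ms > threshold_ms


delay = reflection_delay_ms(direct_path_ft=10.0, reflected_path_ft=25.0)
print(f"{level_change_db(1.0, 2.0):.1f} dB per doubling of distance")
print(f"reflection arrives {delay:.1f} ms late, echo: {is_heard_as_echo(delay)}")
```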

Reverberations

Acoustic designers place importance on early reflections (arriving within the first 80 ms), which reinforce the direct sound (as long as the angle of reflection is not too wide). Reflections arriving after 80 ms add reverberant energy, which gives the sound spaciousness, warmth and envelopment. The acoustic design of listening spaces usually involves creating a balance between clarity, definition, and spaciousness. Listeners often have different preferences regarding this balance.

Temporal Masking

Temporal masking is a defense mechanism of the ear that is activated to protect its delicate structures from loud sounds. When exposed to a loud sound, the human ear reacts by contracting slightly, temporarily reducing the perceived volume of sounds that follow. A loud sound in an audio signal tends to overpower other sounds that occur just before and just after it.

A History of Surround Sound

The simplest method of sound recording is called monaural or mono.

All the sound is recorded on one audio track and played back on one

speaker.

Two-channel recordings played back on speakers on either side of the

listener are referred to as stereophonic or stereo. The simplest two-channel recordings (binaural recordings) are produced with two

microphones. Playback of these two channels on two speakers recreates

some of the experience of being present at a concert event. But the

listener must be anchored in the "sweet spot" between the speakers to

maintain the illusion of the phantom sound from between the speakers.

Surround recordings add additional audio channels so that sound comes from multiple directions, in effect widening the sweet spot and enhancing the realistic sound quality.

The term "surround sound" refers to specific multi-channel systems

designed by Dolby Laboratories, but is commonly used as a generic

term for theater and home theater multi-channel sound systems.

Early Surround

Walt Disney's "Fantasia" (1940) was one of the first surround sound motion pictures. Four separate recordings of each orchestra section were stored on a separate reel of film and played through speakers positioned around the theater.

By the late 1950s, movies were encoded with simpler multi-channel

formats. Several different systems emerged, including Cinerama and

Cinemascope. These systems were referred to as stereophonic sound, or

theater stereo. Stereophonic sound used multiple magnetic audio tracks

at the edges of the film. The standard film format could support two

optical audio tracks or up to six magnetic audio tracks. A four-channel

theater system included: left, right, center speakers behind the screen,

and surround speakers along the sides and back of the theater.

Quadraphonic

In the quadraphonic systems of the early 1970s, two rear surround channels were combined (matrixed or encoded) with the two front channels so that the two sides of an LP groove carried four playback channels. This four-speaker system required a decoder and a separate rear channel amplifier. Problems with system standardization prevented further technological development.

Dolby

In the mid-1970s, Dolby Laboratories (www.dolby.com) devised a method to encode additional audio channels. This technology, initially known as Dolby Stereo when it was launched in 1975, was later renamed Dolby Surround.

In 1982, Dolby Surround became available to the consumer, followed by the enhanced Dolby Pro Logic playback decoder. Dolby Surround, like the earlier Quadraphonic systems, used channel matrixing to combine four audio channels into two signals.

Also described as 4-2-4 matrixing, these signals (compatible with two-speaker stereo playback) can be decoded into multiple channels.

Basic Dolby Surround decoding yields: front left, front right, and one

surround channel (the center channel is a phantom).

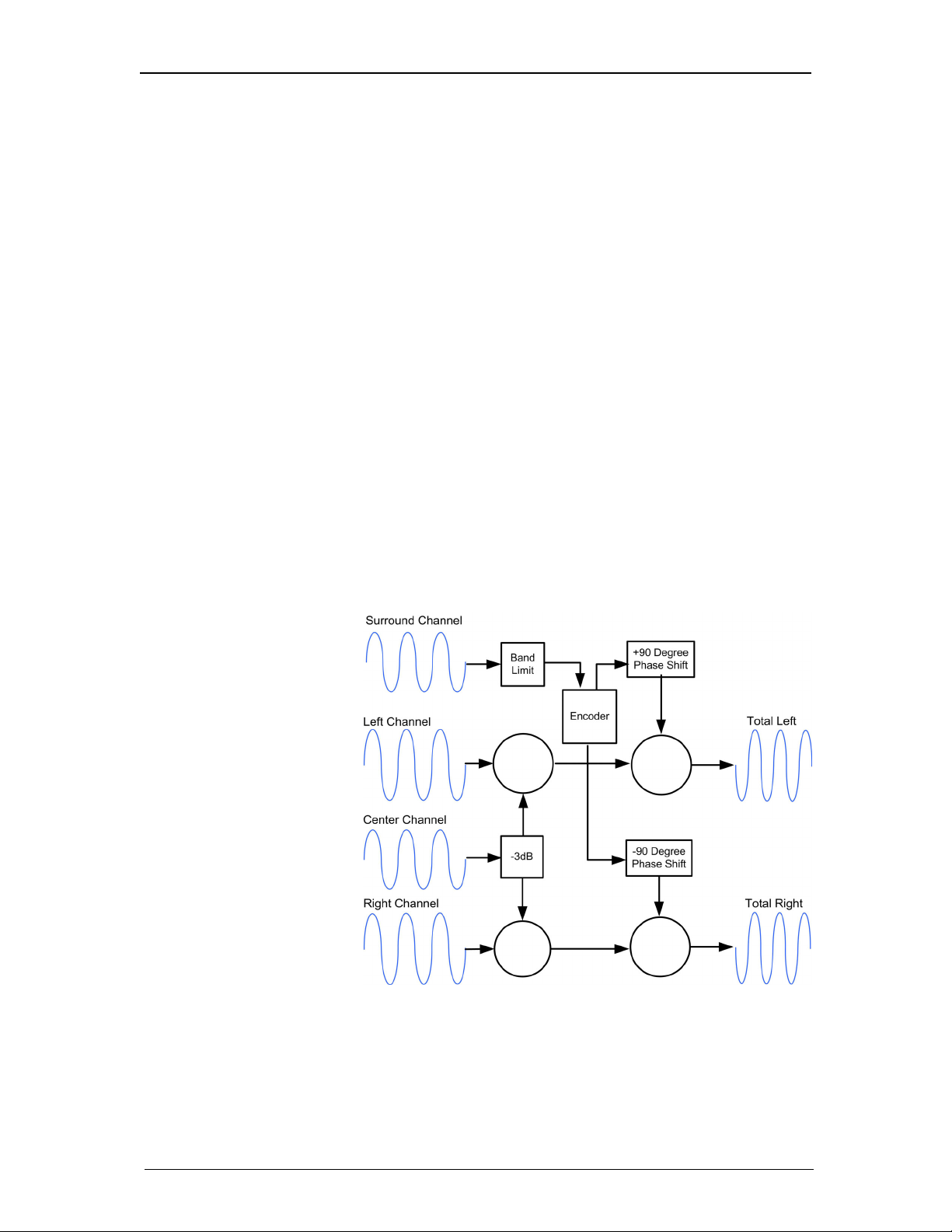

The 4-2-4 encoder accepts four separate inputs (left, right, center and

surround) and creates two outputs (left-total and right-total). The front

left and right channels are a regular stereo signal. The center channel is

inserted equally in the left and right channel, with a 3 dB level

reduction to maintain constant acoustic power.

Dolby 4-2-4 Encoding

The surround input is also divided equally between the left-total and

right-total signals but first undergoes three processing steps:

• It is frequency band-limited from 100 Hz to 7 kHz.

• It is encoded with a modified Dolby B-type noise reduction.

• The surround signal is split into two identical signals; one signal is phase shifted by +90 degrees relative to the fronts and the other signal by -90 degrees, creating a 180-degree phase difference between the surround signal components.

To recapture the matrix signals:

1. The center channel is extracted by summing the left-total and

right-total signals.

2. The surround signal is extracted by taking the difference

between them.

3. The identical center channel components in the left-total and

right-total signals cancel out one another in the surround

output.

4. The equal and opposite surround channel components cancel

out one another in the center output.

5. Signals that are different in left-total and right-total are applied

to the front left and right speakers.

6. Signals that are identical and in-phase are applied to the center

channel.

7. Signals that are identical but out of phase are applied to the

rear surround speaker.
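The sum-and-difference behavior of the matrix can be illustrated numerically. The sketch below is a simplified model, not Dolby's implementation: each channel is represented by a single complex amplitude (a phasor at one frequency), a 90-degree phase shift is modeled as multiplication by the imaginary unit, and the surround band-limiting and noise reduction steps are omitted. It also assumes the surround input receives the same 3 dB reduction as the center, which the text does not state. All names are illustrative.

```python
import math

# A 3 dB level reduction corresponds to a gain of 1 / sqrt(2).
MINUS_3_DB = 1.0 / math.sqrt(2.0)


def encode(left, right, center, surround):
    """Combine four channel amplitudes into left-total and right-total.

    Multiplying by 1j shifts a phasor by +90 degrees and by -1j by
    -90 degrees, so the two surround components end up 180 degrees apart.
    """
    left_total = left + MINUS_3_DB * center + 1j * MINUS_3_DB * surround
    right_total = right + MINUS_3_DB * center - 1j * MINUS_3_DB * surround
    return left_total, right_total


def decode_passive(left_total, right_total):
    """Recover four outputs using only the sum and the difference."""
    return {
        "left": left_total,
        "right": right_total,
        "center": left_total + right_total,
        "surround": left_total - right_total,
    }


# A center-only signal cancels in the surround output, and vice versa.
center_only = decode_passive(*encode(0.0, 0.0, 1.0, 0.0))
surround_only = decode_passive(*encode(0.0, 0.0, 0.0, 1.0))
print(abs(center_only["surround"]), abs(surround_only["center"]))
```

In this passive form the left and right outputs still contain the center and surround material, which is the crosstalk that active steering decoders are designed to reduce.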

Dolby Pro Logic

Dolby Pro Logic is an enhanced type of Dolby Surround decoder

introduced in 1987. Pro Logic uses directional enhancement circuitry to

actively steer sound toward the dominant position. Dolby Surround Pro

Logic decoding yields: left, center, right, and a monaural surround

channel.

Dolby Pro Logic Decoder Block Diagram

The decoder determines the dominant signal of the four outputs by comparing the signal level of the left-total and right-total signals while simultaneously comparing the level of the sum and difference signals. An active matrixing circuit uses this information to output the dominant signal to the appropriate channel while canceling other channels. The amount of enhancement applied is relative to the level of dominance.
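The two level comparisons described here can be sketched as follows. This is a hypothetical simplification for illustration only: it works on single complex amplitudes rather than real audio, and the decision rule (pick the axis with the larger level difference) is an assumption, not the actual Pro Logic steering logic. All names are illustrative.

```python
import math


def level_db(amplitude, floor=1e-12):
    """Level of a (possibly complex) amplitude in dB, with a silence floor."""
    return 20.0 * math.log10(max(abs(amplitude), floor))


def dominance(left_total, right_total):
    """Return the left/right and center/surround level differences in dB."""
    left_right = level_db(left_total) - level_db(right_total)
    center_surround = (level_db(left_total + right_total)
                       - level_db(left_total - right_total))
    return left_right, center_surround


def dominant_channel(left_total, right_total, tolerance_db=1e-9):
    """Name the channel that dominates, or None if nothing stands out."""
    left_right, center_surround = dominance(left_total, right_total)
    if max(abs(left_right), abs(center_surround)) < tolerance_db:
        return None
    if abs(left_right) >= abs(center_surround):
        return "left" if left_right > 0 else "right"
    return "center" if center_surround > 0 else "surround"


print(dominant_channel(0.7, 0.7))      # identical and in phase
print(dominant_channel(0.7j, -0.7j))   # identical but out of phase
```

The size of the returned level differences corresponds to the "level of dominance" that sets how strongly the decoder steers.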

Dolby Pro Logic II

Pro Logic II includes bass management, allowing bass to be reproduced

from the main speakers, or an LFE (Low frequency effects) output.

Pro Logic II uses directional enhancement, and a feedback loop allows

the anti-phase signals to more closely match unwanted crosstalk

signals. Control of the spatial dimensionality and front sound field are

also provided.

Dolby Digital

Storage and playback of audio signals in a digital format has many advantages over analog. Low-cost digital signal processing (DSP) has revolutionized the audio/video industry. The availability of complex processing coupled with increases in storage density has opened up new possibilities in audio/video coding and playback. As its name implies, Dolby Digital, introduced in 1997, takes advantage of DSP. It uses a digital processing method that exploits the limits of human hearing.

Playback of Dolby Digital is similar to that of Dolby Surround, but the

technologies are very different. Dolby Surround is primarily on analog

storage media, but Dolby Digital is a digital-only coding system.

Dolby Digital Surround EX

Dolby Digital Surround EX provides a third surround channel on Dolby

Digital movie soundtracks. The third surround channel can be decoded

at the cinema or home viewer for playback over surround speakers

located behind the seating area. Surround speakers to the sides

reproduce the left and right surround channels. The back surround

channel is matrix-encoded onto the left and right surround channels of a

conventional 5.1 mix, permitting conventional 5.1 playback.

A/V receivers are available with either Dolby Digital EX or THX

Surround EX decoding which derives the extra surround channel for

playback in 6.1 (three surround speakers) or 7.1 (four surround

speakers) configurations. The additional rear speakers are matrixed and

not discrete in nature.

7.1 Soundfield (Subwoofer not shown)

DTS

DTS Coherent Acoustics is a variable/high bit rate codec for discrete 5.1 multichannel digital audio. It competes with Dolby Laboratories' AC-3® codec. DTS encoding requires less compression of the signal, which provides improved detail, clarity and dynamics.

DTS-ES

DTS-ES is a digital matrix decoder developed by DTS for back channel

5.1 surround sound in theatres. It is comparable to Dolby Digital

Surround EX soundtracks and is a competitor to the Dolby SA10

adapter, the proprietary back surround decoder for cinemas.

Coherent Acoustics is a flexible audio coding scheme, allowing for a wide range of bit resolutions and sampling rates. Its operation ranges from low bit rate, lossy perceptual coding (meaning that it reduces data based on psychoacoustic principles) to high quality, lossless coding with variable bit rates. Encoded data rates of 32 to 4096 kbps, sampling rates of 8 to 192 kHz, resolutions of 16 to 24 bits and up to eight channels are possible.

DTS-ES for home theater features:

• The back channel is matrixed in the left and right surround

channels.

• A discrete back channel can be optionally encoded.

• A DTS-ES 6.1 discrete decoder plays the discrete back

channel and subtracts the encoded discrete back channel out of

the matrixed left and right surround channels. This is the only

decoding mode with a discrete rear speaker for the home.

• DTS-ES is fully compatible with 6.1 and 5.1 matrix decoders.

DTS-Neo 6

The DTS-Neo 6 derives the surround sound channels from a technique

of advanced sub-band processing by using algorithms. Stereo music

material may be expanded from stereo to 5.1 or 6.1 surround channels.

Users with 5.1 and 6.1 systems derive five and six separate channels,

respectively, corresponding to the standard home-theater speaker

layouts. Bass management in the preamp or receiver generates the

subwoofer channel.

• Neo 6 decodes Extended Surround matrix soundtracks, and

generates a back channel from 5.1 material.

• Neo 6 technology separately steers various sound elements

within a channel or channels in a naturalistic way that follows

the original presentation.

• Neo 6 Music and Neo 6 Cinema have slightly different

characteristics because these are recorded in different types of

sound studios.

Pulse Code Modulation

The representation of sound as a mathematical sum of frequencies

(digital) is just as valid as a linear series of pressure measurements

(analog).

Pulse Code Modulation (PCM) is the industry standard method of digitizing high quality analog audio signals.

An audio signal, recorded as a time varying electrical signal, is a

fluctuating voltage. The amplitude and polarity of this voltage are

directly related to the changes in air pressure. This signal is measured

or sampled at a rate of at least twice the maximum frequency contained

in the audio signal (the Nyquist rate), typically 44.1 kHz for Compact

Discs or 48 kHz for professional audio applications. Each sample is

given a binary number to represent the measured voltage at that instant

in time.
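The sampling-rate requirement can be made concrete with a short sketch. The reason for the "at least twice" rule is aliasing: a tone above half the sample rate folds back and appears at a lower frequency. Aliasing is not discussed in the text above, so treat this as supplementary illustration; the function names are illustrative.

```python
def nyquist_rate_hz(max_frequency_hz):
    """Minimum sample rate needed to capture the given maximum frequency."""
    return 2.0 * max_frequency_hz


def alias_frequency_hz(tone_hz, sample_rate_hz):
    """Frequency at which a sampled tone appears after sampling.

    Tones at or below half the sample rate are unchanged; higher tones
    fold back to the distance from the nearest multiple of the sample rate.
    """
    nearest_multiple = sample_rate_hz * round(tone_hz / sample_rate_hz)
    return abs(tone_hz - nearest_multiple)


print(nyquist_rate_hz(20000))             # rate needed for the audible range
print(alias_frequency_hz(20000, 44100))   # within range, unchanged
print(alias_frequency_hz(30000, 44100))   # too high, folds to a lower tone
```

The Compact Disc rate of 44.1 kHz sits a little above the 40 kHz minimum for a 20 kHz audio bandwidth, leaving some margin for the filtering applied before sampling.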

The binary number, representing the original signal, is stored in

memory. This representation of the audio signal must be converted

back to a continuously time varying analog signal.

Typically a 16-bit number is used in PCM, yielding a number range up

to about 32767. For a 1-volt analog signal, a 16-bit number can only

resolve to 1/32767 of a volt. Because the 16-bit number cannot

represent voltages that lie between these 1/32767 steps, the

measurement is rounded off to a discrete number. This loss in precision

creates a slight increase in the background noise during playback.

Generally, small binary numbers generate higher levels of noise or

distortion, and large binary word lengths have such low noise that the

dynamic range can exceed human hearing. The size of the binary

number determines the extent of lost information and determines the

fidelity of the reproduced audio.
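The rounding step and its dependence on word length can be sketched as follows. This example assumes a signed, symmetric code range (plus or minus 32767 for 16 bits, matching the figure above) and a 1-volt full scale. The dynamic range estimate uses the common approximation of about 6 dB per bit. The function names are illustrative, not part of any PCM standard.

```python
import math


def quantize(voltage, bits=16, full_scale=1.0):
    """Round a voltage to the nearest signed integer code."""
    max_code = 2 ** (bits - 1) - 1           # 32767 for a 16-bit word
    code = round(voltage / full_scale * max_code)
    return max(-max_code, min(max_code, code))  # clip out-of-range input


def reconstruct(code, bits=16, full_scale=1.0):
    """Convert an integer code back to the voltage it represents."""
    max_code = 2 ** (bits - 1) - 1
    return code / max_code * full_scale


def dynamic_range_db(bits):
    """Approximate dynamic range of a given word length (about 6 dB per bit)."""
    return 20.0 * math.log10(2 ** bits)


original = 0.3
restored = reconstruct(quantize(original))
print(f"rounding error: {abs(restored - original):.2e} V")
print(f"16-bit: {dynamic_range_db(16):.1f} dB, 8-bit: {dynamic_range_db(8):.1f} dB")
```

The rounding error is never more than half of one step, so each added bit halves the worst-case error and lowers the noise floor accordingly.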

One fundamental problem of PCM digital audio recorders is the recording of all frequencies with equal importance. PCM operates in the time domain; the binary numbers represent a time-series of sampled voltages. In the frequency domain, all the components of the time-series have the same resolution. In playback, PCM ignores the frequency dependent sensitivity of human hearing, and gives far too much weight to high frequencies (above 12 kHz) in comparison to low frequencies (below 4 kHz).

Various algorithms have been developed to compensate for the human

listener. These algorithms modify the digital signal to more closely

approximate natural sound. "Lossy" audio coders save storage space by

eliminating data that is redundant or unnecessary to reproduce good

sound quality, which is why they are also known as "perceptual"

coders. Dolby Digital, for example, can reduce the audio data by up to a factor of 15:1 compared to the source PCM audio data.
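
To put the 15:1 figure in perspective, the sketch below computes the data rate of uncompressed PCM and the rate that would remain after a given reduction ratio. The function names are hypothetical, the CD stereo parameters are used only as a convenient example, and 15:1 is the upper bound quoted above rather than the ratio of any particular program.

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Uncompressed PCM data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels

def coded_bit_rate(pcm_bps: float, reduction_ratio: float) -> float:
    """Data rate after a coder reduces the data by reduction_ratio:1."""
    return pcm_bps / reduction_ratio

if __name__ == "__main__":
    cd_stereo = pcm_bit_rate(44_100, 16, 2)
    print(f"CD stereo PCM: {cd_stereo / 1000:.1f} kbit/s")
    print(f"After 15:1 reduction: {coded_bit_rate(cd_stereo, 15) / 1000:.1f} kbit/s")
```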

Speakers

Surround Speakers

Direct radiating speakers in a surround sound system provide very

precise sound imaging.

Dipolar and bipolar speakers provide a diffuse sound without creating a

specific point source. Dipolar speakers have two identical drivers

mounted on opposite sides of the cabinet operating 180 degrees out of

phase. Bipolar speakers have two sets of identical drivers mounted on

opposite sides of the cabinet operating in phase.

Surround channel speakers are similar to bookshelf speakers. Surround

speakers should have similar response characteristics to the other

speakers in a surround sound system to present a uniform sound

environment. This is also referred to as “timbre matching”.

NOTE: When referring to loudspeakers, the term "Q" is a measure of

directionality. At low frequencies, the Q will always be low. At higher

frequencies, it gets larger, depending on the size of the drivers

involved. Thus, Q is a measurement of frequency-dependent radiation

pattern and polar characteristics. Q is also a measurement of the slope

of any peaks in loudspeaker, equalizer, or microphone frequency-response curves.

Subwoofers

A subwoofer is a special type of speaker that reproduces only the lower

portion of the audible frequency spectrum (usually from 120 Hz down

to the lower limit of hearing, 20 Hz).

There are two types of subwoofers. Powered subwoofers have a built-in

amplifier. Non-powered subwoofers require an external amplifier and

may be connected to a separate amplifier or to the main sound system.

Subwoofers may be contained in a variety of different enclosures.

A subwoofer operates in an omnidirectional manner, meaning it can be

placed almost anywhere in the room. The human ear cannot locate the

origin of sound waves below 80 Hz, so low frequency sound waves

have no apparent direction in a closed room. Dolby Digital 5.1 surround sound systems allocate a specific low frequency effects (LFE) channel for subwoofers.

Bass Management

In a surround sound speaker system, the higher frequencies are

distributed to the speakers around the room: Left, Center, Right, Left

and Right Surround, and in a 7.1 system, Left and Right Rear Surround.

The lower, or bass, frequencies for all of these channels can be directed through a bass management circuit, which outputs to a subwoofer. Producing a consistent bass response from all the channels while maintaining the integrity of the soundscape is impossible without bass management. Bass management electronically imposes a bass frequency crossover (typically 80 to 120 Hz) on some or all of the channels, and redirects the bass frequencies from each of those channels to the subwoofer.

Large and Small

Each speaker in a surround system can be set to "Large" or "Small." When you select "Large" in a bass management function, all of that channel's sound, across the entire range of frequencies, is directed to that speaker. When "Small" is selected, the bass sounds (below the crossover frequency) are filtered out of that speaker and directed to the subwoofer. Physically small speakers may not be able to reproduce very low frequencies without introducing distortion. Bass management permits each speaker to operate in the range for which it was designed.

NOTE: Bass management sends the bass content of small speakers to

the front speakers if they are large and there is no subwoofer.
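
The routing rules described in this section can be summarized in a short sketch. This is only an illustration of the logic as stated in the text, not the behavior of any specific processor; the function name, channel names, and the handling of the case with no subwoofer and no large front speakers are assumptions.

```python
def route_bass(speaker_sizes: dict, subwoofer_present: bool) -> dict:
    """Return, for each channel, where its bass (below the crossover) is sent.

    speaker_sizes maps a channel name to "Large" or "Small".
    """
    fronts_large = (speaker_sizes.get("Left") == "Large"
                    and speaker_sizes.get("Right") == "Large")
    routing = {}
    for channel, size in speaker_sizes.items():
        if size == "Large":
            routing[channel] = channel             # full range stays on this speaker
        elif subwoofer_present:
            routing[channel] = "Subwoofer"         # bass redirected to the subwoofer
        elif fronts_large:
            routing[channel] = "Front Left/Right"  # no subwoofer: large fronts take it
        else:
            routing[channel] = None                # assumption: nowhere to send the bass
    return routing

if __name__ == "__main__":
    sizes = {"Left": "Large", "Right": "Large", "Center": "Small",
             "Left Surround": "Small", "Right Surround": "Small"}
    print(route_bass(sizes, subwoofer_present=False))
```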

Speaker Set-up Suggestions

The following are suggestions for setting up the bass management

feature of the surround system.

| Speaker Type | Settings |
| --- | --- |
| Front Floor-Standing Type With Built-In Subwoofer And No Additional Subwoofer | Select front speakers as "Large." Select subwoofer as "Not Present." |
| Front Floor-Standing With Built-In Woofer And An Additional Subwoofer | Select front speakers as "Large." Select subwoofer as "On." |
| Any Speaker System That Does Not Have A Subwoofer In The System | Select front speakers as "Large" even if the speakers are physically small. Select subwoofer as "Not Present." |
| Front Bookshelf Speaker With Small Woofer or a Separate Powered Subwoofer | Select front as "Small." Select subwoofer as "On." |
| Front Bookshelf Speaker With 8" Woofer Or Dual 6" Woofers | Set the front speakers as either "Small" or "Large." Set the subwoofer as "On." |
| Center Speaker | Center channel speakers do not carry as much bass as a subwoofer or main speakers, so set the center speaker as "Small." |
| Surround Speakers | For bookshelf, on-wall or in-wall speakers as surrounds, select "Small." For large floor-standing surround speakers, select "Large." |
| Subwoofer | Select subwoofer as "On." The subwoofer reproduces the Low Frequency Effects bass channel along with the bass of any other speakers that have been selected as "Small." |

Standing Waves

A standing wave is a low frequency distortion that happens when a particular frequency has a unique relationship to the size or shape of a room, resulting in an increasingly resonant sound. The original signal is amplified to a loud, booming bass that overpowers all of the other frequencies. This wave phenomenon results from the interference of sound waves of the same frequency and kind traveling in opposite directions. For example, if a string is stretched between two supports and a wave is sent down its length, the wave is reflected and sent back in the opposite direction, resulting in a standing wave. Standing waves can also be seen in the columns, tubes, plates, rods and diaphragms that are the component parts of musical instruments.

Standing waves in rooms are caused by low frequency sounds with long wavelengths. The reflected sound wave is nearly in perfect phase with the original wave and creates a fixed spatial pattern of nodes and antinodes. The nodes are experienced as dead spots, points of nearly complete cancellation. The antinodes reinforce and amplify the original sound, creating the booming bass sound. Standing waves can be reduced or eliminated by careful placement of subwoofers, rearranging furniture, and equalization adjustment.
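
The "unique relationship" between frequency and room size can be estimated with the standard formula for standing waves between two parallel walls, f = n * c / (2 * L). The sketch below is a simplification: it considers only one room dimension at a time, assumes a speed of sound of about 343 m/s, and uses hypothetical function names and an example room length.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at about 20 degrees C

def axial_modes(dimension_m: float, count: int = 4) -> list:
    """First `count` standing-wave frequencies (Hz) between two parallel walls."""
    return [n * SPEED_OF_SOUND_M_S / (2.0 * dimension_m) for n in range(1, count + 1)]

def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in meters of a sound at the given frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz

if __name__ == "__main__":
    room_length_m = 5.0  # hypothetical room dimension
    for f in axial_modes(room_length_m):
        print(f"{f:6.1f} Hz  (wavelength {wavelength_m(f):.2f} m)")
```

The lowest mode has a wavelength of twice the room dimension, which is why the problem appears at bass frequencies.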

Phase

Phase is a specific point in a sound wave, measured from a zero point and given as an angle; 180 degrees is exactly one half of a complete cycle. When two audio signals of the same frequency are out of phase, they partially cancel each other, resulting in a weaker signal (or no signal at all if they are equal in level and 180 degrees out of phase). Complete cancellation occurs when one sound wave is at its peak while the other is at its lowest point, the trough. Similar to adding a negative one to a positive one, the end result is zero.

When connecting speakers, ensure that the phase (+ and -) connections are correct and consistent. Many powered subwoofers feature a phase switch, allowing the user to change the phase from 0 degrees to 180 degrees. By switching the phase, sound waves from the subwoofer can be aligned with other sound waves so that they reinforce one another instead of canceling each other out.
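
The effect of a phase offset can be checked numerically. The sketch below adds two sine waves of equal amplitude and frequency; it relies on the identity sin(x) + sin(x + p) = 2 sin(x + p/2) cos(p/2). The function names are hypothetical, and real rooms add reflections and delays that this idealized case ignores.

```python
import math

def combined_amplitude(amplitude: float, phase_deg: float) -> float:
    """Peak amplitude of the sum of two equal sine waves offset by phase_deg."""
    return abs(2.0 * amplitude * math.cos(math.radians(phase_deg) / 2.0))

def summed_samples(phase_deg: float, points: int = 8) -> list:
    """Sample one cycle of sin(x) + sin(x + phase) to show the sum directly."""
    phase = math.radians(phase_deg)
    xs = [2.0 * math.pi * i / points for i in range(points)]
    return [math.sin(x) + math.sin(x + phase) for x in xs]

if __name__ == "__main__":
    for phase in (0, 90, 180):
        print(phase, round(combined_amplitude(1.0, phase), 3))
```

At 0 degrees the two waves reinforce each other; at 180 degrees every sample of the sum is zero.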

Phase Shift

Surround Sound Speaker Placement

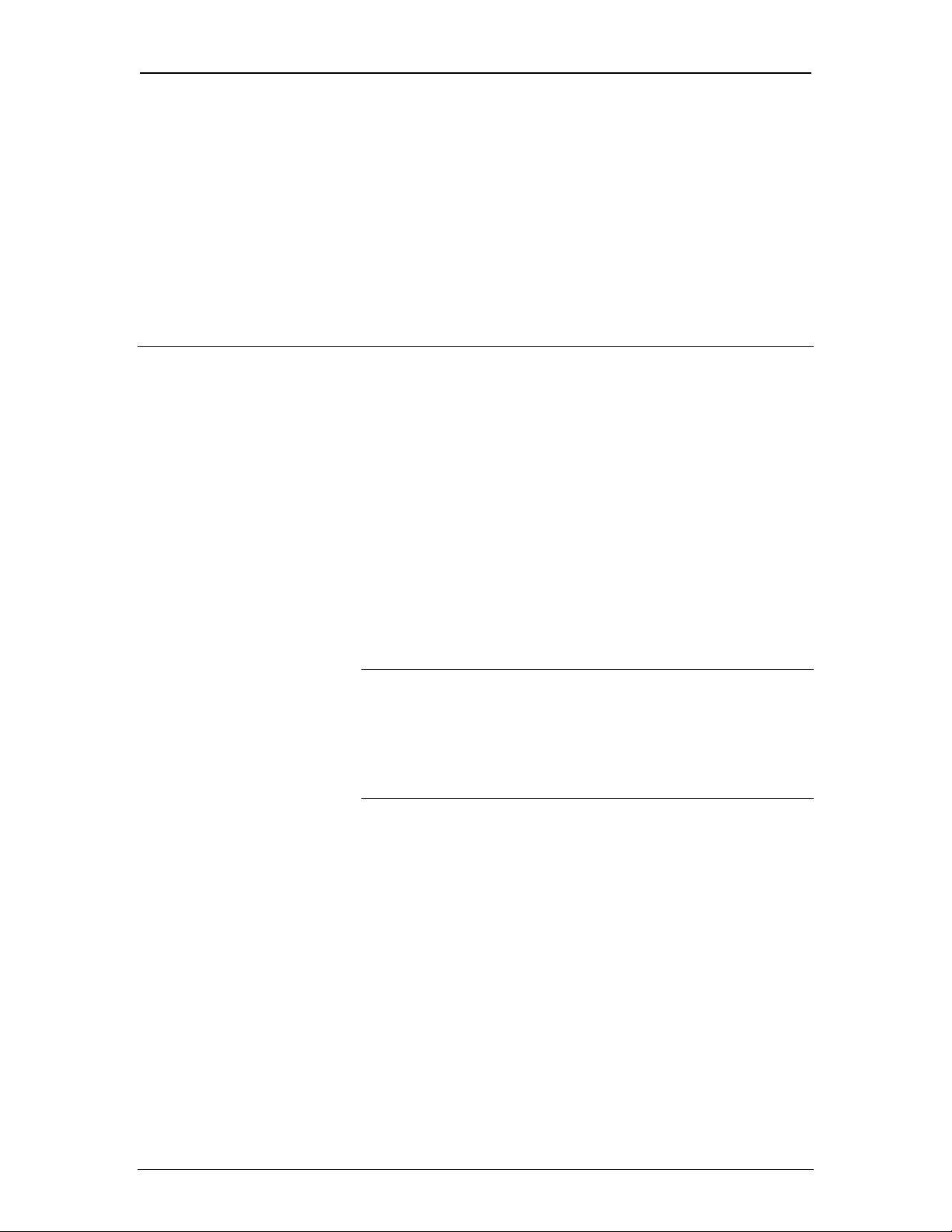

Front Speaker Placement

The front speakers are arrayed across the front of the viewing/listening

area. These three speakers should be as equidistant as possible from the

center listener position and on a plane with the listener's ears.

If possible, avoid positioning the center speaker closer to the listeners than the left and right front speakers.

The left and right front speakers are positioned at an angle of 45 to 60

degrees to the center-most listener. An angle nearer to 45 degrees is

preferred if the system is used primarily for viewing movies. This

position approximates the circumstances under which film soundtracks

are mixed. A wider angle, nearer to 60 degrees, is recommended when

the system is used primarily for listening to music.

The three front speakers should be as close as possible to the same

height, at or near ear level.

Ideal 5.1 Speaker Placement (Subwoofer not shown)

Surround Speaker Placement Optimized for Movie Soundtracks

The surround speakers should be placed alongside and slightly to the

rear of (but not behind) the primary seating position, two to three feet

above ear level to help minimize localization effects, and aimed

directly across the listening area, not down at the listeners. This

arrangement creates a surround soundfield throughout the listening

area.

Surround EX Speaker Placement

Dolby Digital Surround EX (7.1) encodes a Dolby Digital program with

additional rear surround channels for playback over two additional

surround speakers placed behind the viewer. Surround EX program

material is fully compatible with regular Dolby Digital 5.1 playback

(the additional center rear information is split between the left and right

rear surround channels). Rear channel speakers should be placed at an

angle of 60 to 90 degrees to the prime listening spot.

Ideal 7.1 Speaker Placement (Subwoofer not shown)

Flat Response

A flat response is a theoretical ideal for audio components, representing

a frequency response that does not deviate from a flat line (0 dB) over

the audible frequency spectrum. A perfectly flat response is practically

impossible in the real world. The interactions with the room and

surfaces in the room alter the waveforms. A flat response from a home

theater system is not very pleasing to the human ear. Ideally, you first

attain the flattest response possible, and then adjust the system to the

listener’s preference.

Generally, audio component response specifications are given as a function of the frequency range. For example, a speaker can have a flat response within 2 dB over the range of 50 Hz to 18 kHz. This means that the speaker stays within 2 dB (at the limit of perception) of a flat response within the given frequency range. All speakers fluctuate above and below an ideal flat response. Speakers that remain within two or three dB of a flat response are very linear and nearly flat. Most speakers have a drop-off in response in the very low and very high frequency ranges. These frequency areas are outside of the critical midrange frequencies to which human hearing is most attuned.
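
A specification of this kind can be checked against a set of measurements with a few lines of code. In the sketch below, the function name and the measurement values are hypothetical; levels are expressed in dB relative to the 0 dB reference line.

```python
def within_tolerance(response_db: dict, low_hz: float, high_hz: float,
                     tolerance_db: float = 2.0) -> bool:
    """True if every measured point from low_hz to high_hz lies within
    tolerance_db of the 0 dB reference."""
    in_band = [level for freq, level in response_db.items()
               if low_hz <= freq <= high_hz]
    return bool(in_band) and all(abs(level) <= tolerance_db for level in in_band)

if __name__ == "__main__":
    # Hypothetical measurements: frequency in Hz -> deviation in dB
    measured = {30: -8.0, 50: -1.8, 1000: 0.4, 4000: 1.5, 18000: -2.0, 20000: -6.0}
    print(within_tolerance(measured, 50, 18000))   # inside the quoted range
    print(within_tolerance(measured, 20, 20000))   # includes the drop-off regions
```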

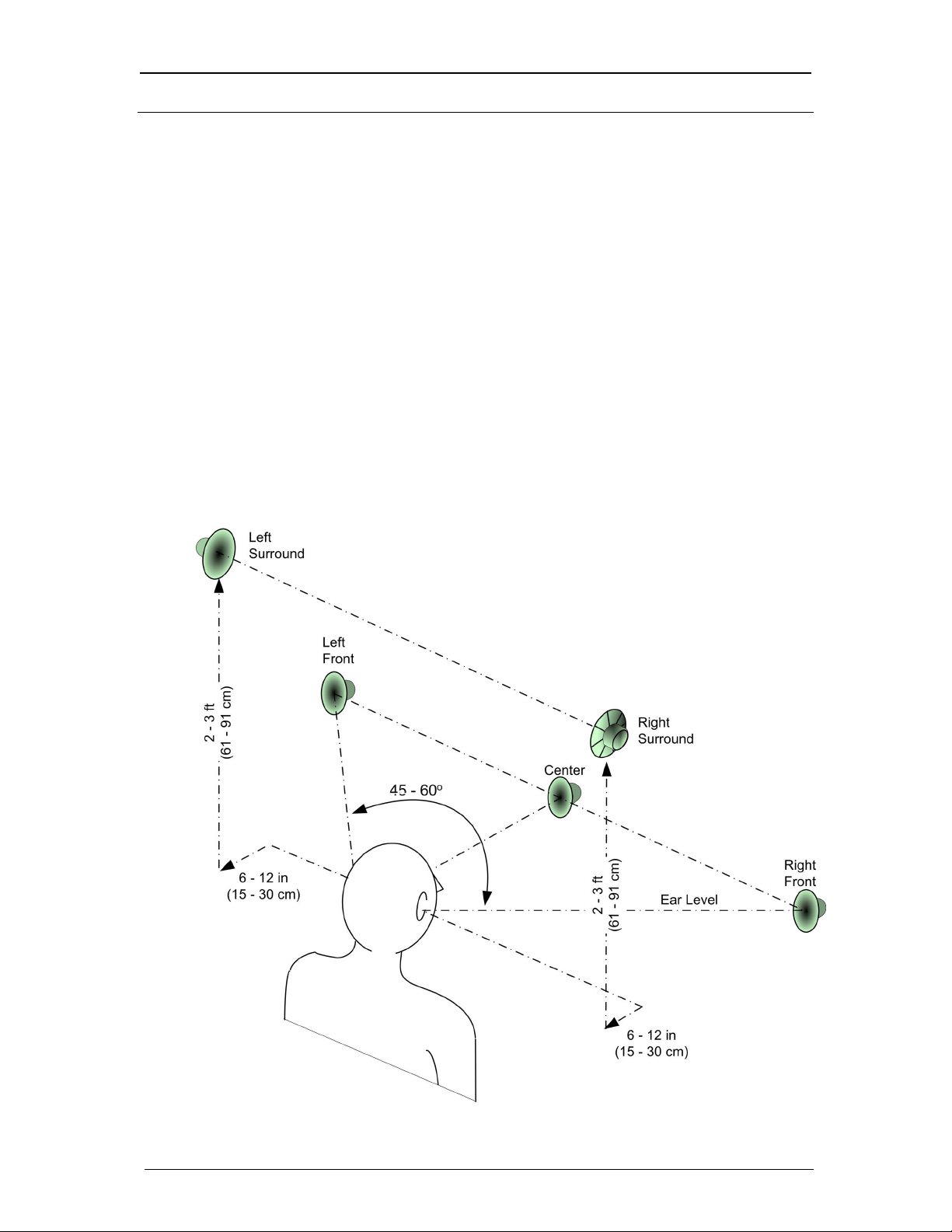

Equalization

Equalization is a change in the frequency response of an audio signal.

This is accomplished by adjusting the amplitude of the signal within a

range of frequencies. The aim of equalization is to achieve a flatter

frequency response (more closely matching the original signal), by

compensating for the room acoustics and speaker deficiencies.

The following diagram illustrates the use of equalization on an audio

signal. The listening area is first tested with a pink noise generator and

a sound level meter to determine the flatness of the room. Equalizers

are then used to increase or decrease those portions of the audio

spectrum that fall above or below the ideal response of 0 dB. The result

of this compensation is a flatter response, more closely resembling the

original audio recording as intended. The equalizer settings mirror the

actual room readings. In practice, sound engineers generally avoid increasing the amplitude of frequencies that fall below the flat response line, because an artificial increase of those frequencies can introduce undesirable distortion.
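
The idea that the equalizer settings mirror the room readings, with boosts avoided in practice, can be sketched as follows. The function name, the band frequencies, and the readings are hypothetical; an actual calibration would also take the bandwidth of each equalizer band into account.

```python
def equalizer_settings(room_response_db: dict, cut_only: bool = True) -> dict:
    """Mirror the measured deviation from 0 dB; optionally refuse to boost."""
    settings = {}
    for freq, deviation in room_response_db.items():
        correction = -deviation            # the mirror image of the reading
        if cut_only and correction > 0:
            correction = 0.0               # leave dips alone rather than boost them
        settings[freq] = correction
    return settings

if __name__ == "__main__":
    # Hypothetical room readings: frequency in Hz -> deviation in dB
    room = {63: 5.0, 250: -3.0, 1000: 0.0}
    print(equalizer_settings(room))
    print(equalizer_settings(room, cut_only=False))
```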

NOTE: Equalizer adjustments should only be performed when the

actual room response is known.

Room Adjustments

After completing the hardware hookup procedures, perform these

procedures to set the surround sound default values according to the

actual environment of the listening area.

Required Test Equipment:

• Sound Level Meter

• Tape Measure

The following equipment is recommended to optimize performance

relative to the listening area, speaker arrangement, and the user's

preferences:

• Audio Spectrum Analyzer

• Pink Noise Generator

• Avia Guide to Home Theater, from Ovation Software

(www.ovationsw.com)

• Video Essentials, from DVD International

(www.videoessentials.com)

Setup Procedures

NOTE: The Avia Guide to Home Theater and the Video Essentials

DVD discs provide information and extensive instructions for setting

up a home theater environment. Follow the instructions provided.

NOTE: Part of the setup process is determined by the source of the sound to be reproduced (either movie audio or other kinds of audio) and the types of speakers used.

The first thing to recognize when constructing a home theater system is

that the room itself can be more important than the equipment. The

correct speaker placement, furniture and acoustical treatments can turn

a good sounding room into a great sounding room. Balancing the

speakers, the room and the right electronic equipment raises the home

theater experience to an amazing level.

This section focuses on performing the proper adjustments to a room

and audio system, providing your client with the best possible home

theater acoustics. Covered here are speaker placement, equalizers, and

compensation techniques to overcome poor room acoustics. Regardless

of the specifications, a system is not great until it sounds great. What most people desire is an even room sound, with the bass and the treble blending smoothly and in a complementary manner. One portion of the sound spectrum overriding another does not make for a great listening experience.

What We Hear in a Room

The first sound to arrive from an audio system is direct sound. This is

also considered the on-axis sound. Direct sound occurs when the

speaker is angled toward the listener, and this is the best possible sound

the speaker produces.

Early reflections are heard next, which follow the direct sound by a few

milliseconds and are not as loud as the direct sound.

After the reflections we hear the reverberation of the rest of the room.

These reverberations can come from multiple directions at a lower

sound level. Together, they cause a confusing soundscape if not dealt

with properly.

Rooms also contain resonance that can emphasize and attenuate certain

frequencies. The resonance depends on the location of the listener and

the placement of the speakers. The effects of resonances are often

experienced at the lower range of frequencies.

Very often we cannot overcome all of these deficits; however, we can work with them and turn them in our favor.

We begin by selecting good quality speakers that provide a smooth (flat) frequency response over the drivers' operating frequency range.

Placing a speaker too close to a reflective surface, such as a wall,

adversely affects the audio by presenting an inaccurate, heavy sound.

The sound-reflecting wall acts like a second speaker, increasing the

sound level but decreasing clarity. This situation can be resolved by

adding some absorption material.

You can use a variety of materials, such as acoustical foam and

acoustic fiberglass. Even a drapery can help absorb the reflection. A

room that is too acoustically “live” or “dead” does not sound good. A

mixture of the two creates the most realistic home theater environment.

If you have a room that is relatively “live” sounding, use a speaker

equipped with a horn tweeter for good directionality. It can be aimed

towards the listener and away from reflective surfaces.

Speakers Placed in Cabinets

Although best placement for a speaker is about one to three feet from

the nearest wall, we do not always have that option. Many home

theaters incorporate cabinets to add a warm feel to the room and hide

the electronic equipment. Placing a speaker in a cabinet can hinder

speaker function, especially if the speaker is improperly positioned and

mounted in the cabinet.

The speaker should be as close to the front of the cabinet as possible.

As a speaker is placed deeper in the cabinet, more boundaries interfere

with the sound.

How the speaker is mounted in the cabinet is important. Vibration can transfer from the speaker to the cabinet, reducing the full sound capability of the speaker. If the cabinet vibrates, you should acoustically uncouple the speaker from the cabinet. Placing the speaker on mounting posts (that may be provided) or on a platform designed to reduce vibration transmission can help.

Many cabinets also have a door covering the speaker, and the interaction with the door can have an adverse effect on the sound. Ensure that no part of the door covers the speaker drivers. Try to keep the door from covering the whole face of the speaker cabinet. This is not always possible; however, if you have the opportunity to influence the design of the cabinet door, you can achieve better results. An important part of cabinet design is the choice of covering for the speaker opening. Do not use just any fabric. Use a fabric produced by an acoustics company.

Companies that manufacture acoustic fabric have different styles and

designs available that can appear like wood or have a unique pattern

that can add style to the theater enclosure.

Stereo Imaging

Begin by playing pink noise through both speakers. The stereo image

should be floating between the two speakers. While moving forward

and back, the image should stay centered. While moving left and right,

the image should move left and right correspondingly.

When listening to stereo music, an image between the two speakers

should be audible. In most recordings, the vocalist is centered while the

band is arrayed across the front soundstage. While moving left and

right, the image should move left and right correspondingly. Use several different selections to perform this test, because each recording is mixed according to its sound engineer's own interpretation of the stereo image.

If the image is not as tight as desired, the absorption methods for wall

reflections will help greatly. If a little more spaciousness is desired,

allow for reflections and you can even add diffusers to help create the

desired sound. Doing either of these is fine as long as the stereo image

is not adversely affected. No matter what your preference, you always

want to maintain a good stereo image.

Absorption and reflection can be added to any wall. Make sure each is used in the proper location for the desired room response. You do not want a heavy reflection off the back wall when the seats are right against it (as many seats in a home theater are). Instead, add a little absorption directly behind the listener and diffusion to the sides. This can reduce the unwanted reflection that can ruin the stereo image while still providing a feeling of spaciousness.

A diffuser is a device that takes any incident sound and directs it in

different directions. Some sounds return to the listener, while others do

not. You can purchase diffusers from some of the acoustics companies

or you can use things in the room such as bookcases, paintings,

sculpture and other furniture that has surfaces of varying depths.

About Surround Sound

The same theories apply to surround speakers as to stereo speakers. Surround speakers should be placed about six feet above the finished floor, and should present a stereo image in their placement around the room. For instance, if a plane rumbles directly overhead, you should hear the sound travel evenly from front to back. A single pair of surrounds should be placed slightly behind the main seating position. If you have additional rears, they should be placed on the back wall, positioned between the front right and left speakers and directed toward the front of the room. If you have a single rear speaker, place it at the same height as the surround speakers, in the center of the back wall facing the front of the room. You should keep all of the surround speakers at the same height to give the sound an even feel as it passes through the surrounds.

Subwoofer Placement

Too much or too little bass creates an unsatisfying listening experience. The number of subwoofers, their locations and the listener position are extremely important to good bass. Frequencies below 120 Hz are omnidirectional; the sound radiates throughout the room and the source cannot be localized. This works to our advantage in many rooms. Listeners need not sit in front of the subwoofer to get good bass response.

The location of the subwoofer is critical for the best response; the worst

place to put a subwoofer is in the middle of a room. Moving the

subwoofer closer to a wall or corner improves the overall room

response.

Locating the subwoofer in the corner of the room can often provide the best room sound level. However, this position can also cause room modes to be more apparent and affect the listening position. If all of your listening positions sound good with the subwoofer in the corner, then you are doing well. Many times, though, the best position for viewing a movie and listening to a surround system has the worst bass response. This is due to a room mode at that position that causes a loss of bass; the frequencies are actually canceling themselves out at that particular position. Meanwhile, people sitting off-center may have loud and muddy bass, because at their position the room mode reinforces the affected frequency, as much as doubling its amplitude. You can adjust for this situation in several ways.

First, adjust the position of the subwoofer until you have an overall good bass response throughout the room. As you move the subwoofer, notice that the locations with the most bass and the least bass change. You are moving the subwoofer through the room modes. The goal is to find the location that minimizes the number of modes in the main seating areas.

For medium-sized to larger rooms, add a second subwoofer. The proper placement of these subwoofers together can create a great room response without a loss at the main seating position and an increase in the response in the room corners.

Adding a second subwoofer can also remedy the room mode situation if done properly. Do not place the subwoofers on the same lateral plane, with both against the front wall, with both against the back wall, or exactly opposite one another. This actually defeats the purpose of adding a second subwoofer by increasing the room mode. Placing a subwoofer in the front right corner and one in the back left corner, or placing a sub up front and one on the side wall, will better cover the room modes. Start by placing the subwoofers in the corners and gradually move them out. The best test signal to use is pink noise because of the range of random frequencies produced.

You can also purchase bass traps and place them throughout the room, in the corners and other locations. These are commonly placed in corners because that is the location of the highest level of bass energy. You can also consult acoustic software and let it determine where to place them. This also applies to any absorption and diffusion products.

Equalizers

Equalizers are sophisticated audio filtering devices used to compensate for room acoustics and speaker deficiencies in order to achieve a flat response. The two types of equalizers are graphic and parametric.

Graphic Equalizer

Graphic equalizers typically have multiple adjustable bands, each ranging in width from one full octave to one third of an octave, and are used to achieve a flatter frequency response from an audio system in a specific acoustic space. Graphic equalizers use a set of predetermined frequency bands to adjust the amplitude of the waveform at specific frequencies. The center frequencies and the bandwidth affected by graphic equalization are predetermined and cannot be altered. This allows only a change in amplitude within each predetermined frequency range. The graphic equalizer allows a greater range of control than that offered by treble and bass controls.
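
The fixed band layout of a graphic equalizer can be illustrated by generating center frequencies spaced one octave or one third of an octave apart. The sketch below anchors the series at 1 kHz and limits it to 20 Hz through 20 kHz; both choices, and the function name, are assumptions made for illustration. Commercial equalizers label their bands with rounded nominal values.

```python
def band_centers(bands_per_octave: int, low_hz: float = 20.0,
                 high_hz: float = 20_000.0, reference_hz: float = 1000.0) -> list:
    """Center frequencies spaced 1/bands_per_octave octave apart around reference_hz."""
    k = 0
    while reference_hz * 2 ** (k / bands_per_octave) >= low_hz:
        k -= 1
    k += 1  # step back up to the lowest center still inside the range
    centers = []
    while reference_hz * 2 ** (k / bands_per_octave) <= high_hz:
        centers.append(reference_hz * 2 ** (k / bands_per_octave))
        k += 1
    return centers

if __name__ == "__main__":
    print([round(f, 1) for f in band_centers(1)])   # one-octave bands
    print([round(f, 1) for f in band_centers(3)])   # one-third-octave bands
```

With one-octave spacing this yields ten bands from 31.25 Hz to 16 kHz; narrower bands give finer control at the cost of more adjustments.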

Graphic Equalizer

Parametric Audio Filters

Parametric equalizers permit an adjustment to a signal's frequency

response with complete choice over how to divide up the signal and

adjust it using amplitude, center frequency and bandwidth (octave).

When a signal is parametrically equalized, its amplitude is changed at a

selected center frequency and over a selected range of frequencies on

either side of the center.

There are six types of parametric audio filters:

• High Pass Filter

• Low Pass Filter

• Bass Shelf

• Treble Shelf

• EQ

• Notch

The filters may be used alone or in combination to adjust for room

acoustics, furnishings, and speaker deficiencies to achieve the desired

flatness.

High Pass

A high-pass filter passes signals with frequencies higher than its specified (cutoff) frequency on to the next circuit, while blocking or attenuating frequencies lower than that frequency. It is sometimes called a low-frequency discriminator or low-frequency attenuator.

High Pass Filter

Low Pass Filter

Low Pass

A low-pass filter passes frequencies below its specified (cutoff) frequency, while blocking or attenuating frequencies above that frequency. It is sometimes called a high-frequency discriminator or high-frequency attenuator.
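
How gradually a high-pass or low-pass filter attenuates depends on its design. As a minimal sketch, the functions below give the magnitude response of the simplest case, a first-order filter, which is 3 dB down at the specified frequency and rolls off at about 6 dB per octave beyond it. The function names and the 80 Hz example are assumptions; practical crossovers usually use steeper filters.

```python
import math

def low_pass_gain_db(freq_hz: float, cutoff_hz: float) -> float:
    """Magnitude response of a first-order low-pass filter, in dB."""
    return -10.0 * math.log10(1.0 + (freq_hz / cutoff_hz) ** 2)

def high_pass_gain_db(freq_hz: float, cutoff_hz: float) -> float:
    """Magnitude response of a first-order high-pass filter, in dB."""
    return -10.0 * math.log10(1.0 + (cutoff_hz / freq_hz) ** 2)

if __name__ == "__main__":
    cutoff = 80.0  # hypothetical crossover frequency in Hz
    for f in (20, 40, 80, 160, 320):
        print(f, round(high_pass_gain_db(f, cutoff), 2),
              round(low_pass_gain_db(f, cutoff), 2))
```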

Bass Shelf

A bass shelf filter uniformly affects all low frequencies while not affecting high frequencies. For example, to increase the bass frequencies sent to a subwoofer, you can set the bass shelf filter to uniformly increase the amplitude of all bass frequencies. The bass shelf filter can also be used to uniformly decrease the bass frequencies to eliminate a booming bass sound.

Bass Shelf Filter – Reducing Overall Bass Response

Bass Shelf Filter – Increasing Overall Bass Response

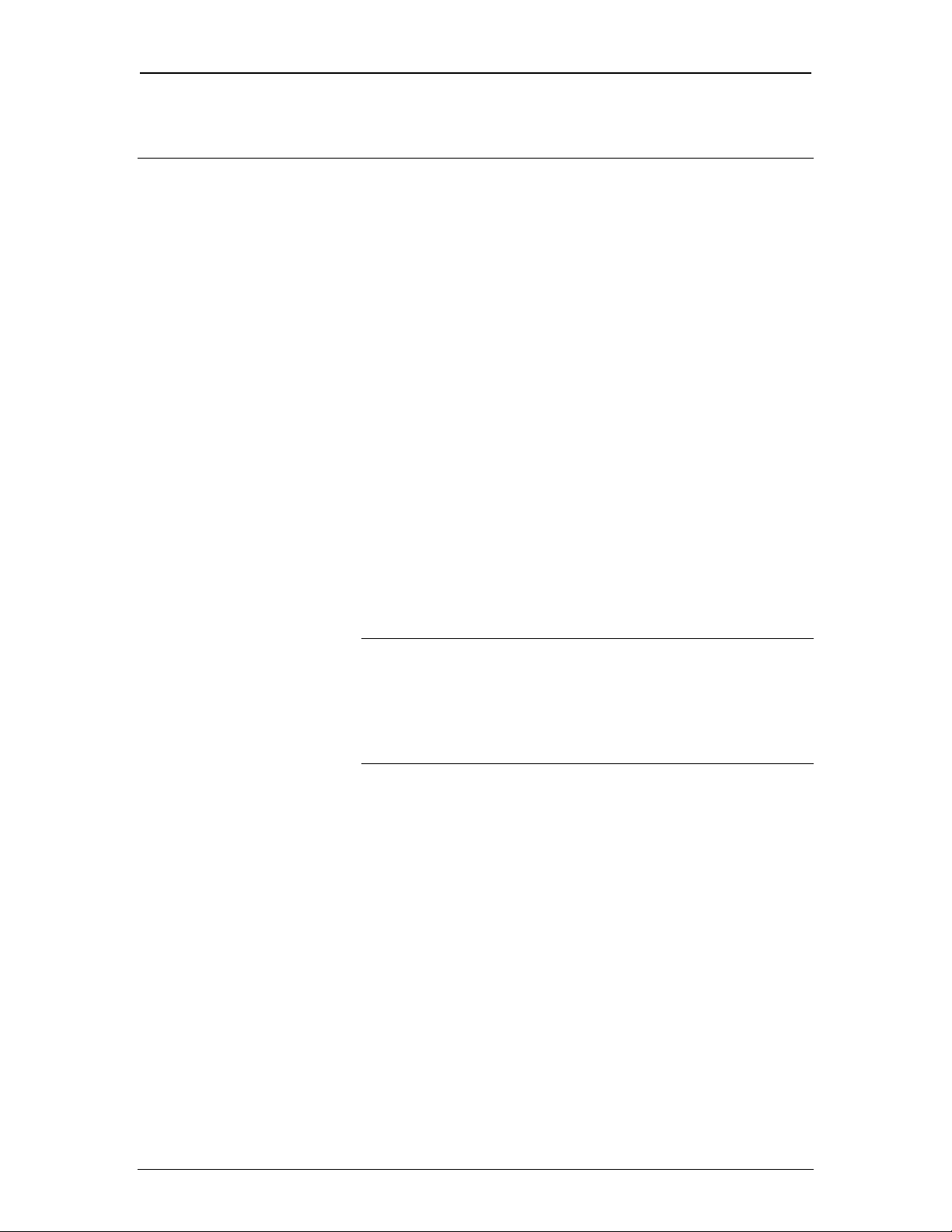

Treble Shelf

A treble shelf filter affects all high frequencies in a uniform manner while not affecting low frequencies. Because bass frequencies have

longer wavelengths, small speakers may sound distorted when trying to

reproduce these frequencies. The treble shelf filter can increase the

proportion of treble to bass, enabling the smaller speakers to produce a

clearer sound.

Treble Shelf Filter – Increasing Overall Treble Response

Treble Shelf Filter – Decreasing Overall Treble Response

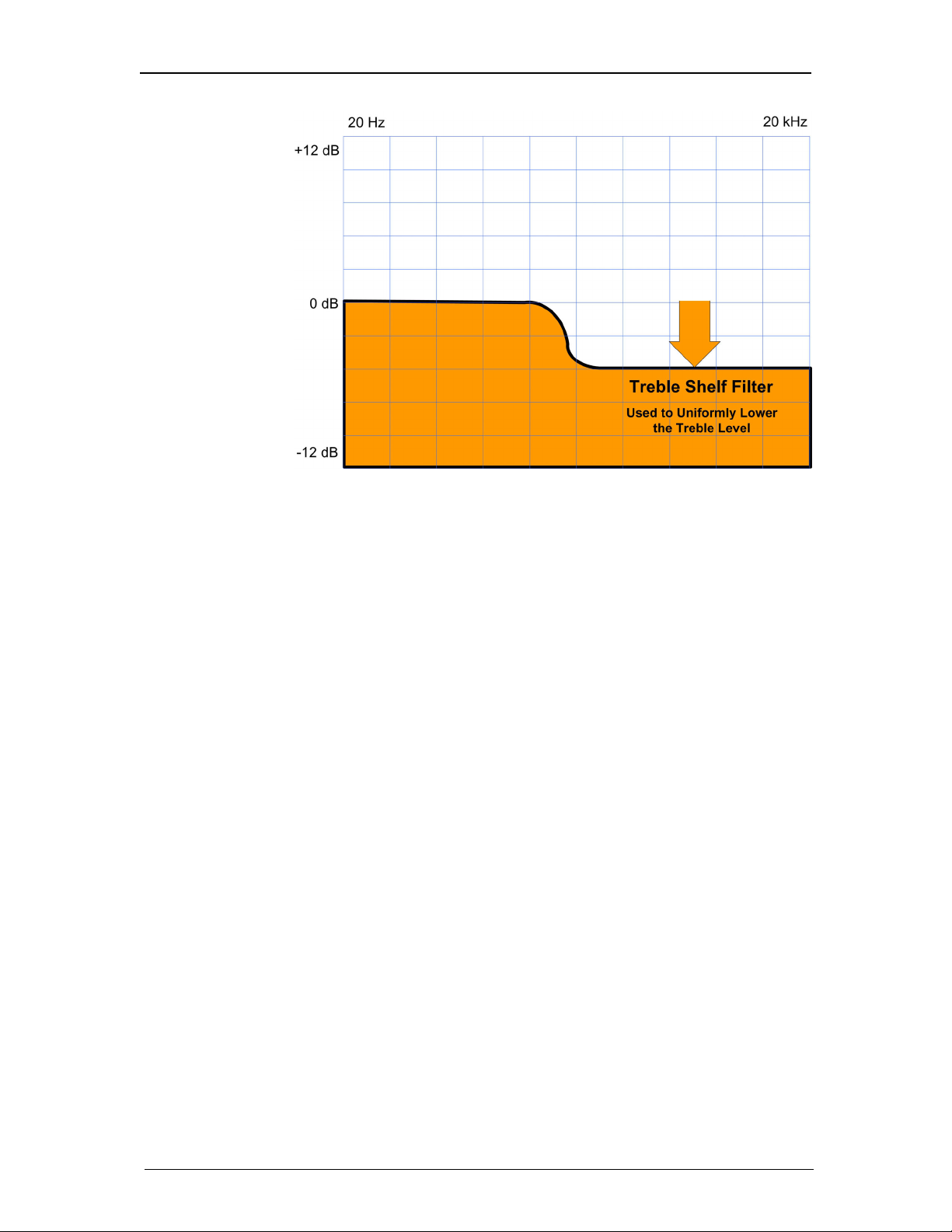

Peaking EQ

Peaking equalization (EQ) filters permit a precise amplitude adjustment

of a selectable range of frequencies. The range can vary from a small

slice of the frequency spectrum to a two-octave area. EQ filters allow a

fine adjustment to compensate for room acoustics, noise, and speaker

limitations. If, for example, the furniture or floor coverings absorb sound in the 4 kHz range, the frequency response of the system can be equalized by increasing the amplitude of the 4 kHz signals. If this

increase in amplitude closely matches the loss of the room, then the

frequency response is flattened, creating a more realistic sound.

In the following example, a parametric filter is used to adjust the

response by boosting the signal by +6 dB at 4 kHz.

The bandwidth (octave) range is also adjustable, which determines the

slope of the affected adjacent frequencies. In this case, the octave range

has been set to 0.6. This affects adjacent frequencies from about 2 kHz

to 8 kHz by gradually increasing the amplitude from 2 to 4 kHz and

gradually decreasing the amplitude from 4 kHz to 8 kHz.
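
The shape of such a boost can be explored numerically. The sketch below uses the widely published "Audio EQ Cookbook" biquad formulas for a peaking filter; this is an assumption chosen for illustration, not a description of how any particular equalizer is implemented, and the 48 kHz sample rate and function names are likewise assumed.

```python
import cmath
import math

def peaking_eq_coefficients(center_hz, gain_db, bandwidth_octaves,
                            sample_rate_hz=48_000.0):
    """Biquad coefficients (b, a) for a peaking EQ (Audio EQ Cookbook form)."""
    a_lin = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * center_hz / sample_rate_hz
    alpha = math.sin(w0) * math.sinh(
        math.log(2.0) / 2.0 * bandwidth_octaves * w0 / math.sin(w0))
    b = (1.0 + alpha * a_lin, -2.0 * math.cos(w0), 1.0 - alpha * a_lin)
    a = (1.0 + alpha / a_lin, -2.0 * math.cos(w0), 1.0 - alpha / a_lin)
    return b, a

def gain_db_at(freq_hz, b, a, sample_rate_hz=48_000.0):
    """Magnitude response of the biquad at freq_hz, in dB."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate_hz)  # z^-1
    h = (b[0] + b[1] * z1 + b[2] * z1 * z1) / (a[0] + a[1] * z1 + a[2] * z1 * z1)
    return 20.0 * math.log10(abs(h))

if __name__ == "__main__":
    b, a = peaking_eq_coefficients(4000.0, 6.0, 0.6)
    for f in (1000, 2000, 3000, 4000, 5000, 8000, 16000):
        print(f, round(gain_db_at(f, b, a), 2))
```

The gain reaches the full boost only at the center frequency and tapers toward 0 dB on either side; widening the octave range makes the taper more gradual.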

Peaking EQ Filter

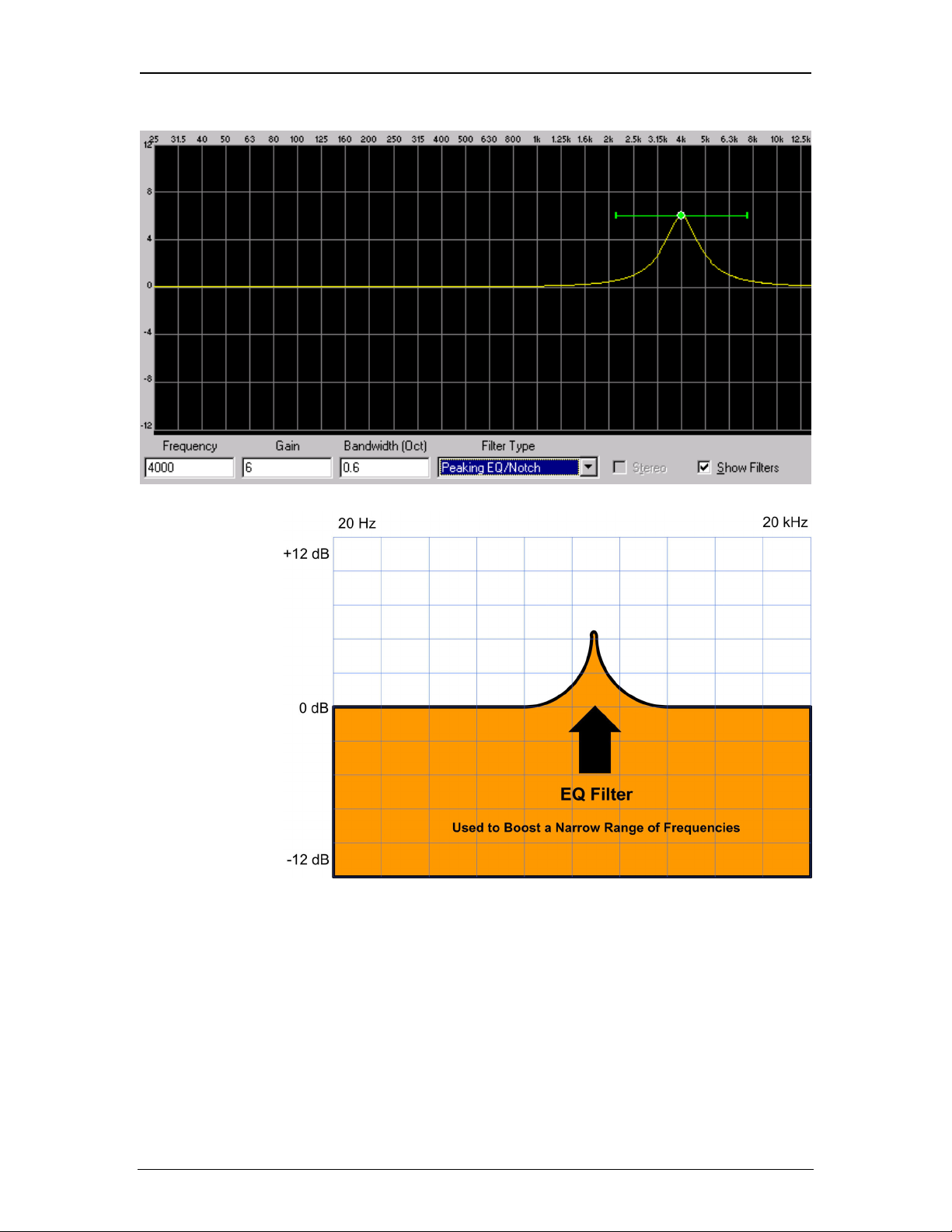

Notch Filters

Notch filters (also called band-reject filters) are used to remove an

unwanted frequency from a signal, while affecting all other frequencies

as little as possible.

An example of the use of a notch filter is with an audio program that

has been contaminated by 60 Hz powerline hum. A notch filter with a

center frequency of 60 Hz can remove the hum while having little

effect on the overall audio signal. A notch filter ideally suppresses a

single frequency component (the notch frequency) within the input

signal, or a narrow symmetric window around the notch frequency.
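
The 60 Hz hum example can be sketched with a biquad notch filter, again using the "Audio EQ Cookbook" formulas as an assumed implementation. The Q of 30, the 48 kHz sample rate, and the function names are illustrative choices; a higher Q gives a narrower notch.

```python
import cmath
import math

def notch_coefficients(notch_hz, q=30.0, sample_rate_hz=48_000.0):
    """Biquad notch coefficients (b, a) (Audio EQ Cookbook form)."""
    w0 = 2.0 * math.pi * notch_hz / sample_rate_hz
    alpha = math.sin(w0) / (2.0 * q)
    b = (1.0, -2.0 * math.cos(w0), 1.0)
    a = (1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha)
    return b, a

def magnitude_at(freq_hz, b, a, sample_rate_hz=48_000.0):
    """Linear magnitude of the biquad response at freq_hz (1.0 means unchanged)."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate_hz)  # z^-1
    return abs((b[0] + b[1] * z1 + b[2] * z1 * z1)
               / (a[0] + a[1] * z1 + a[2] * z1 * z1))

if __name__ == "__main__":
    b, a = notch_coefficients(60.0)
    for f in (30, 55, 60, 65, 120, 1000):
        print(f, round(magnitude_at(f, b, a), 4))
```

The response is essentially zero at the notch frequency and close to unity an octave away, which is the "little effect on the overall audio signal" described above.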

Notch Filter

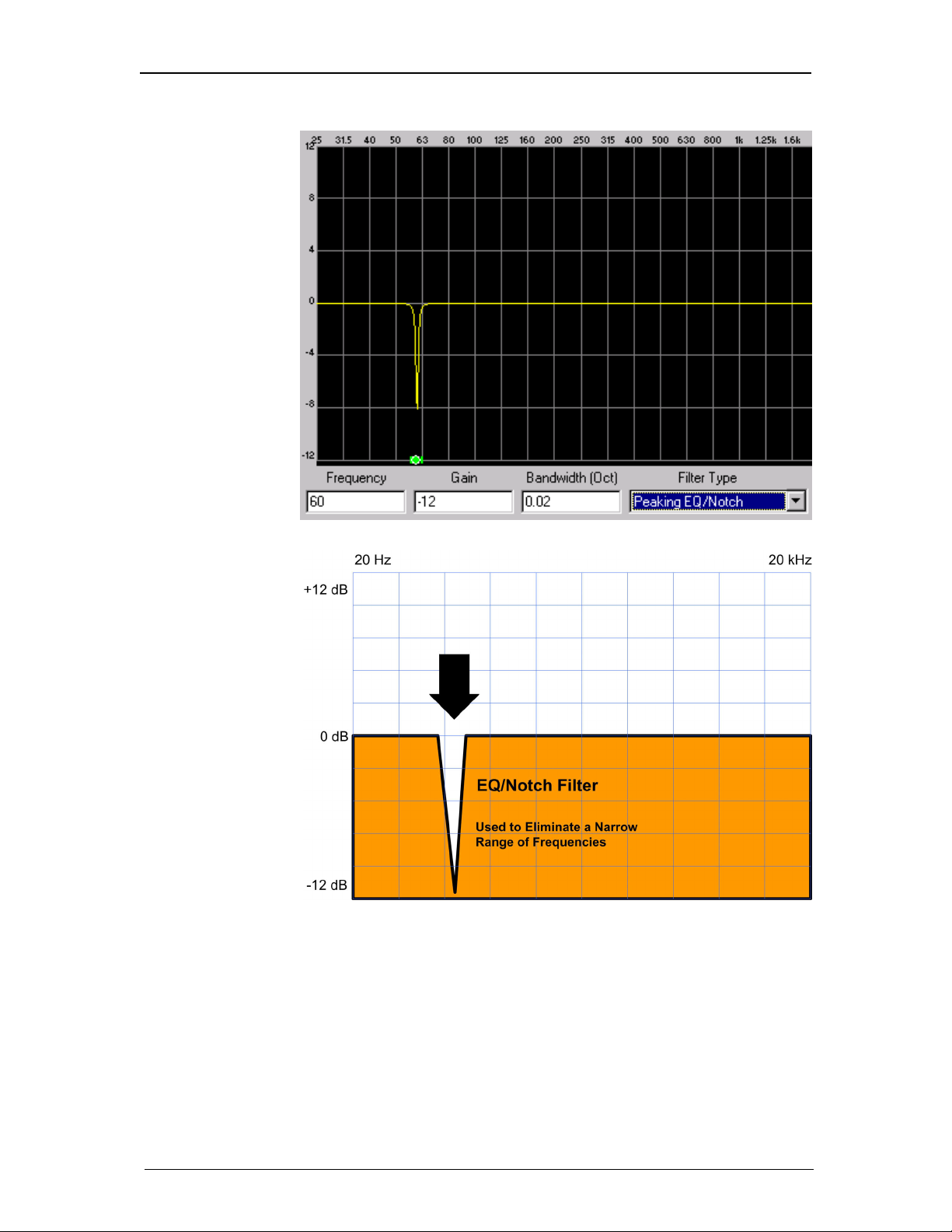

Parametric GUI

Parametric equalizers provide the user with an adjustable graphical user

interface (GUI) in addition to an area in which to enter numeric values.

The interface provides a center node to select the center value of the

frequency adjustment. The lines extending on either side of the center

node allow for a bandwidth adjustment (also called an octave range or

"Q"). In the following example of a parametric filter with center

frequency of 4 kHz, a range of frequencies above and below 4 kHz is also affected. By shortening the octave range, fewer adjacent

frequencies are affected. The area within the shaded portion of this

diagram corresponds to the frequencies that are affected by this

adjustment.

Example: 4kHz Parametric Filter
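
Bandwidth expressed in octaves and bandwidth expressed as "Q" describe the same thing in different units. The sketch below uses the conventional conversion, in which the band edges sit symmetrically (in octaves) about the center frequency and Q is the center frequency divided by the width between the edges. How a given equalizer's interface defines its octave range is not stated here, so treat the function names and the mapping as assumptions.

```python
import math

def octaves_to_q(bandwidth_octaves: float) -> float:
    """Convert a bandwidth in octaves to the equivalent Q."""
    ratio = 2.0 ** bandwidth_octaves
    return math.sqrt(ratio) / (ratio - 1.0)

def band_edges(center_hz: float, bandwidth_octaves: float) -> tuple:
    """Lower and upper edge frequencies, symmetric in octaves about the center."""
    half = 2.0 ** (bandwidth_octaves / 2.0)
    return center_hz / half, center_hz * half

if __name__ == "__main__":
    for octaves in (0.3, 0.6, 1.0, 2.0):
        low, high = band_edges(4000.0, octaves)
        print(octaves, round(octaves_to_q(octaves), 2), round(low), round(high))
```

A narrower octave range corresponds to a higher Q, and a wider range to a lower Q. These edges are nominal; the filter's response continues to taper beyond them.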

References

1. Information courtesy of ExtremeTech, www.extremetech.com

2. Information courtesy of The Sonic Research Studio, School of Communication, Simon Fraser University, www.sfu.ca/sonic-studio/index.html

3. Information courtesy of University of Southern California, www-classes.usc.edu/engr/ees/321/lect1/tsld021.htm

4. Information courtesy of Sound & Vision Engineering Department, Technical University of Gdansk, ul. Narutowicza 11/12, PL-80952 Gdansk, Poland, www.sound.eti.pg.gda.pl/SRS/tutorials.html

5. Information courtesy of Smyth, Mike. "An Overview Of The Coherent Acoustics Coding System." DTS Digital Surround White Paper.

6. Information courtesy of Smyth, S.M.F., Smith, W.P. et al. "DTS Coherent Acoustics: Delivering High Quality Multichannel Sound To The Consumer." 100th AES Convention, Preprint 4293, May 1996.

7. Information courtesy of Watkinson, John. The Art Of Digital Audio. Second Edition, Oxford: Focal Press, 1994.

8. Information courtesy of Barry Truax, editor, "The Handbook for Acoustic Ecology" Second Edition 1999, Simon Fraser University and Arc Publications.

9. Information courtesy of Dolby Laboratories, www.dolby.com/ht/Guide.HomeTheater

10. Information courtesy of AudioVideo101.com

11. Information courtesy of THX, Ltd, www.thx.com

12. Information courtesy of Yanman, www.yanman.com/HomeTheater

13. Information courtesy of Widescreen Review, http://db.widescreenreview.com/surround/articles

14. Information courtesy of DTS, www.dtsonline.com

Glossary

A

Acoustic

The physical transfer of energy from a

vibrating object to the surrounding medium

(acoustic radiation) and the physical transfer

of acoustic energy in a medium (sound

propagation).

Ambience

The background-sound quality of a listening

room, surround processor, and/or recording.

The ambience of a recording is what gives it

space and a sense of realism.

B

Bandwidth

Bandwidth is the difference between the

upper and the lower cutoff frequencies. (An

equalizer with cutoff frequencies of 200 and

2000 Hz has a bandwidth of 1,800 Hz.)

Bipole Speakers

Speakers sometimes used for surround channels to provide diffusion of sound. The speakers have two or more drivers, wired in phase with each other, pointing in opposite directions or away from the listening position.

C

Codec (coder/decoder)

A pair of processing elements that code a

signal prior to storage or transmission and

then correspondingly decode the signal.

Crossover

The crossover splits the frequency spectrum into bands, which are then delivered to the various speaker drivers. A crossover is required because one speaker driver cannot efficiently handle the full spectrum of sound. For example, a two-way crossover splits the frequency spectrum into two bands: the first from 20 Hz to 2 kHz and the second from 2 kHz to 20 kHz. The woofer reproduces the band from 20 Hz to 2 kHz and the tweeter takes over above 2 kHz. There are two types of crossovers: active and passive. Active crossovers are adjustable and require a power source. Passive crossovers are not adjustable and do not require power to operate.
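To make the two-way example concrete, the sketch below models how much signal reaches the woofer and the tweeter at a few frequencies for a 2 kHz crossover. It assumes simple first-order low-pass and high-pass sections, which is an illustrative simplification: practical crossovers often use steeper slopes, and the function name is hypothetical.

```python
import math


def first_order_gains_db(freq_hz, crossover_hz):
    """Return (woofer_db, tweeter_db) for a first-order two-way crossover.

    Assumes simple first-order low-pass and high-pass sections; this is an
    illustrative model, not the filter used by any particular product.
    """
    ratio = freq_hz / crossover_hz
    low_pass = 1 / math.sqrt(1 + ratio ** 2)        # feeds the woofer
    high_pass = ratio / math.sqrt(1 + ratio ** 2)   # feeds the tweeter
    return 20 * math.log10(low_pass), 20 * math.log10(high_pass)


for f in (200, 2000, 20000):
    woofer, tweeter = first_order_gains_db(f, 2000)
    print(f"{f:>6} Hz: woofer {woofer:6.1f} dB, tweeter {tweeter:6.1f} dB")
```

In this model both drivers receive the signal about 3 dB down at the crossover frequency, and each driver's share falls away gradually on the far side of it rather than stopping abruptly.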

D

dB (Decibel)

Volume is measured in units called decibels (dB). A three-decibel (3 dB) change in sound pressure or volume is perceptible to human hearing. A 10 dB increase is perceived as roughly a doubling of loudness.
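Because the decibel is a logarithmic ratio, a change in dB corresponds to multiplying the power or the sound pressure by a fixed factor. The sketch below uses the standard definitions (10 times the base-10 logarithm for power ratios, 20 times for pressure ratios); the function names are illustrative only.

```python
import math


def power_ratio(db):
    """Power (intensity) ratio corresponding to a change of `db` decibels."""
    return 10 ** (db / 10)


def pressure_ratio(db):
    """Sound-pressure (amplitude) ratio corresponding to `db` decibels."""
    return 10 ** (db / 20)


def to_db(pressure_a, pressure_b):
    """Level difference in dB between two sound pressures."""
    return 20 * math.log10(pressure_a / pressure_b)


for change in (3, 6, 10):
    print(f"+{change} dB: power x{power_ratio(change):.2f}, "
          f"pressure x{pressure_ratio(change):.2f}")
```

Doubling the power corresponds to about 3 dB, and doubling the sound pressure corresponds to about 6 dB.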

Dipole Speakers

Speakers sometimes used for surround channels to provide diffusion of sound. The speakers have two or more drivers, wired out of phase with each other, pointing in opposite directions or away from the listening position.

Direct Stream Digital (DSD)

The modulation coding method used in the

Super Audio Compact Disc (SACD) format.

DTS

Digital Theater Systems. A digital surround sound format that uses the Coherent Acoustics coding method.

F

Fast-Fourier Transform (FFT)

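An efficient algorithm for computing the Fourier transform of a sampled signal. It relies on the fact that any complex wave signal (like an audio signal) can be mathematically represented as a sum of sinusoidal waves. Some manufacturers have developed products using several FFT processes.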
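The idea can be sketched with a small radix-2 FFT written in plain Python. This is a teaching sketch rather than the processing used in any product: it assumes the number of samples is a power of two, and the test signal (a 1 kHz tone plus a weaker 3 kHz tone sampled at 8 kHz) is made up for the example.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return list(samples)
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


# Test signal: a 1 kHz tone plus a weaker 3 kHz tone, sampled at 8 kHz.
rate, n = 8000, 64
signal = [math.sin(2 * math.pi * 1000 * t / rate)
          + 0.5 * math.sin(2 * math.pi * 3000 * t / rate) for t in range(n)]

spectrum = fft(signal)
# Bin k corresponds to frequency k * rate / n; only the lower half is unique.
for k in range(n // 2):
    magnitude = abs(spectrum[k]) * 2 / n
    if magnitude > 0.1:
        print(f"{k * rate / n:6.0f} Hz  amplitude {magnitude:.2f}")
```

The transform recovers the two sinusoids that make up the signal, along with their relative amplitudes.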

H

Haas (or precedence) effect

A psychoacoustic phenomenon in which the

ear localizes sound based on the direction of

the first arriving sound.

Harmonic Distortion

The most common form of audio distortion. It shows up as additional unwanted signals at multiples of the original frequency. Thus, a 1 kHz tone may have second-order harmonic distortion at 2 kHz, third-order at 3 kHz, etc. These can continue upward beyond the seventh or eighth order. The combined level of all these harmonics, expressed as a percentage of the original signal, is called total harmonic distortion (THD) and is commonly used in audio test reports. However, different components generate different ratios of odd and even orders, making some sound better than others even though their THD measurements might be the same.
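A short sketch of the arithmetic, assuming the commonly used root-sum-square definition of THD (the harmonic levels are combined as the square root of the sum of their squares, then divided by the level of the fundamental). The measurement values are hypothetical.

```python
import math


def thd_percent(fundamental, harmonics):
    """Total harmonic distortion as a percentage of the fundamental.

    fundamental: RMS amplitude of the original tone (e.g. at 1 kHz)
    harmonics:   RMS amplitudes of the 2nd, 3rd, ... order harmonics
    """
    return 100 * math.sqrt(sum(h ** 2 for h in harmonics)) / fundamental


# Hypothetical measurements: two components with the same THD but a
# different balance between the 2nd-order (even) and 3rd-order (odd) harmonic.
stronger_second = thd_percent(1.0, [0.004, 0.003])
stronger_third = thd_percent(1.0, [0.003, 0.004])
print(f"{stronger_second:.2f}%  {stronger_third:.2f}%")
```

Both hypothetical components report the same THD figure even though their harmonic content differs, which is why a single THD number does not fully describe how a component sounds.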

L

LFE

Low Frequency Effects.

Lossless

A term describing a data compression algorithm that retains all the information in the data, allowing it to be recovered perfectly by decompression.

Lossy

A term describing a data compression algorithm that reduces the amount of information in the data, rather than just the number of bits used to represent that information. The lost information is usually removed because it is subjectively less important to the quality of the data or because it can be recovered by interpolation from the remaining data.

LPCM (linear pulse code modulation)

A means to digitally represent signals in which the amplitude of a signal is coded as a binary number.

M

Masking

Under ordinary conditions, the process by which the threshold of hearing of one sound is raised by the presence of another. In both digital video and digital audio, a technique that allows a system to delete superfluous (inaudible or invisible) artifacts from a data stream by means of data reduction or data compression, enabling the system to transmit or store wide-bandwidth information within a much smaller bandwidth. Four applications of masking are Dolby AC-3 Digital Surround Sound, MPEG video, DCC cassettes, and the MiniDisc.

Matrixing

A technique in which additional signals can be conveyed by altering the phase relationships of the signals.

MPEG (Moving Picture Experts Group)

A committee developing audio and video standards.

N

Nyquist Sampling Theorem

Mathematical theory that a signal can be completely represented by samples taken at a frequency that is at least twice the highest input signal frequency.

O

Octave

1. In music, an octave is a doubling or halving of frequency, with the bottom octave usually given as 20 to 40 Hz.

2. In parametric equalizers, it is the range of frequencies above and below a selected center frequency that is affected by the change in amplitude.
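Since an octave is a doubling of frequency, the number of octaves between two frequencies is the base-2 logarithm of their ratio. The sketch below counts the octaves in a nominal 20 Hz to 20 kHz range and lists the octave bands starting from the bottom octave of 20 to 40 Hz. The function names and the 20 kHz upper limit are assumptions made for the example.

```python
import math


def octaves_between(low_hz, high_hz):
    """Number of octaves (doublings of frequency) from low_hz to high_hz."""
    return math.log2(high_hz / low_hz)


def octave_bands(start_hz, stop_hz):
    """List (low, high) pairs, doubling from start_hz until stop_hz is reached."""
    bands = []
    low = start_hz
    while low < stop_hz:
        bands.append((low, min(low * 2, stop_hz)))
        low *= 2
    return bands


print(f"20 Hz to 20 kHz spans about {octaves_between(20, 20000):.1f} octaves")
for low, high in octave_bands(20, 20000):
    print(f"{low:>6} to {high:>6} Hz")
```

Each successive octave covers twice as many Hz as the one below it; the last band in the list is cut short at the chosen upper limit.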

P

Parametric Equalizer

Type of equalizer that allows adjustment of

frequency response with complete choice of

center frequency, amplitude, and bandwidth.

PCM

Pulse Code Modulation. A method of

transferring analog information into digital

signals by representing analog waveforms

with streams of digital bits.
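A minimal sketch of the process, assuming a 16-bit word length and a 44.1 kHz sampling rate (the values used on compact disc). The analog waveform is measured at regular intervals and each measurement is rounded to the nearest integer code. The function names and the 1 kHz test tone are illustrative only.

```python
import math


def pcm_encode(analog, sample_rate, duration_s, bits=16):
    """Sample analog(t) at sample_rate and quantize to signed integers."""
    full_scale = 2 ** (bits - 1) - 1          # 32767 for 16-bit audio
    count = int(sample_rate * duration_s)
    return [round(analog(i / sample_rate) * full_scale) for i in range(count)]


def tone(t):
    """A 1 kHz sine wave with amplitude between -1.0 and 1.0."""
    return math.sin(2 * math.pi * 1000 * t)


# 44.1 kHz is comfortably more than twice the 1 kHz tone frequency.
samples = pcm_encode(tone, 44100, 0.001)
print(samples[:6])
print("as 16-bit binary:", [format(s & 0xFFFF, "016b") for s in samples[:3]])
```

The sampling rate here satisfies the Nyquist Sampling Theorem described earlier in this glossary, since it is well over twice the highest frequency in the test signal.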

Perceptual Coding

A coding method that exploits limitations in

human hearing acuity to decrease the bit rate

of the audio bitstream.

Phantom Image

A virtual sound source detected by a listener

but not actually produced by a speaker at that

location.

Pink Noise

Random noise (hiss) that has an equal amount of energy in each octave of bandwidth. Compare this to white noise, which has an equal amount of energy per Hz (cycle per second) of bandwidth. Pink noise and white noise are common test signals; pink noise is used for setting and balancing a surround system and, frequently, to adjust equalizers for a flat frequency response.
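The difference between the two noise types can be checked with a little arithmetic. The sketch below assumes idealized noise: white noise with constant energy per Hz, and pink noise whose energy per Hz falls in proportion to 1/f. The function names and the unit scale factors are hypothetical.

```python
import math


def white_band_energy(low_hz, high_hz, density=1.0):
    """Energy in a band when energy per Hz is constant (white noise)."""
    return density * (high_hz - low_hz)


def pink_band_energy(low_hz, high_hz, scale=1.0):
    """Energy in a band when energy per Hz falls as 1/f (pink noise).

    The integral of scale/f from low_hz to high_hz is scale * ln(high/low).
    """
    return scale * math.log(high_hz / low_hz)


for low in (20, 40, 80, 160):
    high = low * 2
    print(f"{low:>4}-{high:<4} Hz  white {white_band_energy(low, high):6.1f}"
          f"  pink {pink_band_energy(low, high):.3f}")
```

The pink noise figure is the same for every octave, while the white noise figure doubles with each octave because every higher octave spans twice as many Hz.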

Pinna

The outer part of the ear.

S

SACD (Super Audio Compact Disc)

A standard for high-density storage of two-channel CD and two-channel and multichannel SACD audio recordings.

SDDS (Sony Dynamic Digital Sound)

A perceptual coding method used in some

theatrical motion picture releases.

Signal to Noise

(S/N ratio) The difference, in dB, between the noise floor of a playback component or sound recording and the loudest level it can achieve with inaudible distortion. The measurement is sometimes A-weighted because the ear is more sensitive to particular frequencies.
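As a sketch of the calculation, the ratio can be computed from two RMS levels using the standard 20 times base-10 logarithm formula for voltages or pressures. The component figures below are hypothetical, and no A-weighting is applied.

```python
import math


def snr_db(max_signal_rms, noise_floor_rms):
    """Signal-to-noise ratio in dB from two RMS voltage (or pressure) levels."""
    return 20 * math.log10(max_signal_rms / noise_floor_rms)


# Hypothetical component: 2 V RMS maximum output, 20 microvolt noise floor.
print(f"S/N = {snr_db(2.0, 20e-6):.0f} dB")
```

A maximum level 100,000 times the noise floor, as in this example, corresponds to a ratio of 100 dB.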

Soundscape

The illusion of three-dimensional space

created by a surround system.

SPDIF

SPDIF is an acronym for Sony Philips Digital Interface and is sometimes abbreviated S/PDIF or S-PDIF. SPDIF is a digital audio interface standard developed jointly by Sony and Philips that enables direct digital interconnections between separate digital audio components. Physically, the connection between SPDIF-compatible units can be made optically, using optical fiber and "TosLink" modules, or electrically, using coaxial cable and RCA-type connectors.

Spectral Envelope

As used in the Dolby Digital encoder, a bit

allocation routine to determine the number of

bits needed.

Standing Wave

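A low-frequency distortion created when a frequency is reproduced whose wavelength has a special relationship to the dimensions of the room in which it is produced (for example, when the distance between two parallel walls equals a whole number of half wavelengths).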
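The frequencies at which this happens can be estimated from the room dimensions. The sketch below covers only the simplest case, standing waves between one pair of parallel walls (axial modes), and assumes a speed of sound of about 343 m/s in air at room temperature. The room dimensions and function name are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0   # assumed approximate value in air at room temperature


def axial_modes(room_dimension_m, count=3):
    """First `count` axial standing-wave frequencies for one room dimension.

    A standing wave forms between two parallel walls when the distance
    between them equals a whole number of half wavelengths:
        f_n = n * c / (2 * L)
    """
    return [n * SPEED_OF_SOUND_M_S / (2 * room_dimension_m)
            for n in range(1, count + 1)]


# Hypothetical room: 6 m long, 4 m wide, 2.5 m high.
for name, size in (("length", 6.0), ("width", 4.0), ("height", 2.5)):
    modes = ", ".join(f"{f:.1f}" for f in axial_modes(size))
    print(f"{name:>6} ({size} m): {modes} Hz")
```

Larger dimensions produce lower standing-wave frequencies, which is why the effect is most noticeable in the bass range in typical rooms.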

Subband

A relatively narrow band of audio

frequencies used by a perceptual codec to

approximate the critical bands of the human

ear.

Sweet-Spot

The sweet-spot is the prime listening position

where an audio system is optimized, and is

where optimal sound quality is encountered.

Depending on the system, the sweet-spot may

be large enough to accommodate multiple

listeners.

T

Toslink

A type of fiber-optic cable connection that

uses light beams to transmit digital

information from digital audio components.

Most digital-to-analog converters and digital

surround sound processors (Dolby Digital

and DTS processors) can connect to source

components using Toslink cables and