Page 1

Compaq AlphaServer SC RMS

Reference Manual

Quadrics Supercomputers World Ltd.Document Version 7 - June 22nd 2001 - AA-RLAZB-TE

Page 2

The information supplied in this document is believed to be correct at the time of publication, but no liability is assumed for its use or for the infringements of the rights of others

resulting from its use. No license or other rights are granted in respect of any rights

owned by any of the organizations mentioned herein.

This document may not be copied, in whole or in part, without the prior written consent

of Quadrics Supercomputers World Ltd.

Copyright 1998,1999,2000,2001 Quadrics Supercomputers World Ltd.

The specifications listed in this document are subject to change without notice.

Compaq, the Compaq logo, Alpha, AlphaServer, and Tru64 are trademarks of Compaq

Information Technologies Group, L.P. in the United States and other countries.

UNIX is a registered trademark of The Open Group in the U.S. and other countries.

TotalView and Etnus are registered trademarks of Etnus LLC.

All other product names mentioned herein may be trademarks of their respective companies.

The Quadrics Supercomputers World Ltd. (Quadrics) web site can be found at:

http://www.quadrics.com/

Quadrics’ address is:

One Bridewell Street

Bristol

BS1 2AA

UK

Tel: +44-(0)117-9075375

Fax: +44-(0)117-9075395

Circulation Control: None

Document Revision History

Revision Date Author Remarks

1 January 1999 HRA Initial Draft

2 Feb 2000 DR Updated Draft

3 Apr 2000 DR Draft changes for Product Release

4 Jun 2000 RMC Corrections for Product Release

5 Jan 2001 HRA Updates for Version 2

6 June 2001 DR Further Version 2 changes

7 June 2001 DR AlphaServer SC V2 Product Release

Page 3

Contents

1 Introduction 1-1

1.1 Scope of Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

1.2 Audience . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

1.3 Using this Manual . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-1

1.4 Related Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-3

1.5 Location of Online Documentation . . . . . . . . . . . . . . . . . . . 1-3

1.6 Reader’s Comments . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-3

1.7 Conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1-3

2 Overview of RMS 2-1

2.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-1

2.2 The System Architecture . . . . . . . . . . . . . . . . . . . . . . . . . 2-1

2.2.1 Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-1

2.3 The Role of the RMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-3

2.3.1 The Structure of the RMS . . . . . . . . . . . . . . . . . . . . . . 2-4

2.3.2 The RMS Daemons . . . . . . . . . . . . . . . . . . . . . . . . . . 2-4

2.3.3 The RMS Commands . . . . . . . . . . . . . . . . . . . . . . . . . 2-5

2.3.4 The RMS Database . . . . . . . . . . . . . . . . . . . . . . . . . . 2-6

2.4 RMS Management Functions . . . . . . . . . . . . . . . . . . . . . . 2-7

2.4.1 Allocating Resources . . . . . . . . . . . . . . . . . . . . . . . . . 2-7

2.4.2 Scheduling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-8

2.4.3 Access Control and Accounting . . . . . . . . . . . . . . . . . . . 2-9

Contents i

Page 4

2.4.4 RMS Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . 2-10

3 Parallel Programs Under RMS 3-1

3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-1

3.2 Resource Requests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3-2

3.3 Loading and Running Programs . . . . . . . . . . . . . . . . . . . . . 3-3

4 RMS Daemons 4-1

4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-1

4.1.1 Startup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

4.1.2 Log Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

4.1.3 Daemon Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

4.2 The Database Manager . . . . . . . . . . . . . . . . . . . . . . . . . . 4-2

4.3 The Machine Manager . . . . . . . . . . . . . . . . . . . . . . . . . . 4-3

4.3.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-3

4.4 The Partition Manager . . . . . . . . . . . . . . . . . . . . . . . . . . 4-3

4.4.1 Partition Startup . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-4

4.4.2 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-4

ii Contents

4.5 The Switch Network Manager . . . . . . . . . . . . . . . . . . . . . . 4-5

4.5.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-5

4.6 The Transaction Log Manager . . . . . . . . . . . . . . . . . . . . . . 4-5

4.6.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-6

4.7 The Event Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-6

4.7.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-6

4.8 The Process Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-7

4.8.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-7

4.9 The RMS Daemon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4-7

4.9.1 Interaction with the Database . . . . . . . . . . . . . . . . . . . 4-8

5 RMS Commands 5-1

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-1

allocate(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-3

Page 5

nodestatus(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-8

msqladmin(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-9

prun(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-11

rcontrol(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-20

rinfo(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-32

rmsbuild(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-35

rmsctl(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-37

rmsexec(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-39

rmshost(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-41

rmsquery(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-42

rmstbladm(1) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5-44

6 Access Control, Usage Limits and Accounting 6-1

6.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-1

6.2 Users and Projects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-1

6.3 Access Controls . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-2

6.3.1 Access Controls Example . . . . . . . . . . . . . . . . . . . . . . 6-3

6.4 How Access Controls are Applied . . . . . . . . . . . . . . . . . . . . 6-4

6.4.1 Memory Limit Rules . . . . . . . . . . . . . . . . . . . . . . . . . 6-4

6.4.2 Priority Rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-5

6.4.3 CPU Usage Limit Rules . . . . . . . . . . . . . . . . . . . . . . . 6-5

6.5 Accounting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6-6

7 RMS Scheduling 7-1

7.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-1

7.2 Scheduling Policies . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-1

7.3 Scheduling Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . 7-2

7.4 What Happens When a Request is Received . . . . . . . . . . . . . . 7-3

7.4.1 Memory Limits . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-5

7.4.2 Swap Space . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-5

7.4.3 Time Slicing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-6

7.4.4 Suspend and Resume . . . . . . . . . . . . . . . . . . . . . . . . 7-6

Contents iii

Page 6

7.4.5 Idle Time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7-6

8 Event Handling 8-1

8.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8-1

8.1.1 Posting Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8-2

8.1.2 Waiting on Events . . . . . . . . . . . . . . . . . . . . . . . . . . 8-2

8.2 Event Handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8-3

8.3 List of Events Generated . . . . . . . . . . . . . . . . . . . . . . . . . 8-4

8.3.1 Extending the RMS Event Handling Mechanism . . . . . . . . 8-6

9 Setting up RMS 9-1

9.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-1

9.2 Installation Planning . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-1

9.2.1 Node Names . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-2

9.3 Setting up RMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-2

9.3.1 Starting RMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-2

9.3.2 Initial Setup with One Partition . . . . . . . . . . . . . . . . . . 9-3

9.3.3 Simple Day/Night Setup . . . . . . . . . . . . . . . . . . . . . . . 9-4

iv Contents

9.4 Day-to-Day Operation . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-5

9.4.1 Periodic Shift Changes . . . . . . . . . . . . . . . . . . . . . . . . 9-5

9.4.2 Backing Up the Database . . . . . . . . . . . . . . . . . . . . . . 9-5

9.4.3 Summarizing Accounting Data . . . . . . . . . . . . . . . . . . . 9-6

9.4.4 Archiving Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-6

9.4.5 Database Maintenance . . . . . . . . . . . . . . . . . . . . . . . . 9-7

9.4.6 Configuring Nodes Out . . . . . . . . . . . . . . . . . . . . . . . 9-9

9.5 Local Customization of RMS . . . . . . . . . . . . . . . . . . . . . . . 9-10

9.5.1 Partition Startup . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-10

9.5.2 Core File Handling . . . . . . . . . . . . . . . . . . . . . . . . . . 9-10

9.5.3 Event Handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-11

9.5.4 Switch Manager Configuration . . . . . . . . . . . . . . . . . . . 9-11

9.6 Log Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9-12

Page 7

10 The RMS Database 10-1

10.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-1

10.1.1 General Information about the Tables . . . . . . . . . . . . . . . 10-1

10.1.2 Access to the Database . . . . . . . . . . . . . . . . . . . . . . . . 10-2

10.1.3 Categories of Table . . . . . . . . . . . . . . . . . . . . . . . . . . 10-2

10.2 Listing of Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-4

10.2.1 The Access Controls Table . . . . . . . . . . . . . . . . . . . . . . 10-4

10.2.2 The Accounting Statistics Table . . . . . . . . . . . . . . . . . . 10-4

10.2.3 The Attributes Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-6

10.2.4 The Elans Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-8

10.2.5 The Elites Table . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-9

10.2.6 The Events Table . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-9

10.2.7 The Event Handlers Table . . . . . . . . . . . . . . . . . . . . . . 10-10

10.2.8 The Fields Table . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-11

10.2.9 The Installed Components Table . . . . . . . . . . . . . . . . . . 10-12

10.2.10 The Jobs Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-12

10.2.11 The Link Errors Table . . . . . . . . . . . . . . . . . . . . . . . . 10-13

10.2.12 The Modules Table . . . . . . . . . . . . . . . . . . . . . . . . . . 10-14

10.2.13 The Module Types Table . . . . . . . . . . . . . . . . . . . . . . . 10-15

10.2.14 The Nodes Table . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-15

10.2.15 The Node Statistics Table . . . . . . . . . . . . . . . . . . . . . . 10-16

10.2.16 The Partitions Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-17

10.2.17 The Projects Table . . . . . . . . . . . . . . . . . . . . . . . . . . 10-19

10.2.18 The Resources Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-19

10.2.19 The Servers Table . . . . . . . . . . . . . . . . . . . . . . . . . . 10-20

10.2.20 The Services Table . . . . . . . . . . . . . . . . . . . . . . . . . . 10-21

10.2.21 The Software Products Table . . . . . . . . . . . . . . . . . . . . 10-22

10.2.22 The Switch Boards Table . . . . . . . . . . . . . . . . . . . . . . 10-23

10.2.23 The Transactions Table . . . . . . . . . . . . . . . . . . . . . . . 10-23

10.2.24 The Users Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-24

Contents v

Page 8

A Compaq AlphaServer SC Interconnect Terms A-1

A.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-1

A.2 Link States . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-4

A.3 Link Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . A-4

B RMS Status Values B-1

B.1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-1

B.2 Generic Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

B.3 Job Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

B.4 Link Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

B.5 Module Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

B.6 Node Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-4

B.7 Partition Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . B-5

B.8 Resource Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . B-5

B.9 Transaction Status Values . . . . . . . . . . . . . . . . . . . . . . . . B-6

C RMS Kernel Module C-1

C.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-1

vi Contents

C.2 Capabilities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-1

C.3 System Call Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . C-2

rms_setcorepath(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-3

rms_getcorepath(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-3

rms_prgcreate(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . C-4

rms_prgdestroy(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-4

rms_prgids(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-6

rms_prginfo(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-6

rms_getprgid(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-6

rms_prgsuspend(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-8

rms_prgresume(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-8

rms_prgsignal(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . C-8

rms_prgaddcap(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-10

rms_setcap(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-10

Page 9

rms_ncaps(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-12

rms_getcap(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . C-12

rms_prggetstats(3) . . . . . . . . . . . . . . . . . . . . . . . . . . C-13

D RMS Application Interface D-1

D.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-1

rms_allocateResource(3) . . . . . . . . . . . . . . . . . . . . . . . D-2

rms_deallocateResource(3) . . . . . . . . . . . . . . . . . . . . . D-2

rms_run(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . D-4

rms_suspendResource(3) . . . . . . . . . . . . . . . . . . . . . . D-6

rms_resumeResource(3) . . . . . . . . . . . . . . . . . . . . . . . D-6

rms_killResource(3) . . . . . . . . . . . . . . . . . . . . . . . . . D-6

rms_defaultPartition(3) . . . . . . . . . . . . . . . . . . . . . . . D-7

rms_numCpus(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . D-7

rms_numNodes(3) . . . . . . . . . . . . . . . . . . . . . . . . . . D-7

rms_freeCpus(3) . . . . . . . . . . . . . . . . . . . . . . . . . . . D-7

E Accounting Summary Script E-1

E.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-1

E.2 Command Line Interface . . . . . . . . . . . . . . . . . . . . . . . . . E-1

E.3 Example Output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-2

E.4 Listing of the Script . . . . . . . . . . . . . . . . . . . . . . . . . . . . E-3

Glossary Glossary-1

Index Index-1

Contents vii

Page 10

Page 11

List of Figures

2.1 A Network of Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-2

2.2 High Availability RMS Configuration . . . . . . . . . . . . . . . . . . . . 2-3

2.3 The Database . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-6

2.4 Partitioning a System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-7

2.5 Distribution of Processes . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-8

2.6 Preemption of Low Priority Jobs . . . . . . . . . . . . . . . . . . . . . . . 2-9

2.7 Two Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2-10

3.1 Distribution of Parallel Processes . . . . . . . . . . . . . . . . . . . . . . 3-2

3.2 Loading and Running a Parallel Program . . . . . . . . . . . . . . . . . 3-3

A.1 A 2-Stage, 16-Node, Switch Network . . . . . . . . . . . . . . . . . . . . A-2

A.2 A 3-Stage, 64-Node, Switch Network . . . . . . . . . . . . . . . . . . . . A-2

A.3 A 3-Stage, 128-Node, Switch Network . . . . . . . . . . . . . . . . . . . A-3

List of Figures i

Page 12

Page 13

List of Tables

10.1 Access Controls Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-4

10.2 Accounting Statistics Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-5

10.3 Machine Attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-6

10.4 Performance Statistics Attributes . . . . . . . . . . . . . . . . . . . . . . 10-7

10.5 Server Attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-7

10.6 Scheduling Attributes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-8

10.7 Elans Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-8

10.8 Elites Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-9

10.9 Events Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-9

10.10 Example of Status Changes . . . . . . . . . . . . . . . . . . . . . . . . . 10-10

10.11 Event Handlers Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-10

10.12 Fields Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-11

10.13 Type Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-11

10.14 Installed Components Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-12

10.15 Jobs Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-12

10.16 Link Errors Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-13

10.17 Modules Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-14

10.18 Module Types Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-15

10.19 Valid Module Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-15

10.20 Nodes Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-16

10.21 Node Statistics Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-17

List of Tables i

Page 14

10.22 Partitions Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-18

10.23 Projects Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-19

10.24 Resources Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-19

10.25 Servers Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-20

10.26 Services Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-21

10.27 Entries in the Services Table . . . . . . . . . . . . . . . . . . . . . . . . . 10-22

10.28 Software Products Table . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-22

10.29 Component Attribute Values . . . . . . . . . . . . . . . . . . . . . . . . . 10-22

10.30 Switch Boards Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-23

10.31 Transaction Log Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-23

10.32 Entry in the Transactions Table . . . . . . . . . . . . . . . . . . . . . . . 10-24

10.33 Users Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10-24

A.1 Switch Network Parameters . . . . . . . . . . . . . . . . . . . . . . . . . A-3

B.1 Job Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-2

B.2 Link Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

B.3 Module Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-3

B.4 Node Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-4

B.5 Run Level Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . B-5

B.6 Partition Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-5

B.7 Resource Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . B-6

B.8 Transaction Status Values . . . . . . . . . . . . . . . . . . . . . . . . . . B-6

ii List of Tables

Page 15

1.1 Scope of Manual

This manual describes the Resource Management System (RMS). The manual’s purpose

is to provide a technical overview of the RMS system, its functionality and

programmable interfaces. It covers the RMS daemons, client applications, the RMS

database, the system call interface to the RMS kernel module and the application

program interface to the RMS database.

1.2 Audience

This manual is intended for system administrators and developers. It provides a

detailed technical description of the operation and features of RMS and describes the

programming interface between RMS and third-party systems.

1

Introduction

The manual assumes that the reader is familiar with the following:

R

• UNIX

• C programming language

operating system including shell scripts

1.3 Using this Manual

This manual contains ten chapters and five appendices. The contents of these are as

follows:

1-1

Page 16

Related Information

Chapter 1 (Introduction)

Chapter 2 (Overview of RMS)

Chapter 3 (Parallel Programs Under RMS)

Chapter 4 (RMS Daemons)

Chapter 5 (RMS Commands)

Chapter 6 (Access Control, Usage Limits and Accounting)

Chapter 7 (RMS Scheduling)

explains the layout of the manual and the conventions used to present

information

overviews the functions of the RMS and introduces its components

shows how parallel programs are executed under RMS

describes the functionality of the RMS daemons

describes the RMS commands

explains RMS access controls, usage limits and accounting

describes how RMS schedules parallel jobs

Chapter 8 (Event Handling)

describes RMS event handling

Chapter 9 (Setting up RMS)

explains how to set up RMS

Chapter 10 (The RMS Database)

presents the structure of tables in the RMS database

Appendix A (Compaq AlphaServer SC Interconnect Terms)

defines terms relating to support for QsNet in RMS

Appendix B (RMS Status Values)

lists the status values of RMS objects

Appendix C (RMS Kernel Module)

describes the RMS kernel module and its system call interface

Appendix D (RMS Application Interface)

describes the RMS application interface

Appendix E (Accounting Summary Script)

contains an example of producing accounting information

1-2 Introduction

Page 17

1.4 Related Information

The following manuals provide additional information about the RMS from the point of

view of either the system administrator or the user:

•

Compaq AlphaServer SC User Guide

• Compaq AlphaServer SC System Administration Guide

1.5 Location of Online Documentation

Online documentation in HTML format is installed in the directory

/usr/opt/rms/docs/html and can be accessed from a browser at

http://rmshost:8081/html/index.html. PostScript and PDF versions of the

documents are in /usr/opt/rms/docs. Please consult your system administrator if

you have difficulty accessing the documentation. On-line documentation can also be

found on the AlphaServer SC System Software CD-ROM.

New versions of this and other Quadrics documentation can be found on the Quadrics

web site http://www.quadrics.com.

Further information on AlphaServer SC can be found on the Compaq website

http://www.compaq.com/hpc.

Conventions

1.6 Reader’s Comments

If you would like to make any comments on this or any other AlphaServer SC manual

please contact your local Compaq support centre.

1.7 Conventions

The following typographical conventions have been used in this document:

monospace type

Monospace type denotes literal text. This is used for command

descriptions, file names and examples of output.

bold monospace type

Bold monospace type indicates text that the user enters when

contrasted with on-screen computer output.

Introduction 1-3

Page 18

Conventions

italic monospace type

italic type Italic (slanted) proportional type is used in the text to introduce new

Ctrl/x This symbol indicates that you hold down the Ctrl key while you

TLA Small capital letters indicate an abbreviation (see Glossary).

ls(1) A cross-reference to a reference page includes the appropriate section

# A number sign represents the superuser prompt.

%, $ A percent sign represents the C shell system prompt. A dollar sign

Italic (slanted) monospace type denotes some meta text. This is used

most often in command or parameter descriptions to show where a

textual value is to be substituted.

terms. It is also used when referring to labels on graphical elements

such as buttons.

press another key or mouse button (shown here by x).

number in parentheses.

represents the system prompt for the Bourne, Korn, and POSIX

shells.

1-4 Introduction

Page 19

2.1 Introduction

This chapter describes the role of the Resource Management System (RMS). The RMS

provides tools for the management and use of a Compaq AlphaServer SC system. To put

into context the functions that RMS performs, a brief overview of the system architecture

is given first in Section 2.2. Section 2.3 outlines the main functions of the RMS and

introduces the major components of the RMS: a set of UNIX daemons, a suite of

command line utilities and a SQL database. Finally, Section 2.4 describes the resource

management facilities from the system administrator’s point of view.

2.2 The System Architecture

2

Overview of RMS

An RMS system looks like a standard UNIX system: it has the familiar command shells,

editors, compilers, linkers and libraries; it runs the same applications. The RMS system

differs from the conventional UNIX one in that it can run parallel applications as well as

sequential ones. The processes that execute on the system, particularly the parallel

programs, are controlled by the RMS.

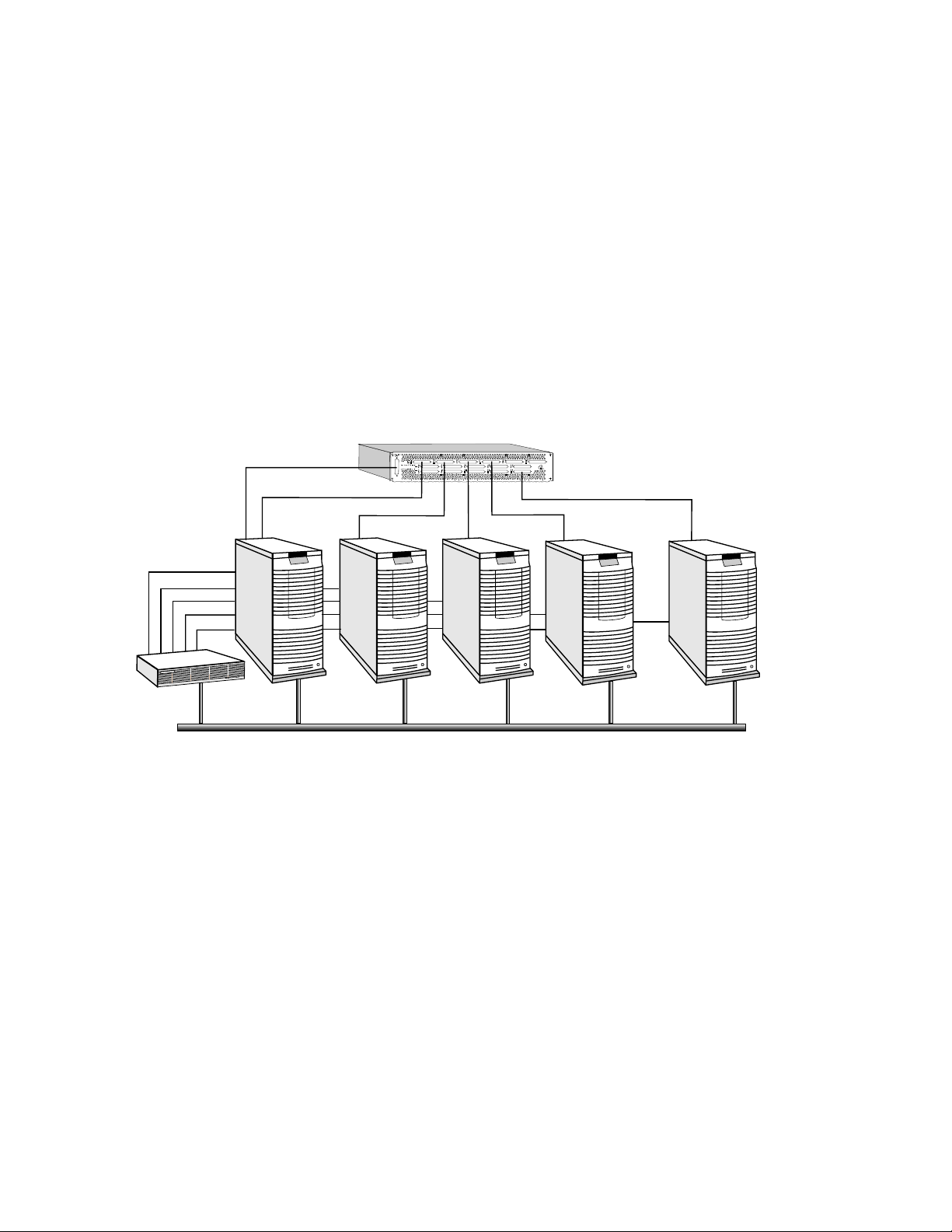

2.2.1 Nodes

An RMS system comprises a network of computers (referred to as nodes) as shown in

Figure 2.1

each node runs a single copy of UNIX. Nodes used interactively to login to the RMS

. Each node may have single or multiple processors (such as a SMP server);

Overview of RMS 2-1

Page 20

The System Architecture

system are also connected to an external LAN. The application nodes, used for running

parallel programs, are accessed through the RMS.

Figure 2.1: A Network of Nodes

SwitchNetworkControl

QM-S16

Switch

SwitchNetwork

...

Terminal

Concentrator

ManagementNetwork

InteractiveNodes

withLAN/FDDI

Interface

ApplicationNodes

All of the nodes are connected to a management network (normally, a 100 BaseT

Ethernet). They may also be connected to a Compaq AlphaServer SC Interconnect, to

provide high-performance user-space communications between application processes.

The RMS processes that manage the system reside either on an interactive node or on a

separate management server. This node, known as rmshost, holds the RMS database,

which stores all state for the RMS system.

For high-availability installations, the rmshost node should be an interactive node

rather than a management server. This will allow you to configure the system for

failover, as shown in Figure 2.2 (see Chapter 15 of the System Administration Guide for

details).

2-2 Overview of RMS

Page 21

The Role of the RMS

Figure 2.2: High Availability RMS Configuration

RMSHost BackupRMSHost

RMSDatabase

The RMS processes run on the node with the name rmshost, which migrates to the

backup on fail-over. The database is held on a shared disk, accessible to both the

primary and backup node.

2.3 The Role of the RMS

The RMS provides a single point interface to the system for resource management. This

interface enables a system administrator to manage the system resources (CPUs,

memory, disks, and so on) effectively and easily. The RMS includes facilities for the

following administrative functions:

Monitoring controlling and monitoring the nodes in the network to ensure the

correct operation of the hardware

Fault diagnosis diagnosing faults and isolating errors; instigating fault recovery

and escalation procedures

Data collection recording statistics on system performance

Allocating CPUs allocating system resources to applications

Access control controlling user access to resources

Accounting single point for collecting accounting data

Parallel jobs providing the system support required to run parallel programs

Overview of RMS 2-3

Page 22

The Role of the RMS

Scheduling deciding when and where to run parallel jobs

Audit maintaining an audit trail of system state changes

From the user’s point of view, RMS provides tools for:

Information querying the resources of the system

Execution loading and running parallel programs on a given set of resources

Monitoring monitoring the execution of parallel programs

2.3.1 The Structure of the RMS

RMS is implemented as a set of UNIX commands and daemons, programmed in C and

C++, using sockets for communications. All of the details of the system (its

configuration, its current state, usage statistics) are maintained in a SQL database, as

shown in

Chapter 10 (The RMS Database) for details of the database.

Figure 2.3. See Section 2.3.4 for an overview and

2.3.2 The RMS Daemons

A set of daemons provide the services required for managing the resources of the system.

To do this, the daemons both query and update the database (see Section 2.3.4).

• The Database Manager, msqld, provides SQL database services.

• The Machine Manager, mmanager, monitors the status of nodes in an RMS system.

• The Partition Manager, pmanager, controls the allocation of resources to users and

the scheduling of parallel programs.

• The Switch Network Manager, swmgr, supervises the operation of the Compaq

AlphaServer SC Interconnect, monitoring it for errors and collecting performance

data.

• The Event Manager, eventmgr, runs handlers in response to system incidents and

notifies clients who have registered an interest in them.

• The Transaction Log Manager, tlogmgr, instigates database transactions that have

been requested in the Transaction Log. All client transactions are made through this

mechanism. This ensures that changes to the database are serialized and an audit

trail is kept.

• The Process Manager, rmsmhd, runs on each node in the system. It starts the other

RMS daemons.

2-4 Overview of RMS

Page 23

• The RMS Daemon, rmsd, runs on each node in the system. It loads and runs user

processes and monitors resource usage and system performance.

The RMS daemons are described in more detail in Chapter 4 (RMS Daemons).

2.3.3 The RMS Commands

RMS commands call on the RMS daemons to get information about the system, to

distribute work across the system, to monitor the state of programs and, in the case of

administrators, to configure the system and back it up. A suite of these RMS client

applications is supplied. There are commands for users and commands for system

administrators.

The user commands for gaining access to the system and running parallel programs are

as follows:

• allocate reserves resources for a user.

• prun loads and runs parallel programs.

• rinfo gets information about the resources in the system.

• rmsexec performs load balancing for the efficient execution of sequential programs.

The Role of the RMS

• rmsquery queries the database. Administrators can also use rmsquery to update

the database.

The system administration commands for managing the system are as follows:

• nodestatus gets and sets node status information.

• rcontrol starts, stops and reconfigures services.

• rmsbuild populates the RMS database with information on a given system.

• rmsctl starts and stops RMS and shows the system status.

• rmshost reports the name of the node hosting the RMS database.

• rmstbladm builds and maintains the database.

• msqladmin performs database server administration.

The services available to the different types of user (application programmer, operator,

system administrator) are subject to access control. Access control restrictions are

embedded in the SQL database, based on standard UNIX group IDs (see

Overview of RMS 2-5

Page 24

RMS Management Functions

Section 10.2.20). Users have read access to all tables but no write access. Operator and

administrative applications are granted limited write access. Password-protected

administrative applications and RMS itself have full read/write access.

The RMS commands are described in more detail in Chapter 5 (RMS Commands).

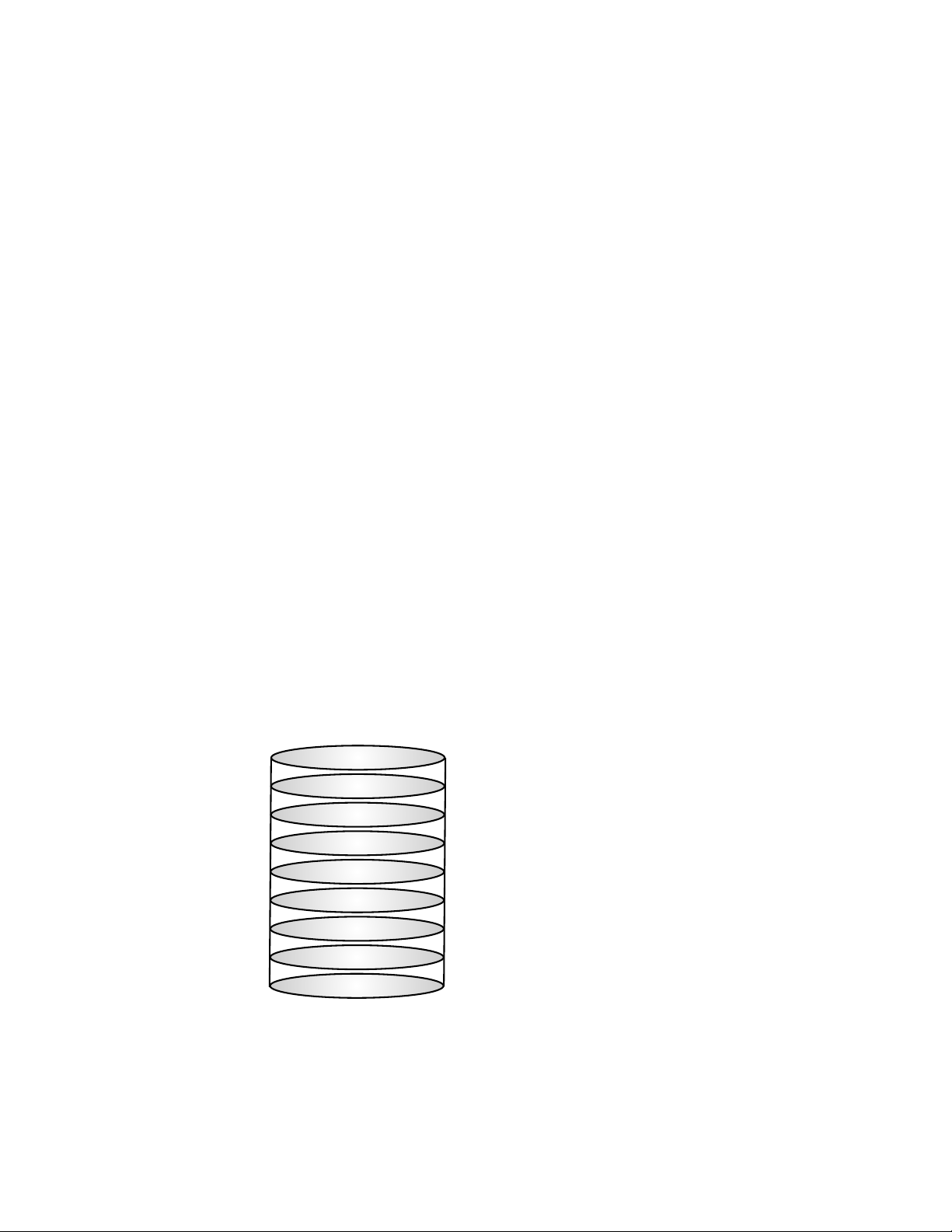

2.3.4 The RMS Database

The database provides a platform-independent interface to the RMS system. Users and

administrators can interact with the database using standard SQL queries. For example,

the following query displays details about the nodes in the machine. It selects fields

from the table called nodes (see Section 10.2.14). The query is submitted through the

RMS client rmsquery.

$ rmsquery "select name,status from nodes"

atlasms running

atlas0 running

atlas1 running

atlas2 running

atlas3 running

Figure 2.3: The Database

RMS uses the mSQL database engine from Hughes Technologies (for details see

http://www.Hughes.com.au). Client applications may use C, C++, Java, HTML or

UNIX script interfaces to generate SQL queries. See the Quadrics support page

http://www.quadrics.com/web/support for details of the SQL language.

2-6 Overview of RMS

NodeConfiguration

NetworkConfiguration

AccessControl

ResourceQuotas

Accounting

Auditing

UsageStatistics

SystemState

InternalSupport

Page 25

2.4 RMS Management Functions

The RMS gives the system administrator control over how the resources of a system are

assigned to the tasks it must perform. This includes the allocation of resources

(Section 2.4.1), scheduling policies (Section 2.4.2), access controls and accounting

(Section 2.4.3) and system configuration (Section 2.4.4).

2.4.1 Allocating Resources

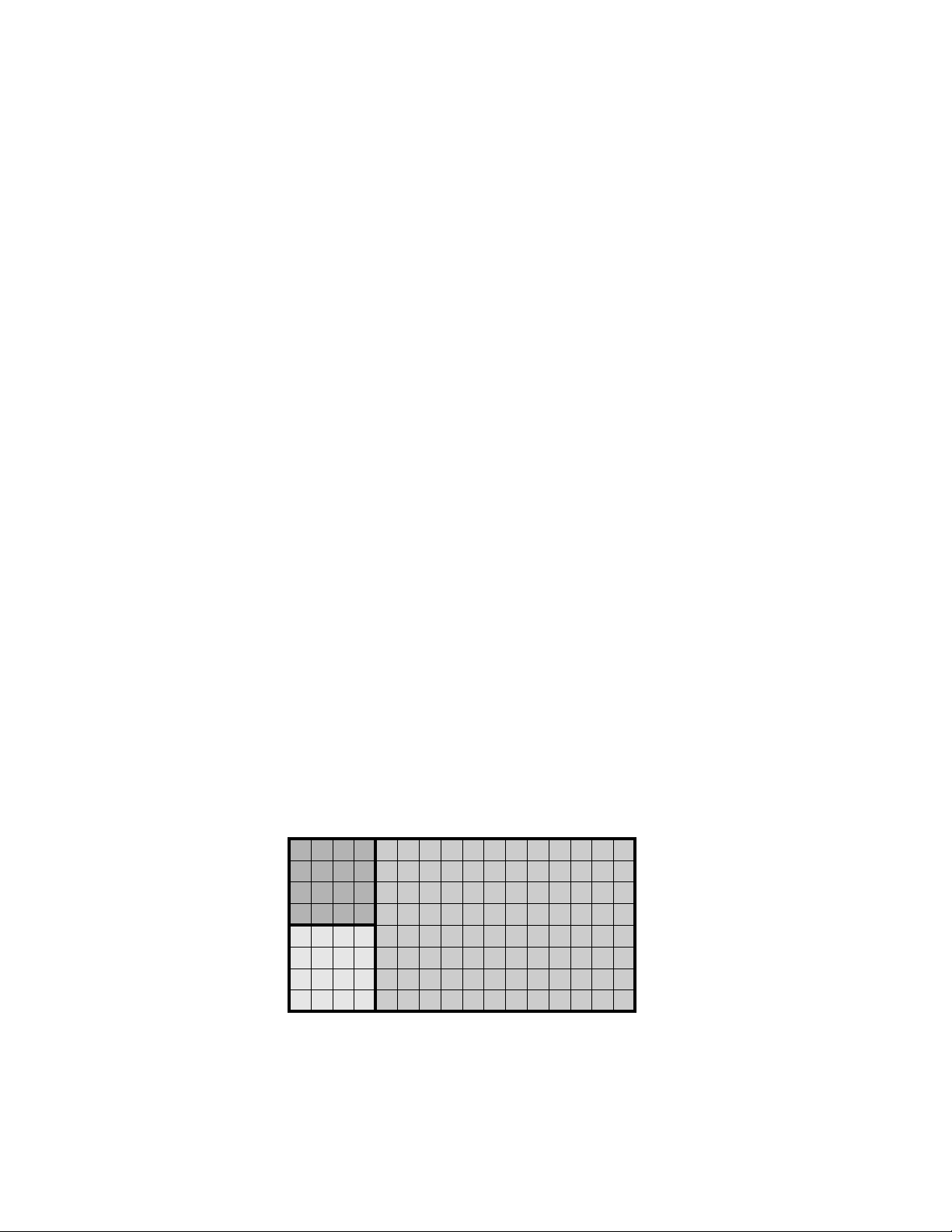

The nodes in an RMS system can be configured into mutually exclusive sets known as

partitions as shown in Figure 2.4. The administrator can create partitions with different

mixes of resources to support a range of uses. For example, a system may have to cater

for a variety of processing loads, including the following:

• Interactive login sessions for conventional UNIX processes

• Parallel program development

• Production execution of parallel programs

• Distributed system services, such as database or file system servers, used by

conventional UNIX processes

RMS Management Functions

• Sequential batch streams

Figure 2.4: Partitioning a System

Login

Parallel

Sequential

batch

The system administrator can allocate a partition with appropriate resources for each of

these tasks. Furthermore, the administrator can control who accesses the partitions (by

user or by project) and how much of the resource they can consume. This ensures that

resources intended for a particular purpose, for example, running production parallel

codes, are not diverted to other uses, for example, running user shells.

Overview of RMS 2-7

Page 26

RMS Management Functions

A further partition, the root partition, is always present. It includes all nodes. It does

not have a scheduler. The root partition can only be used by administrative users (root

and rms by default).

2.4.2 Scheduling

Partitions enable different scheduling policies to be put into action. On each partition,

one or more of three scheduling policies can be deployed to suit the intended usage:

1. Gang scheduling of parallel programs, where all processes in a program are

scheduled and de-scheduled together. This is the default scheduling policy for parallel

partitions.

2. Regular UNIX scheduling with the addition of load balancing, whereby the user can

run a sequential program on a lightly loaded node. The load may be judged in terms

of free CPU time, free memory or number of users.

3. Batch scheduling, where the use of resources is controlled by a batch system.

Scheduling parameters such as time limits, time slice interval and minimum request

size are applied on an individual partition basis. Default priorities, memory limits and

CPU usage limits can be applied to users or projects to tune the partition’s workload. For

details see Chapter 6 (Access Control, Usage Limits and Accounting) and

Chapter 7 (RMS Scheduling).

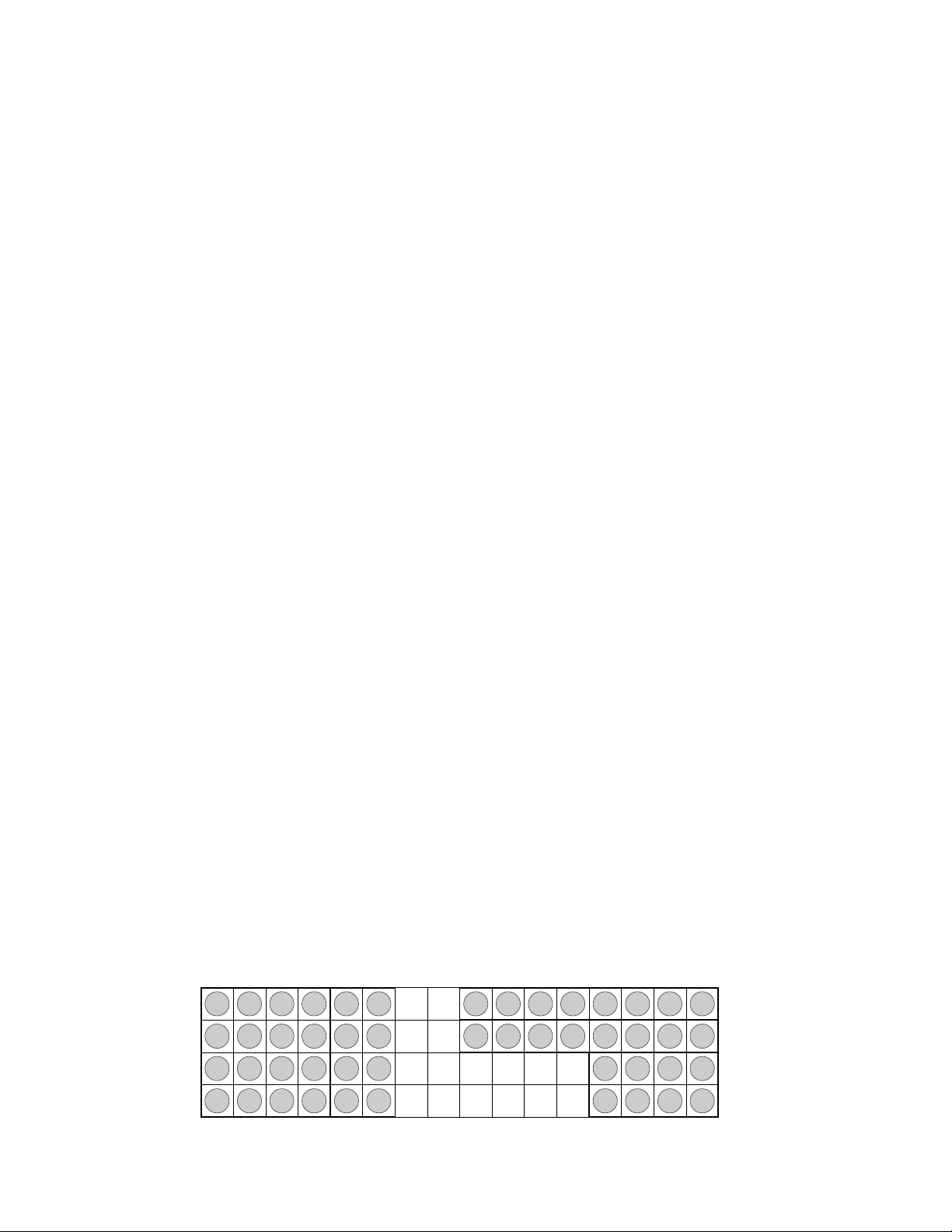

The partition shown in Figure 2.5 has its CPUs allocated to five parallel jobs. The jobs

have been allocated CPUs in two different ways: jobs 1 and 2 use all of the CPUs on each

node; jobs 3, 4 and 5 are running with only one or two CPUs per node. RMS allows the

user to specify how their job will be laid out, trading off the competing benefits of

increased locality on the one hand against increased total memory size on the other.

With this allocation of resources, all five parallel programs can run concurrently on the

partition.

Figure 2.5: Distribution of Processes

4CPUs

2-8 Overview of RMS

0

4

8

12

0

4

1

5

9

13

1

5

2

6

10

14

2

6

0011223

3

4

5

6

7

Job3

4

5

6

7

Job4

0

2

4

6

Job5

3

7

11

15

3

Job1

Job2

7

16Nodes

1

3

5

7

Page 27

RMS Management Functions

The RMS scheduler allocates contiguous ranges of nodes with a given number of CPUs

per node1. Where possible each resource request is met by allocating a single range of

nodes. If this is not possible, an unconstrained request (those that only specify the

number of CPUs required) may be satisfied by allocating CPUs on disjoint nodes. This

ensures that an unconstrained resource request can utilize all of the available CPUs.

The scheduler attempts to find free CPUs for each request. If this is not possible, the

request blocks until CPUs are available. RMS preempts programs when a higher priority

job is submitted, as shown in Figure 2.6. Initially, CPUs have been allocated for resource

requests 1 and 2. When the higher priority resource request 3 is submitted, 1 and 2 are

suspended; 3 runs to completion after which 1 and 2 are restarted.

Figure 2.6: Preemption of Low Priority Jobs

startjobs

Resource1

0 1 2

Resource2

0 2 4 6

3 4 5

suspendjobs

startjob

jobends

resumejobs

Resource1

0 1 2

3 4 5

2.4.3 Access Control and Accounting

Users are allocated resources on a per-partition basis. Resources in this context include

both CPUs and memory. The system administrator can control access to resources both

at the individual user level and at the project level (where a project is a list of users).

This means that default access controls can be set up at the project level and overridden

on an individual user basis as required. The access controls mechanism is described in

1

The scheduler allocates contiguous ranges of nodes so that processes may take advantage of the Compaq

AlphaServer SC Interconnect hardware support for broadcast and barrier operations which operate over a

contiguous range of network addresses.

1 3 5 7

Resource3

2 310 4 5 6 7

10

98

12

11

0 2 4 6

1 3 5 7

13 14

Resource2

15

Overview of RMS 2-9

Page 28

RMS Management Functions

detail in Chapter 6 (Access Control, Usage Limits and Accounting).

Each partition, except the root partition, is managed by a Partition Manager (see

Section 4.4), which mediates user requests, checking access permissions and usage

limits before scheduling CPUs and starting user jobs.

An accounting record is created as CPUs are allocated to each request. It is updated

periodically until the resources are freed. The accounting record itemizes CPU and

memory usage, indexed by job, by user and by project.

2.4.4 RMS Configuration

The set of partitions active at any time is known as a configuration. A system will

normally have a number of configurations, each appropriate to a particular operating

pattern. For example, there may be one configuration for normal working hours and

another for night time and weekend operation.

The CPUs allocated to a partition may vary between configurations. For example, a login

partition (nodes allocated for interactive use) may have more nodes allocated during

working hours than at night – it may even be absent from the night time configuration.

A pair of configurations are shown in Figure 2.7.

Figure 2.7: Two Configurations

16nodes,4CPUspernode

Day

Login Development

Night

Parallel

RMS supports automated reconfiguration at shift changes as well as dynamic

Parallel

reconfiguration in response to a request from an operator or administrator. The RMS

client rcontrol (Page 5-20) manages the switch-over from one configuration to another.

For automatic reconfiguration, rcontrol can be invoked from a cron job.

2-10 Overview of RMS

Page 29

3.1 Introduction

RMS provides users with tools for running parallel programs and monitoring their

execution, as described in Chapter 5 (RMS Commands). Users can determine what

resources are available to them and request allocation of the CPUs and memory required

to run their programs. This chapter describes the structure of parallel programs under

RMS and how they are run.

A parallel program consists of a controlling process, prun, and a number of application

processes distributed over one or more nodes. Each process may have multiple threads

running on one or more CPUs. prun can run on any node in the system but it normally

runs in a login partition or on an interactive node.

3

Parallel Programs Under RMS

In a system with SMP nodes, RMS can allocate CPUs so as to use all of the CPUs on the

minimum number of nodes (a block distribution); alternatively, it can allocate a specified

number of CPUs on each node (a cyclic distribution). This flexibility allows users to

choose between the competing benefits of increased CPU count and memory size on each

node (generally good for multithreaded applications) and increased numbers of nodes

(generally best for applications requiring increased total memory size, memory

bandwidth and I/O bandwidth).

Parallel programs can be written so that they will run with varying numbers of CPUs

and varying numbers of CPUs per node. They can, for example, query the number of

processors allocated and determine their data distributions and communications

patterns accordingly (see Appendix C (RMS Kernel Module) for details).

Parallel Programs Under RMS 3-1

Page 30

Resource Requests

3.2 Resource Requests

Having logged into the system, a user makes a request for the resources needed to run a

parallel program by using the RMS commands prun (see Page 5-11) or allocate (see

Page 5-3). When using the prun command, the request can specify details such as the

following:

• The partition on which to run the program (the -p option)

• The number of processes to run (the -n option)

• The number of nodes required (the -N option)

• The number of CPUs required per process (the -c option)

• The memory required per process (the RMS_MEMLIMITenvironment variable)

• The distribution of processes over the nodes (the -m, -B and -R options)

• How standard input, output and error streams should be handled (the -i, -o and -e

options)

• The project to which the program belongs for accounting and scheduling purposes

(the -P option)

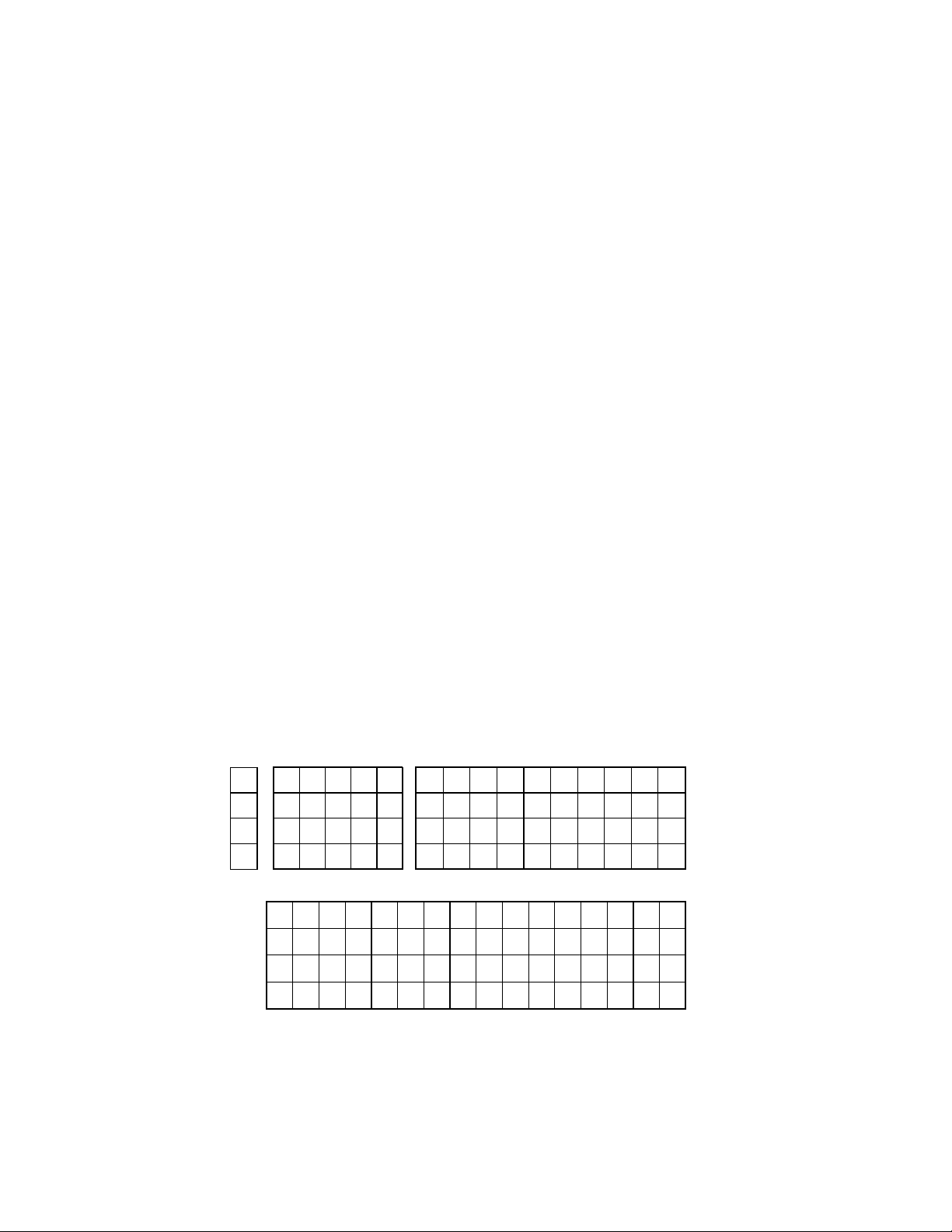

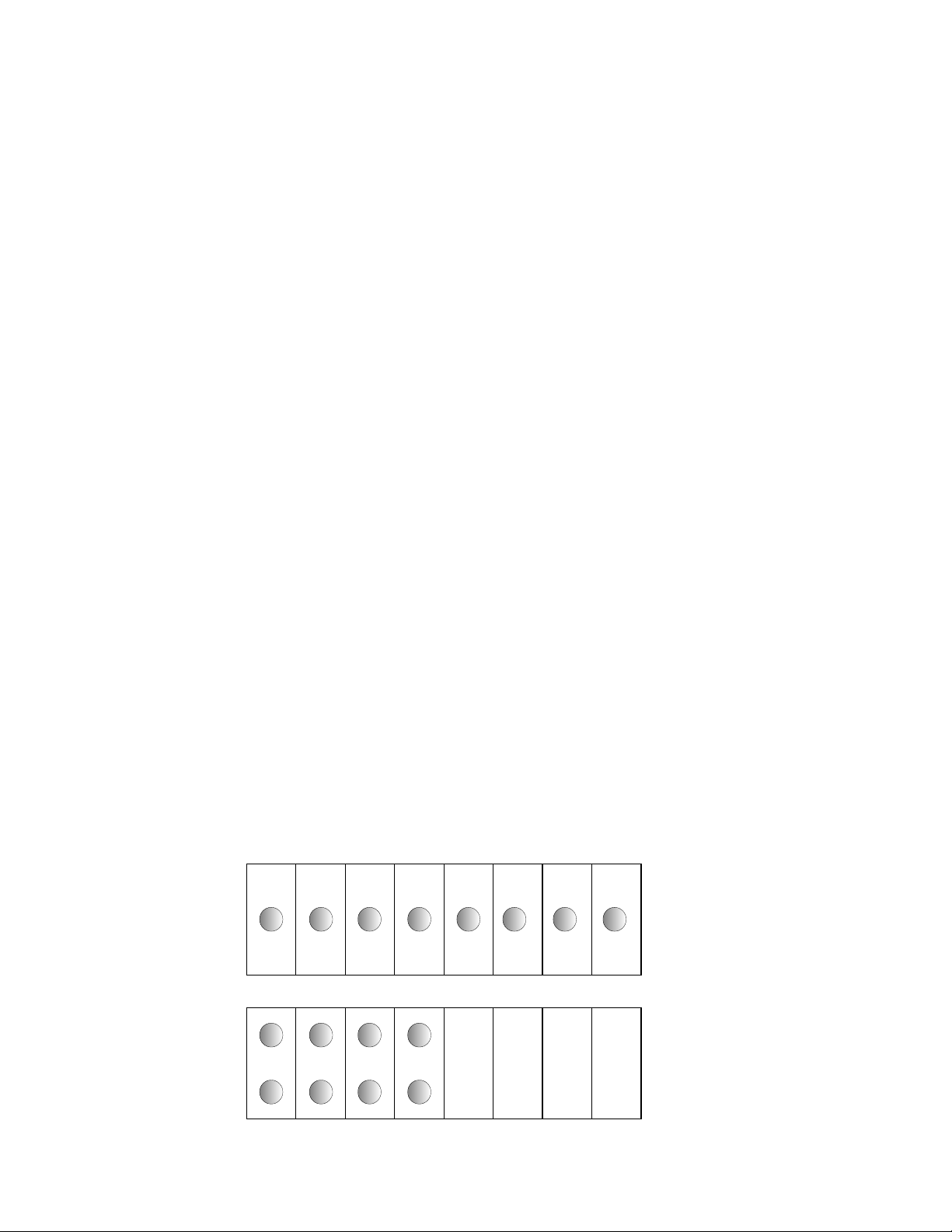

Two variants of a program with eight processes are shown in Figure 3.1: first, with one

process per node; and then, with two processes per node.

Figure 3.1: Distribution of Parallel Processes

0 1 2 3 4 5 6 7

0 1

4 5

3-2 Parallel Programs Under RMS

1ProcessPerNode

2 3

6 7

2ProcessesPerNode

Page 31

The resource request is sent to the Partition Manager, pmanager (described in

Section 4.4). The Partition Manager performs access checks (described in

Chapter 6 (Access Control, Usage Limits and Accounting)) and then allocates CPUs

according to the policies established for the partition (see Chapter 7 (RMS Scheduling)).

RMS makes a distinction between allocating resources and starting jobs on them. Before

the Partition Manager schedules a parallel program, it will ensure that the required

CPUs and memory are allocated. Note that this may cause requests to block for longer

than you might expect – especially when the job has not specified how much memory it

requires. Once CPUs have been allocated, jobs can be started on them immediately.

3.3 Loading and Running Programs

A simple parallel program is shown in Figure 3.2. It has eight application processes,

distributed over four nodes, two processes per node.

Figure 3.2: Loading and Running a Parallel Program

Loading and Running Programs

PartitionManager

prun

RMSNode

stdio

rmsd

rmsloader

0

FourNodesinaParallelPartition

4

1

5

2

6

3

7

Once the CPUs have been allocated, prun asks the pmanager to start the application

processes on the allocated CPUs. The pmanager does this by instructing the daemons

running on each of the allocated nodes to start the loader process rmsloader on the

user’s behalf.

The rmsloader process starts the application processes executing, forwarding their

stdout and stderr streams to prun (unless otherwise directed). Meanwhile, prun

supplies information on the application processes as requested by rmsloader and

forwards stdout and stderr to the controlling terminal or output files.

prun forwards stdin and certain signals (QUIT, USR1, USR2, WINCH) to the application

processes. If prun is killed, RMS cleans up the parallel program, killing the application

Parallel Programs Under RMS 3-3

Page 32

Loading and Running Programs

processes, removing any core files if requested (see Page 5-11) and then deallocating the

CPUs.

The application processes are run from the user’s current working directory with the

current limits and group rights. The data and stack size limits may be reduced if RMS

has applied a memory limit to the program.

During execution, the processes may be suspended at any time by the scheduler to allow

a program with higher priority to run. All of the processes in a parallel program are

suspended together under the gang-scheduling policy used by RMS for parallel programs

(see Chapter 7 (RMS Scheduling) for details). They are restarted together when the

higher priority program has completed.

A parallel program exits when all of its processes have exited. When this happens, the

rmsloader processes reduce the exit status back to the controlling process by

performing a global OR of the exit status of each of the processes. If prun is run with

verbose reporting enabled, a non-zero exit status is accompanied by a message, as shown

in the following example:

$ prun -v myprog

...

myprog: process 0 exited with status 1

If the level of reporting is increased with the -vv option, prun provides a commentary

on the resource request. With the -vvv option, rmsloader also outputs information

identifying the activity on each node running the program, as shown in the following

example.

$ prun -vvv myprog

prun: running /home/duncan/myprog

prun: requesting 2 CPUs

prun: starting 2 processes on 2 cpus default memlimit no timelimit

prun: stdio server running

prun: loader 1 starting on atlas1 (10.128.0.7)

prun: loader 0 starting on atlas0 (10.128.0.8)

loader[atlas1]: program description complete

loader[atlas1]: nodes 2 contexts 1 capability type 0xffff8002 entries 2

loader[atlas1]: run process 1 node=5 cntx=244

prun: process 1 is pid 1265674 on atlas1

loader[atlas0]: program description complete

loader[atlas0]: nodes 2 contexts 1 capability type 0xffff8002 entries 2

loader[atlas0]: run process 0 node=4 cntx=244

prun: process 0 is pid 525636 on atlas0

...

When the program has exited, the CPUs are deallocated and the scheduler is called to

service the queue of waiting jobs.

3-4 Parallel Programs Under RMS

Page 33

Loading and Running Programs

Sometimes, it is desirable for a user to be granted more control over the use of a

resource. For instance, the user may want to run several jobs concurrently or use the

same nodes for a sequence of jobs. This functionality is supported by the command

allocate (see Page 5-3) which allows a user to allocate CPUs in a parallel partition to a

UNIX shell. These CPUs are used for subsequent parallel jobs started from this shell.

The CPUs remain allocated until the shell exits or a time limit expires (see Section 7.3

and Section 7.4.5).

Parallel Programs Under RMS 3-5

Page 34

Page 35

4.1 Introduction

This chapter describes the role of the RMS daemons. There are daemons that run on the

rmshost node providing services for the system as a whole:

msqld Manages the database (see Section 4.2).

mmanager Monitors the health of the machine as a whole (see Section 4.3).

pmanager Controls the use of resources (see Section 4.4).

swmgr Monitors the health of the Compaq AlphaServer SC Interconnect (see

4

RMS Daemons

Section 4.5).

tlogmgr Carries out transactions on behalf of RMS servers (see Section 4.6).

eventmgr Provides a system-wide event-handling service (see Section 4.7).

There are daemons that run on each node, providing support for RMS functionality on

that node:

rmsmhd Acts as the Process Manager, starting all of the other RMS daemons

(see Section 4.8).

rmsd Carries out instructions from pmanager to run users’ programs (see

Section 4.9).

RMS Daemons 4-1

Page 36

The Machine Manager

4.1.1 Startup

RMS is started as each node executes the initialization script /sbin/init.d/rms with

the start argument on startup. This starts the rmsmhd daemon which, in turn, starts

the other daemons on that node.

The daemons can also be started, stopped and reloaded individually by rcontrol once

RMS is running. See Page 5-20 for details.

4.1.2 Log Files

Output from the management daemons is logged to the directory /var/rms/adm/log.

The log files are called daemon.log, where daemon gives the name of the RMS daemon,

such as swmgr. The Partition Managers are distinguished by suffixing pmanager with a

hyphen and then the name of the partition. For example, the Partition Manager for the

partition par1 is known is pmanager-par1.

Errors are logged to /var/rms/adm/log/error.log.

Output from rmsmhd and rmsd is logged to /tmp/rms.log on each node.

4.1.3 Daemon Status

The servers table contains information on the status of each daemon: the time it was

started, its process ID and the name of its host node (see Section 10.2.19 for details of

the table structure).

Note that the status field in the servers table is set to error if an error occurs when

starting an RMS daemon. The corresponding entry in the events table describes what

went wrong (see Chapter 8 (Event Handling) for details).

The command rinfo can be used to get reports on the status of each daemon. See

Page 5-32 for details.

4.2 The Database Manager

The Database Manager, msqld, manages the RMS database, providing an SQL interface

for its clients. Client applications may use C, C++, Java or UNIX scripts to generate SQL

queries for msqld.

The database holds all state information for RMS. This information is initially created

by the RMS client application rmsbuild (see Page 5-35). The information is updated by

the other RMS daemons as RMS operates. The information can be backed up, restored

and generally maintained using the database administration program, rmstbladm (see

Page 5-44).

4-2 RMS Daemons

Page 37

4.3 The Machine Manager

The Machine Manager, mmanager, is responsible for detecting and reporting changes in

the state of each node in the system. It records the current state of each node and any

changes in state in the database.

When a node is functioning correctly, rmsd, a daemon which runs on each node,

periodically updates the database. However, if the node crashes, or IP traffic to and from

the node stops, then these updates stop. RMS uses the external monitor, mmanager, to

check periodically the service level of each node. It monitors whether IP is functioning

and whether the RMS daemons on each node are operating.

4.3.1 Interaction with the Database

The Machine Manager records the current status of nodes in the nodes table (see

Section 10.2.14) while changes to node status are entered in the events table (see

Section 10.2.6).

The interval at which the Machine Manager performs status checks is set in the

attributes table (see Section 10.2.3) with the node-status-poll-interval

attribute. If this attribute is not present, the general attribute rms-poll-interval is

used instead.

The Partition Manager

4.4 The Partition Manager

The nodes in the RMS machine are configured into mutually exclusive sets known as

partitions (see Section 2.4). By restricting access to partitions, the system administrator

can reserve particular partitions for specific types of tasks or users. In this way, the

system administrator can ensure that resources are used most effectively; for example,

that resources intended for running parallel programs are not consumed running user

shells. The access restrictions are set up in the access_controls table (see

Section 10.2.1) of the RMS database.

Each partition is controlled by a Partition Manager, pmanager. The Partition Manager

mediates each user’s requests for resources (CPUs and memory) to run jobs in the

partition. It checks the user’s access permissions and resource limits before adding the

request to its scheduling queue. The request blocks until the resources are allocated for

the job.

When the resources requested by the user become available, the Partition Manager

instructs rmsd, a daemon that runs on each node in the partition (see Section 4.9), to

create a communications context for the user’s job. Finally, the Partition Manager

replies to the user’s request and the user’s job starts.

RMS Daemons 4-3

Page 38

The Partition Manager

The Partition Manager makes new scheduling decisions periodically and in response to

incoming resource requests (see Chapter 7 (RMS Scheduling) for details). These

decisions may result in jobs being suspended or resumed. Such scheduling operations,

together with those performed as jobs are killed, are performed by the Partition

Manager sending scheduling or signal delivery requests to the rmsds.

The Partition Manager is connected to its rmsds by a tree of sockets. Commands are

routed down this tree; they complete when an acknowledgement is returned. For

example, jobs are only marked as finished when the Partition Manager has confirmed

that all of their processes have exited.

If the tree of sockets is broken by a node crash, the Partition Manager marks the node’s

partition as blocked and generates an event. The node can then be rebooted or

configured out of the machine. If the node is rebooted, the rmsds reconnect and the

Partition Manager continues as before. If the node cannot be rebooted then the partition

must be halted, the node configured out and the partition restarted. Jobs that spanned

the failing node are cleaned up at this point. The other jobs run on unless explicitly

killed. Scheduling and signal delivery operations are suspended while the partition is

blocked.

4.4.1 Partition Startup

The Partition Manager is started by the rmsmhd daemon, running on the rmshost node,

on instruction from rcontrol (see Page 5-20). Once the partition is running, a startup

script /opt/rms/etc/pstartup is executed. This script performs site-specific and

OS-specific actions depending upon the partition type.

4.4.2 Interaction with the Database

The Partition Manager makes updates to the partitions table (see Section 10.2.16)

when it starts and as CPUs are allocated and freed.

The Partition Manager creates an entry in the resources table (see Section 10.2.18)

each time a user makes a request for resources to run a job. This entry is updated each

time CPUs are allocated or deallocated. The Partition Manager adds an entry to the

jobs table (see Section 10.2.10) as each job starts, updating it if the job is suspended or

resumed and when the job completes.

The Partition Manager creates an entry in the accounting statistics (acctstats) table

(see Section 10.2.2) when CPUs are allocated. The entry is updated periodically until the

request completes.

The Partition Manager consults the users table (see Section 10.2.24), the projects

table (see Section 10.2.17) and the access_controls table (see Section 10.2.1) to verify

users’ access permissions and usage limits.

4-4 RMS Daemons

Page 39

Configuration information about each partition is held in the partitions table (see

Section 10.2.16). The information is indexed by the name of the partition together with

the name of the active configuration.

4.5 The Switch Network Manager

The Switch Network Manager, swmgr, controls and monitors the Compaq AlphaServer

SC Interconnect (see Appendix A (Compaq AlphaServer SC Interconnect Terms)). It does

this using the switch network control interface connected to the parallel port of the

primary management node. If swmgr detects an error in the switch network, it updates

the status of the switch concerned and generates an event.

swmgr collects fan, power supply and temperature data from the Compaq AlphaServer

SC Interconnect modules, updating status information and generating events if

components fail or temperatures exceed their operating limits. See Section 9.5.4 for

site-specific details of configuring the swmgr.

4.5.1 Interaction with the Database

The Switch Network Manager creates and maintains the entries in the elites table

(see Section 10.2.5) and the switch_boards table (see Section 10.2.22). It maintains

entries in the elans table (see Section 10.2.4). In the event of errors, it creates entries

in the link_errors table (see Section 10.2.11).

The Transaction Log Manager

4.6 The Transaction Log Manager

The Transaction Log Manager, tlogmgr, executes change of state requests that have

been entered in the transactions table (see Section 10.2.23) by RMS administrative

clients. This mechanism is employed to serialize changes to the database and to provide

an audit trail of such changes.

The entry in the transactions table records who requested the change, and names the

service required together with any arguments to pass to the process on startup. A

transaction handle (a unique ID) is generated for the entry and passed to both the client

and the RMS daemon that provides the service.

The RMS daemon uses the transaction handle to label any results it produces, such as an

entry in the transaction_outputs table (see Section 10.1.3). The client uses the

handle to select the result from the relevant table. Output from the service is appended

to an output log. The name of this log is entered in the transactions table together

with the status of the transaction.

The services that are available are listed in the services table (see Section 10.2.20).

RMS Daemons 4-5

Page 40

The Process Manager

Each entry in the services table specifies which command to run, who can run it and

on which host.

4.6.1 Interaction with the Database

The Transaction Log Manager maintains the transactions table (see Section 10.2.23).

It consults the services table (see Section 10.2.20) in order to execute transactions on

behalf of its clients.

4.7 The Event Manager

When an RMS daemon detects an anomaly (such as a node crash or a high temperature

reading), it writes an event description to the events table (see Section 10.2.6). It is the

job of the Event Manager, eventmgr, to execute recovery scripts that either correct the

fault or report it to the operators if manual intervention is required.

On receiving an event notification, the Event Manager looks for a matching entry in the

event_handlers table (see Section 10.2.7), executing the handler script if it finds a

match (see Section 8.2 for details). If no match is found, it runs the default event

handler script; this script is site-specific, but it would typically run a command to

escalate the event through SNMP or email.

The Event Manager also implements the event-waiting mechanism that enables client

applications both to generate and to wait efficiently on a specified event. Typical events

include the following:

• Nodes changing state

• Partitions starting

• Transaction log entries being executed

The details that describe the event are held in the events table (see Section 10.2.6).

The Event Manager’s job is to notify interested clients that the event has occurred. This

frees the clients from having to poll for the information. For more information on RMS

event handling, see Chapter 8 (Event Handling).

4.7.1 Interaction with the Database

The Event Manager consults the events table (see Section 10.2.6) and the

event_handlers table (see Section 10.2.7).

4-6 RMS Daemons

Page 41

4.8 The Process Manager

The Process Manager, rmsmhd, is responsible for starting and stopping the other RMS

daemons. It runs on each node and is responsible for managing the other daemons that

run on its node. It starts them as the node boots, stops them as the node halts and starts

or stops them in response to requests from the RMS client application rcontrol (see

Page 5-20).

4.8.1 Interaction with the Database

RMS stores information regarding which daemons run on which nodes; this information

is stored centrally in the RMS database, rather than in node-specific configuration files.

On startup, the Process Manager checks the servers table (see Section 10.2.19) for

entries matching its node. This information is used to start the other daemons. If its

child processes (the other daemons) are killed, it checks the table to see whether they

should be restarted. The Process Manager creates its own entry in the servers table.

4.9 The RMS Daemon

The RMS daemon rmsd runs on each node in the machine. Its purpose is as follows:

The RMS Daemon

• To start application processes

• To implement scheduling decisions made by the Partition Manager

• To clean up after parallel programs when they have finished

• To execute RMS remote procedure calls on behalf of clients elsewhere in the network

• To collect accounting data and performance statistics

rmsd carries out the following tasks on behalf of the Partition Manager to run a user’s

parallel program:

• Creating and destroying communication contexts (see Section C.2)

• Starting the application loader, rmsloader.

• Delivering signals

• Suspending and resuming processes

• Collecting accounting data from the kernel

RMS Daemons 4-7

Page 42

The RMS Daemon

The rmsds communicate with each other and with the Partition Manager that controls

their node over a balanced tree of sockets. Requests (for example, to deliver a signal to

all processes in a parallel program) are passed down this tree to the appropriate range of

nodes. The results of each request are combined as they pass back up the tree.

rmsd is started by the RMS daemon rmsmhd and restarted when it exits – this happens

when a partition is shut down.

4.9.1 Interaction with the Database

rmsd records configuration information about each node (number of CPUs, amount of

memory and so on) in the nodes table (see

records usage statistics in the node statistics (node_stats) table (see Section 10.2.15).

The interval at which these statistics are sampled is set in the attributes table with

the cpu-stats-poll-interval attribute.

rmsd records details of the node’s Compaq AlphaServer SC Interconnect configuration in

the elans table as it starts (see Section 10.2.4 and

Appendix A (Compaq AlphaServer SC Interconnect Terms)).

Section 10.2.14) as it starts. It periodically

4-8 RMS Daemons

Page 43

5.1 Introduction

This chapter describes the RMS commands. RMS includes utilities that enable system

administrators to configure and manage the system, in addition to those that enable

users to run their programs.

RMS includes the following commands intended for use by system administrators:

rcontrol The rcontrol command is used to control the system resources.

rmsbuild The rmsbuild command creates and populates an RMS database for

5

RMS Commands

a given machine.

rmsctl The rmsctl script is used to stop and start the RMS system and to

report its status.

rmsquery The rmsquery command is used to select data from the database and,

in the case of system administrators, to update it.

rmstbladm The table administration rmstbladm program is used to create a

database, to back it up and to restore it.

The following utilities are used internally by RMS and may also be used by system

administrators:

nodestatus The nodestatus command is used to get or set the status or run

level of a node.

RMS Commands 5-1

Page 44

Introduction

rmshost The rmshost command reports the name of the node running the

msqladmin The msqladmin command is used for creating and deleting databases

RMS includes the following commands for all users of the system:

allocate The allocate command is used to reserve access to a set of CPUs

prun The prun command is used to run a parallel program or to run

rinfo The rinfo command is used to determine what resources are

rmsexec The rmsexec command is used to run a sequential program on a

The following sections describe the commands in more detail, listing them in

alphabetical order.

RMS management daemons.

and stopping the mSQL server.

either for running multiple tasks in parallel or for running a sequence

of commands on the same CPUs.

multiple copies of a sequential program.

available and which jobs are running.

lightly loaded node.

5-2 RMS Commands

Page 45

NAME

allocate – Reserves access to CPUs

SYNOPSIS

allocate [-hIv] [-B base] [-C CPUs] [-N nodes | all] [-n CPUs]

OPTIONS

-B base Specifies the number of the base node (the first node to use) in the

-C CPUs Specifies the number of CPUs required per node (default 1).

allocate(1)

[-p partition] [-P project] [-R request]

[script [args ...]]

partition. Numbering within the partition starts at 0. By default, the

base node is unassigned, leaving the scheduler free to select nodes

that are not in use.

-h Display the list of options.

-I Allocate CPUs immediately or fail. By default, allocate blocks until

resources become available.

-N nodes | all

Specifies the number of nodes to allocate (default 1). To allocate one

CPU on each node in the partition, use the argument all as follows:

allocate -N all. Either the -C option or the -n option can be

combined with -N but not both.

-n CPUs Specifies the total number of CPUs required.

-P project Specifies the name of the project with which the job should be

associated for scheduling and accounting purposes.

-p partition Specifies the target partition from which the resources are to be

allocated.

-R request Requests a particular configuration of resources. The types of

request currently supported are as follows:

RMS Commands 5-3

Page 46

allocate(1)

-v Specifies verbose operation.

DESCRIPTION

The allocate program allocates resources for subsequent use by the prun(1)

command. allocate is intended for use where a user wants to run a sequence of

commands or several programs concurrently on the same set of CPUs.

immediate=0 | 1

With a value of 1, this specifies that the request

should fail if it cannot be met immediately (this is

the same as the -I option).

hwbcast=0 | 1 With a value of 1, this specifies a contiguous range

of nodes and constrains the scheduler to queue the

request until a contiguous range becomes available.

rails=n In a multirail system, this specifies the number of

rails required, where 1 ≤ n ≤ 32.

Multiple requests can be entered as a comma-separated list, for

example, -R hwbcast=1,immediate=1.

The -p, -N, -C, -B and -n options control which CPUs are allocated. The -N option

specifies how many nodes are to be allocated. When this option is specified the user is

allocated a constant number of CPUs per node (default 1). The -C option specifies the

number of CPUs required per node. The alternative -n option specifies the total number

of CPUs to allocate. This option does not force the allocation of a constant number of

CPUs per node.

The -B option specifies the base of a contiguous range of nodes relative to the start of the

partition. The -N option specifies its extent. So for example -B0-N4 specifies the first

four nodes in the partition. Note that nodes that have been configured out are excluded.

The -B option should be used to gain access to a specific file system or device that is not

available on all nodes. If the -B option is used, the scheduler allocates a contiguous

range of nodes and the same number of CPUs on each node. Using this option causes a

request to block until the base node and any additional nodes required to run the

program are free.

The -p option specifies the partition from which CPUs can be allocated. CPUs cannot be

allocated from the root partition.

The Partition Manager, pmanager, allocates processing resources to users as and when

the resources are requested and become available. (See Section 4.4). By default, a

contiguous range of nodes is allocated to the request where possible. This enables

programs to take advantage of the system’s hardware broadcast facilities. The -R option

5-4 RMS Commands

Page 47

allocate(1)

can be used with hwbcast set to 1 to ensure that the range of nodes allocated is

contiguous.

Before allocating resources, the Partition Manager checks the resource limits imposed

on the current project. The project can be specified explicitly with the -P option. This

overrides the value of the environment variable RMS_PROJECT or any default setting in

the users table. (See Section 10.2.24).

The script argument (with optional arguments) can be used in two different ways, as

follows:

1. script is not specified, in which case an interactive command shell is spawned with

the resources allocated to it. The user can confirm that resources have been allocated

to an interactive shell by using the rinfo command. (See Page 5-32).

The resources are reserved until the shell exits or until a time limit defined by the

system administrator expires, whichever happens first. (See Section 10.2.16).

Parallel programs, executed from this interactive shell, all run on the shell’s

resources (concurrently, if sufficient resources are available).

2. script specifies a shell script, in which case the resources are allocated to the named

subshell and freed when execution of the script completes.

ENVIRONMENT VARIABLES

The following environment variables may be used to identify resource requirements and

modes of operation to allocate. They are used where no equivalent command line

options are given.

RMS_IMMEDIATE Controls whether to exit (value 1) rather than block (value 0) if

resources are not immediately available. The -I option overrides the

value of this environment variable. By default, allocate blocks until

resources become available. Root resource requests are always met.

RMS_MEMLIMIT Specifies the maximum amount of memory required. This must be

less than or equal to the limit set by the system administrator.

RMS_PARTITION Specifies the name of a partition. The -p option overrides the value of

this environment variable.

RMS_PROJECT Specifies the name of the project with which the request should be

associated for accounting purposes. The -P option overrides the value

of this environment variable.

RMS Commands 5-5

Page 48

allocate(1)

RMS_TIMELIMIT Specifies the execution time limit in seconds. The program will be

RMS_DEBUG Specifies whether to execute in verbose mode and display diagnostic

allocate passes all existing environment variables through to the shell that it

executes. In addition, it sets the following environment variable: