Compaq ProLiant 1600, ProLiant 2500, ProLiant 3000, ProLiant 4500, ProLiant 5000 Administrator's Manual


Compaq ProLiant Clusters

HA/F100 and HA/F200

Administrator Guide

Second Edition (September 1999)

Part Number 380362-002

Compaq Computer Corporation

Writer: Linda Arnold Project: Compaq ProLiant Clusters HA/F100 and HA/F200 Administrator Guide Comments:

Part Number: 380362-002 File Name: a-frnt.doc Last Saved On: 8/11/99 3:55 PM

Compaq Confidential – Need to Know Required


Notice

The information in this publication is subject to change without notice.

COMPAQ COMPUTER CORPORATION SHALL NOT BE LIABLE FOR TECHNICAL OR EDITORIAL ERRORS OR OMISSIONS CONTAINED HEREIN, NOR FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES RESULTING FROM THE FURNISHING, PERFORMANCE, OR USE OF THIS MATERIAL. THIS INFORMATION IS PROVIDED “AS IS” AND COMPAQ COMPUTER CORPORATION DISCLAIMS ANY WARRANTIES, EXPRESS, IMPLIED OR STATUTORY AND EXPRESSLY DISCLAIMS THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR PARTICULAR PURPOSE, GOOD TITLE AND AGAINST INFRINGEMENT.

This publication contains information protected by copyright. No part of this publication may be photocopied or reproduced in any form without prior written consent from Compaq Computer Corporation.

© 1999 Compaq Computer Corporation.

All rights reserved. Printed in the U.S.A.

The software described in this guide is furnished under a license agreement or nondisclosure agreement. The software may be used or copied only in accordance with the terms of the agreement.

Compaq, Deskpro, Fastart, Compaq Insight Manager, Systempro, Systempro/LT, ProLiant, ROMPaq, QVision, SmartStart, NetFlex, QuickFind, PaqFax, and ProSignia are registered with the United States Patent and Trademark Office.

Netelligent, Systempro/XL, SoftPaq, QuickBlank, and QuickLock are trademarks and/or service marks of Compaq Computer Corporation.

Microsoft, MS-DOS, Windows, and Windows NT are registered trademarks of Microsoft Corporation.

Pentium is a registered trademark and Xeon is a trademark of Intel Corporation.

Other product names mentioned herein may be trademarks and/or registered trademarks of their respective companies.


Contents

About This Guide

Audience

Scope

Text Conventions

Symbols in Text

Getting Help

  Compaq Technical Support

  Compaq Website

  Compaq Authorized Reseller

Chapter 1

Architecture of the Compaq ProLiant Clusters HA/F100 and HA/F200

Overview of Compaq ProLiant Clusters HA/F100 and HA/F200 Components

Compaq ProLiant Cluster HA/F100

Compaq ProLiant Cluster HA/F200

Compaq ProLiant Servers

Compaq StorageWorks RAID Array 4000 Storage System

  Compaq StorageWorks RAID Array 4000

  Compaq StorageWorks Fibre Channel Storage Hubs

  Compaq StorageWorks RA4000 Controller

  Compaq StorageWorks Fibre Channel Host Adapter

  Gigabit Interface Converter-Shortwave

  Cables

Cluster Interconnect

  Client Network

  Private or Public Interconnect

  Interconnect Adapters

  Redundant Interconnects

Microsoft Software

Compaq Software

  Compaq SmartStart and Support Software CD

  Compaq Redundancy Manager (Fibre Channel)

  Compaq Cluster Verification Utility

  Compaq Insight Manager

  Compaq Insight Manager XE

  Compaq Intelligent Cluster Administrator

  Resources for Application Installation

Chapter 2

Designing the Compaq ProLiant Clusters HA/F100 and HA/F200

Planning Considerations

  Cluster Configurations

  Cluster Groups

  Reducing Single Points of Failure in the HA/F100 Configuration

  Enhanced High Availability Features of the HA/F200

Capacity Planning

  Server Capacity

  Shared Storage Capacity

  Load Balancing

  Networking Capacity

Network Considerations

  Network Configuration

  Migrating Network Clients

Failover/Failback Planning

  Performance After Failover

  MSCS Thresholds and Periods

  Failover of Directly Connected Devices

  Manual vs. Automatic Failback

  Failover and Failback Policies

Chapter 3

Setting Up the Compaq ProLiant Clusters HA/F100 and HA/F200

Preinstallation Overview

Preinstallation Guidelines

Installing the Hardware

  Setting Up the Nodes

  Setting Up the Compaq StorageWorks RAID Array 4000 Storage System

  Setting Up a Private Interconnect

  Setting Up a Public Interconnect

  Redundant Interconnect

Installing the Software

  Assisted Integration Using SmartStart (Recommended)

  Manual Installation Using SmartStart

Compaq Intelligent Cluster Administrator

  Installing Compaq Intelligent Cluster Administrator

Additional Cluster Verification Steps

  Verifying the Creation of the Cluster

  Verifying Node Failover

  Verifying Network Client Failover

Chapter 4

Upgrading the HA/F100 to an HA/F200

Preinstallation Overview

Materials Required

Upgrade Procedures

Chapter 5

Managing the Compaq ProLiant Clusters HA/F100 and HA/F200

Managing a Cluster Without Interrupting Cluster Services

Managing a Cluster in a Degraded Condition

Managing Hardware Components of Individual Cluster Nodes

Managing Network Clients Connected to a Cluster

Managing a Cluster’s Shared Storage

Remotely Managing a Cluster

Viewing Cluster Events

Modifying Physical Cluster Resources

  Removing Shared Storage System

  Adding Shared Storage System

  Adding or Removing Shared Storage Drives

  Physically Replacing a Cluster Node

Backing Up Your Cluster

Managing Cluster Performance

Compaq Redundancy Manager

  Changing Paths

  Other Functions

Compaq Insight Manager

  Cluster-Specific Features of Compaq Insight Manager

Compaq Insight Manager XE

  Cluster Monitor

Compaq Intelligent Cluster Administrator

  Monitoring and Managing an Active Cluster

  Managing Cluster History

  Importing and Exporting Cluster Configurations

Microsoft Cluster Administrator

Chapter 6

Troubleshooting the Compaq ProLiant Clusters HA/F100 and HA/F200

Installation

Troubleshooting Node-to-Node Problems

Shared Storage

Client-to-Cluster Connectivity

Cluster Groups and Cluster Resource

Troubleshooting Compaq Redundancy Manager

  Event Logging

  Informational Messages

  Warning Message

  Error Messages

  Other Potential Problems

Appendix A

Cluster Configuration Worksheets

Overview

Cluster Group Definition Worksheet

Shared Storage Capacity Worksheet

Group Failover/Failback Policy Worksheet

Preinstallation Worksheet

Appendix B

Using Compaq Redundancy Manager in a Single-Server Environment

Overview

Installing Redundancy Manager

  Automatically Installing Redundancy Manager

  Manually Installing Redundancy Manager

Managing Redundancy Manager

  Changing Paths

  Expanding Capacity

  Other Functions

Troubleshooting Redundancy Manager

Overview

Informational Messages

Warning Message

Error Messages

Troubleshooting Redundancy Manager

  Troubleshooting Potential Problems

Appendix C

Software and Firmware Versions

Glossary

Index


About This Guide

This guide is designed to be used as step-by-step instructions for installation and as a reference for operation, troubleshooting, and future upgrades of the cluster server.

This guide provides information about the installation, configuration, and implementation of the Compaq ProLiant Cluster Models HA/F100 and HA/F200.

Audience

The primary audience of this guide consists of MIS professionals whose jobs include designing, installing, configuring, and maintaining Compaq ProLiant clusters.

This guide contains information that may be used by network administrators, installation technicians, systems integrators, and other technical personnel in the enterprise environment for the purpose of cluster installation, implementation, and maintenance.

IMPORTANT: This guide contains installation, configuration, and maintenance information that can be valuable for a variety of users. If you are installing the Compaq ProLiant Cluster HA/F100 or HA/F200 but will not be administering the cluster on a daily basis, please make this guide available for the person who will be responsible for the clustered servers when you have completed the installation.


Scope

Some clustering topics are mentioned, but not detailed, in this guide. Be sure to obtain other Compaq documents that offer additional guidance. This guide does not describe how to install and configure specific applications on a cluster. However, several Compaq TechNotes provide this information for industry-leading applications.

This guide is designed to assist you in attaining the following objectives:

■ Planning and designing the Compaq ProLiant Cluster HA/F100 or HA/F200 configuration to meet your business needs

■ Installing and configuring the Compaq ProLiant Cluster HA/F100 or HA/F200 hardware and software

■ Using Compaq Insight Manager and Compaq Insight Manager XE, Compaq Intelligent Cluster Administrator, and Compaq Redundancy Manager to manage your Compaq ProLiant Cluster HA/F100 or HA/F200

The contents of this guide are outlined below:

■ Chapter 1, “Architecture of the Compaq ProLiant Clusters HA/F100 and HA/F200,” describes the hardware and software components of the Compaq ProLiant Cluster HA/F100 and HA/F200.

■ Chapter 2, “Designing the Compaq ProLiant Clusters HA/F100 and HA/F200,” outlines a step-by-step approach to planning and designing a cluster configuration that meets your business needs. Included are several cluster planning worksheets that help in documenting information necessary to configure your clustering solution.

■ Chapter 3, “Setting Up the Compaq ProLiant Clusters HA/F100 and HA/F200,” outlines the steps you will take to install and configure the Compaq ProLiant Clusters HA/F100 and HA/F200.

■ Chapter 4, “Upgrading the HA/F100 to an HA/F200,” illustrates procedures for upgrading the Compaq ProLiant Cluster HA/F100 to an HA/F200.

■ Chapter 5, “Managing the Compaq ProLiant Clusters HA/F100 and HA/F200,” includes techniques for managing and maintaining the Compaq ProLiant Clusters HA/F100 and HA/F200.

■ Chapter 6, “Troubleshooting the Compaq ProLiant Clusters HA/F100 and HA/F200,” contains high-level troubleshooting information for the Compaq ProLiant Clusters HA/F100 and HA/F200.

■ Appendix A, “Cluster Configuration Worksheets,” contains blank worksheets to copy and use as directed in the cluster design and the installation steps outlined in chapters 2, 3, and 4.

■ Appendix B, “Using Compaq Redundancy Manager in a Single-Server Environment,” explains how to implement Redundancy Manager in a nonclustered server environment.

■ Appendix C, “Software and Firmware Versions,” provides software and firmware version levels that are required for your Compaq ProLiant Cluster.

■ “Glossary” provides definitions for terms used throughout the guide.

Text Conventions

This document uses the following conventions to distinguish elements of text:

Keys: Keys appear in boldface. A plus sign (+) between two keys indicates that they should be pressed simultaneously.

USER INPUT: User input appears in a different typeface and in uppercase.

FILENAMES: File names appear in uppercase italics.

Menu Options, Command Names, Dialog Box Names: These elements appear with initial capital letters.

COMMANDS, DIRECTORY NAMES, and DRIVE NAMES: These elements appear in uppercase.

Type: When you are instructed to type information, type the information without pressing the Enter key.

Enter: When you are instructed to enter information, type the information and then press the Enter key.


Symbols in Text

These symbols may be found in the text of this guide. They have the following meanings.

WARNING: Text set off in this manner indicates that failure to follow directions in the warning could result in bodily harm or loss of life.

CAUTION: Text set off in this manner indicates that failure to follow directions could result in damage to equipment or loss of information.

IMPORTANT: Text set off in this manner presents clarifying information or specific instructions.

NOTE: Text set off in this manner presents commentary, sidelights, or interesting points of information.

Getting Help

If you have a problem and have exhausted the information in this guide, you can get further information and other help in the following locations.

Compaq Technical Support

You are entitled to free hardware technical telephone support for your product for as long as you own the product. A technical support specialist will help you diagnose the problem or guide you to the next step in the warranty process.

In North America, call the Compaq Technical Phone Support Center at 1-800-OK-COMPAQ. This service is available 24 hours a day, 7 days a week. For continuous quality improvement, calls may be recorded or monitored.

Outside North America, call the nearest Compaq Technical Support Phone Center. Telephone numbers for worldwide Technical Support Centers are listed on the Compaq website. Access the Compaq website by logging on to the Internet:

http://www.compaq.com


Be sure to have the following information available before you call Compaq:

■ Technical support registration number (if applicable)

■ Product serial numbers

■ Product model name and number

■ Applicable error messages

■ Add-on boards or hardware

■ Third-party hardware or software

■ Operating system type and revision level

■ Detailed, specific questions

For additional information, refer to documentation related to specific hardware and software components of the Compaq ProLiant Clusters HA/F100 and HA/F200, including, but not limited to, the following:

■ Documentation related to the ProLiant servers you are clustering (for example, manuals, posters, and performance and tuning guides)

■ Compaq RA4000 Array documentation

■ Microsoft NT Server 4.0/Enterprise Edition Administrator’s Guide

Compaq Website

The Compaq website has information on this product as well as the latest drivers and Flash ROM images. You can access the Compaq website by logging on to the Internet:

http://www.compaq.com

Compaq Authorized Reseller

For the name of your nearest Compaq authorized reseller:

■ In the United States, call 1-800-345-1518.

■ In Canada, call 1-800-263-5868.

■ Elsewhere, see the Compaq website for locations and telephone numbers.


Chapter 1

Architecture of the Compaq ProLiant Clusters HA/F100 and HA/F200

Overview of Compaq ProLiant Clusters HA/F100 and HA/F200 Components

A cluster is a loosely coupled collection of servers and storage that acts as a single system, presents a single-system image to clients, provides protection against system failures, and provides configuration options for load balancing.

Clustering is an established technology that may provide one or more of the following benefits:

■ Availability

■ Scalability

■ Manageability

■ Investment protection

■ Operational efficiency


Compaq ProLiant Clusters HA/F100 and HA/F200 platforms are composed of the following.

Hardware:

■ Compaq ProLiant servers

■ Compaq StorageWorks RAID Array 4000 Storage System (formerly Compaq Fibre Channel Storage System)

  □ Compaq StorageWorks RAID Array 4000

  □ Compaq StorageWorks Fibre Channel Storage Hub (7- or 12-port)

  □ Compaq StorageWorks Fibre Channel Host Adapter

  □ Compaq StorageWorks RA4000 Controller

  □ Gigabit Interface Converter-Shortwave (GBIC-SW) modules

■ Cables

■ Cluster interconnect adapters

Software:

■ Microsoft Windows NT Server 4.0 Enterprise Edition

■ Compaq SmartStart and Support Software CD

■ Compaq Support Software Diskette for Windows NT (NT SSD)

■ Compaq Redundancy Manager (Fibre Channel)

■ Compaq Cluster Verification Utility

■ Compaq Insight Manager

■ Compaq Insight Manager XE

■ Compaq Intelligent Cluster Administrator

This chapter discusses the role each of these products plays in bringing a complete clustering solution to your computing environment.


Compaq ProLiant Cluster HA/F100

The Compaq ProLiant Cluster HA/F100 includes these hardware solution components:

■ 2 Compaq ProLiant servers

■ 1 or more Compaq StorageWorks RAID Array 4000s

■ 1 Compaq StorageWorks Fibre Channel Storage Hub (7- or 12-port)

■ 1 Compaq StorageWorks RA4000 Controller per RA4000

■ 1 Compaq StorageWorks Fibre Channel Host Adapter per server

■ Network interface cards (NICs)

■ Gigabit Interface Converter-Shortwave (GBIC-SW) modules

■ Cables

  □ Multi-mode Fibre Channel cable

  □ Ethernet crossover cable

  □ Network (LAN) cable

The Compaq ProLiant Cluster HA/F100 includes these software solution components:

■ Microsoft Windows NT Server 4.0 Enterprise Edition

■ Compaq SmartStart and Support Software CD

■ Compaq Support Software Diskette for Windows NT (NT SSD)

■ Compaq Cluster Verification Utility

■ Compaq Insight Manager (optional)

■ Compaq Insight Manager XE (optional)

■ Compaq Intelligent Cluster Administrator (optional)

NOTE: See Appendix C, “Software and Firmware Versions,” for the necessary software version levels for your cluster.


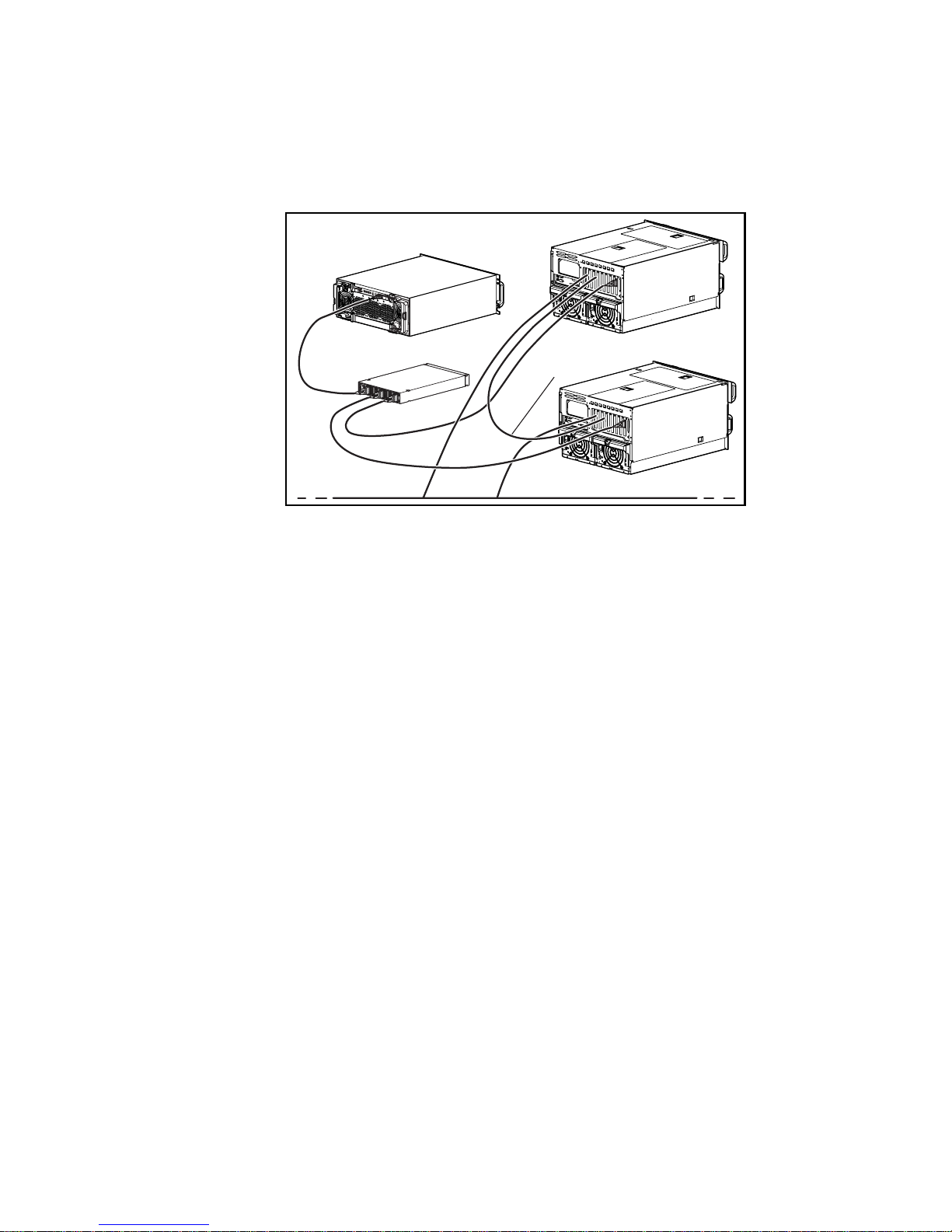

The followin g illustration depic t s the HA/F100 configuration:

RA4000

Dedicated

Interconnect

LAN

Node 2

Node 1

storage hubstorage hub

Figure 1-1. Hardware components of the Compaq ProLiant Cluster HA/F100

The Compaq ProLiant Cluster HA/F100 configuration is a cluster with a Compaq StorageWorks RAID Array 4000, a single Compaq StorageWorks Fibre Channel Storage Hub (7- or 12-port), two Compaq ProLiant servers (nodes), a single Compaq StorageWorks Fibre Channel Host Adapter per server, a single Compaq StorageWorks RA4000 Controller per RA4000, and a dedicated interconnect.
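The per-server and per-array quantities in the HA/F100 hardware list can be turned into totals with a small calculation. The following Python sketch is illustrative only and is not part of any Compaq tool: the `ClusterModel` class, the `hardware_counts` function, and the dictionary keys are hypothetical names, and the counts come solely from the HA/F100 component list in this section. NICs, GBIC-SW modules, and cables are left out because this section gives no quantities for them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterModel:
    """Per-unit hardware quantities for one cluster model (hypothetical helper)."""
    name: str
    hubs: int                      # Fibre Channel storage hubs in the cluster
    controllers_per_array: int     # RA4000 Controllers in each RA4000
    host_adapters_per_server: int  # Fibre Channel Host Adapters in each server
    servers: int = 2               # both models use two ProLiant servers


# Quantities taken from the HA/F100 hardware component list.
HA_F100 = ClusterModel(
    name="HA/F100",
    hubs=1,
    controllers_per_array=1,
    host_adapters_per_server=1,
)


def hardware_counts(model: ClusterModel, arrays: int) -> dict:
    """Return total component counts for a cluster with `arrays` RA4000s."""
    if arrays < 1:
        raise ValueError("a cluster needs at least one RA4000")
    return {
        "ProLiant servers": model.servers,
        "RA4000 arrays": arrays,
        "Fibre Channel storage hubs": model.hubs,
        "RA4000 controllers": model.controllers_per_array * arrays,
        "Fibre Channel host adapters": model.host_adapters_per_server * model.servers,
    }


if __name__ == "__main__":
    # Example: an HA/F100 with two RA4000 arrays.
    for component, count in hardware_counts(HA_F100, arrays=2).items():
        print(f"{count} x {component}")
```

Only the controller total scales with the number of arrays; the hub and host adapter totals stay fixed for a given model.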


Compaq ProLiant Cluster HA/F200

The Compaq ProLiant Cluster HA/F200 adds Redundancy Manager software

and a second, r edundant, Fibre Channel Arbitrated Loop (FC-AL) to the

HA/F100 configuration. The Redundancy Manager software, in conjunction

with redundant fibre channel loops, enhances the high availability features of

the HA/F200.

The Compaq ProLiant Cluster HA/F200 includes these hardware solution

components:

■ 2 Compaq ProLiant servers

■ 1 or more Compaq StorageWorks RAID Array 4000s

■ 2 Compaq StorageWorks Fibre Channel Storage Hubs (7- or 12-port)

■ 2 Compaq StorageWorks RA4000 Controllers per RA4000

■ 2 Compaq StorageWorks Fibre Channel Host Adapters per server

■ Network interface cards (NICs)

■ Gigabit Interface Converter-Shortwave (GBIC-SW) modules

■ Cables

❑ Multi-mode Fibre Channel cable

❑ Ethernet crossover cable

❑ Network (LAN) cable

The Compaq ProLiant Cluster HA/F200 includes these software solution

components:

■ Microsoft Windows NT Server 4.0 Enterprise Edition

■ Compaq SmartStart and Support Software CD

■ Compaq Support Software Diskette for Windows NT (NT SSD)

■ Compaq Redundancy Manager (Fibre Channel)

■ Compaq Cluster Verification Utility

■ Compaq Insight Manager (optional)

■ Compaq Insight Manager XE (optional)

■ Compaq Intelligent Cluster Administrator (optional)

NOTE: See Appendix C, “Software and Firmware Versions,” for the necessary software

version levels for your cluster.

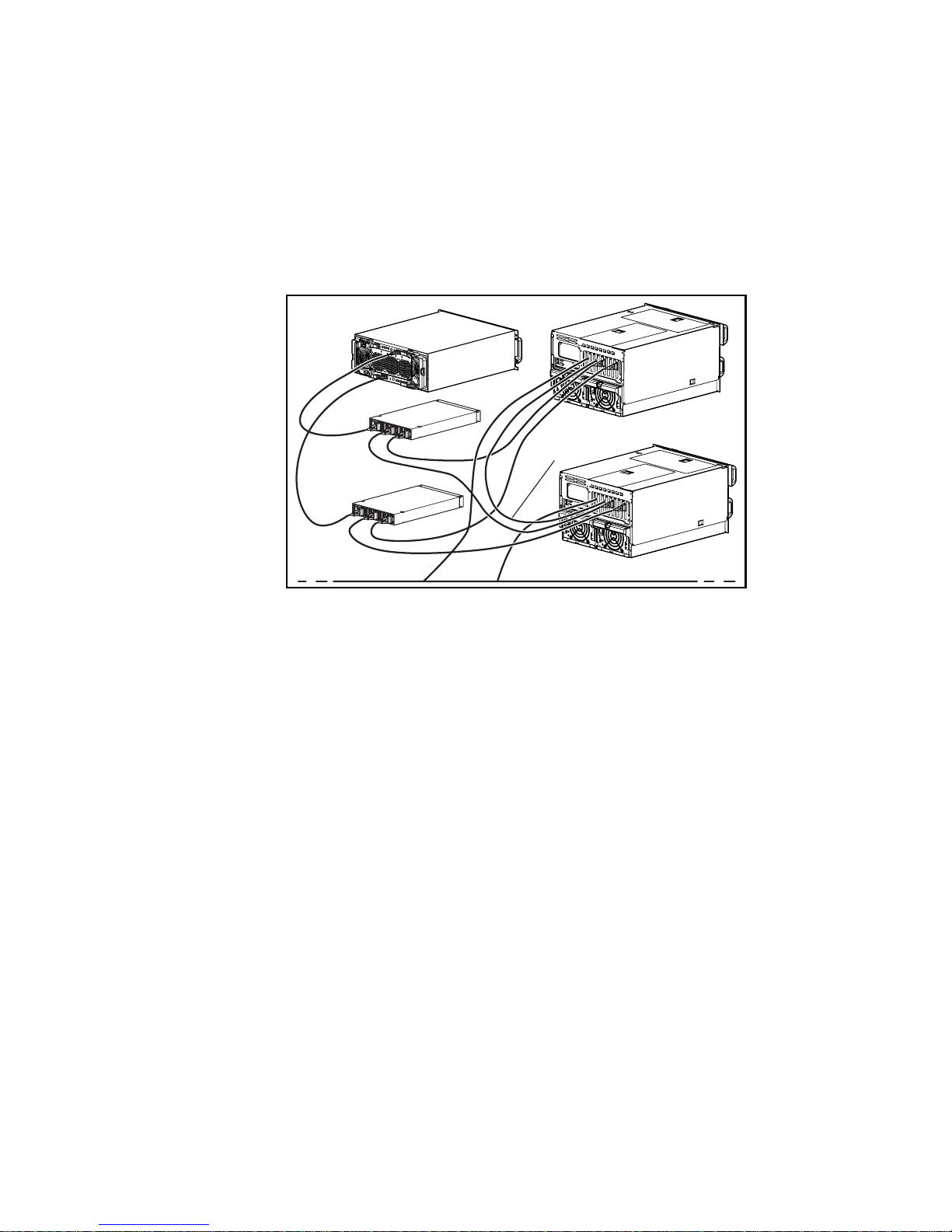

The followin g illustration depic t s the basic HA/F200 configuration.

Node 1

RA4000

Dedicated

Interconnect

LAN

Node 2

storage hubstorage hub

storage hubstorage hub

Figure 1-2. Hardware components of the Compaq ProLiant Cluster HA/F200

The Compaq ProLiant Cluster HA/F200 configuration is a cluster with one or more Compaq StorageWorks RAID Array 4000s, two Compaq StorageWorks Fibre Channel Storage Hubs (7- or 12-port), two Compaq ProLiant servers, two Compaq StorageWorks Fibre Channel Host Adapters per server, two Compaq StorageWorks RA4000 Controllers per RA4000, and a dedicated interconnect.
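The per-cluster hardware counts for the two configurations can be summarized in a small data structure. The following Python sketch is illustrative only: the class, field, and function names are hypothetical and are not part of any Compaq tool. The counts themselves are taken from the component lists in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterConfig:
    """Hardware counts for one cluster model (names are illustrative)."""
    name: str
    servers: int
    storage_hubs: int
    controllers_per_ra4000: int
    host_adapters_per_server: int
    redundancy_manager: bool


# Values taken from the HA/F100 and HA/F200 component lists.
HA_F100 = ClusterConfig("HA/F100", servers=2, storage_hubs=1,
                        controllers_per_ra4000=1,
                        host_adapters_per_server=1,
                        redundancy_manager=False)
HA_F200 = ClusterConfig("HA/F200", servers=2, storage_hubs=2,
                        controllers_per_ra4000=2,
                        host_adapters_per_server=2,
                        redundancy_manager=True)


def total_host_adapters(cfg: ClusterConfig) -> int:
    """Host adapters needed across both cluster nodes."""
    return cfg.servers * cfg.host_adapters_per_server


def total_controllers(cfg: ClusterConfig, ra4000s: int) -> int:
    """RA4000 Controllers needed for the given number of RA4000s."""
    if ra4000s < 1:
        raise ValueError("a cluster needs at least one RA4000")
    return ra4000s * cfg.controllers_per_ra4000
```

For example, `total_host_adapters(HA_F200)` gives four adapters, two for each cluster node.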

Compaq ProLiant Servers

Compaq industry-standard servers are a primary component of all models of Compaq ProLiant Clusters. At the high end of the ProLiant server line, several high availability and manageability features are incorporated as a standard part of the server feature set. These include online backup processors, a PCI bus with hot-plug capabilities, redundant hot-pluggable fans, redundant processor power modules, redundant Network Interface Controller (NIC) support, dual-ported hot-pluggable 10/100 NICs, and redundant hot-pluggable power supplies (on most high-end models). Many of these features are also available at the low end and mid-range of the Compaq ProLiant server line.

Compaq has logged thousands of hours testing multiple models of Compaq servers in clustered configurations and has successfully passed the Microsoft Cluster Certification Test Suite on numerous occasions. In fact, Compaq was the first vendor to be certified using a shared storage subsystem connected to ProLiant servers through Fibre Channel Arbitrated Loop technology.

NOTE: Visit the Compaq High Availability website

(http://www.compaq.com/highavailability) to obtain a comprehensive list of

cluster-certified servers.

Compaq StorageWorks RAID Array 4000 Storage System

Microsoft Cluster Server (MSCS) is based on a cluster architecture known as

shared storage clustering, in which clustered servers share access to a

common set of hard drives. MSCS requires all clustered (shared) data to be

stored in an external storage system.

The Compaq StorageWorks RAID Array 4000 storage system is the shared

storage system for the Compaq ProLiant Clusters HA/F100 and HA/F200. The

storage system consists of the following components and options:

■ Compaq StorageWorks RAID Array 4000

■ Compaq StorageWorks Fibre Channel Storage Hub (7- or 12-port)

■ Compaq StorageWorks RA4000 Controllers

■ Compaq StorageWorks Fibre Channel Host Adapters

■ Gigabit Interface Converter-Shortwave (GBIC-SW) modules

■ Cables

❑ Multi-mode Fibre Channel cable

❑ Ethernet crossover cable

❑ Network (LAN) cable

Each of these components is discussed in the following sections. For detailed

information, refer to the following guides:

■ Compaq StorageWorks RAID Array 4000 User Guide

■ Compaq StorageWorks RAID Array 4000 Configuration Poster

■ Compaq StorageWorks RAID Array 4000 Redundant Array Controller Configuration Poster

■ Compaq StorageWorks Fibre Channel Host Adapter Installation Guide

■ Compaq StorageWorks Fibre Channel Storage Hub 7 Installation Guide

■ Compaq StorageWorks Fibre Channel Storage Hub 12 Installation

Guide

■ Compaq Fibre Channel Troubleshooting Guide

For more information about shared storage clustering, refer to the

Microsoft Cluster Server Administrator’s Guide.

Compaq StorageWorks RAID Array 4000

The Compaq StorageWorks RAID Array 4000 (RA4000, previously Compaq Fibre Channel Storage System) is the storage cabinet that contains the disk drives, power supply, and array controller. The RA4000 can hold twelve 1-inch or eight 1.6-inch Wide-Ultra SCSI drives. The RA4000 supports the same hot-pluggable drives as Compaq Servers and Compaq ProLiant Storage Systems, online capacity expansion, online spares, and RAID fault tolerance of SMART-2 Array Controller technology. The RA4000 also supports hot-pluggable, redundant power supplies and fans, hot-pluggable hard drives, and MSCS.

The HA/F100 and HA/F200 ProLiant Clusters must have at least one RA4000 set up as external shared storage. Consult the Order and Configuration Guide for Compaq ProLiant Cluster HA/F100 and HA/F200 at the Compaq High Availability website (http://www.compaq.com/highavailability) to determine the maximum supported cluster configuration.

Compaq StorageWorks Fibre Channel Storage Hubs

The servers in a Compaq ProLiant Cluster HA/F100 or HA/F200 are connected to one or more Compaq StorageWorks RAID Array 4000 shared external storage systems using industry-standard Fibre Channel Arbitrated Loop (FC-AL) technology. The components used to implement the Fibre Channel Arbitrated Loop include shortwave (multi-mode) fiber optic cables, Gigabit Interface Converters-Shortwave (GBIC-SW), and Fibre Channel storage hubs. The Compaq StorageWorks Fibre Channel Storage Hub is a critical component of the FC-AL configuration and allows up to five RA4000s to be connected to the cluster servers in a “star” topology.

For the HA/F100, a single hub is used. For the HA/F200, two redundant hubs are used. Either the 7-port or 12-port hub may be used in both types of clusters. For the HA/F200 cluster, 7-port and 12-port hubs may be combined, if desired.

If the maximum number of supported RA4000s (currently five) is connected to either type of cluster using a 12-port hub, there will be unused ports. Compaq does not currently support using these ports to connect additional RA4000s. Other FC-AL capable devices, such as tape backup systems, should not be connected to these unused ports under any circumstances.
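The port arithmetic behind the unused-port remark can be sketched as follows. This is a rough illustration, not a Compaq sizing tool. It assumes that each hub carries one connection from each of the two servers plus one connection from each RA4000, which the text implies but does not state outright. All function and constant names are hypothetical.

```python
SERVERS = 2              # cluster nodes connected to each hub
MAX_RA4000S = 5          # current supported maximum per the guide
HUB_SIZES = (7, 12)      # available hub models, by port count


def ports_in_use(ra4000s: int) -> int:
    """Ports occupied on one hub (assumption: one port per server
    and one port per RA4000)."""
    if not 1 <= ra4000s <= MAX_RA4000S:
        raise ValueError("supported range is 1 to 5 RA4000s")
    return SERVERS + ra4000s


def unused_ports(hub_ports: int, ra4000s: int) -> int:
    """Ports left empty on a hub of the given size."""
    if hub_ports not in HUB_SIZES:
        raise ValueError("hub must have 7 or 12 ports")
    return hub_ports - ports_in_use(ra4000s)
```

Under this assumption, five RA4000s fill a 7-port hub exactly, while a 12-port hub is left with five empty ports that must stay unused.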

Compaq StorageWorks RA4000 Controller

The Compaq StorageWorks RA4000 Controller is fully RAID capable and manages all of the drives in the RA4000 storage array. Each RA4000 is shipped with one controller installed. In an HA/F100 cluster, each array controller is connected to both servers through a single Fibre Channel storage hub. In an HA/F200 cluster, the addition of a second Compaq StorageWorks RA4000 Redundant Controller is required to provide redundancy. These redundant controllers are connected to each server through two separate, redundant Fibre Channel storage hubs. This dual-connection configuration implements a vital aspect of the enhanced high availability features of the HA/F200 cluster.

Compaq StorageWorks Fibre Channel Host Adapter

Compaq StorageWorks Fibre Channel Host Adapters (host bus adapters) are the interface between the server and the RA4000 storage system. At least two host bus adapters (PCI or EISA), one for each cluster node, are required in the Compaq ProLiant Cluster HA/F100. At least four host bus adapters (PCI only), two for each cluster node, are required in the HA/F200 configuration.

For more information about this product, refer to the Compaq StorageWorks

Fibre Channel Host Adapter Installation Guide.

Gigabit Interface Converter-Shortwave

Two Gigabit Interface Converter-Shortwave (GBIC-SW) modules are required

for each Fibre Channel cable installed. Two GBIC-SW modules are provided

with each RA4000 and host bus adapter.

GBIC-SW modules hot-plug into Fibre Channel storage hubs, array controllers, and host bus adapters. These converters provide ease of expansion and 100 MB/s performance. GBIC-SW modules support distances up to 500 meters using multi-mode fiber optic cable.
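Because each Fibre Channel cable needs a GBIC-SW module at both ends, the module count follows directly from the cable count. The sketch below is a minimal illustration with hypothetical names. The cable-count helper additionally assumes one cable from each server and from each RA4000 to every hub, which is an inference from the configuration descriptions rather than a stated rule.

```python
GBICS_PER_CABLE = 2  # one GBIC-SW module at each end of a cable


def gbic_modules_required(fibre_channel_cables: int) -> int:
    """GBIC-SW modules needed for the given number of cables."""
    if fibre_channel_cables < 0:
        raise ValueError("cable count cannot be negative")
    return GBICS_PER_CABLE * fibre_channel_cables


def fibre_channel_cables(hubs: int, ra4000s: int, servers: int = 2) -> int:
    """Cables in the loop(s), assuming one cable per server and one
    per RA4000 on every hub (an assumption, see above)."""
    if hubs < 1 or ra4000s < 1 or servers < 1:
        raise ValueError("counts must be at least 1")
    return hubs * (servers + ra4000s)
```

Under these assumptions, an HA/F100 with one RA4000 uses three cables and six modules, and an HA/F200 with one RA4000 uses six cables and twelve modules.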

Cables

Three general categories of cables are used for Compaq ProLiant HA/F100

and HA/F200 clusters:

Server to Storage

Shortwave (multi-mode) fiber optic cables are used to connect the servers,

hubs and RA4000s in a Fibre Channel Arbitrated Loop configuration.

Cluster Interconnect

Two types of cluster interconnect cables may be used depending on the type of

devices used to implement the interconnect, and whether the interconnect is

dedicated or shared:

1. Ethernet

If Ethernet NICs are used to implement the interconnect, there are three options:

❑ Dedicated Interconnect Using an Ethernet Crossover Cable: An Ethernet crossover cable (supplied in both the HA/F100 and HA/F200 kits) can be used to connect the NICs directly together to create a dedicated interconnect.

❑ Dedicated Interconnect Using Standard Ethernet Cables and a Private Ethernet Hub: Standard Ethernet cables can be used to connect the NICs together through a private Ethernet hub to create another type of dedicated interconnect. Note that an Ethernet crossover cable should not be used with an Ethernet hub because the hub performs the crossover function.

❑ Shared Interconnect Using Standard Ethernet Cables and a Public Hub: Standard Ethernet cables may also be used to connect the NICs to a public network to create a nondedicated interconnect.

2. ServerNet

If Compaq ServerNet adapters are used to implement the interconnect, special ServerNet cables must be used.

Network Interconnect

Standard Ethernet cables are used to provide this type of connection.

Cluster Interconnect

The cluster interconnect is a data path over which nodes of a cluster

communicate. This type of communication is termed intracluster

communication. At a minimum, the interconnect consists of two network

adapters (one in each server) and a cable connecting the adapters.

The cluster nodes use the interconnect data path to:

■ Communicate individual resource and overall cluster status

■ Send and receive heartbeat signals

■ Update modified registry information

IMPORTANT: MSCS requires TCP/IP as the cluster communication protocol. When

configuring the interconnects, be sure to enable TCP/IP.

Client Network

Every client/server application requires a local area network, or LAN, over

which client machines and servers communicate. The components of the LAN

are no different than with a stand-alone server configuration.

Because clients that want the full advantage of the cluster now connect to the cluster rather than to a specific server, client connections are configured differently than for a stand-alone server. Clients connect to virtual servers, which are cluster groups that contain their own IP addresses.

Within this guide, communication between the network clients and the cluster

is termed cluster-to-LAN communication.

Private or Public Interconnect

There are two types of interconnect paths:

■ A private interconnect (also known as a dedicated interconnect) is used solely for intracluster (node-to-node) communication. Communication to and from network clients does not occur over this type of interconnect.

■ A public interconnect not only takes care of communication between the cluster nodes, it also shares the data path with communication between the cluster and its network clients.

For more information about Compaq-recommended interconnect strategies, refer to the White Paper, “Increasing Availability of Cluster Communications in a Windows NT Cluster,” available from the Compaq High Availability website (http://www.compaq.com/highavailability).

Interconnect Adapters

Ethernet adapters or Compaq ServerNet adapters can be used for the interconnect between the servers in a Compaq ProLiant Cluster. Either 10 Mb/s or 100 Mb/s Ethernet may be used. ServerNet adapters have built-in redundancy and provide a high-speed interconnect with 100 MB/s aggregate throughput.

Ethernet adapters can be connected together using an Ethernet crossover cable

or a private Ethernet hub. Both of these options provide a dedicated

interconnect.

Implementing a direct Ethernet or ServerNet connection minimizes the

potential single points of failure.

Redundant Interconnects

To reduce potential disruptions of intracluster communication, use a redundant path over which communication can continue if the primary path is disrupted. Compaq recommends configuring the client LAN as a backup path for intracluster communication. This provides a secondary path for the cluster heartbeat in case the dedicated primary path for intracluster communications fails. This is configured when installing the cluster software, or it can be added later using the MSCS Cluster Administrator.

It is also important to provide a redundant path to the client LAN. This can be done by using a second NIC as a hot standby for the primary client LAN NIC. There are two ways to achieve this, and the method you choose depends on your hardware. One way is through use of the Redundant NIC Utility available on all Compaq 10/100 Fast Ethernet products. The other option is through the use of the Network Fault Tolerance feature designed to operate with the Compaq 10/100 Intel silicon-based NICs. These features allow two NICs to be configured so that one is a hot backup for the other.

For detailed information about interconnect redundancy, refer to the Compaq White Paper, “Increasing Availability of Cluster Communications in a Windows NT Cluster,” available from the Compaq High Availability website (http://www.compaq.com/highavailability).

Microsoft Software

Microsoft Windows NT Server 4.0/Enterprise Edition (Windows NTS/E) is the operating system for the Compaq ProLiant Clusters HA/F100 and HA/F200. Microsoft Cluster Server (MSCS) is part of Windows NTS/E. As the core component of Windows NT clustering, MSCS provides the underlying technology to:

■ Send and receive heartbeat signals between the cluster nodes.

■ Monitor the state of each cluster node.

■ Initiate failover and failback events.

NOTE: MSCS will only run with Windows NTS/E. Previous versions of Windows NT are not

supported.

Microsoft Cluster Administrator, another component of Windows NTS/E,

allows you to do the following:

■ Define and modify cluster groups

■ Manually control the cluster

■ View the current state of the cluster

NOTE: Microsoft Windows NTS/E must be purchased separately from your Compaq

ProLiant Cluster, through your Microsoft reseller.

Compaq Software

Compaq offers an extensive set of features and optional tools to support the

configuration and management of your Compaq ProLiant Cluster:

■ Compaq SmartStart and Support Software CD

■ Compaq Redundancy Manager (Fibre Channel)

■ Compaq Insight Manager

■ Compaq Insight Manager XE

■ Compaq Intelligent Cluster Administrator

■ Compaq Cluster Verification Utility

Compaq SmartStart and Support Software CD

Compaq SmartStart is located on the SmartStart and Support Software CD shipped with ProLiant servers. SmartStart is the recommended way to configure the Compaq ProLiant Cluster HA/F100 or HA/F200. SmartStart uses a step-by-step process to configure the cluster and load the system software. For information concerning SmartStart, refer to the Compaq Server Setup and Management pack.

For information about using SmartStart to install the Compaq ProLiant Cluster HA/F100 and HA/F200, see Chapters 3 and 4 of this guide.

Compaq Array Configuration Utility

The Compaq Array Configuration Utility, found on the Compaq SmartStart

and Support Software CD, is used to configure the array controller, add disk

drives to an existing configuration, and expand capacity.

Compaq System Configuration Utility

The SmartStart and Support Software CD also contains the Compaq System Configuration Utility. This utility is the primary means of configuring hardware settings in your server, such as I/O addresses, the boot order of disk controllers, and so on.

For information concerning the Compaq System Configuration Utility, refer to

the Compaq Server Setup and Management pack.

Compaq Support Software Diskette (NT SSD)

The Compaq Support Software Diskette for Windows NT (NT SSD) contains

device drivers and utilities that enable you to take advantage of specific

capabilities offered on Compaq products. These drivers are provided for use

with Compaq hardware only.

The NT SSD is included in the Compaq Server Setup and Management pack.

Options ROMPaq Utility

The SmartStart and Support Software CD also contains the Options ROMPaq

utility. Options ROMPaq updates the firmware on the Compaq StorageWorks

RA4000 Controllers and the hard drives.

Fibre Channel Fault Isolation Utility (FFIU)

The SmartStart and Support Software CD also contains the Fibre Channel Fault Isolation Utility (FFIU). The FFIU verifies the integrity of a new or existing FC-AL installation. This utility provides fault detection and help in locating a failing device on the FC-AL.

Compaq Redundancy Manager (Fibre Channel)

Compaq Redundancy Manager, a Compaq-written software component that works in conjunction with the Windows NT file system (NTFS), increases the availability of both single-server and clustered systems that use the Compaq StorageWorks RAID Array 4000 Storage System and Compaq ProLiant servers. Redundancy Manager can detect failures in the host bus adapter, array controller, or other Fibre Channel Arbitrated Loop components. When such a failure occurs, I/O processing is rerouted through a redundant path, allowing applications to continue processing. This rerouting is transparent to the Windows NT file system. Therefore, in an HA/F200 configuration, it is not necessary for MSCS to fail resources over to the other node. Redundancy Manager, in combination with redundant hardware components, is the basis for the enhanced high availability features of the HA/F200.

The Compaq Redundancy Manager (Fibre Channel) CD is included in the Compaq ProLiant Cluster HA/F200 kit.

Compaq Cluster Verification Utility

The Compaq Cluster Verification Utility (CCVU) is a software utility that can

be used to validate several key aspects of the Compaq ProLiant

Cluster HA/F100 and HA/F200 and their components.

The stand-alone utility can be run from either of the cluster nodes or remotely

from a network client attached to the cluster. When CCVU is run remotely, it

can validate any number of Windows NT clusters to which the client is

attached.

The CCVU tests your cluster configuration in the following categories:

■ A node test verifies that the clustered servers are supported in HA/F100 and HA/F200 cluster configurations.

■ Networking tests verify that your setup meets the minimum cluster requirements for network cards, connectivity, and TCP/IP configuration.

■ Storage tests verify the presence and minimum configuration

requirements of supported host bus adapters, array controllers, and

external storage subsystem.

■ System software tests verify that Microsoft Windows NT

Server 4.0/Enterprise Edition has been installed.

The Compaq Cluster Verification Utility CD is included in the HA/F100 and

HA/F200 cluster kits. For detailed information about the CCVU, refer to the

online documentation (CCVU.HLP) included on the CD.

Compaq Insight Manager

Compaq Insight Manager, loaded from the Compaq Management CD, is an

easy-to-use, console-based software utility for collecting server and cluster

information. Compaq Insight Manager performs the following functions:

■ Monitors fault conditions and system status

■ Monitors shared storage and interconnect adapters

■ Forwards server alert fault conditions

■ Remotely controls servers

The Integrated Management Log collects and feeds data to Compaq Insight

Manager. This log is used with the Insight Management Desktop (IMD),

Remote Insight (optional controller), and SmartStart.

In Compaq servers, each hardware subsystem, such as disk storage, system memory, and system processor, has a robust set of management capabilities. Compaq Full Spectrum Fault Management notifies you of impending fault conditions and keeps the server up and running in the unlikely event of a hardware failure.

For information concerning Compaq Insight Manager, refer to the Compaq

Server Setup and Management pack.

Compaq Insight Manager XE

Compaq Insight Manager XE is a Web-based management system. It can be used in conjunction with Compaq Insight Manager agents as well as its own Web-enabled agents. This browser-based utility provides increased flexibility and efficiency for the administrator. It extends the functionality of Compaq Insight Manager and works in conjunction with the Cluster Monitor subsystem, providing a common data repository and control point for enterprise servers and clusters, desktops, and other devices using either SNMP- or DMI-based messaging.

Compaq Insight Manager XE is an optional CD available upon request from the Compaq System Management website (http://www.compaq.com/sysmanage).

Cluster Monitor

Cluster Monitor is a Web-based monitoring subsystem of Compaq Insight Manager XE. With Cluster Monitor, you can view all clusters from a single browser and configure monitor points and operational performance thresholds; Cluster Monitor alerts you when a threshold is met or exceeded on your application systems. Cluster Monitor relies heavily on the Compaq Insight Manager agents for basic information about system health. It also has custom agents that are designed specifically for monitoring cluster health. Cluster Monitor provides access to the Compaq Insight Manager alarm, device, and configuration information.

Cluster Monitor allows the administrator to view some or all of the clusters, depending on administrative controls that are specified when clusters are discovered by Compaq Insight Manager XE.

Compaq Intelligent Cluster Administrator

Compaq Intelligent Cluster Administrator extends Compaq Insight Manager and Cluster Monitor by enabling administrators to configure and manage ProLiant clusters from a Web browser. With Compaq Intelligent Cluster Administrator, you can copy, modify, and dynamically install a cluster configuration on the same physical cluster or on any physical cluster anywhere in the system, through the Web.

Compaq Intelligent Cluster Administrator checks for any cluster destabilizing conditions, such as disk thresholds or application slowdowns, and reallocates cluster resources to meet processing demands. This software also performs dynamic allocation of cluster resources that may be failing without causing the cluster to fail over.

Compaq Intelligent Cluster Administrator also provides initialized cluster configurations that allow rapid cluster generation as well as cluster configuration builder wizards for extending the Compaq initialized configurations.

Compaq Intelligent Cluster Administrator is included with the HA/F200 cluster kit and can be purchased as a stand-alone component for the HA/F100 cluster.

Resources for Application Installation

The client/server software applications are among the key components of any cluster. Compaq is working with its key software partners to ensure that cluster-aware applications are available and that the applications work seamlessly on Compaq ProLiant clusters.

Compaq provides a number of Integration TechNotes and White Papers to

assist you with installing these applications in a Compaq ProLiant Cluster

environment.

Visit the Compaq High Availability website (http://www.compaq.com/highavailability) to download current versions of these TechNotes and other technical documents.

IMPORTANT: Your software applications may need to be updated to take full advantage

of clustering. Contact your software vendors to check whether their software supports

MSCS and to ask whether any patches or updates are available for MSCS operation.

Chapter 2

Designing the Compaq ProLiant Clusters HA/F100 and HA/F200

Before connecting any cables or powering on any machines, it is important to

understand how all of the various cluster components and concepts fit together

to meet your information system needs. The major topics discussed in this

chapter are:

■ Planning Considerations

■ Capacity Planning

■ Network Considerations

■ Failover/Failback Planning

In addition to reading this chapter, read the planning chapter in

Microsoft Cluster Server Administrator’s Guide.

Planning Considerations

To correctly assess capacity, network, and failover needs in your business

environment, it is important to have a good understanding of clustering and the

things that affect the availability of clusters. The items detailed in this section

will help you design your Compaq ProLiant Cluster so that it addresses your

specific availability needs.

■ Cluster configuration design is addressed in “Cluster Configurations.”

■ A step-by-step approach to creating cluster groups is discussed in

“Cluster Groups.”

■ Recommendations regarding how to reduce or eliminate single points of

failure are contained in the “Reducing Single Points of Failure in the

HA/F100 Configuration” section of this chapter. By definition, a highly

available system is not continuously available and therefore may have

single points of failure.

NOTE: The discussion in this chapter relating to single points of failure applies only to the

Compaq ProLiant Cluster HA/F100. The HA/F200 includes dual redundant loops, which

eliminate certain single points of failure contained in the HA/F100.

Cluster Configurations

Although there are many ways to set up clusters, most configurations fall into

two categories: active/active and active/standby.

Active/Active Configuration

The core definition of an active/active configuration is that each node is

actively processing data when the cluster is in a normal operating state. Both

the first and second nodes are “active.” Because both nodes are processing

client requests, an active/active design maximizes the use of all hardware in

both nodes.

An active/active configuration has two primary designs:

■ The first design uses Microsoft Cluster Server (MSCS) failover

capabilities on both nodes, enabling Node1 to fail over clustered

applications to Node2 and enabling Node2 to fail over clustered

applications to Node1. This design optimizes availability since both

nodes can fail over applications to each other.

■ The second design is a one-way failover. For example, MSCS may be

set up to allow Node1 to fail over clustered applications to Node2, but

not to allow Node2 to fail over clustered applications to Node1. While

this design increases availability, it does not maximize availability since

failover is configured on only one node.

When designing cluster nodes to fail over to each other, ensure that each

server has enough capacity, memory, and processor power to run all

applications (all applications running on the first node plus all clustered

applications running on the other node).

When designing your cluster so that only one node (Node1) fails over to the

other (Node2), ensure that Node2 has enough capacity, memory, and CPU

power to execute not only its own applications, but to run the clustered

applications that can fail over from Node1.
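The sizing rule in the two preceding paragraphs can be expressed as a simple check. The following Python sketch is a rough illustration under stated assumptions: it uses memory as a stand-in for all resources (capacity, memory, and processor power), and every class name, function name, and figure in it is hypothetical rather than taken from MSCS or any Compaq utility.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Node:
    name: str
    memory_mb: int                        # installed memory (made-up figure)
    app_memory_mb: Dict[str, int]         # clustered apps normally hosted here
    fails_over_to: Optional[str] = None   # target node, or None if no failover


def load_after_failure(survivor: Node, failed: Node) -> int:
    """Memory demand on the survivor once the other node has failed."""
    load = sum(survivor.app_memory_mb.values())
    # Applications move only if the failed node is configured to
    # fail over to this survivor (one-way designs skip this step).
    if failed.fails_over_to == survivor.name:
        load += sum(failed.app_memory_mb.values())
    return load


def has_capacity(survivor: Node, failed: Node) -> bool:
    """True if the survivor can run its own and the failed-over apps."""
    return load_after_failure(survivor, failed) <= survivor.memory_mb


# One-way failover: Node1 fails over to Node2, but not the reverse.
node1 = Node("Node1", 1024, {"order_entry": 600}, fails_over_to="Node2")
node2 = Node("Node2", 1024, {"file_print": 300})
```

In a mutual-failover design, both nodes would set `fails_over_to`, and `has_capacity` would need to hold in both directions.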

Another consideration when determining your servers’ hardware is

understanding your clustered applications’ required level of performance when

the cluster is in a degraded state (when one or more clustered applications are

running on a secondary node). If Node2 is running near peak performance

when the cluster is in a normal operating state, and if several clustered

applications are failed over from Node1, Node2 will likely execute the

clustered applications more slowly than when they were executed on Node1.

Some level of performance degradation may be acceptable. Determining how

much degradation is acceptable depends on the company.

Example 1: File & Print/File & Print

An example business scenario involves two file and print servers. The Human

Resources (HR) department uses one server, and the Marketing department

uses the other. Both servers actively run their own file shares and print

spoolers while the cluster is in its normal state (an active/active design).

If the HR server encounters a failure, it fails over its file and print services to

the Marketing server. HR clients experience a slight disruption of service

while the file shares and print spooler fail over to their secondary server. Any

jobs that were in the print spooler before the failure event will now print from

the Marketing server.

Figure 2-1. Active/active example 1 (diagram labels: File and Print: Human Resources, Capacity: Marketing; File and Print: Marketing, Capacity: Human Resources; Shared Storage: Human Resources, Marketing)

When failover is complete, all of the HR clients have full access to their file

shares and print spooler. Marketing clients do not experience any disruption of

service. All clients may experience slowed performance while the cluster runs

in a degraded state.

Example 2: Database/Database

Another scenario has two distinct database applications running on two

separate cluster nodes. One database application maintains Human Resources

records, and its primary node is set to the HR database node. The other

database application is used for market research, and its primary node is set to

the Marketing database node.

While in a normal state, both cluster nodes run at expected performance levels.

If the Marketing server encounters a failure, the market research application

and associated data resources fail over to their secondary node, the HR

database server. The Marketing clients experience a slight disruption of

service while the database resources are failed over, the database transaction

log is rolled back, and the information in the database is validated. When the

database validation is complete, the market research application is brought

online on the HR database node and the Marketing clients can reconnect to it.

While the Marketing database validation is occurring, the HR clients do not

experience any disruption of service.

Example 3: File & Print/Database

In this example, a business runs its order entry database on a single

server. The same department also has a file and print server. Order entry

is business-critical and requires maximum availability, whereas the file and

print server can be unavailable for several hours without affecting revenue.

The order entry database is therefore configured to use the file and print

server as its secondary node. The file and print server, however, is not

configured to fail over its services to the order entry server.

[Figure: Node1 and Node2 attached to shared storage. Node labels: File and Print Services; Order Entry Database; Capacity of Order Entry Database. The shared storage holds the Order Entry and File and Print data.]

Figure 2-2. Active/active example 3


If the node running the order entry database encounters a failure, the database

fails over to its secondary node. The order entry clients experience a slight

disruption of service while the database resources are failed over, the database

transaction log is rolled back, and the information in the database is validated.

When the database validation is complete, the order entry application is

brought online on the file and print server and the clients can reconnect to it.

While the database validation is occurring, file and print activities continue

without disruption.

If the file and print server encounters a failure, those services are not failed

over to the order entry server. File and print services are offline until the

problem is resolved and the node is brought back online.
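
The one-way failover policy of Example 3 can be sketched as a small model. This is only an illustration of the policy described above, not MSCS code: the class, the function, and the node names (`order_entry_node`, `file_print_node`) are hypothetical and do not correspond to any MSCS interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ClusterGroup:
    """Hypothetical model of a cluster group and its failover policy."""
    name: str
    primary: str                      # node that normally hosts the group
    secondary: Optional[str] = None   # None means "do not fail over"
    owner: Optional[str] = field(default=None, init=False)  # None = offline

    def __post_init__(self) -> None:
        # In the normal state every group runs on its primary node.
        self.owner = self.primary


def fail_node(groups: List[ClusterGroup], failed_node: str) -> Dict[str, Optional[str]]:
    """Apply each group's policy when failed_node goes down."""
    for group in groups:
        if group.owner != failed_node:
            continue  # groups hosted elsewhere are not disturbed
        if group.secondary is not None and group.secondary != failed_node:
            group.owner = group.secondary  # fail over to the secondary node
        else:
            group.owner = None  # stays offline until the node is repaired
    return {group.name: group.owner for group in groups}


def example_3_groups() -> List[ClusterGroup]:
    """Example 3: only the order entry database has a secondary node."""
    return [
        ClusterGroup("Order Entry Database", "order_entry_node", "file_print_node"),
        ClusterGroup("File and Print Services", "file_print_node"),
    ]


if __name__ == "__main__":
    print(fail_node(example_3_groups(), "order_entry_node"))
    print(fail_node(example_3_groups(), "file_print_node"))
```

In the first call both groups end up on the file and print node; in the second, the file and print group is reported as offline (`None`) while the order entry database stays where it is.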

Active/Standby Configuration

The primary difference between an active/active configuration and an

active/standby configuration is the number of servers actively processing data.

In active/standby, only one server is processing data (active) while the other

(the standby server) is in an idle state.

The standby server must be logged in to the Windows NT domain, and MSCS

must be up and running on it. However, it runs no applications. The standby

server’s only purpose is to take over failed clustered applications from its

partner. The standby server is not a preferred node for any clustered

application and, therefore, does not fail over any applications to its partner

server.

Because the standby server does not process data until it accepts failed over

applications, the limited use of the server may not justify the cost of the server.

However, the cost of standby servers is justified when performance and

availability are paramount to a business’s operations.

The standby server should be designed to run all of the clustered applications

with little or no performance degradation. Since the standby server is not

running any applications while the cluster is in a normal operating state, a

failed-over clustered application will likely execute with the same speed and

response time as if it were executing on the primary server.
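
The sizing argument above can be checked with simple arithmetic. The sketch below is a hypothetical illustration only: loads are expressed as a percentage of one node's capacity, and the figures used (70, 60, 50) are invented for the example, not measurements from any Compaq system.

```python
def can_absorb(own_load_pct: int, failed_over_load_pct: int,
               capacity_pct: int = 100) -> bool:
    """Return True if a node can carry its own load plus the failed-over load.

    All values are percentages of a single node's capacity (an assumption
    made for this sketch; real sizing must consider CPU, memory, and I/O).
    """
    return own_load_pct + failed_over_load_pct <= capacity_pct


def describe(own_load_pct: int, failed_over_load_pct: int) -> str:
    """Summarize the expected state of the surviving node after failover."""
    if can_absorb(own_load_pct, failed_over_load_pct):
        return "runs failed-over applications without overload"
    return "runs in a degraded state"


if __name__ == "__main__":
    # Active/standby: the standby node is idle, so it starts at 0 percent.
    print("standby:", describe(0, 70))
    # Active/active: the surviving node already carries its own load.
    print("active/active:", describe(60, 50))
```

With these invented figures, the idle standby node absorbs the failed-over load, while the active/active node would exceed its capacity and run degraded.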


Example – Database/Standby Server

Consider a mail order business whose

competitive edge is quick product delivery. If the product is not delivered on

time, the order is void and the sale is terminated. The business uses a single

server to perform queries and calculations on order entry information,

translating sales orders into packaging and distribution instructions for the

warehouse. With an estimated downtime cost of $1,000/hour, the company

determines that the cost of a standby server is justified.
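
One way to reason about this decision is a break-even calculation. The sketch below uses the $1,000/hour downtime cost from the scenario; the standby server cost of $20,000 is a purely hypothetical figure chosen for illustration, and the function name is likewise an assumption.

```python
def break_even_hours(standby_cost: float, downtime_cost_per_hour: float) -> float:
    """Hours of avoided downtime at which the standby server pays for itself."""
    if standby_cost < 0 or downtime_cost_per_hour <= 0:
        raise ValueError("costs must be positive")
    return standby_cost / downtime_cost_per_hour


if __name__ == "__main__":
    downtime_cost = 1000.0   # dollars per hour, from the scenario above
    standby_cost = 20000.0   # dollars, hypothetical figure for this sketch
    hours = break_even_hours(standby_cost, downtime_cost)
    print(f"Standby server breaks even after {hours:.0f} hours of avoided downtime")
```

Under these assumptions, the standby server is justified once it is expected to prevent more than that many hours of downtime over its service life.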

This mission-critical (active) server is clustered with a standby server. If the

active server encounters a failure, this critical application and all its resources

fail over to the standby server, which validates the database and brings it

online. The standby server now becomes active and the application executes at

an acceptable level of performance.

[Figure: Node1 and Node2 attached to shared storage. Node labels: Mail Order System; (Standby); Capacity (Mail Order System). The shared storage holds the Mail Order Database.]

Figure 2-3. Active/standby example


Cluster Groups

Understanding the relationship between your company’s business functions

and cluster groups is essential to getting the most from your cluster. Business

functions rely on computer systems to support activities such as transaction

processing, information distribution, and information retrieval. Each computer

activity relies on applications or services, and each application depends on

software and hardware subsystems. For example, most applications need a

storage subsystem to hold their data files.

This section is designed to help you understand which subsystems, or

resources, must be available for either cluster node to run a clustered

application properly.

Creating a Cluster Group

The easiest approach to creating a cluster group is to start by designing a

resource dependency tree. A resource dependency tree has as its top level the

business function for which cluster groups are created. Each cluster group has

branches that indicate the resources upon which the group is dependent.


Resource Dependency Tree

The following steps describe the process of creating a resource dependency

tree. Each step is illustrated by adding information to a sample resource

dependency tree. The sample is for a hypothetical Web Sales Order business

function, which consists of two cluster groups: a database server

(a Windows NT application) and a Web server (a Windows NT service).

NOTE: For this example, it is assumed that each cluster group can communicate with the

other even if they are not executing on the same node, for example, by means of an IP

address. With this assumption, one cluster group can fail over to the other node while the

remaining cluster group continues to execute on its primary node.

1. List each business function that requires a clustered application or service.

[Figure labels: Web Sales Order Business Function; Web Sales Order Cluster Group; Cluster Group #1; Cluster Group #2]

Figure 2-4. Resource dependency tree: step 1


2. List each application or service required for each business function.

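[Figure: the Web Sales Order business function branches into Web Server Service (Cluster Group #1) and Database Server Application (Cluster Group #2). Placeholder boxes appear under each group: Resource #1 (with Dependent-Resource #1 beneath it), Resource #2, and Resource #3 for Cluster Group #1; Resource #1 (with Dependent-Resource #1 beneath it), Resource #2, Resource #3, and Resource #4 for Cluster Group #2.]

Figure 2-5. Resource dependency tree: step 2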


3. List the immediate dependencies for each application (or service).

Web Sales Order Business Function
    Web Server Service (Cluster Group #1)
        Network Name
            IP Address
        Web Server Service
        Physical Disk Resource - contains web pages and web scripts
    Database Server Application (Cluster Group #2)
        Network Name
            IP Address
        Database Application
        Physical Disk Resource - contains DB log file(s)
        Physical Disk Resource - contains DB data file(s)

Figure 2-6. Resource dependency tree: step 3

4. Transfer the resource dependency tree into a Cluster Group Definition worksheet.


Figure 2-7 illustrates the worksheet for the Web Sales Order business function.

A blank copy of the worksheet is provided in Appendix A.

Cluster Group Definition Worksheet

Cluster Function:  Web Sales Order
Group #1:          Web Server Service
Group #2:          Database Server Application

Resource Definitions

Group #1 (Web Server Service)

Resource     Definition                                                 Sub Resource 1  Sub Resource 2  Sub Resource 3  Sub Resource 4
Resource #1  Network Name                                               IP Address
Resource #2  Physical Disk Resource-contains Web pages and Web scripts
Resource #3  Web Server Service
Resource #4  N/A

Group #2 (Database Server Application)

Resource     Definition                                                 Sub Resource 1  Sub Resource 2  Sub Resource 3  Sub Resource 4
Resource #1  Network Name                                               IP Address
Resource #2  Physical Disk Resource-contains database log files
Resource #3  Physical Disk Resource-contains database data files
Resource #4  Database Application
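
The worksheet for the Web Sales Order business function can also be written down as a simple data structure. The following is a minimal sketch, not part of any Compaq or MSCS tool: the dictionary layout and the `online_order` function are hypothetical, and the sketch assumes that a resource's sub resources must be online before the resource itself. Only the dependencies recorded in the worksheet are modeled.

```python
from typing import Dict, List

# Each group maps a resource to the list of its sub resources (dependencies).
# Names are taken from the worksheet; the structure itself is an assumption.
WEB_SALES_ORDER: Dict[str, Dict[str, List[str]]] = {
    "Web Server Service": {
        "Network Name": ["IP Address"],
        "Physical Disk (Web pages and Web scripts)": [],
        "Web Server Service": [],
    },
    "Database Server Application": {
        "Network Name": ["IP Address"],
        "Physical Disk (database log files)": [],
        "Physical Disk (database data files)": [],
        "Database Application": [],
    },
}


def online_order(group: Dict[str, List[str]]) -> List[str]:
    """List a group's resources so that sub resources precede their parent."""
    order: List[str] = []
    for resource, sub_resources in group.items():
        for sub in sub_resources:
            if sub not in order:
                order.append(sub)   # dependency comes first
        if resource not in order:
            order.append(resource)
    return order


if __name__ == "__main__":
    for group_name, resources in WEB_SALES_ORDER.items():
        print(group_name, "->", online_order(resources))
```

For each group, the IP Address is listed before the Network Name that depends on it, followed by the remaining resources in worksheet order.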