
TruCluster Server

Hardware Configuration

Part Number: AA-RHGWB-TE

April 2000

Product Version: TruCluster Server Version 5.0A

Operating System and Version: Tru64 UNIX Version 5.0A

This manual describes how to configure the hardware for a TruCluster Server environment. TruCluster Server Version 5.0A runs on the Tru64™ UNIX® operating system.

Compaq Computer Corporation

Houston, Texas


© 2000 Compaq Computer Corporation

COMPAQ and the Compaq logo Registered in U.S. Patent and Trademark Office. TruCluster and Tru64 are trademarks of Compaq Information Technologies Group, L.P.

Microsoft and Windows are trademarks of Microsoft Corporation. UNIX and The Open Group are trademarks of The Open Group. All other product names mentioned herein may be trademarks or registered trademarks of their respective companies.

Confidential computer software. Valid license from Compaq required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license.

Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this publication is subject to change without notice and is provided "as is" without warranty of any kind. The entire risk arising out of the use of this information remains with recipient. In no event shall Compaq be liable for any direct, consequential, incidental, special, punitive, or other damages whatsoever (including without limitation, damages for loss of business profits, business interruption or loss of business information), even if Compaq has been advised of the possibility of such damages. The foregoing shall apply regardless of the negligence or other fault of either party and regardless of whether such liability sounds in contract, negligence, tort, or any other theory of legal liability, and notwithstanding any failure of essential purpose of any limited remedy.

The limited warranties for Compaq products are exclusively set forth in the documentation accompanying such products. Nothing herein should be construed as constituting a further or additional warranty.

Contents

About This Manual

1 Introduction

1.1 The TruCluster Server Product ..... 1–1
1.2 Overview of the TruCluster Server Hardware Configuration ..... 1–2
1.3 Memory Requirements ..... 1–3
1.4 Minimum Disk Requirements ..... 1–3
1.4.1 Disks Needed for Installation ..... 1–3
1.4.1.1 Tru64 UNIX Operating System Disk ..... 1–3
1.4.1.2 Clusterwide Disk(s) ..... 1–4
1.4.1.3 Member Boot Disk ..... 1–4
1.4.1.4 Quorum Disk ..... 1–5
1.5 Generic Two-Node Cluster ..... 1–5
1.6 Growing a Cluster from Minimum Storage to a NSPOF Cluster ..... 1–7
1.6.1 Two-Node Clusters Using an UltraSCSI BA356 Storage Shelf and Minimum Disk Configurations ..... 1–8
1.6.2 Two-Node Clusters Using UltraSCSI BA356 Storage Units with Increased Disk Configurations ..... 1–10
1.6.3 Two-Node Configurations with UltraSCSI BA356 Storage Units and Dual SCSI Buses ..... 1–12
1.6.4 Using Hardware RAID to Mirror the Clusterwide Root File System and Member System Boot Disks ..... 1–13
1.6.5 Creating a NSPOF Cluster ..... 1–15
1.7 Overview of Setting up the TruCluster Server Hardware Configuration ..... 1–17

2 Hardware Requirements and Restrictions

2.1 TruCluster Server Member System Requirements ..... 2–1
2.2 Memory Channel Restrictions ..... 2–1
2.3 Fibre Channel Requirements and Restrictions ..... 2–3
2.4 SCSI Bus Adapter Restrictions ..... 2–6
2.4.1 KZPSA-BB SCSI Adapter Restrictions ..... 2–6
2.4.2 KZPBA-CB SCSI Bus Adapter Restrictions ..... 2–6
2.5 Disk Device Restrictions ..... 2–7

2.6 RAID Array Controller Restrictions ..... 2–7
2.7 SCSI Signal Converters ..... 2–8
2.8 DS-DWZZH-03 and DS-DWZZH-05 UltraSCSI Hubs ..... 2–9
2.9 SCSI Cables ..... 2–9
2.10 SCSI Terminators and Trilink Connectors ..... 2–11

3 Shared SCSI Bus Requirements and Configurations Using UltraSCSI Hardware

3.1 Shared SCSI Bus Configuration Requirements ..... 3–2
3.2 SCSI Bus Performance ..... 3–3
3.2.1 SCSI Bus Versus SCSI Bus Segments ..... 3–4
3.2.2 Transmission Methods ..... 3–4
3.2.3 Data Path ..... 3–5
3.2.4 Bus Speed ..... 3–5
3.3 SCSI Bus Device Identification Numbers ..... 3–5
3.4 SCSI Bus Length ..... 3–6
3.5 Terminating the Shared SCSI Bus when Using UltraSCSI Hubs ..... 3–7
3.6 UltraSCSI Hubs ..... 3–8
3.6.1 Using a DWZZH UltraSCSI Hub in a Cluster Configuration ..... 3–9
3.6.1.1 DS-DWZZH-03 Description ..... 3–9
3.6.1.2 DS-DWZZH-05 Description ..... 3–10
3.6.1.2.1 DS-DWZZH-05 Configuration Guidelines ..... 3–10
3.6.1.2.2 DS-DWZZH-05 Fair Arbitration ..... 3–12
3.6.1.2.3 DS-DWZZH-05 Address Configurations ..... 3–13
3.6.1.2.4 SCSI Bus Termination Power ..... 3–15
3.6.1.2.5 DS-DWZZH-05 Indicators ..... 3–15
3.6.1.3 Installing the DS-DWZZH-05 UltraSCSI Hub ..... 3–15
3.7 Preparing the UltraSCSI Storage Configuration ..... 3–16
3.7.1 Configuring Radially Connected TruCluster Server Clusters with UltraSCSI Hardware ..... 3–17
3.7.1.1 Preparing an HSZ70 or HSZ80 for a Shared SCSI Bus Using Transparent Failover Mode ..... 3–18
3.7.1.2 Preparing a Dual-Redundant HSZ70 or HSZ80 for a Shared SCSI Bus Using Multiple-Bus Failover ..... 3–22

4 TruCluster Server System Configuration Using UltraSCSI Hardware

4.1 Planning Your TruCluster Server Hardware Configuration ..... 4–2
4.2 Obtaining the Firmware Release Notes ..... 4–4
4.3 TruCluster Server Hardware Installation ..... 4–5
4.3.1 Installation of a KZPBA-CB Using Internal Termination for a Radial Configuration ..... 4–7
4.3.2 Displaying KZPBA-CB Adapters with the show Console Commands ..... 4–10
4.3.3 Displaying Console Environment Variables and Setting the KZPBA-CB SCSI ID ..... 4–14
4.3.3.1 Displaying KZPBA-CB pk* or isp* Console Environment Variables ..... 4–15
4.3.3.2 Setting the KZPBA-CB SCSI ID ..... 4–17
4.3.3.3 KZPBA-CB Termination Resistors ..... 4–17

5 Setting Up the Memory Channel Cluster Interconnect

5.1 Setting the Memory Channel Adapter Jumpers ..... 5–2
5.1.1 MC1 and MC1.5 Jumpers ..... 5–2
5.1.2 MC2 Jumpers ..... 5–3
5.2 Installing the Memory Channel Adapter ..... 5–5
5.3 Installing the MC2 Optical Converter in the Member System ..... 5–6
5.4 Installing the Memory Channel Hub ..... 5–6
5.5 Installing the Memory Channel Cables ..... 5–7
5.5.1 Installing the MC1 or MC1.5 Cables ..... 5–7
5.5.1.1 Connecting MC1 or MC1.5 Link Cables in Virtual Hub Mode ..... 5–8
5.5.1.2 Connecting MC1 Link Cables in Standard Hub Mode ..... 5–8
5.5.2 Installing the MC2 Cables ..... 5–9
5.5.2.1 Installing the MC2 Cables for Virtual Hub Mode Without Optical Converters ..... 5–9
5.5.2.2 Installing MC2 Cables in Virtual Hub Mode Using Optical Converters ..... 5–10
5.5.2.3 Connecting MC2 Link Cables in Standard Hub Mode (No Fiber Optics) ..... 5–10
5.5.2.4 Connecting MC2 Cables in Standard Hub Mode Using Optical Converters ..... 5–10
5.6 Running Memory Channel Diagnostics ..... 5–11

6 Using Fibre Channel Storage

6.1 Procedure for Installation Using Fibre Channel Disks ..... 6–2
6.2 Fibre Channel Overview ..... 6–4
6.2.1 Basic Fibre Channel Terminology ..... 6–4
6.2.2 Fibre Channel Topologies ..... 6–5
6.2.2.1 Point-to-Point ..... 6–6
6.2.2.2 Fabric ..... 6–6
6.2.2.3 Arbitrated Loop Topology ..... 6–7
6.3 Example Fibre Channel Configurations Supported by TruCluster Server ..... 6–8
6.3.1 Fibre Channel Cluster Configurations for Transparent Failover Mode ..... 6–8
6.3.2 Fibre Channel Cluster Configurations for Multiple-Bus Failover Mode ..... 6–10
6.4 Zoning and Cascaded Switches ..... 6–13
6.4.1 Zoning ..... 6–13
6.4.2 Cascaded Switches ..... 6–14
6.5 Installing and Configuring Fibre Channel Hardware ..... 6–15
6.5.1 Installing and Setting Up the Fibre Channel Switch ..... 6–15
6.5.1.1 Installing the Switch ..... 6–16
6.5.1.2 Managing the Fibre Channel Switches ..... 6–17
6.5.1.2.1 Using the Switch Front Panel ..... 6–17
6.5.1.2.2 Setting the Ethernet IP Address and Subnet Mask from the Front Panel ..... 6–18
6.5.1.2.3 Setting the DS-DSGGB-AA Ethernet IP Address and Subnet Mask from a PC or Terminal ..... 6–20
6.5.1.2.4 Logging Into the Switch with a Telnet Connection ..... 6–20
6.5.1.2.5 Setting the Switch Name via Telnet Session ..... 6–21
6.5.2 Installing and Configuring the KGPSA PCI-to-Fibre Channel Adapter Module ..... 6–22
6.5.2.1 Installing the KGPSA PCI-to-Fibre Channel Adapter Module ..... 6–22
6.5.2.2 Setting the KGPSA-BC or KGPSA-CA to Run on a Fabric ..... 6–23
6.5.2.3 Obtaining the Worldwide Names of KGPSA Adapters ..... 6–25
6.5.3 Setting up the HSG80 Array Controller for Tru64 UNIX Installation ..... 6–26
6.5.3.1 Obtaining the Worldwide Names of HSG80 Controller ..... 6–31
6.6 Preparing to Install Tru64 UNIX and TruCluster Server on Fibre Channel Storage ..... 6–33
6.6.1 Configuring the HSG80 Storagesets ..... 6–33
6.6.2 Setting the Device Unit Number ..... 6–40
6.6.3 Setting the bootdef_dev Console Environment Variable ..... 6–46
6.7 Install the Base Operating System ..... 6–48
6.8 Resetting the bootdef_dev Console Environment Variable ..... 6–49
6.9 Determining /dev/disk/dskn to Use for a Cluster Installation ..... 6–51
6.10 Installing the TruCluster Server Software ..... 6–53
6.11 Changing the HSG80 from Transparent to Multiple-Bus Failover Mode ..... 6–54

6.12 Using the emx Manager to Display Fibre Channel Adapter Information ..... 6–59
6.12.1 Using the emxmgr Utility to Display Fibre Channel Adapter Information ..... 6–59
6.12.2 Using the emxmgr Utility Interactively ..... 6–61

7 Preparing ATM Adapters

7.1 ATM Overview ..... 7–1
7.2 Installing ATM Adapters ..... 7–3
7.3 Verifying ATM Fiber Optic Cable Connectivity ..... 7–4
7.4 ATMworks Adapter LEDs ..... 7–6

8 Configuring a Shared SCSI Bus for Tape Drive Use

8.1 Preparing the TZ88 for Shared Bus Usage ..... 8–1
8.1.1 Setting the TZ88N-VA SCSI ID ..... 8–2
8.1.2 Cabling the TZ88N-VA ..... 8–3
8.1.3 Setting the TZ88N-TA SCSI ID ..... 8–4
8.1.4 Cabling the TZ88N-TA ..... 8–4
8.2 Preparing the TZ89 for Shared SCSI Usage ..... 8–5
8.2.1 Setting the DS-TZ89N-VW SCSI ID ..... 8–5
8.2.2 Cabling the DS-TZ89N-VW Tape Drives ..... 8–7
8.2.3 Setting the DS-TZ89N-TA SCSI ID ..... 8–8
8.2.4 Cabling the DS-TZ89N-TA Tape Drives ..... 8–8
8.3 Compaq 20/40 GB DLT Tape Drive ..... 8–9
8.3.1 Setting the Compaq 20/40 GB DLT Tape Drive SCSI ID ..... 8–9
8.3.2 Cabling the Compaq 20/40 GB DLT Tape Drive ..... 8–10
8.4 Preparing the TZ885 for Shared SCSI Usage ..... 8–13
8.4.1 Setting the TZ885 SCSI ID ..... 8–13
8.4.2 Cabling the TZ885 Tape Drive ..... 8–13
8.5 Preparing the TZ887 for Shared SCSI Bus Usage ..... 8–15
8.5.1 Setting the TZ887 SCSI ID ..... 8–15
8.5.2 Cabling the TZ887 Tape Drive ..... 8–16
8.6 Preparing the TL891 and TL892 DLT MiniLibraries for Shared SCSI Usage ..... 8–18
8.6.1 Setting the TL891 or TL892 SCSI ID ..... 8–18
8.6.2 Cabling the TL891 or TL892 MiniLibraries ..... 8–20
8.7 Preparing the TL890 DLT MiniLibrary Expansion Unit ..... 8–23
8.7.1 TL890 DLT MiniLibrary Expansion Unit Hardware ..... 8–24
8.7.2 Preparing the DLT MiniLibraries for Shared SCSI Bus Usage ..... 8–24

8.7.2.1 Cabling the DLT MiniLibraries ..... 8–24
8.7.2.2 Configuring a Base Module as a Slave ..... 8–26
8.7.2.3 Powering Up the DLT MiniLibrary ..... 8–28
8.7.2.4 Setting the TL890/TL891/TL892 SCSI ID ..... 8–28
8.8 Preparing the TL894 DLT Automated Tape Library for Shared SCSI Bus Usage ..... 8–30
8.8.1 TL894 Robotic Controller Required Firmware ..... 8–30
8.8.2 Setting TL894 Robotics Controller and Tape Drive SCSI IDs ..... 8–30
8.8.3 TL894 Tape Library Internal Cabling ..... 8–33
8.8.4 Connecting the TL894 Tape Library to the Shared SCSI Bus ..... 8–34
8.9 Preparing the TL895 DLT Automated Tape Library for Shared SCSI Bus Usage ..... 8–36
8.9.1 TL895 Robotic Controller Required Firmware ..... 8–37
8.9.2 Setting the TL895 Tape Library SCSI IDs ..... 8–37
8.9.3 TL895 Tape Library Internal Cabling ..... 8–38
8.9.4 Upgrading a TL895 ..... 8–40
8.9.5 Connecting the TL895 Tape Library to the Shared SCSI Bus ..... 8–40
8.10 Preparing the TL893 and TL896 Automated Tape Libraries for Shared SCSI Bus Usage ..... 8–40
8.10.1 Communications with the Host Computer ..... 8–42
8.10.2 MUC Switch Functions ..... 8–42
8.10.3 Setting the MUC SCSI ID ..... 8–43
8.10.4 Tape Drive SCSI IDs ..... 8–43
8.10.5 TL893 and TL896 Automated Tape Library Internal Cabling ..... 8–44
8.10.6 Connecting the TL893 and TL896 Automated Tape Libraries to the Shared SCSI Bus ..... 8–47
8.11 Preparing the TL881 and TL891 DLT MiniLibraries for Shared Bus Usage ..... 8–48
8.11.1 TL881 and TL891 DLT MiniLibraries Overview ..... 8–48
8.11.1.1 TL881 and TL891 DLT MiniLibrary Tabletop Model ..... 8–49
8.11.1.2 TL881 and TL891 MiniLibrary Rackmount Components ..... 8–49
8.11.1.3 TL881 and TL891 Rackmount Scalability ..... 8–50
8.11.1.4 DLT MiniLibrary Part Numbers ..... 8–51
8.11.2 Preparing a TL881 or TL891 MiniLibrary for Shared SCSI Bus Use ..... 8–52
8.11.2.1 Preparing a Tabletop Model or Base Unit for Standalone Shared SCSI Bus Usage ..... 8–52

8.11.2.1.1 Setting the Standalone MiniLibrary Tape Drive SCSI ID ..... 8–53
8.11.2.1.2 Cabling the TL881 or TL891 DLT MiniLibrary ..... 8–54
8.11.2.2 Preparing a TL881 or TL891 Rackmount MiniLibrary for Shared SCSI Bus Usage ..... 8–58
8.11.2.2.1 Cabling the Rackmount TL881 or TL891 DLT MiniLibrary ..... 8–58
8.11.2.2.2 Configuring a Base Unit as a Slave to the Expansion Unit ..... 8–61
8.11.2.2.3 Powering Up the TL881/TL891 DLT MiniLibrary ..... 8–62
8.11.2.2.4 Setting the SCSI IDs for a Rackmount TL881 or TL891 DLT MiniLibrary ..... 8–63
8.12 Compaq ESL9326D Enterprise Library ..... 8–64
8.12.1 General Overview ..... 8–64
8.12.2 ESL9326D Enterprise Library Overview ..... 8–65
8.12.3 Preparing the ESL9326D Enterprise Library for Shared SCSI Bus Usage ..... 8–65
8.12.3.1 ESL9326D Enterprise Library Robotic and Tape Drive Required Firmware ..... 8–66
8.12.3.2 Library Electronics and Tape Drive SCSI IDs ..... 8–66
8.12.3.3 ESL9326D Enterprise Library Internal Cabling ..... 8–66
8.12.3.4 Connecting the ESL9326D Enterprise Library to the Shared SCSI Bus ..... 8–68

9 Configurations Using External Termination or Radial Connections to Non-UltraSCSI Devices

9.1 Using SCSI Bus Signal Converters ..... 9–2
9.1.1 Types of SCSI Bus Signal Converters ..... 9–2
9.1.2 Using the SCSI Bus Signal Converters ..... 9–3
9.1.2.1 DWZZA and DWZZB Signal Converter Termination ..... 9–3
9.1.2.2 DS-BA35X-DA Termination ..... 9–4
9.2 Terminating the Shared SCSI Bus ..... 9–5
9.3 Overview of Disk Storage Shelves ..... 9–8
9.3.1 BA350 Storage Shelf ..... 9–9
9.3.2 BA356 Storage Shelf ..... 9–10
9.3.2.1 Non-UltraSCSI BA356 Storage Shelf ..... 9–10
9.3.2.2 UltraSCSI BA356 Storage Shelf ..... 9–13
9.4 Preparing the Storage for Configurations Using External Termination ..... 9–14

9.4.1 Preparing BA350, BA356, and UltraSCSI BA356 Storage Shelves for an Externally Terminated TruCluster Server Configuration ..... 9–15
9.4.1.1 Preparing a BA350 Storage Shelf for Shared SCSI Usage ..... 9–15
9.4.1.2 Preparing a BA356 Storage Shelf for Shared SCSI Usage ..... 9–16
9.4.1.3 Preparing an UltraSCSI BA356 Storage Shelf for a TruCluster Configuration ..... 9–17
9.4.2 Connecting Storage Shelves Together ..... 9–17
9.4.2.1 Connecting a BA350 and a BA356 for Shared SCSI Bus Usage ..... 9–18
9.4.2.2 Connecting Two BA356s for Shared SCSI Bus Usage ..... 9–20
9.4.2.3 Connecting Two UltraSCSI BA356s for Shared SCSI Bus Usage ..... 9–21
9.4.3 Cabling a Non-UltraSCSI RAID Array Controller to an Externally Terminated Shared SCSI Bus ..... 9–24
9.4.3.1 Cabling an HSZ40 or HSZ50 in a Cluster Using External Termination ..... 9–25
9.4.3.2 Cabling an HSZ20 in a Cluster Using External Termination ..... 9–27
9.4.4 Cabling an HSZ40 or HSZ50 RAID Array Controller in a Radial Configuration with an UltraSCSI Hub ..... 9–28

10 Configuring Systems for External Termination or Radial Connections to Non-UltraSCSI Devices

10.1 TruCluster Server Hardware Installation Using PCI SCSI Adapters ..... 10–1
10.1.1 Radial Installation of a KZPSA-BB or KZPBA-CB Using Internal Termination ..... 10–2
10.1.2 Installing a KZPSA-BB or KZPBA-CB Using External Termination ..... 10–6
10.1.3 Displaying KZPSA-BB and KZPBA-CB Adapters with the show Console Commands ..... 10–9
10.1.4 Displaying Console Environment Variables and Setting the KZPSA-BB and KZPBA-CB SCSI ID ..... 10–13
10.1.4.1 Displaying KZPSA-BB and KZPBA-CB pk* or isp* Console Environment Variables ..... 10–13
10.1.4.2 Setting the KZPBA-CB SCSI ID ..... 10–16
10.1.4.3 Setting KZPSA-BB SCSI Bus ID, Bus Speed, and Termination Power ..... 10–17
10.1.4.4 KZPSA-BB and KZPBA-CB Termination Resistors ..... 10–18

10.1.4.5 Updating the KZPSA-BB Adapter Firmware ..... 10–18

A Worldwide ID to Disk Name Conversion Table

Index

Examples

4–1 Displaying Configuration on an AlphaServer DS20 ..... 4–10
4–2 Displaying Devices on an AlphaServer DS20 ..... 4–12
4–3 Displaying Configuration on an AlphaServer 8200 ..... 4–13
4–4 Displaying Devices on an AlphaServer 8200 ..... 4–13
4–5 Displaying the pk* Console Environment Variables on an AlphaServer DS20 System ..... 4–15
4–6 Displaying Console Variables for a KZPBA-CB on an AlphaServer 8x00 System ..... 4–16
4–7 Setting the KZPBA-CB SCSI Bus ID ..... 4–17
5–1 Running the mc_cable Test ..... 5–13
6–1 Determine HSG80 Connection Names ..... 6–29
6–2 Setting up the Mirrorset ..... 6–34
6–3 Using the wwidmgr quickset Command to Set Device Unit Number ..... 6–43
6–4 Sample Fibre Channel Device Names ..... 6–45
10–1 Displaying Configuration on an AlphaServer 4100 ..... 10–9
10–2 Displaying Devices on an AlphaServer 4100 ..... 10–10
10–3 Displaying Configuration on an AlphaServer 8200 ..... 10–11
10–4 Displaying Devices on an AlphaServer 8200 ..... 10–12
10–5 Displaying the pk* Console Environment Variables on an AlphaServer 4100 System ..... 10–13
10–6 Displaying Console Variables for a KZPBA-CB on an AlphaServer 8x00 System ..... 10–15
10–7 Displaying Console Variables for a KZPSA-BB on an AlphaServer 8x00 System ..... 10–15
10–8 Setting the KZPBA-CB SCSI Bus ID ..... 10–16
10–9 Setting KZPSA-BB SCSI Bus ID and Speed ..... 10–17

Figures

1–1 Two-Node Cluster with Minimum Disk Configuration and No Quorum Disk ..... 1–6

1–2 Generic Two-Node Cluster with Minimum Disk Configuration and Quorum Disk ..... 1–7
1–3 Minimum Two-Node Cluster with UltraSCSI BA356 Storage Unit ..... 1–9
1–4 Two-Node Cluster with Two UltraSCSI DS-BA356 Storage Units ..... 1–11
1–5 Two-Node Configurations with UltraSCSI BA356 Storage Units and Dual SCSI Buses ..... 1–13
1–6 Cluster Configuration with HSZ70 Controllers in Transparent Failover Mode ..... 1–14
1–7 NSPOF Cluster using HSZ70s in Multiple-Bus Failover Mode ..... 1–16
1–8 NSPOF Fibre Channel Cluster using HSG80s in Multiple-Bus Failover Mode ..... 1–17
3–1 VHDCI Trilink Connector (H8861-AA) ..... 3–8
3–2 DS-DWZZH-03 Front View ..... 3–10
3–3 DS-DWZZH-05 Rear View ..... 3–14
3–4 DS-DWZZH-05 Front View ..... 3–15
3–5 Shared SCSI Bus with HSZ70 Configured for Transparent Failover ..... 3–20
3–6 Shared SCSI Bus with HSZ80 Configured for Transparent Failover ..... 3–21
3–7 TruCluster Server Configuration with HSZ70 in Multiple-Bus Failover Mode ..... 3–24
3–8 TruCluster Server Configuration with HSZ80 in Multiple-Bus Failover Mode ..... 3–25
4–1 KZPBA-CB Termination Resistors ..... 4–18
5–1 Connecting Memory Channel Adapters to Hubs ..... 5–9
6–1 Point-to-Point Topology ..... 6–6
6–2 Fabric Topology ..... 6–7
6–3 Arbitrated Loop Topology ..... 6–8
6–4 Fibre Channel Single Switch Transparent Failover Configuration ..... 6–9
6–5 Multiple-Bus NSPOF Configuration Number 1 ..... 6–11
6–6 Multiple-Bus NSPOF Configuration Number 2 ..... 6–12
6–7 Multiple-Bus NSPOF Configuration Number 3 ..... 6–13
6–8 A Simple Zoned Configuration ..... 6–14
7–1 Emulated LAN Over an ATM Network ..... 7–3
8–1 TZ88N-VA SCSI ID Switches ..... 8–2
8–2 Shared SCSI Buses with SBB Tape Drives ..... 8–4
8–3 DS-TZ89N-VW SCSI ID Switches ..... 8–6
8–4 Compaq 20/40 GB DLT Tape Drive Rear Panel ..... 8–10
8–5 Cabling a Shared SCSI Bus with a Compaq 20/40 GB DLT Tape Drive ..... 8–12

8–6 Cabling a Shared SCSI Bus with a TZ885 ..... 8–15
8–7 TZ887 DLT MiniLibrary Rear Panel ..... 8–16
8–8 Cabling a Shared SCSI Bus with a TZ887 ..... 8–17
8–9 TruCluster Server Cluster with a TL892 on Two Shared SCSI Buses ..... 8–23
8–10 TL890 and TL892 DLT MiniLibraries on Shared SCSI Buses ..... 8–26
8–11 TL894 Tape Library Four-Bus Configuration ..... 8–33
8–12 Shared SCSI Buses with TL894 in Two-Bus Mode ..... 8–35
8–13 TL895 Tape Library Internal Cabling ..... 8–39
8–14 TL893 Three-Bus Configuration ..... 8–45
8–15 TL896 Six-Bus Configuration ..... 8–46
8–16 Shared SCSI Buses with TL896 in Three-Bus Mode ..... 8–48
8–17 TL891 Standalone Cluster Configuration ..... 8–57
8–18 TL881 DLT MiniLibrary Rackmount Configuration ..... 8–60
8–19 ESL9326D Internal Cabling ..... 8–67
9–1 Standalone SCSI Signal Converter ..... 9–4
9–2 SBB SCSI Signal Converter ..... 9–4
9–3 DS-BA35X-DA Personality Module Switches ..... 9–5
9–4 BN21W-0B Y Cable ..... 9–7
9–5 HD68 Trilink Connector (H885-AA) ..... 9–8
9–6 BA350 Internal SCSI Bus ..... 9–10
9–7 BA356 Internal SCSI Bus ..... 9–12
9–8 BA356 Jumper and Terminator Module Identification Pins ..... 9–13
9–9 BA350 and BA356 Cabled for Shared SCSI Bus Usage ..... 9–19
9–10 Two BA356s Cabled for Shared SCSI Bus Usage ..... 9–21
9–11 Two UltraSCSI BA356s Cabled for Shared SCSI Bus Usage ..... 9–23
9–12 Externally Terminated Shared SCSI Bus with Mid-Bus HSZ50 RAID Array Controllers ..... 9–26
9–13 Externally Terminated Shared SCSI Bus with HSZ50 RAID Array Controllers at Bus End ..... 9–27
9–14 TruCluster Server Cluster Using DS-DWZZH-03, SCSI Adapter with Terminators Installed, and HSZ50 ..... 9–30
9–15 TruCluster Server Cluster Using KZPSA-BB SCSI Adapters, a DS-DWZZH-05 UltraSCSI Hub, and an HSZ50 RAID Array Controller ..... 9–31
10–1 KZPSA-BB Termination Resistors ..... 10–18

Tables

2–1 AlphaServer Systems Supported for Fibre Channel ..... 2–4
2–2 RAID Controller SCSI IDs ..... 2–7
2–3 Supported SCSI Cables ..... 2–10

2–4 Supported SCSI Terminators and Trilink Connectors ..... 2–11
3–1 SCSI Bus Speeds ..... 3–5
3–2 SCSI Bus Segment Length ..... 3–7
3–3 DS-DWZZH UltraSCSI Hub Maximum Configurations ..... 3–11
3–4 Hardware Components Used in Configuration Shown in Figure 3–5 Through Figure 3–8 ..... 3–21
4–1 Planning Your Configuration ..... 4–3
4–2 Configuring TruCluster Server Hardware ..... 4–6
4–3 Installing the KZPBA-CB for Radial Connection to a DWZZH UltraSCSI Hub ..... 4–9
5–1 MC1 and MC1.5 Jumper Configuration ..... 5–2
5–2 MC2 Jumper Configuration ..... 5–3
5–3 MC2 Linecard Jumper Configurations ..... 5–5
6–1 Telnet Session Default User Names for Fibre Channel Switches ..... 6–21
6–2 Converting Storageset Unit Numbers to Disk Names ..... 6–40
7–1 ATMworks Adapter LEDs ..... 7–6
8–1 TZ88N-VA Switch Settings ..... 8–3
8–2 DS-TZ89N-VW Switch Settings ..... 8–6
8–3 Hardware Components Used to Create the Configuration Shown in Figure 8–5 ..... 8–12
8–4 TL894 Default SCSI ID Settings ..... 8–30
8–5 TL895 Default SCSI ID Settings ..... 8–37
8–6 MUC Switch Functions ..... 8–43
8–7 MUC SCSI ID Selection ..... 8–43
8–8 TL893 Default SCSI IDs ..... 8–44
8–9 TL896 Default SCSI IDs ..... 8–44

8–10

8–11

8–12

8–13

8–14

9–1

9–2

9–3

TL881 and TL891 MiniLibrary Performance and Capacity

Comparison ............................................................ 8–51

DLT MiniLibrary Part Numbers ................................... 8–51

Hardware Components Used to Create the Configuration

Shown in Figure 8–17 ................................................ 8–57

Hardware Components Used to Create the Configuration

Shown in Figure 8–18 ................................................ 8–61

Shared SCSI Bus Cable and Terminator Connections for the

ESL9326D Enterprise Library ...................................... 8–68

Hardware Components Used for Configuration Shown in

Figure 8–9 and Figure 8–10 ......................................... 9–20

Hardware Components Used for Configuration Shown in

Figure 9–11 ............................................................ 9–24

Hardware Components Used for Configuration Shown in

Figure 8–12 and Figure 8–13 ....................................... 9–27

xiv Contents

Page 15

9–4

10–1

10–2

10–3

Hardware Components Used in Configuration Shown in

Figure 9–14 ............................................................

Configuring TruCluster Server Hardware for Use with a PCI

SCSI Adapter ..........................................................

Installing the KZPSA-BB or KZPBA-CB for Radial Connection

to a DWZZH UltraSCSI Hub ........................................

Installing a KZPSA-BB or KZPBA-CB for use with External

Termination ............................................................

9–30

10–2

10–4

10–7

A–1 Converting Storageset Unit Numbers to Disk Names .......... A–1

Contents xv

About This Manual

This manual describes how to set up and maintain the hardware configuration for a TruCluster Server cluster.

Audience

This manual is for system administrators who will set up and configure the hardware before installing the TruCluster Server software. The manual assumes that you are familiar with the tools and methods needed to maintain your hardware, operating system, and network.

Organization

This manual contains ten chapters and an index. It has been reorganized to be more streamlined: the chapters that cover SCSI bus requirements and configuration, and hardware configuration, have been split into two sets of two chapters each. One set covers UltraSCSI hardware and is geared toward radial configurations. The other set covers configurations that use either external termination or radial connection to non-UltraSCSI devices.

A brief description of the contents follows:

Chapter 1 Introduces the TruCluster Server product and provides an overview of setting up TruCluster Server hardware.

Chapter 2 Describes hardware requirements and restrictions.

Chapter 3 Contains information about setting up a shared SCSI bus, SCSI bus requirements, and how to connect storage to a shared SCSI bus using the latest UltraSCSI products (DS-DWZZH UltraSCSI hubs, HSZ70 and HSZ80 RAID array controllers).

Chapter 4 Describes how to prepare systems for a TruCluster Server configuration, and how to connect host bus adapters to shared storage using the DS-DWZZH UltraSCSI hubs and the newest RAID array controllers (HSZ70 and HSZ80).

Chapter 5 Describes how to set up the Memory Channel cluster interconnect.

Chapter 6 Provides an overview of Fibre Channel and describes how to set up Fibre Channel hardware.

Chapter 7 Provides information on the use and installation of Asynchronous Transfer Mode (ATM) hardware.

Chapter 8 Describes how to configure a shared SCSI bus for tape drive, tape loader, or tape library usage.

Chapter 9 Contains information about setting up a shared SCSI bus, SCSI bus requirements, and how to connect storage to a shared SCSI bus using external termination or radial connections to non-UltraSCSI devices.

Chapter 10 Describes how to prepare systems for a TruCluster Server configuration, and how to connect host bus adapters to shared storage using external termination or radial connection to non-UltraSCSI devices.

Related Documents

Users of the TruCluster Server product can consult the following manuals for

assistance in cluster installation, administration, and programming tasks:

• TruCluster Server Software Product Description (SPD) — The

comprehensive description of the TruCluster Server Version 5.0A

product. You can find the latest version of the SPD and other TruCluster

Server documentation at the following URL:

http://www.unix.digital.com/faqs/publications/pub_page/cluster_list.html

• Release Notes — Provides important information about TruCluster

Server Version 5.0A.

• Technical Overview — Provides an overview of the TruCluster Server

technology.

• Software Installation — Describes how to install the TruCluster Server

product.

• Cluster Administration — Describes cluster-specific administration

tasks.

• Highly Available Applications — Describes how to deploy applications on

a TruCluster Server cluster.

The UltraSCSI Configuration Guidelines document provides guidelines

regarding UltraSCSI configurations.

For information about setting up a RAID subsystem, see the following

documentation as appropriate for your configuration:

• DEC RAID Subsystem User’s Guide

• HS Family of Array Controllers User’s Guide

• RAID Array 310 Configuration and Maintenance Guide User’s Guide

• Configuring Your StorageWorks Subsystem HSZ40 Array Controllers

HSOF Version 3.0

• Getting Started RAID Array 450 V5.4 for Compaq Tru64 UNIX

Installation Guide


• HSZ70 Array Controller HSOF Version 7.0 Configuration Manual

• HSZ80 Array Controller ACS Version 8.2

• Compaq StorageWorks HSG80 Array Controller ACS Version 8.5

Configuration Guide

• Compaq StorageWorks HSG80 Array Controller ACS Version 8.5 CLI

Reference Guide

• Wwidmgr User’s Manual

For information about the tape devices, see the following documentation:

• TZ88 DLT Series Tape Drive Owner’s Manual

• TZ89 DLT Series Tape Drive User’s Guide

• TZ885 Model 100/200 GB DLT5-Cartridge MiniLibrary Owner’s Manual

• TZ887 Model 140/280 GB DLT7-Cartridge MiniLibrary Owner’s Manual

• TL881 MiniLibrary System User’s Guide

• TL881 MiniLibrary Drive Upgrade Procedure

• Pass-Through Expansion Kit Installation Instructions

• TL891 MiniLibrary System User’s Guide

• TL81X/TL894 Automated Tape Library for DLT Cartridges Facilities

Planning and Installation Guide

• TL81X/TL894 Automated Tape Library for DLT Cartridges Diagnostic

Software User’s Manual

• TL895 DLT Tape Library Facilities Planning and Installation Guide

• TL895 DLT Library Operator’s Guide

• TL895 DLT Tape Library Diagnostic Software User’s Manual

• TL895 Drive Upgrade Instructions

• TL82X/TL893/TL896 Automated Tape Library for DLT Cartridges

Facilities Planning and Installation Guide

• TL82X/TL893/TL896 Automated Tape Library for DLT Cartridges

Operator’s Guide

• TL82X/TL893/TL896 Automated Tape Library for DLT Cartridges

Diagnostic Software User’s Manual

• TL82X Cabinet-to-Cabinet Mounting Instructions

• TL82X/TL89X MUML to MUSL Upgrade Instructions

The Golden Eggs Visual Configuration Guide provides configuration diagrams of workstations, servers, storage components, and clustered systems. It is available online in PostScript and Portable Document Format (PDF) at:

http://www.compaq.com/info/golden-eggs

At this URL you will find links to individual system, storage, or cluster configurations. You can order the document through the Compaq Literature Order System (LOS) as order number EC-R026B-36.

In addition, you should have available the following manuals from the Tru64

UNIX documentation set:

• Installation Guide

• Release Notes

• System Administration

• Network Administration

You should also have the hardware documentation for the systems, SCSI

controllers, disk storage shelves or RAID controllers, and any other

hardware you plan to install.

Documentation for the following optional software products will be useful if

you intend to use these products with TruCluster Server:

• Compaq Analyze (DS20 and ES40)

• DECevent™ (AlphaServers other than the DS20 and ES40)

• Logical Storage Manager (LSM)

• NetWorker

• Advanced File System (AdvFS) Utilities

• Performance Manager

Reader’s Comments

Compaq welcomes any comments and suggestions you have on this and

other Tru64 UNIX manuals.

You can send your comments in the following ways:

• Fax: 603-884-0120 Attn: UBPG Publications, ZKO3-3/Y32

• Internet electronic mail: readers_comment@zk3.dec.com

A Reader’s Comment form is located on your system in the following

location:

/usr/doc/readers_comment.txt

• Mail:

Compaq Computer Corporation


UBPG Publications Manager

ZKO3-3/Y32

110 Spit Brook Road

Nashua, NH 03062-2698

A Reader’s Comment form is located in the back of each printed manual.

The form is postage paid if you mail it in the United States.

Please include the following information along with your comments:

• The full title of the book and the order number. (The order number is

printed on the title page of this book and on its back cover.)

• The section numbers and page numbers of the information on which

you are commenting.

• The version of Tru64 UNIX that you are using.

• If known, the type of processor that is running the Tru64 UNIX software.

The Tru64 UNIX Publications group cannot respond to system problems

or technical support inquiries. Please address technical questions to your

local system vendor or to the appropriate Compaq technical support office.

Information provided with the software media explains how to send problem

reports to Compaq.

Conventions

The following typographical conventions are used in this manual:

#
A number sign represents the superuser prompt.

% cat
Boldface type in interactive examples indicates typed user input.

file
Italic (slanted) type indicates variable values, placeholders, and function argument names.

.
.
.
A vertical ellipsis indicates that a portion of an example that would normally be present is not shown.

cat(1)
A cross-reference to a reference page includes the appropriate section number in parentheses. For example, cat(1) indicates that you can find information on the cat command in Section 1 of the reference pages.

cluster
Bold text indicates a term that is defined in the glossary.

1 Introduction

This chapter introduces the TruCluster Server product and some basic cluster hardware configuration concepts.

Subsequent chapters describe how to set up and maintain TruCluster Server hardware configurations. See the TruCluster Server Software Installation manual for information about software installation; see the TruCluster Server Cluster Administration manual for detailed information about setting up member systems and highly available applications.

1.1 The TruCluster Server Product

TruCluster Server, the newest addition to the Compaq Tru64 UNIX TruCluster Software products family, extends single-system management capabilities to clusters. It provides a clusterwide namespace for files and directories, including a single root file system that all cluster members share. It also offers a cluster alias for the Internet protocol suite (TCP/IP) so that a cluster appears as a single system to its network clients.

TruCluster Server preserves the availability and performance features found

in the earlier TruCluster products:

• Like the TruCluster Available Server Software and TruCluster

Production Server products, TruCluster Server lets you deploy highly

available applications that have no embedded knowledge that they are

executing in a cluster. They can access their disk data from any member

in the cluster.

• Like the TruCluster Production Server Software product, TruCluster

Server lets you run components of distributed applications in parallel,

providing high availability while taking advantage of cluster-specific

synchronization mechanisms and performance optimizations.

TruCluster Server augments the feature set of its predecessors by allowing

all cluster members access to all file systems and all storage in the cluster,

regardless of where they reside. From the viewpoint of clients, a TruCluster

Server cluster appears to be a single system; from the viewpoint of a system

administrator, a TruCluster Server cluster is managed as if it were a single

system. Because TruCluster Server has no built-in dependencies on the architectures or protocols of its private cluster interconnect or shared storage interconnect, you can more easily alter or expand your cluster's hardware configuration as newer and faster technologies become available.

1.2 Overview of the TruCluster Server Hardware Configuration

A TruCluster Server hardware configuration consists of a number of highly

specific hardware components:

• TruCluster Server currently supports from one to eight member systems.

• There must be enough internal and external SCSI controllers, Fibre Channel host bus adapters, and disks to provide sufficient storage for the applications.

• The clusterwide root (/), /usr, and /var file systems should be on a shared SCSI bus. We recommend placing all member system boot disks on a shared SCSI bus. If you have a quorum disk, it must be on a shared SCSI bus.

_____________________ Note _____________________

The clusterwide root (/), /usr, and /var file systems, the member system boot disks, and the quorum disk may be located behind a RAID array controller, including the HSG80 controller (Fibre Channel).

• You need to allocate a number of Internet Protocol (IP) addresses from one IP subnet to allow client access to the cluster. The IP subnet has to be visible to the clients directly or through routers. The minimum number of allocated addresses is equal to the number of cluster member systems plus one (for the cluster alias), depending on the type of cluster alias configuration.

For client access, TruCluster Server allows you to configure any number of monitored network adapters, using the redundant array of independent network adapters (NetRAIN) and Network Interface Failure Finder (NIFF) facilities of the Tru64 UNIX operating system.

• TruCluster Server requires at least one peripheral component interconnect (PCI) Memory Channel adapter on each system. The Memory Channel adapters form the cluster interconnect for TruCluster Server, providing host-to-host communications. For a cluster with two systems, a Memory Channel hub is optional; the Memory Channel adapters can be connected with a cable.

If there are more than two systems in the cluster, a Memory Channel hub is required. The Memory Channel hub is a PC-class enclosure that contains up to eight linecards. The Memory Channel adapter in each system in the cluster is connected to the Memory Channel hub.

One or two Memory Channel adapters can be used with TruCluster

Server. When dual Memory Channel adapters are installed, if the

Memory Channel adapter being used for cluster communication fails, the

communication will fail over to the other Memory Channel.
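The numeric limits in the list above lend themselves to a quick planning check. The following Python sketch is illustrative only: ClusterPlan, min_ip_addresses, and check_plan are hypothetical names, not part of TruCluster Server. The sketch assumes the simple cluster alias case of one address per member plus one alias address. As noted above, the actual minimum depends on the type of cluster alias configuration.

```python
from dataclasses import dataclass

# Limits restated from Section 1.2 (TruCluster Server Version 5.0A).
MAX_MEMBERS = 8                  # one to eight member systems
MAX_MC_ADAPTERS_PER_MEMBER = 2   # one or two Memory Channel adapters


@dataclass
class ClusterPlan:
    """Hypothetical description of a planned cluster."""
    members: int
    mc_adapters_per_member: int
    has_mc_hub: bool


def min_ip_addresses(members: int) -> int:
    """One address per member plus one for the cluster alias (simple case)."""
    return members + 1


def check_plan(plan: ClusterPlan) -> list[str]:
    """Return the rule violations found; an empty list means none were found."""
    problems = []
    if not 1 <= plan.members <= MAX_MEMBERS:
        problems.append(f"{plan.members} members; supported range is 1 to {MAX_MEMBERS}")
    if not 1 <= plan.mc_adapters_per_member <= MAX_MC_ADAPTERS_PER_MEMBER:
        problems.append("each member needs one or two Memory Channel adapters")
    if plan.members > 2 and not plan.has_mc_hub:
        problems.append("more than two members requires a Memory Channel hub")
    return problems


if __name__ == "__main__":
    print(min_ip_addresses(2))                    # 3
    print(check_plan(ClusterPlan(2, 1, False)))   # [] (two members may be cabled directly)
    print(check_plan(ClusterPlan(3, 1, False)))   # a third member needs a hub
```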

1.3 Memory Requirements

Cluster members require a minimum of 128 MB of memory.

1.4 Minimum Disk Requirements

This section provides an overview of the minimum file system or disk requirements for a two-node cluster. For more information on the amount of space required for each required cluster file system, see the TruCluster Server Software Installation manual.

1.4.1 Disks Needed for Installation

You need to allocate disks for the following uses:

• One or more disks to hold the Tru64 UNIX operating system. The disk(s) are either private disk(s) on the system that will become the first cluster member, or disk(s) on a shared bus that the system can access.

• One or more disks on a shared SCSI bus to hold the clusterwide root (/), /usr, and /var AdvFS file systems.

• One disk per member, normally on a shared SCSI bus, to hold member boot partitions.

• Optionally, one disk on a shared SCSI bus to act as the quorum disk. See Section 1.4.1.4 and, for a more detailed discussion of the quorum disk, the TruCluster Server Cluster Administration manual.

The following sections provide more information about these disks.

Figure 1–1 shows a generic two-member cluster with the required file systems.

1.4.1.1 Tru64 UNIX Operating System Disk

The Tru64 UNIX operating system is installed using AdvFS file systems on one or more disks on the system that will become the first cluster member. For example:

dsk0a root_domain#root

dsk0g usr_domain#usr

dsk0h var_domain#var

The operating system disk (Tru64 UNIX disk) cannot be used as a clusterwide disk, a member boot disk, or the quorum disk.

Because the Tru64 UNIX operating system remains available on the first cluster member, in an emergency you can shut down the cluster, boot the Tru64 UNIX operating system, and attempt to fix the problem. See the TruCluster Server Cluster Administration manual for more information.

1.4.1.2 Clusterwide Disk(s)

When you create a cluster, the installation scripts copy the Tru64 UNIX root (/), /usr, and /var file systems from the Tru64 UNIX disk to the disk or disks you specify.

We recommend that the disk or disks used for the clusterwide file systems be placed on a shared SCSI bus so that all cluster members have access to these disks.

During the installation, you supply the disk device names and partitions that will contain the clusterwide root (/), /usr, and /var file systems. For example, dsk3b, dsk4c, and dsk3g:

dsk3b cluster_root#root

dsk4c cluster_usr#usr

dsk3g cluster_var#var

The /var fileset cannot share the cluster_usr domain; it must be in a separate domain, cluster_var. Each AdvFS file system must be on a separate partition; the partitions do not have to be on the same disk.

If any partition on a disk is used by a clusterwide file system, only clusterwide file systems can be on that disk. A disk containing a clusterwide file system cannot also be used as the member boot disk or as the quorum disk.
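The clusterwide disk rules above, together with the Tru64 UNIX disk restriction in Section 1.4.1.1, can be expressed as a check on a planned layout. This Python sketch is a hypothetical planning aid, not a TruCluster Server tool. The role labels (cluster_root, member_boot, and so on) are invented for illustration. The sketch also assumes the dskNx device naming used in the examples, where the last character is the partition letter.

```python
from collections import defaultdict

# Hypothetical role labels for the three clusterwide domains.
CLUSTERWIDE = {"cluster_root", "cluster_usr", "cluster_var"}


def disk_of(partition: str) -> str:
    """Strip the partition letter: 'dsk3b' -> 'dsk3' (assumes dskNx naming)."""
    return partition[:-1]


def check_layout(assignments: dict[str, str]) -> list[str]:
    """Check a partition-to-role map against the rules in Section 1.4.1.

    Roles: 'cluster_root', 'cluster_usr', 'cluster_var', 'member_boot',
    'quorum', or 'os' (the Tru64 UNIX disk).
    """
    problems = []
    roles = list(assignments.values())
    # root, /usr, and /var each need their own domain on a separate partition.
    for domain in sorted(CLUSTERWIDE):
        if roles.count(domain) != 1:
            problems.append(f"{domain}: expected one partition, found {roles.count(domain)}")
    # Collect the roles present on each physical disk.
    roles_by_disk = defaultdict(set)
    for partition, role in assignments.items():
        roles_by_disk[disk_of(partition)].add(role)
    for disk, disk_roles in sorted(roles_by_disk.items()):
        others = disk_roles - CLUSTERWIDE
        if disk_roles & CLUSTERWIDE and others:
            problems.append(f"{disk}: clusterwide file systems share the disk with {sorted(others)}")
        if "os" in disk_roles and len(disk_roles) > 1:
            problems.append(f"{disk}: the Tru64 UNIX disk cannot hold cluster roles")
    return problems
```

With the example partitions from this section (dsk3b, dsk4c, dsk3g) plus member boot disks dsk1 and dsk2, check_layout reports no problems. Adding a quorum partition on dsk3 would be flagged, because that disk already holds clusterwide file systems.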

1.4.1.3 Member Boot Disk

Each member has a boot disk. A boot disk contains that member’s boot,

swap, and cluster-status partitions. For example, dsk1 is the boot disk for

the first member and dsk2 is the boot disk for the second member:

dsk1 first member’s boot disk [pepicelli]

dsk2 second member’s boot disk [polishham]

The installation scripts reformat each member’s boot disk to contain three

partitions: an a partition for that member’s root (/) file system, a b partition

for swap, and an h partition for cluster status information. (There are no

/usr or /var file systems on a member’s boot disk.)


A member boot disk cannot contain any of the clusterwide root (/), /usr, and /var file systems. Also, a member boot disk cannot be used as the quorum disk. A member boot disk can contain more than the three required partitions. You can move the swap partition off the member boot disk. See the TruCluster Server Cluster Administration manual for more information.

1.4.1.4 Quorum Disk

The quorum disk allows greater availability for clusters consisting of two members. Its h partition contains cluster status and quorum information. See the TruCluster Server Cluster Administration manual for a discussion of how and when to use a quorum disk.

The following restrictions apply to the use of a quorum disk:

• A cluster can have only one quorum disk.

• The quorum disk should be on a shared bus to which all cluster members are directly connected. If it is not, members that do not have a direct connection to the quorum disk may lose quorum before members that do have a direct connection to it.

• The quorum disk must not contain any data. The clu_quorum command will overwrite existing data when initializing the quorum disk. The integrity of data (or file system metadata) placed on the quorum disk from a running cluster is not guaranteed across member failures.

This means that the member boot disks and the disk holding the clusterwide root (/) cannot be used as quorum disks.

• The quorum disk can be small. The cluster subsystems use only 1 MB

of the disk.

• A quorum disk can have either 1 vote or no votes. In general, a quorum

disk should always be assigned a vote. You might assign an existing

quorum disk no votes in certain testing or transitory configurations,

such as a one-member cluster (in which a voting quorum disk introduces

a second point of failure).

• You cannot use the Logical Storage Manager (LSM) on the quorum disk.
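The quorum disk restrictions can likewise be sketched as a check on a plan. QuorumPlan and check_quorum are hypothetical names. The sketch only encodes the rules listed above and does not call clu_quorum or inspect real devices. The direct-connection rule is a recommendation ("should"), but the sketch reports it alongside the hard restrictions without distinguishing severity.

```python
from dataclasses import dataclass, field


@dataclass
class QuorumPlan:
    """Hypothetical description of a planned quorum disk."""
    quorum_disks: list[str]        # e.g. ["dsk5"]; more than one is an error
    votes: int                     # votes assigned to the quorum disk
    members: set[str]              # all member names
    directly_attached: set[str]    # members with a direct connection to the quorum disk
    disks_in_use: set[str] = field(default_factory=set)  # boot, clusterwide, or data disks
    lsm_disks: set[str] = field(default_factory=set)     # disks under LSM control


def check_quorum(plan: QuorumPlan) -> list[str]:
    """Return findings against the quorum disk restrictions in Section 1.4.1.4."""
    findings = []
    if len(plan.quorum_disks) > 1:
        findings.append("a cluster can have only one quorum disk")
    if plan.votes not in (0, 1):
        findings.append("a quorum disk has either 1 vote or no votes")
    for disk in plan.quorum_disks:
        if disk in plan.disks_in_use:
            findings.append(f"{disk} already holds data; clu_quorum would overwrite it")
        if disk in plan.lsm_disks:
            findings.append(f"{disk} is under LSM, which cannot be used on the quorum disk")
    # A "should" rule: members without a direct connection may lose quorum first.
    not_direct = plan.members - plan.directly_attached
    if not_direct:
        findings.append(f"not directly connected to the quorum disk: {sorted(not_direct)}")
    return findings
```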

1.5 Generic Two-Node Cluster

This section describes a generic two-node cluster with the minimum disk layout of four disks. Note that additional disks may be needed for highly available applications. In this section and the following sections, the type of PCI SCSI bus adapter is not significant. Also, SCSI bus cabling (including Y cables or trilink connectors), termination, and the use of UltraSCSI hubs, although important considerations, are not covered here.

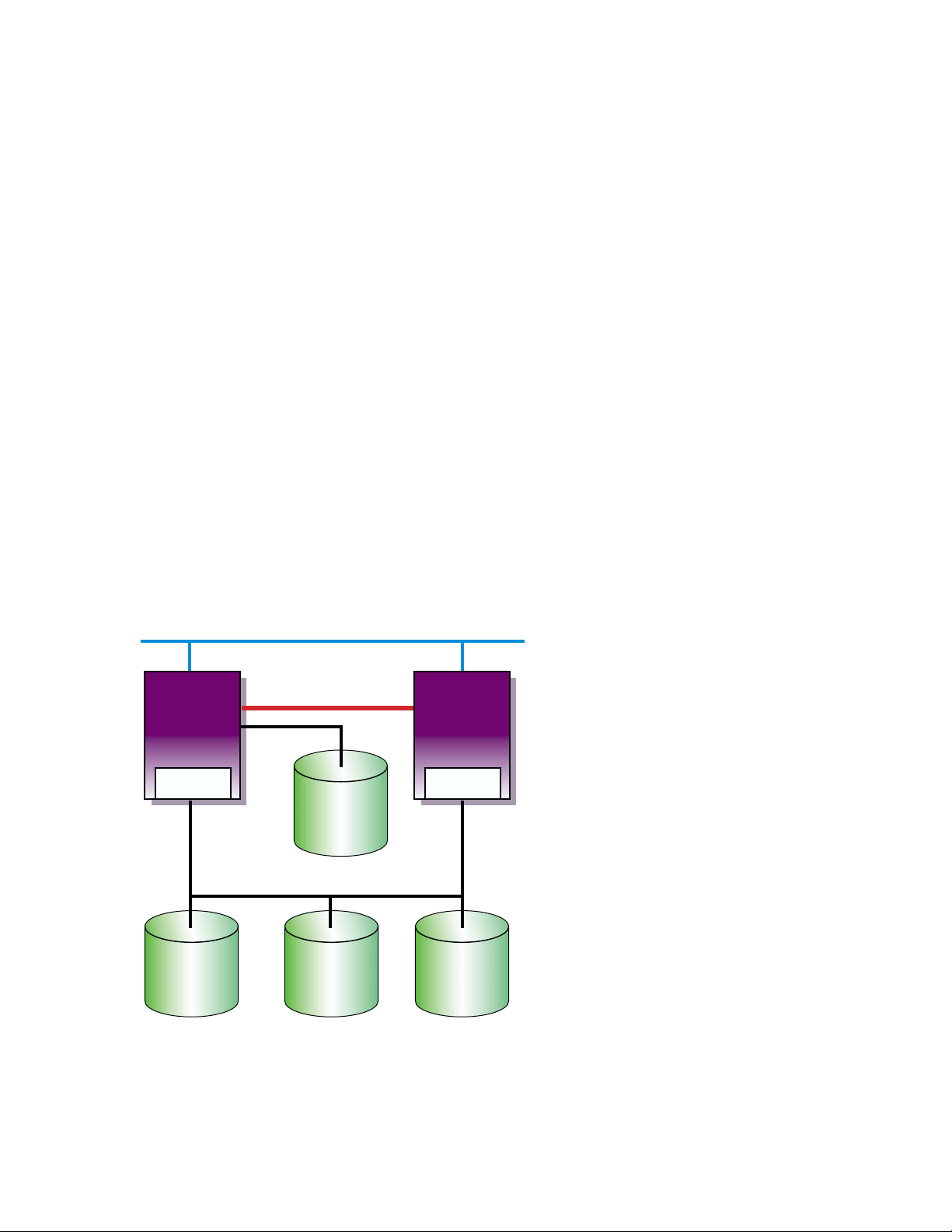

Figure 1–1 shows a generic two-node cluster with the minimum number of disks:

• Tru64 UNIX disk

• Clusterwide root (/), /usr, and /var

• Member 1 boot disk

• Member 2 boot disk

A minimum configuration cluster may have reduced availability due to the lack of a quorum disk. As shown, with only two member systems, both systems must be operational to achieve quorum and form a cluster. If only one system is operational, it will loop, waiting for the second system to boot before a cluster can be formed. If one system crashes, you lose the cluster.

Figure 1–1: Two-Node Cluster with Minimum Disk Configuration and No

Quorum Disk

Network

Member

System

1

PCI SCSI

Adapter

Memory Channel

Tru64

UNIX

Disk

Member

System

2

PCI SCSI

Adapter

Cluster File

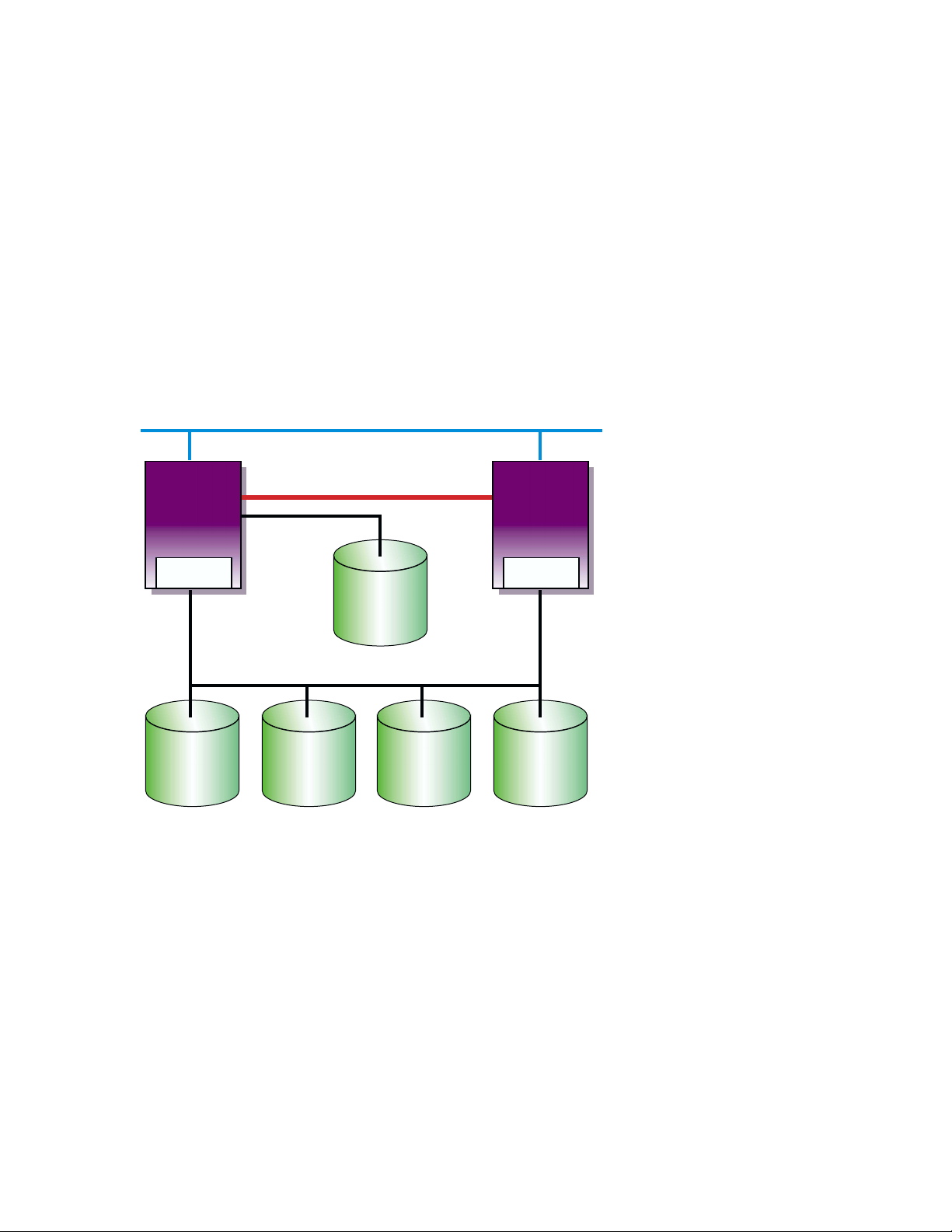

Figure 1–2 shows the same generic two-node cluster as shown in Figure 1–1,

but with the addition of a quorum disk. By adding a quorum disk, a cluster

may be formed if both systems are operational, or if either of the systems

and the quorum disk is operational. This cluster has a higher availability

than the cluster shown in Figure 1–1. See the TruCluster Server Cluster

1–6 Introduction

System

root (/)

/usr

/var

Shared SCSI Bus

Member 1

root (/)

swap

Member 2

root (/)

swap

ZK-1587U-AI

Page 29

Administration manual for a discussion of how and when to use a quorum

disk.
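The availability difference between Figure 1–1 and Figure 1–2 comes down to vote counting. The following sketch assumes the quorum calculation described in the TruCluster Server Cluster Administration manual, quorum votes = round_down((expected votes + 2) / 2). It also assumes one vote for each member and for the quorum disk. Check that manual for the authoritative formula and vote settings. The function names are hypothetical.

```python
def quorum_votes(expected_votes: int) -> int:
    """Assumed TruCluster formula: round_down((expected_votes + 2) / 2)."""
    return (expected_votes + 2) // 2


def has_quorum(votes_present: int, expected_votes: int) -> bool:
    """True if the votes currently present meet the quorum requirement."""
    return votes_present >= quorum_votes(expected_votes)


# Figure 1-1: two members, one vote each, no quorum disk -> expected votes 2.
print(quorum_votes(2), has_quorum(1, 2), has_quorum(2, 2))      # 2 False True

# Figure 1-2: add a one-vote quorum disk -> expected votes 3.
# One surviving member plus the quorum disk contributes 2 votes.
print(quorum_votes(3), has_quorum(1 + 1, 3), has_quorum(1, 3))  # 2 True False
```

With two voting members alone, losing either member drops the cluster below quorum. With a voting quorum disk, either member together with the quorum disk still meets it.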

Figure 1–2: Generic Two-Node Cluster with Minimum Disk Configuration and Quorum Disk

[Figure: the configuration of Figure 1–1 with a quorum disk added to the shared SCSI bus. Labels: Member Systems 1 and 2 with PCI SCSI adapters, network, Memory Channel, Tru64 UNIX disk, cluster file system (root (/), /usr, /var), Member 1 and Member 2 boot disks (root (/), swap), and quorum disk. ZK-1588U-AI]

1.6 Growing a Cluster from Minimum Storage to a NSPOF Cluster

The following sections trace a progression from a cluster with minimum storage to a no-single-point-of-failure (NSPOF) cluster, that is, a cluster in which a single hardware failure does not interrupt cluster operation:

• A cluster with minimum storage for highly available applications

(Section 1.6.1).

• A cluster with more storage, but the single SCSI bus is a single point

of failure (Section 1.6.2).

• Adding a second SCSI bus allows the use of LSM to mirror the /usr and /var file systems and data disks. However, because LSM cannot mirror the root (/), member system boot, swap, or quorum disks, full redundancy is not achieved (Section 1.6.3).

• Using a RAID array controller in transparent failover mode allows the

use of hardware RAID to mirror the disks. However, without a second

SCSI bus, second Memory Channel, and redundant networks, this

configuration is still not a NSPOF cluster (Section 1.6.4).

• By using an HSZ70, HSZ80, or HSG80 with multiple-bus failover enabled, you can use two shared SCSI buses to access the storage. Hardware RAID is used to mirror the root (/), /usr, and /var file systems, and the member system boot disks, data disks, and quorum disk (if used). A second Memory Channel, redundant networks, and redundant power must also be installed to achieve a NSPOF cluster (Section 1.6.5).

1.6.1 Two-Node Clusters Using an UltraSCSI BA356 Storage Shelf and Minimum Disk Configurations

This section takes the generic illustrations of our cluster example one step

further by depicting the required storage in storage shelves. The storage

shelves could be BA350, BA356 (non-UltraSCSI), or UltraSCSI BA356s.

The BA350 is the oldest model and can respond only to SCSI IDs 0-6. The non-UltraSCSI BA356 can respond to SCSI IDs 0-6 or 8-14 (see Section 3.2). The UltraSCSI BA356 also responds to SCSI IDs 0-6 or 8-14, and can additionally operate at UltraSCSI speeds (see Section 3.2).
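The ID ranges above determine how shelves can share a bus. In this hypothetical Python sketch, use_upper stands in for however a shelf is actually set to respond to IDs 8-14 (see Section 3.2). The adapter IDs 6 and 7 are taken from the figures in this section. The sketch shows why a shelf slot at ID 6 collides with a host bus adapter.

```python
# SCSI IDs each storage shelf model can respond to (Section 1.6.1).
SHELF_ID_RANGES = {
    "BA350": [range(0, 7)],                          # IDs 0-6 only
    "BA356": [range(0, 7), range(8, 15)],            # IDs 0-6 or 8-14
    "UltraSCSI BA356": [range(0, 7), range(8, 15)],  # same IDs, UltraSCSI speeds
}


def shelf_ids(model: str, use_upper: bool) -> list[int]:
    """IDs a shelf occupies; use_upper (hypothetical) selects 8-14 where supported."""
    ranges = SHELF_ID_RANGES[model]
    if use_upper and len(ranges) < 2:
        raise ValueError(f"{model} can respond only to SCSI IDs 0-6")
    return list(ranges[1] if use_upper else ranges[0])


def id_conflicts(shelves: list[tuple[str, bool]], adapter_ids: list[int]) -> set[int]:
    """Return the SCSI IDs claimed more than once on one shared bus."""
    seen: set[int] = set()
    conflicts: set[int] = set()
    all_ids = [i for model, upper in shelves for i in shelf_ids(model, upper)] + adapter_ids
    for scsi_id in all_ids:
        if scsi_id in seen:
            conflicts.add(scsi_id)
        seen.add(scsi_id)
    return conflicts
```

For a single UltraSCSI BA356 at IDs 0-6 with adapters at IDs 6 and 7, id_conflicts returns {6}. This is why slot 6 is left for a redundant power supply rather than a disk. Two shelves both set to IDs 0-6 would collide on every slot.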

Figure 1–3 shows a TruCluster Server configuration using an UltraSCSI

BA356 storage unit. The DS-BA35X-DA personality module used in the

UltraSCSI BA356 storage unit is a differential-to-single-ended signal

converter, and therefore accepts differential inputs.


______________________ Note _______________________

The figures in this section are generic drawings and do not show

shared SCSI bus termination, cable names, and so forth.


Figure 1–3: Minimum Two-Node Cluster with UltraSCSI BA356 Storage Unit

[Figure: Member System 1 (host bus adapter, ID 6) and Member System 2 (host bus adapter, ID 7), connected by the network and by Memory Channel through a Memory Channel interface; the Tru64 UNIX disk; and a shared SCSI bus to an UltraSCSI BA356 with a DS-BA35X-DA personality module. Slots ID 0 through ID 6 hold the clusterwide /, /usr, /var disk, the Member 1 and Member 2 boot disks, the quorum disk, and clusterwide data disks. Slot ID 6 is marked "Do not use for data disk. May be used for redundant power supply." ZK-1591U-AI]

The configuration shown in Figure 1–3 might represent a typical small or training configuration that contains the disks TruCluster Server Version 5.0A requires. Because of those disk requirements, only two disks remain available for highly available applications in this configuration.

______________________ Note _______________________

Slot 6 in the UltraSCSI BA356 is not available because SCSI ID 6 is generally used for a member system SCSI adapter. However, this slot can be used for a second power supply to provide fully redundant power to the storage shelf.

Note that with the cluster file system (see the TruCluster Server Cluster Administration manual for a discussion of the cluster file system), the clusterwide root (/), /usr, and /var file systems could be physically placed on a private bus of either member system. However, if that member system were not available, the other member system(s) would not have access to the clusterwide file systems. Therefore, placing the clusterwide root (/), /usr, and /var file systems on a private bus is not recommended.

Likewise, the quorum disk could be placed on the local bus of either member system. If that member were not available, quorum could never be reached in a two-node cluster. Placing the quorum disk on the local bus of a member system is not recommended because it creates a single point of failure.

The individual member boot and swap partitions could also be placed on

a local bus of either of the member systems. If the boot disk for member

system 1 was on a SCSI bus internal to member 1, and the system was

unavailable due to a boot disk problem, other systems in the cluster could

not access the disk for possible repair. If the member system boot disks are

on a shared SCSI bus, they can be accessed by other systems on the shared

SCSI bus for possible repair.

By placing the swap partition on a system’s internal SCSI bus, you reduce

total traffic on the shared SCSI bus by an amount equal to the system’s

swap volume.

TruCluster Server Version 5.0A configurations require one or more disks to

hold the Tru64 UNIX operating system. The disk(s) are either private disk(s)

on the system that will become the first cluster member, or disk(s) on a

shared bus that the system can access.

We recommend that you place the /usr and /var file systems, the member boot disks, and the quorum disk on a shared SCSI bus connected to all member systems. After installation, you can reconfigure swap and place the swap disks on an internal SCSI bus to increase performance. See the TruCluster Server Cluster Administration manual for more information.

1.6.2 Two-Node Clusters Using UltraSCSI BA356 Storage Units with Increased Disk Configurations

The configuration shown in Figure 1–3 is a minimal configuration, with a lack of disk space for highly available applications. Starting with Tru64 UNIX Version 5.0, 16 devices are supported on a SCSI bus. Therefore, multiple BA356 storage units can be used on the same SCSI bus to allow more devices on that bus.

Figure 1–4 shows the configuration in Figure 1–3 with a second UltraSCSI

BA356 storage unit that provides an additional seven disks for highly

available applications.
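The disk counts quoted for Figure 1–3 and Figure 1–4 follow from simple arithmetic on the 16 available SCSI IDs. The sketch below assumes the adapter IDs (6 and 7) and the four required disks shown in those figures: the clusterwide file systems, two member boot disks, and a quorum disk. data_disk_slots is a hypothetical helper.

```python
BUS_IDS = set(range(16))         # Tru64 UNIX Version 5.0 and later: 16 devices per bus
LOWER_SHELF = set(range(0, 7))   # shelf responding to IDs 0-6
UPPER_SHELF = set(range(8, 15))  # shelf responding to IDs 8-14
ADAPTER_IDS = {6, 7}             # member host bus adapters, as in Figures 1-3 and 1-4
REQUIRED_DISKS = 4               # clusterwide /, /usr, /var; two boot disks; quorum disk


def data_disk_slots(shelves: list[set[int]]) -> int:
    """Shelf slots left for application data after adapters and required disks."""
    slots = set().union(*shelves)
    if not slots <= BUS_IDS:
        raise ValueError("shelf IDs must fit on a 16-ID bus")
    usable = slots - ADAPTER_IDS   # slot 6 is lost to the adapter at ID 6
    return len(usable) - REQUIRED_DISKS


print(data_disk_slots([LOWER_SHELF]))               # 2 (Figure 1-3)
print(data_disk_slots([LOWER_SHELF, UPPER_SHELF]))  # 9 (Figure 1-4: seven more)
```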

Figure 1–4: Two-Node Cluster with Two UltraSCSI DS-BA356 Storage Units

[Figure: Member System 1 (host bus adapter, ID 6) and Member System 2 (host bus adapter, ID 7), connected by the network and by Memory Channel through a Memory Channel interface, with the Tru64 UNIX disk. The shared SCSI bus connects two UltraSCSI BA356 shelves. The first shelf (IDs 0 through 6) holds the clusterwide /, /usr, /var disk, the Member 1 and Member 2 boot disks, the quorum disk, and data disks at IDs 4 and 5. Its slot ID 6 is not used for a data disk but may hold a redundant power supply. The second shelf holds data disks at IDs 8 through 13, and at ID 14 either a data disk or a redundant power supply. ZK-1590U-AI]

This configuration provides more storage, but its single shared SCSI bus is a single point of failure. A second SCSI bus would allow you to use the Logical Storage Manager (LSM) to mirror the /usr and /var file systems and the data disks across SCSI buses, removing the single SCSI bus as a single point of failure for that storage.

1.6.3 Two-Node Configurations with UltraSCSI BA356 Storage Units and Dual SCSI Buses

Adding a second shared SCSI bus lets you use the Logical Storage Manager (LSM) to mirror the data disks and the clusterwide /usr and /var file systems across SCSI buses.

______________________ Note _______________________

You cannot use LSM to mirror the clusterwide root (/), member system boot, swap, or quorum disks, but you can mirror them with hardware RAID.

Figure 1–5 shows a small cluster configuration with dual SCSI buses that uses LSM to mirror the clusterwide /usr and /var file systems and the data disks.
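The mirroring rules in the Note above can be summarized as a small lookup. This Python sketch is illustrative only: the component names (`root`, `usr`, `member_boot`, and so on) and both functions are hypothetical, and the sketch encodes only what this section states, namely that LSM can mirror /usr, /var, and data disks, that the clusterwide root, member boot, swap, and quorum disks require hardware RAID, and that hardware RAID can mirror all of them.

```python
# Components LSM can mirror across SCSI buses (per the Note in section 1.6.3).
LSM_MIRRORABLE = {"usr", "var", "data"}
# Components that only hardware RAID can mirror.
HARDWARE_RAID_ONLY = {"root", "member_boot", "swap", "quorum"}


def mirroring_options(component):
    """Return the set of mirroring methods available for a storage component."""
    if component in LSM_MIRRORABLE:
        return {"LSM", "hardware RAID"}
    if component in HARDWARE_RAID_ONLY:
        return {"hardware RAID"}
    raise ValueError(f"unknown component: {component}")


def unmirrored_with_lsm_only(components):
    """Return components left unmirrored when LSM is the only mirroring tool."""
    return sorted({c for c in components if "LSM" not in mirroring_options(c)})


# Storage in the Figure 1-5 configuration (hypothetical labels).
FIGURE_1_5 = ["root", "usr", "var", "member_boot", "quorum", "data"]
print(unmirrored_with_lsm_only(FIGURE_1_5))  # ['member_boot', 'quorum', 'root']
```

For an LSM-only configuration like Figure 1–5, the clusterwide root, member boot, and quorum disks remain unmirrored.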

Figure 1–5: Two-Node Configurations with UltraSCSI BA356 Storage Units and Dual SCSI Buses

[Figure ZK-1593U-AI. Member systems 1 and 2 are connected by the network and Memory Channel; each has two host bus adapters, one per shared SCSI bus (ID 6 on member system 1, ID 7 on member system 2); member system 1 also has a Tru64 UNIX disk. First shared bus, first BA356: clusterwide /, /usr, /var (ID 0), member 1 and member 2 boot disks (IDs 1 and 2), quorum disk (ID 3), data disks (IDs 4 and 5), ID 6 redundant power supply or not used; second BA356: data disks (IDs 8 through 13), ID 14 or power supply. Second shared bus, first BA356: mirrored /usr, /var (ID 0), IDs 1 through 3 not used, mirrored data disks (IDs 4 and 5), ID 6 redundant power supply or not used; second BA356: mirrored data disks (IDs 8 through 13), ID 14 or power supply.]

Using LSM to mirror the /usr and /var file systems and the data disks increases availability. However, because LSM cannot mirror the clusterwide root (/), the quorum disk, or the member boot disks, this configuration is not a no-single-point-of-failure (NSPOF) cluster, even with a second Memory Channel and redundant networks.

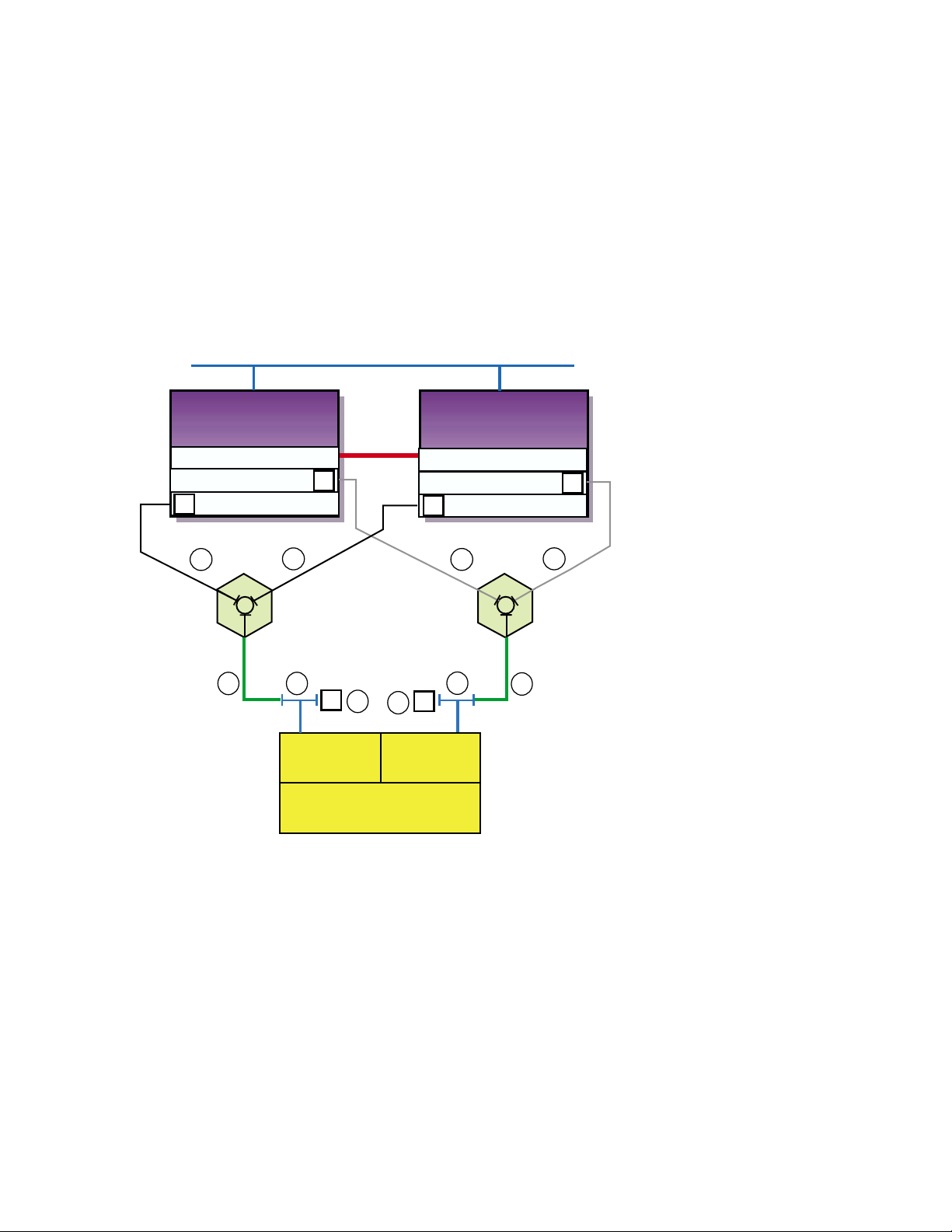

1.6.4 Using Hardware RAID to Mirror the Clusterwide Root File System and Member System Boot Disks

You can use hardware RAID with any of the supported RAID array controllers to mirror the clusterwide root (/), quorum, and member boot disks. Figure 1–6 shows a cluster configuration that uses an HSZ70 RAID array controller; an HSZ40, HSZ50, HSZ80, or HSG80 could be used instead. The array controllers can be configured as a dual-redundant pair. To fail over from one controller to the other, you must install the second controller and set the failover mode.

Figure 1–6: Cluster Configuration with HSZ70 Controllers in Transparent Failover Mode

[Figure ZK-1589U-AI. Member systems 1 and 2, connected by the network and a Memory Channel interface, attach through host bus adapters (IDs 6 and 7) to one shared SCSI bus with two HSZ70 controllers in a StorageWorks RAID Array 7000; member system 1 also has a Tru64 UNIX disk.]

In Figure 1–6, the HSZ40, HSZ50, HSZ70, HSZ80, or HSG80 has transparent failover mode enabled (SET FAILOVER COPY = THIS_CONTROLLER). In transparent failover mode, both controllers are connected to the same shared SCSI bus and device buses. Both controllers service the entire group of storagesets, single-disk units, or other storage devices, and either controller can continue to service all of the units if the other controller fails.

______________________ Note _______________________

The assignment of HSZ target IDs can be balanced between the controllers to provide better system performance. See the RAID array controller documentation for information on setting up storagesets.

Note that the configuration in Figure 1–6 has only one shared SCSI bus. Even with the clusterwide root and member boot disks mirrored, that single shared SCSI bus remains a single point of failure.
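One way to see why the single bus matters is to enumerate single-component failures. The Python sketch below uses a deliberately simplified model, which is an assumption and not the controllers' actual failover logic: each access path is a (controller, bus) pair, any working controller can service every unit (as in transparent failover), and the units stay reachable while at least one path has both its controller and its bus working. Host bus adapters, cables, and power are not modeled, and the function name and component labels are hypothetical.

```python
def single_points_of_failure(paths):
    """Return the components whose failure alone leaves no working path.

    Simplified, assumed model: `paths` is a list of (controller, bus) pairs,
    any working controller can service every unit, and a path works only if
    both its controller and its bus work.
    """
    components = sorted({part for path in paths for part in path})
    spofs = []
    for failed in components:
        # Paths that do not include the failed component keep working.
        surviving = [path for path in paths if failed not in path]
        if not surviving:
            spofs.append(failed)
    return spofs


# Figure 1-6: two HSZ70 controllers in transparent failover mode on one shared bus.
transparent = [("HSZ70 A", "shared bus"), ("HSZ70 B", "shared bus")]
print(single_points_of_failure(transparent))  # ['shared bus']

# Hypothetical two-bus layout: each controller reached over its own shared bus.
two_buses = [("HSZ70 A", "bus 1"), ("HSZ70 B", "bus 2")]
print(single_points_of_failure(two_buses))    # []
```

Under this model, losing either controller is survivable, but losing the one shared bus is not; giving each controller its own bus removes the bus from the list.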

1.6.5 Creating an NSPOF Cluster

To create a no-single-point-of-failure (NSPOF) cluster:

• Use hardware RAID to mirror the clusterwide root (/), /usr, and /var file systems, the member boot disks, the quorum disk (if present), and the data disks
• Use at least two shared SCSI buses to access dual-redundant RAID array controllers set up for multiple-bus failover mode (HSZ70, HSZ80, and HSG80)
• Install a second Memory Channel interface for redundancy
• Install redundant power supplies
• Install redundant networks
• Connect the systems and storage to an uninterruptible power supply (UPS)
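The checklist above can be expressed as a function that reports which NSPOF requirements a configuration still misses. This is a hypothetical Python sketch: the `ClusterConfig` fields and the message strings are illustrative, it checks only the items listed in this section, and an empty result does not prove that a real configuration has no single point of failure.

```python
from dataclasses import dataclass

# Controllers that support multiple-bus failover, per this section.
MULTIBUS_CONTROLLERS = {"HSZ70", "HSZ80", "HSG80"}


@dataclass
class ClusterConfig:
    hw_raid_mirrors_all_storage: bool  # /, /usr, /var, member boot, quorum, data
    shared_scsi_buses: int
    controller_model: str
    dual_redundant_controllers: bool
    multibus_failover: bool
    memory_channel_interfaces: int
    redundant_power_supplies: bool
    redundant_networks: bool
    on_ups: bool


def nspof_gaps(cfg):
    """Return the checklist items this configuration does not yet meet."""
    gaps = []
    if not cfg.hw_raid_mirrors_all_storage:
        gaps.append("mirror /, /usr, /var, member boot, quorum, and data disks with hardware RAID")
    if cfg.shared_scsi_buses < 2:
        gaps.append("use at least two shared SCSI buses")
    if not (cfg.dual_redundant_controllers and cfg.multibus_failover
            and cfg.controller_model in MULTIBUS_CONTROLLERS):
        gaps.append("use dual-redundant HSZ70/HSZ80/HSG80 controllers in multiple-bus failover mode")
    if cfg.memory_channel_interfaces < 2:
        gaps.append("install a second Memory Channel interface")
    if not cfg.redundant_power_supplies:
        gaps.append("install redundant power supplies")
    if not cfg.redundant_networks:
        gaps.append("install redundant networks")
    if not cfg.on_ups:
        gaps.append("connect the systems and storage to a UPS")
    return gaps


# Example: one shared bus, HSZ70 pair in transparent failover, one Memory Channel;
# power, networks, and UPS are assumed to be in place (hypothetical values).
example = ClusterConfig(
    hw_raid_mirrors_all_storage=True, shared_scsi_buses=1, controller_model="HSZ70",
    dual_redundant_controllers=True, multibus_failover=False,
    memory_channel_interfaces=1, redundant_power_supplies=True,
    redundant_networks=True, on_ups=True,
)
for gap in nspof_gaps(example):
    print("-", gap)
```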

Tru64 UNIX supports multiple-bus failover through its multipathing support.

______________________ Notes ______________________

Only the HSZ70, HSZ80, and HSG80 support multiple-bus failover (SET MULTIBUS_FAILOVER COPY = THIS_CONTROLLER).

Partitioned storagesets and partitioned single-disk units cannot function in multiple-bus failover dual-redundant configurations with the HSZ70 or HSZ80. You must delete any partitions before configuring the controllers for multiple-bus failover.

Partitioned storagesets and partitioned single-disk units are supported with the HSG80 and ACS V8.5.
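Before you issue SET MULTIBUS_FAILOVER COPY = THIS_CONTROLLER, you can check the constraints in these notes up front. The Python function below is a hypothetical pre-check, not a controller utility, and its name and arguments are illustrative. It encodes only the stated rules and, as an assumption, treats partitions on an HSG80 running any ACS version other than V8.5 as unsupported, because the notes say nothing about other versions.

```python
# Controllers that support multiple-bus failover, per the Notes above.
MULTIBUS_CAPABLE = {"HSZ70", "HSZ80", "HSG80"}


def multibus_failover_blockers(controller, has_partitions, acs_version=None):
    """Return reasons a dual-redundant pair cannot yet use multiple-bus failover."""
    blockers = []
    if controller not in MULTIBUS_CAPABLE:
        blockers.append(f"{controller} does not support multiple-bus failover")
    # Partitions are allowed only on an HSG80 with ACS V8.5; other cases are
    # treated conservatively (assumption for versions the notes do not cover).
    elif has_partitions and not (controller == "HSG80" and acs_version == "V8.5"):
        blockers.append("delete partitions before configuring multiple-bus failover")
    return blockers


print(multibus_failover_blockers("HSZ70", has_partitions=True))
print(multibus_failover_blockers("HSG80", has_partitions=True, acs_version="V8.5"))  # []
```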

Figure 1–7 shows a cluster configuration with dual-shared SCSI buses and a