Citrix XenServer ® 5.6 Administrator's Guide

Published June 2010

1.1 Edition

Copyright © 2009 Citrix All Rights Reserved.

Version: 5.6

Citrix, Inc.

851 West Cypress Creek Road

Fort Lauderdale, FL 33309

United States of America

Disclaimers

This document is furnished "AS IS." Citrix, Inc. disclaims all warranties regarding the contents of this document, including, but not limited to, implied warranties of merchantability and fitness for any particular purpose. This document may contain technical or other inaccuracies or typographical errors. Citrix, Inc. reserves the right to revise the information in this document at any time without notice. This document and the software described in this document constitute confidential information of Citrix, Inc. and its licensors, and are furnished under a license from Citrix, Inc.

Citrix Systems, Inc., the Citrix logo, Citrix XenServer and Citrix XenCenter, are trademarks of Citrix Systems, Inc. in the United States and other countries. All other products or services mentioned in this document are trademarks or registered trademarks of their respective companies.

Trademarks

Citrix ®

XenServer ®

XenCenter ®

Table of Contents

Document Overview .................................................................................... 1

How this Guide relates to other documentation .................................................................................. 1

Managing users ............................................................................................ 2

Authenticating users using Active Directory (AD) ................................................................................. 2

Configuring Active Directory authentication ................................................................................ 3

User authentication .............................................................................................................. 5

Removing access for a user .................................................................................................. 6

Leaving an AD domain ......................................................................................................... 6

Role Based Access Control ........................................................................................................... 7

Roles ................................................................................................................................ 8

Definitions of RBAC roles and permissions ................................................................................ 9

Working with RBAC using the xe CLI ..................................................................................... 14

To list all the available defined roles in XenServer ............................................................... 14

To display a list of current subjects: ............................................................................... 15

To add a subject to RBAC ........................................................................................... 16

To assign an RBAC role to a created subject ................................................................... 16

To change a subject’s RBAC role: ................................................................................. 17

Auditing ........................................................................................................................... 17

Audit log xe CLI commands ......................................................................................... 17

To obtain all audit records from the pool ......................................................................... 17

To obtain audit records of the pool since a precise millisecond timestamp ............................... 17

To obtain audit records of the pool since a precise minute timestamp ..................................... 17

How does XenServer compute the roles for the session? ............................................................ 18

XenServer hosts and resource pools ....................................................... 19

Hosts and resource pools overview ................................................................................................ 19

Requirements for creating resource pools ........................................................................................ 19

Creating a resource pool ............................................................................................................. 20

Creating heterogeneous resource pools .......................................................................................... 21

Adding shared storage ................................................................................................................ 21

Removing a XenServer host from a resource pool ............................................................................. 22

High Availability .......................................................................................................................... 23

HA Overview ..................................................................................................................... 23

Overcommitting ......................................................................................................... 23

Overcommitment Warning ............................................................................................ 23

Host Fencing ............................................................................................................ 23

Configuration Requirements .................................................................................................. 24

Restart priorities ................................................................................................................ 24

Enabling HA on a XenServer pool .................................................................................................. 25

Enabling HA using the CLI ................................................................................................... 25

Removing HA protection from a VM using the CLI ..................................................................... 26

Recovering an unreachable host ........................................................................................... 26

Shutting down a host when HA is enabled .............................................................................. 26

Shutting down a VM when it is protected by HA ....................................................................... 26

Host Power On ......................................................................................................................... 27

Powering on hosts remotely ................................................................................................. 27

Using the CLI to Manage Host Power On ............................................................................... 27

To enable Host Power On using the CLI ......................................................................... 28

To turn on hosts remotely using the CLI .......................................................................... 28

Configuring a Custom Script for XenServer’s Host Power On Feature ............................................. 28

Key/Value Pairs ......................................................................................................... 28

host.power_on_mode .......................................................................................... 28

host.power_on_config ......................................................................................... 29

Sample Script ........................................................................................................... 29

Storage ........................................................................................................ 30

Storage Overview ....................................................................................................................... 30

Storage Repositories (SRs) ................................................................................................... 30

Virtual Disk Images (VDIs) .................................................................................................... 30

Physical Block Devices (PBDs) .............................................................................................. 30

Virtual Block Devices (VBDs) ................................................................................................ 31

Summary of Storage objects ................................................................................................ 31

Virtual Disk Data Formats .................................................................................................... 31

VHD-based VDIs ........................................................................................................ 32

VHD Chain Coalescing ........................................................................................ 32

Space Utilization ................................................................................................ 32

LUN-based VDIs ........................................................................................................ 33

Storage configuration .................................................................................................................. 33

Creating Storage Repositories ............................................................................................... 33

Upgrading LVM storage from XenServer 5.0 or earlier ................................................................. 34

LVM performance considerations ........................................................................................... 34

VDI types ................................................................................................................. 34

Creating a raw virtual disk using the xe CLI ..................................................................... 34

Converting between VDI formats ........................................................................................... 35

Probing an SR .................................................................................................................. 35

Storage Multipathing ........................................................................................................... 38

Storage Repository Types ............................................................................................................ 39

Local LVM ........................................................................................................................ 40

Creating a local LVM SR (lvm) ....................................................................................... 40

Local EXT3 VHD ................................................................................................................ 40

Creating a local EXT3 SR (ext) ...................................................................................... 40

udev ............................................................................................................................... 41

ISO ................................................................................................................................. 41

EqualLogic ....................................................................................................................... 41

Creating a shared EqualLogic SR .................................................................................. 41

EqualLogic VDI Snapshot space allocation with XenServer EqualLogic Adapter ......................... 42

Creating a VDI using the CLI ................................................................................ 43

NetApp ............................................................................................................................ 43

Creating a shared NetApp SR over iSCSI ........................................................................ 46

Managing VDIs in a NetApp SR ................................................................................... 47

Taking VDI snapshots with a NetApp SR ......................................................................... 47

Software iSCSI Support ....................................................................................................... 47

XenServer Host iSCSI configuration ............................................................................... 48

Managing Hardware Host Bus Adapters (HBAs) ........................................................................ 48

Sample QLogic iSCSI HBA setup ................................................................................. 48

Removing HBA-based SAS, FC or iSCSI device entries ..................................................... 49

LVM over iSCSI ................................................................................................................. 49

Creating a shared LVM over iSCSI SR using the software iSCSI initiator (lvmoiscsi) .................... 49

Creating a shared LVM over Fibre Channel / iSCSI HBA or SAS SR (lvmohba) .......................... 50

NFS VHD ......................................................................................................................... 52

Creating a shared NFS SR (nfs) ................................................................................... 53

LVM over hardware HBA ..................................................................................................... 53

Citrix StorageLink Gateway (CSLG) SRs .................................................................................. 54

Creating a shared StorageLink SR ................................................................................. 54

Managing Storage Repositories .................................................................................................... 58

Destroying or forgetting an SR ............................................................................................... 59

Introducing an SR ............................................................................................................. 59

Resizing an SR .................................................................................................................. 60

Converting local Fibre Channel SRs to shared SRs .................................................................... 60

Moving Virtual Disk Images (VDIs) between SRs ........................................................................ 60

Copying all of a VM’s VDIs to a different SR .................................................................... 60

Copying individual VDIs to a different SR ......................................................................... 60

Adjusting the disk IO scheduler ............................................................................................. 61

Virtual disk QoS settings ............................................................................................................. 61

Configuring VM memory ........................................................................... 63

What is Dynamic Memory Control (DMC)? ....................................................................................... 63

The concept of dynamic range ............................................................................................. 63

The concept of static range ................................................................................................. 64

DMC Behaviour ................................................................................................................. 64

How does DMC Work? ....................................................................................................... 64

Memory constraints ............................................................................................................ 65

Supported operating systems ............................................................................................... 65

xe CLI commands ..................................................................................................................... 66

Display the static memory properties of a VM ........................................................................... 66

Display the dynamic memory properties of a VM ....................................................................... 66

Updating memory properties ................................................................................................ 67

Update individual memory properties ...................................................................................... 68

Upgrade issues ......................................................................................................................... 68

Workload Balancing interaction ..................................................................................................... 68

Networking .................................................................................................. 69

XenServer networking overview ..................................................................................................... 69

Network objects ................................................................................................................ 70

Networks ......................................................................................................................... 70

VLANs ............................................................................................................................. 70

Using VLANs with host management interfaces ................................................................ 70

Using VLANs with virtual machines ................................................................................ 70

Using VLANs with dedicated storage NICs ...................................................................... 71

Combining management interfaces and guest VLANs on a single host NIC .............................. 71

NIC bonds ....................................................................................................................... 71

Initial networking configuration ............................................................................................. 72

Managing networking configuration ................................................................................................ 72

Creating networks in a standalone server ................................................................................ 72

Creating networks in resource pools ...................................................................................... 73

Creating VLANs ................................................................................................................. 73

Creating NIC bonds on a standalone host ............................................................................... 74

Creating a NIC bond on a dual-NIC host ......................................................................... 74

Controlling the MAC address of the bond ........................................................................ 75

Reverting NIC bonds .................................................................................................. 75

Creating NIC bonds in resource pools .................................................................................... 75

Adding NIC bonds to new resource pools ....................................................................... 76

Adding NIC bonds to an existing pool ............................................................................ 77

Configuring a dedicated storage NIC ...................................................................................... 79

Controlling Quality of Service (QoS) ........................................................................................ 79

Changing networking configuration options .............................................................................. 79

Hostname ................................................................................................................ 80

DNS servers ............................................................................................................. 80

Changing IP address configuration for a standalone host .................................................... 80

Changing IP address configuration in resource pools .......................................................... 80

Management interface ................................................................................................. 81

Disabling management access ...................................................................................... 81

Adding a new physical NIC .......................................................................................... 82

NIC/PIF ordering in resource pools ........................................................................................ 82

Verifying NIC ordering ................................................................................................. 82

Re-ordering NICs ....................................................................................................... 82

Networking Troubleshooting ......................................................................................................... 83

Diagnosing network corruption .............................................................................................. 83

Recovering from a bad network configuration ........................................................................... 84

Workload Balancing ................................................................................. 85

What’s New? ............................................................................................................................ 85

New Features .................................................................................................................... 85

Changes .......................................................................................................................... 86

Workload Balancing Overview ....................................................................................................... 86

Workload Balancing Basic Concepts ...................................................................................... 87

Workload Balancing Installation Overview ........................................................................................ 87

Workload Balancing System Requirements .............................................................................. 88

Supported XenServer Versions ...................................................................................... 88

Supported Operating Systems ...................................................................................... 88

Recommended Hardware ............................................................................................ 88

Workload Balancing Data Store Requirements .......................................................................... 89

SQL Server Database Authentication Requirements .......................................................... 89

Operating System Language Support ..................................................................................... 90

Preinstallation Considerations ................................................................................................ 90

WLB Access Control Permissions .................................................................................. 91

Installing Workload Balancing ................................................................................................ 91

To install Workload Balancing server ............................................................................... 92

To verify your Workload Balancing installation ................................................................... 93

Configuring Firewalls ........................................................................................................... 94

Upgrading Workload Balancing ............................................................................................. 94

Upgrading Workload Balancing on the Same Operating System ............................................ 95

Upgrading SQL Server ................................................................................................ 95

Upgrading Workload Balancing and the Operating System .................................................. 95

Initializing Workload Balancing ...................................................................................................... 95

To initialize Workload Balancing ............................................................................................. 96

Authorization for Workload Balancing .................................................................................... 97

Configuring Antivirus Software ............................................................................................... 98

Configuring Workload Balancing Settings ........................................................................................ 98

To display the Workload Balancing Configuration dialog box ........................................................ 99

Adjusting the Optimization Mode ........................................................................................... 99

Fixed ....................................................................................................................... 99

Scheduled ................................................................................................................ 99

To set an optimization mode for all time periods .............................................................. 100

To specify times when the optimization mode will change automatically ................................. 100

To edit or delete an automatic optimization interval ........................................................... 100

Optimizing and Managing Power Automatically ...................................................................... 100

Accepting Optimization Recommendations Automatically ................................................... 101

Enabling Workload Balancing Power Management ........................................................... 101

Designing Environments for Power Management and VM Consolidation ................................ 102

To apply optimization recommendations automatically ....................................................... 103

To select servers for power management ....................................................................... 103

Changing the Critical Thresholds .......................................................................................... 103

Default Settings for Critical Thresholds .......................................................................... 104

To change the critical thresholds .................................................................................. 104

Tuning Metric Weightings ................................................................................................... 104

To edit metric weighting factors ................................................................................... 105

Excluding Hosts from Recommendations ............................................................................... 105

To exclude hosts from placement and optimization recommendations ................................... 105

Configuring Optimization Intervals, Report Subscriptions, and Data Storage ................................... 106

Historical Data (Storage Time) ..................................................................................... 106

To configure the data storage period .................................................................... 106

VM Optimization Criteria ............................................................................................ 106

Length of Time Between Optimization Recommendations After VM Moves ..................... 106

Number of Times an Optimization Recommendation is Made ...................................... 107

Setting the Minimum Optimization Severity ............................................................. 107

Modifying the Aggressiveness Setting ................................................................... 107

Receiving Reports by Email Automatically (Report Subscriptions) ......................................... 108

To configure report subscriptions ........................................................................ 109

Choosing an Optimal Server for VM Initial Placement, Migrate, and Resume ........................................... 109

To start a virtual machine on the optimal server ....................................................................... 109

To resume a virtual machine on the optimal server ........................................................... 109

Accepting Optimization Recommendations ..................................................................................... 110

To accept an optimization recommendation ............................................................................ 110

Administering Workload Balancing ................................................................................................ 110

Disabling Workload Balancing ............................................................................................ 111

Reconfiguring a Pool to Use Another WLB Server .................................................................... 111

Updating Workload Balancing Credentials .............................................................................. 112

Uninstalling Workload Balancing ......................................................................................... 113

Customizing Workload Balancing ......................................................................................... 113

Entering Maintenance Mode with Workload Balancing Enabled ........................................................... 113

To enter maintenance mode with Workload Balancing enabled .................................................... 114

Working with Workload Balancing Reports ..................................................................................... 114

Introduction ..................................................................................................................... 114

Subscribing to Workload Balancing Reports ........................................................................... 114

Using Workload Balancing Reports for Tasks .......................................................................... 115

Evaluating the Effectiveness of Your Optimization Thresholds .............................................. 115

Generating and Managing Workload Balancing Reports ............................................................ 115

To generate a Workload Balancing report ....................................................................... 115

To subscribe to a Workload Balancing report .................................................................. 115

To cancel a report subscription ................................................................................... 116

To navigate in a Workload Balancing Report ................................................................... 116

To print a Workload Balancing report ............................................................................ 117

To export a Workload Balancing report .................................................................. 117

Displaying Workload Balancing Reports ................................................................................. 117

Report Generation Features ........................................................................................ 117

Toolbar Buttons ....................................................................................................... 117

Workload Balancing Report Glossary .................................................................................... 118

Host Health History .................................................................................................. 118

Pool Optimization Performance History .......................................................................... 119

Pool Audit Log History .............................................................................................. 119

Audit Log Event Names ..................................................................................... 120

Pool Health ............................................................................................................. 120

Pool Health History ................................................................................................... 121

Pool Optimization History ........................................................................................... 121

Virtual Machine Motion History .................................................................................... 122

Virtual Machine Performance History ............................................................................. 122

Backup and recovery ............................................................................... 123

Backups ................................................................................................................................. 123

Full metadata backup and disaster recovery (DR) ............................................................................ 124

DR and metadata backup overview ...................................................................................... 124

Backup and restore using xsconsole .................................................................................... 124

Moving SRs between hosts and Pools .................................................................................. 125

Using Portable SRs for Manual Multi-Site Disaster Recovery ...................................................... 126

VM Snapshots ......................................................................................................................... 126

Regular Snapshots ........................................................................................................... 127

Quiesced Snapshots ......................................................................................................... 127

Snapshots with memory .................................................................................................... 127

Creating a VM Snapshot .................................................................................................... 127

Creating a snapshot with memory ........................................................................................ 128

To list all of the snapshots on a XenServer pool ...................................................................... 128

To list the snapshots on a particular VM ................................................................................ 128

Restoring a VM to its previous state ..................................................................................... 129

Deleting a snapshot .................................................................................................. 129

Snapshot Templates ........................................................................................................ 130

Creating a template from a snapshot ............................................................................ 130

Exporting a snapshot to a template .............................................................................. 131

Advanced Notes for Quiesced Snapshots ...................................................................... 131

Coping with machine failures ...................................................................................................... 133

Member failures ............................................................................................................... 133

Master failures ................................................................................................................. 133

Pool failures .................................................................................................................... 134

Coping with Failure due to Configuration Errors ....................................................................... 134

Physical Machine failure ..................................................................................................... 134

Monitoring and managing XenServer .................................................... 136

Alerts ..................................................................................................................................... 136

Customizing Alerts ............................................................................................................ 137

Configuring Email Alerts ..................................................................................................... 138

Custom Fields and Tags ............................................................................................................ 139

Custom Searches ..................................................................................................................... 139

Determining throughput of physical bus adapters ............................................................................ 139

Troubleshooting ........................................................................................ 140

XenServer host logs .................................................................................................................. 140

Sending host log messages to a central server ....................................................................... 140

XenCenter logs ........................................................................................................................ 141

Troubleshooting connections between XenCenter and the XenServer host ............................................. 141

A. Command line interface ..................................................................... 142

Basic xe syntax ....................................................................................................................... 142

Special characters and syntax ..................................................................................................... 143

Command types ...................................................................................................................... 144

Parameter types ............................................................................................................... 145

Low-level param commands ............................................................................................... 145

Low-level list commands .................................................................................................... 146

xe command reference .............................................................................................................. 147

Bonding commands .......................................................................................................... 147

bond-create ............................................................................................................ 147

bond-destroy ........................................................................................................... 147

CD commands ................................................................................................................ 148

cd-list .................................................................................................................... 149

Console commands .......................................................................................................... 149

Event commands ............................................................................................................. 150

event-wait ............................................................................................................... 150

Host (XenServer host) commands ........................................................................................ 151

host-backup ............................................................................................................ 154

host-bugreport-upload ............................................................................................... 155

host-crashdump-destroy ............................................................................................ 155

host-crashdump-upload ............................................................................................. 155

host-disable ............................................................................................................ 155

host-dmesg ............................................................................................................ 155

host-emergency-management-reconfigure ...................................................................... 155

host-enable ............................................................................................................. 156

host-evacuate .......................................................................................................... 156

host-forget .............................................................................................................. 156

host-get-system-status .............................................................................................. 156

host-get-system-status-capabilities ............................................................................... 157

host-is-in-emergency-mode ........................................................................................ 158

host-apply-edition .................................................................................................... 158

license-server-address ............................................................................................... 158

license-server-port .................................................................................................... 158

host-license-add ...................................................................................................... 158

host-license-view ...................................................................................................... 159

host-logs-download .................................................................................................. 159

host-management-disable .......................................................................................... 159

host-management-reconfigure ..................................................................................... 159

host-power-on ......................................................................................................... 160

host-set-power-on .................................................................................................... 160

host-reboot ............................................................................................................. 160

host-restore ............................................................................................................ 160

host-set-hostname-live .............................................................................................. 160

host-shutdown ......................................................................................................... 161

host-syslog-reconfigure .............................................................................................. 161

Log commands ............................................................................................................... 161

log-get-keys ............................................................................................................ 161

log-reopen .............................................................................................................. 161

log-set-output .......................................................................................................... 161

Message commands ......................................................................................................... 162

message-create ....................................................................................................... 162

message-list ............................................................................................................ 162

Network commands .......................................................................................................... 163

network-create ......................................................................................................... 164

network-destroy ....................................................................................................... 164

Patch (update) commands ................................................................................................. 164

patch-apply ............................................................................................................. 165

patch-clean ............................................................................................................. 165

patch-pool-apply ...................................................................................................... 165

patch-precheck ........................................................................................................ 165

patch-upload ........................................................................................................... 165

PBD commands .............................................................................................................. 165

pbd-create .............................................................................................................. 166

pbd-destroy ............................................................................................................ 166

pbd-plug ................................................................................................................ 166

pbd-unplug ............................................................................................................. 166

PIF commands ................................................................................................................ 166

pif-forget ................................................................................................................ 169

pif-introduce ............................................................................................................ 169

pif-plug .................................................................................................................. 170

pif-reconfigure-ip ...................................................................................................... 170

pif-scan .................................................................................................................. 170

pif-unplug ............................................................................................................... 170

Pool commands ............................................................................................................... 170

pool-designate-new-master ........................................................................................ 172

pool-dump-database ................................................................................................. 172

pool-eject ............................................................................................................... 172

pool-emergency-reset-master ...................................................................................... 172

pool-emergency-transition-to-master ............................................................................. 172

pool-ha-enable ......................................................................................................... 172

pool-ha-disable ........................................................................................................ 173

pool-join ................................................................................................................. 173

pool-recover-slaves ................................................................................................... 173

pool-restore-database ............................................................................................... 173

pool-sync-database .................................................................................................. 173

Storage Manager commands .............................................................................................. 173

SR commands ................................................................................................................ 174

sr-create ................................................................................................................. 175

sr-destroy ............................................................................................................... 175

sr-forget ................................................................................................................. 175

sr-introduce ............................................................................................................. 176

sr-probe ................................................................................................................. 176

sr-scan ................................................................................................................... 176

Task commands ............................................................................................................... 176

task-cancel ............................................................................................................. 177

Template commands ......................................................................................................... 177

template-export ........................................................................................................ 185

Update commands ........................................................................................................... 185

update-upload ......................................................................................................... 185

User commands .............................................................................................................. 185

user-password-change .............................................................................................. 185

VBD commands ............................................................................................................... 186

vbd-create .............................................................................................................. 187

vbd-destroy ............................................................................................................. 188

vbd-eject ................................................................................................................ 188

vbd-insert ............................................................................................................... 188

vbd-plug ................................................................................................................. 188

vbd-unplug ............................................................................................................. 188

VDI commands ................................................................................................................ 188

vdi-clone ................................................................................................................ 190

vdi-copy ................................................................................................................. 190

vdi-create ............................................................................................................... 190

vdi-destroy .............................................................................................................. 191

vdi-forget ................................................................................................................ 191

vdi-import ............................................................................................................... 191

vdi-introduce ........................................................................................................... 191

vdi-resize ................................................................................................................ 191

vdi-snapshot ........................................................................................................... 191

vdi-unlock ............................................................................................................... 192

VIF commands ................................................................................................................ 192

vif-create ................................................................................................................ 194

vif-destroy ............................................................................................................... 194

vif-plug ................................................................................................................... 194

vif-unplug ............................................................................................................... 194

VLAN commands ............................................................................................................. 195

vlan-create .............................................................................................................. 195

pool-vlan-create ....................................................................................................... 195

vlan-destroy ............................................................................................................ 195

VM commands ................................................................................................................ 195

vm-cd-add .............................................................................................................. 202

vm-cd-eject ............................................................................................................. 202

vm-cd-insert ............................................................................................................ 203

vm-cd-list ............................................................................................................... 203

vm-cd-remove ......................................................................................................... 203

vm-clone ................................................................................................................ 203

vm-compute-maximum-memory .................................................................................. 203

vm-copy ................................................................................................................. 204

vm-crashdump-list .................................................................................................... 204

vm-data-source-forget ............................................................................................... 204

vm-data-source-list ................................................................................................... 204

vm-data-source-query ............................................................................................... 205

vm-data-source-record .............................................................................................. 205

vm-destroy .............................................................................................................. 205

vm-disk-add ............................................................................................................ 205

vm-disk-list ............................................................................................................. 206

vm-disk-remove ....................................................................................................... 206

vm-export ............................................................................................................... 206

vm-import ............................................................................................................... 206

vm-install ................................................................................................................ 207

vm-memory-shadow-multiplier-set ................................................................................ 207

vm-migrate ............................................................................................................. 207

vm-reboot ............................................................................................................... 208

vm-reset-powerstate ................................................................................................. 208

vm-resume .............................................................................................................. 208

vm-shutdown .......................................................................................................... 208

vm-start ................................................................................................................. 209

vm-suspend ............................................................................................................ 209

vm-uninstall ............................................................................................................. 209

vm-vcpu-hotplug ...................................................................................................... 209

vm-vif-list ................................................................................................................ 210

Workload Balancing commands ........................................................................................... 210

pool-initialize-wlb ...................................................................................................... 210

pool-param-set other-config ........................................................................................ 210

host-retrieve-wlb-evacuate-recommendations .................................................................. 210

vm-retrieve-wlb-recommendations ............................................................................... 210

pool-certificate-list .................................................................................................... 211

pool-certificate-install ................................................................................................. 211

pool-certificate-sync ................................................................................................. 211

pool-param-set ........................................................................................................ 212

pool-deconfigure-wlb ................................................................................................ 212

pool-retrieve-wlb-configuration .................................................................................... 212

pool-retrieve-wlb-recommendations ............................................................................ 212

pool-retrieve-wlb-report ........................................................................................... 212

pool-send-wlb-configuration ....................................................................................... 213

Index .......................................................................................................... 214

Document Overview

This document is a system administrator's guide to XenServer™, the platform virtualization solution from

Citrix®. It describes the tasks involved in configuring a XenServer deployment, in particular how to set up

storage, networking and resource pools, and how to administer XenServer hosts using the xe command

line interface (CLI).

This section summarizes the rest of the guide so that you can find the information you need. The following

topics are covered:

• XenServer hosts and resource pools

• XenServer storage configuration

• XenServer network configuration

• XenServer workload balancing

• XenServer backup and recovery

• Monitoring and managing XenServer

• XenServer command line interface

• XenServer troubleshooting

• XenServer resource allocation guidelines

How this Guide relates to other documentation

This document is primarily aimed at system administrators, who need to configure and administer XenServer

deployments. Other documentation shipped with this release includes:

• XenServer Installation Guide provides a high level overview of XenServer, along with step-by-step

instructions on installing XenServer hosts and the XenCenter management console.

• XenServer Virtual Machine Installation Guide describes how to install Linux and Windows VMs on top of

a XenServer deployment. As well as installing new VMs from install media (or using the VM templates

provided with the XenServer release), this guide also explains how to create VMs from existing physical

machines, using a process called P2V.

• XenServer Software Development Kit Guide presents an overview of the XenServer SDK, a selection of

code samples that demonstrate how to write applications that interface with XenServer hosts.

• XenAPI Specification provides a programmer's reference guide to the XenServer API.

• XenServer User Security considers the issues involved in keeping your XenServer installation secure.

• Release Notes provides a list of known issues that affect this release.

Managing users

When you first install XenServer, a user account is added to XenServer automatically. This account is the

local super user (LSU), or root, which is authenticated locally by the XenServer computer.

The LSU is a special user account used for system administration and has all rights and permissions. It is

the default account at installation. The LSU is authenticated by XenServer, not by an external

authentication service. This means that if the external

authentication service fails, the LSU can still log in and manage the system. The LSU can always access

the XenServer physical server through SSH.

You can create additional users by adding their Active Directory accounts through either XenCenter's

Users tab or the CLI. All editions of XenServer can add user accounts from Active Directory. However, only

XenServer Enterprise and Platinum editions let you assign these Active Directory accounts different levels

of permissions (through the Role Based Access Control (RBAC) feature). If you do not use Active Directory

in your environment, you are limited to the LSU account.

The permissions assigned to users when you first add their accounts vary according to your edition of

XenServer:

• In the XenServer and XenServer Advanced editions, when you create (add) new users, XenServer

automatically grants the accounts access to all features available in that version.

• In the XenServer Enterprise and Platinum editions, when you create new users, XenServer does not

assign newly created user accounts roles automatically. As a result, these accounts do not have any

access to the XenServer pool until you assign them a role.

If you do not have one of these editions, you can add users from Active Directory. However, all users will

have the Pool Administrator role.

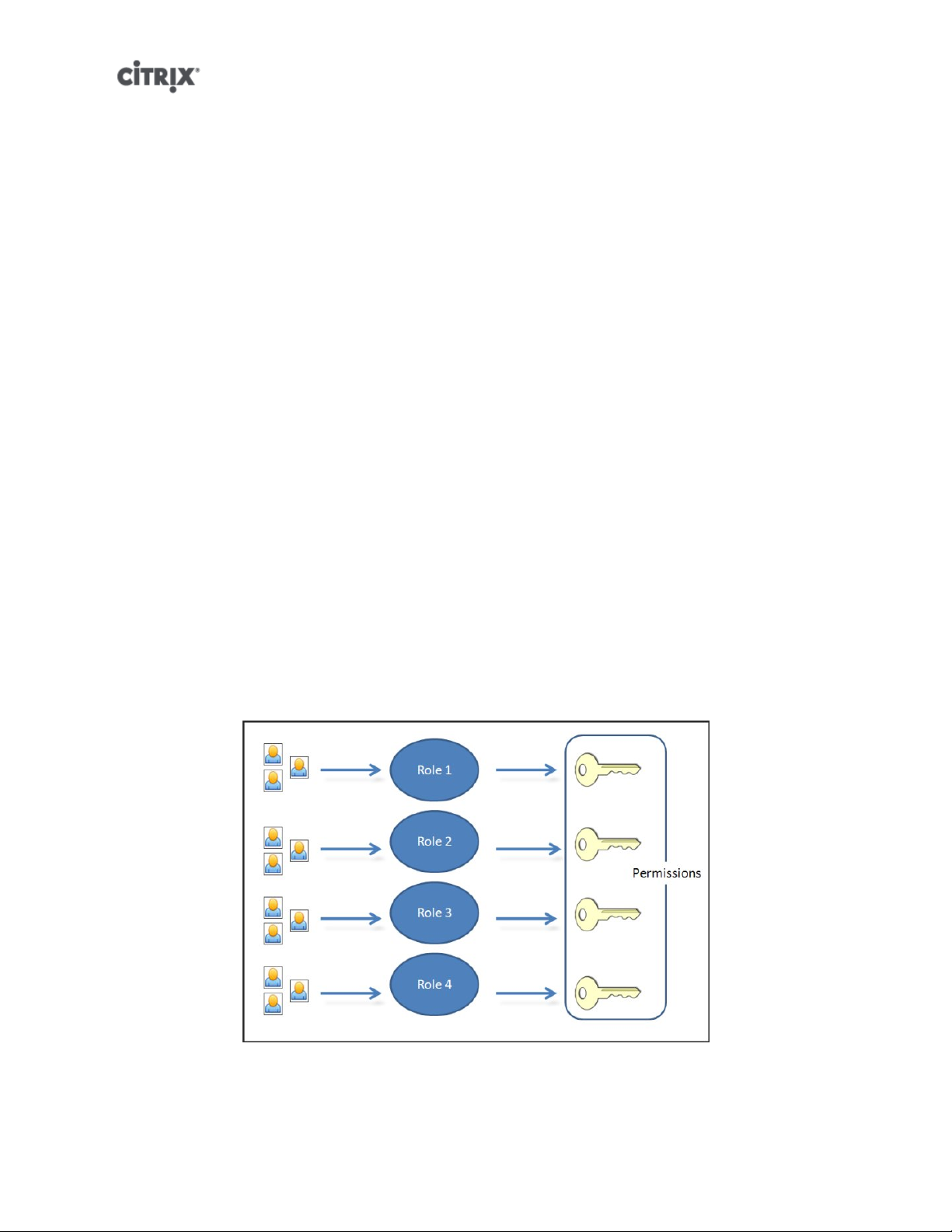

These permissions are granted through roles, as discussed in the section called “Authenticating users using

Active Directory (AD)”.

Authenticating users using Active Directory (AD)

If you want to have multiple user accounts on a server or a pool, you must use Active Directory user accounts

for authentication. This lets XenServer users log in to a pool's XenServers using their Windows domain

credentials.

The only way you can configure varying levels of access for specific users is by enabling Active Directory

authentication, adding user accounts, and assigning roles to those accounts.

Active Directory users can use the xe CLI (passing appropriate -u and -pw arguments) and also connect

to the host using XenCenter. Authentication is done on a per-resource pool basis.
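
As an illustration of the CLI route, the following sketch wraps a remote xe call that passes Active Directory credentials. The function name xe_as and the XE_PASSWORD environment variable are hypothetical helpers introduced here and are not part of XenServer; -s, -u and -pw are the xe arguments for the server, user name and password.

```shell
# Hypothetical helper: run an xe command against a remote host as a given user.
# Assumes the password has been placed in the XE_PASSWORD environment variable.
xe_as() {
    host=$1
    user=$2
    shift 2
    # Everything after the first two arguments is passed to xe unchanged.
    xe -s "$host" -u "$user" -pw "$XE_PASSWORD" "$@"
}
```

For example, xe_as <host> <username> vm-list would list VMs using that user's domain credentials, provided a subject for the user has been added to the pool.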

Access is controlled by the use of subjects. A subject in XenServer maps to an entity on your directory

server (either a user or a group). When external authentication is enabled, the credentials used to create

a session are first checked against the local root credentials (in case your directory server is unavailable)

and then against the subject list. To permit access, you must create a subject entry for the person or group

you wish to grant access to. This can be done using XenCenter or the xe CLI.

If you are familiar with XenCenter, note that the XenServer CLI uses slightly different terminology to refer

to Active Directory and user account features:

| XenCenter Term | XenServer CLI Term |
| --- | --- |
| Users | Subjects |
| Add users | Add subjects |

Understanding Active Directory authentication in the XenServer environment

Even though XenServers are Linux-based, XenServer lets you use Active Directory accounts for XenServer

user accounts. To do so, it passes Active Directory credentials to the Active Directory domain controller.

When added to XenServer, Active Directory users and groups become XenServer subjects, generally

referred to as simply users in XenCenter. When a subject is registered with XenServer, users/groups are

authenticated with Active Directory on login and do not need to qualify their user name with a domain name.

Note:

By default, if you do not qualify the user name (for example, by entering either mydomain\myuser or

myuser@mydomain.com), XenCenter always attempts to log users in to Active Directory authentication

servers using the domain to which it is currently joined. The exception to this is the LSU account, which

XenCenter always authenticates locally (that is, on the XenServer) first.

The external authentication process works as follows:

1. The credentials supplied when connecting to a server are passed to the Active Directory domain controller

for authentication.

2. The domain controller checks the credentials. If they are invalid, the authentication fails immediately.

3. If the credentials are valid, the Active Directory controller is queried to get the subject identifier and group

membership associated with the credentials.

4. If the subject identifier matches the one stored in the XenServer, the authentication is completed

successfully.

When you join a domain, you enable Active Directory authentication for the pool. However, when a pool is

joined to a domain, only users in that domain (or a domain with which it has trust relationships) can connect

to the pool.

Note:

Manually updating the DNS configuration of a DHCP-configured network PIF is unsupported and might cause

Active Directory integration, and consequently user authentication, to fail or stop working.

Upgrading from XenServer 5.5

When you upgrade from XenServer 5.5 to the current release, any user accounts created in XenServer 5.5

are assigned the role of pool-admin. This is done for backwards compatibility reasons: in XenServer 5.5, all

users had full permissions to perform any task on the pool.

As a result, if you are upgrading from XenServer 5.5, make sure you revisit the role associated with each

user account to make sure it is still appropriate.

Configuring Active Directory authentication

XenServer supports use of Active Directory servers using Windows 2003 or later.

Active Directory authentication for a XenServer host requires that the same DNS servers are used for

both the Active Directory server (configured to allow for interoperability) and the XenServer host. In some

configurations, the Active Directory server may provide the DNS itself. This can be achieved either using

DHCP to provide the IP address and a list of DNS servers to the XenServer host, or by setting values in the

PIF objects or using the installer if a manual static configuration is used.

Citrix recommends enabling DHCP to broadcast host names. In particular, the host names localhost or

linux should not be assigned to hosts.

Note the following:

• XenServer hostnames should be unique throughout the XenServer deployment. XenServer labels its AD

entry on the AD database using its hostname. Therefore, if two XenServer hosts have the same hostname

and are joined to the same AD domain, the second XenServer will overwrite the AD entry of the first

XenServer, regardless of whether they are in the same or in different pools, causing the AD authentication on

the first XenServer to stop working.

It is possible to use the same hostname in two XenServer hosts, as long as they join different AD domains.

• The servers can be in different time-zones, as it is the UTC time that is compared. To ensure

synchronization is correct, you may choose to use the same NTP servers for your XenServer pool and

the Active Directory server.

• Mixed-authentication pools are not supported (that is, you cannot have a pool where some servers in the

pool are configured to use Active Directory and some are not).

• The XenServer Active Directory integration uses the Kerberos protocol to communicate with the Active

Directory servers. Consequently, XenServer does not support communicating with Active Directory

servers that do not utilize Kerberos.

• For external authentication using Active Directory to be successful, it is important that the clocks on your

XenServer hosts are synchronized with those on your Active Directory server. When XenServer joins

the Active Directory domain, this will be checked and authentication will fail if there is too much skew

between the servers.
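
The clock requirement above can be sketched as a simple comparison of two UTC timestamps. This is a minimal illustration and not the check XenServer itself performs. The function name is hypothetical, and the 300-second default is an assumption based on the usual Kerberos tolerance; this guide does not state the actual limit.

```shell
# Hypothetical check: succeed if two epoch timestamps (seconds, UTC) differ
# by no more than a limit. The 300 s default is an assumed value.
skew_within_limit() {
    host_time=$1
    ad_time=$2
    limit=${3:-300}
    diff=$((host_time - ad_time))
    # Take the absolute value of the difference.
    if [ "$diff" -lt 0 ]; then
        diff=$((0 - diff))
    fi
    [ "$diff" -le "$limit" ]
}
```

On a host, date -u +%s prints the current epoch time. How you obtain the Active Directory server's time depends on your environment and is not covered here. Because epoch time is the same in every time zone, the comparison is unaffected by the servers' local time-zone settings.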

Warning:

Host names must consist solely of no more than 63 alphanumeric characters, and must not be purely

numeric.
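
The naming rules in this section can be checked before a host is joined to a domain. The sketch below applies the rules exactly as worded here: at most 63 characters, alphanumeric only, not purely numeric, and not localhost or linux. The function name valid_hostname is hypothetical.

```shell
# Hypothetical validator for the host name rules stated in this section.
# Returns 0 (success) if the name is acceptable, 1 otherwise.
valid_hostname() {
    name=$1
    # Must be non-empty and no longer than 63 characters.
    [ -n "$name" ] || return 1
    [ "${#name}" -le 63 ] || return 1
    # The names localhost and linux should not be assigned to hosts.
    case $name in
        localhost|linux) return 1 ;;
    esac
    # Reject any character that is not a letter or a digit.
    case $name in
        *[!A-Za-z0-9]*) return 1 ;;
    esac
    # Accept only if at least one character is not a digit.
    case $name in
        *[!0-9]*) return 0 ;;
    esac
    return 1
}
```

For example, valid_hostname xenhost01 succeeds, while valid_hostname 12345 fails because the name is purely numeric.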

Once you have Active Directory authentication enabled, if you subsequently add a server to that pool,

you are prompted to configure Active Directory on the server joining the pool. When you are prompted for

credentials on the joining server, enter Active Directory credentials with sufficient privileges to add servers

to that domain.

Enabling external authentication on a pool

• External authentication using Active Directory can be configured using either XenCenter or the CLI

using the command below.

xe pool-enable-external-auth auth-type=AD \
    service-name=<full-qualified-domain> \
    config:user=<username> \
    config:pass=<password>

The user specified needs to have Add/remove computer objects or workstations privileges,

which is the default for domain administrators.

Note:

If you are not using DHCP on the network that Active Directory and your XenServer hosts use, you can use

these two approaches to set up your DNS:

1. Configure the DNS server to use on your XenServer hosts:

xe pif-reconfigure-ip mode=static dns=<dnshost>

2. Manually set the management interface to use a PIF that is on the same network as your DNS server:

xe host-management-reconfigure pif-uuid=<pif_in_the_dns_subnetwork>

Note:

External authentication is a per-host property. However, Citrix advises that you enable and disable this on a

per-pool basis – in this case XenServer will deal with any failures that occur when enabling authentication

on a particular host and perform any roll-back of changes that may be required, ensuring that a consistent

configuration is used across the pool. Use the host-param-list command to inspect properties of a host and

to determine the status of external authentication by checking the values of the relevant fields.
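
One way to carry out this inspection across a pool is sketched below. It assumes that the relevant host fields contain the text external-auth in their names (for example, external-auth-type); verify the field names against the output on your own hosts. The function name is hypothetical.

```shell
# Hypothetical helper: print the external authentication fields of every host.
# Assumes the field names contain "external-auth".
show_external_auth() {
    # --minimal returns the host UUIDs as one comma-separated line.
    for uuid in $(xe host-list --minimal | tr ',' ' '); do
        echo "host $uuid"
        xe host-param-list uuid="$uuid" | grep 'external-auth'
    done
}
```

If the hosts in a pool report different values, the pool configuration is inconsistent, which is the situation that enabling and disabling authentication on a per-pool basis is intended to avoid.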

Disabling external authentication

• Use XenCenter to disable Active Directory authentication, or the following xe command:

xe pool-disable-external-auth

User authentication

To allow a user access to your XenServer host, you must add a subject for that user or for a group that they

are in. Transitive group memberships are also checked. For example, if you add a subject for group A, where

group A contains group B and user 1 is a member of group B, user 1 is permitted access. If you wish to

manage user permissions in Active Directory, you could create a single group and then add users to it and

remove users from it. Alternatively, you can add and remove individual users from XenServer, or use a

combination of users and groups as appropriate for your authentication requirements. The subject list can

be managed from XenCenter or using the CLI as described below.

When authenticating a user, the credentials are first checked against the local root account, allowing you

to recover a system whose AD server has failed. If the credentials (that is, the user name and password) do

not match, an authentication request is made to the AD server. If this succeeds, the user's information is

retrieved and validated against the local subject list; otherwise, access is denied.

Validation against the subject list will succeed if the user or a group in the transitive group membership of

the user is in the subject list.
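
The order of checks described above can be modeled as follows. This is a simplified sketch of the documented decision order, not XenServer code. The function name and its arguments are hypothetical, and names are assumed not to contain spaces.

```shell
# Hypothetical model of the documented decision order.
#   $1  "yes" if the credentials match the local root account
#   $2  "yes" if the AD server accepts the credentials
#   $3  space-separated names: the user plus all transitive groups
#   $4  space-separated subject list registered on the pool
decide_access() {
    local_root_ok=$1
    ad_ok=$2
    memberships=$3
    subjects=$4
    # Step 1: the local root account is always checked first.
    if [ "$local_root_ok" = "yes" ]; then
        echo "granted: local root"
        return 0
    fi
    # Step 2: otherwise the AD server must accept the credentials.
    if [ "$ad_ok" != "yes" ]; then
        echo "denied: AD rejected credentials"
        return 1
    fi
    # Step 3: the user or one of their groups must be in the subject list.
    for m in $memberships; do
        for s in $subjects; do
            if [ "$m" = "$s" ]; then
                echo "granted: subject $s"
                return 0
            fi
        done
    done
    echo "denied: not in subject list"
    return 1
}
```

For example, decide_access no yes "user1 groupB groupA" "groupA" grants access through the groupA subject, which mirrors the transitive membership case described earlier in this section.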

Note:

When using Active Directory groups to grant access for Pool Administrator users who will require host SSH

access, the number of users in the Active Directory group must not exceed 500.

Allowing a user access to XenServer using the CLI

• To add an AD subject to XenServer:

xe subject-add subject-name=<entity name>

The entity name should be the name of the user or group to which you want to grant access. You

may optionally include the domain of the entity (for example, '<xendt\user1>' as opposed to '<user1>')

although the behavior will be the same unless disambiguation is required.
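
If several users or groups need access, the command can be repeated for each one. The following sketch loops over its arguments and runs xe subject-add for each. The function name add_subjects is a hypothetical helper introduced here.

```shell
# Hypothetical helper: add one subject per argument.
add_subjects() {
    for name in "$@"; do
        # Report a failure but continue with the remaining names.
        xe subject-add subject-name="$name" || echo "failed to add: $name" >&2
    done
}
```

For example, add_subjects 'xendt\user1' '<group name>' would add one user and one group. Quote each name so that the shell does not interpret the backslash.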

Removing access for a user using the CLI

1. Identify the subject identifier for the subject whose access you wish to revoke. This would be the user or the

group containing the user (removing a group would remove access to all users in that group, providing

they are not also specified in the subject list). You can do this using the subject list command:

xe subject-list

You may wish to apply a filter to the list. For example, to get the subject identifier for a user named user1

in the testad domain, you could use the following command:

xe subject-list other-config:subject-name='<domain\user>'

2. Remove the user using the subject-remove command, passing in the subject identifier you learned

in the previous step:

xe subject-remove subject-identifier=<subject identifier>

3. You may wish to terminate any current session this user has already authenticated. See Terminating all

authenticated sessions using xe and Terminating individual user sessions using xe for more information