
CloudPlatform (powered by Apache CloudStack) Version 4.2 Installation Guide

Revised October 27, 2013 11:15 pm Pacific

Citrix CloudPlatform


Author: Citrix CloudPlatform

© 2013 Citrix Systems, Inc. All rights reserved. Specifications are subject to change without

notice. Citrix Systems, Inc., the Citrix logo, Citrix XenServer, Citrix XenCenter, and CloudPlatform

are trademarks or registered trademarks of Citrix Systems, Inc. All other brands or products are

trademarks or registered trademarks of their respective holders.

Installation Guide for CloudPlatform.

1. Getting More Information and Help
1.1. Additional Documentation Available
1.2. Citrix Knowledge Center
1.3. Contacting Support

2. Concepts
2.1. What Is CloudPlatform?
2.2. What Can CloudPlatform Do?
2.3. Deployment Architecture Overview
2.3.1. Management Server Overview
2.3.2. Cloud Infrastructure Overview
2.3.3. Networking Overview

3. Cloud Infrastructure Concepts
3.1. About Regions
3.2. About Zones
3.3. About Pods
3.4. About Clusters
3.5. About Hosts
3.6. About Primary Storage
3.7. About Secondary Storage
3.8. About Physical Networks
3.8.1. Basic Zone Network Traffic Types
3.8.2. Basic Zone Guest IP Addresses
3.8.3. Advanced Zone Network Traffic Types
3.8.4. Advanced Zone Guest IP Addresses
3.8.5. Advanced Zone Public IP Addresses
3.8.6. System Reserved IP Addresses

4. Upgrade Instructions
4.1. Upgrade from 3.0.x to 4.2
4.2. Upgrade from 2.2.x to 4.2
4.3. Upgrade from 2.1.x to 4.2
4.4. Upgrading and Hotfixing XenServer Hypervisor Hosts
4.4.1. Upgrading to a New XenServer Version
4.4.2. Applying Hotfixes to a XenServer Cluster

5. Installation
5.1. Who Should Read This
5.2. Overview of Installation Steps
5.3. Minimum System Requirements
5.3.1. Management Server, Database, and Storage System Requirements
5.3.2. Host/Hypervisor System Requirements
5.3.3. Hypervisor Compatibility Matrix
5.4. Management Server Installation
5.4.1. Management Server Installation Overview
5.4.2. Prepare the Operating System
5.4.3. Install the Management Server on the First Host
5.4.4. Install and Configure the Database
5.4.5. About Password and Key Encryption
5.4.6. Changing the Default Password Encryption
5.4.7. Prepare NFS Shares
5.4.8. Prepare and Start Additional Management Servers
5.4.9. Management Server Load Balancing
5.4.10. Prepare the System VM Template
5.4.11. Installation Complete! Next Steps

5.5. Setting Configuration Parameters
5.5.1. About Configuration Parameters
5.5.2. Setting Global Configuration Parameters
5.5.3. Setting Local Configuration Parameters
5.5.4. Granular Global Configuration Parameters

6. User Interface
6.1. Supported Browsers
6.2. Log In to the UI
6.2.1. End User's UI Overview
6.2.2. Root Administrator's UI Overview
6.2.3. Logging In as the Root Administrator
6.2.4. Changing the Root Password
6.3. Using SSH Keys for Authentication
6.3.1. Creating an Instance from a Template that Supports SSH Keys
6.3.2. Creating the SSH Keypair
6.3.3. Creating an Instance
6.3.4. Logging In Using the SSH Keypair
6.3.5. Resetting SSH Keys

7. Steps to Provisioning Your Cloud Infrastructure
7.1. Overview of Provisioning Steps
7.2. Adding Regions (optional)
7.2.1. The First Region: The Default Region
7.2.2. Adding a Region
7.2.3. Adding Third and Subsequent Regions
7.2.4. Deleting a Region
7.3. Adding a Zone
7.3.1. Create a Secondary Storage Mount Point for the New Zone
7.3.2. Steps to Add a New Zone
7.4. Adding a Pod
7.5. Adding a Cluster
7.5.1. Add Cluster: KVM or XenServer
7.5.2. Add Cluster: OVM
7.5.3. Add Cluster: vSphere
7.6. Adding a Host
7.6.1. Adding a Host (XenServer, KVM, or OVM)
7.6.2. Adding a Host (vSphere)
7.7. Adding Primary Storage
7.8. Adding Secondary Storage
7.8.1. Adding an NFS Secondary Staging Store for Each Zone
7.9. Initialize and Test

8. Installing XenServer for CloudPlatform
8.1. System Requirements for XenServer Hosts
8.2. XenServer Installation Steps
8.3. Configure XenServer dom0 Memory
8.4. Username and Password
8.5. Time Synchronization
8.6. Licensing
8.6.1. Getting and Deploying a License
8.7. Install CloudPlatform XenServer Support Package (CSP)
8.8. Primary Storage Setup for XenServer
8.9. iSCSI Multipath Setup for XenServer (Optional)
8.10. Physical Networking Setup for XenServer

8.10.1. Configuring Public Network with a Dedicated NIC for XenServer (Optional)
8.10.2. Configuring Multiple Guest Networks for XenServer (Optional)
8.10.3. Separate Storage Network for XenServer (Optional)
8.10.4. NIC Bonding for XenServer (Optional)

9. Installing KVM for CloudPlatform
9.1. System Requirements for KVM Hypervisor Hosts
9.1.1. Supported Operating Systems for KVM Hosts
9.1.2. System Requirements for KVM Hosts
9.2. Install and configure the Agent
9.3. Installing the CloudPlatform Agent on a KVM Host
9.4. Physical Network Configuration for KVM
9.5. Time Synchronization for KVM Hosts
9.6. Primary Storage Setup for KVM (Optional)

10. Installing VMware for CloudPlatform
10.1. System Requirements for vSphere Hosts
10.1.1. Software requirements
10.1.2. Hardware requirements
10.1.3. vCenter Server requirements:
10.1.4. Other requirements:
10.2. Preparation Checklist for VMware
10.2.1. vCenter Checklist
10.2.2. Networking Checklist for VMware
10.3. vSphere Installation Steps
10.4. ESXi Host setup
10.5. Physical Host Networking
10.5.1. Configure Virtual Switch
10.5.2. Configure vCenter Management Network
10.5.3. Configure NIC Bonding for vSphere
10.6. Configuring a vSphere Cluster with Nexus 1000v Virtual Switch
10.6.1. About Cisco Nexus 1000v Distributed Virtual Switch
10.6.2. Prerequisites and Guidelines
10.6.3. Nexus 1000v Virtual Switch Preconfiguration
10.6.4. Enabling Nexus Virtual Switch in CloudPlatform
10.6.5. Configuring Nexus 1000v Virtual Switch in CloudPlatform
10.6.6. Removing Nexus Virtual Switch
10.6.7. Configuring a VMware Datacenter with VMware Distributed Virtual Switch
10.7. Storage Preparation for vSphere (iSCSI only)
10.7.1. Enable iSCSI initiator for ESXi hosts
10.7.2. Add iSCSI target
10.7.3. Create an iSCSI datastore
10.7.4. Multipathing for vSphere (Optional)
10.8. Add Hosts or Configure Clusters (vSphere)

11. Bare Metal Installation
11.1. Bare Metal Host System Requirements
11.2. About Bare Metal Kickstart Installation
11.2.1. Limitations of Kickstart Baremetal Installation
11.3. Provisioning a Bare Metal Host with Kickstart
11.3.1. Download the Software
11.3.2. Set Up IPMI
11.3.3. Enable PXE on the Bare Metal Host
11.3.4. Install the PXE and DHCP Servers
11.3.5. Set Up a File Server

11.3.6. Create a Bare Metal Image
11.3.7. Create a Bare Metal Compute Offering
11.3.8. Create a Bare Metal Network Offering
11.3.9. Set Up the Security Group Agent (Optional)
11.3.10. (Optional) Set Bare Metal Configuration Parameters
11.3.11. Add a Bare Metal Zone
11.3.12. Add a Bare Metal Cluster
11.3.13. Add a Bare Metal Host
11.3.14. Add the PXE Server and DHCP Server to Your Deployment
11.3.15. Create a Bare Metal Template
11.3.16. Provision a Bare Metal Instance
11.3.17. Test Bare Metal Installation
11.3.18. Example CentOS 6.x Kickstart File
11.3.19. Example Fedora 17 Kickstart File
11.3.20. Example Ubuntu 12.04 Kickstart File
11.4. Using Cisco UCS as Bare Metal Host CloudPlatform
11.4.1. Registering a UCS Manager
11.4.2. Associating a Profile with a UCS Blade
11.4.3. Disassociating a Profile from a UCS Blade

12. Installing Oracle VM (OVM) for CloudPlatform
12.1. System Requirements for OVM Hosts
12.2. OVM Installation Overview
12.3. Installing OVM on the Host(s)
12.4. Primary Storage Setup for OVM
12.5. Set Up Host(s) for System VMs

13. Choosing a Deployment Architecture
13.1. Small-Scale Deployment
13.2. Large-Scale Redundant Setup
13.3. Separate Storage Network
13.4. Multi-Node Management Server
13.5. Multi-Site Deployment

14. Network Setup
14.1. Basic and Advanced Networking
14.2. VLAN Allocation Example
14.3. Example Hardware Configuration
14.3.1. Dell 62xx
14.3.2. Cisco 3750
14.4. Layer-2 Switch
14.4.1. Dell 62xx
14.4.2. Cisco 3750
14.5. Hardware Firewall
14.5.1. Generic Firewall Provisions
14.5.2. External Guest Firewall Integration for Juniper SRX (Optional)
14.5.3. External Guest Firewall Integration for Cisco VNMC (Optional)
14.6. External Guest Load Balancer Integration (Optional)
14.7. Topology Requirements
14.7.1. Security Requirements
14.7.2. Runtime Internal Communications Requirements
14.7.3. Storage Network Topology Requirements
14.7.4. External Firewall Topology Requirements
14.7.5. Advanced Zone Topology Requirements
14.7.6. XenServer Topology Requirements

14.7.7. VMware Topology Requirements
14.7.8. KVM Topology Requirements
14.8. Guest Network Usage Integration for Traffic Sentinel
14.9. Setting Zone VLAN and Running VM Maximums

15. Amazon Web Service Interface
15.1. Amazon Web Services EC2 Compatible Interface
15.2. System Requirements
15.3. Enabling the AWS API Compatible Interface
15.4. AWS API User Setup Steps (SOAP Only)
15.4.1. AWS API User Registration
15.4.2. AWS API Command-Line Tools Setup
15.5. Supported AWS API Calls

16. Additional Installation Options
16.1. Installing the Usage Server (Optional)
16.1.1. Requirements for Installing the Usage Server
16.1.2. Steps to Install the Usage Server
16.2. SSL (Optional)
16.3. Database Replication (Optional)
16.3.1. Failover

Chapter 1. Getting More Information and Help

1.1. Additional Documentation Available

The following guides are available:

• Installation Guide — Covers initial installation of CloudPlatform. It aims to cover in full detail all the

steps and requirements to obtain a functioning cloud deployment.

At times, this guide mentions additional topics in the context of installation tasks, but does not

give full details on every topic. Additional details on many of these topics can be found in the

CloudPlatform Administration Guide. For example, security groups, firewall and load balancing

rules, IP address allocation, and virtual routers are covered in more detail in the Administration

Guide.

• Administration Guide — Discusses how to set up services for the end users of your cloud. Also

covers ongoing runtime management and maintenance. This guide discusses topics like domains,

accounts, service offerings, projects, guest networks, administrator alerts, virtual machines, storage,

and measuring resource usage.

• Developer's Guide — How to use the API to interact with CloudPlatform programmatically.

1.2. Citrix Knowledge Center

Troubleshooting articles by the Citrix support team are available in the Citrix Knowledge Center, at http://support.citrix.com/product/cs/.

1.3. Contacting Support

The support team is available to help customers plan and execute their installations. To contact the support team, log in to the support portal at http://support.citrix.com/cloudsupport by using the account credentials you received when you purchased your support contract.

Chapter 2. Concepts

2.1. What Is CloudPlatform?

CloudPlatform is a software platform that pools computing resources to build public, private, and

hybrid Infrastructure as a Service (IaaS) clouds. CloudPlatform manages the network, storage, and

compute nodes that make up a cloud infrastructure. Use CloudPlatform to deploy, manage, and

configure cloud computing environments.

Typical users are service providers and enterprises. With CloudPlatform, you can:

• Set up an on-demand, elastic cloud computing service. Service providers can sell self-service virtual machine instances, storage volumes, and networking configurations over the Internet.

• Set up an on-premises private cloud for use by employees. Rather than managing virtual machines in the same way as physical machines, with CloudPlatform an enterprise can offer self-service virtual machines to users without involving IT departments.

2.2. What Can CloudPlatform Do?

Multiple Hypervisor Support

CloudPlatform works with a variety of hypervisors. A single cloud deployment can contain multiple hypervisor implementations. You have complete freedom to choose the right hypervisor for your workload.

CloudPlatform is designed to work with open source Xen and KVM hypervisors as well as enterprise-grade hypervisors such as Citrix XenServer, VMware vSphere, and Oracle VM (OVM).


Massively Scalable Infrastructure Management

CloudPlatform can manage tens of thousands of servers installed in multiple geographically distributed datacenters. The centralized management server scales linearly, eliminating the need for intermediate cluster-level management servers. No single component failure can cause a cloud-wide outage. Periodic maintenance of the management server can be performed without affecting the functioning of virtual machines running in the cloud.

Automatic Configuration Management

CloudPlatform automatically configures each guest virtual machine’s networking and storage settings.

CloudPlatform internally manages a pool of virtual appliances to support the cloud itself. These

appliances offer services such as firewalling, routing, DHCP, VPN access, console proxy, storage

access, and storage replication. The extensive use of virtual appliances simplifies the installation,

configuration, and ongoing management of a cloud deployment.

Graphical User Interface

CloudPlatform offers an administrator's Web interface, used for provisioning and managing the cloud,

as well as an end-user's Web interface, used for running VMs and managing VM templates. The UI

can be customized to reflect the desired service provider or enterprise look and feel.

API and Extensibility

CloudPlatform provides an API that gives programmatic access to all the management features

available in the UI. This API enables the creation of command line tools and new user interfaces to

suit particular needs.
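As a rough illustration of what building a command line tool on the API could involve, the sketch below constructs a signed request URL. Everything specific in it is an assumption that is not stated in this section: the endpoint path, the `listZones` command name, the `apiKey` parameter, and the signing scheme (HMAC-SHA1 over the sorted, lower-cased query string, then base64). The key values are placeholders. Check the Developer's Guide for the authoritative procedure before relying on any of it.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def _encode(params: dict[str, str]) -> list[tuple[str, str]]:
    """URL-encode every parameter value, leaving names untouched."""
    return [(name, quote(str(value), safe="")) for name, value in params.items()]


def sign_request(params: dict[str, str], secret_key: str) -> str:
    """Return a base64 HMAC-SHA1 signature for the given parameters.

    Assumed scheme: sort pairs by lower-cased name, join them into a
    query string, lower-case the whole string, then sign it.
    """
    pairs = sorted(_encode(params), key=lambda pair: pair[0].lower())
    canonical = "&".join(f"{name}={value}" for name, value in pairs).lower()
    digest = hmac.new(
        secret_key.encode("utf-8"), canonical.encode("utf-8"), hashlib.sha1
    ).digest()
    return base64.b64encode(digest).decode("ascii")


def build_url(endpoint: str, params: dict[str, str], secret_key: str) -> str:
    """Append the parameters and their signature to the endpoint."""
    query = "&".join(f"{name}={value}" for name, value in _encode(params))
    signature = quote(sign_request(params, secret_key), safe="")
    return f"{endpoint}?{query}&signature={signature}"


if __name__ == "__main__":
    # Hypothetical host, command, and credentials, for illustration only.
    example = {"command": "listZones", "response": "json", "apiKey": "EXAMPLE-KEY"}
    print(build_url("http://mgmt.example.com:8080/client/api", example, "EXAMPLE-SECRET"))
```

Keeping the signing step in its own function means a custom tool or alternative user interface can reuse it for any command without knowing how the URL is assembled.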

The CloudPlatform pluggable allocation architecture allows the creation of new types of allocators for

the selection of storage and hosts.
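To make the idea of a pluggable allocator more concrete, here is a minimal conceptual sketch of interchangeable host-selection strategies. It is not the CloudPlatform plugin interface: the `Host`, `VmRequest`, `HostAllocator`, `FirstFitAllocator`, and `MostFreeRamAllocator` names and their fields are all hypothetical, chosen only to show how a new selection policy can be swapped in without changing the caller.

```python
from dataclasses import dataclass
from typing import Optional, Protocol, Sequence


@dataclass(frozen=True)
class Host:
    """Hypothetical view of a host's spare capacity."""
    name: str
    free_cpu_mhz: int
    free_ram_mb: int


@dataclass(frozen=True)
class VmRequest:
    """Hypothetical resource request for a new guest VM."""
    cpu_mhz: int
    ram_mb: int


def fits(host: Host, request: VmRequest) -> bool:
    """True when the host has enough spare CPU and RAM for the request."""
    return host.free_cpu_mhz >= request.cpu_mhz and host.free_ram_mb >= request.ram_mb


class HostAllocator(Protocol):
    """The pluggable contract: pick a host for a request, or None."""
    def allocate(self, request: VmRequest, hosts: Sequence[Host]) -> Optional[Host]: ...


class FirstFitAllocator:
    """Return the first host in the list that can hold the VM."""
    def allocate(self, request: VmRequest, hosts: Sequence[Host]) -> Optional[Host]:
        return next((host for host in hosts if fits(host, request)), None)


class MostFreeRamAllocator:
    """Return the fitting host with the most free RAM."""
    def allocate(self, request: VmRequest, hosts: Sequence[Host]) -> Optional[Host]:
        candidates = [host for host in hosts if fits(host, request)]
        return max(candidates, key=lambda host: host.free_ram_mb, default=None)


def place_vm(allocator: HostAllocator, request: VmRequest,
             hosts: Sequence[Host]) -> Optional[Host]:
    """The caller depends only on the contract, not on a specific policy."""
    return allocator.allocate(request, hosts)
```

Because `place_vm` accepts any object with a matching `allocate` method, adding a new policy means writing one more class; a storage allocator could follow the same pattern with a different resource type.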

High Availability

CloudPlatform has a number of features to increase the availability of the system. The Management

Server itself, which is the main controlling software at the heart of CloudPlatform, may be deployed

in a multi-node installation where the servers are load balanced. MySQL may be configured to use

replication to provide for a manual failover in the event of database loss. For the hosts, CloudPlatform

supports NIC bonding and the use of separate networks for storage as well as iSCSI Multipath.

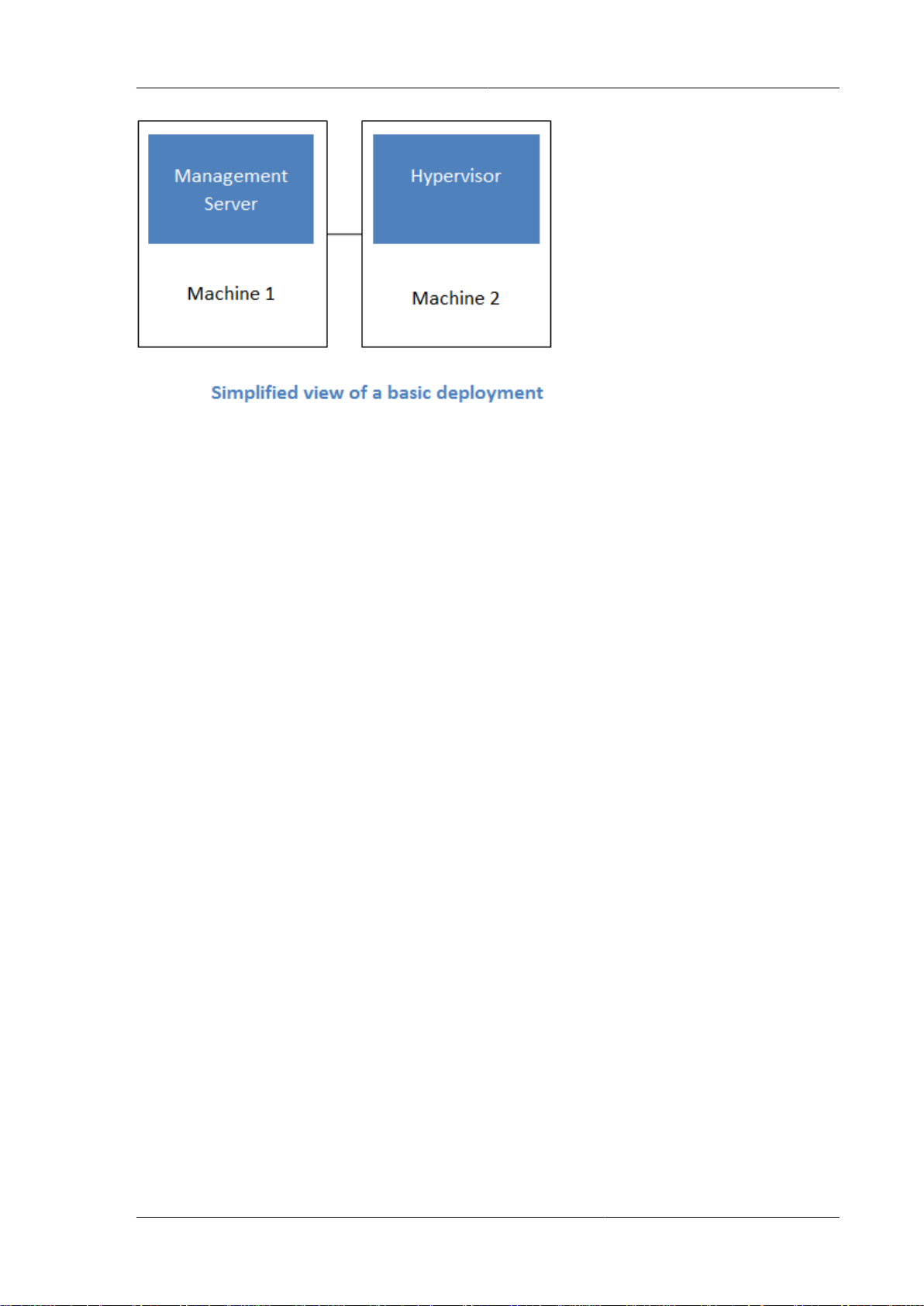

2.3. Deployment Architecture Overview

A CloudPlatform installation consists of two parts: the Management Server and the cloud infrastructure

that it manages. When you set up and manage a CloudPlatform cloud, you provision resources such

as hosts, storage devices, and IP addresses into the Management Server, and the Management

Server manages those resources.

The minimum production installation consists of one machine running the CloudPlatform Management

Server and another machine to act as the cloud infrastructure (in this case, a very simple infrastructure

consisting of one host running hypervisor software). In a trial installation, a single machine can act as

both the Management Server and the hypervisor host (using the KVM hypervisor).

A more full-featured installation consists of a highly-available multi-node Management Server

installation and up to thousands of hosts using any of several advanced networking setups. For

information about deployment options, see Chapter 13, Choosing a Deployment Architecture.

2.3.1. Management Server Overview

The Management Server is the CloudPlatform software that manages cloud resources. By interacting

with the Management Server through its UI or API, you can configure and manage your cloud

infrastructure.

The Management Server runs on a dedicated server or VM. It controls allocation of virtual machines

to hosts and assigns storage and IP addresses to the virtual machine instances. The Management

Server runs in a Tomcat container and uses a MySQL database for persistence.

The machine where the Management Server runs must meet the system requirements described in

Section 5.3, “Minimum System Requirements”.

The Management Server:

• Provides the web user interface for the administrator and a reference user interface for end users.

• Provides the APIs for CloudPlatform.

• Manages the assignment of guest VMs to particular hosts.

• Manages the assignment of public and private IP addresses to particular accounts.

• Manages the allocation of storage to guests as virtual disks.

• Manages snapshots, templates, and ISO images, possibly replicating them across data centers.

• Provides a single point of configuration for the cloud.

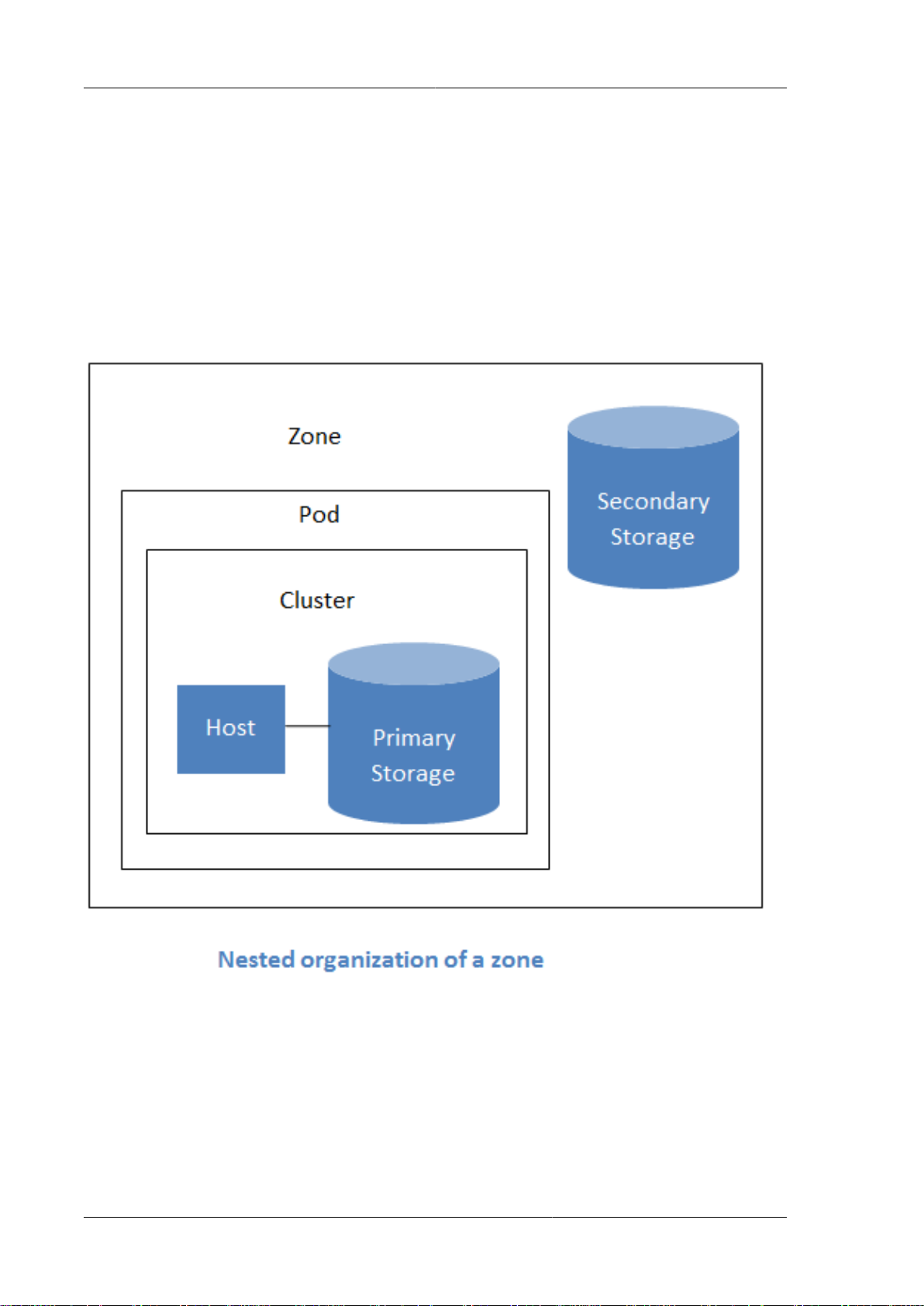

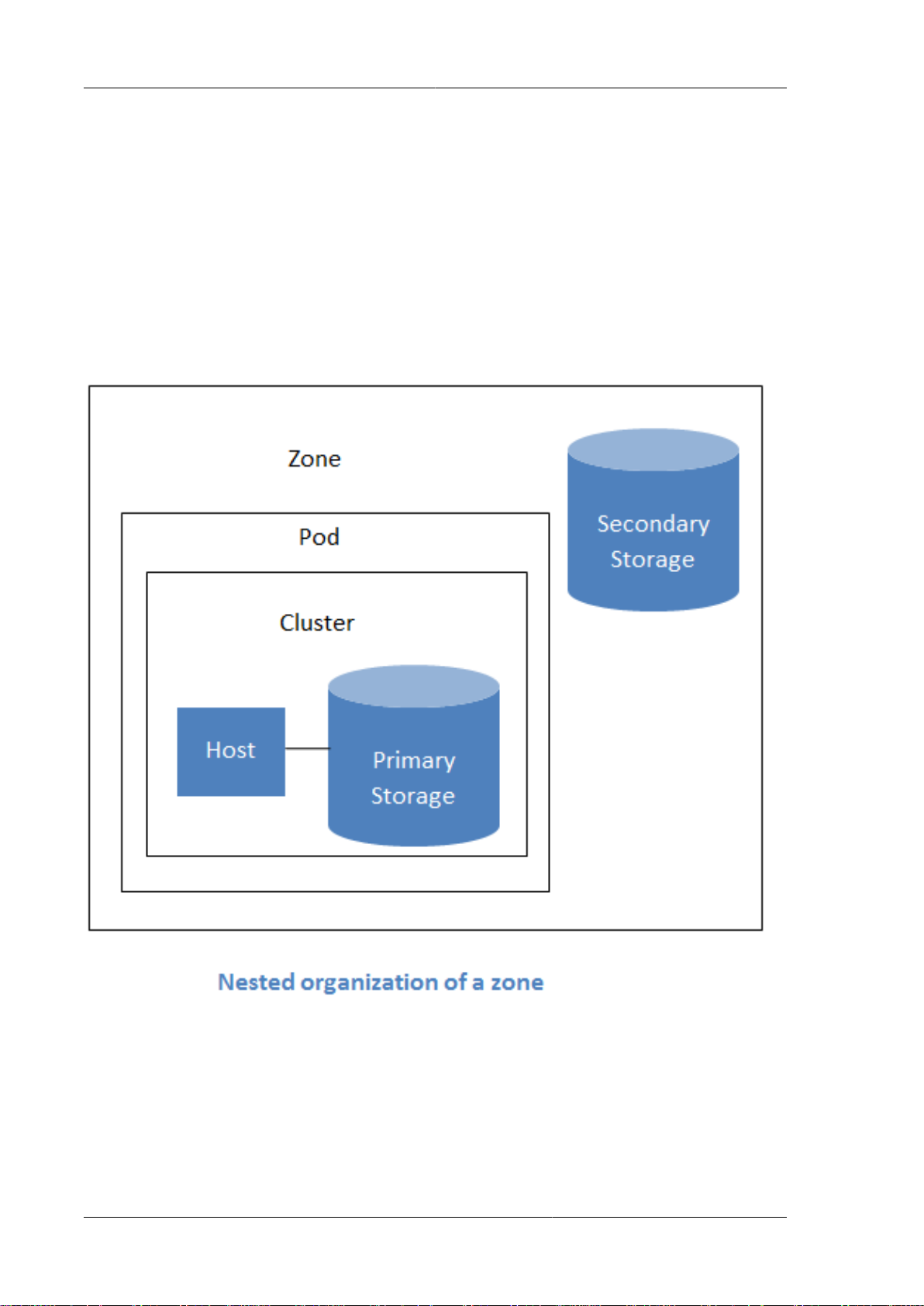

2.3.2. Cloud Infrastructure Overview

The Management Server manages one or more zones (typically, datacenters) containing host

computers where guest virtual machines will run. The cloud infrastructure is organized as follows:

• Region: To increase reliability of the cloud, you can optionally group resources into multiple

geographic regions. A region consists of one or more zones.

• Zone: Typically, a zone is equivalent to a single datacenter. A zone consists of one or more pods

and secondary storage.

• Pod: A pod is usually one rack of hardware that includes a layer-2 switch and one or more clusters.

• Cluster: A cluster consists of one or more hosts and primary storage.

• Host: A single compute node within a cluster. The hosts are where the actual cloud services run in

the form of guest virtual machines.

• Primary storage is associated with a cluster, and it can also be provisioned on a zone-wide basis. It

stores the disk volumes for all the VMs running on hosts in that cluster.

• Secondary storage is associated with a zone, and it can also be provisioned as object storage that

is available throughout the cloud. It stores templates, ISO images, and disk volume snapshots.
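The containment hierarchy described in the list above can be sketched as nested data structures. This is only an illustrative model, not how CloudPlatform represents these objects internally. The class names, field names, and sample resource names are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Host:
    name: str


@dataclass
class Cluster:
    name: str
    hosts: List[Host] = field(default_factory=list)
    primary_storage: List[str] = field(default_factory=list)  # cluster-scoped


@dataclass
class Pod:
    name: str
    clusters: List[Cluster] = field(default_factory=list)


@dataclass
class Zone:
    name: str
    pods: List[Pod] = field(default_factory=list)
    secondary_storage: List[str] = field(default_factory=list)  # zone-scoped


@dataclass
class Region:
    name: str
    zones: List[Zone] = field(default_factory=list)


def count_hosts(region: Region) -> int:
    """Walk region -> zone -> pod -> cluster and count the hosts."""
    return sum(
        len(cluster.hosts)
        for zone in region.zones
        for pod in zone.pods
        for cluster in pod.clusters
    )


# Hypothetical sample: one region, one zone, one pod, one cluster, two hosts.
cluster = Cluster("cluster-1", [Host("host-1"), Host("host-2")], ["primary-nfs-1"])
zone = Zone("zone-1", [Pod("pod-1", [cluster])], ["secondary-nfs-1"])
region = Region("region-1", [zone])
print(count_hosts(region))
```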

More Information

For more information, see Chapter 3, Cloud Infrastructure Concepts.

2.3.3. Networking Overview

CloudPlatform offers two types of networking scenarios:

• Basic. Provides a single network where guest isolation can be provided through layer-3 means such

as security groups (IP address source filtering).

• Advanced. For more sophisticated network topologies. This network model provides the most

flexibility in defining guest networks and providing guest isolation.

For more details, see Chapter 14, Network Setup.

Chapter 3. Cloud Infrastructure Concepts

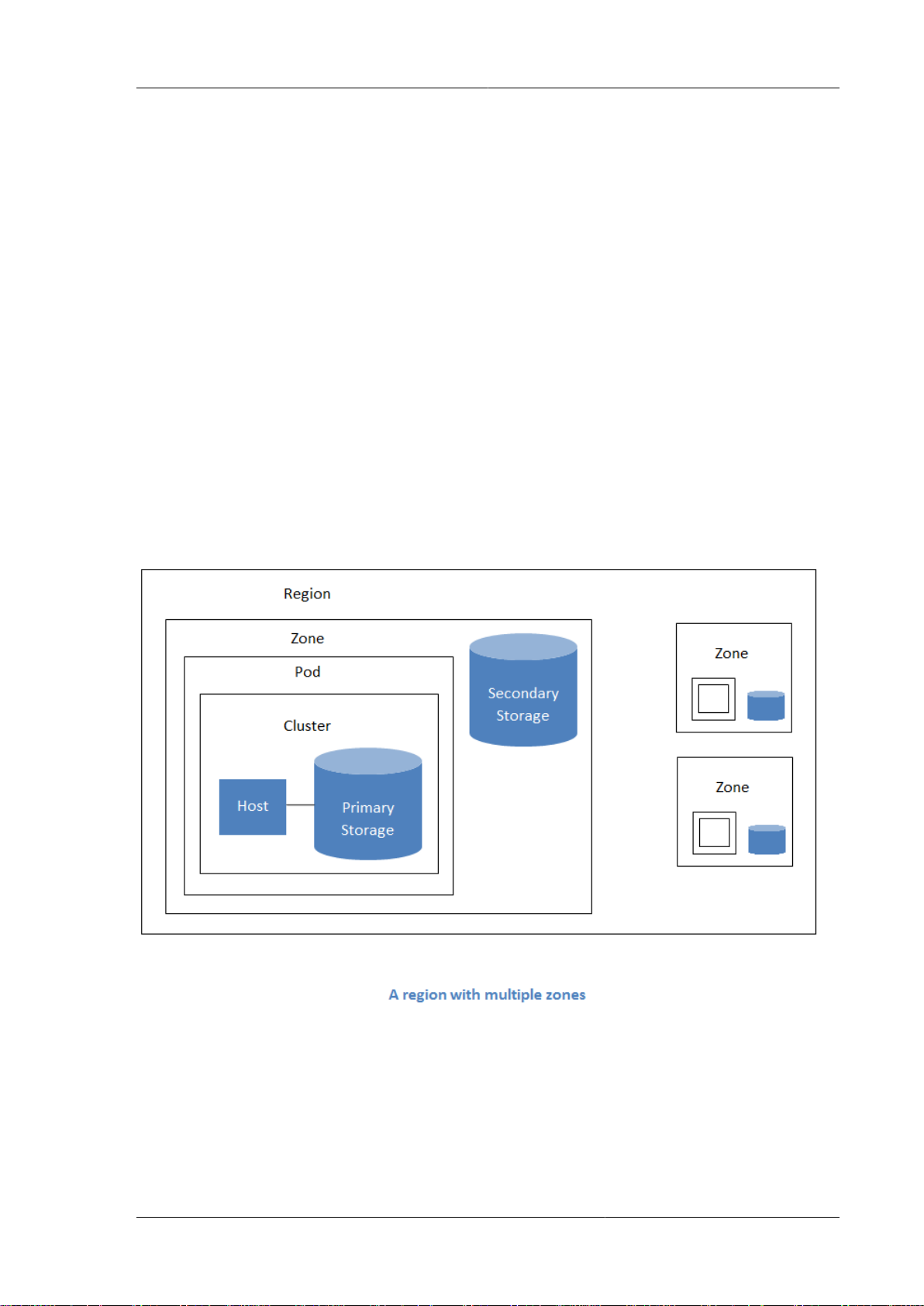

3.1. About Regions

To increase reliability of the cloud, you can optionally group resources into multiple geographic

regions. A region is the largest available organizational unit within a CloudPlatform deployment. A

region is made up of several availability zones, where each zone is equivalent to a datacenter. Each

region is controlled by its own cluster of Management Servers, running in one of the zones. The zones

in a region are typically located in close geographical proximity. Regions are a useful technique for

providing fault tolerance and disaster recovery.

By grouping zones into regions, the cloud can achieve higher availability and scalability. User

accounts can span regions, so that users can deploy VMs in multiple, widely-dispersed regions.

Even if one of the regions becomes unavailable, the services are still available to the end-user

through VMs deployed in another region. And by grouping communities of zones under their own

nearby Management Servers, the latency of communications within the cloud is reduced compared to

managing widely-dispersed zones from a single central Management Server.

Usage records can also be consolidated and tracked at the region level, creating reports or invoices

for each geographic region.

Regions are visible to the end user. When a user starts a guest VM on a particular CloudPlatform

Management Server, the user is implicitly selecting that region for their guest. Users might also be

required to copy their private templates to additional regions to enable creation of guest VMs using

their templates in those regions.

3.2. About Zones

A zone is the second largest organizational unit within a CloudPlatform deployment. A zone typically

corresponds to a single datacenter, although it is permissible to have multiple zones in a datacenter.

The benefit of organizing infrastructure into zones is to provide physical isolation and redundancy. For

example, each zone can have its own power supply and network uplink, and the zones can be widely

separated geographically (though this is not required).

A zone consists of:

• One or more pods. Each pod contains one or more clusters of hosts and one or more primary

storage servers.

• (Optional) If zone-wide primary storage is desired, a zone may contain one or more primary storage

servers, which are shared by all the pods in the zone. (Supported for KVM and VMware hosts)

• Secondary storage, which is shared by all the pods in the zone.

Zones are visible to the end user. When a user starts a guest VM, the user must select a zone for

their guest. Users might also be required to copy their private templates to additional zones to enable

creation of guest VMs using their templates in those zones.

Zones can be public or private. Public zones are visible to all users. This means that any user may

create a guest in that zone. Private zones are reserved for a specific domain. Only users in that

domain or its subdomains may create guests in that zone.
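The public and private zone rule can be expressed as a small check. The sketch below is a simplified model that assumes domains are written as slash-separated paths such as ROOT/acme/dev. The function name and the path notation are assumptions made for illustration.

```python
from typing import Optional


def can_create_guest(user_domain: str, zone_domain: Optional[str]) -> bool:
    """Return True if a user in user_domain may create guests in the zone.

    zone_domain is None for a public zone. For a private zone it is the
    domain path that the zone is reserved for.
    """
    if zone_domain is None:
        # Public zones are open to every user.
        return True
    # Private zones admit the reserved domain and any of its subdomains.
    return user_domain == zone_domain or user_domain.startswith(zone_domain + "/")


print(can_create_guest("ROOT/acme/dev", "ROOT/acme"))
print(can_create_guest("ROOT/other", "ROOT/acme"))
```

Appending the separator before the prefix test keeps a sibling domain such as ROOT/acme2 from matching a zone reserved for ROOT/acme.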

Hosts in the same zone are directly accessible to each other without having to go through a firewall.

Hosts in different zones can access each other through statically configured VPN tunnels.

For each zone, the administrator must decide the following.

• How many pods to place in a zone.

• How many clusters to place in each pod.

• How many hosts to place in each cluster.

• (Optional) If zone-wide primary storage is being used, decide how many primary storage servers to

place in each zone and total capacity for these storage servers. (Supported for KVM and VMware

hosts)

• How many primary storage servers to place in each cluster and total capacity for these storage

servers.

• How much secondary storage to deploy in a zone.

When you add a new zone, you will be prompted to configure the zone’s physical network and add the

first pod, cluster, host, primary storage, and secondary storage.

(VMware) In order to support zone-wide functions for VMware, CloudPlatform is aware of VMware

Datacenters and can map each Datacenter to a CloudPlatform zone. To enable features like storage

live migration and zone-wide primary storage for VMware hosts, CloudPlatform has to make sure

that a zone contains only a single VMware Datacenter. Therefore, when you are creating a new

CloudPlatform zone, you can select a VMware Datacenter for the zone. If you are provisioning multiple

VMware Datacenters, each one will be set up as a single zone in CloudPlatform.

Note

If you are upgrading from a previous CloudPlatform version, and your existing deployment

contains a zone with clusters from multiple VMware Datacenters, that zone will not be forcibly

migrated to the new model. It will continue to function as before. However, any new zone-wide

operations introduced in CloudPlatform 4.2, such as zone-wide primary storage and live storage

migration, will not be available in that zone.

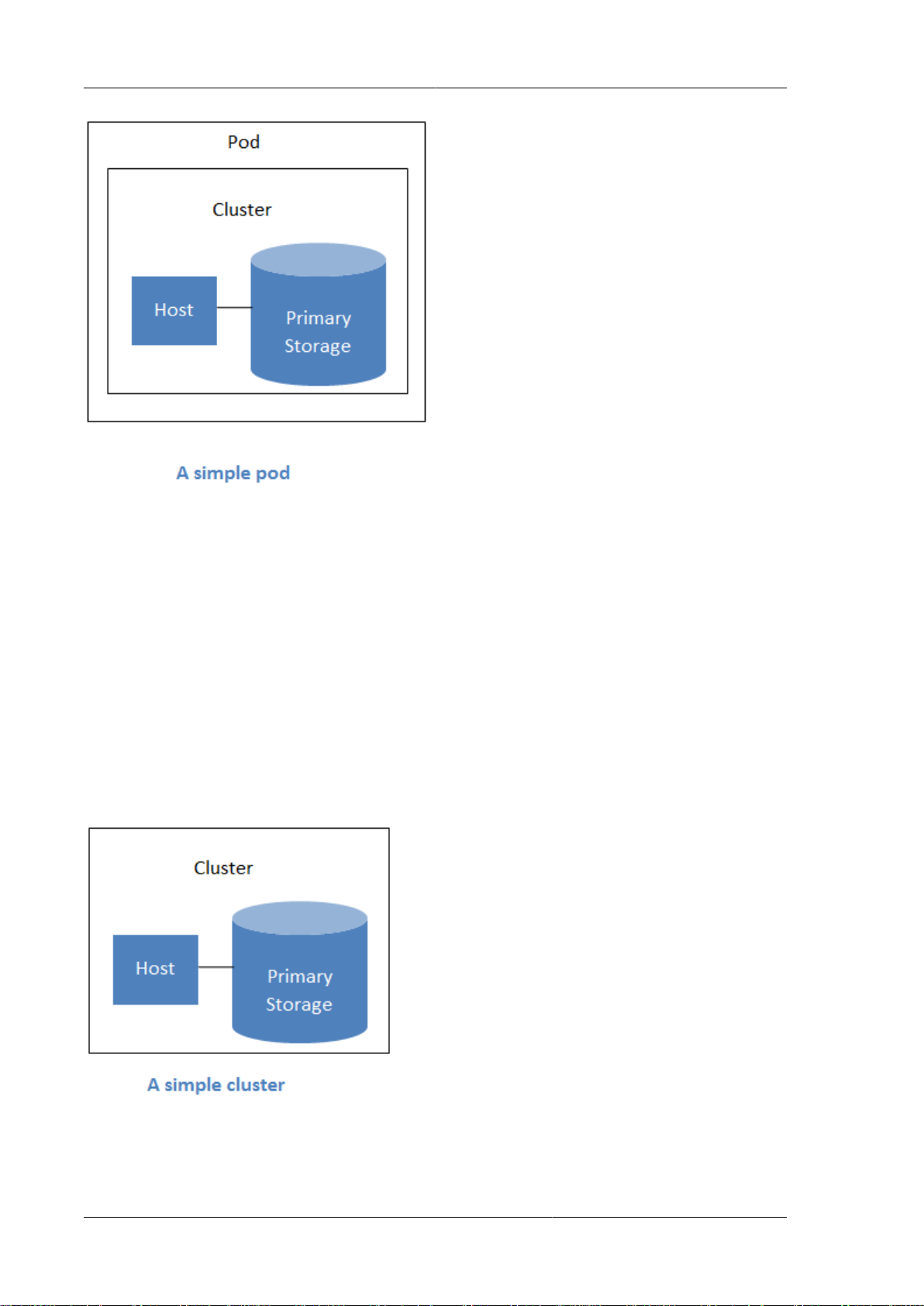

3.3. About Pods

A pod often represents a single rack. Hosts in the same pod are in the same subnet. A pod is the

third-largest organizational unit within a CloudPlatform deployment. Pods are contained within zones,

and zones can be contained within regions. Each zone can contain one or more pods. A pod consists

of one or more clusters of hosts and one or more primary storage servers. Pods are not visible to the

end user.

3.4. About Clusters

A cluster provides a way to group hosts. To be precise, a cluster is a XenServer server pool, a set

of KVM servers, a set of OVM hosts, or a VMware cluster preconfigured in vCenter. The hosts in a

cluster all have identical hardware, run the same hypervisor, are on the same subnet, and access the

same shared primary storage. Virtual machine instances (VMs) can be live-migrated from one host to

another within the same cluster without interrupting service to the user.

A cluster is the fourth-largest organizational unit within a CloudPlatform deployment. Clusters

are contained within pods, pods are contained within zones, and zones can be contained within

regions. The size of a cluster is limited only by the underlying hypervisor, although we recommend

staying below the theoretical maximum cluster size in most cases.

A cluster consists of one or more hosts and one or more primary storage servers.

Even when local storage is used, clusters are still required. In this case, there is just one host per

cluster.

(VMware) If you use VMware hypervisor hosts in your CloudPlatform deployment, each VMware

cluster is managed by a vCenter server. The CloudPlatform administrator must register the vCenter

server with CloudPlatform. There may be multiple vCenter servers per zone. Each vCenter server may

manage multiple VMware clusters.

3.5. About Hosts

A host is a single computer. Hosts provide the computing resources that run guest virtual machines.

Each host has hypervisor software installed on it to manage the guest VMs. For example, a host can

be a Citrix XenServer server, a Linux KVM-enabled server, or an ESXi server.

The host is the smallest organizational unit within a CloudPlatform deployment. Hosts are contained

within clusters, clusters are contained within pods, pods are contained within zones, and zones can be

contained within regions.

Hosts in a CloudPlatform deployment:

• Provide the CPU, memory, storage, and networking resources needed to host the virtual machines

• Interconnect using a high bandwidth TCP/IP network and connect to the Internet

• May reside in multiple data centers across different geographic locations

• May have different capacities (different CPU speeds, different amounts of RAM, etc.), although the

hosts within a cluster must all be homogeneous

Additional hosts can be added at any time to provide more capacity for guest VMs.

CloudPlatform automatically detects the amount of CPU and memory resources provided by the hosts.

Hosts are not visible to the end user. An end user cannot determine which host their guest has been

assigned to.

For a host to function in CloudPlatform, you must do the following:

• Install hypervisor software on the host

• Assign an IP address to the host

• Ensure the host is connected to the CloudPlatform Management Server.

3.6. About Primary Storage

Primary storage is associated with a cluster or (in KVM and VMware) a zone, and it stores the disk

volumes for all the VMs running on hosts.

You can add multiple primary storage servers to a cluster or zone. At least one is required. It is

typically located close to the hosts for increased performance. CloudPlatform manages the allocation

of guest virtual disks to particular primary storage devices.

It is useful to set up zone-wide primary storage when you want to avoid extra data copy operations.

With cluster-based primary storage, data in the primary storage is directly available only to VMs

within that cluster. If a VM in a different cluster needs some of the data, it must be copied from one

cluster to another, using the zone's secondary storage as an intermediate step. This operation can be

unnecessarily time-consuming.

CloudPlatform is designed to work with all standards-compliant iSCSI and NFS servers that are

supported by the underlying hypervisor, including, for example:

• Dell EqualLogic™ for iSCSI

• NetApp filers for NFS and iSCSI

• Scale Computing for NFS

If you intend to use only local disk for your installation, you can skip adding separate primary storage.

3.7. About Secondary Storage

Secondary storage stores the following:

• Templates — OS images that can be used to boot VMs and can include additional configuration

information, such as installed applications

• ISO images — disc images containing data or bootable media for operating systems

• Disk volume snapshots — saved copies of VM data which can be used for data recovery or to

create new templates

The items in secondary storage are available to all hosts in the scope of the secondary storage, which

may be defined as per zone or per region.

To make items in secondary storage available to all hosts throughout the cloud, you can add object

storage in addition to the zone-based NFS Secondary Staging Store. It is not necessary to copy

templates and snapshots from one zone to another, as would be required when using zone NFS

alone. Everything is available everywhere.

Object storage is provided through third-party software such as Amazon Simple Storage Service (S3)

or any other object storage that supports the S3 interface. Additional third-party object stores can be

integrated with CloudPlatform by writing plugin software that uses the object storage plugin capability.

CloudPlatform provides plugins that have already been written using this storage plugin

capability. The provided plugins are for OpenStack Object Storage (Swift, http://swift.openstack.org)

and Amazon Simple Storage Service (S3) object storage. The S3 plugin can be used for any object

storage that supports the Amazon S3 interface. When using one of these storage plugins, you

configure Swift or S3 storage for the entire CloudPlatform, then set up the NFS Secondary Staging

Store for each zone. The NFS storage in each zone acts as a staging area through which all templates

and other secondary storage data pass before being forwarded to Swift or S3. The backing object

storage acts as a cloud-wide resource, making templates and other data available to any zone in the

cloud.

There is no hierarchy in the Swift storage, just one Swift container per storage object. Any secondary

storage in the whole cloud can pull a container from Swift as needed.

3.8. About Physical Networks

Part of adding a zone is setting up the physical network. One or (in an advanced zone) more physical

networks can be associated with each zone. The network corresponds to a NIC on the hypervisor

host. Each physical network can carry one or more types of network traffic. The choices of traffic

type for each network vary depending on whether you are creating a zone with basic networking or

advanced networking.

A physical network is the actual network hardware and wiring in a zone. A zone can have multiple

physical networks. An administrator can:

• Add/Remove/Update physical networks in a zone

• Configure VLANs on the physical network

• Configure a name so the network can be recognized by hypervisors

• Configure the service providers (firewalls, load balancers, etc.) available on a physical network

• Configure the IP addresses trunked to a physical network

• Specify what type of traffic is carried on the physical network, as well as other properties like

network speed

3.8.1. Basic Zone Network Traffic Types

When basic networking is used, there can be only one physical network in the zone. That physical

network carries the following traffic types:

• Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with

each other over a network that can be referred to as the guest network. Each pod in a basic zone

is a broadcast domain, and therefore each pod has a different IP range for the guest network. The

administrator must configure the IP range for each pod.

• Management. When CloudPlatform’s internal resources communicate with each other, they

generate management traffic. This includes communication between hosts, system VMs (VMs

used by CloudPlatform to perform various tasks in the cloud), and any other component that

communicates directly with the CloudPlatform Management Server. You must configure the IP

range for the system VMs to use.

Note

We strongly recommend the use of separate NICs for management traffic and guest traffic.

• Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs

must be allocated for this purpose. End users can use the CloudPlatform UI to acquire these IPs

to implement NAT between their guest network and the public network, as described in Acquiring

a New IP Address. Public traffic is generated only in EIP-enabled basic zones. For information on

Elastic IP, see About Elastic IP in the Administration Guide.

• Storage. Traffic such as VM templates and snapshots, which is sent between the secondary storage

VM and secondary storage servers. CloudPlatform uses a separate Network Interface Controller

(NIC) named storage NIC for storage network traffic. Use of a storage NIC that always operates on

a high bandwidth network allows fast template and snapshot copying. You must configure the IP

range to use for the storage network.

In a basic network, configuring the physical network is fairly straightforward. In most cases, you only

need to configure one guest network to carry traffic that is generated by guest VMs. If you use a

NetScaler load balancer and enable its elastic IP and elastic load balancing (EIP and ELB) features,

you must also configure a network to carry public traffic. CloudPlatform takes care of presenting the

necessary network configuration steps to you in the UI when you add a new zone.

3.8.2. Basic Zone Guest IP Addresses

When basic networking is used, CloudPlatform will assign IP addresses in the CIDR of the pod to the

guests in that pod. The administrator must add a direct IP range on the pod for this purpose. These

IPs are in the same VLAN as the hosts.
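Because guest addresses in a basic zone come from the pod's CIDR, it can be useful to check a planned guest IP range against that CIDR before adding it. The following is a minimal sketch using Python's standard ipaddress module. The function name and the sample addresses are assumptions for illustration.

```python
import ipaddress


def range_within_cidr(start: str, end: str, cidr: str) -> bool:
    """Return True if the inclusive range start..end lies inside cidr."""
    network = ipaddress.ip_network(cidr)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    return first <= last and first in network and last in network


# Hypothetical pod CIDR and guest ranges.
print(range_within_cidr("192.168.10.100", "192.168.10.200", "192.168.10.0/24"))
print(range_within_cidr("192.168.11.1", "192.168.11.50", "192.168.10.0/24"))
```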

3.8.3. Advanced Zone Network Traffic Types

When advanced networking is used, there can be multiple physical networks in the zone. Each

physical network can carry one or more traffic types, and you need to let CloudPlatform know which

type of network traffic you want each network to carry. The traffic types in an advanced zone are:

• Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with

each other over a network that can be referred to as the guest network. This network can be

isolated or shared. In an isolated guest network, the administrator needs to reserve VLAN ranges to

provide isolation for each CloudPlatform account’s network (potentially a large number of VLANs). In

a shared guest network, all guest VMs share a single network.

• Management. When CloudPlatform’s internal resources communicate with each other, they

generate management traffic. This includes communication between hosts, system VMs (VMs

used by CloudPlatform to perform various tasks in the cloud), and any other component that

communicates directly with the CloudPlatform Management Server. You must configure the IP

range for the system VMs to use.

• Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs

must be allocated for this purpose. End users can use the CloudPlatform UI to acquire these IPs to

implement NAT between their guest network and the public network, as described in “Acquiring a

New IP Address” in the Administration Guide.

• Storage. Traffic such as VM templates and snapshots, which is sent between the secondary storage

VM and secondary storage servers. CloudPlatform uses a separate Network Interface Controller

(NIC) named storage NIC for storage network traffic. Use of a storage NIC that always operates on

a high bandwidth network allows fast template and snapshot copying. You must configure the IP

range to use for the storage network.

These traffic types can each be on a separate physical network, or they can be combined with certain

restrictions. When you use the Add Zone wizard in the UI to create a new zone, you are guided into

making only valid choices.

3.8.4. Advanced Zone Guest IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the

guests. These networks can span the zone and be available to all accounts, or they can be scoped

to a single account, in which case only the named account may create guests that attach to these

networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may

provision thousands of these networks if desired. Additionally, the administrator can reserve a part of

the IP address space for non-CloudPlatform VMs and servers (see IP Reservation in Isolated Guest

Networks in the Administrator's Guide).

3.8.5. Advanced Zone Public IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the

guests. These networks can span the zone and be available to all accounts, or they can be scoped

to a single account, in which case only the named account may create guests that attach to these

networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may

provision thousands of these networks if desired.

3.8.6. System Reserved IP Addresses

In each zone, you need to configure a range of reserved IP addresses for the management network.

This network carries communication between the CloudPlatform Management Server and various

system VMs, such as Secondary Storage VMs, Console Proxy VMs, and DHCP.

The reserved IP addresses must be unique across the cloud. You cannot, for example, have a host in

one zone which has the same private IP address as a host in another zone.

The hosts in a pod are assigned private IP addresses. These are typically RFC1918 addresses. The

Console Proxy and Secondary Storage system VMs are also allocated private IP addresses in the

CIDR of the pod that they are created in.

Make sure computing servers and Management Servers use IP addresses outside of the System

Reserved IP range. For example, suppose the System Reserved IP range starts at 192.168.154.2 and

ends at 192.168.154.7. CloudPlatform can use .2 to .7 for System VMs. This leaves the rest of the pod

CIDR, from .8 to .254, for the Management Server and hypervisor hosts.
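The split in this example can be reproduced with Python's standard ipaddress module. The sketch assumes the pod CIDR is 192.168.154.0/24, which the .254 upper bound implies but the text does not state outright. The variable names are illustrative.

```python
import ipaddress

# Assumed pod CIDR; the reserved range is taken from the example above.
pod_cidr = ipaddress.ip_network("192.168.154.0/24")
reserved_start = ipaddress.ip_address("192.168.154.2")
reserved_end = ipaddress.ip_address("192.168.154.7")

# hosts() yields the usable addresses, excluding network and broadcast.
usable = list(pod_cidr.hosts())

# Addresses CloudPlatform may hand to System VMs.
system_vm_ips = [ip for ip in usable if reserved_start <= ip <= reserved_end]

# Addresses above the reserved range, left for the Management Server and hosts.
remaining = [ip for ip in usable if ip > reserved_end]

print(len(system_vm_ips))
print(remaining[0], remaining[-1])
```

With these values, six addresses are reserved for System VMs and the remaining block runs from 192.168.154.8 to 192.168.154.254.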

In all zones:

Provide private IPs for the system in each pod and provision them in CloudPlatform.

For KVM and XenServer, the recommended number of private IPs per pod is one per host. If you

expect a pod to grow, add enough private IPs now to accommodate the growth.

In a zone that uses advanced networking:

When advanced networking is being used, the number of private IP addresses available in each pod

varies depending on which hypervisor is running on the nodes in that pod. Citrix XenServer and KVM

use link-local addresses, which in theory provide more than 65,000 private IP addresses within the

address block. As the pod grows over time, this should be more than enough for any reasonable

number of hosts as well as IP addresses for guest virtual routers. VMware ESXi, by contrast, uses

any administrator-specified subnetting scheme, and the typical administrator provides only 255 IPs

per pod. Since these are shared by physical machines, the guest virtual router, and other entities, it is

possible to run out of private IPs when scaling up a pod whose nodes are running ESXi.

To ensure adequate headroom to scale private IP space in an ESXi pod that uses advanced

networking, use one or more of the following techniques:

• Specify a larger CIDR block for the subnet. A subnet mask with a /20 suffix will provide more than

4,000 IP addresses.

• Create multiple pods, each with its own subnet. For example, if you create 10 pods and each pod

has 255 IPs, this will provide 2,550 IP addresses.
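The arithmetic behind both techniques can be checked quickly. The sketch below uses Python's standard ipaddress module. The 10.1.0.0 network is an arbitrary example, and the function name is an assumption.

```python
import ipaddress


def usable_addresses(cidr: str) -> int:
    """Count host addresses in an IPv4 subnet, excluding network and broadcast.

    Intended for prefixes shorter than /31.
    """
    return ipaddress.ip_network(cidr).num_addresses - 2


# A /20 subnet: 2**12 = 4096 addresses, 4094 of them usable by hosts.
print(usable_addresses("10.1.0.0/20"))

# Ten pods with 255 IPs each, as in the second technique.
pods, ips_per_pod = 10, 255
print(pods * ips_per_pod)
```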

For vSphere with advanced networking, we recommend provisioning enough private IPs for your total

number of customers, plus enough for the required CloudPlatform System VMs. Typically, about 10

additional IPs are required for the System VMs. For more information about System VMs, see Working

with System Virtual Machines in the Administrator's Guide.

Chapter 4. Upgrade Instructions

4.1. Upgrade from 3.0.x to 4.2

Perform the following to upgrade from version 3.0.0, 3.0.1, 3.0.2, 3.0.3, 3.0.4, 3.0.5, 3.0.6, or 3.0.7 to

version 4.2.

1. If you are upgrading from 3.0.0 or 3.0.1, ensure that you query your IP address usage records and

process them; for example, issue invoices for any usage that you have not yet billed users for.

Starting in 3.0.2, the usage record format for IP addresses is the same as the rest of the usage

types. Instead of a single record with the assignment and release dates, separate records are

generated per aggregation period with start and end dates. After upgrading, any existing IP

address usage records in the old format will no longer be available.

2. While running the 3.0.x system, log in to the UI as root administrator.

3. Using the UI, add a new System VM template for each hypervisor type that is used in your cloud.

In each zone, add a system VM template for each hypervisor used in that zone.

Note

You might notice that the size of the system VM template has increased compared to

previous CloudPlatform versions. This is because the new version of the underlying Debian

template has an increased disk size.

a. In the left navigation bar, click Templates.

b. In Select view, click Templates.

c. Click Register template.

The Register template dialog box is displayed.

d. In the Register template dialog box, specify the following values depending on the hypervisor

type (do not change these):

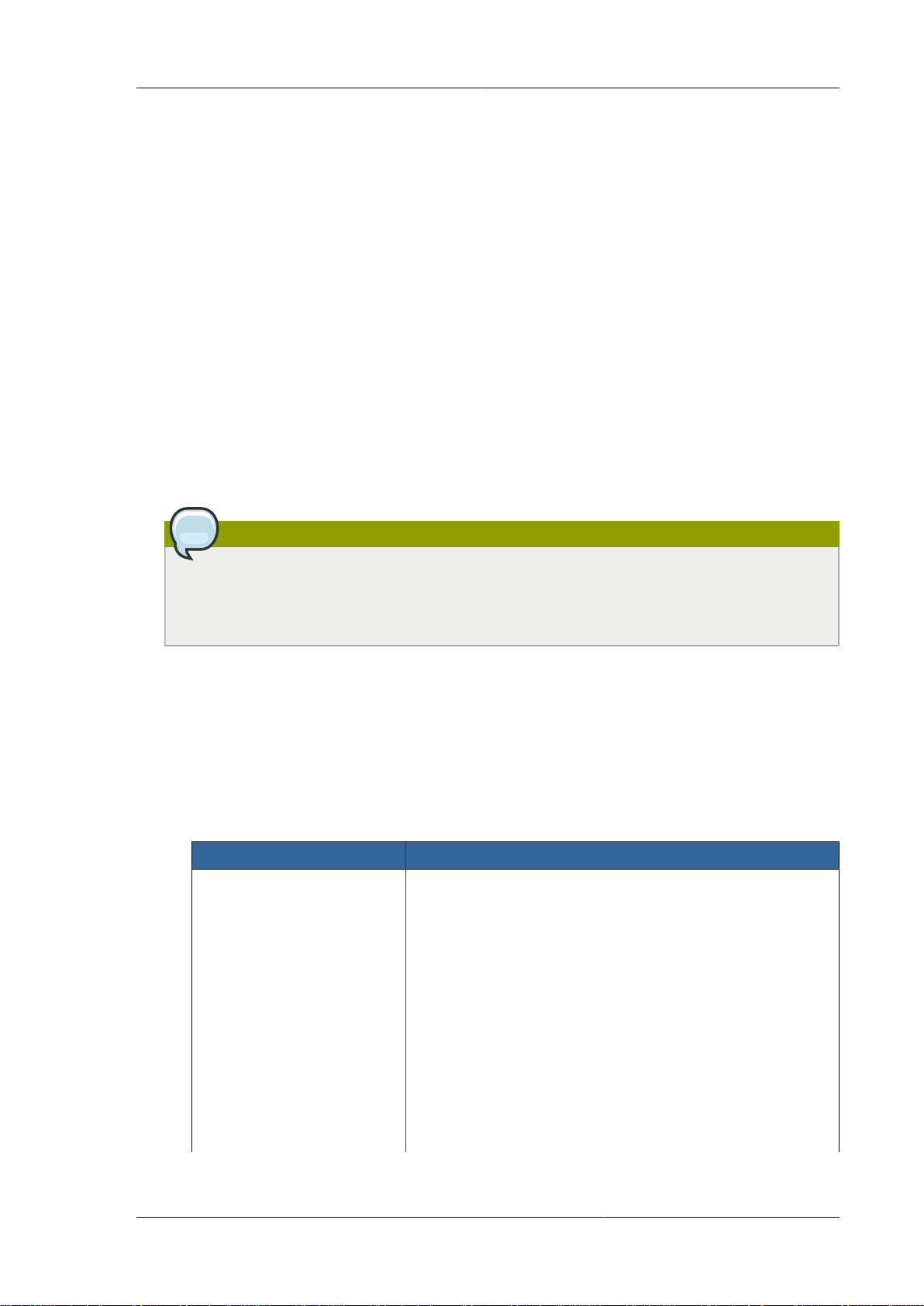

Hypervisor Description

XenServer Name: systemvm-xenserver-4.2

Description: systemvm-xenserver-4.2

URL: http://download.cloud.com/templates/4.2/

systemvmtemplate-2013-07-12-master-xen.vhd.bz2

Zone: Choose the zone where this hypervisor is used. If your

CloudPlatform deployment includes multiple zones running

XenServer, choose All Zones to make the template available

in all the XenServer zones.

Hypervisor: XenServer

Format: VHD

19

Page 28

Chapter 4. Upgrade Instructions

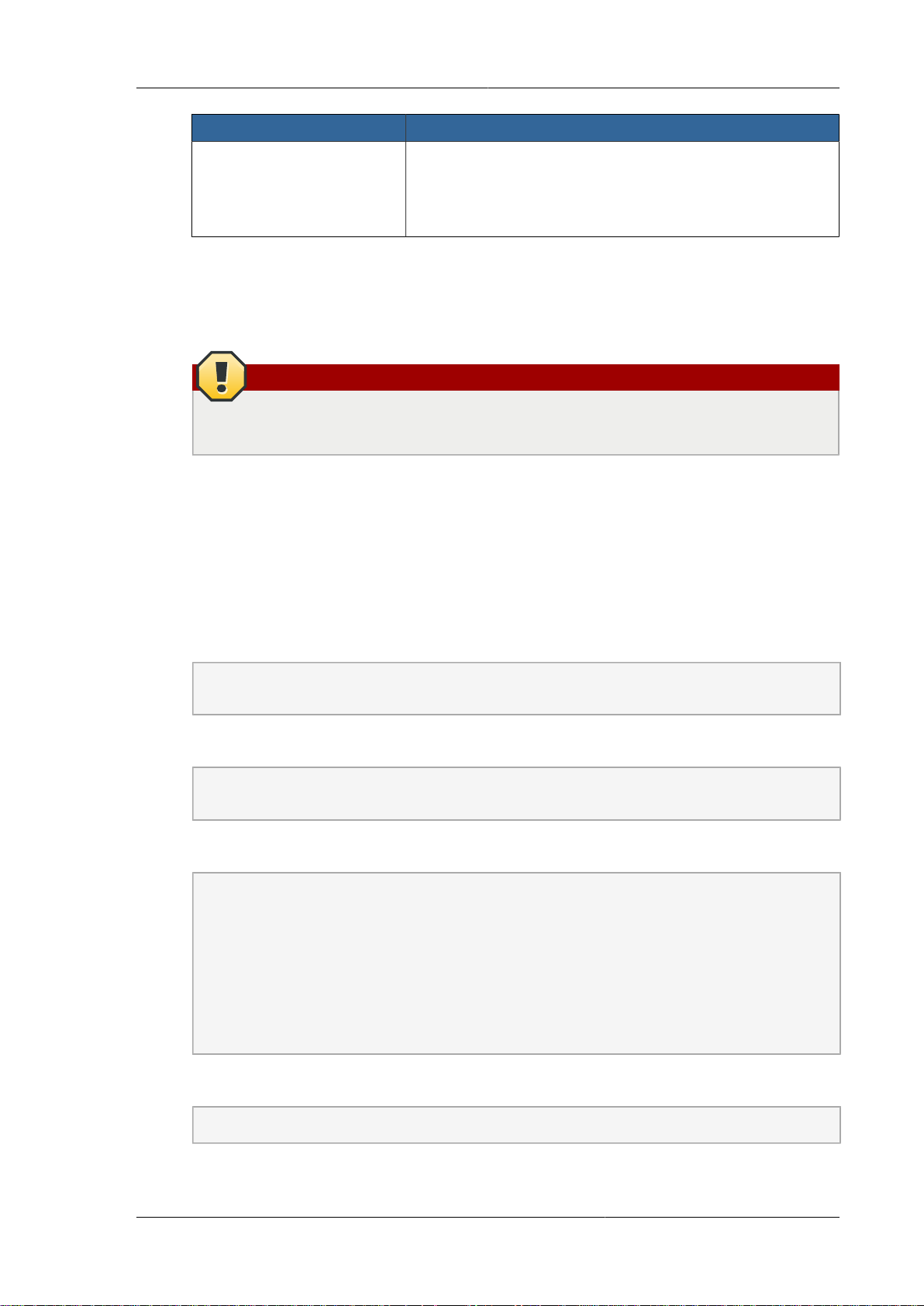

Hypervisor Description

KVM Name: systemvm-kvm-4.2

OS Type: Debian GNU/Linux 7.0 (32-bit) (or the highest

Debian release number available in the dropdown)

Extractable: no

Password Enabled: no

Public: no

Featured: no

Description: systemvm-kvm-4.2

URL: http://download.cloud.com/templates/4.2/

systemvmtemplate-2013-06-12-master-kvm.qcow2.bz2

Zone: Choose the zone where this hypervisor is used. If your

CloudPlatform deployment includes multiple zones running

KVM, choose All Zones to make the template available in all

the KVM zones.

Hypervisor: KVM

Format: QCOW2

OS Type: Debian GNU/Linux 7.0 (32-bit) (or the highest

Debian release number available in the dropdown)

Extractable: no

Password Enabled: no

Public: no

Featured: no

VMware Name: systemvm-vmware-4.2

Description: systemvm-vmware-4.2

URL: http://download.cloud.com/templates/4.2/

systemvmtemplate-4.2-vh7.ova

Zone: Choose the zone where this hypervisor is used. If your

CloudPlatform deployment includes multiple zones running

VMware, choose All Zones to make the template available in

all the VMware zones.

20

Hypervisor: VMware

Format: OVA

OS Type: Debian GNU/Linux 7.0 (32-bit) (or the highest

Debian release number available in the dropdown)

Extractable: no

Page 29

Upgrade from 3.0.x to 4.2

Hypervisor Description

Password Enabled: no

Public: no

Featured: no

e. Watch the screen to be sure that the template downloads successfully and enters the READY

state. Do not proceed until this is successful.

f. If you use more than one type of hypervisor in your cloud, repeat these steps to download the

system VM template for each hypervisor type.

Warning

If you do not repeat the steps for each hypervisor type, the upgrade will fail.

4. (KVM on RHEL 6.0/6.1 only) If your existing CloudPlatform deployment includes one or more

clusters of KVM hosts running RHEL 6.0 or RHEL 6.1, you must first upgrade the operating

system version on those hosts before upgrading CloudPlatform itself.

Run the following commands on every KVM host.

a. Download the CloudPlatform 4.2.0 RHEL 6.3 binaries from https://www.citrix.com/English/ss/downloads/.

b. Extract the binaries:

# cd /root

# tar xvf CloudPlatform-4.2.0-1-rhel6.3.tar.gz

c. Create a CloudPlatform 4.2 qemu repo:

# cd CloudPlatform-4.2.0-1-rhel6.3/6.3

# createrepo .

d. Prepare the yum repo for upgrade. Edit the file /etc/yum.repos.d/rhel63.repo. For example:

[upgrade]

name=rhel63

baseurl=url-of-your-rhel6.3-repo

enabled=1

gpgcheck=0

[cloudstack]

name=cloudstack

baseurl=file:///root/CloudPlatform-4.2.0-1-rhel6.3/6.3

enabled=1

gpgcheck=0

e. Upgrade the host operating system from RHEL 6.0 or 6.1 to 6.3:

# yum upgrade

5. Stop all Usage Servers if running. Run this on all Usage Server hosts.

# service cloud-usage stop

6. Stop the Management Servers. Run this on all Management Server hosts.

# service cloud-management stop

7. On the MySQL master, take a backup of the MySQL databases. We recommend performing this

step even in test upgrades. If there is an issue, this will assist with debugging.

In the following commands, it is assumed that you have set the root password on the database,

which is a CloudPlatform recommended best practice. Substitute your own MySQL root password.

# mysqldump -u root -p<mysql_password> cloud > cloud-backup.dmp

# mysqldump -u root -p<mysql_password> cloud_usage > cloud-usage-backup.dmp

8. (RHEL/CentOS 5.x) If you are currently running CloudPlatform on RHEL/CentOS 5.x, use the

following command to set up an Extra Packages for Enterprise Linux (EPEL) repo:

# rpm -Uvh http://mirror.pnl.gov/epel/5/i386/epel-release-5-4.noarch.rpm

9. Download CloudPlatform 4.2 onto the management server host where it will run. Get the software

from the following link:

https://www.citrix.com/English/ss/downloads/.

You need a My Citrix Account [1].

10. Upgrade the CloudPlatform packages. You should have a file in the form of “CloudPlatform-4.2-N-OSVERSION.tar.gz”.

Untar the file, then run the install.sh script inside it. Replace the file and directory names below with those you are using:

# tar xzf CloudPlatform-4.2-N-OSVERSION.tar.gz

# cd CloudPlatform-4.2-N-OSVERSION

# ./install.sh

You should see a few messages as the installer prepares, followed by a list of choices.

11. Choose "U" to upgrade the packages:

>U

You should see some output as the upgrade proceeds, ending with a message like "Complete! Done."

12. If you have made changes to your existing copy of the configuration files components.xml,

db.properties, or server.xml in your previous-version CloudPlatform installation, the changes will

be preserved in the upgrade. However, you need to do the following steps to place these changes

in a new version of the file which is compatible with version 4.2.

[1] http://www.citrix.com/lang/English/publicindex.asp?destURL=%2FEnglish%2FmyCitrix%2Findex.asp%3F#

Page 31

Note

How will you know whether you need to do this? If the upgrade output in the previous step

included a message like the following, then some custom content was found in your old file,

and you need to merge the two files:

warning: /etc/cloud.rpmsave/management/components.xml created as /etc/cloudstack/management/components.xml.rpmnew

a. Make a backup copy of your previous version file. For example (substitute the file name components.xml, db.properties, or server.xml in these commands as needed):

# mv /etc/cloudstack/management/components.xml /etc/cloudstack/management/components.xml-backup

b. Copy the *.rpmnew file to create a new file. For example:

# cp -ap /etc/cloudstack/management/components.xml.rpmnew /etc/cloudstack/management/components.xml

c. Merge your changes from the backup file into the new file. For example:

# vi /etc/cloudstack/management/components.xml
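
To see which files need this treatment and what you changed in them, a short script can help. The following is a minimal sketch, not a product tool: the function names list_rpmnew and show_changes are hypothetical, and the script only reports differences. The merge itself is still done by hand.

```shell
#!/bin/sh
# Hypothetical helper: list every *.rpmnew file under a configuration
# directory, one per line, so you can see which files need a manual merge.
list_rpmnew() {
    find "$1" -type f -name '*.rpmnew' | sort
}

# Hypothetical helper: print a unified diff between your backup copy ($1)
# and the new 4.2 file ($2). diff exits non-zero when the files differ,
# so its exit status is deliberately ignored here.
show_changes() {
    diff -u "$1" "$2" || true
}

# Example:
# list_rpmnew /etc/cloudstack/management
# show_changes /etc/cloudstack/management/components.xml-backup \
#              /etc/cloudstack/management/components.xml
```

Lines marked with "-" in the diff exist only in your backup copy and are candidates for merging into the new file.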

13. Repeat steps 8 - 12 on each management server node.

14. Start the first Management Server. Do not start any other Management Server nodes yet.

# service cloudstack-management start

Wait until the database upgrade is complete. After you have confirmed this, start the other Management Servers one at a time by running the same command on each node.

Note

If the Management Server fails to start, there is a problem with the upgrade. If it starts without any issues, the upgrade has completed successfully.
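
This guide does not prescribe a specific command for confirming that the first Management Server is ready. If you have your own check, a small polling helper avoids starting the other nodes too early. The following is a minimal sketch: the function name wait_until is hypothetical, and the check command passed to it is something you supply.

```shell
#!/bin/sh
# Hypothetical helper: retry a check command until it succeeds or a
# timeout expires.
#   $1 = timeout in seconds
#   $2 = seconds to wait between attempts (must be greater than 0)
#   remaining arguments = the check command to run
wait_until() {
    timeout=$1
    interval=$2
    shift 2
    elapsed=0
    while ! "$@"; do
        elapsed=$((elapsed + interval))
        if [ "$elapsed" -gt "$timeout" ]; then
            return 1    # gave up: the check never succeeded
        fi
        sleep "$interval"
    done
    return 0
}

# Example, where my_upgrade_check is a placeholder for your own test:
# wait_until 1800 30 my_upgrade_check && echo "Safe to start the next node"
```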

15. Start all Usage Servers (if they were running on your previous version). Perform this on each

Usage Server host.

# service cloudstack-usage start

Page 32

Note

After upgrading from 3.0.4 to 4.2, if the Usage Server fails to restart, copy db.properties from /etc/cloudstack/management to /etc/cloudstack/usage. Then start the Usage Server.

16. (VMware only) If you are upgrading from 3.0.6 or beyond and you have existing clusters,

additional steps are required to update the existing vCenter password for each VMware cluster.

These steps will not affect running guests in the cloud. These steps are required only for clouds

using VMware clusters:

a. Stop the Management Server:

service cloudstack-management stop

b. Perform the following on each VMware cluster:

i. Encrypt the vCenter password:

java -classpath /usr/share/cloudstack-common/lib/jasypt-1.9.0.jar \
    org.jasypt.intf.cli.JasyptPBEStringEncryptionCLI encrypt.sh \
    input=<_your_vCenter_password_> password="`cat /etc/cloudstack/management/key`" \
    verbose=false

Save the output from this step for later use. You need to add this in the cluster_details and

vmware_data_center tables in place of the existing password.

ii. Find the ID of the cluster from the cluster_details table:

mysql -u <username> -p<password>

select * from cloud.cluster_details;

iii. Update the existing password with the encrypted one:

update cloud.cluster_details set value = '<_ciphertext_from_step_i_>' where id = <_id_from_step_ii_>;

iv. Confirm that the table is updated:

select * from cloud.cluster_details;

v. Find the ID of the VMware data center that you want to work with:

select * from cloud.vmware_data_center;

Page 33

vi. Change the existing password to the encrypted one:

update cloud.vmware_data_center set password = '<_ciphertext_from_step_i_>' where id = <_id_from_step_v_>;

vii. Confirm that the table is updated:

select * from cloud.vmware_data_center;

c. Start the CloudPlatform Management Server:

service cloudstack-management start
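
The ciphertext is a string value, so it must appear in the update statements as a single-quoted SQL literal. If you script these updates for several clusters, generating the statements reduces the chance of a quoting mistake. The following is a minimal sketch: the function names sql_quote and cluster_update_sql are hypothetical, and the script only prints a statement for you to review and run in the mysql client.

```shell
#!/bin/sh
# Hypothetical helper: wrap a value in single quotes for use as a MySQL
# string literal, escaping backslashes and doubling embedded single quotes.
sql_quote() {
    printf "'%s'" "$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/''/g")"
}

# Hypothetical helper: print the cluster_details update from step iii.
#   $1 = ciphertext from step i
#   $2 = row ID from step ii
cluster_update_sql() {
    printf 'update cloud.cluster_details set value = %s where id = %s;\n' \
        "$(sql_quote "$1")" "$2"
}

# Example (both arguments are placeholders for your own values):
# cluster_update_sql "<_ciphertext_from_step_i_>" "<_id_from_step_ii_>"
```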

17. (KVM only) Additional steps are required for each KVM host. These steps will not affect running

guests in the cloud. These steps are required only for clouds using KVM as hosts and only on the

KVM hosts.

Note

After the software upgrade on a KVM machine, the Ctrl+Alt+Del button on the console view of

a VM doesn't work. Use Ctrl+Alt+Insert to log in to the console of the VM.

a. Copy the CloudPlatform 4.2 .tgz download to the host, untar it, and cd into the resulting

directory.

b. Stop the running agent.

# service cloud-agent stop

c. Update the agent software.

# ./install.sh

d. Choose "U" to update the packages.

e. Edit /etc/cloudstack/agent/agent.properties to change the resource parameter

from com.cloud.agent.resource.computing.LibvirtComputingResource to

com.cloud.hypervisor.kvm.resource.LibvirtComputingResource.

f. Upgrade all the existing bridge names to new bridge names by running this script:

# cloudstack-agent-upgrade

g. Install a libvirt hook with the following commands:

# mkdir /etc/libvirt/hooks

# cp /usr/share/cloudstack-agent/lib/libvirtqemuhook /etc/libvirt/hooks/qemu

# chmod +x /etc/libvirt/hooks/qemu

h. Restart libvirtd.

Page 34

# service libvirtd restart

i. Start the agent.

# service cloudstack-agent start
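
If you have many KVM hosts, the edit to agent.properties in step e can be scripted. The following is a minimal sketch, assuming the parameter appears in the file as a single line of the form resource=<class name>. The function name update_resource_class is hypothetical. It keeps a .bak copy of the original file so you can compare or restore it.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the resource class in an agent.properties
# file ($1), keeping a copy of the original as "$1.bak".
# Assumption: the parameter is a line of the form resource=<class name>.
update_resource_class() {
    # Dots are escaped so that they match literally in the sed pattern.
    old='com\.cloud\.agent\.resource\.computing\.LibvirtComputingResource'
    new='com.cloud.hypervisor.kvm.resource.LibvirtComputingResource'
    cp -p "$1" "$1.bak" &&
    sed "s/^resource=$old\$/resource=$new/" "$1.bak" > "$1"
}

# Example (run as root on the KVM host, with the agent stopped):
# update_resource_class /etc/cloudstack/agent/agent.properties
```

Lines that do not match are copied through unchanged, so running the function a second time leaves the new class name in place.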

18. Log in to the CloudPlatform UI as administrator, and check the status of the hosts. All hosts

should come to Up state (except those that you know to be offline). You may need to wait 20 or 30

minutes, depending on the number of hosts.

Note

Troubleshooting: If login fails, clear your browser cache and reload the page.

Do not proceed to the next step until the hosts show in Up state. If the hosts do not come to the

Up state, contact support.

19. If you are upgrading from 3.0.1 or 3.0.2, perform the following:

a. Ensure that the admin port is set to 8096 by using the "integration.api.port" global parameter. This port is used by the cloudstack-sysvmadm script later in the upgrade procedure. For information about how to set this parameter, see Section 5.5, “Setting Configuration Parameters”.

b. Restart the Management Server.

Note

If you don't want the admin port to remain open, you can set it to null after the upgrade is

done and restart the Management Server.

20. Run the following script to stop, then start, all System VMs including Secondary Storage VMs,

Console Proxy VMs, and virtual routers.

a. Run the script once on one management server. Substitute your own IP address of the

MySQL instance, the MySQL user to connect as, and the password to use for that user. In

addition to those parameters, provide the "-a" argument. For example:

# nohup cloudstack-sysvmadm -d 192.168.1.5 -u cloud -p password -a > sysvm.log 2>&1 &

This might take an hour or more to run, depending on the number of accounts in the system.

b. After the script terminates, check the log to verify correct execution:

# tail -f sysvm.log

Page 35

The content should be like the following:

Stopping and starting 1 secondary storage vm(s)...

Done stopping and starting secondary storage vm(s)

Stopping and starting 1 console proxy vm(s)...

Done stopping and starting console proxy vm(s).

Stopping and starting 4 running routing vm(s)...

Done restarting router(s).

c. If you would like additional confirmation that the new system VM templates were correctly

applied when these system VMs were rebooted, SSH into the System VM and check the

version.

Use one of the following techniques, depending on the hypervisor.

XenServer or KVM:

SSH in by using the link local IP address of the system VM. For example, in the command

below, substitute your own path to the private key used to log in to the system VM and your

own link local IP.

Run the following commands on the XenServer or KVM host on which the system VM is present:

# ssh -i /root/.ssh/id_rsa.cloud <link-local-ip> -p 3922

# cat /etc/cloudstack-release

The output should be like the following:

Cloudstack Release 4.2 Mon Aug 12 15:10:04 PST 2013

ESXi:

SSH in using the private IP address of the system VM. For example, in the command below, substitute your own path to the private key used to log in to the system VM and your own private IP.

Run the following commands on the Management Server:

# ssh -i /var/cloudstack/management/.ssh/id_rsa <private-ip> -p 3922

# cat /etc/cloudstack-release

The output should be like the following:

Cloudstack Release 4.2 Mon Sep 24 15:10:04 PST 2012
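
The checks in steps b and c can be automated when you have many system VMs to verify. The following is a minimal sketch based only on the sample output shown above: the function names sysvm_log_ok and release_is_42 are hypothetical, and the matched strings are taken from the samples, so adjust them if your output is worded differently.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the log file ($1) contains a
# completion line for each of the three system VM types in the sample.
sysvm_log_ok() {
    grep -q 'Done stopping and starting secondary storage vm' "$1" &&
    grep -q 'Done stopping and starting console proxy vm' "$1" &&
    grep -q 'Done restarting router' "$1"
}

# Hypothetical helper: succeed if a release string ($1), as printed by
# "cat /etc/cloudstack-release", reports version 4.2.
release_is_42() {
    case "$1" in
        "Cloudstack Release 4.2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example:
# sysvm_log_ok sysvm.log || echo "sysvm.log is missing a completion line"
```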

21. If you want to close the admin port again (recommended in production systems), set

integration.api.port to null. Then restart the Management Server. For information about how to set

integration.api.port, see Section 5.5, “Setting Configuration Parameters”.

22. (XenServer only) If needed, upgrade all Citrix XenServer hypervisor hosts in your cloud to

a version supported by CloudPlatform 4.2 and apply any required hotfixes. Instructions for

upgrading XenServer software and applying hotfixes can be found in Section 4.4, “Upgrading and

Hotfixing XenServer Hypervisor Hosts”.

Page 36

23. (VMware only) After upgrade, if you want to change a Standard vSwitch zone to a VMware

dvSwitch Zone, perform the following:

a. Ensure that the Public and Guest traffic is not on the same network as the Management and Storage traffic.

b. Set vmware.use.dvswitch to true.

c. Access the physical network for the Public and Guest traffic, then change the traffic labels to the following format:

<dvSwitch name>,<VLANID>,<Switch Type>

For example: dvSwitch18,,vmwaredvs

VLANID is optional.

d. Stop the Management Server.

e. Start the Management Server.

f. Add the new VMware dvSwitch-enabled cluster to this zone.
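
The traffic label format in step c is easy to mistype, especially when the optional VLAN ID is left empty. The following is a minimal sketch that builds and checks a label. The function names make_traffic_label and label_is_valid are hypothetical, and the check covers only the three-field shape described above, not whether the switch exists.

```shell
#!/bin/sh
# Hypothetical helper: build a traffic label of the form
# <dvSwitch name>,<VLANID>,<Switch Type>. Pass "" to omit the VLAN ID.
make_traffic_label() {
    printf '%s,%s,%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: succeed if a label has exactly three comma-separated
# fields with a non-empty switch name and a non-empty switch type.
label_is_valid() {
    case "$1" in
        *,*,*,*) return 1 ;;   # more than three fields
        ,*|*,)   return 1 ;;   # empty switch name or empty switch type
        *,*,*)   return 0 ;;
        *)       return 1 ;;   # fewer than three fields
    esac
}

# Example:
# make_traffic_label dvSwitch18 "" vmwaredvs
```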

24. (VMware only) If your existing cloud includes any deployed data centers, you should set the global

configuration setting vmware.create.full.clone to false. Then restart the Management Server. For

information about how to set vmware.create.full.clone, see Section 5.5, “Setting Configuration

Parameters”. For information about how CloudPlatform supports full and linked clones, see

“Configuring Usage of Linked Clones on VMware” in the CloudPlatform Administration Guide.

Note

Troubleshooting tip: If passwords which you know to be valid appear not to work after upgrade, or

other UI issues are seen, try clearing your browser cache and reloading the UI page.

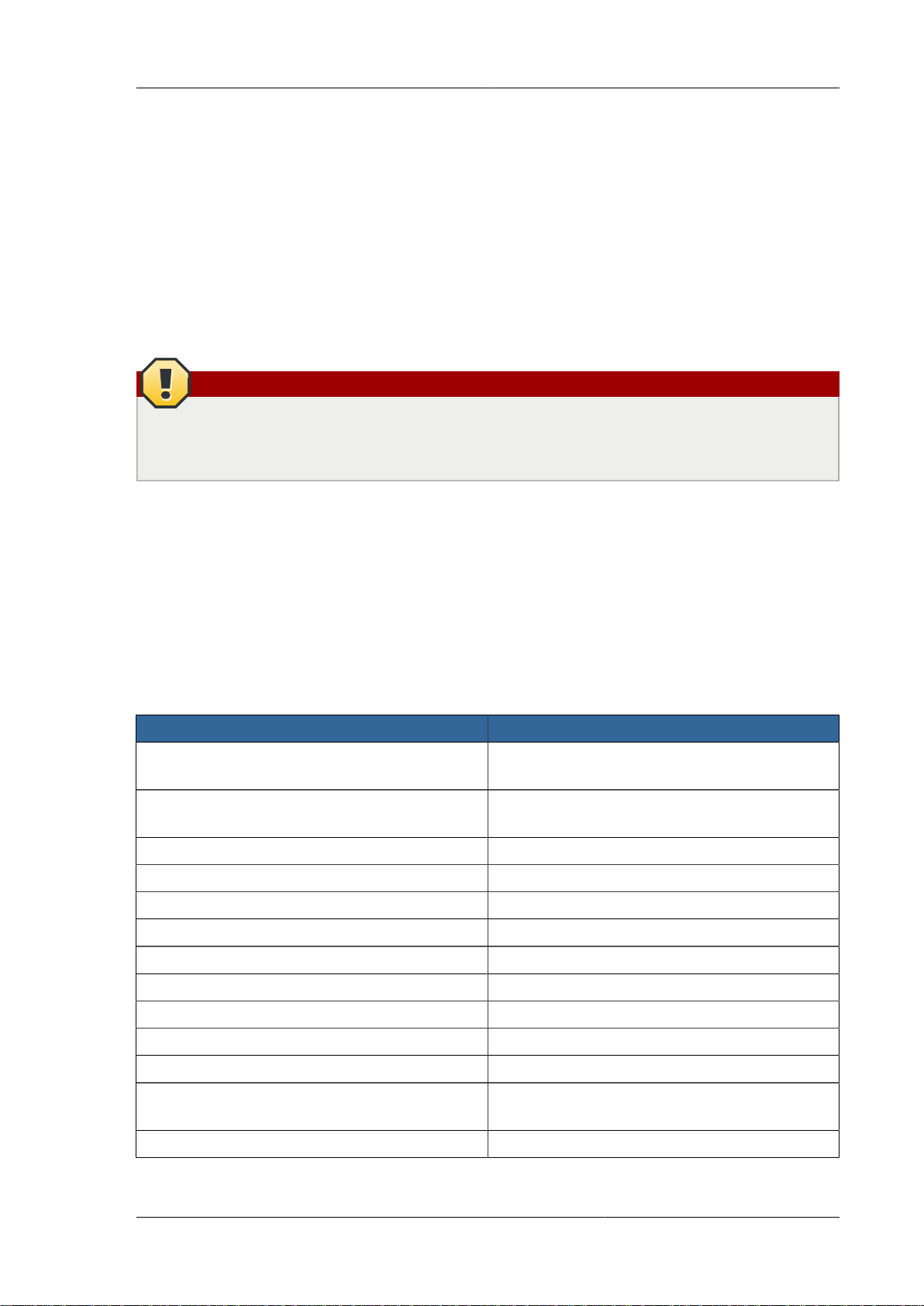

Note