Page 1

Data Integrator Core Tutorial

Data Integrator

Core Tutorial

Version 6.5.1

Page 2

COPYRIGHT No part of the computer software or this document may be reproduced or transmitted in any form or by any means,

electronic or mechanical, including photocopying, recording, or by any information storage and retrieval system, without permission in

writing from Business Objects. The information in this document is subject to change without notice. If you find any problems with this

documentation, please report them to Business Objects in writing at documentation@businessobjects.com. Business Objects does not

warrant that this document is error free.

Copyright © Business Objects 2003. All rights reserved.

Printed in the United States.

TRADEMARKS

The Business Objects logo, BusinessObjects and Rapid Marts are registered trademarks of Business Objects S.A. in the United States

and/or other countries.

Microsoft, Windows, Windows NT, Access, and other names of Microsoft products referenced herein are either registered trademarks

or trademarks of Microsoft Corporation in the United States and/or other countries.

Oracle is a registered trademark of Oracle Corporation. All other names of Oracle products referenced herein are trademarks or

registered trademarks of Oracle Corporation.

DETAIL is a trademark of Striva Technology Ltd.

SAP, R/3, BW, ALE/WEB and ABAP/4 are the registered or unregistered trademarks of SAP AG.

PeopleSoft is a registered trademark of PeopleSoft, Inc.

All other product, brand, and company names mentioned herein are the trademarks of their respective owners.

USE RESTRICTIONS

This software and documentation is commercial computer software under Federal Acquisition regulations, and is provided only under

the Restricted Rights of the Federal Acquisition Regulations applicable to commercial computer software provided at private expense.

The use, duplication, or disclosure by the U.S. Government is subject to restrictions set forth in subdivision (c)(1)(ii) of the Rights in

Technical Data and Computer Software clause at 252.227- 7013.

Document Number DI-651-0108-001

June 30, 2004

Page 3

Data Integrator Core Tutorial

Contents

Chapter 1 Before You Begin. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Audience and assumptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Tutorial objectives . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Data Integrator product documentation. . . . . . . . . . . . . . . . . . . . . . . . . 5

Tutorial prerequisites . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Preparation for this tutorial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Environment required. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Tutorial setup. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Create repository, source, and target databases on an existing

RDBMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Install Data Integrator on your computer . . . . . . . . . . . . . . . . . 9

Run SQL scripts to create sample source and target tables . . 11

Data Integrator tutorial structure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

To exit the tutorial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Chapter 2 Data Integrator Product Overview. . . . . . . . . . . . . . . . . . . . . . .17

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .18

What does Data Integrator do?. . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Data Integrator components. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

How do you use Data Integrator?. . . . . . . . . . . . . . . . . . . . . . . . . . 21

Data Integrator system configurations . . . . . . . . . . . . . . . . . . . . . . 22

Windows implementation. . . . . . . . . . . . . . . . . . . . . . . . . . . .23

UNIX implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .23

The Data Integrator Designer window . . . . . . . . . . . . . . . . . . . . . . . . . 24

Data Integrator objects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Object hierarchy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Projects and jobs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .28

Data Integrator Core Tutorial iii

Page 4

Contents

Work flows and data flows. . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Object-naming conventions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

New terms. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . 31

Chapter 3 Defining Source and Target Metadata in Data Integrator .33

Logging in to the repository. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Defining a datastore . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Importing metadata . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Defining a file format. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

New terms. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . 49

Chapter 4 Populating the SalesOrg Dimension from a Flat File. . . . . . 51

Objects and their hierarchical relationships. . . . . . . . . . . . . . . . . . . . . 52

Adding a new project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Adding a job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Adding a work flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

About data flows. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .57

Adding a data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Defining the data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Validating the data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Addressing errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Saving the project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .65

Executing the job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

About deleting objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

New terms. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . 72

Chapter 5 Populating the Time Dimension Using a Transform . . . . . . . 73

Retrieving the project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Adding the job and data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .75

Defining the time dimension data flow . . . . . . . . . . . . . . . . . . . . . . . . 76

Saving and executing the job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . 81

Chapter 6 Populating the Customer Dimension from a Relational

Ta b l e . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .83

Adding the CustDim job and work flow. . . . . . . . . . . . . . . . . . . . . . . . 84

Adding the CustDim data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

iv Data Integrator Core Tutorial

Page 5

Contents

Defining the CustDim data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Validating the CustDim data flow. . . . . . . . . . . . . . . . . . . . . . . . . . . . .88

Executing the CustDim job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Using the interactive debugger . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . 94

Chapter 7 Populating the Material Dimension from an XML File . . . . 95

Adding the MtrlDim job, work flow, and data flow. . . . . . . . . . . . . . . 96

Importing a document type definition . . . . . . . . . . . . . . . . . . . . . . . . .97

Defining the MtrlDim data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .98

Validating the MtrlDim data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

Executing the MtrlDim job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . 104

Chapter 8 Populating the Sales Fact Table from Relational Tables . . 105

Adding the SalesFact job, work flow, and data flow. . . . . . . . . . . . . . 106

Defining the SalesFact data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Validating the SalesFact data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Executing the SalesFact job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Using metadata reports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Configuring Data Integrator to enable metadata reports . . . . . . 113

Viewing metadata reports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . 119

Chapter 9 Changed Data Capture. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Exercise overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .122

Adding and defining the initial-load job . . . . . . . . . . . . . . . . . . . . . .123

Adding the job and defining global variables . . . . . . . . . . . . . . . 123

Adding and defining the work flow . . . . . . . . . . . . . . . . . . . . . . .124

Defining the set and update time scripts . . . . . . . . . . . . . . .124

Defining the data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

Adding and defining the delta-load job . . . . . . . . . . . . . . . . . . . . . . .128

Executing the jobs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . .133

Chapter 10 Recovery Mechanisms. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .135

Creating a recoverable work flow manually . . . . . . . . . . . . . . . . . . . .136

Adding the job and defining local variables. . . . . . . . . . . . . . . . . . . . 137

Specifying a recoverable job. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

Creating the script that determines the status . . . . . . . . . . . . . . . 138

Data Integrator Core Tutorial v

Page 6

Contents

Defining the recoverable data flow with a conditional. . . . . . . . 139

Adding the script that updates the status. . . . . . . . . . . . . . . . . . . 142

Specifying job execution order . . . . . . . . . . . . . . . . . . . . . . . . . . .143

Status of the exercise . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

Executing the job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .145

Data Integrator automated recovery properties . . . . . . . . . . . . . . . . .146

Summary and what to do next . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .147

Chapter 11 Multiuser Development . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .150

Exercise overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .153

Preparation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

Creating a central repository. . . . . . . . . . . . . . . . . . . . . . . . . . . . .154

Creating two local repositories . . . . . . . . . . . . . . . . . . . . . . . . . . .155

Defining connections to the central repository . . . . . . . . . . . . . .156

Working in a multiuser environment . . . . . . . . . . . . . . . . . . . . . . . . . 158

Importing objects into your local repository. . . . . . . . . . . . . . . .158

Activating a connection to the central repository . . . . . . . . . . . .160

Adding objects to the central repository . . . . . . . . . . . . . . . . . . . 161

Checking out objects from the central repository . . . . . . . . . . . .164

Checking in objects to the central repository . . . . . . . . . . . . . . .166

Undoing check out . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .167

Comparing objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .168

Checking out without replacement . . . . . . . . . . . . . . . . . . . . . . .170

Getting objects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .173

Using filtering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .174

Deleting objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .175

Chapter summary and what to do next . . . . . . . . . . . . . . . . . . . . . . .177

Chapter 12 Extracting SAP R/3 Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .179

Defining an SAP R/3 datastore . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .180

Importing metadata for SAP R/3 source tables. . . . . . . . . . . . . . . . . .182

Repopulating the customer dimension table . . . . . . . . . . . . . . . . . . . 184

Adding the SAP_CustDim job, work flow, and data flow . . . . . .184

Defining the SAP_CustDim data flow . . . . . . . . . . . . . . . . . . . . .184

Defining the R/3 data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

Validating the SAP_CustDim data flow . . . . . . . . . . . . . . . . . . . . 191

Executing the SAP_CustDim job . . . . . . . . . . . . . . . . . . . . . . . . . 191

About ABAP job execution errors . . . . . . . . . . . . . . . . . . . . . . . . .192

Repopulating the material dimension table . . . . . . . . . . . . . . . . . . . .193

Adding the SAP_MtrlDim job, work flow, and data flow . . . . . . 193

vi Data Integrator Core Tutorial

Page 7

Contents

Defining the SAP_MtrlDim data flow . . . . . . . . . . . . . . . . . . . . .193

Defining the R/3 data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Executing the SAP_MtrlDim job. . . . . . . . . . . . . . . . . . . . . . . . . . 199

Repopulating the SalesFact table . . . . . . . . . . . . . . . . . . . . . . . . . . . . .200

Adding the SAP_SalesFact job, work flow, and data flow . . . . . . 200

Defining the DF_SAP_SalesFact data flow . . . . . . . . . . . . . . . . . . 200

Defining the R/3 data flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Validating the SAP_SalesFact data flow and executing the job . .208

New Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Chapter summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

Chapter 13 Running a Real-time Job in Test Mode. . . . . . . . . . . . . . . . . . . 211

Exercise . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

Appendix A Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .249

Data Integrator Core Tutorial vii

Page 8

Contents

viii Data Integrator Core Tutorial

Page 9

Data Integrator Core Tutorial

Before You Begin

1

Welcome to the Data Integrator Core Tutorial.

The exercises in this tutorial introduce concepts and techniques

to extract, transform and load batch data from flat-file and

relational database sources for use in a data warehouse. Use this

tutorial to obtain practical experience using Data Integrator

components including the Designer, repository and Job Server.

This chapter covers the following:

• Introduction

• Audience and assumptions

• Tutorial objectives

• Data Integrator product documentation

• Tutorial prerequisites

• Data Integrator tutorial structure

• To exit the tutorial

Data Integrator Core Tutorial 1

Page 10

1

Before You Begin

Introduction

Introduction

This tutorial introduces core features of Data Integrator. Data

Integrator is a component of the Business Objects Business

Intelligence suite that allows you to extract and integrate data for

analytical reporting and e-business.

While exercises in this tutorial focus on how to use batch data

flows, you can also use Data Integrator to develop real-time data

extraction and integration as described in Chapter 13, “Running

a Real-time Job in Test Mode”.

The Business Objects Data Integration platform also provides a

number of Rapid Marts, which are predefined data models with

built-in jobs provided by Data Integrator for use with business

intelligence (BI) and online analytical processing (OLAP) tools.

Contact your sales representative for more information about

Rapid Marts.

2 Data Integrator Core Tutorial

Page 11

Audience and assumptions

This tutorial assumes that:

• You are an application developer or database administrator

working on data extraction, data warehousing, or data

integration.

• You understand your source data systems, DBMS, business

intelligence, and e-business messaging concepts.

• You understand your organization’s data needs.

• You are familiar with SQL (Structured Query Language).

• You are familiar with Microsoft Windows NT or Windows

2000.

Before You Begin

Audience and assumptions

1

Data Integrator Core Tutorial 3

Page 12

1

Before You Begin

Tutorial objectives

Tutorial objectives

The intent of this tutorial is to introduce core Data Integrator

Designer functionality.

After completing this tutorial you should be able to:

• Describe the process for extracting, transforming, and

loading data using Data Integrator

• Identify Data Integrator objects

• Define Data Integrator objects to extract flat-file and

relational data from your sources, transform the data to suit

your needs, and load the data to your targets

• Use Data Integrator features and functions to:

! Recover from run-time errors

! Capture changed data

! Set up a multiuser development environment

! Run a real-time job

! View and print metadata reports

! Examine data throughout a job using the debugger

4 Data Integrator Core Tutorial

Page 13

Before You Begin

Data Integrator product documentation

Data Integrator product documentation

Consult the Data Integrator Getting Started Guide for:

• An overview of Data Integrator products and architecture

• Data Integrator installation and configuration information

• A list of product documentation and a suggested reading

path

After you install Data Integrator, you can view technical

documentation from many locations. To view documentation in

PDF format, you can:

1

• Select

• Select

Start > Programs > Business Objects Data Integrator

version > Data Integrator Documentation,

Designer’s

! Release Notes

! Release Summary

! Technical Manuals

Help menu, click:

Help in Data Integrator Web Administrator

or from the

You can also view and download PDF documentation by visiting

Business Objects Customer Support online. To access this Web

site, you must have a valid user name and password. To obtain

your user name and password, go to

http://www.techsupport.businessobjects.com and click

Register.

After installing Data Integrator Designer you can also view

documentation in HTML format.

Online Help opens

automatically the first time you open the Designer.

You can also open

• Choose

Contents from the Designer’s Help menu.

• Click objects in the object library or workspace and press

Help, using one of the following methods:

F1.

Online Help opens to the subject you selected.

Data Integrator Core Tutorial 5

Page 14

1

Before You Begin

Data Integrator product documentation

Use Online Help’s links and tool bar to navigate:

Online help tool bar:

Button Name Description

Home Returns to the Online Help home page.

Show/Hide

Navigation

Show/Hide

Descriptions

Start/Stop Help

Updates

Shows and hides the Table of Contents for the

core suite of Data Integrator Technical Manuals.

Includes a master Index and Search tabs.

The Online Help window automatically updates

its information when you click an object in the

Designer window. In addition, you can click any

object in the Designer window then click this

button to show or hide an object’s local and

global variables.

When the lock appears open, each time you click

an object in the Designer window, the Online

Help information automatically updates.

When the lock button appears closed, the Online

Help window does not automatically update

when you click objects in the Designer window;

you must press F1.

6 Data Integrator Core Tutorial

Page 15

Tutorial prerequisites

This section provides a high-level description of the steps you

need to complete before you begin the tutorial exercises.

Preparation for this tutorial

Read the chapters on logging in to the Designer and the Designer

user interface in the Data Integrator Designer Guide to get an

overview of the Designer user interface including terms and

concepts relevant to this tutorial.

This tutorial also provides a high-level summary in the next

chapter, “Data Integrator Product Overview” on page 17.

Environment required

To use this tutorial, you must have Data Integrator running on

Windows NT or 2000 and a supported RDBMS (Oracle, IBM

DB2, Informix, Microsoft SQL Server, or Sybase).

Before You Begin

Tutorial prerequisites

1

You can install Data Integrator product components (Designer,

Web Administrator, Job Server, Access Server) on a single

computer or distribute them across multiple computers.

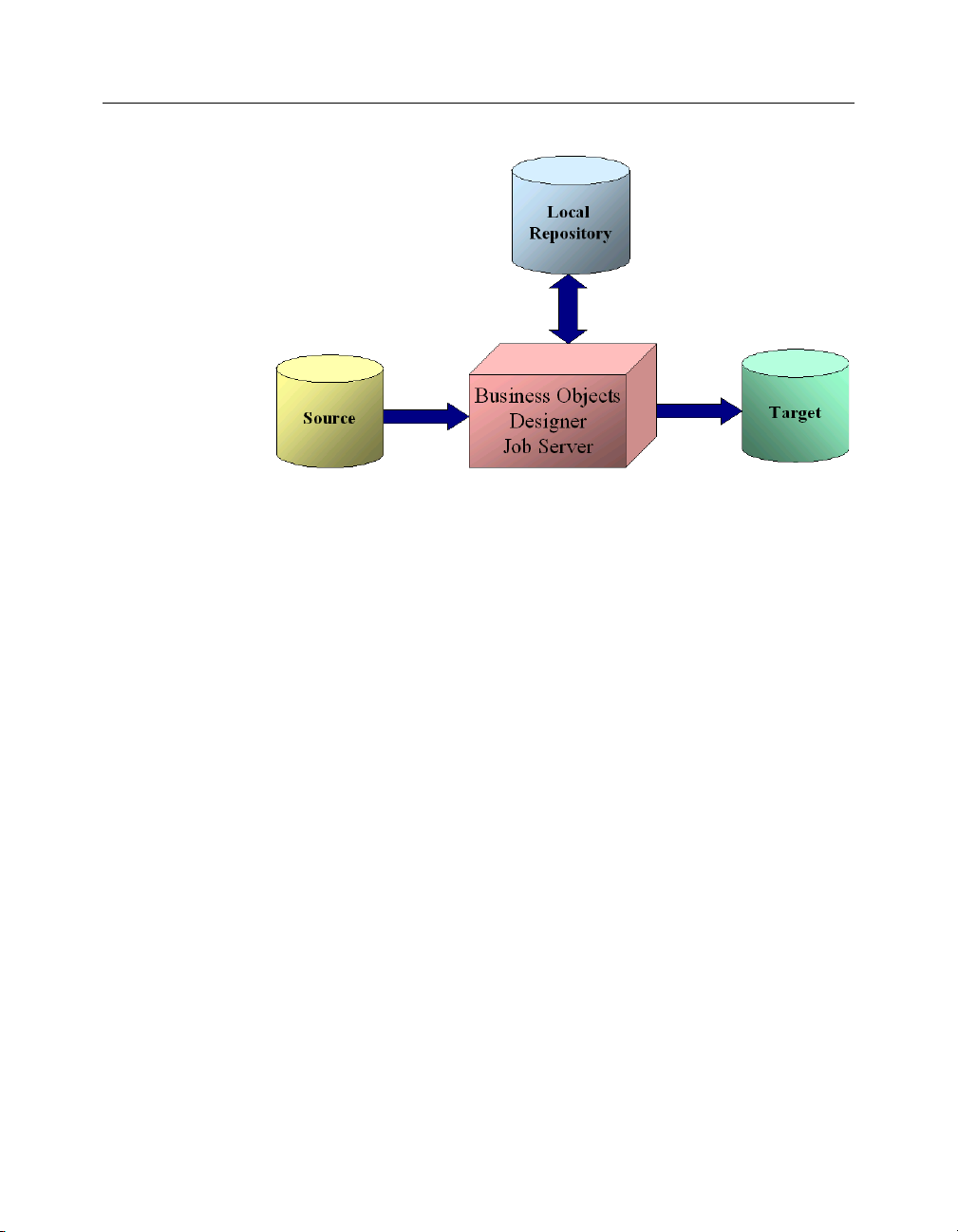

In the simplest case, all components in the following diagram

can reside on the same computer

Data Integrator Core Tutorial 7

Page 16

1

Before You Begin

Tutorial prerequisites

Tutorial setup

Ensure you have access to a RDBMS; contact your system

administrator for assistance.

To set up your computer for this tutorial you must do the

following tasks:

• Create repository, source, and target databases on an existing

RDBMS

• Install Data Integrator on your computer

• Run SQL scripts to create sample source and target tables

The following sections describe each of these tasks.

Create repository, source, and target databases on an existing RDBMS

" To create the databases

1. Log in to your RDBMS.

8 Data Integrator Core Tutorial

Page 17

Before You Begin

Tutorial prerequisites

2. (Oracle only). Optionally create a service name alias, for

example, using the Oracle Net8 Easy Config utility.

Set the protocol to TCP/IP and enter a service name; for

example, training.bobj. This can act as your connection

name.

3. Create three databases—for your repository, source

operational data store (ODS), and target. For each, you must

create a user account and password. The recommended

values used in the tutorial SQL scripts are:

Repository Source Target

User name repo ods target

Password repo ods target

4. Grant access privileges for the user account. For example for

Oracle, grant CONNECT and RESOURCE roles.

5. Make a note of the connection names, database versions,

user names and passwords in the following table. You will be

asked to refer to this information throughout the tutorial.

1

Value Repository Source Target

• Database connection name (Oracle)

or

• Database server name and

Database name (MS-SQL Server)

Database version

User name

Password

Install Data Integrator on your computer

See the Data Integrator Getting Started Guide for detailed

information about system requirements, configuration, and

running the installation program for Windows NT or 2000. See

also the previous section, “Environment required” on page 7.

Data Integrator Core Tutorial 9

Page 18

1

Before You Begin

Tutorial prerequisites

Be prepared to enter the following information when installing

Data Integrator:

• Your Windows NT or 2000 domain and user name

• Windows NT or 2000 password

• Your Windows NT or 2000 computer’s name and host ID

• Data Integrator license information

• Connection information for the local repository and Job

Server

NOTE: In the Repository Manager window, click the Local

option for the repository.

• A second Windows NT or 2000 account with rights to log in

as a service so the Job Server can run using this account

The installation process takes you into the Data Integrator Server

Manager to define a Job Server. Then, you can optionally create an

Access Server if you want to use web-based batch job

administration.

10 Data Integrator Core Tutorial

Page 19

Before You Begin

Tutorial prerequisites

The default installation creates the following entries in the

Start > Programs > Business Objects Data Integrator version

menu:

Command Function

Data Integrator

Documentation

Data Integrator Designer Opens Data Integrator Designer.

License Information Displays license information.

Metadata Reports Opens the metadata reporting tool in a

Repository Manager Opens a dialog box that you can use to

Server Manager Opens a dialog box that you can use to

Web Administrator Opens the Data Integrator Web

Provides a list of documentation PDFs

including Release Notes, Release

Summary, Technical Manuals (complete

documentation set), and Tutorial.

web browser

update repository connection

information.

configure Job Servers and Access Servers.

Administrator in a browser.

1

When you installed Data Integrator, it sets up a Windows service

for the Job Server. To verify that the service is enabled, open the

Services Control Panel and ensure that any Data Integrator

services are configured for Started Status and Automatic Startup

Type.

Run SQL scripts to create sample source and target tables

Data Integrator installation includes a batch file

(CreateTables_databasetype.bat) for each supported RDBMS

(indicated by the suffix databasetype). The batch files run SQL

scripts that create and populate tables on your source database

and create the target schema on the target database.

Data Integrator Core Tutorial 11

Page 20

1

Before You Begin

Tutorial prerequisites

" To run the scripts

1. Using Windows Explorer, locate the CreateTables batch file

for your RDBMS in your Data Integrator installation

directory in \Tutorial Files\Scripts.

2. Open the appropriate script file and edit the pertinent

connection information (and user names and passwords if

you are not using ods/ods and target/target).

The Oracle batch file contains commands of the form:

sqlplus username/password@connection

@scriptfile.sql > outputfile.out

The Microsoft SQL Server batch file contains commands of

the form:

isql /e /n /U username /S servername

/d databasename /P password

/i scriptfile.sql /o outputfile.out

(The output files provide logs that you can examine for

success or error messages.)

3. Double-click on the batch file to run the SQL scripts.

4. Use an RDBMS query tool to check your source ODS

database.

5. The following tables should exist on your source database

after you run the script. These tables should include a few

rows of sample data.

Descriptive Name Table Name in Database

Customer Customer

Material Material

Sales Order Header SalesOrder

Sales Order Line Item SalesItem

Sales Delivery Delivery

Employee Employee

Region Region

12 Data Integrator Core Tutorial

Page 21

Before You Begin

Tutorial prerequisites

6. Use an RDBMS query tool to check your target data

warehouse.

The following tables should exist on your target database after

you run the script.

Descriptive Name Table Name in Database

Sales Org Dimension salesorg_dim

Customer Dimension cust_dim

Material Dimension mtrl_dim

Time Dimension time_dim

Employee Dimension employee_dim

Sales Fact sales_fact

Recovery Status status_table

CDC Status CDC_time

1

Data Integrator Core Tutorial 13

Page 22

1

Before You Begin

Data Integrator tutorial structure

Data Integrator tutorial structure

The goal of the tutorial exercises is to demonstrate Data

Integrator features using a simplified data model. The model is a

sales data warehouse with a star schema that contains one fact

table and some dimension tables.

Sales Org

Dimension

From

flat file

Employee

Dimension

From

relational

table

Material

Dimension

From

XML file

or SAP

R/3 table

Star Schema

Sales Fact

From

relational

table

Time

Dimension

From

transform

Customer

Dimension

From

relational

table

Chapters build on jobs you create and skills learned in previous

chapters. You must complete each exercise to begin the next.

This tutorial is organized as follows.

Chapter 2, “Data Integrator Product Overview” introduces the

basic architecture and the user interface for Data Integrator.

Chapter 3, “Defining Source and Target Metadata in Data

Integrator” introduces working with the Designer. Use the

Designer to define a datastore and a file format, then import

metadata to the object library. After completing this chapter, you

will have completed the preliminary work required to define

data movement specifications for flat-file data.

14 Data Integrator Core Tutorial

Page 23

Before You Begin

Data Integrator tutorial structure

Chapter 4, “Populating the SalesOrg Dimension from a Flat File”

introduces basic data flows, query transforms, and source and

target tables. The exercise populates the sales organization

dimension table from flat-file data.

Chapter 5, “Populating the Time Dimension Using a Transform”

introduces Data Integrator functions. This exercise creates a data

flow for populating the time dimension table.

Chapter 6, “Populating the Customer Dimension from a

Relational Table” introduces data extraction from relational

tables. This exercise defines a job that populates the customer

dimension table.

Chapter 7, “Populating the Material Dimension from an XML

File” introduces data extraction from nested sources. This

exercise defines a job that populates the material dimension

table.

Chapter 8, “Populating the Sales Fact Table from Relational

Tables” continues data extraction from relational tables and

introduces joins and the lookup function. The exercise populates

the sales fact table.

1

Chapter 9, “Changed Data Capture” introduces a basic approach

to changed data capture. The exercise uses variables, parameters,

functions, and scripts.

Chapter 10, “Recovery Mechanisms” presents techniques for

recovering from incomplete data loads.

Chapter 11, “Multiuser Development” presents the use of a

central repository for setting up a multiuser development

environment.

Chapter 12, “Extracting SAP R/3 Data” provides optional

exercises on extracting data from an SAP R/3 source.

Chapter 13, “Running a Real-time Job in Test Mode” provides

optional exercises on running a real-time job in test mode.

Data Integrator Core Tutorial 15

Page 24

1

Before You Begin

To exit the tutorial

To exit the tutorial

You can exit the tutorial at any point after creating a sample

project (see “Adding a new project” on page 53).

" To exit the tutorial:

1. From the Project menu, click Exit.

If any work has not been saved, you are prompted to save

your work.

2. Click Ye s or No.

" To resume the tutorial:

1. Open Data Integrator and log in to the repository in which

you saved your work.

2. The Designer window opens.

3. From the Project menu, click Open.

4. Click the name of the project you want to work with, then

click

The Designer window opens with the project and the objects

within it displayed in the project area.

16 Data Integrator Core Tutorial

Open.

Page 25

Data Integrator Core Tutorial

Data Integrator Product

2

Overview

This chapter provides an overview of Data Integrator. It

introduces the product architecture and the Data Integrator

Designer.

This chapter contains the following sections:

• Introduction

• The Data Integrator Designer window

• Data Integrator objects

• New terms

• Chapter summary and what to do next

Data Integrator Core Tutorial 17

Page 26

2

Data Integrator Product Overview

Introduction

Introduction

This section gives you a brief introduction to Data Integrator.

What does Data Integrator do?

Data Integrator is a data movement and integration tool. One of

its fundamental capabilities is extracting, transforming, and

loading (ETL) data from heterogeneous sources into a target

database or data warehouse. You create applications (jobs) that

specify data mappings and transformations by using the Data

Integrator Designer.

Data Integrator real-time interfaces provide additional support

for real-time data movement and access. Data Integrator

real-time reacts immediately to messages as they are sent,

performing predefined operations with message content. Data

Integrator real-time components provide services to web

applications and other client applications.

18 Data Integrator Core Tutorial

Page 27

Data Integrator components

The Data Integrator product consists of several components

including:

• Data Integrator Designer

The Designer allows you to create, test, and execute jobs that

populate a data warehouse. It is a development tool with a

unique graphical user interface. It enables developers to

create objects, then drag, drop, and configure them by

selecting icons in a source-to-target flow diagram. It allows

you to define data mappings, transformations, and control

logic

. Use the Designer to create applications specifying work

flows (job execution definitions) and data flows (data

transformation definitions).

• Data Integrator Job Server

The Job Server is an application that launches the Data

Integrator processing engine and serves as an interface to the

engine and other components in the Data Integrator suite.

Data Integrator Product Overview

Introduction

2

• Data Integrator engine

The Data Integrator engine executes individual jobs defined

in the application you create using the Designer. When you

start your application, the Data Integrator Job Server

launches enough engines to effectively accomplish the

defined tasks.

• Data Integrator Repository

The repository is a database that stores Designer predefined

system objects and user-defined objects including source and

target metadata and transformation rules. In addition to the

local repository used by the Designer and Job Server, you can

optionally establish a central repository for object sharing

and version control.

Data Integrator Core Tutorial 19

Page 28

2

Data Integrator Product Overview

Introduction

The Designer handles all repository transactions. Direct

manipulation of the repository is unnecessary except for:

! Setup before installing Data Integrator

You must create space for a repository within your

RDBMS before installing Data Integrator.

! Security administration

Data Integrator uses your security at the network and

RDBMS levels.

! Backup and recovery

You can export your repository to a file. Additionally, you

should regularly back up the database where the

repository is stored.

• Data Integrator Access Server

The Access Server passes messages between web applications

and the Data Integrator Job Server and engines. It provides a

reliable and scalable interface for request-response

processing.

• Data Integrator Web Administrator

The Web Administrator provides browser-based

administration of Data Integrator resources, including:

! Scheduling, monitoring, and executing batch jobs

! Configuring, starting, and stopping real-time services

! Configuring Job Server, Access Server, and repository

! Configuring and managing adapters

! Managing users

! Publishing batch jobs and real-time services via Web

20 Data Integrator Core Tutorial

usage

services

Page 29

Data Integrator Product Overview

Introduction

The following diagram illustrates Data Integrator product

components and relationships.

2

Central Repository

(shared, optional)

Heterogeneous

Data Sources

Designer and

Local Admin

Local Repository

(user-specific)

Job Server and

Engine

Data Warehouse

(Target)

How do you use Data Integrator?

You use Data Integrator to design, produce, and run data

movement applications.

Using the Designer, you can build work flows and data flows that

specify data extraction, transformation, and loading processes.

In Data Integrator real-time, you have the added capability to

build real-time data flows that support e-business transactions.

Web-based

Administration

Access Server

Web

Applications

You create jobs to contain, organize, and run your flows. You

create projects to organize the jobs.

Refine and build on your design until you have created a

well-tested, production-quality application. In Data Integrator,

you can set applications to run in test mode or on a specific

schedule. Using Data Integrator real-time, you can run

applications in real time so they immediately respond to

web-based client requests.

Data Integrator Core Tutorial 21

Page 30

2

Data Integrator Product Overview

Introduction

Data Integrator system configurations

You can configure Data Integrator in various ways. The following

diagram illustrates one possible system configuration.

Designer

Job Server

Access Server

(Windows NT

or 2000)

TCP/IP Network

Sources

Job Server

(UNIX)

Data Warehouse

ERP Data

ERP

Application

Server

When integrating Data Integrator into your existing

environment, consider:

• The servers shown in the diagram can be separate physical

computers, or they can be installed on a single computer.

• For peak performance, install and create the Data Integrator

local repository on either the same computer as the Data

Integrator Job Server or on the same computer as the target

data warehouse.

In either of the previous configurations, the computer

should be on the same LAN segment as the rest of the Data

Integrator components.

22 Data Integrator Core Tutorial

Page 31

As shown in the diagram, most Data Integrator

components—the Designer, Job Server, and Access Server—can

run on the same Windows NT or 2000 system, or you can install

the Job Server on a UNIX system running Hewlett Packard

HP-UX, Sun Solaris, or IBM AIX.

Windows implementation

You can configure a Windows NT or 2000 system as either a

server or a workstation. A large-memory, multiprocessor system

is ideal because the multithreading, pipelining, and parallel

work flow execution features in Data Integrator take full

advantage of such a system.

You can create your target data warehouse on a database server

that may or may not be a separate physical computer.

You can use a shared disk or FTP to transfer data between your

source system and the Data Integrator Job Server.

Data Integrator Product Overview

Introduction

2

UNIX implementation

You can install the Data Integrator Job Server on a UNIX system

running Hewlett Packard HP-UX, Sun Solaris, or IBM AIX. You

can also configure the Job Server to start automatically when you

restart the computer.

Data Integrator Core Tutorial 23

Page 32

2

Data Integrator Product Overview

The Data Integrator Designer window

The Data Integrator Designer window

The following illustration shows the key areas of the Data

Integrator Designer window.

Workspace

Project area

Tool palette

Local object

library

The key areas of the Data Integrator application window are:

• Project area — Contains the current project (and the job(s)

and other objects within it) available to you at a given time.

In Data Integrator, all entities you create, modify, or work

with are objects.

• Workspace — The area of the application window in which

you define, display, and modify objects.

• Local object library — Provides access to local repository

objects including built-in system objects, such as transforms,

and the objects you build and save, such as jobs and data

flows.

24 Data Integrator Core Tutorial

Page 33

Data Integrator Product Overview

The Data Integrator Designer window

• Tool palette — Buttons on the tool palette enable you to add

new objects to the workspace.

2

Data Integrator Core Tutorial 25

Page 34

2

Data Integrator Product Overview

Data Integrator objects

Data Integrator objects

In Data Integrator, all entities you add, define, modify, or work

with are objects. Objects have:

Options Control the object. For example, to set up a

connection to a database, defining the database

name would be an option for the connection.

Properties Describe the object. For example, the name and

creation date. Attributes are properties used to

locate and organize objects.

Classes Type of object. You can copy reusable objects

from the object library. You cannot copy

single-use objects.

The following diagram shows transform objects in the Data

Integrator object library.

Object

list

To resize the object library, click and drag it until you see the

border size you want, then release. When you widen the object

library, the name of each object is visible next to its icon.

Object library tabs

26 Data Integrator Core Tutorial

Page 35

Object hierarchy

The following illustration shows the hierarchical relationships

for the key object types within Data Integrator.

Data Integrator Product Overview

Data Integrator objects

2

Key:

Data Integrator Core Tutorial 27

Page 36

2

Data Integrator Product Overview

Data Integrator objects

In the repository, the designer groups objects hierarchically from

a project, to jobs, to optional work flows, to data flows. In jobs,

work flows define a sequence of processing steps, and data flows

transform data from source(s) to target(s).

Projects and jobs

A project is the highest-level object in the Data Integrator

Designer window. Projects provide you with a way to organize

the other objects you create in Data Integrator. Only one project

is open at a time (where “open” means “visible in the project

area”).

A job is the smallest unit of work that you can schedule

independently for execution.

Work flows and data flows

Jobs are composed of work flows and/or data flows:

•A work flow is the incorporation of several data flows into a

coherent flow of work for an entire job.

•A data flow is the process by which source data is transformed

into target data.

A work flow orders data flows and operations that support them;

a work flow also defines the interdependencies between data

flows. For example, if one target table depends on values from

other tables, use the work flow to specify the order in which you

want Data Integrator to populate the tables. Also use work flows

to define strategies for handling errors that occur during project

execution. You can also use work flows to define conditions for

running sections of a project.

The following diagram illustrates a typical work flow.

28 Data Integrator Core Tutorial

Page 37

Work flow

Data Integrator Product Overview

Data Integrator objects

2

Control

Operations

A data flow defines the basic task that Data Integrator

accomplishes, which involves moving data from one or more

sources to one or more target tables or files. You define data

flows by identifying the sources from which to extract data, the

transformations the data should undergo, and targets.

Data flow

Source(s)

Object-naming conventions

Data Integrator recommends that you follow a consistent

naming convention to facilitate object identification. For

example, Rapid Marts use standards that are documented in the

Business Objects Component Development Guide. Here are some

examples:

Data Flow

Data

Transformation(s)

Data Flow

Control

Operations

Target(s)

Prefix Suffix Object Example

JOB Job JOB_SalesOrg

WF Work flow WF_SalesOrg

DF Data flow DF_Currency

DS Datastore ODS_ds

Data Integrator Core Tutorial 29

Page 38

2

Data Integrator Product Overview

New terms

New terms

Review the following terms.

Term Description

Attribute Property that can be used as a constraint for locating

objects.

Data flow Contains steps to define how source data becomes target

data. Called by a work flow or job.

Datastore Logical channel that connects Data Integrator to source

and target databases.

Job The smallest unit of work that you can schedule

independently for execution. A job is a special work flow

that cannot be called by another work flow or job.

Metadata Data that describes the objects maintained by Data

Integrator.

Object Any project, job, work flow, data flow, datastore, file

format, message, custom function, or transform created,

modified, or used in Data Integrator.

Object library Part of the Designer interface that represents a “window”

into the local repository and provides access to reusable

objects.

Option A choice in a dialog box that controls how an object

functions.

Project Grouping of jobs for the purpose of organized storage.

The Designer can open only one project at a time.

Property Characteristic used to define the state, appearance, or

value of an object; for example, the name of the object or

the date it was created.

Repository A database that stores Designer predefined system objects

and user-defined objects including source and target

metadata and transformation rules. Can be local or

central (shared).

Source Table, file, or legacy system from which Data Integrator

reads data.

Target Table or file to which Data Integrator loads data.

Work flow Contains steps to define the order of job execution. Calls

a data flow to manipulate data.

30 Data Integrator Core Tutorial

Page 39

Data Integrator Product Overview

Chapter summary and what to do next

Chapter summary and what to do next

This chapter has given you a short overview of the Data

Integrator product and terminology. For more information

about these topics, see the first few chapters of the Data Integrator

Getting Started Guide and Data Integrator Designer Guide.

In the next chapter, you will begin creating objects using the

Data Integrator Designer.

2

Data Integrator Core Tutorial 31

Page 40

2

Data Integrator Product Overview

Chapter summary and what to do next

32 Data Integrator Core Tutorial

Page 41

Data Integrator Core Tutorial

Defining Source and Target

3

Metadata in Data Integrator

In this chapter you will set up logical connections between Data

Integrator, a flat-file source, and a target data warehouse. You

will also create and import objects into the local repository.

Storing connection metadata in the repository enables you to

work within Data Integrator to manage tables that are stored in

various environments.

The tasks in this chapter include:

• Logging in to the repository

• Defining a datastore

• Importing metadata

• Defining a file format

Data Integrator Core Tutorial 33

Page 42

3

Defining Source and Target Metadata in Data Integrator

Logging in to the repository

Logging in to the repository

When you use Data Integrator, you save your work in the local

repository. So, when you open Data Integrator, a login window

for the local repository opens.

" To log in to Data Integrator

1. From the Start menu, click Programs > Business Objects Data

Integrator version > Data Integrator Designer

As Data Integrator starts, a repository login screen appears. A

sample login window (for the Oracle environment) appears

as follows.

.

2. Supply the appropriate login information for your

repository, then click

Data Integrator connects to the specified repository, then

opens the Designer.

See “Create repository, source, and target databases on an

existing RDBMS” on page 8 for login information.

34 Data Integrator Core Tutorial

OK.

Page 43

Defining Source and Target Metadata in Data Integrator

Logging in to the repository

In the next section you will define datastores (connections) for

your source and target.

3

Data Integrator Core Tutorial 35

Page 44

3

Defining Source and Target Metadata in Data Integrator

Defining a datastore

Defining a datastore

Datastores:

• Provide a logical channel (connection) to a database

• Must be specified for each source and target database

• Are used to import metadata for source and target databases

into the repository.

• Are used by Data Integrator to read data from source tables

and load data to target tables

The databases to which Data Integrator datastores can connect

include:

•Oracle

•IBM DB2

•Informix

• Microsoft SQL Server

• Sybase

•ODBC

• DETAIL (for mainframe sources)

Metadata consists of:

! Database tables

! RDBMS functions

! Application-specific data structures

36 Data Integrator Core Tutorial

•Table name

• Column names

• Column data types

• Primary key columns

• Table attributes

Page 45

Defining Source and Target Metadata in Data Integrator

Connection metadata is defined in the object library as

datastores (for tables) and file formats (for flat files).

The next task describes how to define datastores using the

Designer. Note that while you are designating the datastores as

sources or targets, datastores only function as connections. You

will define the actual source and target objects when you define

data flows later in the tutorial.

" To define a datastore for the source (ODS) database

1. From the Datastores tab of the object library, right-click in

the blank area and click

New.

Defining a datastore

3

TIP: To find the Datastores tab, roll your cursor over each

object library tab and read the text. You can also expand the

Object Library window by clicking and dragging it or

double-clicking it. Tab names display when you expand the

Object Library window.

Data Integrator Core Tutorial 37

Page 46

3

Defining Source and Target Metadata in Data Integrator

Defining a datastore

The Datastore Editor window opens. A sample Datastore

Editor window for the Oracle environment appears as

follows:

2. In the Name box, type ODS_DS.

This datastore name labels the connection to the database

you will use as a source. The datastore name will appear in

the local repository. When you create your own

projects/applications, remember to give your objects

meaningful names.

3. In the Application Type box, click Custom.

38 Data Integrator Core Tutorial

Page 47

Defining Source and Target Metadata in Data Integrator

Defining a datastore

4. In the Database Type box, click the option that corresponds

to the database software being used to store the source data.

3

The remainder of the boxes on the

Administrator

window depend on the Database Type

Datastore Web

selected.

5. Accept the default row commit size of 1000 for all databases.

The commit size option refers to batch array size (number of

rows).

6. The following table lists some of the options for the

Datastore Editor depending on the RDBMS you are using.

The options marked skip are not used in this tutorial. Enter

the information you recorded in “Create repository, source,

and target databases on an existing RDBMS” on page 8.

Oracle DB2 Informix SQL Server Sybase

Datastore name Datastore name Datastore name Datastore name Datastore name

Database type Database type Database type Database type Database type

CDC DB2 data source Informix data

source

Database

connection

name

Oracle version DB2 version Informix

version

Bulk loader

directory (skip)

Overflow file

directory (skip)

User name User name User name User name User name

Password Password Password Password Password

LiveLoad (skip) LiveLoad (skip) LiveLoad (skip) LiveLoad (skip) LiveLoad (skip)

Bulk loader

directory (skip)

Overflow file

directory (skip)

Bulk loader

directory (skip)

Overflow file

directory (skip)

Database server

name

Database name Database name

SQL server

version

Overflow file

directory (skip)

Database server

name

Sybase version

Overflow file

directory (skip)

7. Click OK.

Data Integrator saves a datastore for your source in the

repository.

Data Integrator Core Tutorial 39

Page 48

3

Defining Source and Target Metadata in Data Integrator

Defining a datastore

" To define a datastore for the target database

Define a datastore for the target database using the same

procedure as for the source (ODS) database.

•Use TARGET_DS for the datastore name.

• Use the information you recorded in “Create repository,

source, and target databases on an existing RDBMS” on

page 8.

40 Data Integrator Core Tutorial

Page 49

Importing metadata

With Data Integrator, you can import metadata for individual

tables using a datastore. You can import metadata by:

•Browsing

•Name

• Searching

The following procedure describes how to import by browsing.

" To import metadata for ODS source tables into Data Integrator

1. In the Datastores tab, right-click the ODS_DS datastore and

Open.

click

The names of all the tables in the database defined by the

datastore named ODS_DS display in a window in the

workspace.

Defining Source and Target Metadata in Data Integrator

Importing metadata

3

2. Move the cursor over the right edge of the Metadata column

heading until it changes to a resize cursor.

3. Double-click the column separator to automatically resize

the column.

Data Integrator Core Tutorial 41

Page 50

3

Defining Source and Target Metadata in Data Integrator

Importing metadata

4. Import the following tables by right-clicking each table name

and clicking

Import. Alternatively, because the tables are

grouped together, click the first name, Shift-click the last, and

import them together. (Use Ctrl-click for nonconsecutive

entries.)

ods.ods_customer

ods.ods_material

ods.ods_salesorder

ods.ods_salesitem

ods.ods_delivery

ods.ods_employee

ods.ods_region

NOTE: In MS SQL Server, the owner prefix might be dbo

instead of ODS.

Data Integrator imports the metadata for each table into the

local repository.

5. Expand the ODS_DS datastore’s Tables container in the

object library and verify the tables are listed in the repository.

42 Data Integrator Core Tutorial

Page 51

Defining Source and Target Metadata in Data Integrator

Importing metadata

" To import metadata for target tables into Data Integrator

1. Open the TARGET_DS datastore.

2. Import the following tables by right-clicking each table name

and clicking

them together.

target.status_table

target.cust_dim

target.employee_dim

target.mtrl_dim

target.sales_fact

target.salesorg_dim

target.time_dim

target.CDC_time

NOTE: In MS SQL Server, the owner prefix will be dbo

instead of TARGET.

Import. Alternatively, Use Ctrl-click and import

3

Data Integrator imports the metadata for each table into the

local repository.

Data Integrator Core Tutorial 43

Page 52

3

Defining Source and Target Metadata in Data Integrator

Importing metadata

3. Expand the Target_DS datastore’s Tables container in the

object library and verify the tables are listed in the repository.

44 Data Integrator Core Tutorial

Page 53

Defining Source and Target Metadata in Data Integrator

Defining a file format

If the source or target RDBMS includes data stored in flat files,

you must define file formats in Data Integrator. File formats are a

set of properties that describe the structure of a flat file.

Data Integrator includes a file format editor. Use it to define flat

file formats. The editor supports delimited and fixed-width

formats.

You can specify file formats for one file or a group of files. You

can define flat files from scratch or by import and modify an

existing flat file. Either way, Data Integrator saves a connection to

the file or file group.

NOTE: Data Integrator also includes a file format

(Transport_Format) that you can use to read flat files in SAP R/3.

In the next chapter you will use a flat file as your source data.

Therefore, you must create a file format and connection to the

file now.

Defining a file format

3

" To define a file format

1. In the object library, click the File Formats tab, right-click in a

blank area of the object library, and click

2. The file format editor opens.

3. Under General, leave Ty p e as Delimited. Change the Name to

Format_SalesOrg.

4.

Under Data Files, click the Files folder icon and navigate in

your Data Integrator install directory to \Tutorial

Files\sales_org.txt. Click

displays sample data from the sales_org.txt file in the (lower

right) Data Preview pane.

New > File Format.

Open. The file format editor

Data Integrator Core Tutorial 45

Page 54

3

Defining Source and Target Metadata in Data Integrator

Defining a file format

5. Under Default Format, change Date to ddmmyyyy.

The source data contains dates with a two-digit day number

followed by a two-digit month number, a four-digit year

(ddmmyyyy), and no time value. (Note that the sample dates

do not contain a delimiter between values. Using an

unsupported format string such as ddmmJJJJ will result in

incorrect dates, and no error message will appear.)

6. The first row contains the names of the column headings.

Under

Input/Output, change Skip row header to Ye s .

Data Integrator removes the first row and uses these column

heading values for the field names.

7. In the upper-right Column Attributes pane, click DateOpen

and change the data type to

lengths should appear as follows:

Field Name Data Type Field Size

SalesOffice Int

Region VarChar 2

DateOpen Date

Country VarChar 7

Date. The column types and

46 Data Integrator Core Tutorial

Page 55

Defining Source and Target Metadata in Data Integrator

8. Click Save & Close.

Defining a file format

3

Data Integrator Core Tutorial 47

Page 56

3

Defining Source and Target Metadata in Data Integrator

New terms

New terms

The terms examined in this chapter included:

Te r m M e a n i n g

Datastore Connection from Data Integrator to tables in source

or target databases. Stored as an object in the

repository.

Metadata Data that describes objects maintained by Data

Integrator. Metadata that Data Integrator stores in its

local repository includes:

•Table name

• Column name

• Column data types

• Primary key columns

• Table attributes

•RDBMS functions

Object library The GUI part of the Designer representing the local

repository.

File format A set of properties that define the table structure for a

flat file. Stored as an object in the repository.

48 Data Integrator Core Tutorial

Page 57

Defining Source and Target Metadata in Data Integrator

Chapter summary and what to do next

Chapter summary and what to do next

At this point, you have finished all the preparatory work to

define data movement specifications for a flat-file data source to

a target data warehouse. In this chapter you have:

• Defined a datastore from Data Integrator to your target data

warehouse

• Imported metadata from target tables into the local

repository so that you can use Data Integrator Designer to

work with these tables

• Defined file formats and a connection to flat-file source data

You are now ready to create a new project and define jobs that

will populate the target tables with source data. You will do that

for the sales organization dimension table in Chapter 4,

“Populating the SalesOrg Dimension from a Flat File”.

Sales Org

Dimension

3

You can now exit Data Integrator or go on to the next chapter.

The information you have created in this chapter has been saved

in the local repository and will be automatically available to you

the next time you use Data Integrator.

If you need more information about the topics covered in this

chapter, see the Data Integrator Designer Guide.

Data Integrator Core Tutorial 49

Page 58

3

Defining Source and Target Metadata in Data Integrator

Chapter summary and what to do next

50 Data Integrator Core Tutorial

Page 59

Data Integrator Core Tutorial

Populating the SalesOrg

4

Dimension from a Flat File

In this chapter, you will populate the sales organization

Sales Org

Dimension

dimension table in your target data warehouse with data from a

flat file called Format_SalesOrg.

The concepts and tasks in this chapter include:

• Objects and their hierarchical relationships

• Adding a new project

• Adding a job

• Adding a work flow

• About data flows

• Saving the project

• Executing the job

• About deleting objects

• New terms

• Chapter summary and what to do next

Data Integrator Core Tutorial 51

Page 60

4

Populating the SalesOrg Dimension from a Flat File

Objects and their hierarchical relationships

Objects and their hierarchical relationships

Everything in Data Integrator is an object. The key objects

involved in data movement activities (like projects, jobs, work

flows, and data flows) display in the Data Integrator Designer

project area according to their relationship in the object

hierarchy.

The following figure shows a display of the types of objects you

will be creating while you are working in this chapter.

Project

Job

Workflow

Dataflow

Object hierarchies are displayed in the project area of the

Designer.

52 Data Integrator Core Tutorial

Page 61

Adding a new project

Projects group and organize related objects. Projects display in

the project area of the Designer and can contain any number of

jobs, work flows, and data flows.

" To add a new project

1. In the Data Integrator Designer, from the Project menu click

New > Project.

2. Name the project Class_Exercises.

3. Click Create.

The project name appears as the only object in the project area of

the Designer.

Next, you will define the job that will be used to extract the

information from the flat-file source.

Populating the SalesOrg Dimension from a Flat File

Adding a new project

4

Data Integrator Core Tutorial 53

Page 62

4

Populating the SalesOrg Dimension from a Flat File

Adding a job

Adding a job

A job is a reusable object. It is also the second level in the project

hierarchy. It contains work flows (which contain the order of

steps to be executed) and data flows (which contain data

movement instructions). You can execute jobs manually or as

scheduled.

In this exercise you will define a job called JOB_SalesOrg.

" To add a new batch job

1. Right-click in the project area and click New Batch Job.

2. Right-click the new job and click Rename. Alternatively,

left-click the job twice (slowly) to make the name editable.

3. Type JOB_SalesOrg.

4. Left-click or press Enter.

The job appears in the project hierarchy under Class_Exercises

and in the project area of the object library.

54 Data Integrator Core Tutorial

Page 63

Adding a work flow

A work flow is a reusable object. It executes only within a Job.

Use work flows to:

•Call data flows

• Call another work flow

• Define the order of steps to be executed in your job

• Pass parameters to and from data flows

• Define conditions for executing sections of the project

• Specify how to handle errors that occur during execution

• Define conditions for executing sections of the project

Work flows are optional.

The Data Integrator objects you can use to create work flows

appear on the tool palette:

Populating the SalesOrg Dimension from a Flat File

Adding a work flow

4

Button Component Programming Analog

Work flow Procedure

Data Flow Declarative SQL select statement

Script Subset of lines in a procedure

Conditional If/then/else logic

Try Try block indicator

Catch Try block terminator and exception handler

Data Integrator Core Tutorial 55

Page 64

4

Populating the SalesOrg Dimension from a Flat File

Adding a work flow

" To ad d a wo r k f l o w

1. With JOB_SalesOrg selected in the project area, click the

work flow button on the tool palette.

2. Click the blank workspace area.

A work flow icon appears in the workspace. The work flow

also appears in the project area on the left under the job

name (expand the job to view).

NOTE: You can place a work flow anywhere in the

workspace, but because flows are best viewed from left to

right and top to bottom, place it near the top left corner.

3. Change the name of the work flow to WF_SalesOrg.

4. Click the name of the work flow.

An empty view for the work flow appears in the workspace.

You will use this view to define the elements of the work

flow. Notice the title bar changes to display the name of the

work flow.

56 Data Integrator Core Tutorial

Page 65

About data flows

A data flow defines the flow of data from sources to targets. It is

used to:

• Identify the source data you want to read

• Define the transformations you want to perform on the data

• Identify the target table to which you want to load data

A data flow is a reusable object. It is always called from a work

flow or a job.

The first data flow you need to create for this tutorial reads sales

organization data from a flat file and loads the data into the

sales organization dimension table in the target data warehouse.

Adding a data flow

The following procedure creates a data flow named DF_SalesOrg

inside the work flow WF_SalesOrg.

Populating the SalesOrg Dimension from a Flat File

About data flows

4

" To ad d a dat a f l ow

1. Make sure the work flow window is open in the workspace.

If it is not, click the WF_SalesOrg work flow in the project

area.

2. Click the data flow button on the tool palette.

3. Click the workspace.

A representation of a data flow appears in the workspace. The

data flow also appears in the project area.

4. Change the name of the data flow to DF_SalesOrg.

Data Integrator Core Tutorial 57

Page 66

4

Populating the SalesOrg Dimension from a Flat File

About data flows

Notice the project, job, work flow, and data flow objects

display in hierarchical form in both the project area and the

object library. To navigate to these levels, click their names in

the project area.

Data flow in the

workspace

Data flow in the

project area

Data flow in the local

object library

5. Click the data flow name.

A definition area or grid for the data flow appears in the

workspace. This area will be used to define the data flow as

described in the next section.

58 Data Integrator Core Tutorial

Page 67

Defining the data flow

Inside the data flow, you must specify the instructions to

transform source data into the form you want for the target table.

In this case, you will define the data flow instructions for

building the sales organization dimension table using the

following objects:

• An object representing the source file

• An object defining a query transform (or query). A query

transform maps columns from source to target. With a query,

you can:

! Select row sets

! Create joins

! Group and order by data values

! Create data set filters

! Execute functions

Populating the SalesOrg Dimension from a Flat File

About data flows

4

• An object representing the target table into which the data

loads

The next three exercises guide you through the steps necessary to

define a dataflow’s content:

1. Add objects to the data flow.

2. Connect them in the order that data will flow through them.

3. Define the query that maps the source columns to the target

columns.

" To add objects to a data flow

1. Verify the DF_SalesOrg data flow is open in the workspace.

In the object library, click the

Formats tab.

Data Integrator Core Tutorial 59

Page 68

4

Populating the SalesOrg Dimension from a Flat File

About data flows

2. The source for this data flow will be the flat file

Format_SalesOrg. Click and drag the Format_SalesOrg object

to the workspace.

3. Drop the Format_SalesOrg object on the left side of the

workspace to leave room for more objects on the right.

4. Click Make Source from the shortcut menu that appears

when you drop the object.

5. Click the query button on the tool palette.

6. Click to the right of the source file in the workspace.

7. In the object library, click the Datastores tab and expand the

datastore named Target_DS.

8. The target for this data flow will be the table

SALESORG_DIM. Click and drag the SALESORG_DIM object

to the workspace and drop it to the right of the query.

9. Click Make Target from the shortcut menu that appears

when you drop the table. Data Integrator creates a table with

the same schema as the query output schema.

All the elements necessary to create the sales organization

dimension table are now in the workspace. In the next section,

you will connect the steps in the correct order.

" To define the order of steps in a data flow

You now need to define the sequence for the data flow. The steps

execute in left-to-right order.

1. Click the square on the right edge of the source file and drag

it to the triangle on the left edge of the query transform.

60 Data Integrator Core Tutorial

Page 69

Populating the SalesOrg Dimension from a Flat File

About data flows

2. Use the same drag technique to connect the query transform

to the target table.

The next section introduces the query transform and the query

transform editor.

" To define a query transform that maps a source to a target

1. Click the name of the query in the project area or in the

workspace.

The query editor opens showing the source schema, the

target schema (which has automatically been copied from

the target table metadata to the output pane of the query),

and property sheets for defining the query.

4

There are four columns in the source schema: Sales_Office,

Region, DateOpen, and Country. You will specify that three

of them (Sales_Office, Region, and DateOpen) be copied to

the target table.

2. Map the source columns to target columns by dragging and

dropping each source column name onto the corresponding

column for the target schema. From the shortcut menu that

appears, click

imported from the target. Notice that the column icon next