BusinessObjects Data Integrator

Release Notes

Data Integrator 11.7.3

October 31, 2007

Copyright

© 2007 Business Objects. All rights reserved. Business Objects owns the following

U.S. patents, which may cover products that are offered and licensed by Business

Objects: 5,555,403; 6,247,008; 6,289,352; 6,490,593; 6,578,027; 6,768,986;

6,772,409; 6,831,668; 6,882,998 and 7,139,766. Business Objects and the Business

Objects logo, BusinessObjects, Crystal Reports, Crystal Xcelsius, Crystal Decisions,

Intelligent Question, Desktop Intelligence, Crystal Enterprise, Crystal Analysis,

Web Intelligence, RapidMarts, and BusinessQuery are trademarks or registered

trademarks of Business Objects in the United States and/or other countries. All

other names mentioned herein may be trademarks of their respective owners.

Third-party Contributors

Business Objects products in this release may contain redistributions of software

licensed from third-party contributors. Some of these individual components may

also be available under alternative licenses. A partial listing of third-party

contributors that have requested or permitted acknowledgments, as well as required

notices, can be found at: http://www.businessobjects.com/thirdparty

Contents

Chapter 1: About these Release Notes

Chapter 2: Supported platforms and versions
  Compatibility update
    Supported products
    Unsupported products
  Limitations update

Chapter 3: Migration considerations
  Logs in the Designer
  Data flow cache type
  Pageable cache for memory-intensive data flows
  Embedded data flows
  Oracle repository upgrade
  Solaris and AIX platforms
  Data quality
  To migrate your data flow to use the new Data Quality transforms
  Distributed data flows
  XML Schema enhancement
  Password management
  Web applications
  Web services

Chapter 4: Deployment on UNIX

Chapter 5: Adapter interfaces
  JMS adapter interface
  Salesforce.com adapter interface

Chapter 6: Documentation updates
  New features in version 11.7.3
    HP Neoview database support
    Inxight integration for reading unstructured text
      base64_encode
      base64_decode
    Job Server enhancements
  Getting Started Guide
    Citrix Presentation Server version 4.0
    AIX user resource limits
  Designer Guide
    Changed-data capture for Microsoft SQL Server 2005
    To configure publications for Microsoft SQL Server 2005 CDC
    Creating Microsoft Excel workbook file formats on UNIX platforms
    To create a Microsoft Excel workbook file format on UNIX
  Reference Guide
    Maximum number of loaders
    Reserved words
    Microsoft Excel workbook format for UNIX
  Salesforce.com Adapter Interface Guide

Chapter 7: Resolved issues

Chapter 8: Known issues

Chapter 9: Copyright information
  SNMP copyright information
  IBM ICU copyright information

About these Release Notes

Welcome to BusinessObjects Data Integrator XI Release 2 (XI R2) Accelerated
version 11.7.3.0. This version is a functional release that includes the
following new features:

• HP Neoview database support

• Inxight integration for reading unstructured text

• Job Server enhancements that now allow up to 50 Designer clients with no
compromise in response time

See the Documentation updates chapter for descriptions of how to use these new
features.

Please read this entire document before installing your Business Objects

software. It contains important information about this product release including

installation notes, details regarding the latest resolved and known issues,

and important information for existing customers.

To obtain the latest version of Data Integrator documentation, including the
most up-to-date version of these Release Notes, visit the Customer Support
documentation download site (http://support.businessobjects.com/documentation/)
and follow the appropriate product guide links.

Supported platforms and versions

This section describes changes in compatibility between Data Integrator and

other applications. It also summarizes limitations associated with this version

of Data Integrator.

For complete compatibility and availability details, see the Supported

Platforms documentation on the Business Objects Customer Assurance Web

site at:

http://support.businessobjects.com/documentation

Compatibility update

This section describes:

• Supported products

• Unsupported products


Supported products

Data Integrator now supports the following products as of this release.

• SAP BI 7

• Data Quality 11.7 and 11.7.1

• Microsoft Internet Explorer 7

• Sybase ASE 12.5 on Solaris and AIX

• HP Neoview 2.2 native support on Windows and Linux

• IBM DB2 9.1

• Netezza 3.1.4

• Oracle E-Business Suite Release 12

• Sybase IQ 12.7 (source and target)

• Teradata V2R6.2 on Windows and Linux

• Impact analysis will interoperate with BusinessObjects XI R2 SP3

universes, Web Intelligence documents, Desktop Intelligence documents,

and Business Views

• Citrix Presentation Server version 4.0. For more information, see the
documentation for Citrix support on the Business Objects developer community
Web site at http://diamond.businessobjects.com/EIM/DataIntegrator.

• BusinessObjects Text Analysis for Data Integrator version 11.5. Check the
Business Objects Customer Assurance Web site at
http://support.businessobjects.com/documentation/ for the availability of
BusinessObjects Text Analysis for Data Integrator version 11.5.

Data Integrator also supports the following products as of version 11.7.

• Support is available for the following platforms and configurations:

• DataDirect ODBC Driver version 5.2

• Data Federator XI R2 version 11.5

• Data Federator XI R2 Accelerated versions 11.6.2 and 11.7.0

• Salesforce.com AppExchange API for Enterprise version 7.0

• .NET support for Web services

• SAP ECC 6.0 via ABAP, BAPI and IDOC

Please note that this support is only available on the latest versions

of Rapid Marts. Check your Rapid Marts documentation to get the

latest support information for that product.

• Excel versions 97, XP, 2000, and 2003 as a source (please review the
Limitations section for Excel support on UNIX)

• Impact analysis interoperates with:

• BusinessObjects universes 6.5.1, XI, and XI R2 SP2

• Web Intelligence documents versions 6.5.1, XI, and XI R2 SP2

• Desktop Intelligence documents versions 6.5.1, XI, and XI R2 SP2

• Business Views XI and XI R2 SP2

• The scheduling functionality is supported with BusinessObjects version

XI R2 or later versions of the BusinessObjects Enterprise scheduler

• The following Universe Builder versions are compatible with Data Integrator
11.7:

• The stand-alone Universe Builder version 11.5 bundled with Data Integrator
11.7. This stand-alone version of Universe Builder will interoperate with
Business Objects version 6.5.1 and XI. Find Universe Builder documentation in
the "Building Universes from Data Integrator metadata Sources" chapter of the
Universe Designer Guide, which you can download from the Business Objects
Customer Assurance Web site at
http://support.businessobjects.com/documentation/. Select BusinessObjects
Enterprise as the product and Universe Designer Guide as the document.

• The Universe Builder version bundled with BusinessObjects Enterprise XI R2,
XI R2 SP1, XI R2 SP2, and XI R2 SP3 (see the required BusinessObjects
Enterprise XI R2 SP1 CHF-13 patch information in the Resolved issues section of
this document for problem report ADAPT00534412).

• ODBC to generic database support has been validated with the following
database servers and ODBC drivers. For complete compatibility details, see the
Business Objects Supported Platforms documentation on the Business Objects
Customer Assurance Web site at: http://support.businessobjects.com/documentation

• Microsoft SQL Server 2000 via DataDirect Connect for ODBC 5.2.

• MySQL version 5.0 via ODBC driver version 3.51.12 on Windows and MySQL
version 4.1 via ODBC driver version 3.51.10 on Windows and UNIX.

Note: Driver version 3.51.12 is required in the multibyte environment.

• Red Brick version 6.3 via ODBC driver version IBM 6.3

• SQLAnywhere version 9.0.1 via ODBC driver version Adaptive Server Anywhere
9.0

• Sybase IQ version 12.6 requires ESD 4

• Log-based changed-data capture (CDC) works with the following database
versions:

• Oracle version 9.2 and above compatible versions for synchronous CDC and
Oracle version 10G and above compatible version for asynchronous CDC

Note: Changed-data capture for Oracle 10G R2 does not support multibyte table
and column names due to a limitation in Oracle.

• Microsoft SQL Server 2000 and 2005

• IBM DB2 UDB for Windows version 8.2 using DB2 Information

Integrator for Replication Edition version 8.2 (DB2 II Replication) and

IBM WebSphere Message Queue version 5.3

• IBM DB2 UDB for z/OS using DB2 Information Integrator Event

Publisher for DB2 UDB for z/OS and IBM WebSphere Message Queue

version 5.3.1

• IBM IMS/DB using DB2 Information Integrator Classic Event Publisher

for IMS and IBM WebSphere Message Queue version 5.3.1


• IBM VSAM under CICS using DB2 Information Integrator Classic Event

Publisher for VSAM and IBM WebSphere Message Queue version

5.3.1

• Attunity for mainframe sources using Attunity Connect version 4.6.1

• NCR Teradata with the following server versions, client versions, and

ODBC drivers:

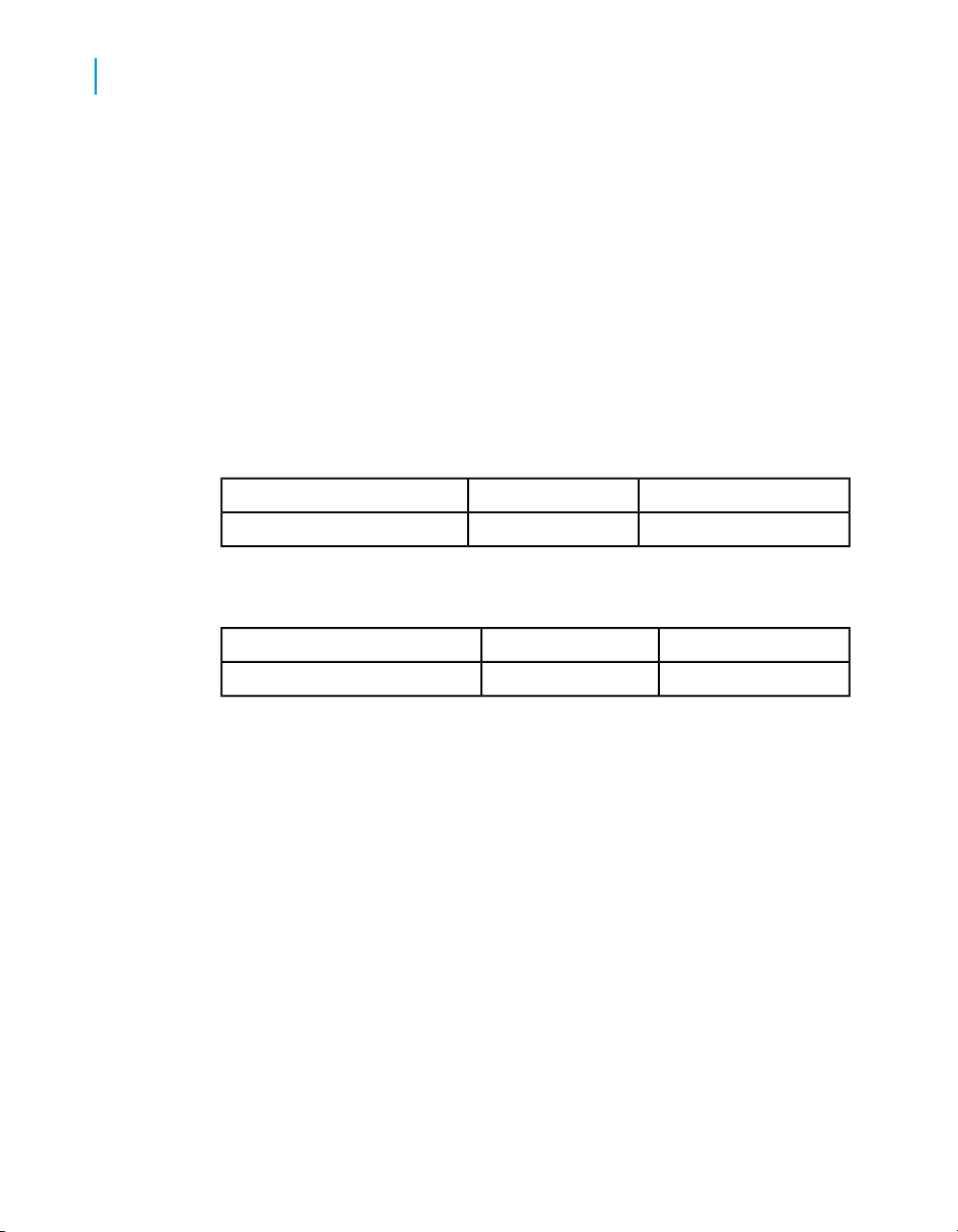

ODBC driverClient versionTeradata server version

03.06.00TTU 8.2V2R6.2

03.05.00TTU 8.1V2R6.1

03.04.00TTU 8.0V2R6

03.03.00TTU 7.1V2R5.1

This support requires the following TTU patches:

• On Windows platforms:

• cliv2.04.08.00.01.exe

• tbld5000.05.00.00.01.exe

• psel5000.05.00.00.01.exe

• pdtc5000.05.00.00.01.exe

• npaxsmod.01.03.01.00.exe

• npaxsmod.01.03.00.02.exe

• npaxsmod.01.03.00.01.exe

• On Linux platforms:

• cliv2.04.08.00.01.exe

• mqaxsmod.01.03.00.00.exe

• npaxsmod.01.03.01.00.exe

• npaxsmod.01.03.00.01.exe


With Teradata V2R6, the named pipes mechanism for Teradata bulk

loader is supported.

Note: Teradata does not recommend using Teradata Parallel Transporter

in TTU 7.0 and 7.1.


• Data Integrator uses Apache Axis version 1.1 for its Web Services support.

Axis version 1.1 provides support for WSDL version 1.1 and SOAP version

1.1 with the exception of the Axis servlet engine.

• Data Integrator connectivity to Informix servers is now only supported via

the native Informix ODBC driver. Version 2.90 or higher compatible version

of the Informix client SDK is required.

• Designers on Japanese operating systems working with Job Servers on Japanese
Windows computers.

When using the Designer on a Japanese operating system and working with either
an English Job Server on UNIX or a Job Server on Windows on a non-Japanese
operating system:

• Messages from the back end display in English

• Business Objects recommends using a Japanese Administrator on Windows so
that metadata Reports and Impact Analysis correctly display object descriptions
in Japanese. If you use a Japanese Administrator on UNIX, "junk" characters
display if any of the object descriptions have Japanese characters.

• Java Virtual Machine version compatibility with Data Federator and Data
Integrator:

To access Data Federator data sources with Data Integrator, you must

modify your OpenAccess environment to use the Java Virtual Machine

that ships with Data Integrator. Data Federator installs a component called

OpenAccess ODBC to JDBC Bridge that requires these modifications.

Please make the following modifications (where Data Federator is installed

in D:\Program Files\DataFederatorXIR2, Data Integrator is installed in

D:\Program Files\DataIntegrator, and OpenAccess is installed in

D:\Program Files\OaJdbcBridge):

1. In the OpenAccess configuration file
(D:\Program Files\OaJdbcBridge\bin\iwinnt\openrda.ini), change the path for the
JVM from:

JVM_DLL_NAME=D:\Program Files\DataFederatorXIR2\jre\bin\client\jvm.dll

to:

JVM_DLL_NAME=D:\Program Files\DataIntegrator\ext\Jre\Bin\client\jvm.dll

2. Add the following OpenAccess jar files to the CLASSPATH environment
variable:

D:\Program Files\OaJdbcBridge\jdbc\thindriver.jar

D:\Program Files\OaJdbcBridge\oajava\oasql.jar
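The two modifications above can be sketched in Python for readers who prefer to script them. This is only an illustrative sketch: the helper names `repoint_jvm` and `extend_classpath` are hypothetical, the paths are the example installation directories used in the steps (with `Program Files` assumed to contain a space, as in step 1), and the code assumes that openrda.ini holds the setting as a plain `JVM_DLL_NAME=` line. It operates on strings only, so nothing is written to disk or to the environment.

```python
# Illustrative sketch only; helper names and defaults are assumptions,
# not part of Data Integrator or OpenAccess.

# Example paths taken from the installation directories named in the steps.
NEW_JVM = r"D:\Program Files\DataIntegrator\ext\Jre\Bin\client\jvm.dll"
OA_JARS = [
    r"D:\Program Files\OaJdbcBridge\jdbc\thindriver.jar",
    r"D:\Program Files\OaJdbcBridge\oajava\oasql.jar",
]


def repoint_jvm(ini_text: str, new_jvm: str = NEW_JVM) -> str:
    """Return ini_text with every JVM_DLL_NAME line pointing at new_jvm."""
    out = []
    for line in ini_text.splitlines():
        if line.strip().upper().startswith("JVM_DLL_NAME="):
            line = "JVM_DLL_NAME=" + new_jvm
        out.append(line)
    return "\n".join(out)


def extend_classpath(current: str, jars=OA_JARS, sep: str = ";") -> str:
    """Append any jars that are missing from a Windows-style CLASSPATH string."""
    parts = [p for p in current.split(sep) if p]
    for jar in jars:
        # Windows paths are case-insensitive, so compare in lower case.
        if jar.lower() not in (p.lower() for p in parts):
            parts.append(jar)
    return sep.join(parts)
```

Back up the original openrda.ini before applying any rewritten text, and adjust the paths if your products are installed elsewhere.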

Unsupported products

Data Integrator no longer supports the following products as of this release.

• Microsoft Windows 2003 with no service packs

• Teradata V2R5.0

• BusinessObjects Data Quality 11.6

Data Integrator does not support the following products as of version 11.7.

• AIX 32-bit, Solaris 32-bit

• DataDirect ODBC Driver version 5.1

• Firstlogic Data Cleansing products including:

• International ACE versions 7.25c, 7.50c, 7.60, and 7.70

• DataRight IQ versions 7.10c, 7.50c, 7.60, and 7.70

• BusinessObjects Data Quality 11.5.1

• IBM Connector for the mainframe

• Citrix MetaFrame XP is not supported. To see the latest supported version,
go to the Supported Platforms documentation on the Business Objects Customer
Support Web site at: http://support.businessobjects.com/documentation

• Informix (as a repository)

• Context-sensitive Help for Data Integrator

• Data Mart Accelerator for Crystal Reports is no longer supported; however,

it is still available on the Business Objects developer community Web

site at http://diamond.businessobjects.com/EIM/DataIntegrator.

Limitations update

The following limitations apply to Data Integrator version 11.7.3.

• Files read by an adapter must be encoded in either UTF-8 or the default

encoding of the default locale of the JVM running the adapter.

• All Data Integrator features are available when you use an Attunity
Connector datastore except:

• Bulk loading

• Imported functions (imports metadata for tables only)

• Template tables (creating tables)

• The datetime data type supports up to 2 sub-seconds only

• Data Integrator cannot load timestamp data into a timestamp column in a
table because Attunity truncates varchar data to 8 characters, which is not
enough to correctly represent a timestamp value.

• The Adapter SDK no longer supports Native SQL or Partial SQL.

• Unsupported data type:

• Unsupported data type is only implemented for SQL Server, Oracle, Teradata,
ODBC, DB2, Sybase ASE, Sybase IQ, Oracle Applications, PeopleSoft, and Siebel.

• Data Integrator can read, load, and invoke stored procedures involving
unknown data types for SQL Server, Oracle, Teradata, ODBC, DB2, Informix,
Sybase ASE, and Sybase IQ assuming these database servers can convert from
VARCHAR to the native (unknown) data type and from the native (unknown) data
type to VARCHAR.

• Data Integrator might have a problem loading VARCHAR to a physical CLOB
column (for example, bulk loading or auto-correct load could fail).

Note: Use the VARCHAR column in the physical schema for loading.

• PeopleSoft 8 support is implemented for Oracle only.

Data Integrator jobs that ran against previous versions of PeopleSoft are

not guaranteed to work with PeopleSoft 8. You must update the jobs to

reflect metadata or schema differences between PeopleSoft 8 and

previous versions.

• Stored procedure support is implemented for DB2, Oracle, Microsoft SQL

Server, Sybase ASE, Sybase IQ, and ODBC only.

• Teradata support is only implemented for Windows and Linux.

• On Teradata, the named pipe implementation for Teradata Parallel
Transporter is supported with Teradata Tools and Utilities version 8 or later
compatible version. Teradata Tools and Utilities versions 7.0 and 7.1 are not
supported with named pipes.

• Bulk loading data to DB2 databases running on AS/400 or MVS systems

is not supported.


• Data Integrator Management Console can be used on Microsoft Internet

Explorer version 6.0 SP1, 6.0 SP2, or 7.0 only. Earlier browser versions

may not support all of the Administrator functionality.

• Data Integrator's View Data feature is not supported for SAP R/3 IDocs.

For SAP R/3 and PeopleSoft, the Table Profile tab and Column Profile

tab options are not supported for hierarchies.

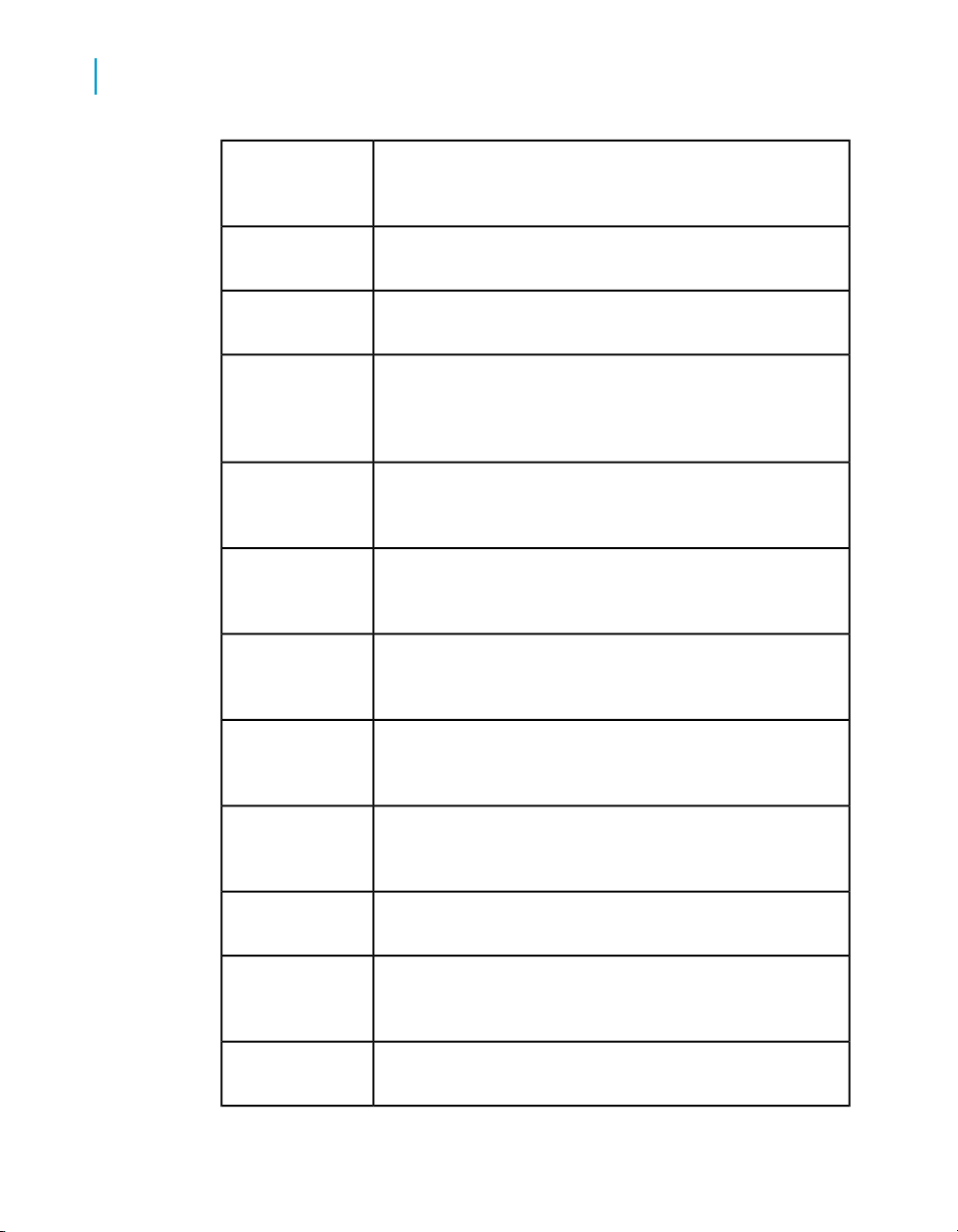

• Data Integrator now supports multibyte metadata for table names, column

names, file names, and file paths. The following table lists which sources

support multibyte and single-byte metadata and which support single-byte

only:

Multibyte and single-byte metadata supported*   Single-byte metadata supported
BusinessObjects Enterprise                      Attunity connector for mainframe databases
Data Federator                                  Data Quality
DB2                                             HP Neoview
Informix                                        JD Edwards
MySQL                                           Netezza
ODBC                                            Oracle Applications
Oracle                                          PeopleTools
Siebel                                          SAP R/3
Microsoft SQL Server                            SAP BW Server
Sybase ASE
Sybase IQ
Teradata
XML

* Support for multibyte metadata is dependent on comparable support in

the applications, databases, and technologies with which Data Integrator

interoperates.

• Support for the Data Profiler is provided for sources as follows:

Supported                                     Not supported
Attunity Connector for mainframe databases    COBOL copybooks
DB2                                           Excel
Data Federator                                IDOC
Flat file                                     JDE
HP Neoview                                    Memory Datastore
Informix                                      SAP BW
Microsoft SQL Server                          XML
MySQL
Netezza
ODBC
Oracle
Oracle Applications
PeopleSoft
SAP R/3
Siebel
Sybase ASE
Sybase IQ
Teradata

• Support for the LOOKUP_EXT function is provided for sources as follows:

Supported               Not supported
DB2                     COBOL copybook
Data Federator          Excel
Flat file               IDOC
JDE                     SAP BW
HP Neoview              SAP R/3
Memory Datastore        XML
Microsoft SQL Server
MySQL
Netezza
ODBC
Oracle
Oracle Applications
PeopleSoft
Siebel
Sybase ASE
Sybase IQ
Teradata

• DB2 Java Library limitations

All Web applications in the Management Console will not work with a DB2

repository under any of the following conditions:

• db2java library is incompatible with DB2 client. For example, DB2

Client is version 8.1 and db2java library is version 8.2, or

• db2java library is not generated for JDBC 2 driver, or

• the java class path variable is not properly set to the corresponding

java library, or

• the DB2 JDBC shared library (e.g. libdb2jdbc.so on AIX) is not

compatible with the Java JDBC 2 driver

Under these conditions, you might see the following behavior:

• The Administrator stops working or crashes after you configure the

DB2 repository. Find any error and warning messages related to the

DB2 repository configuration in the log file.

• When testing your DB2 connection from the Administrator, the following
errors appear in the Administrator log:

• BODI-3016409: fail to connect to repository.

• BODI-3013014: didn't load DB2 database driver.

In this release, the db2java JDBC 2 driver versions for DB2 8.1 and DB2 8.2 are
provided. On Windows, find the different versions of these libraries in
LINK_DIR/ext/lib:

• db2java.zip (by default) DB2 8.2 version

• db2java_8.1.zip DB2 8.1 version

By default, the db2java.zip of version 8.2 will be used. If you run with a DB2
8.1 Client, you must:

• replace with version 8.1 of db2java.zip

• make sure the compatible DB2 JDBC shared library is in the shared library
path

• restart the Web server service on Windows or restart the job service on UNIX

If you run with other DB2 Client versions, you must obtain the corresponding
java library with the JDBC 2 driver. Please refer to IBM DB2 documentation for
how to obtain the correct java library.

• Informix native-driver support on UNIX requires a fix in the Informix Client

SDK for IBM bug number 167964. Please contact IBM for a patch that

includes this bug fix.

Note: IBM is targeting inclusion of this bug fix in Client SDK version 2.90.

• Data Integrator supports files greater than 2 GB only in the following

cases:

• Reading and loading large data files of type delimited and positional.

• Generating large files for bulk loader staging files for subsequent bulk

loading by a native bulk loader utility (such as SQL Loader).

• Previewing data files from the file format page in Designer when the

large files are on a UNIX Job Server.

• Reading COBOL copybook data files.

All other files generated by Data Integrator, such as log files and

configuration files, are limited to 2 GB.

• For the Microsoft Excel as a data source feature, the following limitations

apply:

• Concurrent access to the same Excel file might not work. For example, View
Data might not display if the file is currently open in Excel.

• Because an Excel column can contain mixed data types, some data

type conversions could produce unexpected results; for example,

dates might convert to integers.

• Boolean formulas are not supported.

• Workbooks with AutoFilter applied are not supported. Remove the

filter before importing the workbook.

• Workbooks with hidden rows and/or columns are not supported.

• Stored procedures for MySQL are not supported due to limitations in

the MySQL ODBC library.

• The Data Integrator Salesforce.com adapter can connect to the Salesforce.com
version 7 API; however, the new functionality added in API version 7 and above
is not supported. The only exception is the custom lookup field (new in API
version 7), which is supported in the Salesforce.com adapter. To ensure that
your existing Salesforce.com adapter works properly, you must update the URL in
the datastore so that it points to the Salesforce.com 7.0 API. The new URL
should be:

https://www.salesforce.com/services/Soap/u/7.0

Migration considerations

Note: To use this version of Data Integrator, upgrade all existing Data

Integrator repositories to version 11.7.0.0.

This section lists and briefly describes all migration-specific behavior
changes associated with this version of Data Integrator. To view
migration-specific behavior changes among previous releases of Data Integrator,
see the Data Integrator Migration Behavior Changes guide available from the
Business Objects Customer Support documentation Web site
(http://support.businessobjects.com/documentation/).

Logs in the Designer

In Data Integrator 11.7.3, you will only see the logs (trace, error, monitor) for

jobs that started from the Designer, not for jobs started via other methods

(command line, real-time, scheduled jobs, or Web services). To access these

other log files, use the Administrator in the Data Integrator Management

Console.

Data flow cache type

When upgrading your repository from versions earlier than 11.7 to an 11.7

repository using version 11.7.3.0, all of the data flows will have a default

Cache type value of pageable. This is different from the behavior in 11.7.2.0,

where the upgraded data flows have a default Cache type value of

in-memory.

Pageable cache for memory-intensive data flows

As a result of multibyte metadata support, Data Integrator might consume more
memory when processing and running jobs. If some of your jobs were running near
the 2-gigabyte virtual memory limit in a prior version, the same jobs could now
run out of virtual memory. If your jobs run out of memory, take the following
actions:

• Set the data flow Cache type value to pageable.

• Specify a pageable cache directory that:

• Contains enough disk space for your data. To estimate the amount of space
required for pageable cache, consider factors such as:

• Number of concurrently running jobs or data flows

• Amount of pageable cache required for each concurrent data flow

• Exists on a separate disk or file system from the Data Integrator system and
operating system (such as the C: drive on Windows or the root file system on
UNIX).
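The disk-space estimate described above amounts to summing the pageable cache needed by each data flow that can run at the same time and comparing the total with the free space in the cache directory. The Python sketch below illustrates that arithmetic only. The function names and the 20% headroom factor are assumptions made for this example, not Data Integrator settings, and you must supply the per-data-flow figures from your own measurements.

```python
import shutil


def estimate_pageable_cache_bytes(per_flow_cache_bytes):
    """Sum the pageable cache needed by each concurrently running data flow."""
    return sum(per_flow_cache_bytes)


def has_enough_space(cache_dir, per_flow_cache_bytes, headroom=1.2):
    """Return True if the file system holding cache_dir can fit the estimate.

    headroom is a hypothetical safety margin (20% by default), not a
    product-defined value.
    """
    needed = estimate_pageable_cache_bytes(per_flow_cache_bytes) * headroom
    return shutil.disk_usage(cache_dir).free >= needed
```

For example, three concurrent data flows that each need about 4 GB of pageable cache would be checked with `has_enough_space(cache_dir, [4 * 1024**3] * 3)`, where `cache_dir` is the directory you plan to use.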

Embedded data flows

In this version of Data Integrator, you cannot create embedded data flows

which have both an input port and an output port. You can create a new

embedded data flow only at the beginning or at the end of a data flow with

at most one port, which can be either an input or an output port.

However, after upgrading to Data Integrator version 11.7.2 or later, embedded

data flows created in previous versions will continue to run.


Oracle repository upgrade

If you previously upgraded your repository to Data Integrator 11.7.0.0 and you
open the Object State Report on the Central repository from the Administrator,
you might see the error ORA-04063: view ALVW_OBJ_CINOUT has errors. This occurs
if you had an Oracle central repository prior to version 11.7.0.0 and you
upgraded the central repository to 11.7.0.0.

Note: If you upgraded to 11.7.0.0 from a prior version of Data Integrator and

you are now upgrading to version 11.7.3 (this release), this issue might occur

and you must follow the instructions below. Alternatively, if you upgraded

from a version prior to 11.7.0.0 to 11.7.3 without upgrading to version 11.7.0.0,

this issue will not occur because it has been fixed in 11.7.2.0.

To fix this error, you must manually drop and recreate the view
ALVW_OBJ_CINOUT using an Oracle SQL editor, such as SQL*Plus.

Use the following SQL statements to perform the upgrade:

DROP VIEW ALVW_OBJ_CINOUT;

CREATE VIEW ALVW_OBJ_CINOUT (OBJECT_TYPE, NAME, TYPE, NORMNAME,

VERSION, DATASTORE, OWNER,STATE, CHECKOUT_DT, CHECKOUT_REPO,

CHECKIN_DT,

CHECKIN_REPO, LABEL, LABEL_DT,COMMENTS,SEC_USER,SEC_USER_COUT)

AS

(

select OBJECT_TYPE*1000+TYPE,NAME, N'AL_LANG' , NORMNAME,VERSION,DATASTORE,

OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO,

CHECKIN_DT,

CHECKIN_REPO, LABEL, LABEL_DT,COMMENTS,SEC_USER ,SEC_USER_COUT

from AL_LANG L1 where NORMNAME NOT IN ( N'CD_DS_D0CAFAE2' ,

N'XML_TEMPLATE_FORMAT' , N'CD_JOB_D0CAFAE2' , N'CD_DF_D0CAFAE2'

, N'DI_JOB_AL_MACH_INFO' , N'DI_DF_AL_MACH_INFO' ,

N'DI_FF_AL_MACH_INFO' )

union

select 20001, NAME,FUNC_TYPE ,NORMNAME, VERSION, DATASTORE,

OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,

CHECKIN_REPO, LABEL, LABEL_DT,COMMENTS,SEC_USER ,SEC_USER_COUT

from AL_FUNCINFO F1 where FUNC_TYPE = N'User_Script_Function'

OR OWNER <> N'acta_owner'

union

select 30001, NAME, N'PROJECT' , NORMNAME, VERSION, N'' , N''

, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,

CHECKIN_REPO, LABEL, LABEL_DT,COMMENTS,SEC_USER ,SEC_USER_COUT

from AL_PROJECTS P1

union

select 40001, NAME,TABLE_TYPE, NORMNAME, VERSION, DATASTORE,

OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,

CHECKIN_REPO, LABEL, LABEL_DT,COMMENTS,SEC_USER ,SEC_USER_COUT

from AL_SCHEMA DS1 where DATASTORE <> N'CD_DS_d0cafae2'

union

select 50001, NAME, N'DOMAIN' , NORMNAME, VERSION, DATASTORE,

N'' , STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,

CHECKIN_REPO, N'' ,to_date( N'01/01/1970' , N'MM/DD/YYYY' ),

N'' ,SEC_USER ,SEC_USER_COUT

from AL_DOMAIN_INFO D1

);
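Whether you need to run the statements above depends on the upgrade path of your Oracle central repository, as the note in this section explains. The Python sketch below restates that rule as a check. It is an illustration only: the function name and the list-of-version-strings input are assumptions made for this example, and the sketch does not inspect a real repository.

```python
def _parse(version: str) -> tuple:
    """Turn a dotted version string such as '11.7.0.0' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


# The release whose upgrade step leaves the view in an invalid state.
AFFECTED = _parse("11.7.0.0")


def view_rebuild_may_be_needed(upgrade_path, oracle_central_repo=True):
    """Return True if the repository was upgraded *to* 11.7.0.0 from an
    earlier version at some point in upgrade_path.

    upgrade_path lists the repository versions in the order they were
    installed, for example ["11.5.1.0", "11.7.0.0", "11.7.3.0"].
    """
    if not oracle_central_repo:
        return False
    versions = [_parse(v) for v in upgrade_path]
    for previous, current in zip(versions, versions[1:]):
        if current == AFFECTED and previous < AFFECTED:
            return True
    return False
```

A path that goes directly from a pre-11.7.0.0 version to 11.7.3 returns False, which matches the note that the issue does not occur in that case.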

Solaris and AIX platforms

Data Integrator 11.7.3 on Solaris and AIX platforms is a 64-bit application

and requires 64-bit versions of the middleware client software (such as Oracle

and SAP) for effective connectivity. If you are upgrading to Data Integrator

11.7.3 from a previous version, you must also upgrade all associated

middleware client software to the 64-bit version of that client. You must also

update all library paths to ensure that Data Integrator uses the correct 64-bit

library paths.


Data quality

Data Integrator now integrates the BusinessObjects Data Quality XI (formerly

known as Data Cleansing) application for your data quality needs, which

replaces Firstlogic's RAPID technology.

Note the following changes to data cleansing in Data Integrator:

• Depending on the Firstlogic products you owned, you previously had up to three separate transforms that represented data quality functionality: Address_Enhancement, Match_Merge, and Name_Parsing.

  Now, the data quality process takes place through a Data Quality Project. To upgrade existing data cleansing data flows in Data Integrator, replace each of the cleansing transforms with an imported Data Quality Project using the Designer.

  You will need to identify all of the data flows that contain any data cleansing transforms and replace them with a new Data Quality Project that connects to a Data Quality blueprint or custom project.

• Data Quality includes many example blueprints that are sample projects that can serve as a starting point when creating your own customized projects. (You may need to modify the blueprints to work with your installation. Please see your Data Quality Documentation, including the Release Notes, for updated blueprint information.) If none of these blueprints work to your satisfaction, you can either save these blueprints as a project and edit them, or you can create a project from scratch.

• You must use the Project Architect, Data Quality's graphical user interface, to edit projects or create new ones. Business Objects strongly recommends that you do not attempt to manually edit the XML of a project or blueprint.

• Each imported Data Quality project in Data Integrator represents a reference to a project or blueprint on the data quality server. The Data Integrator Data Quality projects allow field mapping.

To migrate your data flow to use the new Data Quality transforms

1. Install Data Quality XI, configure the server, and make sure the server is started before you use it in Data Integrator. Please refer to the Data Quality XI documentation for installation instructions.

2. In the Data Integrator Designer, create a new datastore of type Business

Objects Data Quality and connect to your Data Quality server.

3. Import the Data Quality projects that represent the data quality

transformations you want to use. Each project will appear as a Data

Quality project in your datastore. For the most common data quality

transformations, you can use existing blueprints (sample projects) in the

Data Quality repository.

4. Replace each occurrence of the old data cleansing transforms in your

data flows with one of the imported Data Quality transforms. You will also

need to reconnect the input and output schemas with the sources and

targets used in the data flow.

When opening a data flow containing one of the old data cleansing transforms

(address_enhancement, name_parsing, match_merge), you will still be able

to see the old transforms in this release (although they are not available

anymore in the object library). You can even open the properties and see

the details for each transform.

When validating a data flow that uses one of the old data cleansing

transforms, you will get an error such as:

[Custom Transform:Address_Enhancement] BODI-1116074: First

Logic support is obsolete. Please use the new Data Quality

feature.

It is not possible to execute a job that contains data flows using the old data

cleansing transforms (you will get the same error).

Contact Business Objects Customer Support at http://support.businessobjects.com/ if you need help migrating your data cleansing data flows to the new Data Quality transforms.

Note: Data Integrator 11.7.3 allows you to reconcile metadata for Data

Quality datastores.


Distributed data flows

After upgrading to this version of Data Integrator, existing jobs have the

following default values and behaviors:

• Job Distribution level: Job

  All data flows within a job will be run on the same job server.

• The default for Collect statistics for optimization and Collect statistics for monitoring is cleared.

• The default for Use collected statistics is selected.

  Since no statistics are initially collected, Data Integrator will not initially use statistics.

• Every data flow runs as a process (not as a sub data flow process).

New jobs and data flows you create using this version of Data Integrator

have the following default values and behaviors:

• Job Distribution level: Job

• The Cache type for all data flows: pageable.

• The default for Collect statistics for optimization and Collect statistics for monitoring is cleared.

• The default for Use collected statistics is selected.

  If you want Data Integrator to use statistics, you must collect statistics for optimization first.

• Every data flow is run as a single process. To run a data flow as multiple sub data flow processes, you must use the Data_Transfer transform or select the Run as a separate process option in transforms or functions.

• All temporary cache files are created under the LINK_DIR/log/PCache directory. This option can be changed from the Server Manager.

XML Schema enhancement

Data Integrator 11.7 adds the new Include schema location option for XML

target objects. This option is selected by default.


In the Designer option Tools > Options > Job Server > General, Data

Integrator 11.5.2 provided the key XML_Namespace_No_SchemaLocation

for the section AL_Engine. The default value, FALSE, indicates that the

schema location is included. If you upgrade from 11.5.2 and had set

XML_Namespace_No_SchemaLocation to TRUE (indicates that the schema

location is NOT included), you must open the XML target in all data flows

and clear the Include schema location option to keep the old behavior for

your XML target objects.
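The upgrade rule above amounts to inverting the old key. The following Python sketch only illustrates that mapping; the function name and the boolean representation of the 11.5.2 key are assumptions made for the example and are not part of Data Integrator.

```python
def include_schema_location_after_upgrade(xml_namespace_no_schemalocation=False):
    """Return the setting the Include schema location option needs in 11.7
    to preserve the behavior configured in 11.5.2.

    xml_namespace_no_schemalocation is the old AL_Engine key value
    (hypothetical boolean form): FALSE (the default) meant the schema
    location was included, TRUE meant it was NOT included.
    """
    # The new option is the logical opposite of the old key.
    return not xml_namespace_no_schemalocation


# Old default (FALSE): the option stays selected, no action needed.
print(include_schema_location_after_upgrade(False))
# Old key set to TRUE: clear the option on every XML target.
print(include_schema_location_after_upgrade(True))
```

If the function returns False for your installation, each XML target in each data flow still has to be opened and changed by hand in the Designer, as described above.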

Password management

• All password fields are encrypted using the Twofish algorithm starting in this release of Data Integrator.

• To simplify the process of updating new passwords for the repository database, this version of Data Integrator introduces a new password file feature. If you have no requirement to change the password to the database hosting your repository, you may not need to use this optional feature.

  If you must change the password (for example, security requirements stipulate that you must change your password every 90 days), then Business Objects recommends that you migrate your scheduled or external job command files to use this feature.

  Migration requires that you regenerate every job command file to use the password file. After migrating, when you update the repository password, you need only regenerate the password file. If you do not migrate using the password file feature, then you must regenerate every job command file every time you change the associated password.

Web applications

• The Data Integrator Administrator (formerly called the Web Administrator) and metadata Reports interfaces have been combined into the new Management Console in Data Integrator 11.7. Now, you can start any Data Integrator Web application from the Management Console launch pad (home page). If you have created a bookmark or favorite that points to the previous Administrator URL, you must update the bookmark to point to http://computername:port/diAdmin.

• If in a previous version of Data Integrator you generated WSDL for Web service calls, you must regenerate the WSDL because the URL to the Administrator has been changed in Data Integrator 11.7.

Web services

Data Integrator now uses the Xerces2 library. If you upgrade to 11.7 or later and had configured the Web Services adapter to use the xsdPath parameter in the Web Service configuration file, delete the old Web Services adapter and create a new one. It is no longer necessary to configure the xsdPath parameter.


Deployment on UNIX


Please observe the following requirements for UNIX systems.

• Install JDK version 1.4.2 as described in the vendor's documentation. (If the Daylight Saving Time update affects you, install JDK patch version 1.4.2_13 or a later 1.4.x-compatible version.)

• The AIX maintenance level must be at least 5200-05. For all versions of AIX, the following file sets must be installed:

  File set             Level      State       Description
  xlC.aix50.rte        6.0.0.13   COMMITTED   C Set ++ Runtime for AIX 5.0
  xlC.rte              6.0.0.0    COMMITTED   C Set ++ Runtime
  xlC.msg.en_US.rte    6.0.0.0    COMMITTED   C Set ++ Runtime Messages, U.S.English

  To find these xlC file sets and their levels on your AIX system, use the command lslpp -l xlC*

  Refer to the $LINK_DIR/log/DITC.log file for browser-related errors.

• For Red Hat Linux 4, install the following patches:

  • glibc-2.3.4-2

  • libgcc-3.4.3-9.EL4

  • compat-libstdc++-296-2.96-132.7.2

  • compat-libstdc++-33-3.2.3-47.3

• For Solaris 9, install the appropriate patch:

  • Install 111721-04 (for both 32-bit and 64-bit SPARC; see the vendor's latest patch updates)

• For Solaris 10 SPARC, install the following patch:

  • 120470-01
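As a rough aid for the AIX file set requirement listed above, the following Python sketch compares installed levels against the listed ones. It assumes the listed levels are minimums (the notes do not say whether a newer level is acceptable) and that you have already copied the levels reported by lslpp into a dictionary; the function names are hypothetical.

```python
# Levels taken from the AIX file set table in these notes.
REQUIRED_LEVELS = {
    "xlC.aix50.rte": "6.0.0.13",
    "xlC.rte": "6.0.0.0",
    "xlC.msg.en_US.rte": "6.0.0.0",
}


def parse_level(level):
    """Turn a dotted level such as '6.0.0.13' into a comparable tuple."""
    return tuple(int(part) for part in level.split("."))


def missing_or_old_filesets(installed):
    """Return the required file sets that are absent or below the listed level.

    installed maps file set name -> level string, as read by hand from
    the output of lslpp (assumption: treated here as a minimum check).
    """
    problems = []
    for name, required in REQUIRED_LEVELS.items():
        found = installed.get(name)
        if found is None or parse_level(found) < parse_level(required):
            problems.append(name)
    return problems


# Example with one outdated and one missing file set.
print(missing_or_old_filesets({"xlC.aix50.rte": "6.0.0.5", "xlC.rte": "7.0.0.0"}))
```

Comparing levels as integer tuples avoids the string-comparison trap in which "6.0.0.5" would sort after "6.0.0.13".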


Adapter interfaces


This section contains notes related to installation, configuration, and use of

the adapter interfaces provided with this release.

For installation details, see the Data Integrator Getting Started Guide, JMS

and Salesforce.com interface integration subsection.

JMS adapter interface

Find the full technical documentation for the Data Integrator Adapter for JMS

in the same directory as your other Data Integrator documentation.

• The JMS adapter is generic and can work with the JMS libraries of any JMS provider.

• This version of the JMS adapter has been tested using WebLogic JMS libraries (from BEA Systems) as the JMS provider.

• If you are running the JMS adapter with any other JMS provider, you should include the location of the third-party jar files associated with the specific JMS provider.

• On the Adapter Instance Configuration page, the classpath field contains the list of Data Integrator-provided jar files. Append the location of the JMS jar files to the classpath field.

Salesforce.com adapter interface

Find the full technical documentation for the Data Integrator Salesforce.com

Adapter Interface in the same directory as your other Data Integrator

documentation.

The Salesforce.com Adapter Interface is compatible with Data Integrator version 11.6 and later.


Documentation updates


Please note the following updates to the Data Integrator 11.7.2 technical

documentation. These changes will be incorporated into a future release of

the manual set.

Note: The numbers are ADAPT system Problem Report tracking numbers.

New features in version 11.7.3

HP Neoview database support

This version supports the HP Neoview database as a source or target via a

new datastore option in the Designer. Ensure the HP Neoview ODBC driver

is installed and configured on the client computer (where the Data Integrator

Designer and the Job Server are located).

For information on creating datastores, see the Data Integrator Designer

Guide, Datastores section and the Data Integrator Reference Guide, Data

Integrator Objects section, Datastore subsection.

For details on the ODBC options available for the HP Neoview datastore,

see the Data Integrator Reference Guide, Data Integrator Objects section,

Datastore subsection, ODBC subsection.

Inxight integration for reading unstructured text

Data Integrator version 11.7.3 provides support for extracting and

transforming contents from unstructured text by calling the Inxight SDX

(SmartDiscovery Extraction Server) via Web services. Two new functions

have been added to Data Integrator that enable you to pass data to Inxight

using the required base64 encoding.

The following procedure describes how to use Data Integrator with Inxight

SDX Web services.

1. In the Designer, create an adapter datastore that connects to the Inxight

SDX Web service.

2. From the Inxight adapter datastore, import an operation, which becomes

the adapter Web service function.

3. Open the adapter function and edit the schema as necessary, for example increase the column length of the base64 text column.

4. In the data flow query, use the base64_encode function to encode the input text to base64.

5. Use another query transform to call the Inxight Web service function and pass the encoded text as input.

6. Use the base64_decode function to decode the returned result as plain text again.

Related Topics

• base64_encode

• base64_decode

base64_encode

Returns the base64-encoded data in the engine locale character set.

Syntax

base64_encode(input data, 'UTF-8')

Return Value

varchar

Returns base64-encoded data. If the input data is NULL or the size is 0, Data

Integrator returns NULL. Otherwise, it returns the base64-encoded data that

conforms to RFC 2045.

Where

input data    The input data that needs to be encoded to base64. Does not support long or BLOB data types.

UTF-8         The code page of the input data. UTF-8 is required for Data Integrator version 11.7.3.
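To make the return-value rule concrete, here is a minimal Python sketch of the behavior described above. It is not the Data Integrator implementation: the function name is hypothetical, Python's None stands in for NULL, and the sketch does not insert the line breaks that RFC 2045 allows for long encoded output.

```python
import base64


def base64_encode_sketch(input_data, code_page="UTF-8"):
    """Mimic the documented rule: NULL or zero-length input yields NULL,
    otherwise the text is encoded to base64 from its UTF-8 bytes."""
    if input_data is None or len(input_data) == 0:
        return None
    raw_bytes = input_data.encode(code_page)
    # b64encode returns bytes; base64 output is plain ASCII text.
    return base64.b64encode(raw_bytes).decode("ascii")


print(base64_encode_sketch("Hello"))  # prints SGVsbG8=
print(base64_encode_sketch(""))       # prints None
```

Because base64 output is roughly one third longer than its input, the target column must be wide enough for the encoded text, which is why the procedure above has you increase the column length of the base64 text column.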


Example:

You want to extract home address, city, state, and zip code information

from email content using the Inxight SDX Web service. One of the

requirements for the Inxight SDX Web service is to send the content in

base64-encoded format and to specify the character set of the content.

Inxight SDX Web service returns the extract type and name in

base64-encoded format.

For example, create a data flow with a flat file source that contains the data.

The column name of the content data is CONTENT.

Map the source in a query transform as follows. In the column mapping

editor for the CONTENT column, specify:

base64_encode(CONTENT, 'UTF-8')

Map this query to another query transform that invokes the Inxight SDX Web service. The response arrives in an NRDM schema called Extract Entities Response, in which the extract fields hold base64-encoded values.

Map to another query transform and decode the base64-encoded data as in the following example:

base64_decode(extract_name, 'UTF-8')

Related Topics

• base64_decode

base64_decode

Returns the source data after decoding the base64-encoded input.

Syntax

base64_decode(base64-encoded input, 'UTF-8')

Return Value

varchar

Returns the source data after decoding the base64-encoded input. If the input is NULL or the size of the data is 0, Data Integrator returns NULL. Otherwise, it returns the base64-decoded data that conforms to RFC 2045.

Where

base64-encoded input    The base64-encoded input data. Does not support long or BLOB data types.

UTF-8                   The code page of the output data. UTF-8 is required for Data Integrator version 11.7.3.

Related Topics

• base64_encode
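The decoding rule can be sketched the same way in Python. As before, this is only an illustration under stated assumptions: the function name is hypothetical, None stands in for NULL, and error handling for malformed base64 input is not described in these notes and is therefore left to Python's defaults.

```python
import base64


def base64_decode_sketch(encoded_input, code_page="UTF-8"):
    """Mimic the documented rule: NULL or zero-length input yields NULL,
    otherwise the base64 text is decoded and read as UTF-8."""
    if encoded_input is None or len(encoded_input) == 0:
        return None
    raw_bytes = base64.b64decode(encoded_input)
    return raw_bytes.decode(code_page)


print(base64_decode_sketch("SGVsbG8="))  # prints Hello
print(base64_decode_sketch(None))        # prints None
```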

Job Server enhancements

Using multithreaded processing for incoming requests, each Data Integrator

Job Server can now accommodate up to 50 Designer clients simultaneously

with no compromise in response time. (To accommodate more than 50

Designers at a time, create more Job Servers.)
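As a quick sizing aid for the limit above, the following Python sketch computes how many Job Servers a given number of simultaneous Designer clients would call for. The function name is hypothetical, and the sketch assumes Designers can be spread evenly across Job Servers.

```python
import math

# Limit stated in these notes: up to 50 simultaneous Designer clients per Job Server.
MAX_DESIGNERS_PER_JOB_SERVER = 50


def job_servers_needed(designer_clients):
    """Return the minimum number of Job Servers for the given client count."""
    if designer_clients < 0:
        raise ValueError("designer_clients must not be negative")
    # At least one Job Server is always required to run jobs.
    return max(1, math.ceil(designer_clients / MAX_DESIGNERS_PER_JOB_SERVER))


print(job_servers_needed(50))   # prints 1
print(job_servers_needed(120))  # prints 3
```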

In addition, the Job Server now generates a Job Server log file for each day.

You can retain the Job Server logs for a fixed number of days using a new

setting on the Administrator Log retention period page.

Finally, each Designer client only displays the logs for jobs executed from that Designer, not for jobs executed using the Management Console, command line, or Web services.

Getting Started Guide

Citrix Presentation Server version 4.0

The documentation that describes Citrix was removed from the Data Integrator Getting Started Guide in a prior version. Data Integrator now supports Citrix Presentation Server version 4.0. For more information, see documentation for Citrix support on the Business Objects developer community Web site at http://diamond.businessobjects.com/EIM/DataIntegrator.

AIX user resource limits

ADAPT00871860

In the chapter Installing Data Integrator in UNIX Systems, in the AIX user resource limits subsection, change the following line in the User resource limits table:

From:

  User resource limit    Value        Comments
  data (kbytes)          2097151      At least 2GB

To:

  User resource limit    Value        Comments
  data (kbytes)          unlimited    (blank)

Designer Guide

Changed-data capture for Microsoft SQL Server 2005

Data Integrator now supports changed-data capture (CDC) for Microsoft SQL

Server 2005.

In chapter 19, Techniques for Capturing Changed Data, the section "Setting

Up SQL Replication Server for CDC" was written for Microsoft SQL Server

2000.

The following procedure applies to Microsoft SQL Server 2005.


To configure publications for Microsoft SQL Server 2005 CDC

1. Start the Microsoft SQL Server Management Studio.

2. Select the SQL Server, right-click the Replication menu, then select New > Publication. The New Publication Wizard opens.

3. In the New Publication Wizard click Next.

4. Select the database that you want to publish and click Next.

5. Under Publication type, select Transactional publication, and then click

Next to continue.

6. Click to select tables and columns to publish as articles. Then click to

open Article Properties.

7. Set the following to False:

   - Copy clustered index

   - Copy INSERT, UPDATE and DELETE

   - Create schemas at subscriber

8. Set "Action if name is in use" to "keep the existing table unchanged".

9. Set Update delivery format and Delete delivery format to XCALL <stored procedure>. Click OK to save the article properties.

10. Configure Agent Security and specify the account connection setting.

Click Security Settings to set the Snapshot agent.

11. Configure the Agent Security account with system administration privileges

and click OK.

12. Enter the login password for the Log Reader Agent by clicking Security

Settings. Note that it has to be a login granting system administration

privileges.

13. In the Log Reader Agent Security window, enter and confirm password

information.

14. Click to select Create the publication then click Finish to create a new

publication.

15. To complete the wizard, enter a Publication name and click Finish to create your publication.

Creating Microsoft Excel workbook file formats on UNIX platforms

Note: The following new section will be added to Chapter 6: File Formats

in the Data Integrator Designer Guide.

This section describes how to use a Microsoft Excel workbook as a source

with a Job Server on a UNIX platform.

To create Microsoft Excel workbook file formats on Windows, see “Excel

workbook format” in the Data Integrator Reference Guide.

To access the workbook, you must create and configure an adapter instance

in the Administrator. The following procedure provides an overview of the

configuration process. For details about creating adapters, see Chapter 10,

“Adapters,” in the Data Integrator Management Console: Administrator Guide.

To create a Microsoft Excel workbook file format on UNIX

1. Using the Server Manager ($LINK_DIR/bin/svrcfg), ensure the UNIX Job

Server can support adapters. See “Configuring Job Servers and Access

Servers” in the Data Integrator Getting Started Guide.

2. Ensure a repository associated with the Job Server has been added to

the Administrator. To add a repository to the Administrator, see “Adding

repositories” in the Data Integrator Management Console: Administrator

Guide.

3. In the Administrator, add an adapter to access Excel workbooks. See

“Adding and configuring adapter instances” in the Data Integrator

Management Console: Administrator Guide.

You can only configure one Excel adapter per Job Server. Use the

following options:

• On the Installed Adapters tab, select MSExcelAdapter.

• On the Adapter Configuration tab for the Adapter instance name,

type BOExcelAdapter (required and case sensitive).

You may leave all other options at their default values except when processing files larger than 1 MB. In that case, change the Additional Java Launcher Options value to -Xms64m -Xmx512m or -Xms128m -Xmx1024m (the default is -Xms64m -Xmx256m). Note that Java memory management can prevent processing very large files (or many smaller files).

4. Start the adapter.

5. In the Designer on the Formats tab of the object library, create the file

format by importing the Excel workbook. For details, see “Excel format”

in the Data Integrator Reference Guide.

Note:

• To import the workbook, it must be available on a Windows file system.

You can later change the location of the actual file to use for processing

in the format source editor. See “Excel workbook source options” in the

Data Integrator Reference Guide.

• To reimport or view data in the Designer, the file must be available on

Windows.

• Entries in the error log file might be represented numerically for the date

and time fields.

Additionally, Data Integrator writes the records with errors to the output

(in Windows these records are ignored).


Reference Guide

The following updates apply to the Data Integrator Reference Guide.

Maximum number of loaders

ADAPT00667119

Data Integrator now supports a maximum number of loaders of 10.

The following sections describe the option Number of Loaders.

• Chapter 2, Data Integrator Objects, section "Target" in Table 2-30: Target table options available in all datastores

• Chapter 5, Transforms, section "Data_Transfer" in subsection "Target table options"

Replace the first paragraph in these descriptions of Number of Loaders

with the following paragraph:

Loading with one loader is known as "single loader loading." Loading when

the number of loaders is greater than one is known as "parallel loading." The

default number of loaders is 1. The maximum number of loaders is 10. If you

specify a number greater than this maximum value, Data Integrator uses 10

loaders.
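The capping rule in the replacement paragraph can be sketched in Python as follows. The function names are hypothetical, and the sketch does not cover values below 1 because these notes do not say how Data Integrator treats them.

```python
DEFAULT_LOADERS = 1   # default stated in these notes
MAX_LOADERS = 10      # maximum stated in these notes


def effective_loaders(requested=DEFAULT_LOADERS):
    """Return the number of loaders actually used: values above the
    maximum are reduced to 10."""
    return min(requested, MAX_LOADERS)


def loading_mode(requested=DEFAULT_LOADERS):
    """Name the loading mode using the terms from the paragraph above."""
    if effective_loaders(requested) == 1:
        return "single loader loading"
    return "parallel loading"


print(effective_loaders(25))  # prints 10
print(loading_mode(1))        # prints single loader loading
print(loading_mode(4))        # prints parallel loading
```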

Reserved words

ADAPT00621691

Reserved words should not be used as the user name when you create a

Data Integrator repository.

In chapter 10, Reserved Words, the section "About Reserved Words" lists

the reserved words that should not be used as names for design elements.

Replace the first paragraph with the following paragraph:

The following words have special meanings in Data Integrator and therefore

should not be used as names for work flows, data flows, transforms, or other

design elements that you create. They should also not be used as user

names when you create a Data Integrator repository. They are reserved with

any combination of upper- and lower-case letters.

Microsoft Excel workbook format for UNIX

In chapter 2, Objects, "Descriptions of objects," the "Excel workbook format"

entry, in the Notes section replace the fifth bulleted item with the following.

• For workbook-specific (global) named ranges, Data Integrator would name a range called range as range. However for worksheet-specific (local) named ranges, Data Integrator would name a range called range that belongs to the worksheet Sheet1 as range!Sheet1.

In UNIX, you must also include the worksheet name when defining a

workbook-specific (global) named range.


Salesforce.com Adapter Interface Guide

When you create a new Custom table in Salesforce.com and attempt to fetch

CDC data from that table from the Data Integrator Designer, if the CDC table

does not specify a starting date, Data Integrator will throw an error stating:

Error reading from <custom table name>: <There was an unexpected error. Salesforce.com message is startDate before or replication enabled date>.

Therefore, the "Using the CDC table source default start date" section of the

Salesforce.com Adapter Interface Guide should state the following:

When you do not specify a value for the start date:

• Data Integrator uses the beginning of the Salesforce.com retention period as the start date if a check-point is not available (during initial execution).

• Data Integrator uses the check-point as the start date if a check-point is available and occurs within the Salesforce.com retention period. If the check-point occurs before the retention period, Data Integrator uses the beginning of retention period as the start date.

• Data Integrator may throw an error message. Business Objects recommends that you specify a default start date for your CDC tables.
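The start-date rules listed above can be summarized in a short Python sketch. The function and parameter names are hypothetical, and the first branch (an explicitly specified start date is used as given) is an assumption, since the rules above only describe the case where no value is specified.

```python
from datetime import datetime


def cdc_start_date(retention_start, checkpoint=None, default_start=None):
    """Pick the CDC start date following the rules listed above.

    retention_start: beginning of the Salesforce.com retention period
    checkpoint:      last check-point, or None on initial execution
    default_start:   value entered for the CDC table source, or None
    """
    if default_start is not None:
        # Assumption: a specified start date is used as given.
        return default_start
    if checkpoint is None:
        # No check-point yet (initial execution).
        return retention_start
    if checkpoint < retention_start:
        # Check-point is older than the retention period.
        return retention_start
    # Check-point falls within the retention period.
    return checkpoint


retention = datetime(2007, 10, 1)
print(cdc_start_date(retention))                        # retention start
print(cdc_start_date(retention, datetime(2007, 10, 15)))  # the check-point
print(cdc_start_date(retention, datetime(2007, 9, 1)))    # retention start
```

For a custom table created after the retention period began, the first rule yields a date earlier than the table itself, which is the situation that produces the error quoted above.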

Please note the following correction to the Salesforce.com Adapter Interface

Guide version 11.7.0.0. These changes will be incorporated into a future

release of the manual set.

In Chapter 3, page 29, change the text under "Using the CDC table source

default start date" as follows:

When you do not specify a value for the start date:

• Data Integrator uses the beginning of the Salesforce.com retention period as the start date if a check-point is not available (during initial execution). However, if a table is created within the Salesforce.com retention period and a check-point is not available, the execution will return an error message. To work around this problem, drill into the source object and enter a value for the CDC table source default start date. The value must be a date that occurs after the date the table was created.


Resolved issues


Please refer to http://support.businessobjects.com/documentation for the

latest version of these release notes, which includes resolved issues specific

to version 11.7.3.

The numbers in the following table are sorted in ascending order and refer

to the Business Objects ADAPT system Problem Report tracking number.

ADAPT00534412

When creating a BusinessObjects Universe from Data Integrator using either Microsoft SQL Server or Sybase datastores, the table names were not qualified with the proper database name and owner name in the BusinessObjects Universe. This issue is resolved. Customers using Universe Builder must apply the BusinessObjects Enterprise CHF-13 patch level or later for this fix to take effect. Contact Customer Support for information on how to download CHF patches.

See also ADAPT00534412 in the CHF-13 documentation for additional information regarding this fix.

ADAPT00613822

When a DTD schema contains a standard XML header, adapters could not import the schema. This issue has been resolved.

ADAPT00619275

In this release, Data Integrator uses a new version of the Xerces2 library that, when using Data Integrator Web services, allows you to import a WSDL file that previously caused the following error:

XML parser failed: Error <An exception occurred! Type:NetAccessorException.

This issue has been resolved.

ADAPT00619336

When editing column and table descriptions in a datastore, the descriptions sometimes got lost. This issue has been addressed.

ADAPT00619350

Data Integrator always works in binary collation for the Table Comparison transform. If the comparison table sort order is not binary, Data Integrator will generate inconsistent results between cached, row-by-row, and sorted comparison methods.

ADAPT00619352

When using regular loading and bulk loading for Microsoft SQL Server targets, decimal data rounding was not the same with the two loading methods. This problem has been addressed.

ADAPT00619355

In metadata Reports, the start timestamp for data flows displayed incorrectly. This problem has been fixed.

ADAPT00619357

In a data flow with an XML source containing a join with a nested query, the WHERE clause and ORDER BY clause now compile properly without getting an Unknown type error.

ADAPT00619387

The push-down capability of Informix sources has been improved. Data Integrator now pushes down more queries to the Informix database for evaluation, and you can expect better performance in those scenarios.

ADAPT00619393

Metadata Reports was not showing column-mapping information. This is no longer an issue.

ADAPT00619403

When importing Microsoft SQL Server tables from the Designer, locks would not release on SQL Server's tempdb database. This problem has been resolved.

ADAPT00619413

When working with the Query transform in the Data Integrator Designer, outer join entries sometimes disappeared from the Query transform when modifying other parts of the Query transform. This problem has been fixed.

ADAPT00619414

The Profiler can now profile Microsoft SQL Server database tables that use NT authentication.

ADAPT00619421

When importing a SQL Server table that contained an index with the index name size greater than 64, the table import operation failed. This problem has been resolved.

ADAPT00619427

For Microsoft SQL Server datastores, if the table owner name is DBO and the CMS connection is also Microsoft SQL Server with a login user name of sa, the updated Universe will determine the new table schema to be new because it compares DBO to sa as the owner name. Therefore, do not use Microsoft SQL Server as a CMS connection with sa as the login name.

ADAPT00619438

Data Integrator generated incorrect outer join syntax when accessing DB2 on AS/400. This issue has been fixed.

ADAPT00619479

Due to a known Informix issue, two phase commit (XA) transactions in IBM® Informix® Dynamic Server could cause Data Integrator to fail when loading the data to an IBM Informix Dynamic Server or profiling data with Informix. The typical Informix errors are:

Could not position within a table

and

Could not do a physical-order read to fetch next row

The reference number from the IBM site is Reference# 1172548

http://www-1.ibm.com/support/docview.wss?uid=swg21172548

ADAPT00619756

An issue with post-load commands in the table loader getting corrupted has been fixed.

ADAPT00619781

After upgrading Data Integrator from 11.0 to 11.5 or 11.7, metadata Reports failed with a Java error. This issue has been fixed.

ADAPT00619824

When using the Profiler to profile a Microsoft SQL Server table containing columns of text data type, the profiling server now works properly.

Resolved issues

7

ADAPT00619835
When running the Metadata Integrator with a clustered CMS environment, CMS computer names were used when gathering metadata. This caused the CMS computers to appear as separate systems in Impact and Lineage reports. In this release, the Metadata Integrator gathers the name of the cluster. Because of this change in behavior, if you are using a clustered environment and have already run the Metadata Integrator against the CMS system, you need to delete the data previously collected. Please contact Business Objects Customer Support for details on how to perform this task.

ADAPT00619871
When using an Informix version 7.3 datastore and client software 2.90, the Informix datastore now correctly imports tables into Data Integrator.

ADAPT00619893
If the NLS_LENGTH_SEMANTICS flag was set to char in a UTF-8 encoded repository, the Profiler did not work properly for Oracle databases. This problem has been fixed.

ADAPT00619934
The Data Integrator is_valid_datetime() function returned true if the hour contained a value greater than 24. This problem has been fixed.

ADAPT00619969
Data Integrator jobs sometimes exited abnormally on the Linux platform if there was a lookup_ext function call to an Oracle database with a datetime column in the condition list. This fix applies to all UNIX platforms.
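The is_valid_datetime() fix above concerns range checking of the hour field. As a rough illustration only, the sketch below shows the same kind of check in Python. It is not Data Integrator's implementation: the function name is hypothetical, and the format string uses Python's strptime codes rather than Data Integrator format strings.

```python
from datetime import datetime


def is_valid_datetime_sketch(value: str, fmt: str = "%Y-%m-%d %H:%M:%S") -> bool:
    """Return True only if value parses under fmt with every field in range.

    Hypothetical helper for illustration; Python's %H accepts hours 00-23,
    so 24 and above are rejected here.
    """
    try:
        datetime.strptime(value, fmt)
    except ValueError:
        # Raised for out-of-range fields (for example hour 25) or a format mismatch.
        return False
    return True
```

A validator of this shape rejects "2007-10-31 25:00:00" instead of reporting it as valid, which is the behavior the fix restores.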

ADAPT00620002
On Windows XP operating systems, columns in the query editor now appear correctly highlighted.

ADAPT00620025
In the Administrator, you can now sort schedules by job name.

ADAPT00620158
The Difference Viewer no longer shows that a column's data type and nullable attribute change after changing the owner name of the table.

ADAPT00620250
Data Integrator did not support non-ASCII characters that use the eighth bit (for example, German umlauts) in metadata. This is no longer an issue.
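To make the "eighth bit" wording concrete: in a single-byte encoding such as ISO 8859-1 (Latin-1), ASCII characters fit in seven bits, while characters such as ü are stored as a byte with the high bit set. The following is a minimal sketch, assuming Latin-1 purely for illustration; the release note does not say which encodings were affected, and the helper name is hypothetical.

```python
def uses_eighth_bit(text: str, encoding: str = "latin-1") -> bool:
    """Return True if any encoded byte has its high (eighth) bit set."""
    return any(byte & 0x80 for byte in text.encode(encoding))


# "ü" is U+00FC, which Latin-1 encodes as the single byte 0xFC (binary 11111100).
umlaut_byte = "ü".encode("latin-1")[0]
```

A name such as "Müller" therefore cannot pass unchanged through any code path that assumes 7-bit ASCII.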


ADAPT00620297
Fixed-width file formats were not being created correctly from existing flat files. This problem has been fixed.

ADAPT00620308
When a table schema imported into Data Integrator contained a long column that did not match its associated data type in the database, it could cause an access violation error in the Data Integrator engine. This problem has been resolved.

ADAPT00620314
When a real-time job terminated, two more instances of the job started. This problem has been fixed. Real-time jobs now start only once if terminated abnormally.

ADAPT00620336
The documentation for Catch error types and groups was unclear. The Reference Guide and Designer Guide have been corrected to clarify that you specify exception groups, instead of individual error numbers, in a Try/Catch block.

ADAPT00620338
The Designer could not import materialized views on an Oracle 10g database. This issue has been addressed.

ADAPT00620345
When validating a large job, the Designer sometimes aborted because the system ran out of available memory. This problem has been fixed.

ADAPT00620352
When monitoring job execution, on the Monitor tab, the columns Row count and Elapsed Time were not sorted in ascending order. This problem has been fixed.

ADAPT00620375
Data Integrator license keys sometimes did not work with dual Ethernet cards. This problem has been resolved.

ADAPT00620377
When a DTD schema contained a standard XML header, adapters could not import the schema. This issue has been resolved.

ADAPT00620665
When data type conversion was required in a Query mapping (for example when mapping a varchar column to an integer column), the Designer displayed warnings during validation, but an error occurred at runtime. This issue has been addressed.


ADAPT00620689
The Data Integrator index() function was not pushed down to Oracle. The fix addresses this problem. Please note that there are slight differences between executing the index function within Oracle and executing the index function within Data Integrator. See the instr() function in the Oracle documentation for details.

ADAPT00620882
Using the functions lpad and rpad sometimes led to crashes such as an access violation. This problem has been fixed.

ADAPT00620958
Jobs that were running were not displayed in the Administrator if the log retention period had been reached. This problem has been fixed.

ADAPT00621069
The following error was encountered when working with ABAP programs:

ABAP SYNTAX ERROR Without the addition "CLIENT SPECIFIED", you cannot specify...

This problem has been resolved.

ADAPT00621143
When using a variable of type datetime in the Reverse Pivot transform in the Pivot Axis, the following warning is no longer thrown: "Unexpected axis value <2003-08-15> found in transform". This issue is resolved in this release by providing variable support in the Reverse Pivot transform.
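The index() push-down fix above refers to Oracle's instr() for the behavioral details. As a rough aid, the sketch below models the basic instr() conventions in Python: positions are 1-based, and 0 is returned when the substring is absent. It is a simplified model and not Data Integrator's index() implementation. It does not cover negative positions or NULL handling, the function name is illustrative, and the way successive occurrences are located (advancing one character past the previous match) is an assumption.

```python
def instr_sketch(string: str, substring: str, position: int = 1, occurrence: int = 1) -> int:
    """Return the 1-based position of the nth occurrence found at or after
    `position`, or 0 if there is no such occurrence."""
    if position < 1 or occurrence < 1:
        raise ValueError("this sketch supports only position >= 1 and occurrence >= 1")
    if not substring:
        raise ValueError("an empty substring is not modeled in this sketch")

    start = position - 1  # convert the 1-based position to a 0-based index
    found = -1
    for _ in range(occurrence):
        found = string.find(substring, start)
        if found == -1:
            return 0  # absent: 0 rather than Python's -1
        start = found + 1  # assumption: continue one character after the match start
    return found + 1  # convert back to a 1-based position
```

For example, instr_sketch("CORPORATE FLOOR", "OR", 3, 2) yields 14, which matches the example given in the Oracle documentation for INSTR. When a job depends on the exact return value for a missing substring, test both execution paths rather than assuming they agree.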

ADAPT00621184
Passwords on some pages of the Administrator are now encrypted properly. The password text can no longer be viewed under any circumstances.

ADAPT00621198
When using multiple datastore configurations for SAP datastores, the folder names between the configurations are no longer switched.

ADAPT00621229
WSDLs generated by Data Integrator can now be imported into a .NET environment.

ADAPT00621278
In this release, Data Integrator has been enhanced to generate DTDs that can handle multiple rows.


ADAPT00621334
Data Integrator can now import an XML file as a large varchar column. Please note that when loading this data into a target database, most databases have a maximum size restriction for their character data columns. Note the restrictions for your target database and design the Data Integrator jobs accordingly.

ADAPT00621340
When a job has multiple validation transforms, the labels that appear in the DI_ERRORCOLUMN target table are now correct.

ADAPT00621996
When entering Japanese characters in the Case transform labels, you no longer get an "Internal Application Error" when saving the data flow.

ADAPT00622074
If you entered an invalid user name or password in the Administrator, the values remained in the fields. The password field now clears.

ADAPT00622121
When using a variable in the Error file name field in the flat file editor, a runtime error occurred when running the job. This problem has been resolved.

ADAPT00622137
LONG and TEXT data type columns do not get profiled.

ADAPT00622188
It is now possible to enter a text delimiter in the flat file format. The format rules and limitations for the text delimiter are the same as the rules applicable to the field and column delimiters.
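As a general illustration of what a text delimiter does in a delimited file, the sketch below uses Python's csv module, where the text delimiter corresponds to the quotechar parameter. This is not how Data Integrator parses flat files. The function and parameter names are hypothetical, and the sample record is invented.

```python
import csv
import io
from typing import List


def parse_delimited(text: str, column_delimiter: str = ",", text_delimiter: str = '"') -> List[List[str]]:
    """Split text into rows and fields, treating anything enclosed in the
    text delimiter as one field even if it contains the column delimiter."""
    reader = csv.reader(
        io.StringIO(text),
        delimiter=column_delimiter,
        quotechar=text_delimiter,
    )
    return [row for row in reader]


# The comma inside the quoted name does not start a new column.
rows = parse_delimited('1,"Smith, John",NY\n')
```

Without a text delimiter, the same record would split into four fields instead of three.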

ADAPT00622190
If load triggers were set up in a table loader, sometimes Data Integrator did not execute the regular load. This problem has been resolved.

ADAPT00625180
Sometimes Data Integrator wrote incorrect data to the target loader overflow file if using the data type char. This issue has been resolved.

ADAPT00627739
The following error sometimes occurred when Data Integrator processed a complex nested COBOL copybook:

Wrong sequence number for field ...

This problem has been addressed.


ADAPT00629178

ADAPT00631055

ADAPT00632204

ADAPT00633094

ADAPT00633872

ADAPT00633885

In a Case transform, when entering a tab character in the

expression field and saving the data flow, the text following

the tab character disappeared. This issue has been fixed.

While loading data from a source database to a target

database, Data Integrator generated the ORA-01461 error

when using an Oracle database as the repository. The

NLS_CHARACTERSET of the Oracle database was AL32UTF8.

This problem has been fixed.
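AL32UTF8 is Oracle's UTF-8 character set, in which one character can occupy from one to four bytes. The release note does not state the root cause of the error, so the following is only a minimal sketch of how character counts and byte counts diverge under UTF-8, a difference that matters whenever a column or bind size is expressed in bytes. The helper name is hypothetical.

```python
from typing import Tuple


def char_and_byte_length(text: str) -> Tuple[int, int]:
    """Return (number of characters, number of bytes when encoded as UTF-8)."""
    return len(text), len(text.encode("utf-8"))


ascii_lengths = char_and_byte_length("Mueller")   # ASCII only: one byte per character
umlaut_lengths = char_and_byte_length("Müller")   # "ü" takes two bytes in UTF-8
kanji_lengths = char_and_byte_length("日本語")     # each character takes three bytes
```

A value that fits a limit counted in characters can therefore exceed the same limit counted in bytes.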