Page 1

AWS Snowball

User Guide

AWS Snowball: User Guide

Copyright © 2018 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

Page 2

AWS Snowball User Guide

Amazon's trademarks and trade dress may not be used in connection with any product or service that is not Amazon's, in any manner

that is likely to cause confusion among customers, or in any manner that disparages or discredits Amazon. All other trademarks not

owned by Amazon are the property of their respective owners, who may or may not be affiliated with, connected to, or sponsored by

Amazon.

Page 3

AWS Snowball User Guide

Table of Contents

....................................................................................................................................................... vi

What Is a Snowball? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

Snowball Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

Prerequisites for Using AWS Snowball .......................................................................................... 2

Tools and Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

Related Services ......................................................................................................................... 2

Are You a First-Time User of AWS Snowball? ................................................................................. 2

Pricing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Device Differences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Use Case Differences .......................................................................................................... 3

Hardware Differences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Tool Differences ................................................................................................................. 5

Other Differences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

How It Works ............................................................................................................................ 6

How It Works: Concepts ...................................................................................................... 7

How It Works: Implementation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Jobs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Job Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

Job Details ...................................................................................................................... 12

Job Statuses .................................................................................................................... 13

Setting Up ............................................................................................................................... 14

Sign Up for AWS .............................................................................................................. 14

Create an IAM User . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Next Step ........................................................................................................................ 15

Getting Started . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Sign Up for AWS ...................................................................................................................... 16

Create an Administrator IAM User .............................................................................................. 16

Importing Data into Amazon S3 ................................................................................................. 16

Create an Import Job . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Receive the Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Connect the Snowball to Your Local Network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Transfer Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Return the Appliance ........................................................................................................ 24

Monitor the Import Status . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Exporting Data from Amazon S3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Create an Export Job ........................................................................................................ 25

Receive the Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Connect the Snowball to Your Local Network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Transfer Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Return the Appliance ........................................................................................................ 32

Repeat the Process ........................................................................................................... 32

Where Do I Go from Here? ........................................................................................................ 32

Best Practices .................................................................................................................................. 33

Security Best Practices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Network Best Practices ............................................................................................................. 33

Resource Best Practices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Speeding Up Data Transfer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

How to Transfer Petabytes of Data Efficiently . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Planning Your Large Transfer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Calibrating a Large Transfer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Transferring Data in Parallel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Using the Snowball Console .............................................................................................................. 40

Cloning an Import Job .............................................................................................................. 40

iii

Page 4

AWS Snowball User Guide

Using Export Ranges . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Export Range Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Getting Your Job Completion Report and Logs ............................................................................. 42

Canceling Jobs ......................................................................................................................... 43

Using a Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Changing Your IP Address ......................................................................................................... 49

Transferring Data ..................................................................................................................... 51

Transferring Data with the Snowball Client . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Using the Snowball Client ................................................................................................. 52

Transferring Data with the Amazon S3 Adapter for Snowball ......................................................... 65

Downloading and Installing the Amazon S3 Adapter for Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Using the Amazon S3 Adapter for Snowball ........................................................................ 66

Shipping Considerations .................................................................................................................... 73

Preparing a Snowball for Shipping ............................................................................................. 73

Region-Based Shipping Restrictions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Shipping a Snowball ................................................................................................................. 74

Shipping Carriers .............................................................................................................. 74

Security ........................................................................................................................................... 77

Encryption in AWS Snowball ...................................................................................................... 77

Server-Side Encryption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Authorization and Access Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Authentication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Access Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

AWS Key Management Service in Snowball .................................................................................. 84

Using the AWS-Managed Customer Master Key for Snowball .................................................. 85

Creating a Custom KMS Envelope Encryption Key ................................................................. 85

Authorization with the Amazon S3 API Adapter for Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Other Security Considerations for Snowball . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Data Validation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Checksum Validation of Transferred Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Common Validation Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Manual Data Validation for Snowball During Transfer ................................................................... 88

Manual Data Validation for Snowball After Import into Amazon S3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Notifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Supported Network Hardware .................................................................................................... 91

Workstation Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Limits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Regional Limitations for AWS Snowball ....................................................................................... 95

Limitations on Jobs in AWS Snowball ......................................................................................... 95

Limitations on Transferring On-Premises Data with a Snowball ...................................................... 96

Limitations on Shipping a Snowball ............................................................................................ 96

Limitations on Processing Your Returned Snowball for Import . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Troubleshooting ............................................................................................................................... 98

Troubleshooting Connection Problems ........................................................................................ 98

Troubleshooting Data Transfer Problems ..................................................................................... 98

Troubleshooting Client Problems ................................................................................................ 99

Troubleshooting Snowball Client Validation Problems ........................................................... 99

HDFS Troubleshooting ..................................................................................................... 100

Troubleshooting Adapter Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Troubleshooting Import Job Problems ....................................................................................... 101

Troubleshooting Export Job Problems ....................................................................................... 101

Job Management API ...................................................................................................................... 102

API Endpoint .......................................................................................................................... 102

API Version . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

API Permission Policy Reference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Related Topics ........................................................................................................................ 105

iv

Page 5

AWS Snowball User Guide

Document History . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

AWS Glossary ................................................................................................................................. 108

v

Page 6

AWS Snowball User Guide

This guide is for the Snowball (50 TB or 80 TB of storage space). If you are looking for documentation for

the Snowball Edge, see the AWS Snowball Edge Developer Guide.

vi

Page 7

AWS Snowball User Guide

Snowball Features

What Is an AWS Snowball Appliance?

AWS Snowball is a service that accelerates transferring large amounts of data into and out of AWS using

physical storage appliances, bypassing the Internet. Each AWS Snowball appliance type can transport

data at faster-than internet speeds. This transport is done by shipping the data in the appliances through

a regional carrier. The appliances are rugged shipping containers, complete with E Ink shipping labels.

With a Snowball, you can transfer hundreds of terabytes or petabytes of data between your on-premises

data centers and Amazon Simple Storage Service (Amazon S3). AWS Snowball uses Snowball appliances

and provides powerful interfaces that you can use to create jobs, transfer data, and track the status of

your jobs through to completion. By shipping your data in Snowballs, you can transfer large amounts

of data at a significantly faster rate than if you were transferring that data over the Internet, saving you

time and money.

Note

There are many options for transferring your data into AWS. Snowball is intended for

transferring large amounts of data. If you want to transfer less than 10 terabytes of data

between your on-premises data centers and Amazon S3, Snowball might not be your most

economical choice.

Snowball uses Snowball appliances shipped through your region's carrier. Each Snowball is protected

by AWS Key Management Service (AWS KMS) and made physically rugged to secure and protect your

data while the Snowball is in transit. In the US regions, Snowballs come in two sizes: 50 TB and 80 TB. All

other regions have 80 TB Snowballs only.

Snowball Features

Snowball with the Snowball appliance has the following features:

• You can import and export data between your on-premises data storage locations and Amazon S3.

• Snowball has an 80 TB model available in all regions, and a 50 TB model only available in the US

regions.

• Encryption is enforced, protecting your data at rest and in physical transit.

• You don't have to buy or maintain your own hardware devices.

• You can manage your jobs through the AWS Snowball Management Console, or programmatically with

the job management API.

• You can perform local data transfers between your on-premises data center and a Snowball.

These transfers can be done through the Snowball client, a standalone downloadable client, or

programmatically using Amazon S3 REST API calls with the downloadable Amazon S3 Adapter for

Snowball. For more information, see Transferring Data with a Snowball (p. 51).

• The Snowball is its own shipping container, and its E Ink display changes to show your shipping label

when the Snowball is ready to ship. For more information, see Shipping Considerations for AWS

Snowball (p. 73).

• For a list of regions where the Snowball appliance is available, see AWS Snowball in the AWS General

Reference.

Note

Snowball doesn't support international shipping or shipping between regions outside of the US.

For more information on shipping restrictions, see Region-Based Shipping Restrictions (p. 74).

1

Page 8

AWS Snowball User Guide

Prerequisites for Using AWS Snowball

Prerequisites for Using AWS Snowball

Before transferring data into Amazon S3 using Snowball, you should do the following:

• Create an AWS account and an administrator user in AWS Identity and Access Management (IAM). For

more information, see Creating an IAM User for Snowball (p. 79).

• If you are importing data, do the following:

• Confirm that the files and folders to transfer are named according to the Object Key Naming

Guidelines for Amazon S3. Any files or folders with names that don't meet these guidelines won't be

imported into Amazon S3.

• Plan what data you want to import into Amazon S3. For more information, see How to Transfer

Petabytes of Data Efficiently (p. 36).

• If you are exporting data, do the following:

• Understand what data will be exported when you create your job. For more information, see Using

Export Ranges (p. 40).

• For any files with a colon (:) in the file name, change the file names in Amazon S3 before you create

the export job to get these files. Files with a colon in the file name fail export to Microsoft Windows

Server.

Tools and Interfaces

Snowball uses the AWS Snowball Management Console and the job management API for creating and

managing jobs. To perform data transfers on the Snowball appliance locally, use the Snowball client or

the Amazon S3 Adapter for Snowball. To learn more about using these in detail, see the following topics:

• Using the AWS Snowball Management Console (p. 40)

• Using an AWS Snowball Appliance (p. 45)

• Transferring Data with a Snowball (p. 51)

We also recommend that you check out the job management API for AWS Snowball. For more

information, see AWS Snowball API Reference.

Services Related to AWS Snowball

This guide assumes that you are an Amazon S3 user.

Are You a First-Time User of AWS Snowball?

If you are a first-time user of the Snowball service with the Snowball appliance, we recommend that you

read the following sections in order:

1. To learn more about the different types of jobs, see Jobs for Standard Snowball Appliances (p. 11).

2. For an end-to-end overview of how Snowball works with the Snowball appliance, see How AWS

Snowball Works with the Standard Snowball Appliance (p. 6).

3. When you're ready to get started, see Getting Started with AWS Snowball (p. 16).

2

Page 9

AWS Snowball User Guide

Pricing

Pricing

For information about the pricing and fees associated with the AWS Snowball, see AWS Snowball Pricing.

AWS Snowball Device Differences

The Snowball and the Snowball Edge are two different devices. This guide is for the Snowball. If you are

looking for documentation for the Snowball Edge, see the AWS Snowball Edge Developer Guide. Both

devices allow you to move huge amounts of data into and out of Amazon S3, they both have the same

job management API, and they both use the same console. However, the two devices differ in hardware

specifications, some features, what transfer tools are used, and price.

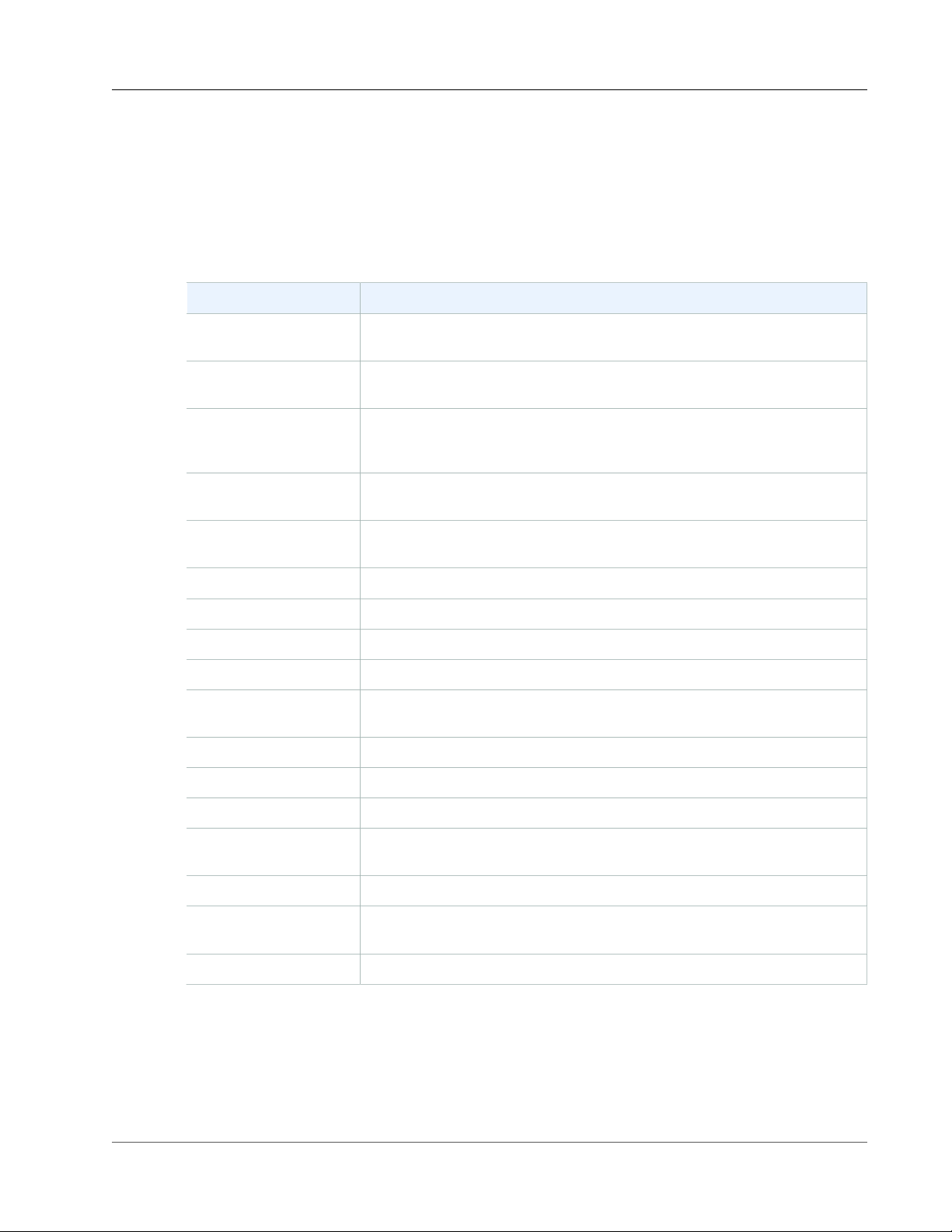

AWS Snowball Use Case Differences

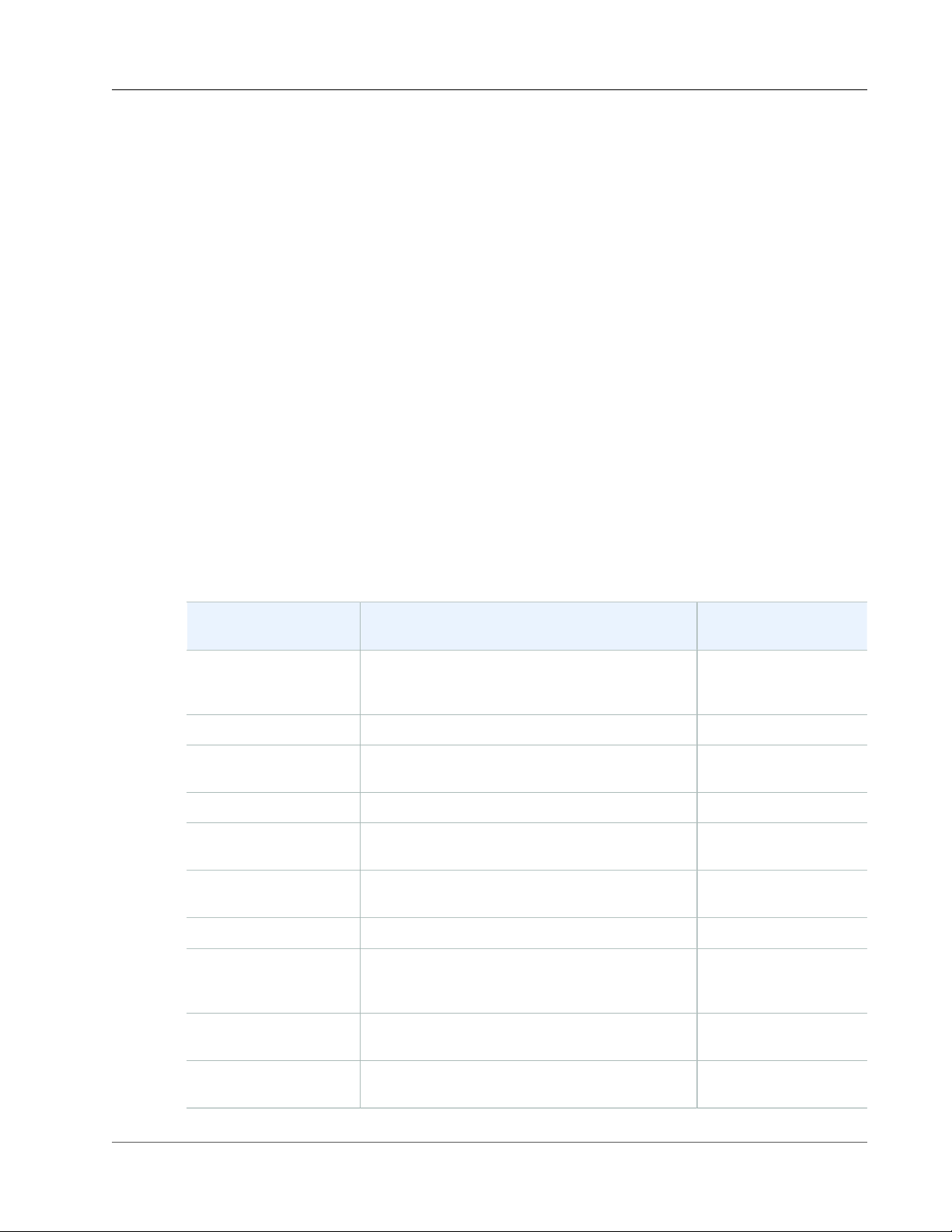

Following is a table that shows the different use cases for the different AWS Snowball devices:

Use case Snowball Snowball Edge

Import data into Amazon S3

Copy data directly from HDFS

Export from Amazon S3

Durable local storage

Use in a cluster of devices

Use with AWS Greengrass (IoT)

Transfer files through NFS with a

GUI

✓ ✓

✓

✓ ✓

✓

✓

✓

✓

AWS Snowball Hardware Differences

Following is a table that shows how the devices differ from each other, physically. For information

on specifications for the Snowball, see AWS Snowball Specifications (p. 91). For information on

specifications for the Snowball Edge, see AWS Snowball Edge Specifications.

3

Page 10

AWS Snowball User Guide

Hardware Differences

Snowball Snowball Edge

Each device has different storage capacities, as follows:

Storage capacity (usable

Snowball Snowball Edge

capacity)

50 TB (42 TB) - US regions only

80 TB (72 TB)

100 TB (83 TB)

100 TB Clustered (45 TB per

✓

✓

✓

✓

node)

Each device has the following physical interfaces for management purposes:

Physical interface Snowball Snowball Edge

E Ink display – used to track

✓ ✓

shipping information and

configure your IP address.

LCD display – used to manage

✓

connections and provide some

administrative functions.

4

Page 11

AWS Snowball User Guide

Tool Differences

AWS Snowball Tool Differences

The following outlines the different tools used with the AWS Snowball devices, and how they are used:

Snowball Tools

Snowball client with Snowball

• Must be downloaded from the AWS Snowball Tools Download page and installed on a powerful

workstation that you own.

• Can transfer data to or from the Snowball. For more information, see Using the Snowball

Client (p. 52).

• Encrypts data on your powerful workstation before the data is transferred to the Snowball.

Amazon S3 Adapter for Snowball with Snowball

• Must be downloaded from the AWS Snowball Tools Download page and installed on a powerful

workstation that you own.

• Can transfer data to or from the Snowball. For more information, see Transferring Data with the

Amazon S3 Adapter for Snowball (p. 65).

• Encrypts data on your powerful workstation before the data is transferred to the Snowball.

Snowball Edge Tools

Snowball client with Snowball Edge

• Must be downloaded from the AWS Snowball Tools Download page and installed on a computer that

you own.

• Must be used to unlock the Snowball Edge or the cluster of Snowball Edge devices. For more

information, see Using the Snowball Client.

• Can't be used to transfer data.

Amazon S3 Adapter for Snowball with Snowball Edge

• Is already installed on the Snowball Edge by default. It does not need to be downloaded or installed.

• Can transfer data to or from the Snowball Edge. For more information, see Using the Amazon S3

Adapter.

• Encrypts data on the Snowball Edge while the data is transferred to the device.

File interface with Snowball Edge

• Is already installed on the Snowball Edge by default. It does not need to be downloaded or installed.

• Can transfer data by dragging and dropping files up to 150 GB in size from your computer to the

buckets on the Snowball Edge through an easy-to-configure NFS mount point. For more information,

see Using the File Interface for the AWS Snowball Edge.

• Encrypts data on the Snowball Edge while the data is transferred to the device.

AWS Greengrass console with Snowball Edge

• With a Snowball Edge, you can use the AWS Greengrass console to update your AWS Greengrass group

and the core running on the Snowball Edge.

5

Page 12

AWS Snowball User Guide

Other Differences

Differences Between Items Provided for the Snowball and

Snowball Edge

The following outlines the differences between the network adapters, cables used, and cables provided

for the Snowball and Snowball Edge.

Network Interface Snowball Support Snowball Edge

Support

RJ45

SFP+

SFP+ (with optic

connector)

QSFP

For more information on the network interfaces, cables, and connectors that work with the different

device types, see the following topics:

• Supported Network Hardware (p. 91) for Snowballs in this guide.

• Supported Network Hardware in the AWS Snowball Edge Developer Guide.

✓ ✓

✓ ✓

✓ ✓

✓

Cables Provided with

Device

Only provided with

Snowball

Only provided with

Snowball

No cables provided for

either device. No optic

connector provided for

Snowball Edge devices.

An optic connector is

provided with each

Snowball

No cables or optics

provided

AWS Snowball Other Differences

For other differences, including FAQs and pricing information, see:

• https://aws.amazon.com/snowball

• https://aws.amazon.com/snowball-edge

How AWS Snowball Works with the Standard Snowball Appliance

Following, you can find information on how AWS Snowball works, including concepts and its end-to-end

implementation.

Topics

• How It Works: Concepts (p. 7)

• How It Works: Implementation (p. 9)

6

Page 13

AWS Snowball User Guide

How It Works: Concepts

How It Works: Concepts

How Import Works

Each import job uses a single Snowball appliance. After you create a job in the AWS Snowball

Management Console or the job management API, we ship you a Snowball. When it arrives in a few days,

you’ll connect the Snowball to your network and transfer the data that you want imported into Amazon

S3 onto that Snowball using the Snowball client or the Amazon S3 Adapter for Snowball.

When you’re done transferring data, ship the Snowball back to AWS, and we’ll import your data into

Amazon S3.

7

Page 14

How Export Works

AWS Snowball User Guide

How It Works: Concepts

Each export job can use any number of Snowball appliances. After you create a job in the AWS Snowball

Management Console or the job management API, a listing operation starts in Amazon S3. This listing

operation splits your job into parts. Each job part can be up to about 80 TB in size, and each job part has

exactly one Snowball associated with it. After your job parts are created, your first job part enters the

Preparing Snowball status.

Soon after that, we start exporting your data onto a Snowball. Typically, exporting data takes one

business day. However, this process can take longer. Once the export is done, AWS gets the Snowball

ready for pickup by your region's carrier. When the Snowball arrives at your data center or office in a few

days, you’ll connect the Snowball to your network and transfer the data that you want exported to your

servers by using the Snowball client or the Amazon S3 Adapter for Snowball.

When you’re done transferring data, ship the Snowball back to AWS. Once we receive a returned

Snowball for your export job part, we perform a complete erasure of the Snowball. This erasure follows

the National Institute of Standards and Technology (NIST) 800-88 standards. This step marks the

completion of that particular job part. If there are more job parts, the next job part now is prepared for

shipping.

Note

The listing operation is a function of Amazon S3. You are billed for it as you are for any Amazon

S3 operation, even if you cancel your export job.

8

Page 15

AWS Snowball User Guide

How It Works: Implementation

How It Works: Implementation

The following are overviews of how the Snowball is implemented for importing and exporting data. Both

overviews assume that you'll use the AWS Snowball Management Console to create your job and the

Snowball client to locally transfer your data. If you'd rather work programmatically, to create jobs you

can use the job management API for Snowball. For more information, see AWS Snowball API Reference.

To transfer your data programmatically, you can use the Amazon S3 Adapter for Snowball. For more

information, see Transferring Data with the Amazon S3 API Adapter for Snowball (p. 65).

End-to-End Import Implementation

1. Create an import job – Sign in to the AWS Snowball Management Console and create a job. The

status of your job is now Job created, and we have queued your job request for processing. If there’s a

problem with your request, you can cancel your job at this point.

2. A Snowball is prepared for your job – We prepare a Snowball for your job, and the status of your job

is now Preparing Snowball. For security purposes, data transfers must be completed within 90 days

of the Snowball being prepared.

3. A Snowball is shipped to you by your region's carrier – The carrier takes over from here, and the

status of your job is now In transit to you. You can find your tracking number and a link to the

tracking website on the AWS Snowball Management Console. For information on who your region's

carrier is, see Shipping Carriers (p. 74).

4. Receive the Snowball – A few days later, your region's carrier delivers the Snowball to the address

that you provided when you created the job, and the status of your job changes to Delivered to you.

When the Snowball arrives, you’ll notice that it didn’t arrive in a box, because the Snowball is its own

shipping container.

5. Get your credentials and download the Snowball client – Get ready to start transferring data by

getting your credentials, your job manifest, and the manifest's unlock code, and then downloading the

Snowball client.

• The Snowball client is the tool that you’ll use to manage the flow of data from your on-premises

data source to the Snowball. You can download the Snowball client from the AWS Snowball Tools

Download page.

• The manifest is used to authenticate your access to the Snowball, and it is encrypted so that only

the unlock code can decrypt it. You can get the manifest from the AWS Snowball Management

Console when the Snowball is on-premises at your location.

• The unlock code is a 29-character code that also appears when you get your manifest. We

recommend that you write it down and keep it separate from the manifest to prevent unauthorized

access to the Snowball while it’s at your facility. The unlock code is visible when you get your

manifest.

6. Install and set up the Snowball client – Install the Snowball client on the computer workstation that

has your data source mounted on it.

7. Position the hardware – Move the Snowball into your data center and open it following the

instructions on the case. Connect the Snowball to power and your local network.

8. Power on the Snowball – Next, power on the Snowball by pressing the power button above the E Ink

display. Wait a few minutes, and the Ready screen appears.

9. Start the Snowball client – When you start the Snowball client on your workstation, type the IP

address of the Snowball, the path to your manifest, and the unlock code. The Snowball client decrypts

the manifest and uses it to authenticate your access to the Snowball.

10.Transfer data – Use the Snowball client to transfer the data that you want to import into Amazon S3

from your data source into the Snowball.

11.Prepare the Snowball for its return trip – After your data transfer is complete, power off the

Snowball and unplug its cables. Secure the Snowball’s cables into the cable caddie on the inside of the

Snowball’s back panel and seal the Snowball. Now the Snowball is ready to be returned.

9

Page 16

AWS Snowball User Guide

How It Works: Implementation

12.Your region's carrier returns the Snowball to AWS – While the carrier has the Snowball for shipping,

the status for the job becomes In transit to AWS.

13.AWS gets the Snowball – The Snowball arrives at AWS, and the status for your job becomes At AWS.

On average, it takes about a day for AWS to begin importing your data into Amazon S3.

14.AWS imports your data into Amazon S3 – When import starts, your job’s status changes to

Importing. The import can take a few days. At this point, if there are any complications or issues, we

contact you through email.

Once the import is complete, your job status becomes Completed, and a PDF file of your job

completion report becomes available for download from the AWS Snowball Management Console.

15.Your imported data now resides in Amazon S3 – With the import complete, the data that you

transferred is now in Amazon S3.

Now that you know how an import job works, you're ready to create your first job. For more information,

see Importing Data into Amazon S3 with AWS Snowball (p. 16).

For more information about the job management API for Snowball, see AWS Snowball API Reference.

End-to-End Export Implementation

1. Create an export job – Sign in to the AWS Snowball Management Console and create a job. This

process begins a listing operation in Amazon S3 to determine the amount of data to be transferred,

and also any optional ranges for objects within your buckets that your job will transfer. Once the

listing is complete, the AWS Snowball Management Console creates all the job parts that you'll need

for your export job. At this point, you can cancel your job if you need to.

Note

The listing operation is a function of Amazon S3. You are billed for it as you are for any

Amazon S3 operation, even if you cancel your export job.

2. A Snowball is prepared for your job part – Soon after your job parts are created, your first job part

enters the Preparing Snowball status. For security purposes, data transfers must be completed

within 90 days of the Snowball being prepared. When the Snowball is prepared, the status changes

to Exporting. Typically, exporting takes one business day; however, this process can take longer. Once

the export is done, the job status becomes Preparing shipment, and AWS gets the Snowball ready for

pickup.

3. A Snowball is shipped to you by your region's carrier – The carrier takes over from here, and the

status of your job is now In transit to you. You can find your tracking number and a link to the

tracking website on the AWS Snowball Management Console. For information on who your region's

carrier is, see Shipping Carriers (p. 74).

4. Receive the Snowball – A few days later, the carrier delivers the Snowball to the address you provided

when you created the job, and the status of your first job part changes to Delivered to you. When the

Snowball arrives, you’ll notice that it didn’t arrive in a box, because the Snowball is its own shipping

container.

5. Get your credentials and download the Snowball client – Get ready to start transferring data by

getting your credentials, your job manifest, and the manifest's unlock code, and then downloading the

Snowball client.

• The Snowball client is the tool that you’ll use to manage the flow of data from the Snowball to your

on-premises data destination. You can download the Snowball client from the AWS Snowball Tools

Download page.

• The manifest is used to authenticate your access to the Snowball, and it is encrypted so that only

the unlock code can decrypt it. You can get the manifest from the AWS Snowball Management

Console when the Snowball is on-premises at your location.

• The unlock code is a 29-character code that also appears when you get your manifest. We

recommend that you write it down and keep it separate from the manifest to prevent unauthorized

10

Page 17

AWS Snowball User Guide

Jobs

access to the Snowball while it’s at your facility. The unlock code is visible when you get your

manifest.

6. Install and set up the Snowball client – Install the Snowball client on the computer workstation that

has your data source mounted on it.

7. Position the hardware – Move the Snowball into your data center and open it following the

instructions on the case. Connect the Snowball to power and your local network.

8. Power on the Snowball – Next, power on the Snowball by pressing the power button above the E Ink

display. Wait a few minutes, and the Ready screen appears.

9. Start the Snowball client – When you start the Snowball client on your workstation, type the IP

address of the Snowball, the path to your manifest, and the unlock code. The Snowball client decrypts

the manifest and uses it to authenticate your access to the Snowball.

10.Transfer data – Use the Snowball client to transfer the data that you want to export from the

Snowball appliance into your on-premises data destination.

11.Prepare the Snowball for its return trip – After your data transfer is complete, power off the

Snowball and unplug its cables. Secure the Snowball’s cables into the cable caddie on the inside of the

Snowball’s back panel and seal the Snowball. The Snowball is now ready to be returned.

12.Your region's carrier returns the Snowball to AWS – When the carrier has the Snowball, the status

for the job becomes In transit to AWS. At this point, if your export job has more job parts, the next job

part enters the Preparing Snowball status.

13.We erase the Snowball – Once we receive a returned Snowball we perform a complete erasure of the

Snowball. This erasure follows the NIST 800-88 standards.

Now that you know how an export job works, you're ready to create your first job. For more information,

see Exporting Data from Amazon S3 with Snowball (p. 24).

Jobs for Standard Snowball Appliances

A job in AWS Snowball (Snowball) is a discrete unit of work, defined when you create it in the console

or the job management API. Jobs have types, details, and statuses. Each of those elements is covered in

greater detail in the sections that follow.

Topics

• Job Types (p. 11)

• Job Details (p. 12)

• Job Statuses (p. 13)

Job Types

There are two different job types: import jobs and export jobs. Both of the Snowball job types are

summarized following, including the source of the data, how much data can be moved, and the result

you can expect at successful job completion. Although these two types of jobs have fundamental

differences, they share some common details The source can be local to your data center or office, or it

can be an Amazon S3 bucket.

Import into Amazon S3

An import job is the transfer of 80 TB or less of your data (located in an on-premises data source), copied

onto a single Snowball, and then moved into Amazon S3. For import jobs, Snowballs and jobs have a

one-to-one relationship, meaning that each job has exactly one Snowball associated with it. If you need

additional Snowballs, you can create new import jobs or clone existing ones.

11

Page 18

AWS Snowball User Guide

Job Details

Your data source for an import job should be on-premises. In other words, the storage devices that

hold the data to be transferred should be physically located at the address that you provided when you

created the job.

You can import any number of directories, files, and objects for each import job, provided the amount of

data you're importing fits within a single Snowball. In the US regions, Snowballs come in two sizes: 50 TB

and 80 TB. All other regions have 80 TB Snowballs only.

When you import files, each file becomes an object in Amazon S3 and each directory becomes a prefix.

If you import data into an existing bucket, any existing objects with the same names as newly imported

objects will be overwritten.

When the import has been processed and verified, AWS performs a complete erasure of the Snowball.

This erasure follows the National Institute of Standards and Technology (NIST) 800-88 standards.

After your import is complete, you can download a job report. This report alerts you to any objects that

failed the import process. You can find additional information in the success and failure logs.

Important

Don't delete your local copies of the transferred data until you can verify the results of the job

completion report and review your import logs.

Export from Amazon S3

An export job is the transfer of any amount of data (located in Amazon S3), copied onto any number of

Snowballs, and then moved one Snowball at a time into your on-premises data destination. When you

create an export job, it's split into job parts. Each job part is no more than 80 TB in size, and each job

part has exactly one Snowball associated with it.

Your data source for an export job is one or more Amazon S3 buckets. Once the data for a job part is

moved from Amazon S3 to a Snowball, you can download a job report. This report will alert you to any

objects that failed the transfer to the Snowball. You can find more information in your job's success and

failure logs.

You can export any number of objects for each export job, using as many Snowballs as it takes to

complete the transfer. Snowballs for an export job's job parts are delivered one after another, with

subsequent Snowballs shipping out to you once the previous job part has entered the In transit to AWS

status.

When you copy objects into your on-premises data destination from a Snowball, those objects are saved

as files. If you copy objects into a location that already holds files, any existing files with the same names

will be overwritten.

When AWS receives a returned Snowball, we perform a complete erasure of the Snowball. This erasure

follows the NIST 800-88 standards.

Important

Don't change, update, or delete the exported Amazon S3 objects until you can verify that all of

your contents for the entire job have been copied to your on-premises data destination.

When you create an export job, you can choose to export an entire Amazon S3 bucket or a specific range

of objects keys. For more information, see Using Export Ranges (p. 40).

Job Details

Each import or export job for Snowball is defined by the details that you specify when it's created. The

following list describes all the details of a job.

• Job name – A name for the job, containing alphanumeric characters, spaces, and any Unicode special

characters.

12

Page 19

AWS Snowball User Guide

Job Statuses

• Job type – The type of job, either import or export.

• Job ID – A unique 39-character label that identifies your job. The job ID appears at the bottom of the

shipping label that appears on the E Ink display, and in the name of a job's manifest file.

• Created date – The date that you created this job.

• Shipping speed – Speed options are based on region. For more information, see Shipping

Speeds (p. 76).

• IAM role ARN – This Amazon Resource Name (ARN) is the AWS Identity and Access Management

(IAM) role that is created during job creation with write permissions for your Amazon S3 buckets. The

creation process is automatic, and the IAM role that you allow Snowball to assume is only used to copy

your data between your Amazon S3 buckets and the Snowball. For more information, see Creating an

IAM Role for Snowball (p. 81).

• AWS KMS key – In Snowball, AWS Key Management Service (AWS KMS) encrypts the keys on each

Snowball. When you create your job, you also choose or create an ARN for an AWS KMS encryption key

that you own. For more information, see AWS Key Management Service in Snowball (p. 84).

• Snowball capacity – In the US regions, Snowballs come in two sizes: 50 TB and 80 TB. All other

regions have the 80 TB Snowballs only.

• Storage service – The AWS storage service associated with this job, in this case Amazon S3.

• Resources – The AWS storage service resources associated with your job. In this case, these are the

Amazon S3 buckets that your data is transferred to or from.

Job Statuses

Each job has a status, which changes to denote the current state of the job.

Job Status Description Job Type That Status

Applies To

Job created Your job has just been created. This status is the

only one during which you can cancel a job or its

job parts, if the job is an export job.

Preparing Snowball AWS is preparing a Snowball for your job. Both

Exporting AWS is exporting your data from Amazon S3 onto

a Snowball.

Preparing shipment AWS is preparing to ship a Snowball to you. Both

In transit to you The Snowball has been shipped to the address

you provided during job creation.

Delivered to you The Snowball has arrived at the address you

provided during job creation.

In transit to AWS You have shipped the Snowball back to AWS. Both

At AWS Your shipment has arrived at AWS. If you're

importing data, your import typically begins

within a day of its arrival.

Both

Export

Both

Both

Both

Importing AWS is importing your data into Amazon Simple

Storage Service (Amazon S3).

Completed Your import job or export job part has completed

successfully.

13

Import

Both

Page 20

AWS Snowball User Guide

Setting Up

Job Status Description Job Type That Status

Applies To

Canceled Your job has been canceled. You can only cancel

Snowball import jobs during the Job created

status.

Setting Up Your AWS Access

Before you use AWS Snowball (Snowball) for the first time, you need to complete the following tasks:

1. Sign Up for AWS (p. 14).

2. Create an IAM User (p. 14).

Sign Up for AWS

When you sign up for Amazon Web Services (AWS), your AWS account is automatically signed up for all

services in AWS, including AWS Import/Export. You are charged only for the services that you use. For

more information about pricing and fees for Snowball, see AWS Snowball Pricing. Snowball is not free to

use; for more information on what AWS services are free, see AWS Free Usage Tier.

If you have an AWS account already, skip to the next task. If you don't have an AWS account, use the

following procedure to create one.

To create an AWS account

1. Open https://aws.amazon.com/, and then choose Create an AWS Account.

Note

This might be unavailable in your browser if you previously signed into the AWS

Management Console. In that case, choose Sign in to a different account, and then choose

Create a new AWS account.

2. Follow the online instructions.

Both

Part of the sign-up procedure involves receiving a phone call and entering a PIN using the phone

keypad.

Note your AWS account number, because you'll need it for the next task.

Create an IAM User

Services in AWS, such as AWS Import/Export, require that you provide credentials when you access

them, so that the service can determine whether you have permission to access its resources. AWS

recommends not using the root credentials of your AWS account to make requests. Instead, create an

AWS Identity and Access Management (IAM) user, and grant that user full access. We refer to these users

as administrator users.

You can use the administrator user credentials, instead of root credentials of your account, to interact

with AWS and perform tasks, such as to create an Amazon S3 bucket, create users, and grant them

permissions. For more information, see Root Account Credentials vs. IAM User Credentials in the AWS

General Reference and IAM Best Practices in IAM User Guide.

If you signed up for AWS but have not created an IAM user for yourself, you can create one using the IAM

console.

14

Page 21

AWS Snowball User Guide

Next Step

To create an IAM user for yourself and add the user to an Administrators group

1. Use your AWS account email address and password to sign in as the AWS account root user to the

IAM console at https://console.aws.amazon.com/iam/.

Note

We strongly recommend that you adhere to the best practice of using the Administrator

IAM user below and securely lock away the root user credentials. Sign in as the root user

only to perform a few account and service management tasks.

2. In the navigation pane of the console, choose Users, and then choose Add user.

3. For User name, type Administrator.

4. Select the check box next to AWS Management Console access, select Custom password, and then

type the new user's password in the text box. You can optionally select Require password reset to

force the user to create a new password the next time the user signs in.

5. Choose Next: Permissions.

6. On the Set permissions for user page, choose Add user to group.

7. Choose Create group.

8. In the Create group dialog box, type Administrators.

9. For Filter, choose Job function.

10. In the policy list, select the check box for AdministratorAccess. Then choose Create group.

11. Back in the list of groups, select the check box for your new group. Choose Refresh if necessary to

see the group in the list.

12. Choose Next: Review to see the list of group memberships to be added to the new user. When you

are ready to proceed, choose Create user.

You can use this same process to create more groups and users, and to give your users access to your

AWS account resources. To learn about using policies to restrict users' permissions to specific AWS

resources, go to Access Management and Example Policies.

To sign in as this new IAM user, sign out of the AWS Management Console, then use the following URL,

where your_aws_account_id is your AWS account number without the hyphens (for example, if your

AWS account number is 1234-5678-9012, your AWS account ID is 123456789012):

https://your_aws_account_id.signin.aws.amazon.com/console/

Type the IAM user name and password that you just created. When you're signed in, the navigation bar

displays "your_user_name @ your_aws_account_id".

If you don't want the URL for your sign-in page to contain your AWS account ID, you can create an

account alias. From the IAM dashboard, choose Create Account Alias and type an alias, such as your

company name. To sign in after you create an account alias, use the following URL:

https://your_account_alias.signin.aws.amazon.com/console/

To verify the sign-in link for IAM users for your account, open the IAM console and check under AWS

Account Alias on the dashboard.

If you're going to create Snowball jobs through an IAM user that is not an administrator user, that

user needs certain permissions to use the AWS Snowball Management Console effectively. For more

information on those permissions, see Creating an IAM User for Snowball (p. 79).

Next Step

Getting Started with AWS Snowball (p. 16)

15

Page 22

AWS Snowball User Guide

Sign Up for AWS

Getting Started with AWS Snowball

With AWS Snowball (Snowball), you can transfer hundreds of terabytes or petabytes of data between

your on-premises data centers and Amazon Simple Storage Service (Amazon S3). Following, you can

find general instructions for creating and completing your first data transfer job. You can find more

information on specific components of Snowball later in this documentation. For an overview of the

service as a whole, see How AWS Snowball Works with the Standard Snowball Appliance (p. 6).

Both sets of instructions assume that you'll use the AWS Snowball Management Console to create your

job and the Snowball client to locally transfer your data. If you'd rather work programmatically, to create

jobs you can use the job management API for Snowball. For more information, see AWS Snowball API

Reference. To transfer your data programmatically, you can use the Amazon S3 Adapter for Snowball. For

more information, see Transferring Data with the Amazon S3 Adapter for Snowball (p. 65).

Sign Up for AWS

If you already have an AWS account, go ahead and skip to the next section: Create an Administrator IAM

User (p. 16). Otherwise, see Sign Up for AWS (p. 14).

Create an Administrator IAM User

If you already have an administrator AWS Identity and Access Management (IAM) user account, go

ahead and skip to one of the sections listed following. If you don't have an administrator IAM user, we

recommend that you create one and not use the root credentials of your AWS account to make requests.

To do so, see Create an IAM User (p. 14).

Important

There is no free tier for Snowball. To avoid unwanted charges and delays, read through the

relevant import or export section following before you start creating your jobs.

Next:

• Importing Data into Amazon S3 with AWS Snowball (p. 16)

• Exporting Data from Amazon S3 with Snowball (p. 24)

Importing Data into Amazon S3 with AWS Snowball

The process for importing data into Amazon S3 with Snowball has the following steps.

Topics

• Create an Import Job (p. 17)

• Receive the AWS Snowball Appliance (p. 18)

• Connect the AWS Snowball Appliance to Your Local Network (p. 20)

• Transfer Data (p. 21)

• Return the Appliance (p. 24)

• Monitor the Import Status (p. 24)

16

Page 23

AWS Snowball User Guide

Create an Import Job

Create an Import Job

To create an import job from the console

1. Sign in to the AWS Management Console and open the AWS Snowball Management Console.

2. Choose Create Job.

3. Plan your job.

In this optional step, you determine the number of jobs you need to create to finish transferring all

the data you want to import into Amazon S3. The answer you provide helps you better plan for your

data transfer.

Once you've finished this page, choose Next.

Note

If you're performing a petabyte scale data transfer, we recommend that you read How to

Transfer Petabytes of Data Efficiently (p. 36) before you create your first job.

4. Give shipping details.

On this page, you provide the shipping address that you want the Snowball for this job delivered

to. In some regions you choose your shipping speed as well. For more information, see Shipping

Speeds (p. 76).

Once you've finished this page, choose Next.

5. Give job details.

On this page, specify the details of your job. These details include the name of your import job,

the region for your destination Amazon S3 bucket, the specific Amazon S3 bucket to receive your

imported data, and the storage size of the Snowball. If you don't already have an Amazon S3 bucket,

you can create one on this page. If you create a new Amazon S3 bucket for your destination, note

that the Amazon S3 namespace for buckets is shared universally by all AWS users as a feature of the

service. Use a bucket name that is specific and clear for your usage.

Once you've finished this page, choose Next.

6. Set security.

On this page, you specify the following:

• The Amazon Resource Name (ARN) for the IAM role that Snowball assumes to import your data to

your destination S3 bucket when you return the Snowball.

• The ARN for the AWS Key Management Service (AWS KMS) master key to be used to protect your

data within the Snowball. For more information, see Security in AWS Snowball (p. 77).

Once you've finished this page, choose Next.

7. Set notifications.

On this page, specify the Amazon Simple Notification Service (Amazon SNS) notification options

for your job and provide a list of comma-separated email addresses to receive email notifications

for this job. You can also choose which job status values trigger these notifications. For more

information, see Snowball Notifications (p. 90).

Once you've finished this page, choose Next.

8. Review.

On the next page, review the information you've provided. To make changes, choose the Edit button

next to the step to change in the navigation pane, or choose Back.

17

Page 24

AWS Snowball User Guide

Receive the Snowball

Important

Review this information carefully, because incorrect information can result in unwanted

delays.

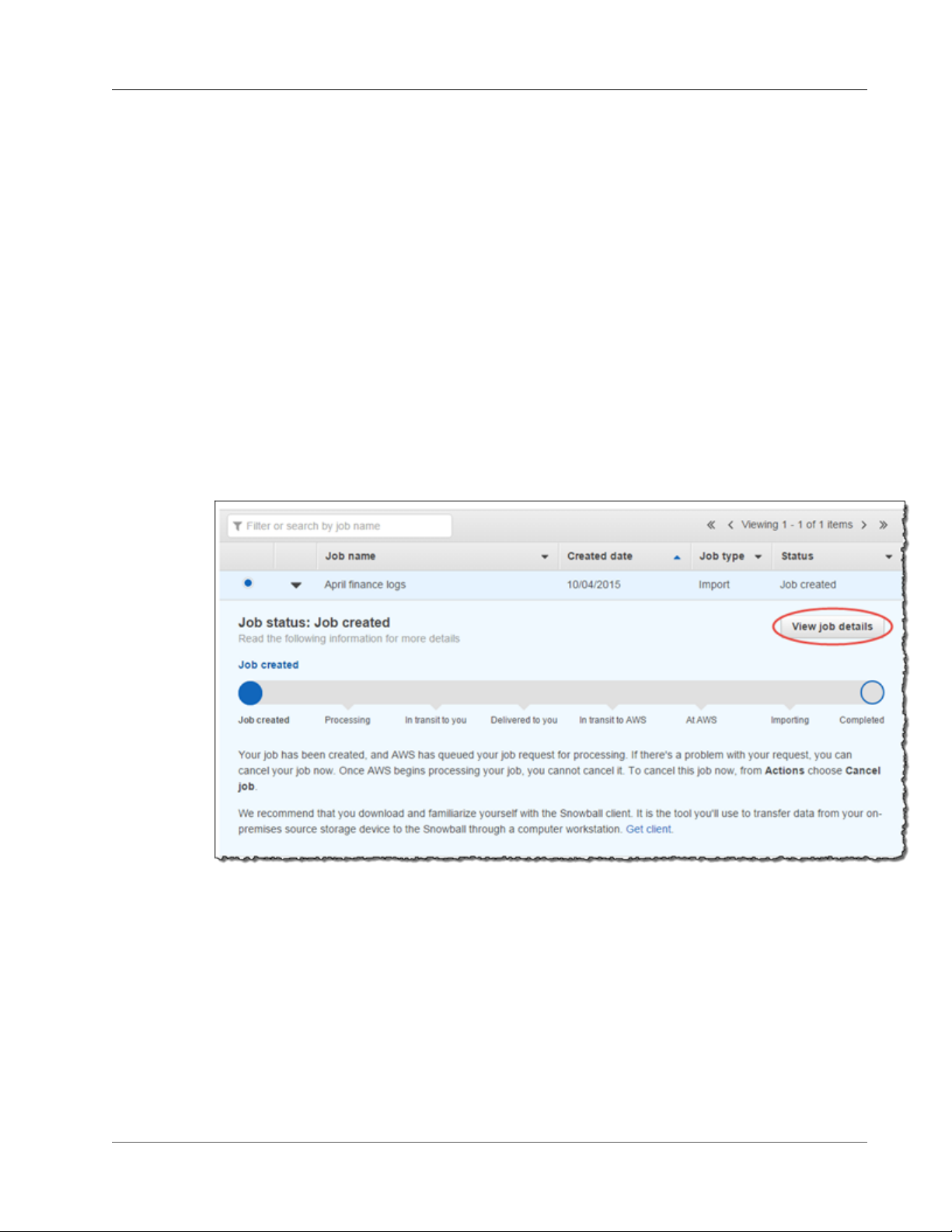

Once your job is created, you're taken to the job dashboard, where you can view and manage your jobs.

The last job you created is selected by default, with its Job status pane open.

Note

The Job created status is the only status during which you can cancel a job.

For more information on managing jobs from the AWS Snowball Management Console and tracking

job status, see Using the AWS Snowball Management Console (p. 40). Jobs can also be created and

managed with the job management API. For more information, see the AWS Snowball API Reference.

After you created your first import job, AWS processes the information you provided and prepares a

Snowball specifically for your import job into Amazon S3. During the processing stage, if there's an

issue with your job, we contact you by email. Otherwise, we ship a Snowball to the address you provided

when you created the job. Shipping can take a few days, but you can track the shipping status of the

Snowball we prepared for your job. In your job's details, you'll see a link to the tracking webpage with

your tracking number provided.

Next: Receive the AWS Snowball Appliance (p. 18)

Receive the AWS Snowball Appliance

When you receive the Snowball appliance, you'll notice that it doesn't come in a box. The Snowball is

its own physically rugged shipping container. When the Snowball first arrives, inspect it for damage

or obvious tampering. If you notice anything that looks suspicious about the Snowball, don't connect

it to your internal network. Instead, contact AWS Support and inform them of the issue so that a new

Snowball can be shipped to you.

Important

The Snowball is the property of AWS. Tampering with a Snowball is a violation of the AWS

Acceptable Use Policy. For more information, see http://aws.amazon.com/aup/.

Before you connect the Snowball to your network and begin transferring data, it's important to cover a

few basic elements of your data transfer.

• The Snowball – The following is what the Snowball will look like.

18

Page 25

AWS Snowball User Guide

Receive the Snowball

• Data source – This device holds the data that you want to transfer from your on-premises data center

into Amazon S3. It can be a single device, such as a hard drive or USB stick, or it can be separate

sources of data within your data center. The data source or sources must be mounted onto your

workstation in order to transfer data from them.

• Workstation – This computer hosts your mounted data source. You'll use this workstation to transfer

data to the Snowball. We highly recommend that your workstation be a powerful computer, able

to meet high demands in terms of processing, memory, and networking. For more information, see

Workstation Specifications (p. 93).

Next: Connect the AWS Snowball Appliance to Your Local Network (p. 20)

19

Page 26

AWS Snowball User Guide

Connect the Snowball to Your Local Network

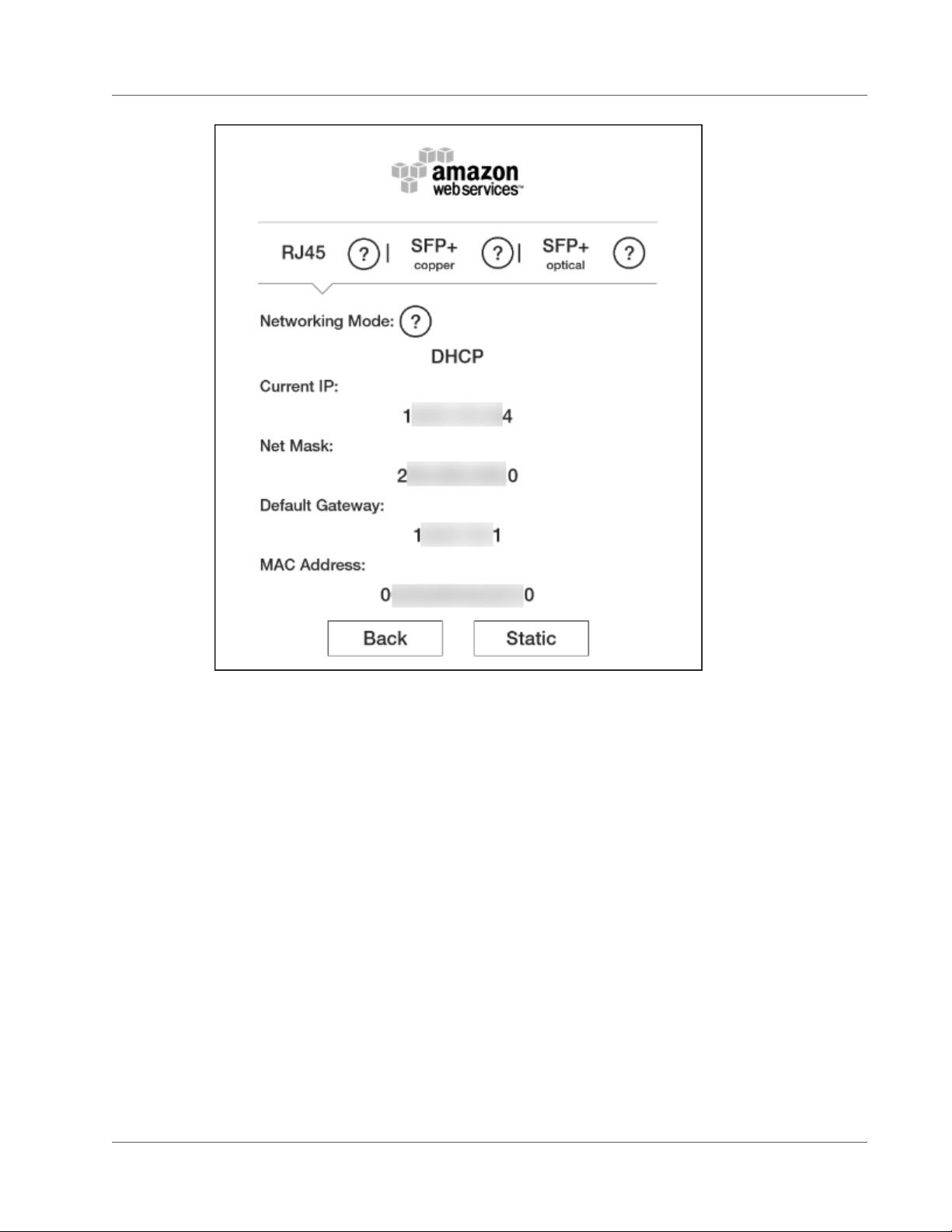

Connect the AWS Snowball Appliance to Your Local Network

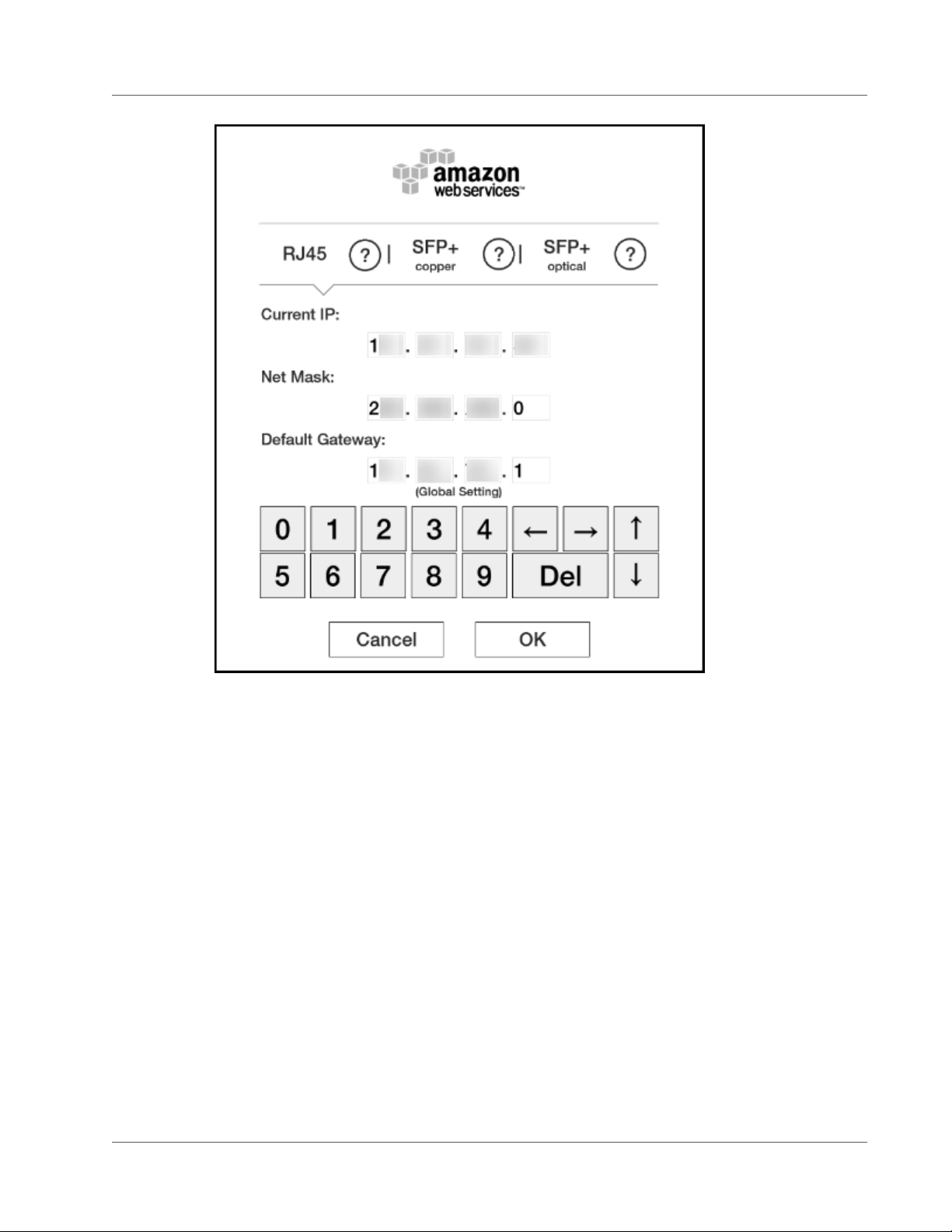

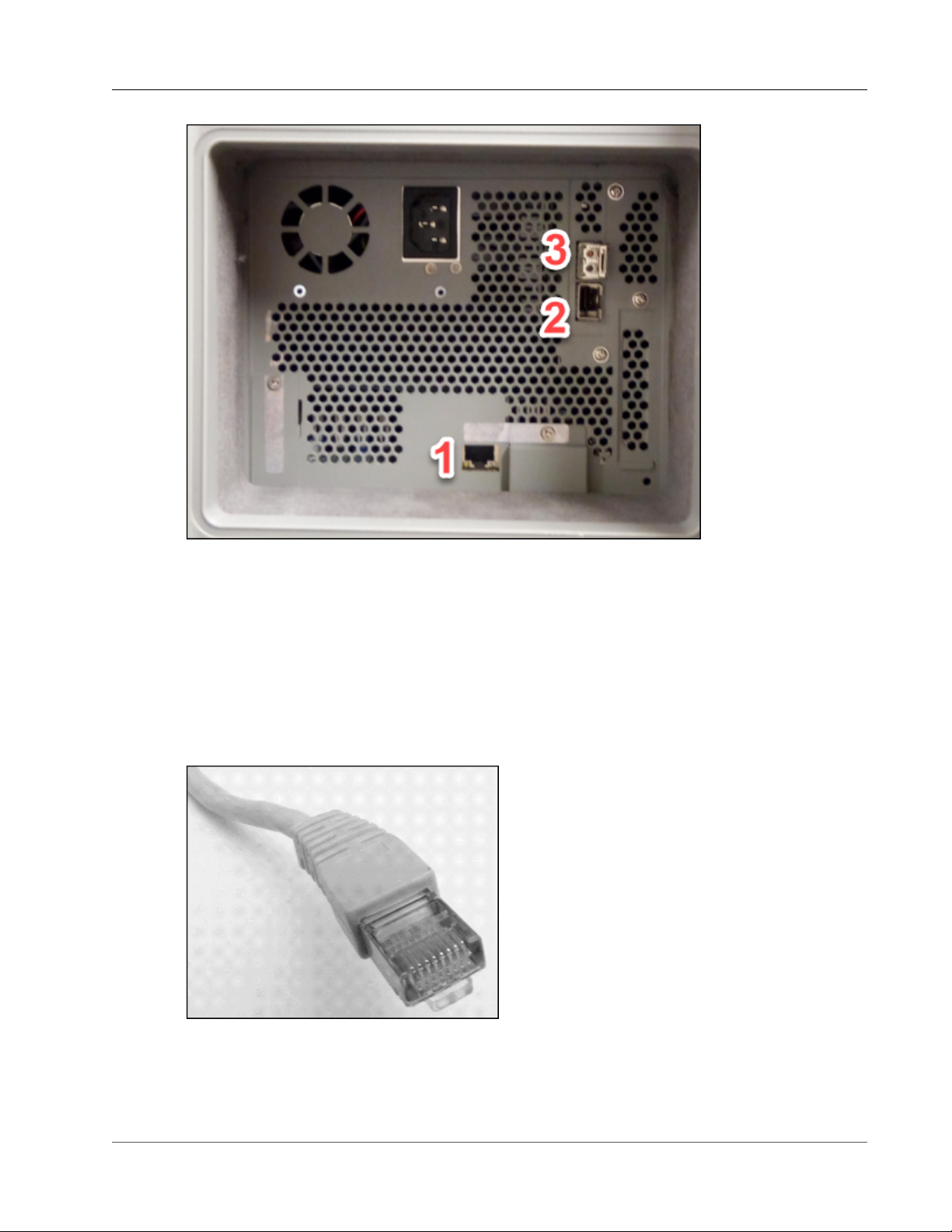

In this step, you'll connect the Snowball to your network. The Snowball appliance has two panels, a front

and a back, which are opened by latches and flipped up to rest on the top of the Snowball. Open the

front panel first, flip it on top of the Snowball, and then open the back panel, flipping it up to rest on the

first. Doing this gives you access to the touch screen on the E Ink display embedded in the front side of

the Snowball, and the power and network ports in the back.

Remove the cables from the cable catch, and plug the Snowball into power. Each Snowball has been

engineered to support data transfer over RJ45, SFP+ copper, or SFP+ optical 10 gigabit Ethernet. For SFP

+ optical, you'll have to use your own cable, connected to the SFP+ optical adapter in one of the SFP+

ports. For more information on cables and ports, see Supported Network Hardware (p. 91). Choose

a networking option, and plug the Snowball into your network. Power on the Snowball by pressing the

power button above the E Ink display.

1. Connect the powered-off Snowball to your network.

Note

We recommend that you set up your network connections so that there are as few hops as

possible between the data source, the workstation, and the Snowball.

2. Attach the power cable to the back of the Snowball, and then plug it in to a reliable source of power.

Then press the power button, located above the E Ink display, and wait for the E Ink display to read

Ready.

3. When the Snowball is ready, the E Ink display shows the following screen.

20

Page 27

AWS Snowball User Guide

Transfer Data

At this point, you can change the default network settings through the E Ink display by choosing

Network. To learn more about specifying network settings for the Snowball, see Changing Your IP

Address (p. 49).

Make a note of the IP address shown, because you'll need it to configure the Snowball client.

Important

To prevent corrupting your data, do not disconnect the Snowball or change its network

settings while transferring data.

The Snowball is now connected to your network.

Next: Transfer Data (p. 21)

Transfer Data

The following section discuss the steps involved in transferring data. These steps involve getting your

credentials, downloading and installing the Snowball client tool, and then transferring data from your

data source into the Snowball using the Snowball client.

Note

You can also transfer data programmatically with the Amazon S3 Adapter for Snowball. For

more information, see Transferring Data with the Amazon S3 Adapter for Snowball (p. 65).

Topics

21

Page 28

AWS Snowball User Guide

Transfer Data

• Get Your Credentials (p. 22)

• Install the AWS Snowball Client (p. 22)

• Use the AWS Snowball Client (p. 22)

• Stop the AWS Snowball Client, and Power Off the Snowball (p. 23)

• Disconnect the Appliance (p. 23)

Get Your Credentials

Each AWS Snowball job has a set of credentials that you must get from the AWS Snowball Management

Console or the job management API to authenticate your access to the Snowball. These credentials are

an encrypted manifest file and an unlock code. The manifest file contains important information about

the job and permissions associated with it. Without it, you won't be able to transfer data. The unlock

code is used to decrypt the manifest. Without it, you won't be able to communicate with the Snowball.

Note

You can only get your credentials after the Snowball appliance has been delivered to you. After

the appliance has been returned to AWS, the credentials for your job are no longer available.

To get your credentials by using the console

1. Sign in to the AWS Management Console and open the AWS Snowball Management Console at AWS

Snowball Management Console.

2. In the AWS Snowball Management Console, search the table for the specific job to download the job

manifest for, and then choose that job.

3. Expand that job's Job status pane, and select View job details

4. In the details pane that appears, expand Credentials. Make a note of the unlock code (including

the hyphens), because you'll need to provide all 29 characters to transfer data. Choose Download

manifest in the dialog box and follow the instructions to download the job manifest file to your

computer. The name of your manifest file includes your Job ID.

Note

As a best practice, we recommend that you don't save a copy of the unlock code in the same

location in the workstation as the manifest for that job. For more information, see Best

Practices for AWS Snowball (p. 33).

Now that you have your credentials, you're ready to transfer data.

Install the AWS Snowball Client

The Snowball client is one of the tools that you can use transfer from your on-premises data source to

the Snowball. You can download the Snowball client for your operating system from AWS Snowball

Tools Download page.

Use the AWS Snowball Client

In this step, you'll run the Snowball client from the workstation first to authenticate your access to the

Snowball for this job, and then to transfer data.

To authenticate your access to the Snowball, open a terminal or command prompt window on your

workstation and type the following command:

snowball start -i [Snowball IP Address] -m [Path/to/manifest/file] -u [29

character unlock code]

Following is an example of the command to configure the Snowball client.

22

Page 29

AWS Snowball User Guide

Transfer Data

snowball start -i 192.0.2.0 -m /Downloads/JID2EXAMPLE-0c40-49a7-9f53-916aEXAMPLE81manifest.bin -u 12345-abcde-12345-ABCDE-12345

In this example, the IP address for the Snowball is 192.0.2.0, the job manifest file that you downloaded is

JID2EXAMPLE-0c40-49a7-9f53-916aEXAMPLE81-manifest.bin, and the 29 character unlock code

is 12345-abcde-12345-ABCDE-12345.

When you've entered the preceding command with the right variables for your job, you get a

confirmation message. This message means that you're authorized to access the Snowball for this job.

Now you can begin transferring data onto the Snowball. Similarly to how Linux allows you to copy files

and folders with the copy (or cp) command, the Snowball client also uses a cp command. As in Linux,

when you use the copy command you'll provide the values of two paths in your command. One path

represents the source location of the data to be copied, and the second path represents the destination

where the data will be pasted. When you're transferring data, destination paths to the Snowball must

start with the s3:// root directory identifier.

During data transfer, you'll notice that there is at least one folder at the root level of the Snowball. This

folder and any others at this level have the same names as the destination buckets that were chosen

when this job was created. Data cannot be transferred directly into the root directory; it must instead go

into one of the bucket folders or into their subfolders.

To transfer data using the Snowball client, open a terminal or command prompt window on your

workstation and type the following command:

snowball cp [options] [path/to/data/source] s3://[path/to/data/destination]

Following is an example of the command to copy data using the client to the Snowball.

snowball cp --recursive /Logs/April s3://MyBucket/Logs

For more information on using the Snowball client tool, see Using the Snowball Client (p. 52). Use the

Snowball client commands to finish transferring your data into the Snowball. When you finish, it's time

to prepare the Snowball for its return trip.

Stop the AWS Snowball Client, and Power Off the Snowball

When you've finished transferring data on to the Snowball, prepare it for its return trip to AWS. To

prepare it, run the snowball stop command in the terminal of your workstation. Running this

command stops all communication to the Snowball from your workstation and performs local cleanup

operations in the background. When that command has finished, power off the Snowball by pressing the

power button above the E Ink display.

Disconnect the Appliance

Disconnect the Snowball cables. Secure the Snowball's cables into the cable caddie on the inside of the

Snowball back panel and seal the Snowball. When the return shipping label appears on the Snowball's E

Ink display, you're ready to drop it off with your region's carrier to be shipped back to AWS. To see who

your region's carrier is, see Shipping Carriers (p. 74).

Important

Don't delete your local copies of the transferred data until the import into Amazon S3 is

successful at the end of the process and you can verify the results of the data transfer.

Next:

Return the Appliance (p. 24)

23

Page 30

AWS Snowball User Guide

Return the Appliance

Return the Appliance

The prepaid shipping label on the E Ink display contains the correct address to return the Snowball.

For information on how to return your Snowball, see Shipping Carriers (p. 74). The Snowball will be

delivered to an AWS sorting facility and forwarded to the AWS data center. The carrier will automatically

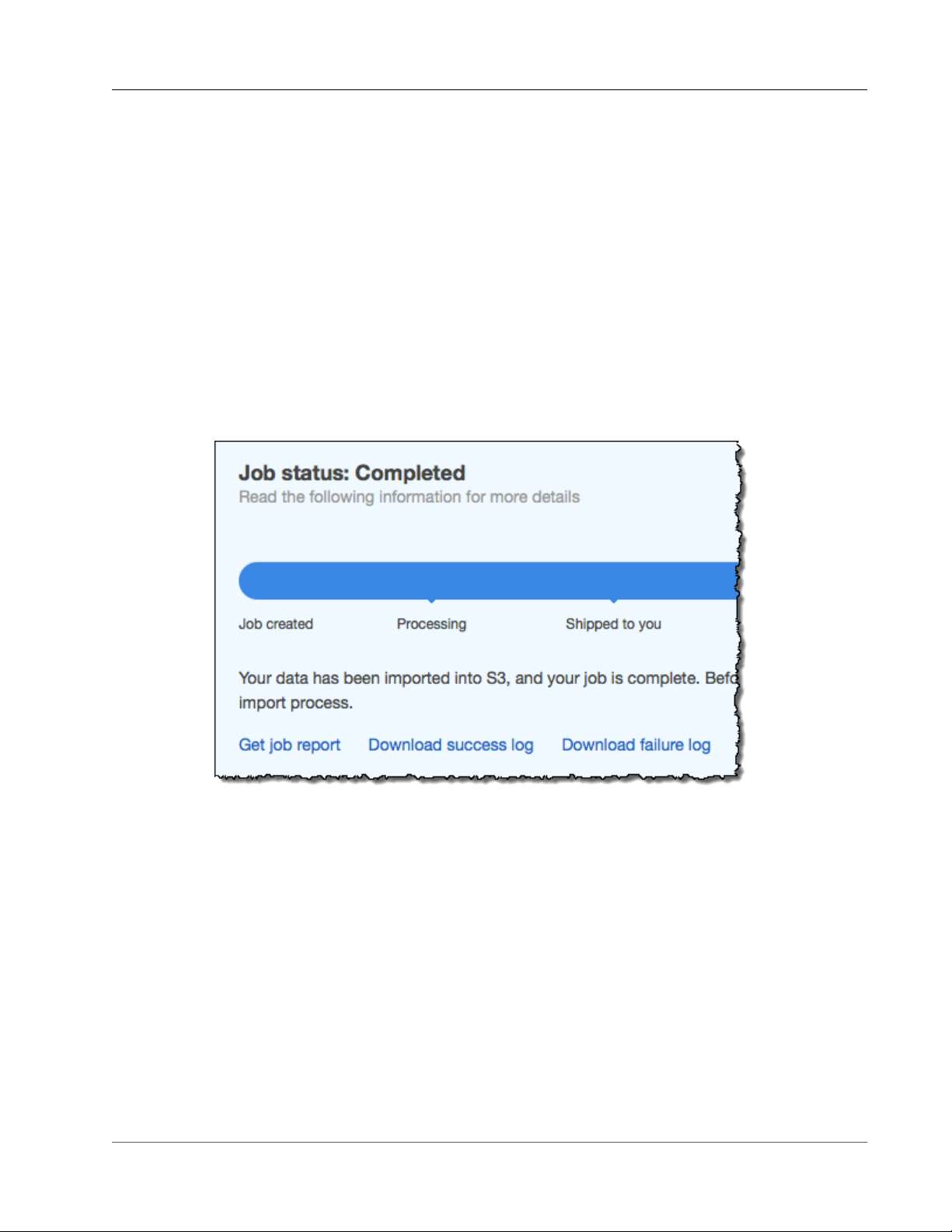

report back a tracking number for your job to the AWS Snowball Management Console. You can access