IBM z-OS User Manual

IBM eServer zSeries 990 and z/OS

Reference Guide

August 2004

Table of Contents

z/Architecture
IBM eServer zSeries 990
z990 Family Models
z990 and z900 Performance Comparison
z990 I/O SubSystem
z990 Channels and I/O Connectivity
Fibre Channel Connectivity
Open Systems Adapter-Express Features (OSA-Express)
HiperSockets
Cryptography
Availability
Advanced Availability Functions
Parallel Sysplex Cluster Technology
z990 Support for Linux
zSeries 990 Family Configuration Detail
Physical Characteristics
Coupling Facility - CF Level of Support
z/OS
z/VM
To Learn More


zSeries Overview

Technology has always accelerated the pace of change. New technologies enable new ways of doing business, shifting markets, changing customer expectations, and redefining business models. Each major enhancement to technology presents opportunities. Companies that understand and prepare for changes can gain advantage over competitors and lead their industries.

Customers of every size and in every industry are looking for ways to make their businesses more resilient in the face of change and uncertainty. They want the ability to react to rapidly changing market conditions, manage risk, outpace their competitors with new capabilities and deliver clear returns on investments.

Welcome to the on demand era, the next phase of e-business, in which companies move beyond simply integrating their processes to actually being able to sense and respond to fluctuating market conditions and provide products and services to customers on demand. While the former notion of on demand as a utility capability is a key component, on demand companies have much broader capabilities.

What does an on demand company look like?

• Responsive: It can sense and respond in real time to the changing needs of customers, employees, suppliers and partners.

• Variable: It must be capable of employing variable cost structures to do business at high levels of productivity, cost control, capital efficiency and financial predictability.

• Focused: It concentrates on its core competencies – areas where it has a differentiating advantage – and draws on the skills of strategic partners to manage needs outside of these competencies.

• Resilient: It can handle the ups and downs of the global market, and manage changes and threats with consistent availability, security and privacy – around the world, around the clock.

The IT infrastructure must evolve to support an on demand business. At its heart, the data center must transition to reflect these needs: it must be responsive to changing demands, variable to support the diverse environment, flexible so that applications can run on the optimal resources at any point in time, and resilient to support an always-open-for-business environment.

The on demand era plays to the strengths of the IBM eServer® zSeries®. The IBM eServer zSeries 900 (z900) was launched in 2000 and was the first IBM server "designed from the ground up for e-business." The latest member of the family, the IBM eServer zSeries 990 (z990), brings enriched functions that are required for the on demand data center.

The "responsive" data center needs systems that are managed to the quality of service goals of the business, that can be upgraded transparently to the user, and that are adaptable to the changing requirements of the business. With the zSeries you have a server with high levels of reliability and a balanced design to ensure high levels of utilization and consistently high service to the user. The capacity on demand features continue to evolve, helping to ensure that upgrading the servers is timely and meets the needs of your business. It's not just the capacity of the servers that can be changed on demand, but also the mix of workload and the allocation of resources to reflect the evolving needs and priorities of the business.


The variable data center needs to be able to respond to the ever-changing demands that occur when you support multiple diverse workloads as a single entity. It must maintain the required quality of service, and the cost of utilizing the resources must reflect the changing environment. The zSeries Intelligent Resource Director (IRD), which combines three key zSeries technologies (z/OS® Workload Manager (WLM), Logical Partitioning and Parallel Sysplex® technology), helps ensure that your most important workloads get the resources they need and constantly manages the resources according to the changing priorities of the business. With Workload License Charges (WLC), as the resources required by different applications, middleware and operating systems change over time, the software costs change to reflect this. In addition, new virtual Linux servers can be added in just minutes with zSeries virtualization technology to respond rapidly to huge increases in user activity.

The flexible data center must be adaptable to support change and ease integration. This is achieved through a combination of open and industry standards along with the adaptability to direct resources where they are required. IBM has been investing in standards for years, on zSeries as on its other servers. Key is the support for Linux, but let's not forget Java™ and XML and industry standard technologies, such as FCP, Ethernet and SCSI.

Finally the on demand data center must be designed to be resilient. The zSeries has been renowned for reliability and availability. The zSeries platform will help protect against both scheduled and unscheduled outages, and GDPS® enables protection from loss of complete sites.

The New zSeries from IBM – Impressive Investment – Unprecedented Performance

IBM's ongoing investment in zSeries technology has produced a re-invention of the zSeries server: the z990. The z990 makes the mainframe platform more relevant to current business success than ever before. Developed at an investment in excess of $1 billion, the new z990 introduces a host of new benefits to meet the needs of today's on demand business.

The major difference is the innovative book structure of the z990. This new packaging of processors, memory and I/O connections allows you to add incremental capacity to a zSeries server as you need it. This makes the z990 the most flexible and cost-effective zSeries server to date.

IBM’s investment in zSeries doesn’t stop here. To solidify the commitment to zSeries, IBM introduces the “Mainframe Charter” that provides a framework for future investment and a statement of IBM’s dedication to deliver ongoing value to zSeries customers in their transformation to on demand business.

Tools for Managing e-business

The IBM eServer product line is backed by a comprehensive suite of offerings and resources that provide value at every stage of IT implementation. These tools can help customers test possible solutions, obtain financing, plan and implement applications and middleware, manage capacity and availability, improve performance and obtain technical support across the entire infrastructure. The result is an easier way to handle the complexities and rapid growth of e-business. In addition, IBM Global Services experts can help with business and IT consulting, business transformation and total systems management services, as well as customized e-business solutions.


z/Architecture

The zSeries is based on the z/Architecture™, which is designed to reduce bottlenecks associated with the lack of addressable memory and to automatically direct resources to priority work through Intelligent Resource Director. The z/Architecture is a 64-bit superset of ESA/390.

z/Architecture is implemented on the z990 to allow full 64-bit real and virtual storage support. A maximum of 256 GB of real storage is available on z990 servers. The z990 can define any LPAR as having 31-bit or 64-bit addressability.

z/Architecture has:

• 64-bit general registers.

• New 64-bit integer instructions. Most ESA/390 architecture instructions with 32-bit operands have new 64-bit and 32- to 64-bit analogs.

• 64-bit addressing is supported for both operands and instructions, for both real addressing and virtual addressing.

• 64-bit address generation. z/Architecture provides 64-bit virtual addressing in an address space, and 64-bit real addressing.

• 64-bit control registers. z/Architecture control registers can specify regions, segments, or can force virtual addresses to be treated as real addresses.

• The prefix area is expanded from 4K to 8K bytes.

• New instructions provide quad-word storage consistency.

• The 64-bit I/O architecture allows CCW indirect data addressing to designate data addresses above 2 GB for both format-0 and format-1 CCWs.

• IEEE Floating Point architecture adds twelve new instructions for 64-bit integer conversion.

• The 64-bit SIE architecture allows a z/Architecture server to support both ESA/390 (31-bit) and z/Architecture (64-bit) guests. Zone Relocation is expanded to 64-bit for LPAR and z/VM®.

• 64-bit operands and general registers are used for all Cryptographic instructions.

• The implementation of 64-bit z/Architecture can help reduce problems associated with lack of addressable memory by making the addressing capability virtually unlimited (16 Exabytes).

z/Architecture Operating System Support

The z/Architecture is a tri-modal architecture capable of executing in 24-bit, 31-bit, or 64-bit addressing modes. Operating systems and middleware products have been modified to exploit the new capabilities of the z/Architecture. Immediate benefit can be realized by the elimination of the overhead of Central Storage to Expanded Storage page movement and the relief provided for those constrained by the 2 GB real storage limit of ESA/390. Application programs can run unmodified on the zSeries family of servers.
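The addressing limits mentioned above follow from simple arithmetic: an n-bit address can name 2^n bytes. The short Python sketch below is only an illustration and assumes binary units (1 GB = 2^30 bytes); it reproduces the 2 GB ESA/390 limit and the 16 exabyte figure quoted for 64-bit addressing, along with 16 MB for 24-bit mode.

```python
# Illustrative arithmetic only: an n-bit address can name 2**n distinct bytes.
ADDRESSING_MODES = {"24-bit": 24, "31-bit": 31, "64-bit": 64}
BINARY_UNITS = ["bytes", "KB", "MB", "GB", "TB", "PB", "EB"]


def addressable_bytes(bits: int) -> int:
    """Number of distinct byte addresses an address of `bits` bits can form."""
    return 2 ** bits


def human(nbytes: int) -> str:
    """Format a power-of-two byte count in binary units (1 KB = 1024 bytes)."""
    value, idx = nbytes, 0
    while value >= 1024 and idx < len(BINARY_UNITS) - 1:
        value //= 1024  # exact for powers of two
        idx += 1
    return f"{value} {BINARY_UNITS[idx]}"


if __name__ == "__main__":
    for mode, bits in ADDRESSING_MODES.items():
        print(f"{mode}: {human(addressable_bytes(bits))}")
```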

Expanded Storage (ES) is still supported for operating systems running in ESA/390 mode (31-bit). For z/Architecture mode (64-bit), ES is supported by z/VM. ES is not supported by z/OS in z/Architecture mode.

Although z/OS does not support Expanded Storage when running under the new architecture, all of the Hiperspace™ and VIO APIs, as well as the Move Page (MVPG) instruction, continue to operate in a compatible manner. There is no need to change products that use Hiperspaces.

Some of the exploiters of z/Architecture for z/OS include:

• DB2 Universal Database™ Server for z/OS

• IMS™

• Virtual Storage Access Method (VSAM)

• Remote Dual Copy (XRC)

• Tape and DASD access methods


IBM eServer zSeries 990

| Operating System | ESA/390 (31-bit) | z/Arch (64-bit) | Compatibility | Exploitation |
|---|---|---|---|---|
| OS/390® Version 2 Release 10 | Yes | Yes | Yes | No |
| z/OS Version 1 Release 2 | No* | Yes | Yes | No |
| z/OS Version 1 Release 3 | No* | Yes | Yes | No |
| z/OS Version 1 Release 4 | No* | Yes | Yes | Yes |
| z/OS Version 1 Release 5, 6 | No | Yes | Included | Included |
| Linux on S/390® | Yes | No | Yes | Yes |
| Linux on zSeries | No | Yes | Yes | Yes |
| z/VM Version 3 Release 1 | Yes | Yes | Yes | No |
| z/VM Version 4 Release 3 | Yes | Yes | Yes | No |
| z/VM Version 4 Release 4 | Yes | Yes | Included | Yes |
| z/VM Version 5 Release 1 (3Q04) | No | Yes | Included | Yes |
| VSE/ESA™ Version 2 Release 6, 7 | Yes | No | Yes | Yes |
| z/VSE Version 3 Release 1 | Yes | No | Yes | Yes |
| TPF Version 4 Release 1 (ESA mode only) | Yes | No | Yes | No |

* Customers with the z/OS Bimodal Migration Accommodation Offering may run in 31-bit mode under the terms and conditions of the Offering. The Bimodal Offering is available for z/OS only.

IBM eServer zSeries is the enterprise class e-business server optimized for the integration, transactions and data of the next generation e-business world. By implementing the z/Architecture with new technology solutions, the zSeries models are designed to facilitate the IT business transformation and reduce the stress of business-to-business and business-to-customer growth pressure. The zSeries represents an advanced generation of servers that feature enhanced performance, support for zSeries Parallel Sysplex clustering, improved hardware management controls and innovative functions to address e-business processing.

The z990 server enhances performance by exploiting new technology through many design enhancements. With a new superscalar microprocessor and the CMOS 9S-SOI technology, the z990 is designed to further extend and integrate key platform characteristics such as dynamic flexible partitioning and resource management in mixed and unpredictable workload environments, providing scalability, high availability and Quality of Service to emerging e-business applications such as WebSphere®, Java and Linux.

The z990 has 4 models available as new build systems and as upgrades from the z900.

The four z990 models are designed with a multi-book system structure which provides up to 32 Processor Units (PUs) that can be characterized prior to the shipment of the machine as either Central Processors (CPs), Integrated Facility for Linux (IFLs), or Internal Coupling Facilities (ICFs).


The new IBM eServer zSeries Application Assist Processor (zAAP), planned to be available on the IBM eServer zSeries 990 (z990) and zSeries 890 (z890) servers, is an attractively priced specialized processing unit that provides a strategic z/OS Java execution environment for customers who desire the powerful integration advantages and traditional Qualities of Service of the zSeries platform.

When configured with general purpose Central Processors (CPs) within logical partitions running z/OS, zAAPs can help you to extend the value of your existing zSeries investments and strategically integrate and run e-business Java workloads on the same server as your database, helping to simplify and reduce the infrastructure required for Web applications while helping to lower your overall total cost of ownership.

zAAPs are designed to operate asynchronously with the general purpose CPs to execute Java programming under control of the IBM Java Virtual Machine (JVM). This can help reduce the demands and capacity requirements on general purpose CPs, which may then be available for reallocation to other zSeries workloads. The amount of general purpose CP savings may vary based on the amount of Java application code executed by zAAP(s). And best of all, IBM JVM processing cycles can be executed on the configured zAAPs with no anticipated modifications to the Java application(s). Execution of the JVM processing cycles on a zAAP is a function of the IBM Software Developer's Kit (SDK) for z/OS Java 2 Technology Edition, z/OS 1.6 (or z/OS.e 1.6) and the innovative Processor Resource/Systems Manager™ (PR/SM™).
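The point that general purpose CP savings depend on how much Java code the zAAPs execute can be illustrated with a deliberately simple proportional model. The Python sketch below is hypothetical: the function and field names are invented for illustration, and it assumes every zAAP-eligible JVM cycle is actually dispatched to a zAAP with no added overhead, which real systems need not achieve.

```python
from dataclasses import dataclass


@dataclass
class JavaWorkloadSplit:
    """Hypothetical split of one workload's CPU time between processor types."""
    general_cp_seconds: float  # remains on general purpose CPs (software-chargeable)
    zaap_seconds: float        # JVM cycles assumed to run on zAAPs


def split_java_workload(total_cpu_seconds: float,
                        zaap_eligible_fraction: float) -> JavaWorkloadSplit:
    """Proportional model: the eligible fraction moves to zAAPs, the rest stays.

    Simplifying assumption: all eligible cycles are offloaded and offloading
    costs nothing extra. Actual savings vary with the workload.
    """
    if total_cpu_seconds < 0:
        raise ValueError("total_cpu_seconds must be non-negative")
    if not 0.0 <= zaap_eligible_fraction <= 1.0:
        raise ValueError("zaap_eligible_fraction must be between 0 and 1")
    offloaded = total_cpu_seconds * zaap_eligible_fraction
    return JavaWorkloadSplit(
        general_cp_seconds=total_cpu_seconds - offloaded,
        zaap_seconds=offloaded,
    )
```

For example, `split_java_workload(1000.0, 0.4)` leaves roughly 600 CPU seconds on general purpose CPs under these assumptions; a workload with little Java code would see proportionally less relief.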

Notably, execution of the Java applications on zAAPs, within the same z/OS LPAR as their associated database subsystems, can also help simplify the server infrastructures and improve operational efficiencies. For example, use of zAAPs to strategically integrate Java Web applications with backend databases could reduce the number of TCP/IP programming stacks, firewalls, and physical interconnections (and their associated processing) that might otherwise be required when the application servers and their database servers are deployed on separate physical server platforms.

Essentially, zAAPs allow customers to purchase additional processing power exclusively for z/OS Java application execution without affecting the total MSU rating or machine model designation. Conceptually, zAAPs are very similar to a System Assist Processor (SAP); they cannot execute an Initial Program Load and only assist the general purpose CPs for the execution of Java programming. Moreover, IBM does not impose software charges on zAAP capacity. Additional IBM software charges will apply when additional general purpose CP capacity is used.

Customers are encouraged to contact their specific ISVs/USVs directly to determine if their charges will be affected.

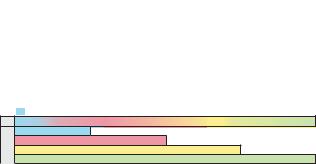

With the introduction of the z990, customers can expect to see the following performance improvements:

| Number of CPs | Base | Ratio |
|---|---|---|
| 1 | z900 2C1 | 1.54 - 1.61 |
| 8 | z900 2C8 | 1.52 - 1.56 |
| 16 | z900 216 | 1.51 - 1.55 |
| 32 | z900 216 | 2.46 - 2.98 |

Note: Configurations with more than 16 CPs require a minimum of two operating system images.

The Large System Performance Reference (LSPR) should be consulted when considering performance on the z990. Visit ibm.com/servers/eserver/zseries/lspr/ for more information on LSPR.


To support the new scalability of the z990, an improvement to the I/O Subsystem has been introduced to “break the barrier” of 256 channels per Central Electronic Complex (CEC). This provides “horizontal” growth by allowing the definition of up to four Logical Channel SubSystems, each capable of supporting up to 256 channels, giving a total of up to 1024 CHPIDs per CEC. The increased scalability is further supported by an increase in the number of available Logical Partitions from 15 to 30. There is still a 256-channel limit per operating system image.

These are some of the significant enhancements in the zSeries 990 server that bring improved performance, availability and function to the platform. The following sections highlight the functions and features of the server.

z990 Design and Technology

The z990 is designed to provide balanced system performance. From processor storage to the system’s I/O and network channels, end-to-end bandwidth is provided and designed to deliver data where and when it is needed.

The z990 provides a significant increase in system scalability and opportunity for server consolidation by providing four models, with one to four MultiChip Modules (MCMs), delivering up to a maximum 32-way configuration. The MCMs are configured in a book package, with each book consisting of a MultiChip Module (MCM), memory cards and Self-Timed Interconnects (STIs). The MCM, which measures approximately 93 x 93 millimeters (42% smaller than the z900), contains the processor unit (PU) chips, the cache structure chips and the processor storage controller chips. The MCM contains 101 glass ceramic layers to provide interconnection between the chips and the off-module environment. In total, there is approximately 0.4 kilometer of internal copper wiring on this module.

This new MCM packaging delivers an MCM 42% smaller than the z900, with 23% more I/O connections and a 133% improvement in I/O density. Each MCM provides support for 12 PUs and 32 MB of level 2 cache. Each PU contains 122 million transistors and measures 14.1 mm x 18.9 mm. The design of the MCM technology on the z990 provides the flexibility to configure the PUs for different uses: two of the PUs are reserved for use as System Assist Processors (SAPs) and two are reserved as spares. The remaining eight inactive PUs on the MCM are available to be characterized as CPs, ICF processors for Coupling Facility applications, IFLs for Linux applications, IBM eServer zSeries Application Assist Processors (zAAPs) for Java applications, or optional SAPs, providing the customer with tremendous flexibility in establishing the best system for running applications. Each model of the z990 must always be ordered with at least one CP, IFL or ICF.
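The PU accounting described above can be expressed as a small calculation. In the Python sketch below, the mapping of one book per model step (A08 = 1 book through D32 = 4 books) is inferred from the model names and the one-to-four-MCM range, and the function names are hypothetical; the sketch checks only the rules stated in this section, not every ordering rule IBM applies.

```python
# Per-MCM PU budget as described for the z990 (12 PUs, 2 SAPs, 2 spares).
PUS_PER_MCM = 12
STANDARD_SAPS_PER_MCM = 2
SPARES_PER_MCM = 2
# Assumed mapping: one MCM (book) per model step.
BOOKS_PER_MODEL = {"A08": 1, "B16": 2, "C24": 3, "D32": 4}


def configurable_pus(model: str) -> int:
    """PUs left for characterization after the reserved SAPs and spares."""
    per_book = PUS_PER_MCM - STANDARD_SAPS_PER_MCM - SPARES_PER_MCM
    return BOOKS_PER_MODEL[model] * per_book


def characterization_problems(model: str, cps: int = 0, ifls: int = 0,
                              icfs: int = 0, zaaps: int = 0,
                              optional_saps: int = 0) -> list[str]:
    """Return rule violations for a proposed PU characterization (empty if OK)."""
    problems = []
    requested = cps + ifls + icfs + zaaps + optional_saps
    available = configurable_pus(model)
    if requested > available:
        problems.append(f"{requested} PUs requested, model {model} offers {available}")
    if cps + ifls + icfs < 1:
        problems.append("at least one CP, IFL or ICF must be ordered")
    return problems
```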

The PU, which uses the latest chip technology from IBM semiconductor laboratories, is built on CMOS 9S-SOI with copper interconnections. The 14.1 mm x 18.9 mm chip has a cycle time of 0.83 nanoseconds. Implemented on this chip is the z/Architecture with its 64-bit capabilities including instructions, 64-bit General Purpose Registers and translation facilities.
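As a quick consistency check, a cycle time of 0.83 nanoseconds corresponds to a clock frequency of roughly 1.2 GHz:

```latex
f = \frac{1}{t_{\text{cycle}}} = \frac{1}{0.83 \times 10^{-9}\,\text{s}} \approx 1.20 \times 10^{9}\,\text{Hz} \approx 1.2\ \text{GHz}
```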


Each book can support up to 64 GB of memory, delivered on two memory cards, and 12 STIs, giving a total of 256 GB of memory and 48 STIs on the D32 model. The memory is delivered on 8 GB, 16 GB or 32 GB memory cards and can be purchased in 8 GB increments. The minimum memory is 16 GB. The two memory cards associated with each book must be the same size. Each book has 3 MBAs and each MBA supports 4 STIs.
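The memory and STI rules in this paragraph can be checked mechanically. The Python sketch below is hypothetical: it treats installed card capacity and purchased memory as separate quantities (one reading of "purchased in 8 GB increments"), and its names are illustrative rather than any IBM configurator interface.

```python
VALID_CARD_SIZES_GB = {8, 16, 32}
MIN_MEMORY_GB = 16
PURCHASE_INCREMENT_GB = 8
MBAS_PER_BOOK = 3
STIS_PER_MBA = 4


def stis_for_books(books: int) -> int:
    """Each book has 3 MBAs and each MBA supports 4 STIs."""
    return books * MBAS_PER_BOOK * STIS_PER_MBA


def memory_problems(card_pairs: list[tuple[int, int]], purchased_gb: int) -> list[str]:
    """Check a memory plan; card_pairs holds the two card sizes (GB) for each book."""
    problems = []
    for book, (card_a, card_b) in enumerate(card_pairs):
        if card_a not in VALID_CARD_SIZES_GB or card_b not in VALID_CARD_SIZES_GB:
            problems.append(f"book {book}: card sizes must be 8, 16 or 32 GB")
        if card_a != card_b:
            problems.append(f"book {book}: both memory cards must be the same size")
    installed_gb = sum(card_a + card_b for card_a, card_b in card_pairs)
    if purchased_gb < MIN_MEMORY_GB:
        problems.append(f"minimum memory is {MIN_MEMORY_GB} GB")
    if purchased_gb % PURCHASE_INCREMENT_GB:
        problems.append(f"memory is purchased in {PURCHASE_INCREMENT_GB} GB increments")
    if purchased_gb > installed_gb:
        problems.append("purchased memory exceeds installed card capacity")
    return problems
```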

All books are interconnected with a super-fast bi-directional redundant ring structure, which allows the system to be operated and controlled by PR/SM operating in LPAR mode as a symmetrical, memory coherent multiprocessor. PR/SM provides the ability to configure and operate as many as 30 Logical Partitions, which may be assigned processors, memory and I/O resources from any of the available books. The z990 supports LPAR mode only (i.e. basic mode is no longer supported).

The MultiChip Module is the technology cornerstone for flexible PU deployment in the z990 models. For most models, the ability of the MCM to have inactive PUs allows such features as Capacity Upgrade on Demand (CUoD), Customer Initiated Upgrades (CIU), and the ability to add CPs, ICFs, IFLs, and zAAPs dynamically, providing nondisruptive upgrade of processing capability. Also, the ability to add CPs lets a z990 with spare PU capacity become a backup for other systems in the enterprise, expanding the z990 system to meet an emergency outage situation. This is called Capacity BackUp (CBU). The greater capacity of the z990 offers customers even more flexibility for using this feature to back up critical systems in their enterprise.

In order to support the highly scalable multi-book system design, the I/O SubSystem has been enhanced by introducing a new Logical Channel SubSystem (LCSS), which provides the capability to install up to 1024 CHPIDs across three I/O cages (256 per operating system image). I/O improvements in the Parallel Sysplex Coupling Link architecture and technology support faster and more efficient transmission between the Coupling Facility and production systems. HiperSockets™ provides high-speed communication among virtual servers and Logical Partitions; it is based on TCP/IP transfers at memory speed and allows applications running in one partition to communicate with applications running in another without dependency on an external network. Industry standards and openness are design objectives for I/O in the z990. The improved I/O subsystem is delivering new horizons in I/O capability and has eliminated the 256-channel limit on I/O attachments per mainframe.


z990 Family Models

The z990 offers 4 models, the A08, B16, C24 and D32, which can be configured to give customers a highly scalable solution to meet the needs of both high transaction processing applications and the demands of e-business. The new model structure provides between 1 and 32 configurable Processor Units (PUs), which can be characterized as CPs, IFLs, ICFs, or zAAPs. A new easy-to-enable ability to “turn off” CPs is available on z990 (a similar offering was available via RPQ on z900). The objective is to allow customers to purchase capacity for future use with minimal or no impact on software billing. An MES feature will enable the CPs for use where the customer requires the increased capacity. There is a wide range of upgrade options available, which are indicated in the z990 models chart.

Unlike other zSeries server offerings, it is no longer possible to tell by the hardware model (A08, B16, C24, D32) the number of PUs that are being used as CPs. For software billing purposes only, there will be a “software” model associated with the number of PUs that are characterized as CPs. This number will be reported by the Store System Information (STSI) instruction for software billing purposes only. There is no affinity between the hardware model and the number of CPs. For example, it is possible to have a Model B16 which has 5 PUs characterized as CPs, so for software billing purposes, the STSI instruction would report 305. The more normal configuration for a 5-way would be an A08 with 5 PUs characterized as CPs. The STSI instruction would also report 305 for that configuration.
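The distinction between hardware and software models can be sketched in a few lines of Python. The helper names are hypothetical; the sketch encodes only what the text states: the software model is 300 plus the number of CPs (so five CPs report 305 whatever the hardware model), and each hardware model holds at most as many characterized PUs as the number in its name.

```python
# Maximum characterizable PUs per hardware model (8 per book).
HARDWARE_MODEL_MAX_PUS = {"A08": 8, "B16": 16, "C24": 24, "D32": 32}


def software_model(cps: int) -> str:
    """Software model reported by STSI for billing: '3' + two-digit CP count."""
    if not 0 <= cps <= 32:
        raise ValueError("a z990 has between 0 and 32 CPs")
    return f"3{cps:02d}"


def smallest_hardware_model(characterized_pus: int) -> str:
    """Smallest hardware model that can hold CPs + IFLs + ICFs + zAAPs."""
    for model, max_pus in HARDWARE_MODEL_MAX_PUS.items():  # ascending order
        if characterized_pus <= max_pus:
            return model
    raise ValueError("more than 32 characterized PUs")
```

Under these assumptions, a Model B16 with 5 CPs and an A08 with 5 CPs both report software model 305, while a configuration needing 17 characterized PUs requires at least a C24.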

z990 Models

[Chart: *S/W models 301 through 332 (1 to 32 CPs) shown against hardware Models A08, B16, C24 and D32]

*S/W Model refers to the number of installed CPs, as reported by the STSI instruction. Model 300 does not have any CPs. Note: For MSU values, refer to: ibm.com/servers/eserver/zseries/library/swpriceinfo/

z990 and IBM eServer On/Off Capacity on Demand

IBM eServer On/Off Capacity on Demand (On/Off CoD) is offered with z990 processors to provide a temporary increase in capacity to meet customers' peak workload requirements. The scope of On/Off Capacity on Demand is to allow customers to temporarily turn on unassigned/unowned PUs available within the current model for use as CPs or IFLs. Temporary use of CFs, memory and channels is not supported.

Before customers can order temporary capacity, they must have a signed agreement for the Customer Initiated Upgrade (CIU) facility. In addition to that agreement, they must agree to specific terms and conditions which govern the use of temporary capacity.

Typically, On/Off Capacity on Demand will be ordered through CIU; however, an RPQ will be available if no RSF connection is present.


Although CBU and On/Off Capacity on Demand can both reside on the server, the activation of On/Off Capacity on Demand is mutually exclusive with Capacity BackUp (CBU), and no physical hardware upgrade will be supported while On/Off Capacity on Demand is active.
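The eligibility rules for On/Off Capacity on Demand described above lend themselves to a simple pre-check. The Python sketch below is hypothetical (the `ServerState` fields and function names are invented for illustration) and covers only the conditions stated in this document: agreements in place, CPs or IFLs only, no concurrent CBU activation, capacity limited to unassigned PUs within the current model, and no hardware upgrade while On/Off CoD is active.

```python
from dataclasses import dataclass

# Only these PU types may be turned on temporarily; CFs, memory and channels may not.
TEMPORARY_ELIGIBLE = {"CP", "IFL"}


@dataclass
class ServerState:
    has_ciu_agreement: bool     # signed Customer Initiated Upgrade agreement
    accepted_onoff_terms: bool  # On/Off CoD terms and conditions accepted
    cbu_active: bool            # Capacity BackUp currently activated
    unassigned_pus: int         # unowned PUs available within the current model


def onoff_request_problems(state: ServerState, resource: str,
                           quantity: int) -> list[str]:
    """List reasons a temporary-capacity request would be rejected (empty if OK)."""
    problems = []
    if not (state.has_ciu_agreement and state.accepted_onoff_terms):
        problems.append("CIU agreement and On/Off CoD terms are both required")
    if resource not in TEMPORARY_ELIGIBLE:
        problems.append(f"{resource} cannot be added as temporary capacity")
    if state.cbu_active:
        problems.append("On/Off CoD activation is mutually exclusive with CBU")
    if quantity > state.unassigned_pus:
        problems.append(f"only {state.unassigned_pus} unassigned PUs in this model")
    return problems


def hardware_upgrade_allowed(onoff_active: bool) -> bool:
    """No physical hardware upgrade is supported while On/Off CoD is active."""
    return not onoff_active
```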

This important new function for zSeries gives customers greater control and ability to add capacity to meet the requirements of an unpredictable on demand application environment. On/Off CoD extends zSeries capacity on demand offerings to the next level of flexibility. It is designed to help customers match cost with capacity utilization and manage periodic business spikes. On/Off Capacity on Demand is designed to provide a low-risk way to deploy pilot applications, and it is designed to enable a customer to grow capacity rationally and proportionately with market demand.

Customers can also take advantage of Capacity Upgrade on Demand (CUoD), Customer Initiated Upgrade (CIU), and Capacity BackUp (CBU) which are described later in the document.

The z990 has also been designed to offer a high performance and efficient I/O structure. All z990 models ship with two frames, the A-Frame and the Z-Frame, which support the installation of up to three I/O cages. Each I/O cage has the capability of plugging up to 28 I/O cards. When used in conjunction with the software that supports Logical Channel SubSystems, it is possible to have up to 420 ESCON® channels in a single I/O cage and a maximum of 1024 channels across 3 I/O cages. Alternatively, three I/O cages will support up to 120 FICON™ channels and up to 360 ESCON channels. Each book will support up to 12 STIs for I/O connectivity. Seven STIs are required to support the 28 channel slots in each I/O cage, so 21 STIs are required to support a fully configured three I/O cage system. Achieving this maximum I/O connectivity requires at least a B16 model, which provides 24 STIs.
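The STI arithmetic in this paragraph can be turned into a small sizing helper. The Python sketch below is illustrative only: it assumes I/O slots are filled one four-slot I/O domain at a time (28 slots served by 7 STIs per cage) and that the STI count per book is the only constraint on the model, ignoring other configuration rules; the function names are hypothetical.

```python
SLOTS_PER_CAGE = 28
STIS_PER_CAGE = 7                                   # one STI per I/O domain
SLOTS_PER_DOMAIN = SLOTS_PER_CAGE // STIS_PER_CAGE  # 4 slots per domain
MAX_CAGES = 3
STIS_PER_BOOK = 12
MODELS_BY_BOOK_COUNT = ["A08", "B16", "C24", "D32"]


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def io_plan(io_slots: int) -> dict:
    """Cages, STIs and minimum hardware model for a number of used I/O slots."""
    if not 1 <= io_slots <= SLOTS_PER_CAGE * MAX_CAGES:
        raise ValueError("the z990 supports 1 to 84 I/O slots")
    stis = ceil_div(io_slots, SLOTS_PER_DOMAIN)
    books = ceil_div(stis, STIS_PER_BOOK)
    return {
        "cages": ceil_div(io_slots, SLOTS_PER_CAGE),
        "stis": stis,
        "minimum_model": MODELS_BY_BOOK_COUNT[books - 1],
    }
```

With all 84 slots in use this yields 3 cages, 21 STIs and a minimum of Model B16, matching the figures above; 48 slots still fit within an A08.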

The following chart shows the upgrades from z900 to z990. Any-to-any upgrades are available from all of the z900 general purpose models. A z900 Coupling Facility Model 100 must first be upgraded to a z900 general purpose model before upgrading to a z990. There are no upgrades from the 9672 G5/G6 or the IBM eServer zSeries 800 (z800).

Model Upgrades

[Chart: z900 Models 100, 101 through 109, 1C1 through 116 and 2C1 through 216 shown with upgrade paths to z990 Models A08, B16, C24 and D32]


z990 and z900 Performance Comparison

The performance design of the z/Architecture enables the entire server to support a new standard of performance for applications by expanding upon a balanced system approach. As CMOS technology has been enhanced to support not only additional processing power but also more engines, the entire server has been modified to support the increase in processing power. The I/O subsystem supports greater bandwidth through internal changes, providing larger and quicker data movement into and out of the server. Supporting larger amounts of data within the server required improved management of storage configurations, made available through the integration of the software operating system and hardware support of 64-bit addressing. The combined balanced system effect allows for increases in performance across a broad spectrum of work.

However, due to the increased flexibility in the z990 model structure and resource management in the system, it is expected that there will be larger performance variability than has been previously seen by our traditional customer set. This variability may be observed in several ways. The range of performance ratings across the individual LSPR workloads is likely to have a larger spread than on past processors. There will also be more performance variation among individual logical partitions, as the impact of fluctuating resource requirements of other partitions can be more pronounced with the increased number of partitions and additional CPs available on the z990. The customer impact of this increased variability will be seen as increased deviations of workloads from single-number-metric based factors such as MIPS, MSUs and CPU time chargeback algorithms. It is important to realize that the z990 has been optimized to run many workloads at high utilization rates.

It is also important to notice that the LSPR workloads for z990 have been updated to reflect more closely our customers' current and growth workloads. The traditional TSO LSPR workload is replaced by a new, heavy Java technology-based online workload referred to as Trade2-EJB (a stock trading application). The traditional CICS®/DB2® LSPR online workload has been updated to have a Web front end, which then connects to CICS. This updated workload is referred to as WEB/CICS/DB2 and is representative of customers who Web-enable access to their legacy applications. Continuing in the LSPR for z990 will be the legacy online workload, IMS, and two legacy batch workloads, CB84 and CBW2. The z990 LSPR will provide performance ratios for individual workloads as well as a “default mixed workload”, which is used to establish single-number-metrics such as MIPS, MSUs and SRM constants. The z990 default mixed workload will be composed of equal amounts of five workloads: Trade2-EJB, WEB/CICS/DB2, IMS, CB84 and CBW2. Additionally, the z990 LSPR will rate all z/Architecture processors running in LPAR mode and 64-bit mode. The existing z900 processors have all been re-measured using the new workloads, all running in LPAR mode and 64-bit mode.
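One way to see why a single-number metric can hide per-workload spread is to combine per-workload capacity ratios into one figure. The Python sketch below assumes, purely for illustration, that each workload in the mix accounts for an equal share of CPU time on the base machine; under that assumption the combined ratio is the harmonic mean of the individual ratios. This is not a description of how IBM derives LSPR ratings, and the ratios in the usage note are toy values, not measurements.

```python
def composite_ratio(ratios: dict[str, float]) -> float:
    """Combine per-workload capacity ratios (new/base) into one number.

    Assumption: every workload uses the same CPU time T on the base machine,
    so it needs T / r on the new machine. Total base time divided by total
    new time is then the harmonic mean of the ratios.
    """
    if not ratios or any(r <= 0 for r in ratios.values()):
        raise ValueError("need at least one positive ratio")
    return len(ratios) / sum(1.0 / r for r in ratios.values())


def spread(ratios: dict[str, float]) -> float:
    """Gap between the best and worst individual workload ratio."""
    return max(ratios.values()) - min(ratios.values())
```

With five toy ratios of 1.0, 1.0, 1.0, 2.0 and 2.0, `composite_ratio` returns 1.25 while `spread` returns 1.0: the single number sits well below the two best workloads and above the three worst, which is the kind of deviation from a single-number metric described above.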

Using the new LSPR ‘default mixed workload’, and with all processors executing in 64-bit and LPAR mode, the following results have been estimated:

• Compared with a one-way z900 Model 2C1, a z990 model with one CP enabled is estimated to have 1.52 to 1.58 times the capacity of the 2C1.

• Compared with an 8-way z900 Model 2C8, a z990 model with eight CPs enabled is estimated to have 1.48 to 1.55 times the capacity of the 2C8.

• Compared with a 16-way z900 Model 216, a z990 model with sixteen CPs enabled is estimated to have 1.45 to 1.53 times the capacity of the 216.

• Compared with a 16-way z900 Model 216, a z990 model with thirty-two CPs enabled, with the z990 workload running in two 16-way LPARs, is estimated to have 2.4 to 2.9 times the capacity of the 216.

[Figure: Capacity of z990 Models A08, B16, C24 and D32 relative to z900 Turbo Models 2C1 through 216 and z900 Models 1C1 through 116.]

Note: Expected performance improvements are based on hardware changes. Additional performance benefits may be obtained as the z/Architecture is fully exploited.

z990 I/O SubSystem

The z990 I/O subsystem infrastructure uses an I/O cage that provides 28 I/O slots. A system can have one to three I/O cages, for a total of 84 I/O slots. ESCON, FICON Express™ and OSA-Express features plug into the z990 I/O cage, along with any ISC-3s, STI-2 and STI-3 distribution cards, and PCICA and PCIXCC features. All I/O features and their support cards can be hot-plugged in the I/O cage. Installation of an I/O cage remains a disruptive MES, so the Plan Ahead feature remains an important consideration when ordering a z990 system. The Model A08 has 12 available STIs and so can connect to a maximum of 12 I/O domains, that is, 48 I/O slots. If more than 48 I/O slots are required, a Model B16 is needed. Each model ships with one I/O cage as standard in the A-Frame, which also contains the processor CEC cage; any additional I/O cages are installed in the Z-Frame. The STIs give the z990 a 400 percent increase in I/O bandwidth.
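The slot limits above combine two caps:

- the physical slots, 28 per cage for up to three cages;
- the slots reachable through STIs.

The sketch below models the usable count as the minimum of the two. The Model A08 figures (12 STIs, 12 domains, 48 slots) imply that each STI drives one four-slot I/O domain, and the sketch assumes that rule. STI counts for models other than the A08 are not given in this guide, so only the A08 case is exercised. The function name is hypothetical.

```python
SLOTS_PER_CAGE = 28       # from the text
MAX_IO_CAGES = 3          # one to three I/O cages
SLOTS_PER_DOMAIN = 4      # inferred: 12 I/O domains = 48 slots on the A08


def reachable_io_slots(io_cages: int, stis_for_io: int) -> int:
    """Upper bound on the I/O slots a configuration can use.

    Assumes each STI drives one I/O domain of four slots, as implied by the
    Model A08 figures (12 STIs, 12 domains, 48 slots).
    """
    if not 1 <= io_cages <= MAX_IO_CAGES:
        raise ValueError("a z990 has one to three I/O cages")
    if stis_for_io < 0:
        raise ValueError("STI count cannot be negative")
    physical = io_cages * SLOTS_PER_CAGE
    return min(physical, stis_for_io * SLOTS_PER_DOMAIN)


if __name__ == "__main__":
    # Model A08: even with three cages (84 physical slots), 12 STIs reach 48.
    print(reachable_io_slots(io_cages=3, stis_for_io=12))
```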

[Figure: z990 Cage Layout. The A-Frame holds the CEC cage and the 1st I/O cage; the Z-Frame holds the 2nd and 3rd I/O cages.]

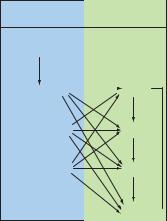

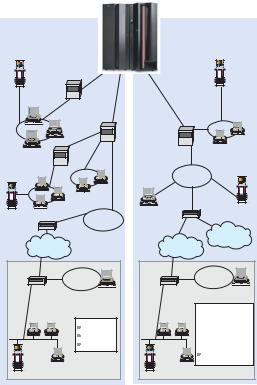

z990 Logical Channel SubSystems (LCSSs) and support for greater than 15 Logical Partitions (LP)

In order to provide the increased channel connectivity required to support the scalability of the z990, the z990 channel I/O SubSystem delivers a breakthrough in connectivity by providing up to four LCSSs per CEC. Each LCSS can support up to 256 CHPIDs with exploitation software installed. This support is provided in a way that is transparent to the programs operating in the logical partitions. Each Logical Channel SubSystem may have from 1 to 256 CHPIDs and may in turn be configured with 1 to 15 logical partitions. Each Logical Partition (LPAR) runs under a single LCSS. As with previous zSeries servers, Multiple Image Facility (MIF) channel sharing, as well as all other channel subsystem features, is available to each Logical Partition configured to each Logical Channel SubSystem.
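The limits in the paragraph above can be expressed as a small validation routine. The sketch below is a hypothetical model: the `Lcss` class and `validate_lcss_config` function are illustrative names, not part of IOCP or HCD. It checks the stated rules:

- one to four LCSSs per CEC;
- 1 to 256 CHPIDs per LCSS;
- 1 to 15 LPARs per LCSS;
- each LPAR under exactly one LCSS.

It also assumes CHPID numbers are two hex digits (00 to FF) within each LCSS.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MAX_LCSS_PER_CEC = 4
MAX_CHPIDS_PER_LCSS = 256     # CHPID numbers 00-FF within each LCSS
MAX_LPARS_PER_LCSS = 15


@dataclass
class Lcss:
    lcss_id: int
    chpids: List[int] = field(default_factory=list)
    lpars: List[str] = field(default_factory=list)


def validate_lcss_config(lcsss: List[Lcss]) -> None:
    """Raise ValueError if the layout breaks the limits described in the text."""
    if not 1 <= len(lcsss) <= MAX_LCSS_PER_CEC:
        raise ValueError("a CEC has one to four LCSSs")
    home: Dict[str, int] = {}  # LPAR name -> the single LCSS it runs under
    for css in lcsss:
        tag = f"LCSS{css.lcss_id}"
        if not 1 <= len(css.chpids) <= MAX_CHPIDS_PER_LCSS:
            raise ValueError(f"{tag}: needs 1 to 256 CHPIDs")
        if len(set(css.chpids)) != len(css.chpids):
            raise ValueError(f"{tag}: duplicate CHPID number")
        if any(not 0x00 <= c <= 0xFF for c in css.chpids):
            raise ValueError(f"{tag}: CHPID number outside 00-FF")
        if not 1 <= len(css.lpars) <= MAX_LPARS_PER_LCSS:
            raise ValueError(f"{tag}: needs 1 to 15 logical partitions")
        for lp in css.lpars:
            if lp in home:
                raise ValueError(f"{lp} defined in LCSS{home[lp]} and {tag}")
            home[lp] = css.lcss_id


# Four LCSSs of 256 CHPIDs each give the 1024-CHPID ceiling.
MAX_CHPIDS_PER_CEC = MAX_LCSS_PER_CEC * MAX_CHPIDS_PER_LCSS
```

Because CHPID numbers are checked per LCSS, the same number (for example 12) can appear in two LCSSs without conflict.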

[Figure: Up to 30 logical partitions spread across LCSS0, LCSS1, LCSS2 and LCSS3, each LCSS with up to 256 CHPIDs.]

Physical Channel IDs (PCHIDs)

To accommodate the new support for up to 1024 CHPIDs introduced with the Logical Channel SubSystem (LCSS), a new Physical Channel ID (PCHID) is being introduced. The PCHID represents the physical location of an I/O feature in the I/O cage. CHPID numbers are no longer pre-assigned, and it is now a customer responsibility to make this assignment via IOCP/HCD. A CHPID is assigned by associating a CHPID number with a physical location, the PCHID. It is important to note that although it is possible to have multiple LCSSs, there is still a single IOCDS to define the I/O subsystem. A new CHPID Mapping Tool is available to aid in mapping CHPIDs to PCHIDs. The CHPID Mapping Tool is available from Resource Link™, at ibm.com/servers/resourcelink.
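CHPID numbers are now assigned by the customer and tied to a PCHID. The single IOCDS therefore effectively holds one table keyed by LCSS and CHPID. The sketch below models that table in Python. The function names and example assignments are hypothetical; they do not reproduce IOCP statement syntax or the CHPID Mapping Tool's output.

```python
from typing import Dict, Iterable, List, Tuple

ChannelKey = Tuple[int, int]  # (LCSS number, CHPID number)


def build_iocds(assignments: Iterable[Tuple[int, int, int]]) -> Dict[ChannelKey, int]:
    """Collect (lcss, chpid, pchid) assignments into one mapping.

    A CHPID number only has to be unique within its own LCSS, so the key
    is the (lcss, chpid) pair. All LCSSs share this single table, mirroring
    the single IOCDS described in the text.
    """
    iocds: Dict[ChannelKey, int] = {}
    for lcss, chpid, pchid in assignments:
        key = (lcss, chpid)
        if key in iocds:
            raise ValueError(f"CHPID {chpid:02X} assigned twice in LCSS{lcss}")
        iocds[key] = pchid
    return iocds


def chpids_on_pchid(iocds: Dict[ChannelKey, int], pchid: int) -> List[ChannelKey]:
    """Reverse lookup: which (lcss, chpid) definitions use a physical channel."""
    return sorted(key for key, p in iocds.items() if p == pchid)


if __name__ == "__main__":
    # Hypothetical assignments: CHPID 12 exists in both LCSS0 and LCSS1
    # but is mapped to different physical channels.
    table = build_iocds([(0, 0x4F, 0x102), (0, 0x12, 0x103), (1, 0x12, 0x200)])
    for lcss, chpid in chpids_on_pchid(table, 0x103):
        print(f"PCHID 103 -> LCSS{lcss} CHPID {chpid:02X}")
```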

[Figure: IOCP - IOCDS. Partitions in LCSS0 and LCSS1 use CHPIDs such as 00, 02, 12, 2F, 4F, 52 and EF, with CHPID numbers 12 and EF appearing in both LCSSs. Through HCD - HSA or IOCDS - HSA, these CHPIDs map to physical channels (PCHIDs) 102, 103, 104, 110, 200, 201, 202, 2B0 and 2C5.]

Note: Crypto no longer requires a CHPID

z990 Channels and I/O Connectivity

Logical Channel SubSystem (LCSS) Spanning

Spanning allows a channel to be configured to multiple LCSSs, so that it may be transparently shared by any or all of the logical partitions in those LCSSs. Normal Multiple Image Facility (MIF) sharing of a channel is confined to a single LCSS. The z990 supports spanning of the following channel types: IC, HiperSockets, FICON Express and OSA-Express. Note: the ESCON architecture does not allow ESCON channels to be spanned.
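The spanning rule above can be checked mechanically. The sketch below uses a hypothetical function that classifies a channel definition as shared within one LCSS or spanned, and rejects spanning for types outside the list given in the text. It also requires the same CHPID number in every LCSS a channel spans. That requirement is an assumption drawn from the IC spanning figure, which shows CHPID 22 in both LCSS0 and LCSS1.

```python
from typing import Dict

# Channel types the text lists as spannable on the z990.
SPANNABLE_TYPES = {"IC", "HiperSockets", "FICON Express", "OSA-Express"}


def check_channel_definition(channel_type: str, chpid_by_lcss: Dict[int, int]) -> str:
    """Classify a channel definition as MIF-shared in one LCSS or spanned.

    chpid_by_lcss maps each LCSS the channel is defined in to its CHPID
    number. Requiring the same number in every LCSS is an assumption drawn
    from the IC spanning figure (CHPID 22 in both LCSS0 and LCSS1).
    """
    if not chpid_by_lcss:
        raise ValueError("channel must be defined in at least one LCSS")
    if len(chpid_by_lcss) == 1:
        return "shared within one LCSS (MIF)"
    if channel_type not in SPANNABLE_TYPES:
        raise ValueError(f"{channel_type} channels cannot be spanned")
    if len(set(chpid_by_lcss.values())) != 1:
        raise ValueError("spanned channel should use one CHPID number in every LCSS")
    return "spanned"
```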

[Figure: IC Channel Spanning. Partitions PRD1 through PRD4, LNX1 and ICF1, spread across LCSS0 and LCSS1, share a spanned IC channel defined as CHPID 22 in both LCSSs.]

A z990 with all three I/O cages installed has a total of 84 I/O slots. These slots can be plugged with a mixture of features providing the I/O connectivity, networking connectivity, coupling and cryptographic capability of the server.

Up to 1024 ESCON Channels

The high-density ESCON feature has 16 ports, 15 of which can be activated for customer use. One port is always reserved as a spare, which is activated if one of the other ports fails. For high availability, the initial order of ESCON features delivers two cards, and the active ports are distributed across those cards. After the initial installation, ESCON features are installed in increments of one. ESCON channels are available in four-port increments and are activated using IBM Licensed Internal Code, Configuration Control (LIC CC).
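The ordering rules above can be combined into a rough planning estimate:

- 15 usable ports per feature;
- activation in four-port increments;
- two cards on the initial order;
- a ceiling of 1024 channels.

The sketch below is only that, an estimate. Its function name is hypothetical, and the actual card count and port placement are determined by IBM's configuration process, not by this arithmetic.

```python
import math
from typing import Tuple

ACTIVE_PORTS_PER_FEATURE = 15   # 16 ports, one always kept as a spare
ACTIVATION_INCREMENT = 4        # channels are enabled four ports at a time
MIN_INITIAL_FEATURES = 2        # initial order spreads ports over two cards
MAX_ESCON_CHANNELS = 1024


def estimate_escon_order(channels_needed: int) -> Tuple[int, int]:
    """Return (channels to activate, minimum ESCON features) for a new order.

    A rough planning estimate only; it ignores how ports are actually
    distributed across cards.
    """
    if not 1 <= channels_needed <= MAX_ESCON_CHANNELS:
        raise ValueError("between 1 and 1024 ESCON channels")
    activated = math.ceil(channels_needed / ACTIVATION_INCREMENT) * ACTIVATION_INCREMENT
    features = max(MIN_INITIAL_FEATURES, math.ceil(activated / ACTIVE_PORTS_PER_FEATURE))
    return activated, features


if __name__ == "__main__":
    print(estimate_escon_order(10))   # (12, 2)
    print(estimate_escon_order(31))   # (32, 3)
```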

Up to 120 FICON Express Channels

The z990 supports up to 120 FICON Express channels. FICON Express is available in long wavelength (LX) and short wavelength (SX) features. Each FICON Express feature has two independent ports, which support two channels per card. LX and SX cannot be intermixed on a single feature. The maximum number of FICON Express features is 60, which can be installed across three I/O cages with a maximum of 20 features per I/O cage.

The z990 supports up to 120 FCP channels for attachment to Small Computer System Interface (SCSI) disks in a Linux environment. The same two-port FICON Express feature card used for FICON Express channels can also be used for Fibre Channel Protocol (FCP) channels. FCP channels are enabled on these existing features via Licensed Internal Code (LIC) with a new mode of operation and a new CHPID definition. FCP is available in long wavelength (LX) and short wavelength (SX) features, though LX and SX cannot be intermixed on a single feature. Note that the combined number of FICON Express, OSA-Express, PCICA and PCIXCC features cannot exceed 20 features per I/O cage or 60 features per server.
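The shared limit in the note above is easy to miss when planning a mix of features. The sketch below takes a hypothetical input format, one dictionary of feature counts per I/O cage. It checks the combined 20-per-cage and 60-per-server cap on FICON Express, OSA-Express, PCICA and PCIXCC features. It then derives the FICON channel count from the two ports per feature. Function names are illustrative. ESCON, ISC-3 and STI cards also occupy slots but are not part of this particular limit, so they are not counted here.

```python
from typing import Dict, List

MAX_FEATURES_PER_CAGE = 20
MAX_FEATURES_PER_SERVER = 60
MAX_IO_CAGES = 3
FICON_PORTS_PER_FEATURE = 2
# Feature families that count toward the shared 20-per-cage / 60-per-server limit.
COUNTED_FEATURES = ("FICON Express", "OSA-Express", "PCICA", "PCIXCC")


def check_feature_plan(cages: List[Dict[str, int]]) -> int:
    """Validate per-cage feature counts; return the server-wide counted total."""
    if not 1 <= len(cages) <= MAX_IO_CAGES:
        raise ValueError("a z990 has one to three I/O cages")
    total = 0
    for number, cage in enumerate(cages, start=1):
        in_cage = sum(cage.get(name, 0) for name in COUNTED_FEATURES)
        if in_cage > MAX_FEATURES_PER_CAGE:
            raise ValueError(f"I/O cage {number}: {in_cage} features exceeds {MAX_FEATURES_PER_CAGE}")
        total += in_cage
    if total > MAX_FEATURES_PER_SERVER:
        raise ValueError(f"{total} features exceeds {MAX_FEATURES_PER_SERVER} per server")
    return total


def ficon_channel_ports(cages: List[Dict[str, int]]) -> int:
    """FICON Express ports (usable as FC, FCV or FCP channels) in the plan."""
    return FICON_PORTS_PER_FEATURE * sum(cage.get("FICON Express", 0) for cage in cages)
```

A plan with 20 FICON Express features in each of three cages reaches both the 60-feature ceiling and the 120-channel maximum quoted above.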

InterSystem Channel-3 (ISC-3)

A four-port ISC-3 feature is provided on the z990 family of servers. It consists of a mother card with two daughter cards, each of which has two links. Each of the four links can operate at 1 gigabit per second (Gbps) in Compatibility Mode or 2 Gbps in Peer Mode, up to an unrepeated distance of 10 km (6.2 miles). The mode is selected for each port via the CHPID type in the IOCDS. The ports are orderable in one-port increments.

An RPQ card (8P2197) is available to allow ISC-3 distances of up to 20 km. This card runs at 1 Gbps in both Peer Mode and Compatibility Mode.

Integrated Cluster Bus-2 (ICB-2)

The ICB-2 feature is a coupling link used to provide high-speed communication between a 9672 G5/G6 server and a z990 server over a short distance (less than 7 meters).

The ICB-2 is supported via an STI-2 card which resides in the I/O cage and converts the 2.0 GigaBytes per second (GBps) input into two 333 MegaBytes per second (MBps) ICB-2s. ICB-2 is not supported between z990 and other zSeries servers. ICB-2s cannot be used to connect to a z900 server.

Integrated Cluster Bus-3 (ICB-3)

The ICB-3 feature is a coupling link used to provide high-speed communication between a z990 server and a z900 General Purpose Server or Model 100 Coupling Facility over a short distance (less than 7 meters). The ICB-3 is supported via an STI-3 card, which resides in the I/O cage and converts the 2.0 GBps input into two 1 GBps ICB-3s.

Integrated Cluster Bus-4 (ICB-4)

The ICB-4 feature is a coupling link used to provide high-speed communication between z990 and/or z890 servers over a short distance (less than 7 meters). The ICB-4 link attaches directly to a 2.0 GBps STI port on the server and does not require connectivity to an I/O cage.

Internal Coupling Channel (IC)

IC links emulate the coupling links between images within a single server. IC links are defined in the IOCP. There is no physical channel involved. A z/OS image can connect to a coupling facility on the same server using IC capabilities.
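The coupling link descriptions above amount to a simple selection rule based on the peer server and the distance. The sketch below encodes that rule from a z990's point of view and is only a summary aid, not a configuration tool. The function name is hypothetical. The fallback to ISC-3 for any peer beyond ICB range is also an assumption: the text gives ISC-3's speeds and distances but does not list which peers it connects to.

```python
# Assumes the local server is a z990; "peer" is the other end of the link.
SHORT_LINK_LIMIT_M = 7          # ICB links: less than 7 meters
ISC3_UNREPEATED_M = 10_000      # 10 km unrepeated
ISC3_RPQ_M = 20_000             # with RPQ card 8P2197 (1 Gbps)

ICB_BY_PEER = {
    "z990": "ICB-4",
    "z890": "ICB-4",
    "z900": "ICB-3",      # z900 General Purpose Server or Model 100 CF
    "9672 G5": "ICB-2",
    "9672 G6": "ICB-2",
}


def pick_coupling_link(peer: str, distance_m: float, same_server: bool = False) -> str:
    """Suggest a coupling link type from the rules summarized in the text."""
    if same_server:
        return "IC"  # internal coupling, no physical channel involved
    if distance_m < SHORT_LINK_LIMIT_M and peer in ICB_BY_PEER:
        return ICB_BY_PEER[peer]
    if distance_m <= ISC3_UNREPEATED_M:
        return "ISC-3"
    if distance_m <= ISC3_RPQ_M:
        return "ISC-3 with RPQ 8P2197"
    raise ValueError("beyond 20 km: not covered by the links described here")
```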


Fibre Channel Connectivity

The on demand operating environment requires fast data access, continuous data availability, and improved flexibility, all with lower cost of ownership. The increased number of FICON Express features available on the z990 helps distinguish this new server family, further setting it apart as enterprise class in terms of the number of simultaneous I/O connections available through these features.

FICON Express Channel Card Features

Performance

With its 2 Gigabit per second link data rate capability, the FICON Express channel card feature (feature codes 2319, 2320) is the latest zSeries implementation of the Fibre Channel Architecture. The FICON Express card has two links and can achieve improved performance over the previous generation FICON channel card. For example, attached to a 100 MBps link (1 Gbps), a single FICON Express feature configured as a native FICON channel is capable of supporting up to 7,200 I/O operations/sec (channel is 100% utilized) and an aggregate total throughput of 120 MBps on z990.

With 2 Gbps links, customers may expect up to 170 MBps of total throughput. The 2 Gbps link data rates are applicable to native FICON and FCP channels on zSeries only and, for full benefit, require 2 Gbps capable devices as well. Customers can leverage this additional bandwidth capacity to consolidate channels and reduce configuration complexity, infrastructure costs, and the number of channels that must be managed. Note that no additional hardware or code is needed to obtain 2 Gbps links; the functionality was incorporated in all zSeries servers with the March 2002 LIC. The link data rate is auto-negotiated between server and devices.
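The figures above can be used for a first-pass channel count when consolidating. The quoted maximums are 7,200 I/O operations/sec, 120 MBps at 1 Gbps and 170 MBps at 2 Gbps. The sketch below takes the larger of the I/O-rate and throughput requirements. It assumes these maximums apply per native FICON channel. The text gives no I/O rate for 2 Gbps links, so reusing 7,200 at 2 Gbps is an assumption. `target_utilization` is a hypothetical planning knob, since the quoted figures are at 100% channel utilization.

```python
import math

# Figures quoted in the text for one FICON Express feature configured as a
# native FICON channel on z990. The I/O rate is only given for 1 Gbps;
# reusing it at 2 Gbps is an assumption.
MAX_IO_PER_SEC = 7_200
MAX_MB_PER_SEC = {1: 120, 2: 170}


def channels_needed(io_per_sec: float, mb_per_sec: float,
                    link_gbps: int = 1, target_utilization: float = 1.0) -> int:
    """Rough count of native FICON channels needed for a workload.

    The quoted maximums are at 100% channel utilization; target_utilization
    scales them down to leave headroom.
    """
    if link_gbps not in MAX_MB_PER_SEC:
        raise ValueError("link_gbps must be 1 or 2")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    by_io = io_per_sec / (MAX_IO_PER_SEC * target_utilization)
    by_mb = mb_per_sec / (MAX_MB_PER_SEC[link_gbps] * target_utilization)
    return max(1, math.ceil(max(by_io, by_mb)))


if __name__ == "__main__":
    # Hypothetical workload: 5,000 I/O/sec moving 300 MB/sec.
    print(channels_needed(5_000, 300, link_gbps=1))  # 3
    print(channels_needed(5_000, 300, link_gbps=2))  # 2
```

In this example the workload is throughput-bound, so moving to 2 Gbps links reduces the channel count, which is the consolidation effect described above.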

Flexibility - Three channel types supported

The FICON Express features support three different channel types: 1) FCV mode for FICON Bridge channels, 2) FC mode for native FICON channels (including the FICON CTC function), and 3) FCP mode for Fibre Channels (FCP channels). Support for FCP devices means that zSeries servers can attach to select fibre channel switches/directors and FCP/SCSI disks, and can access these devices from Linux on zSeries. New with z/VM Version 5 Release 1, z/VM can also be installed and operated on a SCSI disk.

Distance

All channels defined on FICON Express LX channel card features at 1 Gbps link data rates support a maximum unrepeated distance of up to 10 km (6.2 miles; up to 20 km via RPQ, or up to 100 km with repeaters) over nine micron single mode fiber. Over 50 or 62.5 micron multimode fiber, they support up to 550 meters (1,804 feet) through Mode Conditioning Patch (MCP) cables. At 2 Gbps link speeds, FICON Express LX channel card features support up to 10 km (6.2 miles; up to 12 km via RPQ, or up to 100 km with repeaters) over nine micron single mode fiber. At 2 Gbps link speeds, Mode Conditioning Patch (MCP) cables on 50 or 62.5 micron multimode fiber are not supported. The maximum unrepeated distances for 1 Gbps links defined on the FICON Express SX channel cards are up to 500 meters (1,640 feet) and 250 meters (820 feet) for 50 and 62.5 micron multimode fiber, respectively. The maximum unrepeated distances for 2 Gbps links defined on the FICON Express SX channel cards are up to 300 meters and 120 meters for 50 and 62.5 micron multimode fiber, respectively. The FICON Express channel cards are designed to reduce the data droop effect that made long distances not viable for ESCON. This distance capability is becoming increasingly important as enterprises move toward remote I/O, vaulting for disaster recovery and Geographically Dispersed Parallel Sysplex™ for availability.
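The distance rules above are easier to apply as a lookup table. The sketch below records each unrepeated limit quoted in the text, keyed by feature, link rate and fiber type. A missing key means the combination is unsupported, for example MCP cables at 2 Gbps. The fiber labels and function name are hypothetical. Repeater configurations (up to 100 km) are outside the scope of the check.

```python
from typing import Dict, Tuple

# (feature, link rate in Gbps, fiber) -> maximum unrepeated distance in meters,
# as listed in the text. Missing keys are unsupported combinations.
MAX_UNREPEATED_M: Dict[Tuple[str, int, str], int] = {
    ("LX", 1, "9um single mode"): 10_000,
    ("LX", 1, "50um multimode via MCP"): 550,
    ("LX", 1, "62.5um multimode via MCP"): 550,
    ("LX", 2, "9um single mode"): 10_000,
    ("SX", 1, "50um multimode"): 500,
    ("SX", 1, "62.5um multimode"): 250,
    ("SX", 2, "50um multimode"): 300,
    ("SX", 2, "62.5um multimode"): 120,
}
# LX single mode limits with an RPQ.
LX_RPQ_M = {1: 20_000, 2: 12_000}


def link_supported(feature: str, gbps: int, fiber: str, distance_m: float,
                   with_rpq: bool = False) -> bool:
    """True if an unrepeated FICON Express link of this kind can span distance_m."""
    limit = MAX_UNREPEATED_M.get((feature, gbps, fiber))
    if limit is None:
        return False
    if with_rpq and feature == "LX" and fiber == "9um single mode":
        limit = LX_RPQ_M[gbps]
    return distance_m <= limit
```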

Shared infrastructure

FICON (FC-SB-2 Fibre Channel Single-Byte Command Code Set-2) has been adopted by INCITS (International Committee for Information Technology Standards) as a standard for the Fibre Channel Architecture. Using open connectivity standards leads to shared I/O fiber cabling and switch infrastructures, facilitated data sharing, storage management and SAN implementation, and integration between the mainframe and UNIX®/Intel® technologies.

Native FICON Channels

Native FICON channels and devices can help to reduce bandwidth constraints and channel contention to enable easier server consolidation, new application growth, large business intelligence queries and exploitation of e-business.

Currently, the IBM TotalStorage® Enterprise Storage Server® (ESS) Models F10, F20 and 800 have two types of host adapter to support native FICON. These host adapters each have one port per card. On the F10/F20 they are FC 3021 for long wavelength or FC 3032 for short wavelength; on the 800 they are FC 3024 for long wavelength or FC 3025 for short wavelength. All three models can support up to 16 FICON ports per ESS. The Model 800 is 2 Gbps link capable. The IBM TotalStorage Enterprise Tape Controller 3590 Model A60 provides up to two FICON interfaces, which can coexist with ESCON on the same box. The Enterprise Tape Controller 3592-J70 provides up to four FICON interfaces, which can also coexist with ESCON on the same box. The 3592-J70 is designed to provide up to 1.5 times the throughput of the Model A60. Customers can utilize IBM’s highest capacity, highest performance tape drive to support their new business models.

Many Fibre Channel directors provide dynamic connectivity to native FICON control units. The IBM 2032 Models 001, 064 and 140 (resale of the McDATA ED-5000 and Intrepid 6000 Series Directors) are 32-, 64- and 140-port high availability directors. The IBM 2042 Models 001, 128 and 256 (resale of the CNT FC/9000 Directors) are 64-, 128- and 256-port high availability directors. All have features that provide interface support to allow the unit to be managed by System Automation for OS/390. The McDATA Intrepid 6000 Series Directors and CNT FC/9000 Directors also support 2 Gbps link data rates.

The FICON Express features now support attachment to the IBM M12 Director (2109-M12). The IBM M12 Director supports attachment of FICON Express channels on the z990 via native FICON (FC CHPID type) and Fibre Channel Protocol (FCP CHPID type) supporting attachment to SCSI disks in Linux environments.


Wave Division Multiplexors and optical amplifiers that support 2 Gbps FICON Express links include:

• Cisco Systems ONS 15530 and 15540 ESP (LX, SX) and optical amplifier (LX, SX)
• Nortel Networks Optera Metro 5100, 5200 and 5300E and optical amplifier
• ADVA Fiber Service Platform (FSP) 2000 system
• IBM 2029 Fiber Saver

The raw bandwidth and distance capabilities of native FICON end-to-end connectivity make it of interest to anyone who needs high performance, large data transfers or enhanced multi-site solutions.

FICON CTC function

Native FICON channels support channel-to-channel (CTC) on the z990, z890, z900 and z800. G5 and G6 servers can connect to a zSeries FICON CTC as well. This FICON CTC connectivity will increase bandwidth between G5, G6, z990, z890, z900, and z800 systems.

Because the FICON CTC function is included as part of the native FICON (FC) mode of operation on zSeries, FICON CTC is not limited to intersystem connectivity (as is the case with ESCON) but also supports multiple device definitions. For example, ESCON channels that are dedicated as CTC cannot communicate with any other device, whereas native FICON (FC) channels are not dedicated to CTC only. Native FICON channels can support both device and CTC mode definitions concurrently, allowing for greater connectivity flexibility.

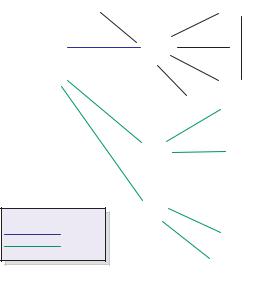

[Figure: FICON Connectivity. FICON Bridge channels attach through an ESCD 9032 Model 5 to ESCON control units. Native FICON channels attach through 2032 directors (32, 64 or 140 ports) or 2042 directors (64, 128 or 256 ports) to ESS F10, F20 and 800 and the Enterprise Tape Controller 3590 A60. The legend notes that all FICON channels run at 100 MB/s and distinguishes LX-only links from LX or SX links.]

FICON Support for Cascaded Directors

Native FICON (FC) channels now support cascaded directors. This support is for a single-hop configuration only. Two-director cascading requires a single-vendor high integrity fabric: the directors must be from the same vendor, since cascaded architecture implementations can be unique. This type of cascaded support is important for disaster recovery and business continuity solutions because it can help provide high availability and extended distance connectivity. Particularly with 2 Gbps Inter Switch Links, it also has the potential for fiber infrastructure cost savings by reducing the number of channels needed to interconnect the two sites.

FICON cascaded directors have the added value of high integrity connectivity. New integrity features in the FICON Express channel and the FICON cascaded switch fabric help detect and report any miscabling within the fabric, which can prevent data from being delivered to the wrong end point.

FICON cascaded directors are offered in conjunction with IBM, CNT, and McDATA directors.
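The cascading rules above come down to two checks on the directors between a native FICON channel and its control unit:

- no more than two directors (a single hop);
- all directors from the same vendor.

The sketch below encodes just those checks with a hypothetical function. It does not model the high integrity fabric settings themselves.

```python
from typing import Sequence


def check_ficon_path(director_vendors: Sequence[str]) -> None:
    """Validate the directors between a native FICON channel and its control unit.

    director_vendors lists the vendor of each director on the path, in order.
    Zero or one director is a non-cascaded path; two is the single-hop
    cascade. High integrity fabric settings are not modeled here.
    """
    if len(director_vendors) > 2:
        raise ValueError("cascading is limited to a single hop (two directors)")
    if len(set(director_vendors)) > 1:
        raise ValueError("cascaded directors must come from the same vendor")
```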

[Figure: Left, a two-site non-cascaded director topology in which each CEC connects to directors in both sites. Right, a two-site cascaded director topology in which each CEC connects to local directors only; with Inter Switch Links (ISLs), less fiber cabling may be needed for cross-site connectivity.]

FICON Bridge Channel

First introduced on the 9672 G5 processors, the FICON Bridge (FCV) channel is still an effective way to use FICON bandwidth with existing ESCON control units. FICON Express LX channel cards in FCV (FICON Converted) mode of operation can attach to the 9032 Model 005 ESCON Director through a director bridge card. Up to 16 bridge cards are supported on a single 9032 Model 005, and each card can sustain up to eight concurrent ESCON data transfers. 9032 Model 005 ESCON Directors can be field upgraded at no charge to support the bridge cards, and bridge cards and ESCON cards can coexist in the same director.

FCP Channels

zSeries supports FCP channels, switches and FCP/SCSI disks with full fabric connectivity under Linux on zSeries, and under z/VM Version 4 Release 3 and later for Linux as a guest under z/VM. Support for FCP devices means that zSeries servers can attach to select FCP/SCSI devices and access these devices from Linux on zSeries. This expanded attachability gives enterprises more choices for new storage solutions, or may let them use existing storage devices, thus leveraging existing investments and lowering total cost of ownership for their Linux implementation.

For details of supported FICON and FCP attachments access Resource Link at ibm.com/servers/resourcelink and in the Planning section, go to z890/z990 I/O Connection information.

The support for FCP channels is for Linux on zSeries and for Linux as a guest under z/VM 4.3 and later. Linux may be the native operating system on the zSeries server (note that the z990 runs in LPAR mode only), it can run in an LPAR, or it can operate as a guest under z/VM 4.3 or later. The z990 now provides support for IPL of Linux guest images from appropriate FCP attached devices.

Now, z/VM V5.1 supports SCSI FCP disks, enabling the deployment of a Linux server farm running under z/VM configured only with SCSI disks. With this support you can install, IPL, and operate z/VM from SCSI disks.

The 2 Gbps capability on the FICON Express channel cards means that 2 Gbps link speeds are available for FCP channels as well.

FCP Full fabric connectivity

FCP full fabric support means that any number of (single vendor) FCP directors/switches can be placed between the server and an FCP/SCSI device, thereby allowing many “hops” through a storage network for I/O connectivity.

This support, along with 2 Gbps link capability, is being delivered together with switch vendors IBM, CNT and McDATA. FCP full fabric connectivity enables multiple FCP switches/directors on a fabric to share links and therefore provides improved utilization of inter-site connected resources and infrastructure. Further savings may come from needing less fiber optic cabling and fewer director ports.

When configured as FCP CHPID type, the z990 FICON Express features support the industry standard interface for Storage Area Network (SAN) management tools.

[Figure: FCP full fabric connectivity. FCP channels connect through a fabric of Fibre Channel directors to multiple FCP devices.]

Open Systems Adapter-Express Features (OSA-Express)

The z990 brings increased processing capacity and Logical Channel SubSystems. With its introduction, the OSA-Express Adapter family of Local Area Network (LAN) features is also expanding, offering up to 24 features per server versus up to 12 features per server on prior generations. This expands the z990 balanced solution to increase throughput and responsiveness in an on demand operating environment. These features, combined with z/OS or OS/390, z/VM, Linux on zSeries, TPF, and VSE/ESA, can deliver a balanced system solution to increase throughput and decrease host interrupts to help satisfy your business goals.

Each of the OSA-Express features offers two ports for connectivity delivered in a single I/O slot, with up to a maximum of 48 ports (24 features) per z990. Each port uses a single CHPID and can be separately configured. For a new z990 build, you can choose any combination of the following OSA-Express features:

• the new OSA-Express Gigabit Ethernet LX or SX
• the new OSA-Express 1000BASE-T Ethernet
• OSA-Express Token-Ring

The prior OSA-Express Gigabit LX and SX, the OSA-Express Fast Ethernet, and the OSA-Express Token-Ring can be carried forward on an upgrade from z900.

z990 OSA-Express 1000BASE-T Ethernet

The new OSA-Express 1000BASE-T Ethernet feature replaces the current Fast Ethernet (10/100 Mbps) feature. This new feature can operate at 10, 100 or 1000 Mbps (1 Gbps) using the same copper cabling infrastructure as Fast Ethernet, making transition to this higher speed Ethernet feature a straightforward process. It is designed to support auto-negotiation, QDIO and non-QDIO environments on each port, allowing you to make the most of your TCP/IP, SNA/APPN® and HPR environments at up to gigabit speeds.

When this adapter is operating at gigabit Ethernet speed it runs full duplex only. It also can support standard (1492 or 1500 byte) and jumbo (8992 byte) frames.

The new checksum offload support on the 1000BASE-T Ethernet feature, when operating in QDIO mode at gigabit speed, is designed to offload z/OS 1.5 and Linux TCP/IP stack processing of checksum packet headers for TCP/IP and UDP.
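The 1000BASE-T operating rules above interact in a few ways:

- supported speeds are 10, 100 and 1000 Mbps;
- gigabit operation is full duplex only;
- standard frames are 1492 or 1500 bytes, and jumbo frames are 8992 bytes;
- checksum offload applies only in QDIO mode at gigabit speed.

The sketch below checks a port setting against these rules with a hypothetical function. The text mentions jumbo frames only alongside gigabit operation. Restricting them to 1000 Mbps is therefore an assumption, labeled in the code.

```python
VALID_SPEEDS_MBPS = {10, 100, 1000}
STANDARD_FRAME_SIZES = {1492, 1500}
JUMBO_FRAME_SIZE = 8992


def check_1000baset_port(speed_mbps: int, full_duplex: bool,
                         frame_size: int, qdio: bool) -> bool:
    """Validate a port setting; return True if checksum offload applies."""
    if speed_mbps not in VALID_SPEEDS_MBPS:
        raise ValueError("speed must be 10, 100 or 1000 Mbps")
    if speed_mbps == 1000 and not full_duplex:
        raise ValueError("gigabit operation is full duplex only")
    if frame_size not in STANDARD_FRAME_SIZES and frame_size != JUMBO_FRAME_SIZE:
        raise ValueError("frame size must be 1492, 1500 or 8992 bytes")
    if frame_size == JUMBO_FRAME_SIZE and speed_mbps != 1000:
        # Assumption: jumbo frames are only used at gigabit speed.
        raise ValueError("jumbo frames assumed to require gigabit speed")
    # Checksum offload: QDIO mode at gigabit speed only.
    return qdio and speed_mbps == 1000
```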

[Figure: OSA-Express 1000BASE-T Ethernet connectivity in QDIO mode (TCP/IP) and non-QDIO mode (SNA passthru, TCP/IP passthru, HPDT MPC). Copper 10/100/1000 Mbps Ethernet links connect through switches, hubs and routers to IBM pSeries, RS/6000, xSeries and Netfinity servers and to 10/100 Mbps Ethernet and 4/16 Mbps Token-Ring LANs. IP and DLSw routers connect over the IP WAN and intranet to remote offices and the Internet or extranet. The figure shows TCP/IP applications, TN3270 browser access to SNA, Enterprise Extender for SNA end points, SNA DLSw and native SNA.]

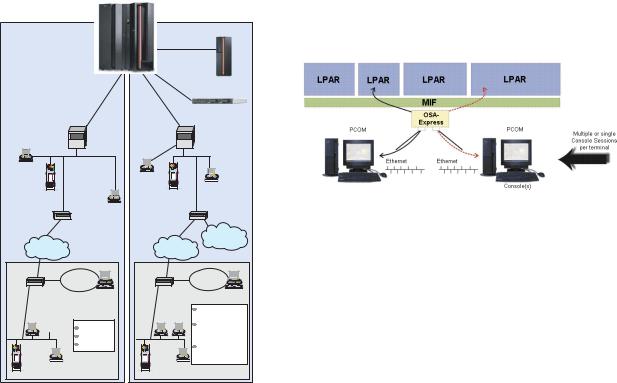

OSA-Express Integrated Console Controller

The new Open Systems Adapter-Express Integrated Console Controller function (OSA-ICC) is based on the OSA-Express feature and is exclusive to IBM and the IBM z890 and z990 servers. It supports the attachment of non-SNA 3270 terminals for operator console applications. Now, 3270 emulation for console session connections is integrated in the zSeries and can help eliminate the requirement for external console controllers (2074, 3174), helping to reduce cost and complexity.

The OSA-ICC uses one or both ports on an OSA-Express 1000BASE-T Ethernet feature with the appropriate Licensed Internal Code (LIC). The OSA-ICC is enabled using CHPID type OSC.

The OSA-ICC supports up to 120 client console sessions either locally or remotely.

Support for this new function will be available for z/VM Version 4 Release 4 and later, z/OS Version 1 Release 3, VSE/ESA Version 2 Release 6 onwards, and TPF.


Queued Direct Input/Output (QDIO)

The OSA-Express Gigabit Ethernet, 1000BASE-T Ethernet and Token-Ring features support QDIO (CHPID type OSD), which is unique to IBM. QDIO was first introduced on the z900, in Communication Server for OS/390 2.7.

Queued Direct Input/Output (QDIO), a highly efficient data transfer architecture, breaks the barriers associated with the Channel Control Word (CCW) architecture, increasing data rates and reducing CPU cycle consumption. QDIO allows an OSA-Express feature to communicate directly with the server’s communications program through data queues in memory. QDIO helps eliminate the use of channel programs and channel control words (CCWs), helping to reduce host interrupts and accelerate TCP/IP packet transmission.
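The benefit described above comes from batching. The adapter writes data into queues in memory, and the host collects whatever has accumulated instead of running a channel program per transfer. The Python sketch below is a deliberately simplified, hypothetical model of that idea. The `SharedDataQueue` class is illustrative only and does not represent the real QDIO buffer format, queue states or signaling protocol. It shows how one host notification can cover many queued packets.

```python
from collections import deque
from typing import Deque, List


class SharedDataQueue:
    """Conceptual model of a memory-resident queue shared by adapter and host.

    This is NOT the QDIO buffer format or protocol; it only illustrates why
    queuing data in memory lets one notification cover many packets.
    """

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.buffers: Deque[bytes] = deque()
        self.notifications = 0

    def adapter_put(self, frame: bytes) -> bool:
        """Adapter side: place a frame in memory; False if the queue is full."""
        if len(self.buffers) >= self.capacity:
            return False
        self.buffers.append(frame)
        return True

    def adapter_notify(self) -> None:
        """Adapter side: tell the host that data is waiting."""
        self.notifications += 1

    def host_drain(self) -> List[bytes]:
        """Host side: take every frame currently queued in one pass."""
        frames = list(self.buffers)
        self.buffers.clear()
        return frames


if __name__ == "__main__":
    q = SharedDataQueue(capacity=16)
    for i in range(8):
        q.adapter_put(f"packet {i}".encode())
    q.adapter_notify()
    print(len(q.host_drain()), "packets,", q.notifications, "notification")
```

In a model where each transfer ran its own channel program and completed with its own interrupt, the same eight packets would cost eight host interruptions.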

TCP/IP connectivity is increased with the capability to allow up to a maximum of 160 IP stacks per OSA-Express port and 480 devices. This support is applicable to all the OSA-Express features available on the z990 and is provided through Licensed Internal Code (LIC).
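The two limits above are consistent with each QDIO-connected stack using three device addresses, since 160 × 3 = 480. The sketch below assumes that three-device split (read, write and data path). This guide does not state it, so treat it as an assumption. The function name is hypothetical.

```python
MAX_STACKS_PER_PORT = 160
MAX_DEVICES_PER_PORT = 480
DEVICES_PER_STACK = 3  # assumed: read, write and data-path device per QDIO stack


def devices_for_stacks(stacks: int) -> int:
    """Device addresses one OSA-Express port needs for the given number of stacks."""
    if not 0 <= stacks <= MAX_STACKS_PER_PORT:
        raise ValueError("0 to 160 IP stacks per OSA-Express port")
    needed = stacks * DEVICES_PER_STACK
    assert needed <= MAX_DEVICES_PER_PORT  # holds because 160 * 3 == 480
    return needed
```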

Full Virtual Local Area Network (VLAN) support is available on z990 in z/OS V1.5 Communications Server (CS) for the OSA-Express 1000BASE-T Ethernet, Fast Ethernet and Gigabit Ethernet features when configured in QDIO mode. Full VLAN is also available with z/OS V1.2 on z900 and z800 using the appropriate LIC upgrade on Fast Ethernet and Gigabit Ethernet features. Full VLAN support in a Linux on zSeries environment was delivered for QDIO mode in April 2002 for z800 and z900. z/VM V4.4 and later also exploit VLAN technology, offering one global VLAN ID for IPv4. z/VM V5.1 provides support for one global VLAN ID for IPv6.

z990 OSA-Express Gigabit Ethernet

The new z990 OSA-Express Gigabit Ethernet LX and Gigabit Ethernet SX features replace the z900 Gigabit Ethernet features for new build z990. The new OSA-Express GbE features have a new connector type, LC Duplex, replacing the SC Duplex connectors used on the prior z900 Gigabit Ethernet features. The new checksum offload support on these z990 features is designed to offload z/OS V1.5 and Linux on zSeries TCP/IP stack processing of checksum packet headers for TCP/IP and UDP.

[Figure: OSA-Express Gigabit Ethernet connectivity in QDIO mode (TCP/IP). Gigabit Ethernet (fiber or copper) links connect through switches and routers to pSeries, RS/6000, xSeries and Netfinity servers and to 10/100 Mbps Ethernet and 4/16/100 Mbps Token-Ring LANs. IP routers connect over the IP WAN and intranet to remote offices and the Internet or extranet. The figure shows TCP/IP applications, TN3270 browser access to SNA applications and Enterprise Extender for SNA end points.]

NON-QDIO operational mode

The OSA-Express 1000BASE-T Ethernet, Fast Ethernet and Token-Ring features also support the non-QDIO mode of operation (CHPID type OSE). The adapter can be set (via the CHPID type parameter) to only one mode at a time. The non-QDIO mode does not provide the benefits of QDIO; however, this support includes native SNA/APPN, High Performance Routing, TCP/IP passthrough, and HPDT MPC. The new OSA-Express 1000BASE-T Ethernet provides support for TCP/IP and SNA/APPN/HPR at up to 1 gigabit per second over the copper wiring infrastructure.
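This section spreads the CHPID types each OSA-Express feature accepts across several paragraphs: OSD for QDIO, OSE for non-QDIO, and OSC for the console controller. The sketch below collects them into one table and defines a port with exactly one CHPID type, which reflects the one-mode-at-a-time rule. The table reflects only what this section states. The function name and the dictionary returned as a port definition are hypothetical.

```python
from typing import Dict, Set

# CHPID types per OSA-Express feature, as described in this section:
# OSD = QDIO, OSE = non-QDIO, OSC = OSA-ICC console support.
SUPPORTED_CHPID_TYPES: Dict[str, Set[str]] = {
    "Gigabit Ethernet": {"OSD"},
    "1000BASE-T Ethernet": {"OSD", "OSE", "OSC"},
    "Fast Ethernet": {"OSD", "OSE"},
    "Token-Ring": {"OSD", "OSE"},
}


def define_port(feature: str, chpid_type: str) -> Dict[str, str]:
    """Return a one-mode port definition, rejecting unsupported CHPID types."""
    allowed = SUPPORTED_CHPID_TYPES.get(feature)
    if allowed is None:
        raise ValueError(f"unknown feature {feature!r}")
    if chpid_type not in allowed:
        raise ValueError(f"{feature} does not support CHPID type {chpid_type}")
    # A single CHPID type field means the port is in exactly one mode.
    return {"feature": feature, "chpid_type": chpid_type}
```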

z990 OSA-Express Token-Ring

The same OSA-Express Token-Ring feature is supported on z990 and z900. This Token-Ring feature supports speeds of 4, 16 and 100 Mbps and can operate in both QDIO and non-QDIO modes.

Note: OSA-Express 155 ATM and OSA-2 FDDI are no longer supported. If ATM or FDDI support is still required, a multiprotocol switch or router with the appropriate network interface (for example, 1000BASE-T Ethernet, GbE LX or GbE SX) can be used to provide connectivity between the LAN and the ATM network or FDDI LAN.

Server to User connections

A key strength of OSA-Express and associated Communications Server protocol support is the ability to accommodate the customer’s attachment requirements, spanning combinations of TCP/IP and SNA applications and devices. Customers can use TCP/IP connections from the

non-QDIO mode |

QDIO Mode - TCP/IP |

SNA Passthru |

|

TCP/IP Passthru |

|

HPDT MPC |

|

4/16 Mbps |

|

Token-Ring |

|

Switch/ |

Server |

Hub/ |

|

Router |

4/16/100 Mbps |

Server |

Token-Ring |

100 Mbps |

Backbone |

Token-Ring |

100 Mbps |

Backbone |

|

|

Token-Ring |

Switch/ |

|

Hub/ |

Switch/ |

Router |

|

|

Hub/ |

|

Router |

4/16 Mbps

Token-Ring

|

|

4/16 Mbps |

|

|

|

Token-Ring |

|

|

16/100 Mbps |

|

|

|

Token-Ring |

|

Server |

|

|

|

|

Server |

4/16 Mbps |

IP Router |

|

|

|

|

|

DSLw |

Token-Ring |

|

|

Router |

|

|

Internet or |

IP WAN |

|

IP WAN |

extranet |

Intranet |

Remote Office |

Intranet |

Remote Office |

|

|

||

4/16 Mbps |

Token-Ring |

SNA DLSw |

TCP/IP |

Native SNA |

10/100 Mbps |

Ethernet |

4/16 Mbps

Token-Ring

TN3270 browser access to SNA appls.

TN3270 browser access to SNA appls.  Enterprise Extender for SNA end points

Enterprise Extender for SNA end points

TCP/IP |

applications |

10/100 Mbps

Ethernet

remote site to either their TCP/IP or SNA applications on zSeries and S/390 by confi guring OSA-Express with QDIO and using either direct TCP/IP access or use appropriate SNA to IP integration technologies, such as TN3270 Server and Enterprise Extender for access to SNA applications. Customers who require the use of native SNA-based connections from the remote site can use a TCP/IP or SNA transport to the data center and then connect into zSeries and S/390 using appropriate SNA support on OSAExpress features confi gured in non-QDIO mode.


LPAR Support of OSA-Express

For z990 customers, and for customers who use the Processor Resource/Systems Manager (PR/SM) capabilities, IBM offers the Multiple Image Facility (MIF), which allows any number of LPARs to share physical channels. Because a port on an OSA-Express feature operates as a channel, an OSA-Express port is shared using MIF. The LPARs are defined in the Hardware Configuration Definition (HCD). Depending on the feature and how it is defined, SNA/APPN/HPR and TCP/IP traffic can flow simultaneously through any given port.

IPv6 Support

IPv6 requires the use of an OSA-Express adapter running in QDIO mode and is supported only on OSA-Express features on zSeries at driver level 3G or above. IPv6 is supported on OSA-Express for zSeries Fast Ethernet, 1000BASE-T Ethernet and Gigabit Ethernet when running with Linux on zSeries, z/VM V5.1, and z/OS V1.4 and later. z/VM V4.4 provided IPv6 support for guest LANs.
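The IPv6 prerequisites above combine several conditions: QDIO mode, driver level 3G or later, a supported Ethernet feature, and a minimum operating system release. The sketch below checks them together. The `OsaPort` type and the boolean `driver_3g_or_later` flag are hypothetical simplifications (real driver levels are not modeled as comparable values here), and z/VM V4.4 guest LAN support is not covered because guest LANs do not use an OSA-Express port.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Features and minimum releases named in the text.
IPV6_FEATURES = {"Fast Ethernet", "1000BASE-T Ethernet", "Gigabit Ethernet"}
MIN_RELEASE = {"z/VM": (5, 1), "z/OS": (1, 4)}  # (version, release)


@dataclass
class OsaPort:
    feature: str
    qdio_mode: bool
    driver_3g_or_later: bool  # hypothetical flag standing in for "driver level 3G or above"


def ipv6_supported(port: OsaPort, os_name: str,
                   release: Optional[Tuple[int, int]] = None) -> bool:
    """Return True if the port/OS combination meets the stated IPv6 prerequisites."""
    if not (port.qdio_mode and port.driver_3g_or_later):
        return False
    if port.feature not in IPV6_FEATURES:
        return False
    if os_name == "Linux on zSeries":
        return True  # the text names no minimum Linux level
    minimum = MIN_RELEASE.get(os_name)
    return minimum is not None and release is not None and release >= minimum
```

Tuples compare element by element, so `(1, 5) >= (1, 4)` expresses "z/OS V1.4 and later" without string parsing.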

Performance enhancements for virtual servers

Two important networking technology advancements are announced in z/VM V4.4 and Linux on z990:

•The high-performance adapter interrupt handling first introduced with HiperSockets is now available for both OSA-Express in QDIO mode (CHPID=OSD) and FICON Express (CHPID=FCP). This advancement provides a more efficient technique for I/O interruptions designed to reduce path lengths and overhead in both the host operating system and the adapter. This benefits OSA-Express TCP/IP support in both Linux for zSeries and z/VM.

•The z990's support of virtual machine technology has been enhanced to include a new performance assist for virtualization of adapter interruptions. This new z990 performance assist is available to V=V guests (pageable guests) that support QDIO on z/VM V4.4 and later. The deployment of adapter interruptions improves efficiency and performance by reducing z/VM Control Program overhead.


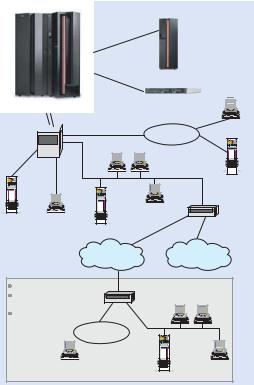

HiperSockets

HiperSockets, a function unique to the zSeries, provides a "TCP/IP network in the server" that allows high-speed any-to-any connectivity among virtual servers (TCP/IP images) and LPARs within a zSeries system without any physical cabling. HiperSockets decreases network latency and increases bandwidth between combinations of Linux, z/OS and z/VM virtual servers. These OS images can be first-level (directly under an LPAR) or second-level images (virtual servers under z/VM).

With new support for up to 16 HiperSockets, the z990 provides four times as many HiperSockets as the z900, and up to 4,096 TCP/IP images (stack) connections, also four times the capacity of the z900. The increased HiperSockets capacity and expanded connectivity provide additional flexibility in designing the network to accommodate consolidated and multiple partitioned systems. HiperSockets can be divided among Logical Channel SubSystems (LCSSs) to separate various LPARs, while a single LPAR can still access all 16 HiperSockets if they are all assigned to the same LCSS.
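The capacity figures above can be checked with simple arithmetic: dividing the z990 limits by the stated factor of four gives the implied z900 limits of 4 HiperSockets and 1,024 stack connections. The sketch also validates a hypothetical split of HiperSockets across LCSSs against the 16-HiperSockets limit. The helper names are illustrative only, and the model ignores spanned channels, which can be visible in more than one LCSS.

```python
from typing import Dict

# Figures from the text: up to 16 HiperSockets and 4,096 TCP/IP stack
# connections on z990, each described as four times the z900 capacity.
Z990_MAX_HIPERSOCKETS = 16
Z990_MAX_STACK_CONNECTIONS = 4096
GROWTH_FACTOR = 4

Z900_MAX_HIPERSOCKETS = Z990_MAX_HIPERSOCKETS // GROWTH_FACTOR            # 4
Z900_MAX_STACK_CONNECTIONS = Z990_MAX_STACK_CONNECTIONS // GROWTH_FACTOR  # 1024


def validate_allocation(per_lcss: Dict[str, int]) -> int:
    """Check a hypothetical split of HiperSockets across LCSSs; return the total."""
    if any(n < 0 for n in per_lcss.values()):
        raise ValueError("HiperSockets counts must be non-negative")
    total = sum(per_lcss.values())
    if total > Z990_MAX_HIPERSOCKETS:
        raise ValueError(
            f"{total} HiperSockets exceeds the z990 limit of {Z990_MAX_HIPERSOCKETS}")
    return total


def visible_to_lpar(per_lcss: Dict[str, int], lcss: str) -> int:
    """Non-spanned HiperSockets an LPAR can see: only those in its own LCSS."""
    return per_lcss.get(lcss, 0)
```

For example, assigning all 16 HiperSockets to `"LCSS0"` passes validation, and `visible_to_lpar` then reports all 16 for an LPAR in that LCSS, matching the last sentence of the paragraph.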

A HiperSockets channel also supports spanned channels, allowing communication between LPARs connected to different LCSSs. HiperSockets (IQD CHPID type) can be configured to multiple Channel SubSystems and transparently shared by any or all configured LPARs, regardless of the LCSS to which each LPAR is configured. This means one HiperSockets could be common to all 30 z990 LPARs. This support is exclusive to z990. Separate HiperSockets can be used for security (separation of traffic, no external wiretapping or monitoring) and for performance and management reasons (separating sysplex traffic from Linux or non-sysplex LPAR traffic).
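The access rule for spanned channels can be modeled as set membership: an LPAR can use an IQD CHPID if the CHPID is defined in the LPAR's LCSS, and two LPARs can talk over a HiperSockets only if both can reach the same CHPID. In the sketch below, the CHPID numbers 03, 04 and 05 echo the HiperSockets diagram in this section, but the LCSS assignments and the `IqdChpid` type are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class IqdChpid:
    """A HiperSockets (IQD) CHPID and the LCSS(s) it is defined in (hypothetical model)."""
    chpid: str
    lcss: FrozenSet[int]

    @property
    def spanned(self) -> bool:
        # A spanned CHPID is defined in more than one LCSS.
        return len(self.lcss) > 1


def can_access(lpar_lcss: int, chpid: IqdChpid) -> bool:
    """An LPAR can use the CHPID if it is defined in the LPAR's LCSS."""
    return lpar_lcss in chpid.lcss


def can_communicate(lcss_a: int, lcss_b: int, chpid: IqdChpid) -> bool:
    """Two LPARs share a HiperSockets only if both can access the same CHPID."""
    return can_access(lcss_a, chpid) and can_access(lcss_b, chpid)


# Assumed layout: 03 only in LCSS0, 05 only in LCSS1, 04 spanned across both.
chp03 = IqdChpid("03", frozenset({0}))
chp05 = IqdChpid("05", frozenset({1}))
chp04 = IqdChpid("04", frozenset({0, 1}))
```

With this layout, an LPAR in LCSS0 and one in LCSS1 can reach each other only through the spanned CHPID 04.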

[Figure: HiperSockets across Logical Channel SubSystems. z/OS, z/VM and Linux LPARs (numbered up to LPAR 30) in LCSS0 and LCSS1 reach CHPIDs through their MIF images. HiperSockets CHPID 03 is defined in LCSS0, HiperSockets CHPID 05 in LCSS1, and HiperSockets CHPID 04 is shared and spanned across both LCSSs.]

Because HiperSockets does not use an external network, it can free up system and network resources, reducing attachment cost while improving availability and performance. HiperSockets can have significant value in server consolidation, for example, by connecting multiple Linux virtual servers under z/VM to z/OS LPARs within the same z990. Furthermore, TCP/IP can use HiperSockets in place of XCF for sysplex connectivity between images in the same server: z/OS TCP/IP uses HiperSockets for connectivity between sysplex images in the same server and XCF for connectivity between images in different servers. This can reduce management and administration costs compared with existing configurations.
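The sysplex rule in the paragraph above is a single comparison: images on the same physical server use HiperSockets, and images on different servers use XCF. The sketch below states it directly; `SysplexImage` and its `server` field are hypothetical names, not z/OS configuration syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SysplexImage:
    name: str
    server: str  # hypothetical identifier of the physical server the image runs on


def sysplex_link(a: SysplexImage, b: SysplexImage) -> str:
    """Pick the z/OS TCP/IP sysplex transport between two distinct images."""
    if a.name == b.name:
        raise ValueError("an image does not need a link to itself")
    # Same server: HiperSockets; different servers: XCF.
    return "HiperSockets" if a.server == b.server else "XCF"
```

For example, two images named `SYSA` and `SYSB` that both report server `"Z990-1"` would be linked over HiperSockets, while a third image on `"Z990-2"` would be reached over XCF.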

HiperSockets acts like any other TCP/IP network interface, so TCP/IP features such as IP Security (IPSec) in Virtual Private Networks (VPNs) and Secure Sockets Layer (SSL) can be used to provide heightened security for flows within the same CHPID. HiperSockets supports multiple frame sizes, which are configured on a per-HiperSockets-CHPID basis. This support gives the user the flexibility to optimize and tune each HiperSockets to its predominant traffic profile, for example to distinguish between high-bandwidth workloads such as FTP and lower-bandwidth interactive workloads.
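Per-CHPID frame sizing can be thought of as choosing the smallest frame that still carries the MTU a workload wants. The frame-size and MTU pairs below (16, 24, 40 and 64 KB frames carrying 8, 16, 32 and 56 KB MTUs) are an assumption not stated in this guide and should be verified against the IOCP documentation; the profile names are hypothetical.

```python
from typing import Dict

# Assumed HiperSockets frame size -> usable MTU (both in KB); verify before use.
FRAME_TO_MTU_KB: Dict[int, int] = {16: 8, 24: 16, 40: 32, 64: 56}

# Hypothetical traffic profiles: interactive traffic favors small frames,
# bulk transfers such as FTP favor the largest MTU.
PROFILE_MTU_KB: Dict[str, int] = {"interactive": 8, "bulk": 56}


def smallest_frame_for_mtu(mtu_kb: int) -> int:
    """Return the smallest assumed frame size whose MTU is at least mtu_kb."""
    for frame in sorted(FRAME_TO_MTU_KB):
        if FRAME_TO_MTU_KB[frame] >= mtu_kb:
            return frame
    raise ValueError(f"no frame size supports an MTU of {mtu_kb} KB")


def frame_for_profile(profile: str) -> int:
    """Frame size to configure on a CHPID dedicated to one traffic profile."""
    return smallest_frame_for_mtu(PROFILE_MTU_KB[profile])
```

Picking the smallest sufficient frame avoids reserving large buffers for CHPIDs that mostly carry short interactive exchanges.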

The HiperSockets function provides many possibilities for improved integration between workloads in different LPARs, bounded only by the combinations of operating systems and their respective applications. HiperSockets is intended to provide the fastest zSeries connection between e-business and Enterprise Resource Planning (ERP) solutions that share information while running on the same server. WebSphere HTTP and Web Application Servers or Apache HTTP servers running in a Linux image (LPAR or z/VM guest) can use HiperSockets for very fast TCP/IP traffic transfer to a DB2 database server running in a z/OS LPAR. Keeping Web and transaction application environments close to their data can improve system performance, and it helps eliminate exposure to network-related outages, improving availability.

The z/OS HiperSockets Accelerator function can improve performance and cost efficiencies when attaching a high number of TCP/IP images via HiperSockets to a "front end" z/OS system for shared access to a set of OSA-Express adapters.

HiperSockets VLAN support in a Linux environment: Virtual Local Area Networks (VLANs), IEEE standard 802.1Q, are now offered for HiperSockets in a Linux for zSeries environment. VLANs can help reduce overhead by allowing networks to be organized for optimum traffic flow; the network is organized by traffic patterns rather than physical location. This enhancement permits traffic to flow on a VLAN connection between applications over HiperSockets, and between applications on HiperSockets and an OSA-Express Gigabit Ethernet, 1000BASE-T Ethernet, or Fast Ethernet feature.
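The IEEE 802.1Q tag that VLAN support relies on is a 4-byte field: the tag protocol identifier 0x8100, followed by a 16-bit control field holding a 3-bit priority (PCP), a 1-bit drop-eligible indicator (DEI) and a 12-bit VLAN ID. The sketch below builds and parses that tag as defined by the standard; it illustrates the tag format only and says nothing about how Linux for zSeries applies it on HiperSockets.

```python
import struct
from typing import Tuple

TPID_8021Q = 0x8100  # EtherType value identifying an 802.1Q tag


def vlan_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build a 4-byte 802.1Q tag (network byte order)."""
    if not 1 <= vid <= 4094:  # VIDs 0 and 4095 are reserved
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7:
        raise ValueError("priority must be in 0..7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> Tuple[int, int, int]:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag: TPID 0x{tpid:04x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1
```

For example, `vlan_tag(100)` yields the bytes `81 00 00 64`, and parsing a tag built with `pcp=5` recovers `(100, 5, 0)`.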

HiperSockets broadcast support for IPv4 packets – Linux, z/OS, z/VM: Internet Protocol Version 4 (IPv4) broadcast packets are now supported over HiperSockets internal LANs. TCP/IP applications that support IPv4 broadcast, such as z/OS OMPROUTE when running Routing Information Protocol Version 1 (RIPv1), can send and receive broadcast packets over HiperSockets interfaces. This support is exclusive to z990. Broadcast for IPv4 packets is supported by Linux for zSeries. Support is planned to be available in z/OS V1.5. Support is also offered in z/VM V4.4 and later.
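An application that sends IPv4 broadcasts over a HiperSockets interface needs the subnet's broadcast address and a socket with `SO_BROADCAST` enabled; RIPv1, mentioned above, sends its updates to UDP port 520. The sketch below uses only the Python standard library. The interface address `10.10.1.5/24` is a hypothetical example, and the code deliberately does not send anything.

```python
import ipaddress
import socket

RIP_PORT = 520  # UDP port used by RIP


def broadcast_address(interface_cidr: str) -> str:
    """Directed broadcast address of the subnet an interface address belongs to."""
    return str(ipaddress.ip_interface(interface_cidr).network.broadcast_address)


def make_broadcast_socket() -> socket.socket:
    """UDP socket allowed to send to broadcast addresses."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    s.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
    return s


if __name__ == "__main__":
    # Hypothetical HiperSockets interface address.
    target = (broadcast_address("10.10.1.5/24"), RIP_PORT)
    print("would send RIP broadcasts to", target)
```

Without `SO_BROADCAST`, most IP stacks reject a `sendto` aimed at a broadcast address, which is why the option is set when the socket is created.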

HiperSockets Network Concentrator

HiperSockets Network Concentrator support, exclusive to z890 and z990, can simplify network addressing between HiperSockets and OSA-Express. You can now integrate HiperSockets-connected operating systems into external networks without intervening network routing overhead, helping to increase performance and simplify configuration. With HiperSockets Network Concentrator support, you can configure a special-purpose Linux operating system instance that transparently bridges traffic between a HiperSockets internal Local Area Network (LAN) and an external OSA-Express network attachment, similar to a real Layer 2 switch that bridges between different network segments. This support can make the internal HiperSockets network address connection appear as if it were directly connected to the external network.
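The paragraph above compares the concentrator to a Layer 2 switch. The sketch below shows the generic learning-bridge behavior behind that comparison: learn which port each source MAC address arrived on, forward known destinations to that port, and flood unknown or broadcast destinations. It is an illustration of the general technique only, not the Linux Network Concentrator's implementation; the port names `"hipersockets"` and `"osa"` are hypothetical.

```python
from typing import Dict, Iterable, List

BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Minimal Layer 2 learning bridge between named ports."""

    def __init__(self, ports: Iterable[str]) -> None:
        self.ports: List[str] = list(ports)
        self.mac_table: Dict[str, str] = {}  # MAC address -> port it was last seen on

    def forward(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Learn the source and return the ports the frame should go out on."""
        if in_port not in self.ports:
            raise ValueError(f"unknown port {in_port!r}")
        self.mac_table[src_mac.lower()] = in_port  # learn the source location
        dst = dst_mac.lower()
        out = self.mac_table.get(dst)
        if dst == BROADCAST_MAC or out is None:
            # Flood broadcasts and unknown destinations to every other port.
            return [p for p in self.ports if p != in_port]
        # Filter frames whose destination sits on the same segment.
        return [] if out == in_port else [out]
```

After a HiperSockets host broadcasts once, the bridge knows its location, so a reply from the OSA side is forwarded only to the HiperSockets port rather than flooded.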

